Inclusive Human Intention Prediction with Wearable Sensors: Machine Learning Techniques for the Reaching Task Use Case †

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

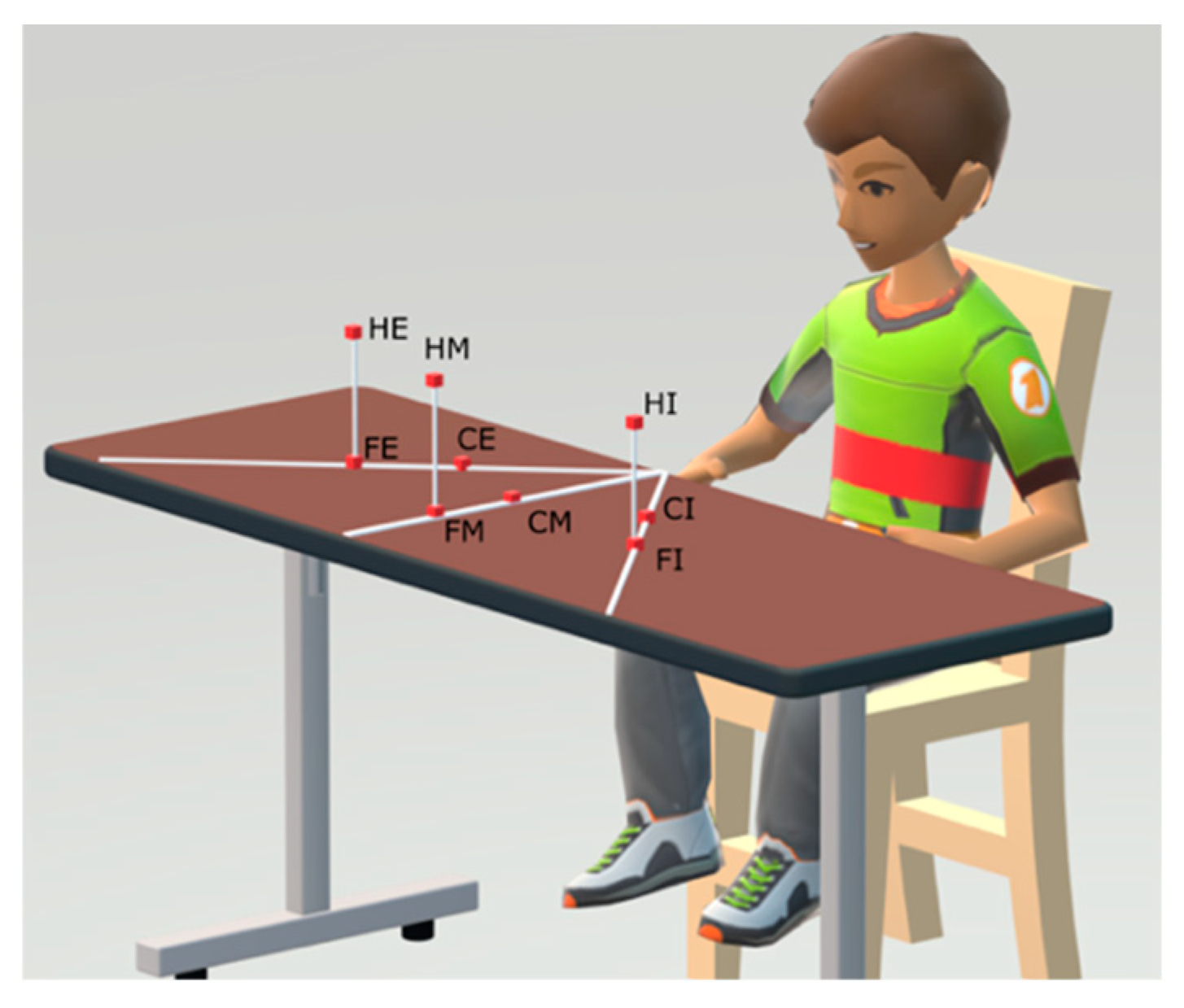

2.2. Protocol

2.3. Experimental Setup

2.4. Data Treatment

3. Results

4. Discussion

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Tests | Sensors Position (SP) | Sensors Velocity | Sensors Acceleration | Sensors Euler Angles |
|---|---|---|---|---|
| 1, 6, 11, 16 | x | x | | |
| 2, 7, 12, 17 | x | x | x | |
| 3, 8, 13, 18 | x | | | |
| 4, 5, 9, 10, 14, 15, 19, 20 | x |

| Test n. | RF OOB Accuracy | RF SD | RF OOB Accuracy sgn_N | RF SD (sgn_N) | RF ori_sgn–sgn_N Residual | LDA Accuracy | LDA SD | LDA Accuracy sgn_N | LDA SD (sgn_N) | LDA ori_sgn–sgn_N Residual |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 72.85% | 0.032 | 49.40% | 0.017 | 23.45% | 81.00% | 0.042 | 57.04% | 0.056 | 23.96% |
| 2 | 73.73% | 0.015 | 57.48% | 0.015 | 16.25% | 86.13% | 0.036 | 68.73% | 0.044 | 17.40% |
| 3 | 61.78% | 0.016 | 47.91% | 0.017 | 13.87% | 73.11% | 0.045 | 53.12% | 0.053 | 19.99% |
| 4 | 59.71% | 0.015 | 58.71% | 0.014 | 1.00% | 58.27% | 0.054 | 57.40% | 0.049 | 0.87% |
| 5 | 71.97% | 0.013 | 70.69% | 0.013 | 1.28% | 73.56% | 0.047 | 72.81% | 0.047 | 0.75% |
| 6 | 82.92% | 0.011 | 70.10% | 0.014 | 12.82% | 88.97% | 0.032 | 81.07% | 0.044 | 7.90% |
| 7 | 84.60% | 0.01 | 76.38% | 0.014 | 8.22% | 92.80% | 0.027 | 86.60% | 0.038 | 6.20% |
| 8 | 76.58% | 0.013 | 69.69% | 0.014 | 6.89% | 85.45% | 0.041 | 76.36% | 0.044 | 9.09% |
| 9 | 64.48% | 0.016 | 64.34% | 0.016 | 0.14% | 63.84% | 0.054 | 63.26% | 0.052 | 0.58% |
| 10 | 75.70% | 0.012 | 75.68% | 0.011 | 0.02% | 78.82% | 0.045 | 78.76% | 0.044 | 0.06% |
| 11 | 68.04% | 0.015 | 49.55% | 0.017 | 18.49% | 78.80% | 0.041 | 57.47% | 0.049 | 21.33% |
| 12 | 71.40% | 0.015 | 58.21% | 0.016 | 13.19% | 83.26% | 0.042 | 71.17% | 0.05 | 12.09% |
| 13 | 60.05% | 0.016 | 50.04% | 0.017 | 10.01% | 69.56% | 0.049 | 54.78% | 0.045 | 14.78% |
| 14 | 60.92% | 0.015 | 60.69% | 0.016 | 0.23% | 59.57% | 0.054 | 58.65% | 0.05 | 0.92% |
| 15 | 72.57% | 0.013 | 72.47% | 0.013 | 0.10% | 74.09% | 0.048 | 74.31% | 0.043 | -0.22% |
| 16 | 80.27% | 0.012 | 65.22% | 0.015 | 15.05% | 86.80% | 0.04 | 74.97% | 0.045 | 11.83% |
| 17 | 82.88% | 0.013 | 74.31% | 0.013 | 8.57% | 90.61% | 0.034 | 84.06% | 0.041 | 6.55% |
| 18 | 73.65% | 0.012 | 66.25% | 0.014 | 7.40% | 81.15% | 0.044 | 73.81% | 0.047 | 7.34% |
| 19 | 66.26% | 0.014 | 65.80% | 0.015 | 0.46% | 70.04% | 0.048 | 69.62% | 0.045 | 0.42% |
| 20 | 78.17% | 0.011 | 77.99% | 0.011 | 0.18% | 83.59% | 0.04 | 82.06% | 0.044 | 1.53% |
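Each "ori_sgn–sgn_N Residual" column in the table matches the accuracy on the original signals minus the accuracy on the sgn_N signals, in percentage points. Test 1 for RF is one example: 72.85 − 49.40 = 23.45. A residual near zero means the sgn_N processing leaves accuracy almost unchanged. The negative LDA value for Test 15 means sgn_N scored slightly higher.

The minimal Python sketch below reproduces this arithmetic for a few rows transcribed by hand from the table. The names `REPORTED`, `residual` and `residuals` are hypothetical and for illustration only. They are not taken from the authors' code.

```python
# Hypothetical helper: recomputes the "ori_sgn - sgn_N Residual" columns
# from accuracies (%) transcribed by hand from the results table.
# Tuple order: (RF OOB ori, RF OOB sgn_N, LDA ori, LDA sgn_N)
REPORTED = {
    1: (72.85, 49.40, 81.00, 57.04),
    7: (84.60, 76.38, 92.80, 86.60),
    15: (72.57, 72.47, 74.09, 74.31),
}


def residual(acc_ori: float, acc_norm: float) -> float:
    """Accuracy drop in percentage points, rounded to 2 decimals like the table."""
    return round(acc_ori - acc_norm, 2)


def residuals(row: tuple) -> tuple:
    """Return (RF residual, LDA residual) for one table row."""
    rf_ori, rf_norm, lda_ori, lda_norm = row
    return residual(rf_ori, rf_norm), residual(lda_ori, lda_norm)


if __name__ == "__main__":
    for test, row in REPORTED.items():
        rf_res, lda_res = residuals(row)
        print(f"Test {test}: RF residual {rf_res:.2f} pp, LDA residual {lda_res:.2f} pp")
```

For Test 1 the script prints residuals of 23.45 (RF) and 23.96 (LDA). Both agree with the values reported in the table.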

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Archetti, L.; Ragni, F.; Saint-Bauzel, L.; Roby-Brami, A.; Amici, C. Inclusive Human Intention Prediction with Wearable Sensors: Machine Learning Techniques for the Reaching Task Use Case. Eng. Proc. 2020, 2, 13. https://doi.org/10.3390/ecsa-7-08234
