Abstract

Breast cancer is the most frequently diagnosed cancer among women worldwide and a leading cause of cancer death in women. Numerous imaging techniques are used to detect breast cancer and to guide its treatment. For medical image analysts, diagnosis is arduous, repetitive, time-consuming and tedious, and the growing volume of ultrasound images to interpret has overloaded practitioners and analysts. Most previous studies have used mammogram images; this research takes a different approach. Our hypothesis is that applying artificial intelligence (AI) to ultrasound analysis can make computer-aided diagnosis (CAD) more effective and less subjective. The purpose of the research is to classify benign (non-cancerous), malignant (cancerous) and normal samples. The dataset contains 780 images, split into 70% for training and 30% for validation. Data preprocessing and data augmentation are applied to the dataset. Three models are used for classification: ResNet50 achieves 85.4% accuracy, ResNeXt50 85.83% and VGG16 81.11%. AI-assisted diagnosis could relieve some of the burden on medical practitioners, and computer vision models show promise for medical use. Providing more data and testing the models more broadly should help improve them.

1. Introduction

Breast cancer starts in breast tissue. When breast cells mutate and grow out of control, they form a mass of tissue (a tumor). Like other types of cancer, breast cancer can spread to the tissue surrounding the breast. Symptoms vary from person to person, and in some cases there are none. Common symptoms include nipple discharge or redness and a change in breast size. Breast cancer arises from the abnormal division and proliferation of cells, but its initial cause is not clearly understood. However, many factors increase the risk of breast cancer, including being over 55 years old, genetics, family history, alcohol, smoking, radiation and obesity. In addition to the medical history and symptoms, diagnostic tests may be requested, using imaging modalities such as mammography, magnetic resonance imaging (MRI), positron emission tomography (PET) and ultrasonography [1].

This study uses ultrasonography images from three classes: benign, malignant and normal. The purpose of the research is to classify these three classes with deep learning models. Because the volume of patient images keeps growing, automated diagnosis from ultrasound images could save time and improve accuracy. The novelty and main contributions of the study are as follows:

- Multi-class classification was performed on breast cancer ultrasound images. Unlike the binary classification common in the literature, this distinguishes benign and malignant cases from each other as well as from normal tissue.

- Rather than using the ultrasound images directly, we applied various preprocessing and augmentation steps, which contributed positively to the classification results.

- Several deep learning models were compared on breast cancer detection to determine the most appropriate classification model.

- Convolutional neural network (CNN)-based deep learning models were customized for the breast cancer classes.

2. Related Works

A review of the literature shows that many classification studies have applied deep learning to breast cancer images. Burçak and Uğuz combined a deep convolutional neural network (DCNN) with ReliefF and a support vector machine (SVM) on the BreakHis dataset, reaching an F1 score of 92%, precision of 93%, sensitivity of 92% and AUC of 97.8% on 40× images [2]. Lin et al. used breast cancer images from the BSCS site and reported 91.3% with the AlexNet, ResNet101 and Inception v3 models [3]. Zaalouk et al. classified the BreakHis dataset with the Xception model and, with a learning rate of 0.0001 on 40× images, reported 100% F1 score, precision, sensitivity, AUC and accuracy [4]. Escorcia-Gutierrez et al. applied the ADL-BCD technique to the Mammographic Image Analysis Society (MIAS) dataset and achieved an F1 score of 92.75%, precision of 93.54%, recall of 92.15%, specificity of 95.9% and accuracy of 96.07% [5]. Wang et al. classified the PCam dataset shared on Kaggle with a CNN-GRU model and achieved an accuracy of 86%, precision of 85%, sensitivity of 85%, F1 score of 86% and AUC of 89% [6].

The literature shows that VGG, Inception and ResNet are the most commonly used deep learning models for classifying breast cancer images, particularly on datasets obtained from many different hospitals. Unlike these studies, this study classifies open-source breast cancer ultrasound images with three different deep learning models, using a random split of the data into 70% for training and 30% for validation and testing.

3. Materials and Methods

An open-source dataset from the Kaggle platform [7] was used to classify breast cancer images. The dataset was collected in 2018 from 600 patients aged 25 to 75 years by Al-Dhabyani et al. and shared as open source [8]. Table 1 gives the class types, the number and percentage of images in each class, and the training/validation/testing split.

Table 1.

Distribution of dataset [8].

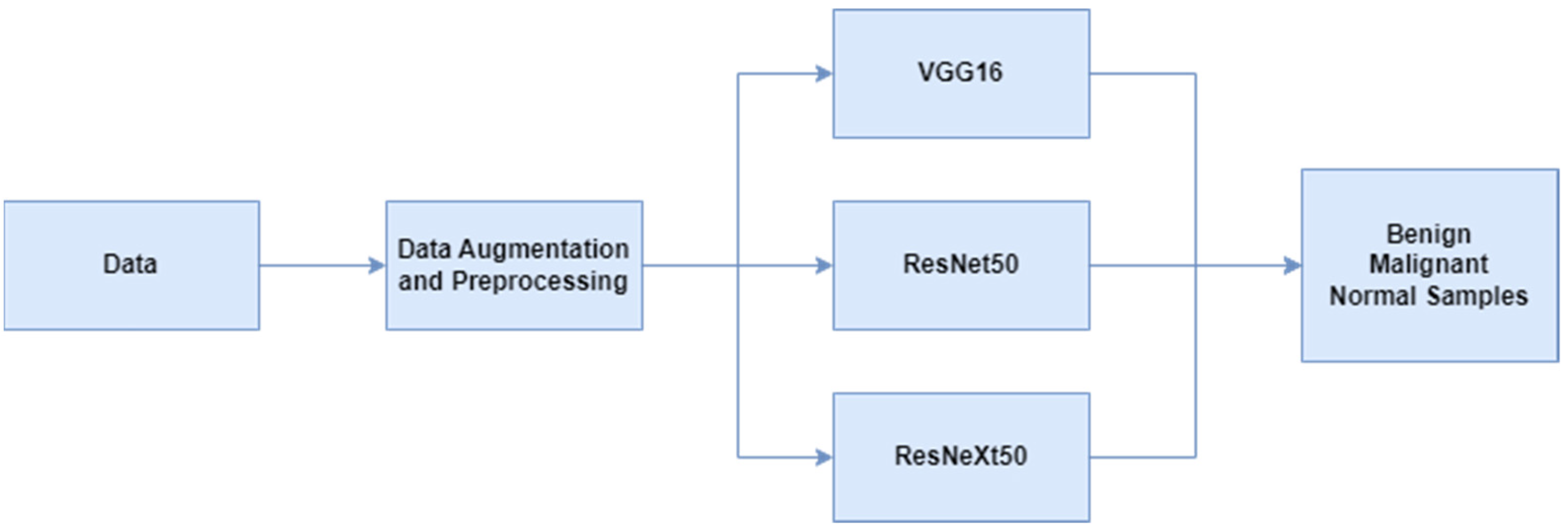
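The 70/30 split described above can be sketched as follows. The class counts (437 benign, 210 malignant and 133 normal images, 780 in total) are those of the public dataset [8]; splitting each class separately (stratification), the function name `stratified_split` and the random seed are assumptions of this sketch, since the paper only describes the split as random.

```python
import random

# Class counts of the public breast ultrasound dataset [8].
CLASS_COUNTS = {"benign": 437, "malignant": 210, "normal": 133}

def stratified_split(counts, train_frac=0.7, seed=42):
    """Split image indices of each class into training and validation sets.

    Splitting per class (stratification) is an assumption of this sketch;
    it keeps the class ratios roughly equal in both subsets.
    """
    rng = random.Random(seed)
    train, val = [], []
    for label, n in counts.items():
        ids = [(label, i) for i in range(n)]  # hypothetical (class, index) IDs
        rng.shuffle(ids)
        cut = round(train_frac * n)
        train.extend(ids[:cut])
        val.extend(ids[cut:])
    return train, val

train, val = stratified_split(CLASS_COUNTS)
print(len(train), len(val))  # 546 234
```

With these counts the training set holds 306 benign, 147 malignant and 93 normal images, so the normal class remains the smallest in both subsets.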

The breast cancer ultrasound images were first passed through data preprocessing and augmentation steps, and multi-class classification was then performed with the deep learning-based VGG16, ResNet50 and ResNeXt50 models, as outlined in Figure 1. Preprocessing consists of center cropping and normalization, and the inputs are resized to 400 × 400. Data augmentation consists of shift-scale-rotate, RGB shift, random brightness/contrast and color jitter. Experiments were run on the NVIDIA Tesla P100 GPU provided on the Kaggle platform.
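A minimal, dependency-free sketch of these preprocessing steps is given below, together with one of the augmentations (random brightness/contrast). It is not the authors' code: the ImageNet normalization statistics, nearest-neighbour resizing, the ±20% jitter range and all function names are assumptions chosen for illustration. Images are represented as lists of rows of 8-bit RGB tuples.

```python
import random

# Assumed normalization statistics: ImageNet channel means/stds, commonly
# used with pretrained backbones. The paper does not give its values.
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)

def center_crop_square(img):
    """Crop the largest centered square from an H x W image."""
    h, w = len(img), len(img[0])
    side = min(h, w)
    top, left = (h - side) // 2, (w - side) // 2
    return [row[left:left + side] for row in img[top:top + side]]

def resize_nearest(img, size):
    """Nearest-neighbour resize of a square image to size x size."""
    n = len(img)
    return [[img[r * n // size][c * n // size] for c in range(size)]
            for r in range(size)]

def random_brightness_contrast(img, rng, limit=0.2):
    """Training-only augmentation: p' = clip(alpha * p + beta, 0, 255), with
    alpha in [1 - limit, 1 + limit] (contrast) and
    beta in [-limit * 255, limit * 255] (brightness)."""
    alpha = 1.0 + rng.uniform(-limit, limit)
    beta = 255.0 * rng.uniform(-limit, limit)
    return [[tuple(min(255, max(0, round(alpha * p + beta))) for p in px)
             for px in row] for row in img]

def normalize(img):
    """Scale 8-bit values to [0, 1], then standardize each channel."""
    return [[tuple((p / 255.0 - m) / s for p, m, s in zip(px, MEAN, STD))
             for px in row] for row in img]

def preprocess(img, size=400, rng=None):
    """Center-crop, resize to size x size, optionally augment, then normalize."""
    img = resize_nearest(center_crop_square(img), size)
    if rng is not None:  # augment training images only
        img = random_brightness_contrast(img, rng)
    return normalize(img)

# Usage: a uniform gray 500 x 600 image becomes a 400 x 400 normalized array.
image = [[(128, 128, 128)] * 600 for _ in range(500)]
tensor = preprocess(image)
print(len(tensor), len(tensor[0]))  # 400 400
augmented = random_brightness_contrast([[(250, 10, 128)]], random.Random(0))
```

Augmentation is applied before normalization so that clipping operates on the original 0 to 255 pixel range; validation images would go through `preprocess` without an `rng`.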

Figure 1.

Flowchart of breast cancer classification.

The first model used was VGG16, which uses convolution filters of a fixed, very small size (3 × 3). Its authors analyzed networks of increasing depth built from these filters and showed that increasing the depth to 16–19 weight layers significantly outperforms earlier configurations. VGG16 has 16 weight layers in total: 13 convolutional and 3 fully connected [9]. In addition, the last layer of the architecture was modified to output three nodes, one for each class.
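The layer count can be checked with a short sketch of the VGG16 configuration (configuration D in [9]): integers are 3 × 3 convolutional layers with that many filters, and "M" marks max pooling. Treating the three-node change as a replacement of the final 1000-way fully connected layer is our reading of the text, not a detail the paper states; `replace_head` is a hypothetical helper.

```python
# VGG16 convolutional configuration ("D" in [9]): integers are 3x3 conv
# layers with that many filters, "M" is a 2x2 max-pooling layer.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
# Output sizes of the original fully connected layers (1000 ImageNet classes).
VGG16_FC = [4096, 4096, 1000]

def count_weight_layers(conv_cfg, fc_cfg):
    """Return (number of conv layers, number of fully connected layers)."""
    conv = sum(1 for v in conv_cfg if v != "M")
    return conv, len(fc_cfg)

def replace_head(fc_cfg, num_classes=3):
    """Swap the final 1000-way layer for a num_classes-way layer."""
    return fc_cfg[:-1] + [num_classes]

head = replace_head(VGG16_FC)
conv, fc = count_weight_layers(VGG16_CONV, head)
print(conv, fc, conv + fc)  # 13 3 16
# Parameters of the new output layer: 4096 inputs x 3 outputs + 3 biases.
print(head[-2] * head[-1] + head[-1])  # 12291
```

In torchvision, the analogous change would typically be `model.classifier[6] = nn.Linear(4096, 3)`, since index 6 of its VGG16 classifier is the final 4096-to-1000 linear layer.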

The second model used was ResNet50, which relies on skip connections. A skip connection bypasses one or more layers and adds a block's input directly to its output, which mitigates the vanishing/exploding gradient problem in very deep networks [10]. As with VGG16, the last layers of the architecture were modified to output three nodes.
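The gradient argument can be made concrete with the residual formulation of [10]. Writing a block's output y as its input x plus a learned residual F (the stacked layers being skipped), and letting L denote the training loss (our notation), the chain rule gives:

```latex
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}
\qquad\Longrightarrow\qquad
\frac{\partial \mathcal{L}}{\partial \mathbf{x}}
  = \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
    \left( I + \frac{\partial \mathcal{F}}{\partial \mathbf{x}} \right)
```

The identity term I carries the upstream gradient to earlier layers unchanged, so the signal does not vanish even when the Jacobian of F is small.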

The third model used was ResNeXt50. Its structure repeats a building block that aggregates a set of transformations with the same topology. In addition to the depth and width dimensions, the ResNeXt design introduces a third key dimension called cardinality, the size of the set of transformations [11]. The final layers of this architecture were likewise modified to output three nodes.
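Cardinality can be illustrated with the parameter comparison used by Xie et al. [11]: a ResNet bottleneck block of width 64 versus a ResNeXt block with cardinality 32 and per-path width 4 (the 32×4d template of ResNeXt-50), both mapping 256 to 256 channels. The sketch counts only convolution weights (no biases or batch normalization) and uses the aggregated-paths view; practical implementations realize the same block with grouped convolutions. The function names are hypothetical.

```python
def bottleneck_params(c_in, width, c_out):
    """Weights of a 1x1 -> 3x3 -> 1x1 bottleneck (biases and BN ignored)."""
    return c_in * width + 3 * 3 * width * width + width * c_out

def resnext_block_params(c_in, cardinality, d, c_out):
    """Weights of a ResNeXt block: `cardinality` parallel bottleneck paths,
    each of width d, whose outputs are summed."""
    return cardinality * bottleneck_params(c_in, d, c_out)

resnet = bottleneck_params(256, 64, 256)         # one wide path
resnext = resnext_block_params(256, 32, 4, 256)  # 32 narrow paths
print(resnet, resnext)  # 69632 70144
```

At almost the same parameter budget, ResNeXt trades width per path for more paths, which is the sense in which cardinality acts as an additional dimension besides depth and width.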

4. Results

Table 2 presents the results of the VGG16, ResNet50 and ResNeXt50 models on the breast cancer images, reported as accuracy, F1 score and AUC. For each metric in Table 2, the first value refers to benign samples, the second to malignant samples and the third to normal samples.

Table 2.

Classification results.
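The paper does not state how the per-class scores were computed. The sketch below shows one common definition: overall accuracy, one-vs-rest F1 from the confusion counts, and one-vs-rest AUC computed as the probability that a sample of the class outranks a sample of another class (the Mann–Whitney form). The function names and toy values are hypothetical, not results from this study.

```python
# Label encoding assumed to follow the order used in Table 2.
BENIGN, MALIGNANT, NORMAL = 0, 1, 2

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_for_class(y_true, y_pred, cls):
    """One-vs-rest F1 = 2TP / (2TP + FP + FN) for class `cls`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == cls and p == cls for t, p in pairs)
    fp = sum(t != cls and p == cls for t, p in pairs)
    fn = sum(t == cls and p != cls for t, p in pairs)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0

def auc_for_class(y_true, scores, cls):
    """One-vs-rest AUC: probability that a random sample of `cls` gets a
    higher score than a random sample of another class (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == cls]
    neg = [s for t, s in zip(y_true, scores) if t != cls]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy example (made-up values, not results from this study).
y_true = [BENIGN, BENIGN, MALIGNANT, MALIGNANT, NORMAL, NORMAL]
y_pred = [BENIGN, MALIGNANT, MALIGNANT, MALIGNANT, NORMAL, BENIGN]
benign_scores = [0.9, 0.4, 0.2, 0.1, 0.3, 0.6]  # predicted P(benign)

print(round(accuracy(y_true, y_pred), 3))  # 0.667
print([round(f1_for_class(y_true, y_pred, c), 3)
       for c in (BENIGN, MALIGNANT, NORMAL)])  # [0.5, 0.8, 0.667]
print(auc_for_class(y_true, benign_scores, BENIGN))  # 0.875
```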

Studies in the literature use preprocessing, augmentation and test split percentages that differ from those of this study. Because these differences can affect the results in either direction, it is more appropriate to evaluate this study on its own terms. Nevertheless, Table 3 compares our results with recent classification results, particularly on this dataset and several others.

Table 3.

Comparison with other studies.

5. Conclusions and Future Works

In this study, ResNeXt50 achieved an accuracy of up to 85.83% on ultrasound images. For VGG16 and ResNeXt50, the results for normal samples are generally lower than for the other classes, possibly because there are fewer normal samples. Table 2 also shows that the AUC and F1 scores of ResNet50 are more stable and closer to each other than those of the other models. Thus, although ResNeXt50 gives higher accuracy, ResNet50 appears better in terms of stability and class-based results. Different or additional preprocessing operations, and balancing the classes with augmentation, may improve the results. Other transfer learning models may also improve the metrics. Based on these results, ensemble learning, hybrid models and GAN-based data generation will be explored in future work. Considering existing and future studies, computer vision models can help both patients and healthcare personnel by saving time and reducing subjectivity during classification.
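The class imbalance discussed above can be quantified with a short sketch. It assumes a 70% training share of the dataset's 437 benign, 210 malignant and 133 normal images (rounded to 306, 147 and 93) and computes how many augmented images each class would need to match the largest class, along with inverse-frequency class weights as an alternative. Neither technique is reported as used in this study, and the function names are hypothetical.

```python
# Assumed training-set class counts: 70% of the 437 benign, 210 malignant
# and 133 normal images in the public dataset [8], rounded.
TRAIN_COUNTS = {"benign": 306, "malignant": 147, "normal": 93}

def augmentation_plan(counts):
    """Extra augmented images per class needed to match the largest class."""
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}

def inverse_frequency_weights(counts):
    """Loss weights N / (K * n_c): rarer classes get proportionally more weight."""
    total, k = sum(counts.values()), len(counts)
    return {cls: total / (k * n) for cls, n in counts.items()}

print(augmentation_plan(TRAIN_COUNTS))
# {'benign': 0, 'malignant': 159, 'normal': 213}
weights = inverse_frequency_weights(TRAIN_COUNTS)
print({cls: round(w, 3) for cls, w in weights.items()})
```

Under these assumptions the normal class would need more than twice its original number of images, which is consistent with the observation that it is the class most affected by limited data.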

Author Contributions

Conceptualization, F.U. and M.M.K.; methodology, F.U. and M.M.K.; software, F.U. and M.M.K.; validation, F.U. and M.M.K.; formal analysis, F.U. and M.M.K.; investigation, F.U. and M.M.K.; resources, F.U. and M.M.K.; data curation, F.U. and M.M.K.; writing—original draft preparation, F.U. and M.M.K.; writing—review and editing, F.U. and M.M.K.; visualization, F.U. and M.M.K.; supervision, F.U. and M.M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data used in this study are available at https://www.kaggle.com/datasets/aryashah2k/breast-ultrasound-images-dataset (accessed on 1 August 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Cleveland Clinic. Available online: https://my.clevelandclinic.org/health/diseases/3986-breast-cancer (accessed on 21 January 2022).

2. Burçak, K.C.; Uğuz, H. A New Hybrid Breast Cancer Diagnosis Model Using Deep Learning Model and Relief. Traitement Du Signal 2022, 39, 521–529.

3. Lin, R.H.; Kujabi, B.K.; Chuang, C.L.; Lin, C.S.; Chiu, C.J. Application of Deep Learning to Construct Breast Cancer Diagnosis Model. Appl. Sci. 2022, 12, 1957.

4. Zaalouk, A.M.; Ebrahim, G.A.; Mohamed, H.K.; Hassan, H.M.; Zaalouk, M.M. A Deep Learning Computer-Aided Diagnosis Approach for Breast Cancer. Bioengineering 2022, 9, 391.

5. Escorcia-Gutierrez, J.; Mansour, R.F.; Beleño, K.; Jiménez-Cabas, J.; Pérez, M.; Madera, N.; Velasquez, K. Automated deep learning empowered breast cancer diagnosis using biomedical mammogram images. Comput. Mater. Contin. 2022, 71, 3–4221.

6. Wang, X.; Ahmad, I.; Javeed, D.; Zaidi, S.A.; Alotaibi, F.M.; Ghoneim, M.E.; Eldin, E.T. Intelligent Hybrid Deep Learning Model for Breast Cancer Detection. Electronics 2022, 11, 2767.

7. Kaggle. Available online: https://www.kaggle.com/datasets/aryashah2k/breast-ultrasound-images-dataset (accessed on 1 August 2022).

8. Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief. 2020, 28, 104863.

9. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

10. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

11. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

12. Jabeen, K.; Khan, M.A.; Alhaisoni, M.; Tariq, U.; Zhang, Y.-D.; Hamza, A.; Mickus, A.; Damaševičius, R. Breast Cancer Classification from Ultrasound Images Using Probability-Based Optimal Deep Learning Feature Fusion. Sensors 2022, 22, 807.

13. da Silva, D.S.; Nascimento, C.S.; Jagatheesaperumal, S.K.; Albuquerque, V.H.C.d. Mammogram Image Enhancement Techniques for Online Breast Cancer Detection and Diagnosis. Sensors 2022, 22, 8818.

14. Ragab, M.; Albukhari, A.; Alyami, J.; Mansour, R.F. Ensemble Deep-Learning-Enabled Clinical Decision Support System for Breast Cancer Diagnosis and Classification on Ultrasound Images. Biology 2022, 11, 439.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).