Anomaly Detection on Video by Detecting and Tracking Feature Points

Abstract

1. Introduction

2. Related Works

2.1. Feature Points Detection Methods

2.2. Methods of a Local Optical Flow Construction

2.3. Anomaly Detection Algorithms

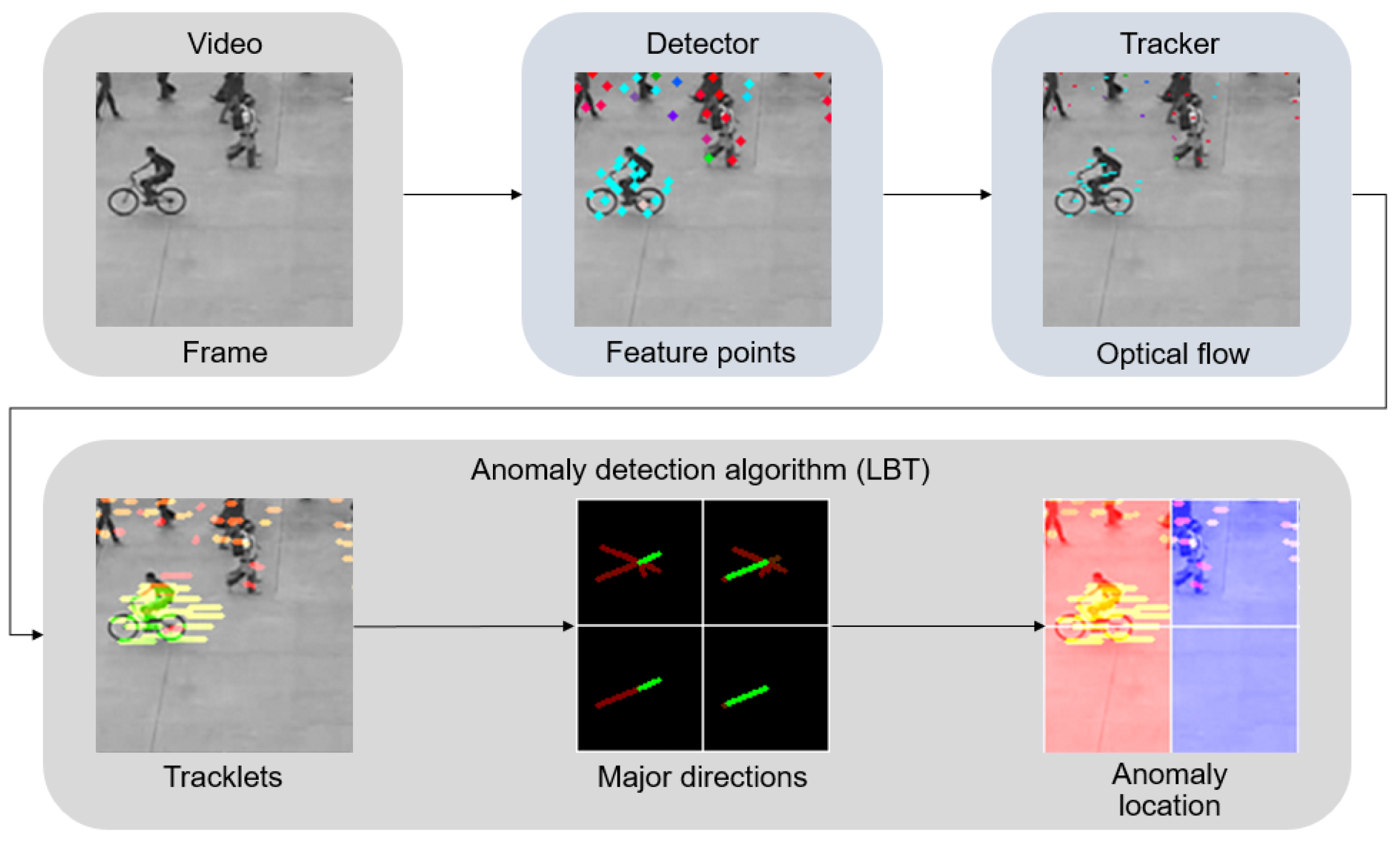

3. The Tracklet Analysis Algorithm

4. Experiments

4.1. Test Datasets

4.2. Quality Metrics

4.3. Configuring Algorithm Parameters

4.4. Comparison of Detection and Tracking Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Metric | GFTT | FAST | AGAST | Sblob | SIFT | MSER | KAZE | AKAZE |
|---|---|---|---|---|---|---|---|---|---|
| Ped | F1 | 0.72 | 0.66 | 0.58 | 0.63 | 0.7 | 0.73 | 0.73 | 0.72 |
| Ped | PR AUC | 0.58 | 0.57 | 0.49 | 0.51 | 0.59 | 0.59 | 0.65 | 0.59 |
| Ped | FPS | 263 | 333 | 284 | 230 | 102 | 108 | 34 | 174 |
| UMN | F1 | 0.91 | 0.88 | 0.82 | 0.8 | 0.87 | 0.81 | 0.89 | 0.81 |
| UMN | PR AUC | 0.94 | 0.88 | 0.89 | 0.88 | 0.9 | 0.9 | 0.92 | 0.84 |
| UMN | FPS | 252 | 398 | 291 | 247 | 102 | 178 | 36 | 176 |

| Dataset | Metric | GFTT | FAST | AGAST | Sblob | SIFT | MSER | KAZE | AKAZE |
|---|---|---|---|---|---|---|---|---|---|
| Ped | F1 | 0.7 | 0.53 | 0.41 | 0.63 | 0.69 | 0.7 | 0.71 | 0.71 |
| Ped | PR AUC | 0.61 | 0.37 | 0.3 | 0.51 | 0.54 | 0.57 | 0.62 | 0.6 |
| Ped | FPS | 259 | 322 | 274 | 210 | 99 | 108 | 34 | 165 |
| UMN | F1 | 0.87 | 0.84 | 0.87 | 0.86 | 0.85 | 0.86 | 0.87 | 0.85 |
| UMN | PR AUC | 0.9 | 0.88 | 0.91 | 0.92 | 0.88 | 0.91 | 0.91 | 0.89 |
| UMN | FPS | 217 | 345 | 267 | 221 | 101 | 76 | 35 | 161 |
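The F1 and PR AUC rows in the tables above are standard binary-classification metrics. The source does not state how they were computed, so the following is only a minimal sketch under stated assumptions: each frame has a ground-truth label (1 for anomalous, 0 for normal) and a scalar anomaly score, and the area under the precision-recall curve is taken step-wise (the average-precision form). The function names `f1_at_threshold`, `pr_curve`, and `pr_auc` are hypothetical and chosen for illustration.

```python
from typing import List, Sequence, Tuple


def f1_at_threshold(labels: Sequence[int], scores: Sequence[float],
                    threshold: float) -> float:
    """F1 score when frames with score >= threshold are flagged as anomalous."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if s >= threshold and y == 1)
    fp = sum(1 for y, s in pairs if s >= threshold and y == 0)
    fn = sum(1 for y, s in pairs if s < threshold and y == 1)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def pr_curve(labels: Sequence[int],
             scores: Sequence[float]) -> List[Tuple[float, float]]:
    """(recall, precision) points from sweeping the threshold over each distinct score."""
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("at least one anomalous frame is required")
    pairs = list(zip(labels, scores))
    points = []
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in pairs if s >= t and y == 1)
        fp = sum(1 for y, s in pairs if s >= t and y == 0)
        # tp + fp >= 1 here because at least one score equals t.
        points.append((tp / total_pos, tp / (tp + fp)))
    return points


def pr_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Step-wise area under the precision-recall curve (assumed form, not from the source)."""
    area, prev_recall = 0.0, 0.0
    for recall, precision in pr_curve(labels, scores):
        area += (recall - prev_recall) * precision
        prev_recall = recall
    return area


# Illustrative per-frame data (made up for this sketch, not taken from Ped or UMN).
example_labels = [0, 0, 1, 1, 0, 1]
example_scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.7]
example_f1 = f1_at_threshold(example_labels, example_scores, threshold=0.5)
example_auc = pr_auc(example_labels, example_scores)
```

A trapezoidal rule over the same curve would give slightly different values, so the choice of integration rule should be fixed when comparing detectors.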

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fomin, I.; Rezets, Y.; Smirnova, E. Anomaly Detection on Video by Detecting and Tracking Feature Points. Eng. Proc. 2023, 33, 34. https://doi.org/10.3390/engproc2023033034
