Research of a Virtual Infrastructure Network with Hybrid Software-Defined Switching

Abstract

1. Introduction

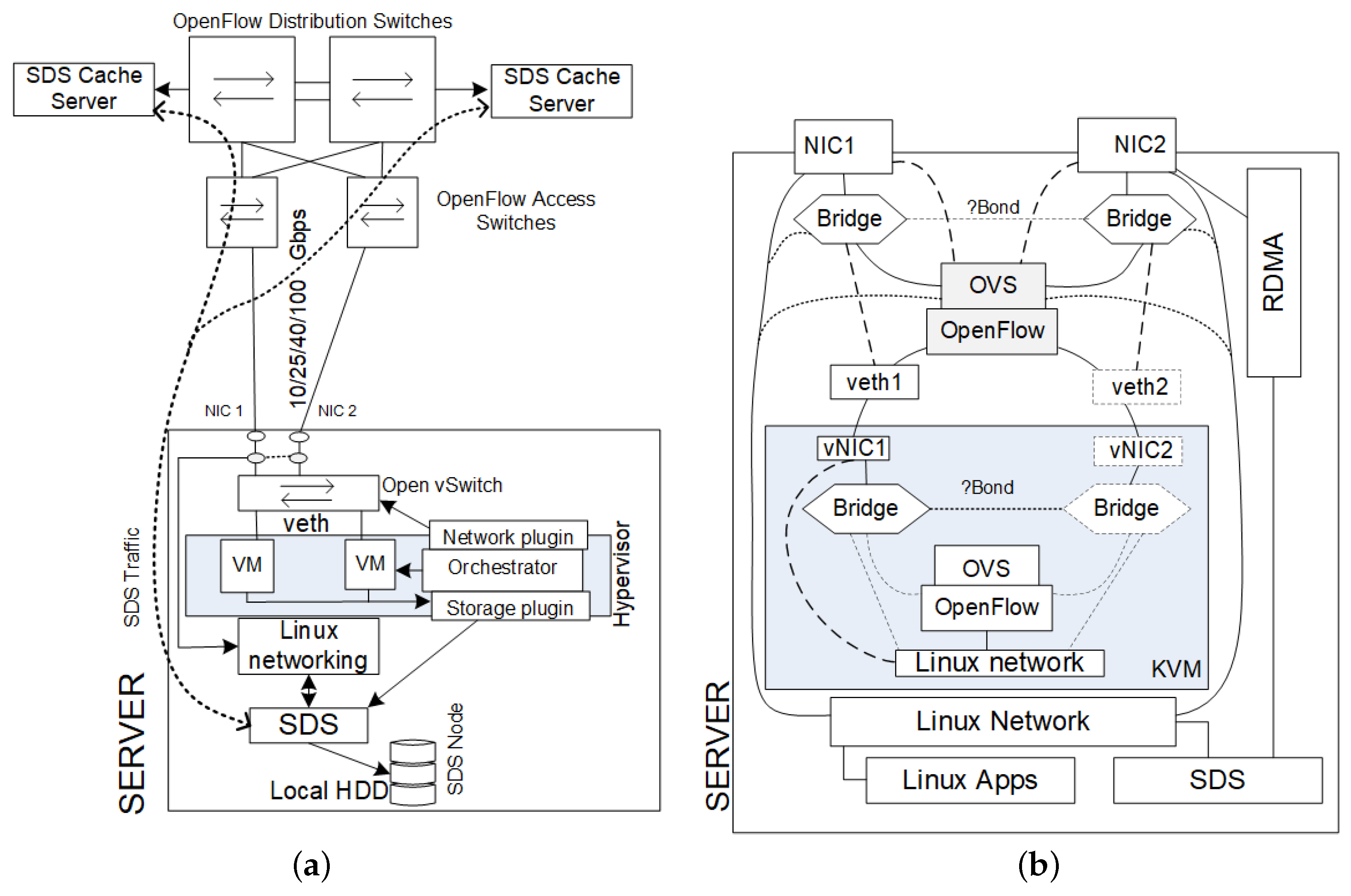

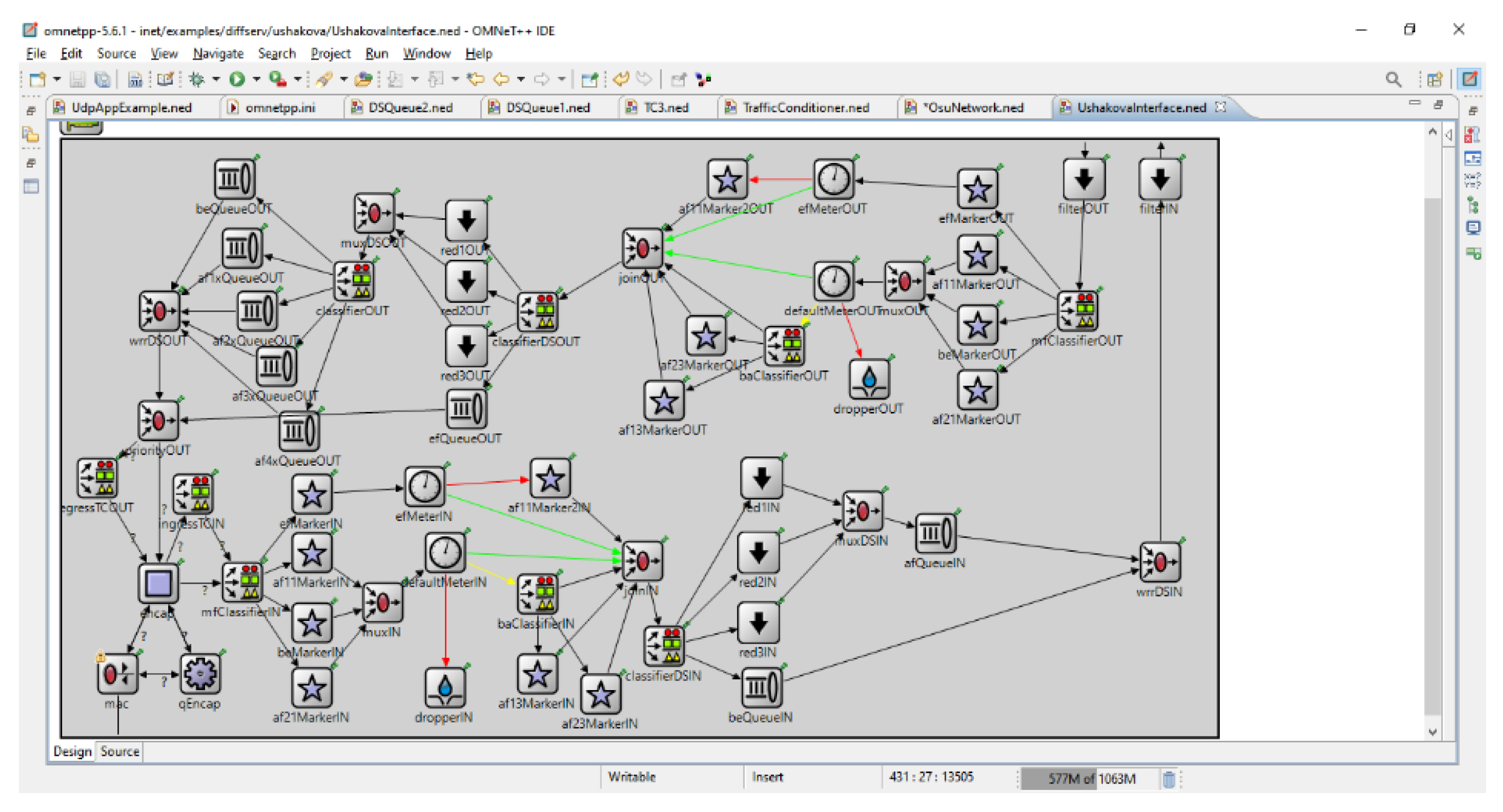

2. Theoretical Part

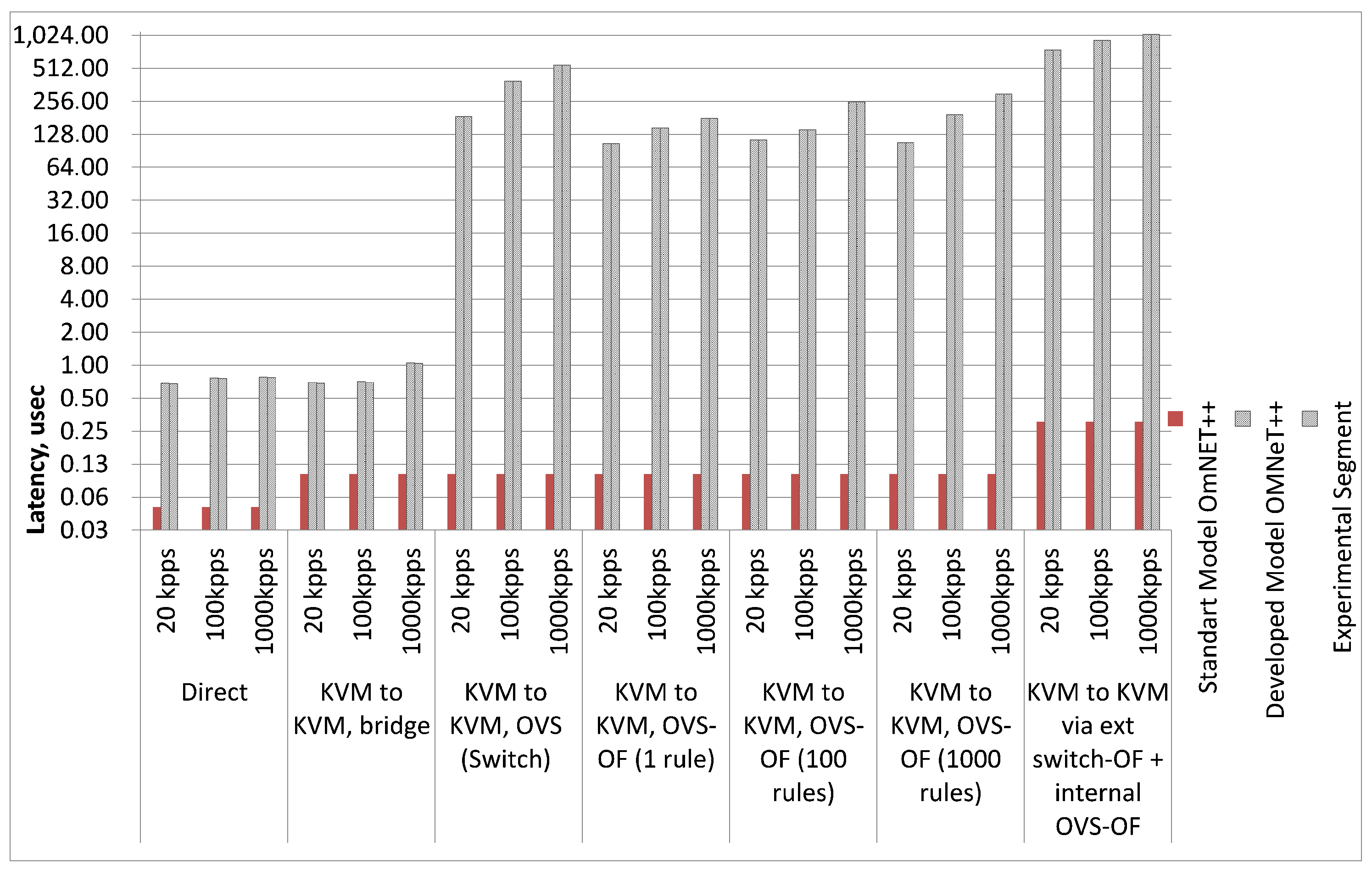

3. Experimental Research

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ushakov, Y.; Ushakova, M.; Legashev, L. Research of a Virtual Infrastructure Network with Hybrid Software-Defined Switching. Eng. Proc. 2023, 33, 52. https://doi.org/10.3390/engproc2023033052
