Unraveling Imaginary and Real Motion: A Correlation Indices Study in BCI Data †

Abstract

1. Introduction

2. Materials and Method

2.1. Dataset

2.1.1. Participants and Data Collection

2.1.2. Experimental Design

2.1.3. Preprocessing

2.2. Computation

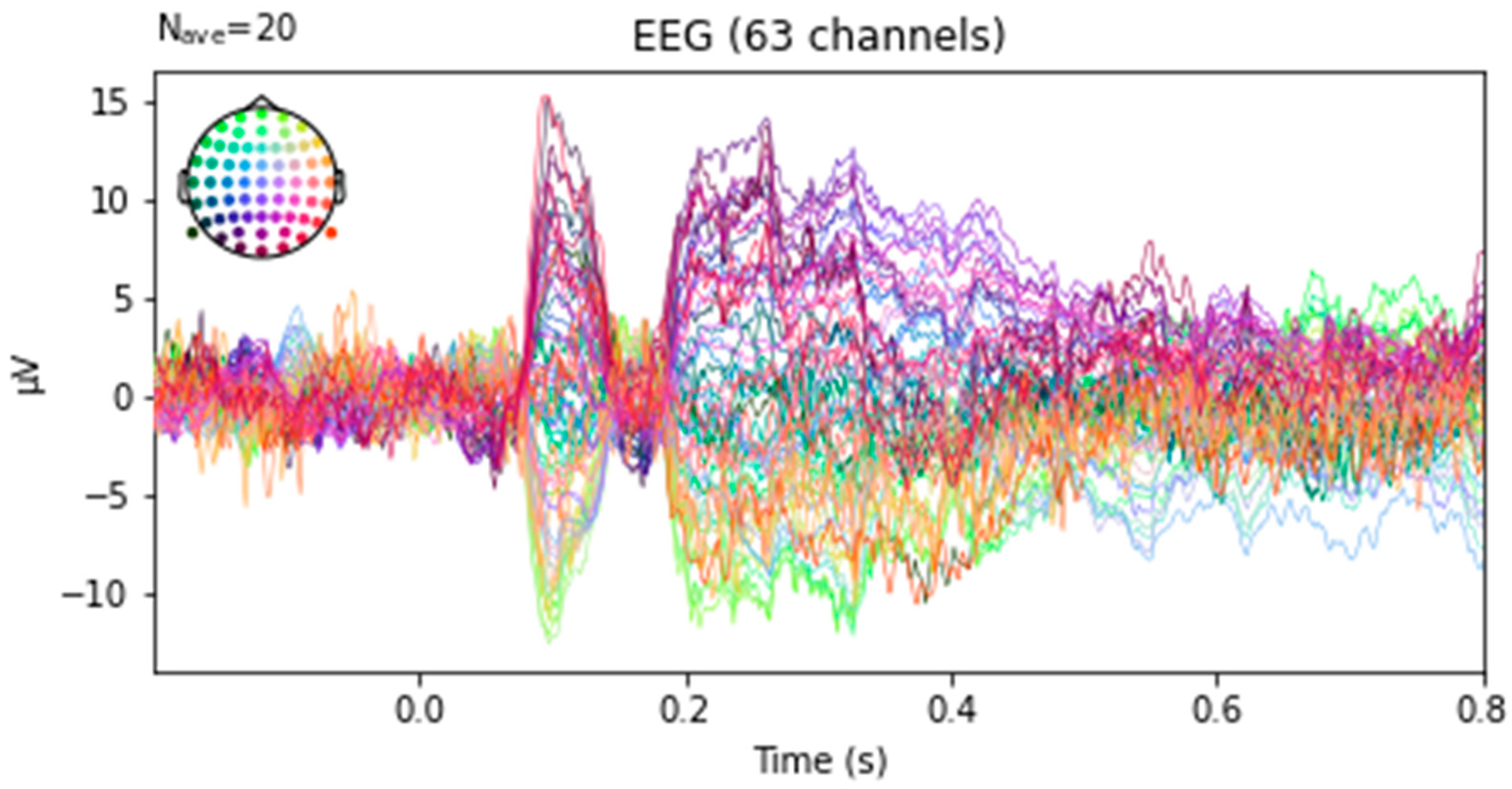

2.2.1. ERP Creation and Feature Extraction

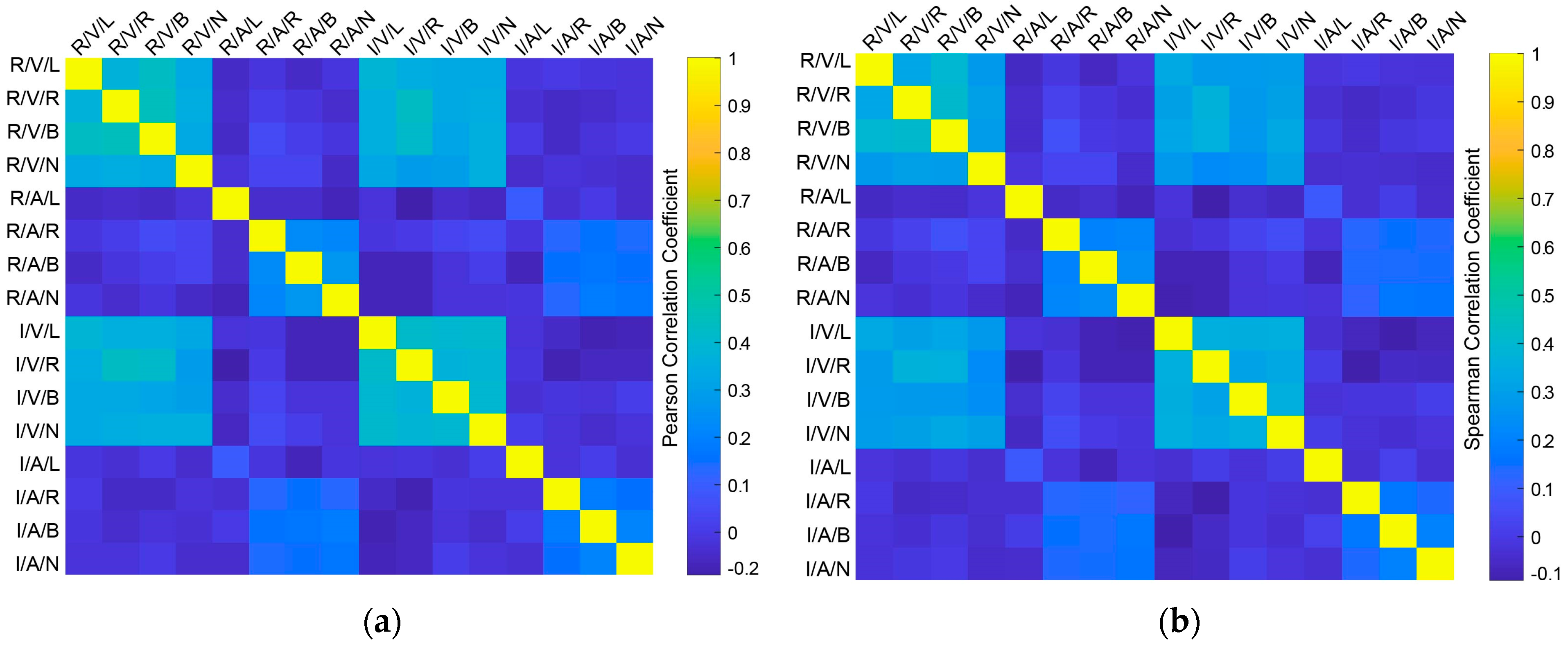

2.2.2. Correlation Computation

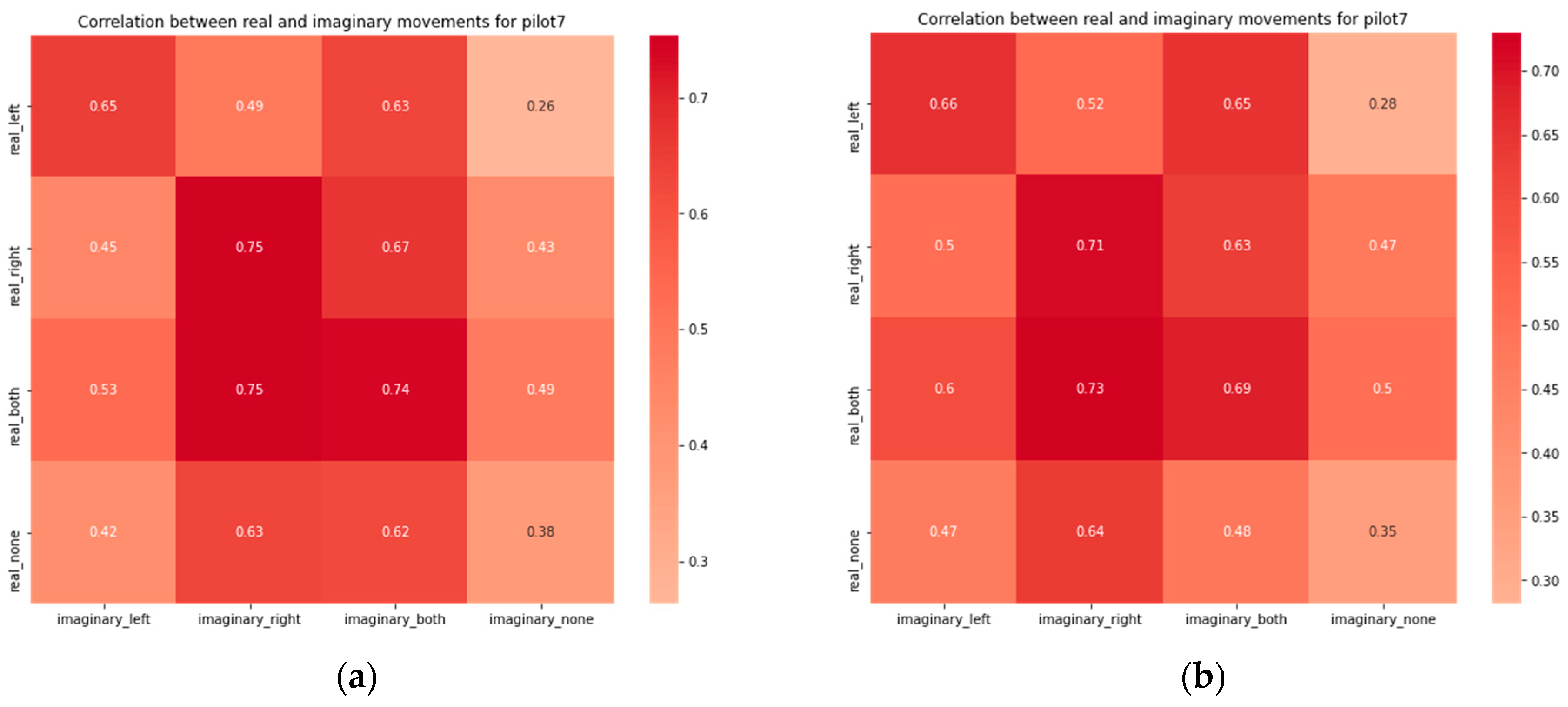

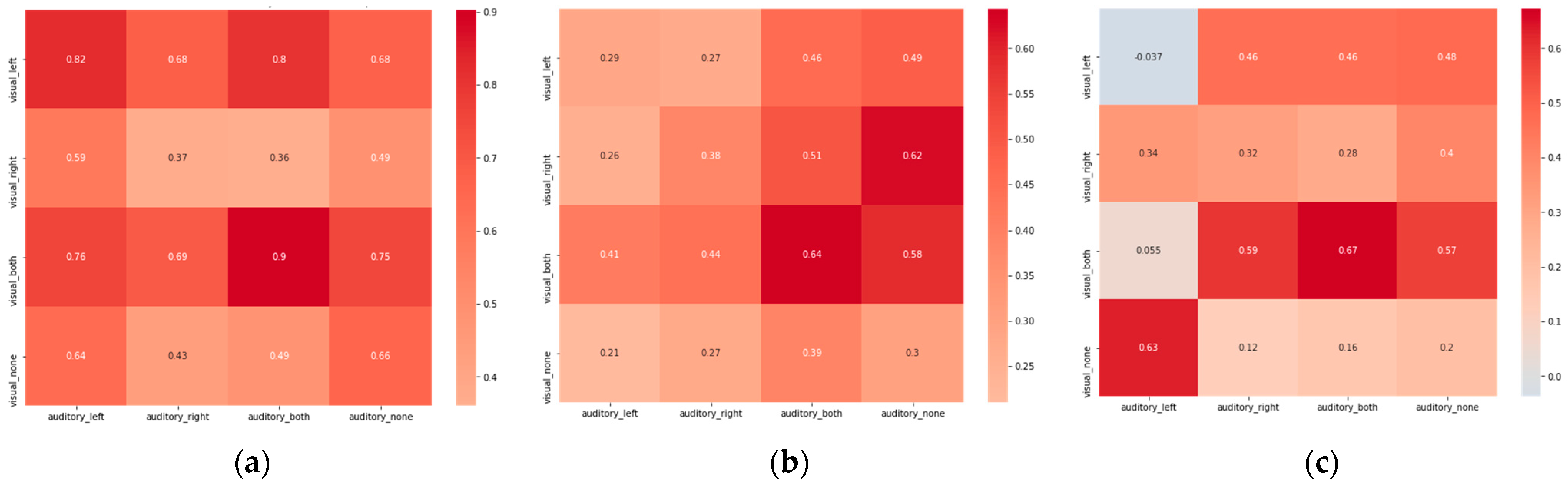

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Miloulis, S.T.; Zorzos, I.; Kakkos, I.; Karampasi, A.; Ventouras, E.C.; Kalatzis, I.; Papageorgiou, C.; Asvestas, P.; Matsopoulos, G.K. Unraveling Imaginary and Real Motion: A Correlation Indices Study in BCI Data. Eng. Proc. 2023, 50, 11. https://doi.org/10.3390/engproc2023050011
