Abstract

Human emotions trigger profound changes within the brain, leading to distinctive patterns of neural activity and behaviour. This study harnesses electroencephalogram (EEG) data to investigate in depth the impact of emotions, given their significance in our daily lives. The applications of EEG signals span a range of domains, from the classification of motor imagery activities to the control of advanced prosthetic devices. However, EEG data are challenging to work with because they are inherently noisy and non-stationary, which makes it imperative to extract salient features for classification. In this paper, we introduce a novel and effective framework based on Grey Wolf Optimisation (GWO) for the recognition and interpretation of EEG signals from an emotion dataset. The core objective of our research is to unravel the neural signatures that underlie emotional experiences and to pave the way for more nuanced emotion recognition systems. To measure the efficacy of the proposed framework, we conducted experiments using EEG recordings from a cohort of 32 participants. During the experiments, participants were exposed to emotionally charged video stimuli, each lasting one minute. The collected EEG data were then analysed, and a support vector machine (SVM) classifier was employed to categorise the extracted EEG features. Our results underscore the potential of the GWO-based framework, which achieved an accuracy of 93.32% in identifying and categorising emotional states. This research not only provides valuable insights into the neural underpinnings of emotions but also lays a foundation for the development of more sophisticated and emotionally intelligent human–computer interaction systems.

1. Introduction

Emotion identification using facial expressions, speech, body motions, and image processing has been a major area of study in the past few decades [1]. More recently, however, psychologists have come to agree that a person’s brain waves are a good indicator of how they feel “inside”. How we feel about the world and other people is reflected in how actively or passively we interact with them. The question is whether feelings are related to the body or, more specifically, to the central nervous system. Researchers’ attention is now being directed towards the brain and spinal cord [2]. Several types of neuroimaging techniques, including fMRI, MRI, and EEG, have been employed to back up their assertions. Researchers claim that the EEG technique provides the most in-depth understanding of the human brain and may be used to diagnose neurological conditions, measure mental effort, and build recognition models [3]. Because EEG can measure even subtle changes in brain activity, it is a useful technique for investigating emotional diversity, which covers a wide range of feelings, including delight, sadness, tranquillity, contempt, worry, exhaustion, and astonishment. One difficulty for an EEG-based emotion detection system is determining how to classify the wide variety of emotional responses people have to different stimuli. Jatupaiboon et al. [1] used the EEG power spectral density and an SVM classifier to classify emotions. Only two states of mind, happiness and sadness, were used in their study of 10 participants. In another study [2], images from the International Affective Picture System were used to elicit emotional responses. Quadratic discriminant analysis classified five different emotions with 36.8% accuracy.

It has been shown that EEG signals, which are produced when the brain processes emotions, can be used to determine how someone is feeling. The authors extracted separate frequency bands to identify feelings using discrete Fourier and wavelet transform techniques. The results indicate that the radial basis function performs better when classifying the gamma and beta wave bands. Alpha and beta waves from 15 participants were analysed for mood using spectral characteristics. The precision of the system was evaluated using the Fp1, Fp2, F3, and F4 channels.

Feature vectors were constructed using spatial filters and then classified using a non-linear SVM kernel. The outcomes indicated a two-class classification accuracy of between 68% and 78%. Moreover, spatial filters are susceptible to noise and artefacts, which negatively affect data categorisation.

Kamalakkannan et al. [3] used a bipolar neural network to classify data based on parameters such as variance, mean, average power, and standard deviation. Overall, they successfully classified 44% of the data. They concluded that EEG captured information that was not available elsewhere. An EEG pattern was also detected using principal component analysis, which resulted in enhanced pattern recognition [4]. Although this approach is widely employed in the literature to choose the most critical features, the inherent noise in EEG data means that it does not yield satisfactory results. It has also been found that the features extracted can affect the accuracy of such BCI systems.

The high dimensionality of the feature space makes it hard to choose which features to use in EEG signal processing. Because there are so many features, it is not feasible to search the whole feature space exhaustively to find the best combination of features. In the past, authors have used a variety of heuristic procedures to select features. Single- and multi-objective ratings were applied to features discovered with a genetic algorithm [5]. Significant features for SVM classification of epileptic and normal participants were selected using the artificial bee colony and whale optimisation methods [6]. Feature selection from EEG has thus been performed using a number of heuristic techniques. However, because these techniques have difficulty finding a global optimum, the proposed framework makes use of GWO. Grey wolf optimisation (GWO) is an optimisation method inspired by the social hierarchy and hunting behaviour of grey wolves. It belongs to the class of metaheuristic optimisation methods, which are applied to hard optimisation problems. Important criteria when comparing GWO with other optimisation methods used in EEG (electroencephalogram) analysis include convergence speed, solution quality, robustness, and ease of implementation. Eliminating superfluous features and mitigating the curse of dimensionality are further reasons why feature selection is so important. As a result, it is crucial to choose the best features and classifiers for emotion identification. Affective computing, which focuses on the development of technology that can detect, understand, and respond to human emotions, has significant practical implications and societal significance in a number of key areas, including emotion detection for diagnosis, remote monitoring, sentiment analysis, and driver monitoring.

This work uses the grey wolf optimiser and an SVM classifier for EEG data processing and emotion categorisation. The manuscript is divided into five sections. Section 1 introduces EEG-based emotion recognition, and Section 2 reviews the literature. Section 3 presents the proposed framework and discusses each step in detail, including data acquisition, preprocessing, and feature extraction. Section 4 discusses the results and analysis, and Section 5 presents conclusions and future directions.

Contributions of This Paper

- We provide a framework for emotion recognition based on GWO and SVM methods.

- We provide a literature review based on parameters such as highlights, datasets, and accuracy.

- We analyse the performance of the proposed framework using MATLAB.

2. Materials and Methods

This section reviews the literature on emotion recognition from EEG datasets. For this review, we considered research papers published from 2019 to 2023 and compared them on parameters such as the highlights of the paper, the dataset, and the accuracy. Table 1 summarises the literature review.

Table 1.

Literature review.

3. Methodology

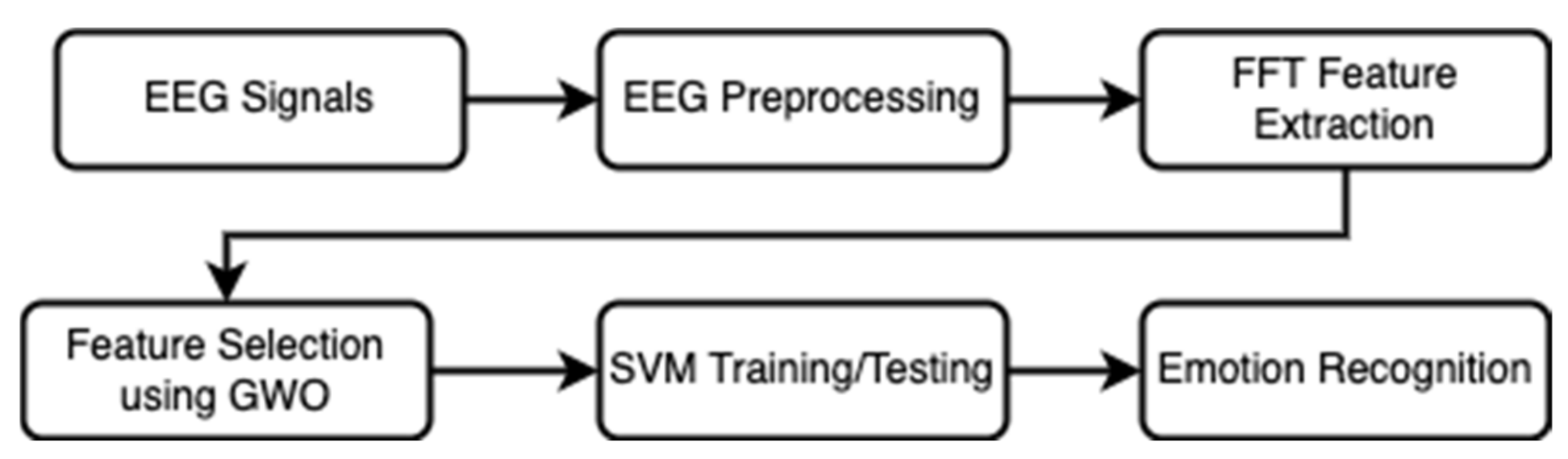

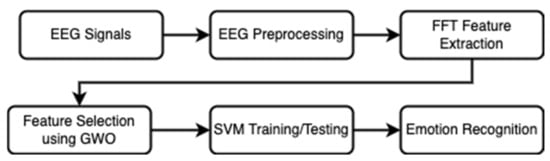

In this section, the proposed framework is presented. Understanding the background and difficulties of EEG-based emotion recognition is essential before discussing the specific contributions of the framework. The study of emotion identification using EEG brings together neuroscience, signal processing, and machine learning. Data collection, pre-processing, feature extraction, model construction, evaluation, and ethical considerations should all be part of an overarching framework for emotion recognition using EEG [15]. The field benefits from such a framework's ability to handle transdisciplinary and ethical concerns while also increasing precision, generalisation, and applicability. Such a paradigm has the potential to improve our knowledge of human emotions and to find use in areas as diverse as mental health assessment and human–computer interaction [16]. The flow chart of the proposed framework is depicted in Figure 1. Initially, EEG data are taken from the DEAP dataset for analysis [17]. The EEG data were recorded at a sampling rate of 512 Hz using thirty-two active electrodes placed according to the international 10–20 system. The DEAP dataset comprises thirty-two participants, who were stimulated with one-minute videos and music; the response of each participant was recorded and pre-processed so that features could be extracted. The most relevant of these features are then selected using the GWO algorithm. After that, an SVM classifier is used to classify the emotions, and its accuracy is used to assess the proposed framework. The choice of SVM kernel, such as linear, RBF, polynomial, or sigmoid, significantly influences accuracy in EEG-based emotion classification. For complex and nonlinear EEG–emotion relationships, the RBF kernel is often preferred, while linear kernels suit linearly separable data. Optimal kernel selection depends on the data characteristics.
EEG recordings are susceptible to various sources of noise, including muscle artefacts, eye movements, and environmental interference. Preprocessing techniques such as filtering and artefact removal algorithms were employed to mitigate their impact. Filtering removed high-frequency noise, while artefact removal algorithms detected and eliminated muscle and eye movement artefacts, ensuring cleaner EEG data for analysis. In both scientific and medical contexts, electroencephalography (EEG) has proven to be an invaluable technique that uses electrodes to capture the electrical activity of the brain. However, noise and non-stationarity in EEG signals can severely compromise data quality and interpretation. To overcome these obstacles and derive useful information from EEG signals, robust feature selection is essential.

Figure 1.

Block diagram.

3.1. EEG Signal Acquisition

EEG recordings of emotion can be acquired, processed, and stored electronically for further study and analysis; here, the DEAP dataset is used for emotion recognition. Each signal in the dataset was resampled at 128 Hz, which also helps to suppress high-frequency noise and other artefacts, for the detection of three emotional states. All three states of mind (happiness, sadness, and neutrality) are of interest here. As the detection of emotions is greatly aided by visual input, videos are used to elicit feelings. The participants were instructed to pay close attention while viewing the video clip, and their brain waves were monitored using EEG equipment as they watched it. Before receiving the stimulus, subjects were instructed to relax for one minute.
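The relationship between the 512 Hz recording rate and the 128 Hz analysis rate can be illustrated with a small downsampling sketch. This is not the procedure used to prepare the dataset; the block-averaging filter and the function name `decimate_by_averaging` are assumptions chosen only to keep the example self-contained.

```python
def decimate_by_averaging(samples, factor):
    """Downsample by averaging consecutive blocks of `factor` samples.

    Block averaging acts as a crude low-pass (anti-aliasing) filter; a real
    pipeline would normally use a properly designed FIR or IIR filter.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    usable = len(samples) - len(samples) % factor  # drop an incomplete tail block
    return [
        sum(samples[i:i + factor]) / factor
        for i in range(0, usable, factor)
    ]


ORIGINAL_RATE_HZ = 512  # recording rate stated in Section 3
TARGET_RATE_HZ = 128    # analysis rate stated in Section 3.1
factor = ORIGINAL_RATE_HZ // TARGET_RATE_HZ

# One second of synthetic data standing in for a single EEG channel.
one_second = [float(i % 8) for i in range(ORIGINAL_RATE_HZ)]
downsampled = decimate_by_averaging(one_second, factor)
```

With these rates the decimation factor is 4, so one second of data shrinks from 512 to 128 samples.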

3.2. EEG Preprocessing

MATLAB (Version 9.5 R2018a) was used to analyse the collected EEG data. A notch filter is applied to eliminate power-line interference. Other artefacts, such as eye blinks and head movements, can be reduced with the help of a Fourier transform. Then, for each participant and each channel, five frequency sub-bands (delta, theta, alpha, beta, and gamma) were extracted from the filtered EEG data. The GWO algorithm subsequently selected specific EEG features, such as spectral power in different frequency bands as well as coherence and asymmetry measures between brain regions.
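As an illustration of the notch-filtering step, the following is a minimal sketch of a second-order IIR notch filter written in plain Python rather than MATLAB. The 50 Hz line frequency, the quality factor of 30, and all function names are assumptions; the paper does not state the filter design that was actually used.

```python
import math


def notch_coefficients(notch_hz, sample_rate_hz, q_factor=30.0):
    """Second-order IIR notch (biquad) coefficients, normalised so a[0] == 1."""
    w0 = 2.0 * math.pi * notch_hz / sample_rate_hz
    alpha = math.sin(w0) / (2.0 * q_factor)
    a0 = 1.0 + alpha
    b = [1.0 / a0, -2.0 * math.cos(w0) / a0, 1.0 / a0]
    a = [1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0]
    return b, a


def apply_biquad(samples, b, a):
    """Filter `samples` with a direct-form I biquad section."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


def rms(samples):
    """Root-mean-square amplitude of a sequence."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


FS = 128        # sampling rate from Section 3.1
LINE_HZ = 50.0  # assumed mains frequency (60 Hz in some regions)
t = [n / FS for n in range(8 * FS)]
line_noise = [math.sin(2 * math.pi * LINE_HZ * ti) for ti in t]
alpha_wave = [math.sin(2 * math.pi * 10.0 * ti) for ti in t]

b, a = notch_coefficients(LINE_HZ, FS)
# Compare RMS over the final two seconds, after the filter transient has decayed.
line_rms_after = rms(apply_biquad(line_noise, b, a)[-2 * FS:])
alpha_rms_after = rms(apply_biquad(alpha_wave, b, a)[-2 * FS:])
```

In this sketch the synthetic 50 Hz component is strongly attenuated, while a 10 Hz component in the alpha range passes almost unchanged. A narrower or wider notch can be obtained by raising or lowering `q_factor`.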

3.3. Features and Feature Selection

To categorise the emotions, we calculated eight features, including the mean, standard deviation, variance, entropy, peak-to-peak amplitude, band power, and kurtosis. We then employed the GWO algorithm to select the best features.
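The named features can be computed for a single channel segment as in the sketch below. Several details are assumptions, since the paper does not define them: entropy is taken as the Shannon entropy of a 16-bin amplitude histogram, kurtosis as the non-excess fourth standardised moment, and band power as the mean-square power in an 8–13 Hz band obtained from a direct DFT. The function names are illustrative.

```python
import cmath
import math


def histogram_entropy(samples, bins=16):
    """Shannon entropy (bits) of the amplitude histogram."""
    lo, hi = min(samples), max(samples)
    if hi == lo:
        return 0.0
    counts = [0] * bins
    for s in samples:
        idx = min(int((s - lo) / (hi - lo) * bins), bins - 1)
        counts[idx] += 1
    n = len(samples)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def band_power(samples, sample_rate_hz, low_hz, high_hz):
    """Mean-square power carried by DFT bins with frequency in [low_hz, high_hz)."""
    n = len(samples)
    total = 0.0
    for k in range(1, n // 2):  # skip the DC and Nyquist bins
        freq = k * sample_rate_hz / n
        if low_hz <= freq < high_hz:
            coeff = sum(
                samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
            )
            total += 2.0 * abs(coeff) ** 2 / n ** 2
    return total


def extract_features(samples, sample_rate_hz, band=(8.0, 13.0)):
    """Return the seven named features for one channel segment."""
    n = len(samples)
    mean = sum(samples) / n
    variance = sum((s - mean) ** 2 for s in samples) / n  # population variance
    fourth_moment = sum((s - mean) ** 4 for s in samples) / n
    return {
        "mean": mean,
        "std": math.sqrt(variance),
        "variance": variance,
        "entropy": histogram_entropy(samples),
        "peak_to_peak": max(samples) - min(samples),
        "band_power": band_power(samples, sample_rate_hz, *band),
        "kurtosis": fourth_moment / variance ** 2 if variance > 0 else 0.0,
    }


# One second of a synthetic 10 Hz sine sampled at 128 Hz.
FS = 128
sine = [math.sin(2 * math.pi * 10.0 * n / FS) for n in range(FS)]
features = extract_features(sine, FS)
```

For a unit-amplitude sine the variance and the alpha-band power are both 0.5, which gives a quick sanity check on the implementation before it is applied to real recordings.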

Grey Wolf Optimisation (GWO) Algorithm

GWO is one such metaheuristic optimisation approach. It is inspired by the social structure and hunting techniques of grey wolves, and its core idea is that, like wolves in a pack, the search agents benefit from cooperating with one another. Feature selection in machine learning and data mining are only two examples of how this metaheuristic algorithm might be put to use. Let us take a closer look at how the GWO algorithm works and why it is a good fit for enhancing feature selection in EEG (electroencephalogram) data.

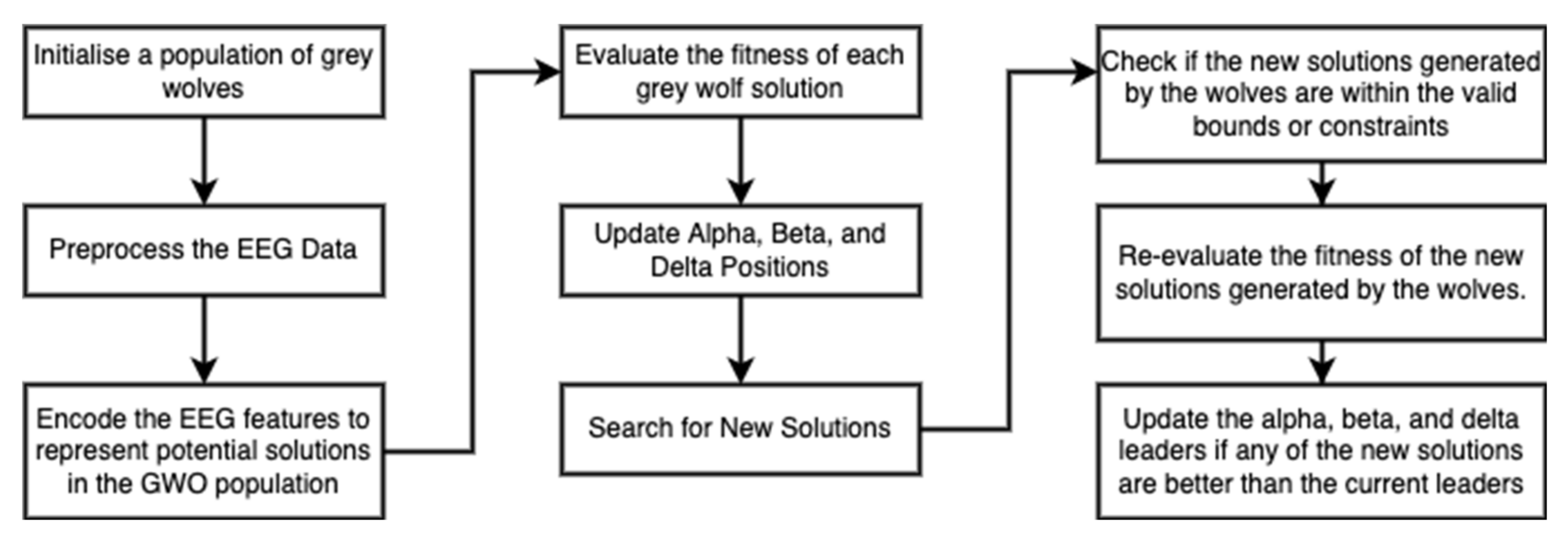

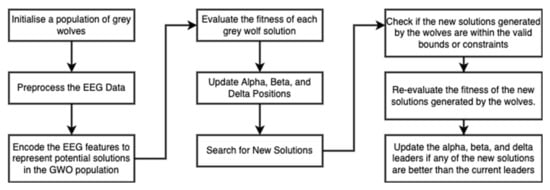

GWO differs from many other metaheuristics in that the search is guided jointly by the three best solutions found so far. Figure 2 depicts the workflow of GWO, which consists of the following steps:

Figure 2.

GWO algorithm.

- Set the population size, that is, the number of grey wolves (search agents).

- Initialise the positions of the search agents within the lower and upper bounds of the search space, so that the wolves can start searching for and encircling the prey. The maximum number of iterations is specified, together with the values used in Equations (1)–(4).

- Evaluate the fitness of each candidate solution. The fitness value represents the relative distance between a wolf and its prey. The three fittest wolves are designated alpha (α), beta (β), and delta (δ), and the remaining wolves adjust their pursuit according to Equations (6)–(8).

- Update the position of each search agent iteratively using Equations (8)–(11).

- Repeat steps 3–5 until the wolves are within striking distance of their target.

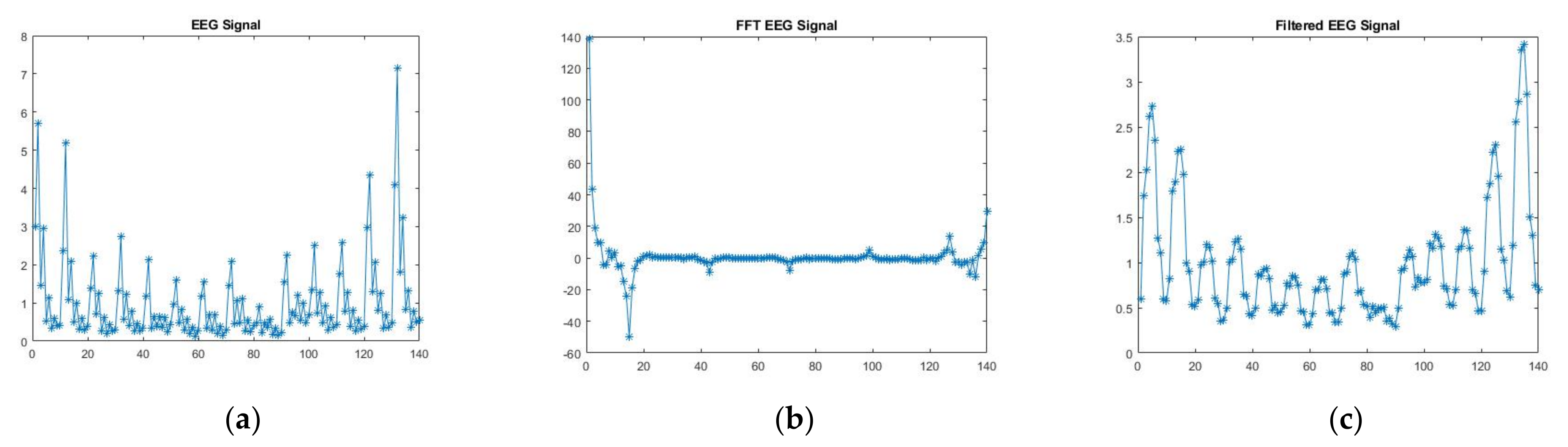

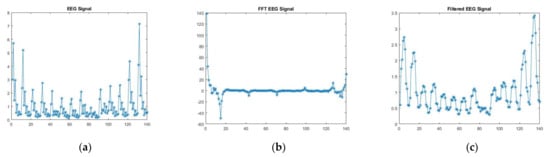

- The process terminates once a predetermined number of iterations has been completed. The Input_EEG Signal, FFT_EEG Signal, and processed Clean_EEG Signal are depicted in Figure 3a–c.
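The steps above can be sketched in a few lines of Python. This is a minimal version of the canonical continuous GWO update, not the authors' MATLAB implementation: the function `grey_wolf_optimise`, the thresholding in `to_mask`, and the toy cost function with its set of "informative" features are all hypothetical and serve only to show how a wolf position can encode a binary feature mask.

```python
import random


def grey_wolf_optimise(fitness, dim, lower, upper, n_wolves=10, n_iter=100, seed=0):
    """Minimise `fitness` over [lower, upper]^dim with the canonical GWO update."""
    if n_wolves < 3:
        raise ValueError("GWO needs at least three wolves (alpha, beta, delta)")
    rng = random.Random(seed)
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_wolves)]
    leaders = []  # best three positions found so far: alpha, beta, delta

    for it in range(n_iter):
        leaders = sorted(leaders + wolves, key=fitness)[:3]
        a = 2.0 * (1.0 - it / n_iter)  # decreases linearly from 2 towards 0
        updated = []
        for wolf in wolves:
            position = []
            for d in range(dim):
                pulls = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    distance = abs(C * leader[d] - wolf[d])
                    pulls.append(leader[d] - A * distance)
                # Average the three leader estimates and clamp to the bounds.
                position.append(min(max(sum(pulls) / 3.0, lower), upper))
            updated.append(position)
        wolves = updated

    leaders = sorted(leaders + wolves, key=fitness)[:3]
    return leaders[0], fitness(leaders[0])


def to_mask(position, threshold=0.5):
    """Turn a continuous position into a binary feature mask."""
    return [1 if value > threshold else 0 for value in position]


INFORMATIVE = {0, 2, 5}  # hypothetical indices of useful features


def toy_cost(position):
    """Toy stand-in for classifier error: missed useful features plus a size penalty."""
    mask = to_mask(position)
    missed = sum(1 for i in INFORMATIVE if not mask[i])
    return missed + 0.1 * sum(mask)


# Sanity check on a smooth function, then a toy eight-feature selection run.
sphere_best, sphere_score = grey_wolf_optimise(
    lambda x: sum(v * v for v in x), dim=5, lower=-10.0, upper=10.0,
    n_wolves=20, n_iter=200, seed=1,
)
best_position, best_cost = grey_wolf_optimise(
    toy_cost, dim=8, lower=0.0, upper=1.0, n_wolves=12, n_iter=50, seed=0,
)
selected = [i for i, bit in enumerate(to_mask(best_position)) if bit]
```

In a real pipeline the toy cost would be replaced by a measure of classification error obtained from the SVM on the selected feature subset, which is considerably more expensive to evaluate.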

Figure 3. (a) Input_EEG Signal; (b) FFT_EEG Signal; (c) Clean_EEG Signal.


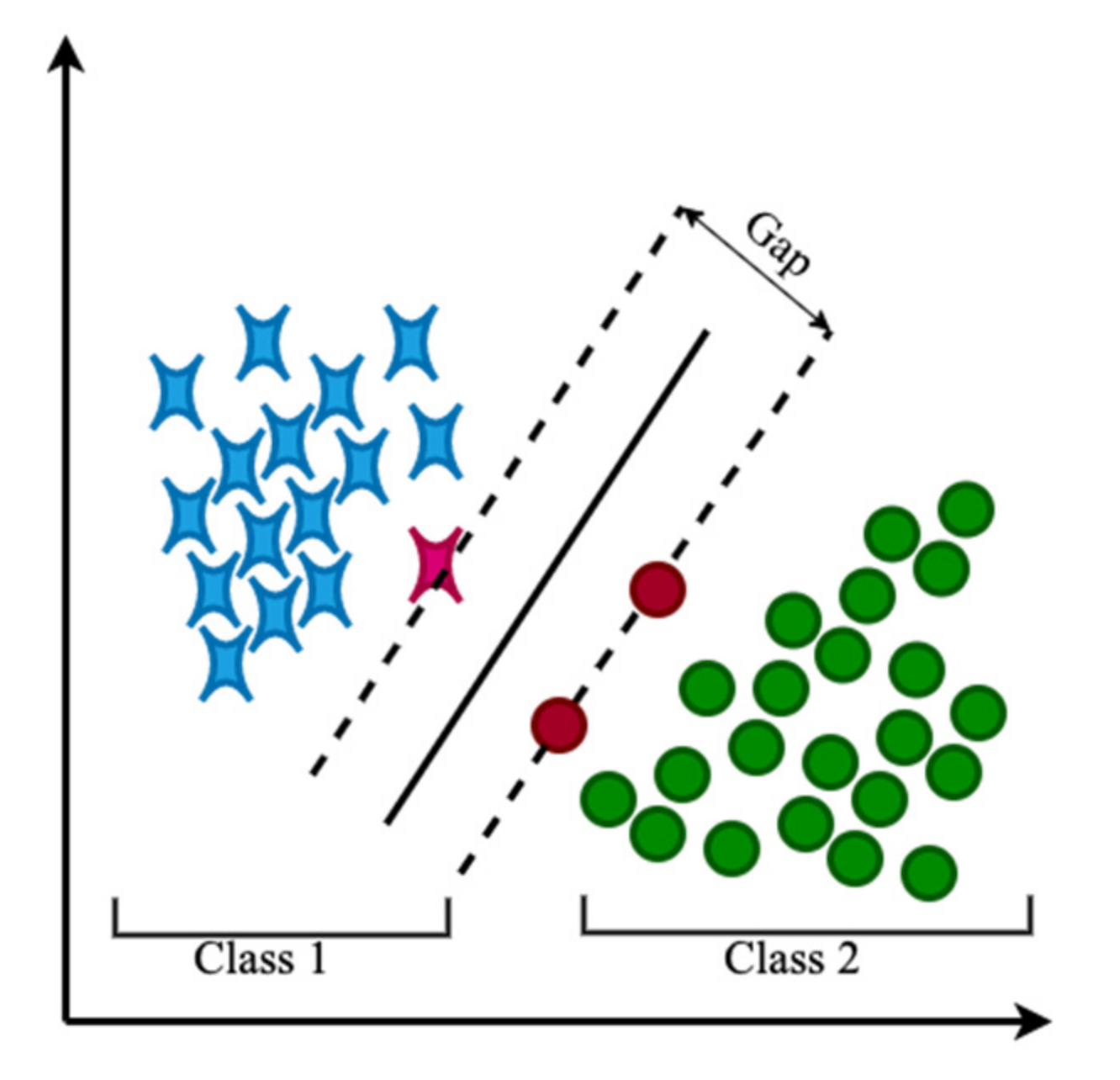

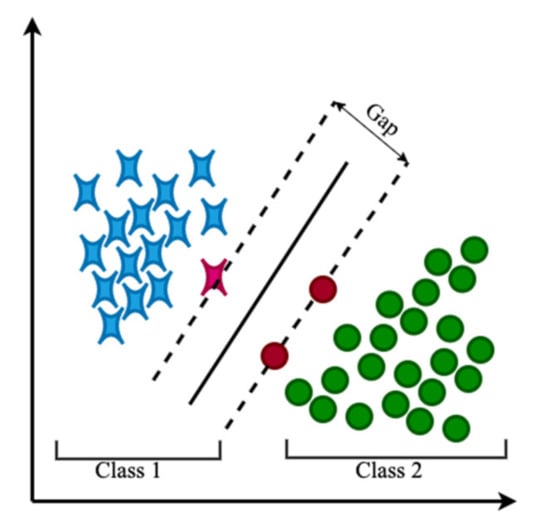

3.4. Classification

We use an SVM classifier to perform the classification. It uses an optimal hyperplane to separate a dataset into two different classes, as depicted in Figure 4, in which the emotions are divided into two classes, happy and sad. Green denotes the sad emotion, while blue denotes the happy emotion. The choice of kernel in a support vector machine (SVM) classifier can have a substantial effect on the classification accuracy of emotions: the kernel determines how the SVM maps the input data into a higher-dimensional space, where it can classify data points more effectively. The distance between the hyperplane and the nearest data points of each class is called the margin or gap. The data points of both classes that lie closest to the hyperplane are termed support vectors, and the hyperplane should be chosen so that the margin is maximised.
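The notions of kernel, margin, and support vector can be made concrete with a small sketch. It does not train an SVM: the hyperplane parameters `w` and `b` and the two-dimensional "happy" and "sad" points are hand-picked, hypothetical values used only to show how the quantities are computed.

```python
import math


def linear_kernel(x, y):
    """Inner product of two feature vectors."""
    return sum(xi * yi for xi, yi in zip(x, y))


def rbf_kernel(x, y, gamma=0.5):
    """Radial basis function kernel exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return math.exp(-gamma * sq_dist)


def decision_value(w, b, x):
    """Signed score of x relative to the hyperplane w.x + b = 0."""
    return linear_kernel(w, x) + b


def margin_width(w):
    """Width of the gap between the margin hyperplanes w.x + b = +1 and -1."""
    return 2.0 / math.sqrt(linear_kernel(w, w))


# Hypothetical toy data and a hand-picked separating hyperplane x1 = 2.
w, b = [1.0, 0.0], -2.0
happy = [[3.0, 1.0], [3.0, 3.0]]
sad = [[1.0, 1.0], [0.0, 1.0]]

happy_scores = [decision_value(w, b, p) for p in happy]
sad_scores = [decision_value(w, b, p) for p in sad]
# Points lying exactly on a margin hyperplane act as support vectors.
support_vectors = [p for p in happy + sad if abs(abs(decision_value(w, b, p)) - 1.0) < 1e-12]
gap = margin_width(w)
```

Positive scores fall on the "happy" side and negative scores on the "sad" side. Replacing `linear_kernel` with `rbf_kernel` in a trained classifier is what allows a curved decision boundary in the original feature space.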

Figure 4.

Support vector machine system.

4. Results and Discussion

The proposed framework is implemented using MATLAB R2018a. To analyse its performance, a real EEG emotion dataset is taken from the DEAP dataset.

Performance Metrics

- Accuracy: It represents the overall percentage of accurate predictions in relation to the entire number of predictions.

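- Confusion matrix: A confusion matrix, also known as an error matrix, is a table used in machine learning and statistics to summarise the performance of a classifier. Each cell counts how often samples of one actual class were assigned to a given predicted class, so both the correct classifications and the confusions between classes can be read directly from the matrix.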
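Both metrics can be computed as in the following sketch. The label lists are made-up toy values, not results from the study, and the function names are illustrative; rows are taken to index the actual class and columns the predicted class.

```python
def confusion_matrix(actual, predicted, labels):
    """Rows index the actual class, columns the predicted class."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix


def accuracy(matrix):
    """Share of all predictions that fall on the diagonal."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_class_rate(matrix):
    """Fraction of each actual class that was predicted correctly."""
    return [row[i] / sum(row) for i, row in enumerate(matrix)]


# Hypothetical labels for eight trials.
LABELS = ["neutral", "happy", "sad"]
actual = ["neutral", "neutral", "happy", "happy", "happy", "sad", "sad", "sad"]
predicted = ["neutral", "neutral", "happy", "sad", "happy", "sad", "sad", "happy"]

matrix = confusion_matrix(actual, predicted, LABELS)
overall = accuracy(matrix)
rates = per_class_rate(matrix)
```

Reading the matrix row by row shows which emotions are confused with one another, information that a single accuracy figure hides.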

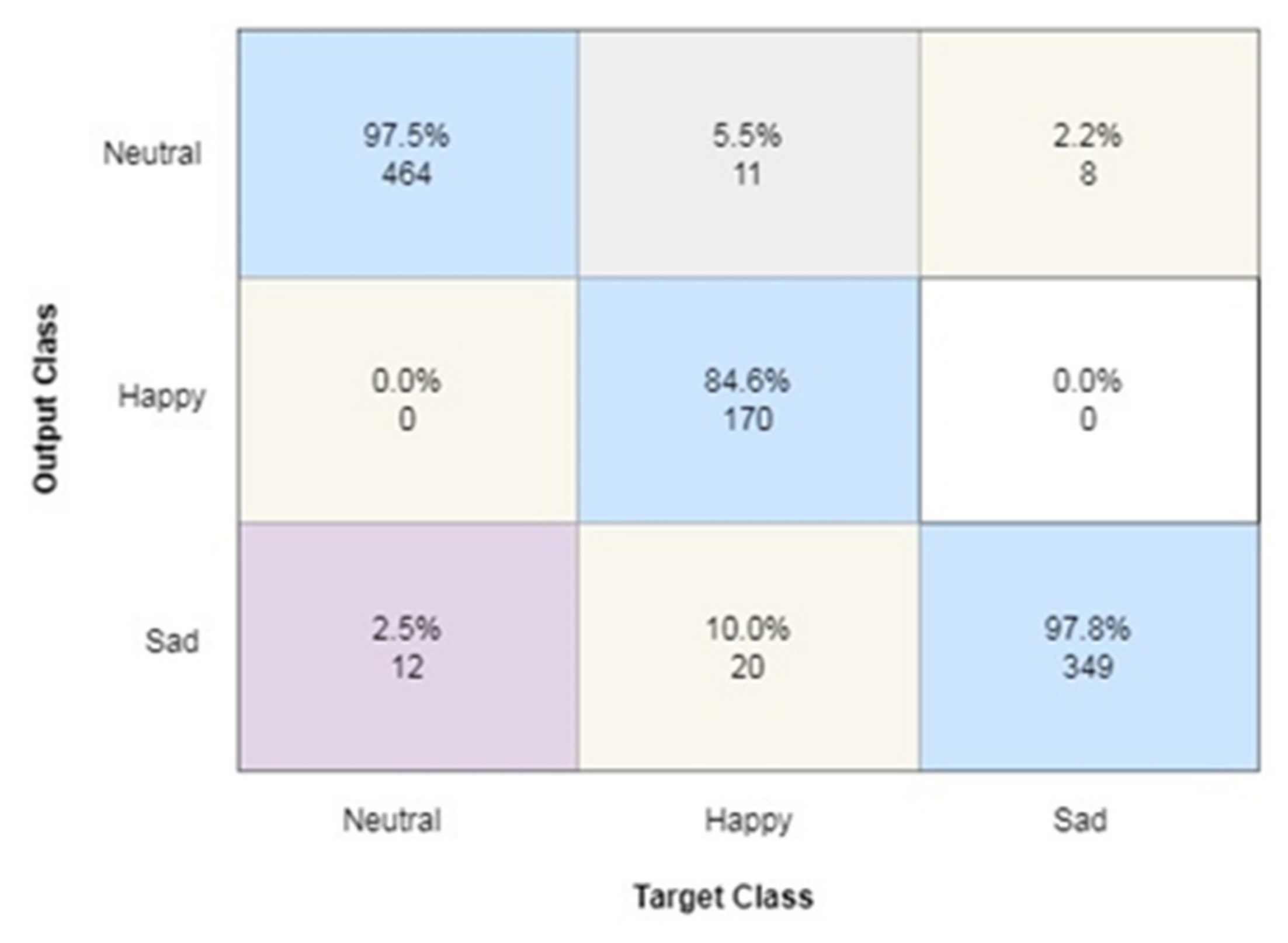

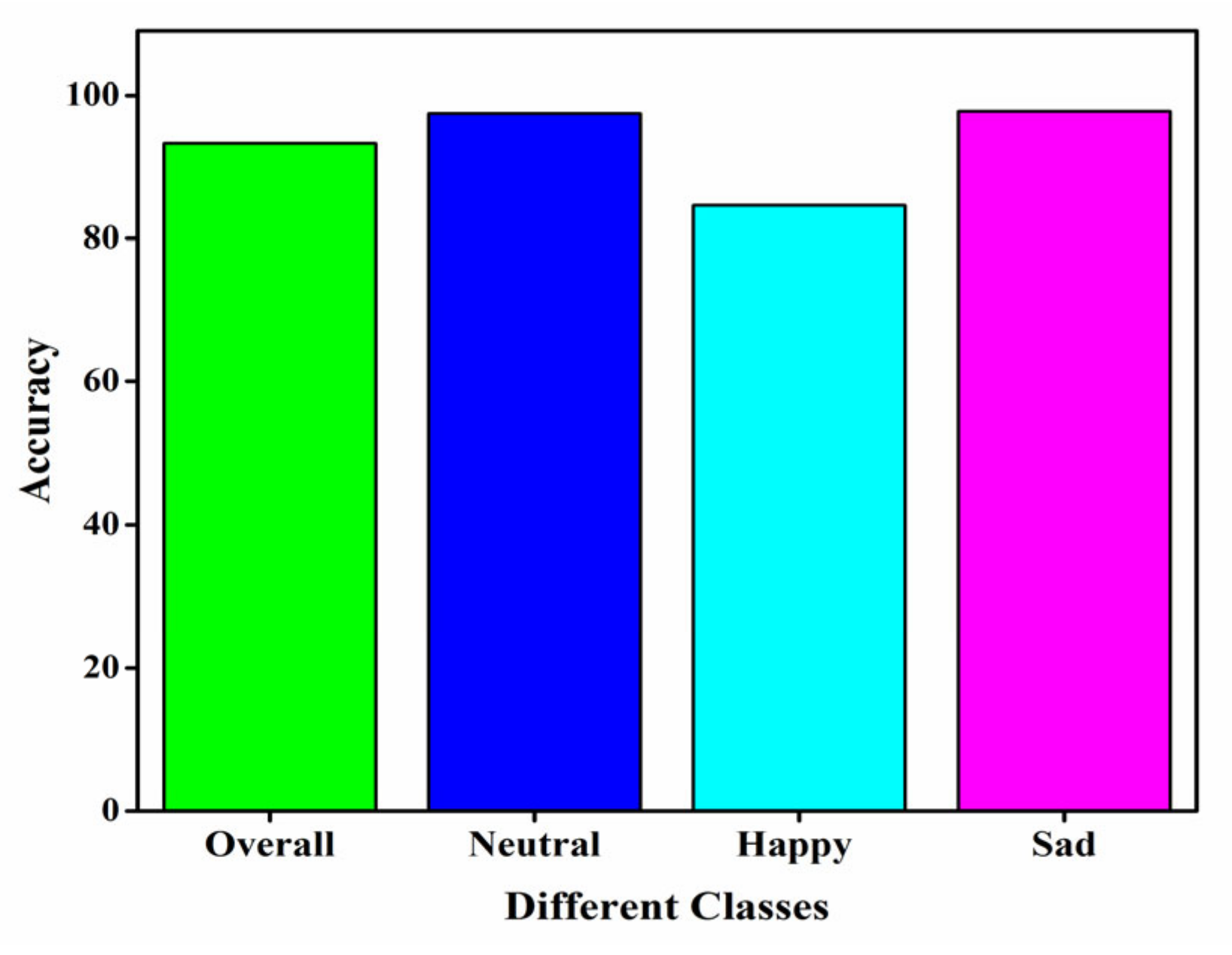

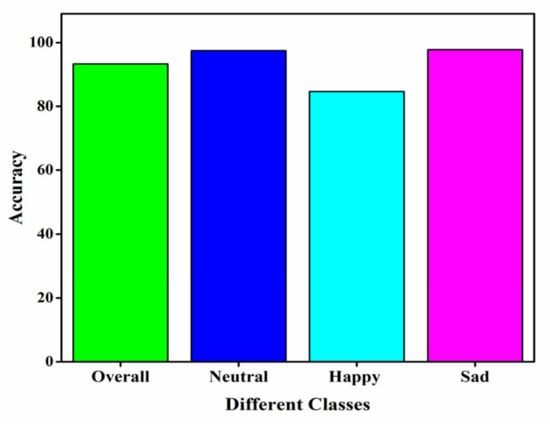

Discussion: An analysis of variance showed that emotions had a statistically significant effect. Eight feature vectors and 700 iterations were input into GWO, with the wolves represented as binary numbers. On average, a set of five features was required to reach the target fitness level. The sample size is 1022 data points. The selected features were then fed to the SVM classifier, and 5-fold cross-validation was carried out. The classifier was trained using 80% of the data and then tested with the remaining 20%. Classification rates for the neutral, happy, and sad categories are 97.5, 84.6, and 97.8 percent, respectively. For all classes combined, an average accuracy of 93.32% was achieved. Figure 5 depicts the confusion matrix, which summarises the classification results for each class in the dataset, while Figure 6 displays the accuracy for each class. A 93.32% accuracy in EEG-based emotion recognition is promising, but real-world use must also take false positives and false negatives into account. High accuracy indicates good overall performance, but false positives (incorrectly identifying an emotion) and false negatives (missing actual emotions) can impact user experience. In applications such as human–computer interaction or mental health monitoring, minimising false results is critical to ensure system reliability and user trust.
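Two of the figures reported above can be cross-checked with simple arithmetic. The sketch assumes that the 80%/20% split corresponds to the folds of the 5-fold cross-validation and that the overall accuracy is the unweighted mean of the three per-class rates; neither assumption is stated explicitly in the text, and the function name `kfold_sizes` is illustrative.

```python
def kfold_sizes(n_samples, k):
    """Sizes of the k held-out folds when n_samples is split as evenly as possible."""
    base, extra = divmod(n_samples, k)
    return [base + 1 if i < extra else base for i in range(k)]


N_SAMPLES = 1022  # sample size reported in the discussion
K = 5             # number of cross-validation folds

test_sizes = kfold_sizes(N_SAMPLES, K)
test_fractions = [size / N_SAMPLES for size in test_sizes]

# Per-class classification rates reported in the discussion (percent).
class_rates = {"neutral": 97.5, "happy": 84.6, "sad": 97.8}
macro_average = sum(class_rates.values()) / len(class_rates)
```

Each fold holds out 204 or 205 samples, which is about 20% of the data, and the unweighted mean of the class rates is about 93.3%, in line with the reported 93.32% up to rounding of the per-class figures.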

Figure 5.

Confusion matrix.

Figure 6.

Accuracy with different classes.

The findings are compared with earlier research. According to another study, emotions may be recognised by an SVM classifier with 78% accuracy [4]. A maximum accuracy of 49.63% was achieved with five subjects. An emotion detection system that makes use of two frontal channels (Fp1 and Fp2) has also been proposed. The average accuracy for the same stimulus over 32 subjects and two emotions was 75.18%. Compared with previously published work, the proposed GWO-based method performs better on a dataset consisting of 32 subjects, 14 channels, and 3 emotions.

5. Conclusions

As human–machine systems and automation technologies advance, the relevance of emotion recognition in the HCI sector has increased. In recent years, the field of affective computing has paid increasing attention to BCI emotion identification based on EEG data. The development of affordable and user-friendly BCI devices has prompted a plethora of studies. In this paper, we provide a framework for emotion recognition that uses the GWO algorithm for feature selection and an SVM for the categorisation of EEG records related to three emotions. Our proposed model has been shown to improve classification accuracy over prior work while employing fewer features, selected by GWO, to categorise EEG data. The classifier was trained using 80% of the data and then tested with the remaining 20%. Classification rates for the neutral, happy, and sad categories are 97.5, 84.6, and 97.8 percent, respectively. For all classes combined, an average accuracy of 93.32% was achieved. The proposed EEG-based emotion recognition framework, with its 93.32% accuracy and grey wolf optimisation feature selection, advances the field by enhancing accuracy and practicality. It has potential applications in affective computing and human–computer interaction, improving the emotional intelligence of technology.

In future work, we anticipate continuing to explore emotion recognition by expanding the types of test data available in order to improve assessments. In addition, after obtaining EEG signals from more people, self-supervised learning techniques might be utilised to create a plug-and-play EEG emotion recognition system. Approaches such as contrastive learning acquire knowledge on their own from unlabelled data. There is also a need to boost performance using 32-channel EEG devices for emotion recognition. Furthermore, including participants engaged in other forms of digital gaming would increase the sample size, allowing for a more detailed analysis of the impact of this type of stimulation. Finally, in forthcoming work, we will create a human–computer interface tool that can perform real-time emotion analysis.

Author Contributions

Conceptualization, S.D. and R.A.J.; methodology, S.D.; software, S.D.; validation, S.D. and R.A.J.; formal analysis, S.D.; investigation, S.D.; resources, S.D.; data curation, R.A.J.; writing—original draft preparation, S.D.; writing—review and editing, R.A.J.; visualization, S.D.; supervision, S.D.; project administration, S.D.; funding acquisition, R.A.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be available on DEAP datasets.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Jaswal, R.A.; Dhingra, S. EEG Signal Accuracy for Emotion Detection Using Multiple Classification Algorithm. Harbin Gongye Daxue Xuebao/J. Harbin Inst. Technol. 2022, 54, 1–12.

2. Jaswal, R.A.; Dhingra, S.; Kumar, J.D. Designing Multimodal Cognitive Model of Emotion Recognition Using Voice and EEG Signal. In Recent Trends in Electronics and Communication; Springer: Singapore, 2022; pp. 581–592.

3. Yildirim, E.; Kaya, Y.; Kiliç, F. A channel selection method for emotion recognition from EEG based on swarm-intelligence algorithms. IEEE Access 2021, 9, 109889–109902.

4. Ghane, P.; Hossain, G.; Tovar, A. Robust understanding of EEG patterns in silent speech. In Proceedings of the 2015 National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 15–19 June 2015; pp. 282–289.

5. Cîmpanu, C.; Ferariu, L.; Ungureanu, F.; Dumitriu, T. Genetic feature selection for large EEG data with commutation between multiple classifiers. In Proceedings of the 2017 21st International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 19–21 October 2017; pp. 618–623.

6. Houssein, E.H.; Hamad, A.; Hassanien, A.E.; Fahmy, A.A. Epileptic detection based on whale optimization enhanced support vector machine. J. Inf. Optim. Sci. 2019, 40, 699–723.

7. Marzouk, H.F.; Qusay, O.M. Grey Wolf Optimization for Facial Emotion Recognition: Survey. J. Al-Qadisiyah Comput. Sci. Math. 2023, 15, 1–11.

8. Alyasseri, Z.A.; Alomari, O.A.; Makhadmeh, S.N.; Mirjalili, S.; Al-Betar, M.A.; Abdullah, S.; Ali, N.S.; Papa, J.P.; Rodrigues, D.; Abasi, A.K. EEG channel selection for person identification using binary grey wolf optimizer. IEEE Access 2022, 10, 10500–10513.

9. Shahin, I.; Alomari, O.A.; Nassif, A.B.; Afyouni, I.; Hashem, I.A.; Elnagar, A. An efficient feature selection method for Arabic and English speech emotion recognition using Grey Wolf Optimizer. Appl. Acoust. 2023, 205, 109279.

10. Algarni, M.; Saeed, F.; Al-Hadhrami, T.; Ghabban, F.; Al-Sarem, M. Deep learning-based approach for emotion recognition using electroencephalography (EEG) signals using Bi-directional long short-term memory (Bi-LSTM). Sensors 2022, 22, 2976.

11. Li, S.; Lyu, X.; Zhao, L.; Chen, Z.; Gong, A.; Fu, Y. Identification of emotion using electroencephalogram by tunable Q-factor wavelet transform and binary gray wolf optimization. Front. Comput. Neurosci. 2021, 15, 732763.

12. Jasim, S.S.; Abdul Hassan, A.K.; Turner, S. Driver Drowsiness Detection Using Gray Wolf Optimizer Based on Voice Recognition. Aro-Sci. J. Koya Univ. 2022, 10, 142–151.

13. Ghosh, R.; Sinha, N.; Biswas, S.K.; Phadikar, S. A modified grey wolf optimization based feature selection method from EEG for silent speech classification. J. Inf. Optim. Sci. 2019, 40, 1639–1652.

14. Yin, Y.; Zheng, X.; Hu, B.; Zhang, Y.; Cui, X. EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl. Soft Comput. 2021, 100, 106954.

15. Padhmashree, V.; Bhattacharyya, A. Human emotion recognition based on time–frequency analysis of multivariate EEG signal. Knowl. Based Syst. 2022, 238, 107867.

16. Ozdemir, M.A.; Degirmenci, M.; Izci, E.; Akan, A. EEG-based emotion recognition with deep convolutional neural networks. Biomed. Eng./Biomed. Tech. 2021, 66, 43–57.

17. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).