Abstract

Skin diseases are a prevalent and diverse group of medical conditions that affect a significant portion of the global population. A critical difficulty is the accurate diagnosis of certain skin conditions, as many diseases share similar symptoms or appearances. In this paper, we propose a Weighted Ensemble Region-based Convolutional Network (WERCN) methodology that combines a Mask R-CNN (Mask Region-based Convolutional Neural Network) with the weighted average ensemble technique to enhance the performance of segmentation tasks. The Mask R-CNN is trained on a skin disease image dataset of dermatological pictures obtained from Kaggle and is used to segment the affected regions. The weighted average ensemble model is used to optimize the weights of the Mask R-CNN model. Performance is evaluated in terms of accuracy, precision, recall, specificity, and F1-score, for which the proposed method achieves 94.7%, 93.6%, 93.9%, 92.6%, and 93.7%, respectively. The WERCN segments the affected regions of skin images accurately, providing valuable insights to dermatologists for diagnosis and treatment planning.

1. Introduction

The accurate and timely identification of skin diseases is crucial for effective diagnosis, treatment planning, and patient care [1]. Traditional methods for skin disease diagnosis heavily rely on manual inspection and assessment by dermatologists, which can be time-consuming, subjective, and prone to inter-observer variability. In recent years, various approaches have transformed the field of medical image analysis, offering the potential to automate and enhance skin disease diagnosis [2]. One of the primary challenges in skin disease segmentation lies in the complexity and diversity of skin conditions. Different diseases exhibit distinct visual patterns, textures, and morphological characteristics, making it essential for segmentation models to be adaptive and robust [3]. The use of deep learning techniques allows these models to learn and extract meaningful features from skin images, enabling them to generalize well across different diseases and variations [4].

Deep learning models, particularly convolutional neural networks (CNNs), have demonstrated exceptional performance in various image analysis tasks, including image classification, object detection, and segmentation [5]. Skin disease segmentation, in particular, is a critical task in medical image analysis, where the goal is to precisely delineate the affected regions in skin images. By providing pixel-level masks, segmentation enables a more granular understanding of the disease extent and location, facilitating better treatment decisions and disease monitoring [6]. In this study, we explore the application of deep learning, specifically the Mask Region-based Convolutional Neural Network (Mask R-CNN) architecture, for the segmentation of skin diseases [7]. A Mask R-CNN is an extension of the Faster R-CNN object detection model that can generate both bounding boxes and instance-level segmentation masks. By utilizing the inherent strengths of a Mask R-CNN, we aim to attain accurate and fine-grained segmentation of various skin diseases [8].

To further improve the segmentation performance, we employ a weighted average ensemble of multiple Mask R-CNN models [9]. The ensemble approach combines the outputs of individual models with different characteristics, allowing us to capture diverse patterns and features present in the data. By aggregating the predictions of multiple models, we anticipate a more robust and reliable skin disease segmentation system [10].

Skin disease may be the fourth leading cause of non-fatal disease burden worldwide, yet research efforts and funding do not match the relative disability caused by skin diseases. Skin and subcutaneous diseases grew by 46.6% between 1990 and 2022, with 21 to 87% of skin diseases reported in 13 pediatric-specific datasets. The identification of skin disease is therefore necessary, and for this reason a novel method, the WERCN, is established. A weighted average (or weighted sum) ensemble is an ensemble machine learning approach that combines the predictions from multiple models, where the contribution of each model is weighted in proportion to its capability or skill. This can enhance the performance of the model by introducing diversity to reduce variance. The major contributions of this study are as follows:

- This study contributes to the advancement of automated skin disease diagnosis by utilizing state-of-the-art deep learning techniques.

- The proposed weighted average ensemble approach combines the outputs of multiple Mask R-CNN models. This ensemble strategy capitalizes on the diverse strengths of the individual models, resulting in improved segmentation performance and robustness across different skin diseases and variations.

- The proposed ensemble methodology can be easily extended to incorporate new models or adapt to diverse datasets. Its versatility makes it applicable to various clinical scenarios, providing a flexible solution for accurate skin disease segmentation.

2. Literature Survey

Khouloud et al. [11] employed convolutional neural networks (CNNs), including U-Net and DeepLab, which was developed by Samanta et al. [12], and Khan et al. [10] used Mask R-CNN. These have emerged as popular choices for their ability to learn hierarchical features from skin images, leading to the precise and pixel-level segmentation of affected regions. Comparative analyses have been conducted to assess the performance, computational efficiency, and applicability of these architectures for medical image analysis.

The studies by Cao et al. [13] have focused on expanding and curating datasets that cover a wide spectrum of skin conditions, patient demographics, and imaging modalities to ensure the generalizability and robustness of segmentation models such as those utilized by Wibowo et al. [14]. Ethical considerations have also been emphasized, with efforts to identify and mitigate potential biases in AI models to avoid disparities in skin disease diagnosis. Ensuring transparency, accountability, and privacy in AI-driven diagnostic tools has been a subject of exploration, as in the work of Anand et al. [15].

Ravi et al. [16] developed ensemble methods, such as weighted averaging, which have gained popularity for combining the outputs of multiple models to improve segmentation accuracy and reliability. Transfer learning and domain adaptation techniques have been employed to take models pre-trained on large-scale datasets and fine-tune them on specialized skin disease datasets, effectively overcoming the challenge of limited annotated data.

3. Materials and Methods

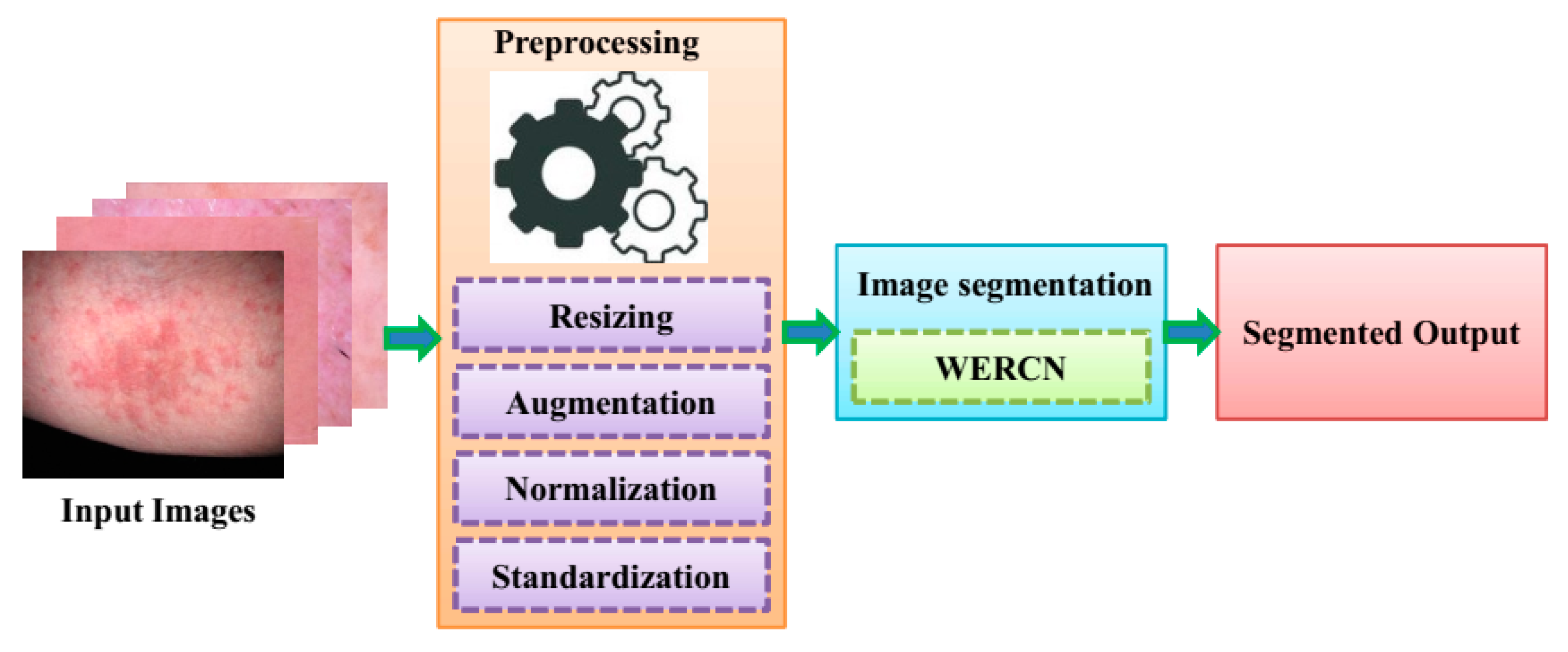

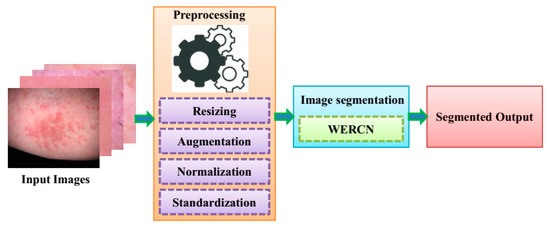

Segmentation offers the advantage of removing unwanted detail from the skin image, such as air, and of isolating different tissues such as bone and soft tissue. Medical image segmentation is used to identify important areas in medical images and videos. When training computer vision models for healthcare purposes, image segmentation is a cost-effective and time-efficient method of labeling and annotation that can improve accuracy and outputs. The images used in this analysis are sourced from a dataset of skin disease images. A Mask R-CNN is used to segment the skin disease regions in these images. The Mask R-CNN performs pixel-level segmentation on the detected regions and can accommodate multiple classes and overlapping instances. Although the Mask R-CNN is efficient, it does not scale well to large datasets because of weight-optimization issues. To overcome these issues, a weighted average ensemble model is employed to enhance segmentation performance on the skin disease image dataset. The images first undergo preprocessing and are then segmented. The resulting images are classified as either segmented or non-segmented. Finally, the predicted output is obtained. The overall workflow is shown in Figure 1.

Figure 1.

Workflow of the proposed model.

3.1. Preprocessing

To ensure accurate results, the input data undergo a preprocessing method. This is necessary because the skin images taken may contain unwanted elements like noise, poor background, and low illumination [17]. By applying preprocessing techniques to the raw data, the proposed method can achieve better performance accuracy. Various techniques involved in preprocessing are Resizing, Augmentation, Normalization, and Standardization.
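The four preprocessing steps listed above can be illustrated with a short NumPy sketch. The function names, the 256 × 256 target size, the nearest-neighbour resizing, and the horizontal flip used as the augmentation are assumptions made for illustration; the source does not specify these details.

```python
import numpy as np

def resize_nearest(image, out_h, out_w):
    """Resize an H x W (x C) array with nearest-neighbour sampling."""
    in_h, in_w = image.shape[:2]
    rows = np.arange(out_h) * in_h // out_h   # source row for each output row
    cols = np.arange(out_w) * in_w // out_w   # source column for each output column
    return image[rows[:, None], cols]

def normalize(image):
    """Scale 8-bit pixel values to the range [0, 1]."""
    return image.astype(np.float32) / 255.0

def standardize(image, eps=1e-8):
    """Shift and scale each channel to zero mean and unit variance."""
    mean = image.mean(axis=(0, 1), keepdims=True)
    std = image.std(axis=(0, 1), keepdims=True)
    return (image - mean) / (std + eps)

def augment_flip(image, mask):
    """Horizontal flip applied identically to an image and its mask."""
    return image[:, ::-1], mask[:, ::-1]

def preprocess(image, size=(256, 256)):
    """Resize, normalize, then standardize one RGB image (assumed order)."""
    resized = resize_nearest(image, *size)
    return standardize(normalize(resized))
```

Augmentation is kept separate from `preprocess` in this sketch because it is normally applied only to training images, and any geometric transform has to be applied to the ground-truth mask as well.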

3.2. Skin Image Segmentation Using WERCNN

Analyzing an image and dividing it into different parts based on pixel characteristics is called image segmentation. This technique helps process images and conduct detailed analyses, like separating foreground and background images. After the preprocessing stage, the skin images are segmented by using a Weighted Ensemble Region-based Convolutional Network (WERCN). For better performance, image segmentation can be customized based on specific circumstances. In the next section, Mask R-CNN and the weighted average ensemble method (WAEM) are explained. These two techniques can be combined to segment skin disease images.

Mask R-CNN: A Mask R-CNN has significant potential in the field of skin-related diseases because it is an advanced computer vision model [18]. It is an extension of Faster R-CNN and is widely used for detecting and segmenting areas of interest in medical images, including dermatological scans and photographs. It can identify and isolate regions of potential concern with high accuracy. Mask R-CNN adds a branch to the Faster R-CNN structure to predict the object mask. The alignment achieved by the network between the input and output is pixel-to-pixel, which results in favorable outcomes [19]. During the training stage, a multi-task loss is defined on each region of interest (RoI) as follows:

$$L = L_{cls} + L_{box} + L_{mask} \qquad (1)$$

where $L_{cls}$ indicates the classification loss, and $L_{box}$ denotes the bounding-box loss. Here, $L_{cls}$ and $L_{box}$ are defined as in Faster R-CNN. With this definition of $L_{mask}$, the network can generate distinct masks for each class, without any conflict or overlap between them. $L_{cls}$, for an RoI, is determined as follows:

$$L_{cls}(p, u) = -\log p_u \qquad (2)$$

where $u$ indicates the true object class, and $p = (p_0, \ldots, p_K)$ indicates the predicted probability distribution over the classes. The regression loss of the bounding box for an RoI is expressed as

$$L_{box}(t^u, v) = \sum_{i \in \{x, y, w, h\}} \mathrm{smooth}_{L_1}(t_i^u - v_i) \qquad (3)$$

Here, $v$ indicates the true bounding-box regression target of an RoI, and $t^u$ denotes the predicted bounding-box regression for class $u$. The $\mathrm{smooth}_{L_1}$ function in the above equation is expressed as

$$\mathrm{smooth}_{L_1}(x) = \begin{cases} 0.5x^2, & |x| < 1 \\ |x| - 0.5, & \text{otherwise} \end{cases} \qquad (4)$$

The binary loss function is denoted by $L_{mask}$ and is determined by computing the average binary cross-entropy. Here, the output for every RoI has dimension $Km^2$, where $K$ indicates the number of binary masks produced by the mask branch, each with resolution $m \times m$. $L_{mask}$ is determined by

$$L_{mask} = -\frac{1}{m^2} \sum_{1 \le i, j \le m} \left[ y_{ij} \log \hat{y}_{ij}^{u} + (1 - y_{ij}) \log\left(1 - \hat{y}_{ij}^{u}\right) \right] \qquad (5)$$

where $y_{ij}$ indicates the true mask, while $\hat{y}_{ij}^{u}$ represents the predicted mask for the RoI in class $u$, with $1 \le i \le m$ and $1 \le j \le m$. The masks predicted by this model correspond to each of the key point types (e.g., various skin diseases). The detection algorithm has been trained to identify the exact location of key points on an object or patient in a single attempt. It is important to note that the processes of person detection and key point detection are completely separate from each other and do not rely on one another. By analyzing this information, the movement and position of body organs can be determined, which allows any signs of discomfort or disease in the patient's body to be identified promptly. The movements of a particular body part must be monitored closely to assess any discomfort a patient may be experiencing within the coordinates $(x, y)$. To determine the distance between key point positions, the following equation can be utilized:

$$d = \sqrt{(x_{f+1} - x_f)^2 + (y_{f+1} - y_f)^2} \qquad (6)$$

Equation (6) gives the Euclidean distance $d$ between the positions of a key point in consecutive frames $f$ and $f+1$. Here, $(x_f, y_f)$ and $(x_{f+1}, y_{f+1})$ indicate the coordinates of the key point in frames $f$ and $f+1$, respectively.

Here, the value of “S” acts as a threshold to decide the distance between crucial points. In this work, refers to keeping an eye on specific areas of a patient’s skin condition that are considered critical. To accomplish this objective, we have thoroughly examined the Euclidean distances of every single key point within the patient’s organs by utilizing the following calculation:

where is the numerical values within the range span from one to a maximum value, denoted by the variable . The key components of human skin B refer to the essential parts of a patient’s anatomy, denoted as .

where describes the patient’s condition. indicates the threshold time, which is capable of differentiating between regular movements and those caused by a medical condition. R-CNN models can precisely distinguish and depict different skin conditions using this precise condition, which enable the arrangement of early and accurate diagnosis treatment and the further development of disease monitoring.
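As a rough illustration of the training losses described earlier in this subsection, the following NumPy sketch implements the classification, bounding-box, and mask terms in the form used by the standard Mask R-CNN formulation. The function and variable names are hypothetical, and the sketch evaluates the losses for a single RoI only; it is not the authors' training code.

```python
import numpy as np

def classification_loss(p, u):
    """Classification term: negative log-probability of the true class u."""
    return -np.log(p[u])

def smooth_l1(x):
    """Smooth L1: quadratic for |x| < 1, linear otherwise."""
    x = np.abs(x)
    return np.where(x < 1.0, 0.5 * x ** 2, x - 0.5)

def box_loss(t_u, v):
    """Box term: smooth L1 summed over the (x, y, w, h) offsets."""
    return smooth_l1(np.asarray(t_u) - np.asarray(v)).sum()

def mask_loss(y_true, y_pred, eps=1e-7):
    """Mask term: average binary cross-entropy over an m x m mask."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)   # avoid log(0)
    bce = y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred)
    return -bce.mean()

def total_loss(p, u, t_u, v, y_true, y_pred):
    """Sum of the three terms for one RoI."""
    return (classification_loss(p, u)
            + box_loss(t_u, v)
            + mask_loss(y_true, y_pred))
```

In this sketch `y_pred` is the predicted mask for the true class only, which reflects the per-class mask design: masks for other classes do not contribute to the mask term.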

Weighted Average Ensemble Method: The weighted average method extends the unweighted average by multiplying each CNN output by its own weight value [20]. Furthermore, the weight values sum to one.

In Equation (10), for the weighted average approach, β signifies the weight value which multiplies weight vector and t, and signifies the number of ensemble CNN models. In this work, WAE was predicted using a variety of parameter weightings. In the case of SAE, it is different because all parameters are given equal weights. Equation (11) provides the formula for WAE:

where S(i), Xi, si(i), and Q signify SAE output. Similarly, Qi was calculated using Equation (12):

where DCt is the performance efficiency of the t-th single model. In most research papers, classification outcomes are based on a single model. A large body of research shows that an ensemble model can outperform a single model, because a single model cannot extract all of the features in a dataset. Therefore, researchers combine a variety of models to improve performance. In this study, the optimal weighted average ensemble method is applied to multi-class classification. This technique improves on the simple average ensemble, in which each model contributes equally to the prediction, by giving each model a weight that determines its contribution. Additionally, this study uses grid search to optimize the allocated weights.
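A minimal sketch of a weighted average ensemble with grid-searched weights is given below. It assumes that each of the T models outputs class probabilities for N samples; the array shapes, the 0.1 grid step, the use of accuracy as the selection criterion, and all function names are assumptions for illustration rather than details taken from this study.

```python
import itertools
import numpy as np

def weighted_average(probs, weights):
    """Combine model outputs of shape (T, N, C) using weights of shape (T,)."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()             # enforce the sum-to-one constraint
    return np.tensordot(weights, probs, axes=1)   # result has shape (N, C)

def grid_search_weights(probs, labels, step=0.1):
    """Evaluate every weight vector on a grid and keep the most accurate one."""
    n_models = probs.shape[0]
    grid = np.arange(0.0, 1.0 + 1e-9, step)
    best_w, best_acc = None, -1.0
    for w in itertools.product(grid, repeat=n_models):
        if not np.isclose(sum(w), 1.0):           # keep only weights that sum to one
            continue
        pred = weighted_average(probs, w).argmax(axis=1)
        acc = float((pred == labels).mean())
        if acc > best_acc:
            best_w, best_acc = np.array(w), acc
    return best_w, best_acc
```

The grid grows exponentially with the number of models, so an exhaustive search of this kind is only practical for a handful of ensemble members. The weights should be selected on a validation split rather than on the test data.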

Proposed Weighted Ensemble Region-based Convolutional Network (WERCN) for skin disease segmentation:

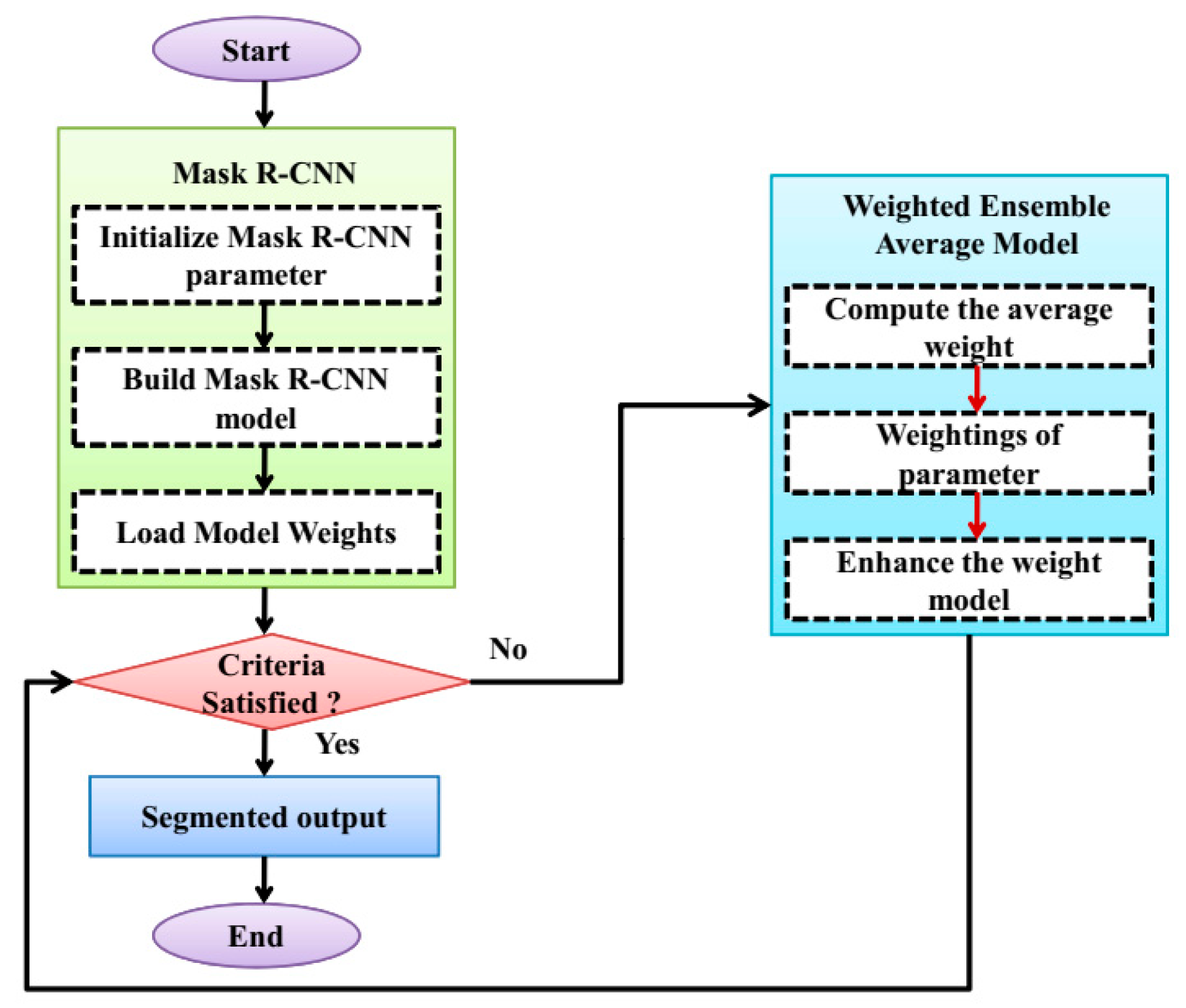

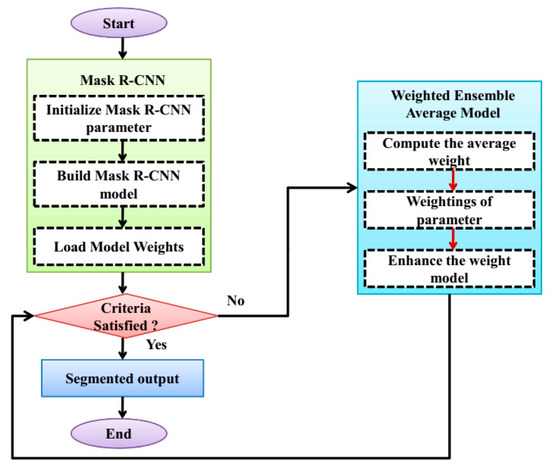

The image segmentation technique precisely delineates the areas containing a skin lesion and thereby improves accuracy. For this segmentation, a WERCN is proposed, which combines the Mask R-CNN with the weighted average ensemble method. The Mask R-CNN performs pixel-level segmentation and can accommodate multiple classes and overlapping regions in the skin disease images. The weighted average ensemble is used to optimize the weights. It enhances the accuracy and performance of the segmentation process, especially for complex and noisy problems, and it reduces overfitting and the variance of the predictions. The process of the WERCN is shown in Figure 2. First, the images are preprocessed and then segmented using the Mask R-CNN. The parameters are initialized and used to build the Mask R-CNN. Next, the model's weights are loaded and optimized with the weighted average ensemble model. If the weights are optimized, a segmented output is produced; if not, the process repeats.
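One possible reading of the fusion step in Figure 2 is a per-pixel weighted average of the mask probability maps produced by several Mask R-CNN models, followed by thresholding. The sketch below illustrates that reading only; the 0.5 threshold, the choice of weights proportional to validation scores, and the function names are assumptions.

```python
import numpy as np

def weights_from_scores(scores):
    """Weights proportional to each model's validation score (assumed rule)."""
    scores = np.asarray(scores, dtype=float)
    return scores / scores.sum()

def fuse_masks(mask_probs, weights, threshold=0.5):
    """Fuse T probability maps of shape (T, H, W) into one binary mask."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()
    fused = np.tensordot(weights, mask_probs, axes=1)   # per-pixel weighted mean
    return fused, fused >= threshold

# Two hypothetical 2 x 2 probability maps from two models
maps = np.array([[[0.9, 0.2], [0.8, 0.1]],
                 [[0.7, 0.6], [0.2, 0.3]]])
fused, binary = fuse_masks(maps, weights_from_scores([0.75, 0.25]))
```

Here the second model alone would mark the top-right pixel as lesion (0.6), but the fused probability is 0.3, so the pixel is assigned to the background.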

Figure 2.

Flowchart of the WERCN model.

4. Results

The proposed method aims to segment skin diseases within a limited time and uses the skin disease image dataset as its source of images. The WERCN method is compared with existing methods, namely the Convolutional Neural Network (CNN) [11], full-resolution Convolutional Neural Network (FrCN) [21], Support Vector Machine (SVM) [22], and Adaptive Neuro-Fuzzy Classifier (ANFC) [23]. This comparison is based on the general performance metrics, i.e., F1-score, recall, precision, specificity, and accuracy [24,25], to determine the performance of each method. In this section, the experimental setup, the parameters used for the method, the performance metrics [26], and the datasets are explained.
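For reference, the five metrics can be computed from pixel-wise confusion counts as sketched below. The sketch assumes binary masks in which 1 marks lesion pixels and 0 marks background; the function name and the convention of returning 0 when a denominator is zero are illustrative choices.

```python
import numpy as np

def segmentation_metrics(y_true, y_pred):
    """Pixel-wise metrics for binary masks (1 = lesion, 0 = background)."""
    y_true = np.asarray(y_true).astype(bool).ravel()
    y_pred = np.asarray(y_pred).astype(bool).ravel()
    tp = int(np.sum(y_true & y_pred))      # lesion pixels found
    tn = int(np.sum(~y_true & ~y_pred))    # background pixels kept as background
    fp = int(np.sum(~y_true & y_pred))     # background marked as lesion
    fn = int(np.sum(y_true & ~y_pred))     # lesion pixels missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / y_true.size,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }

# Toy example: 3 true positives, 3 true negatives, 1 false positive, 1 false negative
scores = segmentation_metrics([1, 1, 1, 0, 0, 0, 0, 1],
                              [1, 1, 0, 0, 0, 1, 0, 1])
```

In the toy example every metric evaluates to 0.75, because each of the four confusion counts enters its ratio as 3 out of 4.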

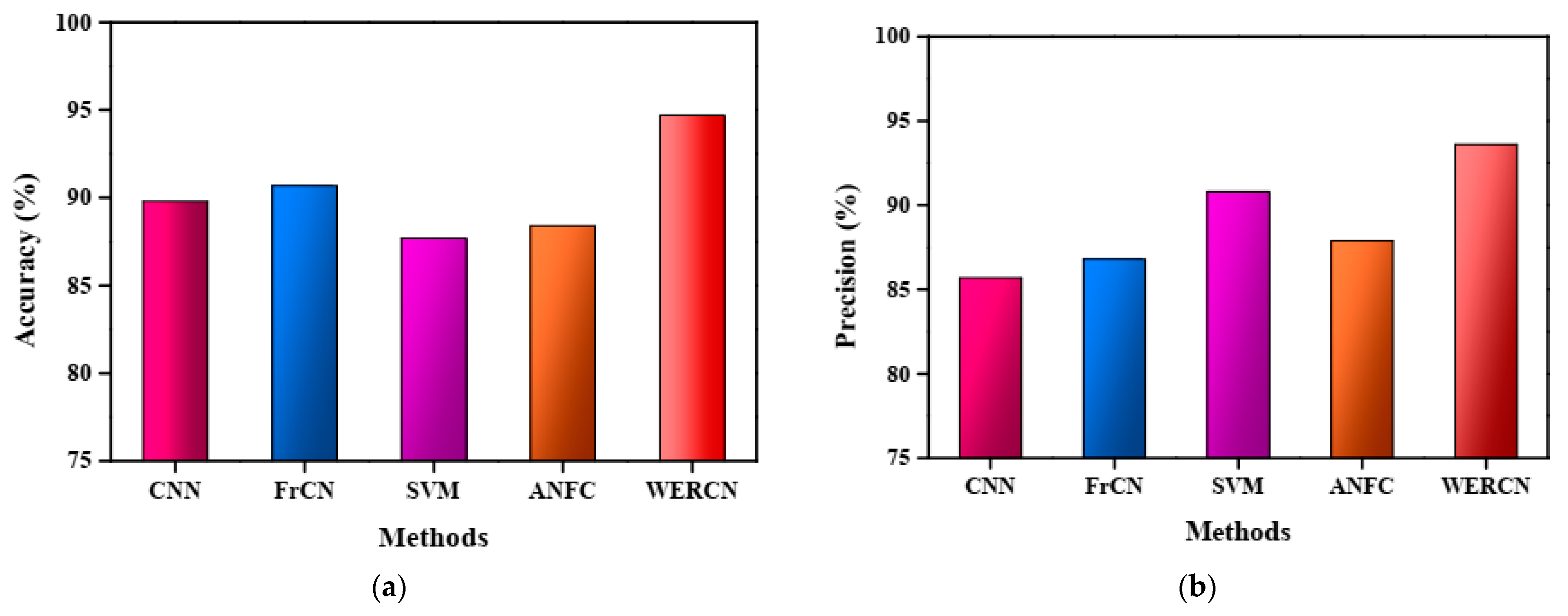

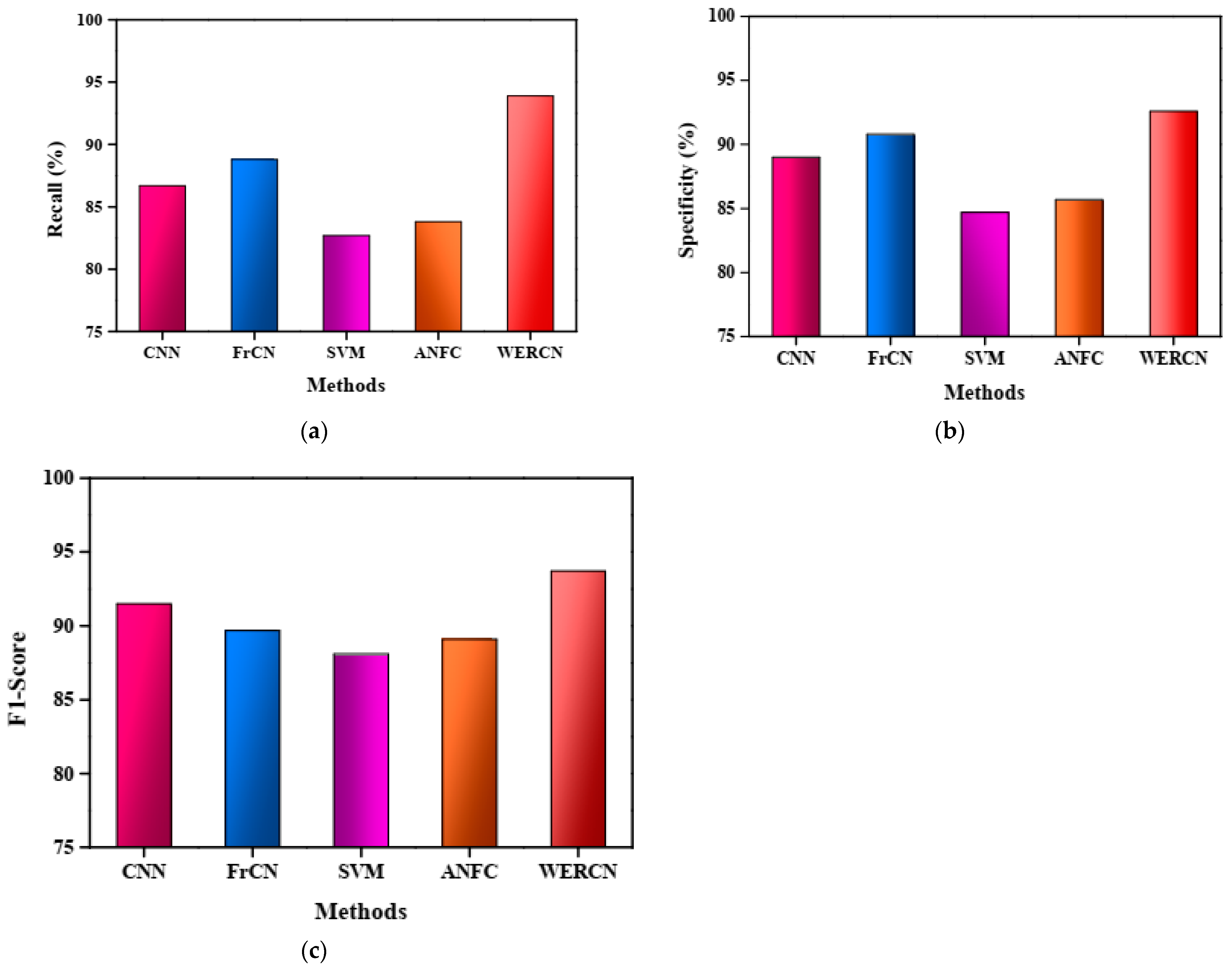

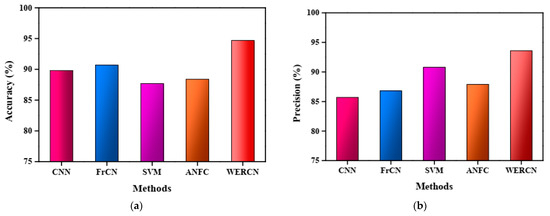

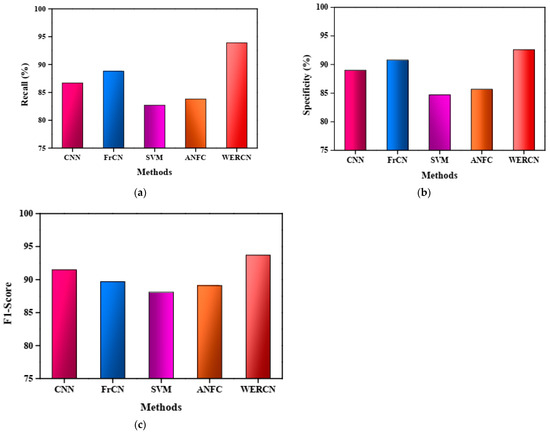

The results demonstrate that the proposed WERCN outperforms these existing methods, achieving higher performance scores. Table 1 presents a detailed comparison of the proposed method with the existing methods based on various performance measures. The superior performance of the WERCN indicates its effectiveness in skin disease segmentation compared to the other methods evaluated. The CNN achieves an accuracy of 89.8%, a precision of 85.7%, a recall of 86.7%, a specificity of 89.0%, and an F1-score of 91.5%. The FrCN achieves an accuracy of 90.7%, a precision of 86.8%, a recall of 88.8%, a specificity of 90.8%, and an F1-score of 89.7%. The SVM and ANFC achieve accuracies of 87.7% and 88.4%, precisions of 90.8% and 87.9%, recalls of 82.7% and 83.8%, specificities of 84.7% and 85.7%, and F1-scores of 88.1% and 89.1%, respectively. In comparison, the proposed WERCN attains higher values on every metric: 94.7% accuracy, 93.6% precision, 93.9% recall, 92.6% specificity, and 93.7% F1-score.

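Table 1.

Comparison of the proposed WERCN with the existing methods.

| Method | Accuracy (%) | Precision (%) | Recall (%) | Specificity (%) | F1-score (%) |
|---|---|---|---|---|---|
| CNN | 89.8 | 85.7 | 86.7 | 89.0 | 91.5 |
| FrCN | 90.7 | 86.8 | 88.8 | 90.8 | 89.7 |
| SVM | 87.7 | 90.8 | 82.7 | 84.7 | 88.1 |
| ANFC | 88.4 | 87.9 | 83.8 | 85.7 | 89.1 |
| WERCN (proposed) | 94.7 | 93.6 | 93.9 | 92.6 | 93.7 |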

The accuracy analysis of the proposed WERCN model is represented in Figure 3a. From the figure, it is noted that the proposed approach attains a greater accuracy in comparison to the other methods. The attained accuracy for the proposed WERCN is 94.7%, 89.8% for the CNN, 90.7% for the FrCN, 87.7% for the SVM, and 88.4% for the ANFC. The accuracy performance of the WERCN is better than the existing methods. Figure 3b depicts the graphical representation of the precision analysis for the proposed WERCN method, which achieves a higher precision of 93.6%.

Figure 3.

(a) Accuracy analysis. (b) Precision analysis.

In Figure 4b, the specificity measure is used to compare the existing methods with the proposed WERCN for image segmentation. Additionally, Figure 4c illustrates the F1-score analysis, with the WERCN achieving a higher F1-score of 93.7% compared to the CNN (91.5%), FrCN (89.7%), SVM (88.1%), and ANFC (89.1%). Overall, the proposed WERCN method demonstrates superior performance in skin disease image segmentation when compared to the existing methods, as evident from the higher values of precision, recall, specificity, and F1-score. These results indicate that WERCN is an effective approach for accurately segmenting skin disease images.

Figure 4.

(a) Recall analysis. (b) Specificity analysis. (c) F1-score analysis.

5. Conclusions

The proposed Weighted Ensemble Region-based Convolutional Network (WERCN) is developed for effective skin image segmentation. First, input images are gathered from the skin disease image dataset and preprocessed. Next, the Mask R-CNN segments the skin disease images, and the weights of the Mask R-CNN are optimized using the weighted average ensemble model. The results show that the proposed WERCN method achieves superior effectiveness in image segmentation, with an accuracy of 94.7%, an F1-score of 93.7%, a recall of 93.9%, a precision of 93.6%, and a specificity of 92.6%. In the future, our research aims to extend the WERCN method to detect other types of skin diseases and improve skin lesion detection accuracy. Additionally, we plan to explore real-time skin disease detection and early prediction techniques to enhance the practical applications of our research. The WERCN could also be employed to identify other types of diseases, such as cancer, brain tumors, diabetes, and tuberculosis.

Author Contributions

Conceptualization, N. and V.; methodology, N. and V.; software, N. and V.; validation, N. and V.; formal analysis, V.; investigation, V.; resources, N. and V.; data curation, V.; writing—original draft preparation, N. and V.; writing—review and editing, N. and V.; visualization, N.; supervision, V.; project administration, N. and V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Acknowledgments

The authors would like to thank the reviewers for the constructive comments, which improved the overall quality of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Aaqib, M.; Ghani, M.; Khan, A. Deep Learning-Based Identification of Skin Cancer on Any Suspicious Lesion. In Proceedings of the 2023 4th International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 17–18 March 2023; pp. 1–6.

2. Liao, Y.H.; Chang, P.C.; Wang, C.C.; Li, H.H. An Optimization-Based Technology Applied for Face Skin Symptom Detection. Healthcare 2022, 10, 2396.

3. Mekhalfi, M.L.; Nicolò, C.; Bazi, Y.; Al Rahhal, M.M.; Al Maghayreh, E. Detecting crop circles in google earth images with mask R-CNN and YOLOv3. Appl. Sci. 2021, 11, 2238.

4. Zafar, M.; Sharif, M.I.; Sharif, M.I.; Kadry, S.; Bukhari, S.A.C.; Rauf, H.T. Skin lesion analysis and cancer detection based on machine/deep learning techniques: A comprehensive survey. Life 2023, 13, 146.

5. Virupakshappa, A. Diagnosis of melanoma with region and contour based feature extraction and KNN classification. Int. J. Innov. Sci. Eng. Res. 2021, 8, 157–164.

6. Naeem, A.; Anees, T.; Fiza, M.; Naqvi, R.A.; Lee, S.W. SCDNet: A Deep Learning-Based Framework for the Multiclassification of Skin Cancer Using Dermoscopy Images. Sensors 2022, 22, 5652.

7. Leite, M.; Parreira, W.D.; Fernandes, A.M.D.R.; Leithardt, V.R.Q. Image Segmentation for Human Skin Detection. Appl. Sci. 2022, 12, 12140.

8. Rout, R.; Parida, P.; Dash, S. Automatic Skin Lesion Segmentation using a Hybrid Deep Learning Network. Network 2023, 15, 238–249.

9. Maknuna, L.; Kim, H.; Lee, Y.; Choi, Y.; Kim, H.; Yi, M.; Kang, H.W. Automated structural analysis and quantitative characterization of scar tissue using machine learning. Diagnostics 2022, 12, 534.

10. Khan, M.; Akram, T.; Zhang, Y.; Sharif, M. Attributes based Skin Lesion Detection and Recognition: A Mask RCNN and Transfer Learning-based Deep Learning Framework. Pattern Recognit. Lett. 2021, 143, 58–66.

11. Khouloud, S.; Ahlem, M.; Fadel, T.; Amel, S. W-net and inception residual network for skin lesion segmentation and classification. Appl. Intell. 2022, 52, 3976–3994.

12. Samanta, P.K.; Rout, N.K. Skin lesion classification using deep convolutional neural network and transfer learning approach. In Advances in Smart Communication Technology and Information Processing: OPTRONIX; Springer: Berlin/Heidelberg, Germany, 2020; pp. 327–335.

13. Cao, X.; Pan, J.S.; Wang, Z.; Sun, Z.; Haq, A.; Deng, W.; Yang, S. Application of generated mask method based on Mask R-CNN in classification and detection of melanoma. Comput. Methods Programs Biomed. 2021, 207, 106174.

14. Wibowo, A.; Purnama, S.R.; Wirawan, P.W.; Rasyidi, H. Lightweight encoder-decoder model for automatic skin lesion segmentation. Inform. Med. Unlocked 2021, 25, 100640.

15. Anand, V.; Gupta, S.; Gupta, D.; Gulzar, Y.; Xin, Q.; Juneja, S.; Shah, A.; Shaikh, A. Weighted Average Ensemble Deep Learning Model for Stratification of Brain Tumor in MRI Images. Diagnostics 2023, 13, 1320.

16. Ravi, V. Attention cost-sensitive deep learning-based approach for skin cancer detection and classification. Cancers 2022, 14, 5872.

17. Xie, F.; Zhang, P.; Jiang, T.; She, J.; Shen, X.; Xu, P.; Zhao, W.; Gao, G.; Guan, Z. Lesion segmentation framework based on convolutional neural networks with dual attention mechanism. Electronics 2021, 10, 3103.

18. Bagheri, F.; Tarokh, M.J.; Ziaratban, M. Skin lesion segmentation from dermoscopic images by using Mask R-CNN, Retina-Deeplab, and graph-based methods. Biomed. Signal Process. Control 2021, 67, 102533.

19. Ahmed, I.; Jeon, G.; Piccialli, F. A deep-learning-based smart healthcare system for patient’s discomfort detection at the edge of internet of things. IEEE Internet Things J. 2021, 8, 10318–10326.

20. Chompookham, T.; Surinta, O. Ensemble methods with deep convolutional neural networks for plant leaf recognition. ICIC Express Lett. 2021, 15, 553–565.

21. Vasconcelos, F.F.X.; Medeiros, A.G.; Peixoto, S.A.; Reboucas Filho, P.P. Automatic skin lesions segmentation based on a new morphological approach via geodesic active contour. Cogn. Syst. Res. 2019, 55, 44–59.

22. Melbin, K.; Raj, Y.J.V. Integration of modified ABCD features and support vector machine for skin lesion types classification. Multimed. Tools Appl. 2021, 80, 8909–8929.

23. YacinSikkandar, M.; Alrasheadi, B.A.; Prakash, N.B.; Hemalakshmi, G.R.; Mohanarathinam, A.; Shankar, K. Deep learning based an automated skin lesion segmentation and intelligent classification model. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 3245–3255.

24. Uplaonkar, D.S.; Patil, N. Ultrasound liver tumor segmentation using adaptively regularized kernel-based fuzzy C means with enhanced level set algorithm. Int. J. Intell. Comput. Cybern. 2021, 15, 438–453.

25. Virupakshappa; Amarapur, B. An improved segmentation approach using level set method with dynamic thresholding for tumor detection in MRI images. HELIX 2017, 7, 2059–2066.

26. Goyal, M.; Oakley, A.; Bansal, P.; Dancey, D.; Yap, M.H. Skin Lesion Segmentation in Dermoscopic Images with Ensemble Deep Learning Methods. IEEE Access 2020, 8, 4171–4181.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).