Cascade Machine Learning Approach Applied to Short-Term Medium Horizon Demand Forecasting

Abstract

1. Introduction

2. Methodology

2.1. Data Imputation

2.2. Long Short Term Memory (LSTM)

2.2.1. Water Demand Forecasting

2.2.2. Minimum Night Flow Forecasting

2.3. Cascade Approach

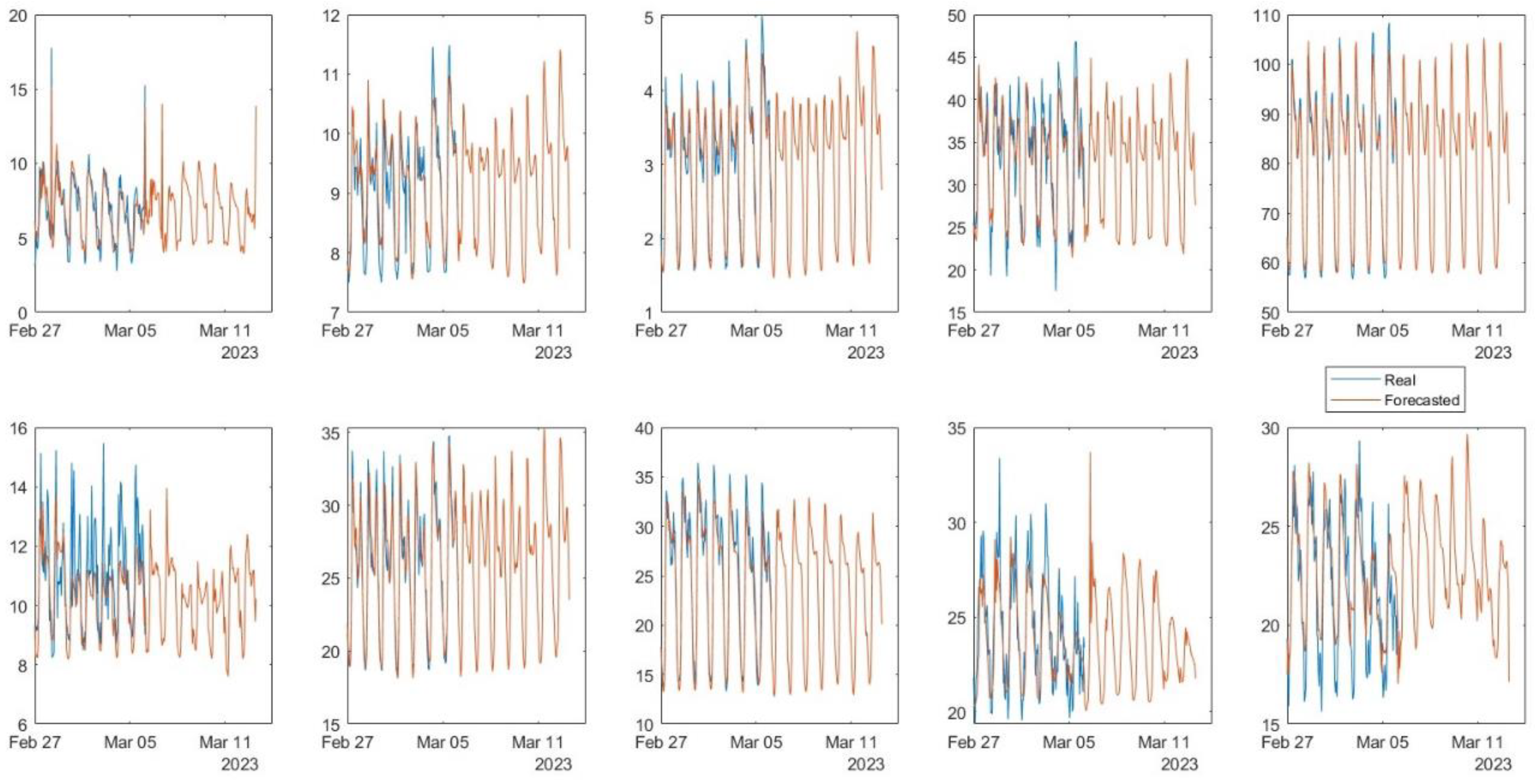

3. Results

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Barzegar, R.; Aalami, M.T.; Adamowski, J. Short-term water quality variable prediction using a hybrid CNN–LSTM deep learning model. Stoch. Environ. Res. Risk Assess. 2020, 34, 415–433.

- Zanfei, A.; Brentan, B.M.; Menapace, A.; Righetti, M. A short-term water demand forecasting model using multivariate long short-term memory with meteorological data. J. Hydroinform. 2022, 24, 1053–1065.

- Zanfei, A.; Brentan, B.M.; Menapace, A.; Righetti, M.; Herrera, M. Graph convolutional recurrent neural networks for water demand forecasting. Water Resour. Res. 2022, 58, e2022WR032299.

- Zanfei, A.; Menapace, A.; Brentan, B.M.; Righetti, M. How does missing data imputation affect the forecasting of urban water demand? J. Water Resour. Plan. Manag. 2022, 148, 04022060.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Mikolov, T.; Joulin, A.; Chopra, S.; Mathieu, M.; Ranzato, M.A. Learning longer memory in recurrent neural networks. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 8 May 2015.

| DMA | A | B | C | D | E | F | G | H | I | J |
|---|---|---|---|---|---|---|---|---|---|---|
| WEEK 1 | | | | | | | | | | |
| Total Score | 3.16 | 1.76 | 1.52 | 5.88 | 4.50 | 2.55 | 4.19 | 1.89 | 2.91 | 4.54 |
| Max 24 h | 1.65 | 0.83 | 0.72 | 3.19 | 2.48 | 1.14 | 2.09 | 0.95 | 1.43 | 2.60 |
| Aver. 24 h | 0.68 | 0.61 | 0.52 | 1.31 | 1.16 | 0.91 | 1.28 | 0.42 | 0.80 | 1.15 |
| Aver. Week | 0.83 | 0.33 | 0.29 | 1.39 | 0.85 | 0.50 | 0.82 | 0.51 | 0.69 | 0.79 |
| WEEK 2 | | | | | | | | | | |
| Total Score | 7.60 | 1.74 | 1.41 | 8.62 | 10.02 | 3.62 | 3.14 | 5.84 | 7.43 | 5.47 |
| Max 24 h | 5.09 | 0.85 | 0.79 | 4.53 | 6.44 | 1.99 | 1.66 | 3.20 | 4.36 | 2.16 |
| Aver. 24 h | 1.32 | 0.57 | 0.39 | 2.21 | 1.82 | 0.87 | 0.89 | 1.37 | 1.82 | 2.30 |
| Aver. Week | 1.20 | 0.33 | 0.23 | 1.87 | 1.77 | 0.75 | 0.59 | 1.28 | 1.25 | 1.01 |
| WEEK 3 | | | | | | | | | | |
| Total Score | 4.39 | 1.59 | 1.63 | 8.82 | 7.75 | 4.73 | 3.25 | 5.84 | 6.01 | 5.47 |
| Max 24 h | 2.12 | 0.78 | 0.87 | 4.61 | 4.84 | 2.71 | 1.67 | 3.14 | 3.11 | 2.16 |
| Aver. 24 h | 1.36 | 0.47 | 0.52 | 2.25 | 1.66 | 1.03 | 0.95 | 1.38 | 1.83 | 2.30 |
| Aver. Week | 0.92 | 0.34 | 0.25 | 1.96 | 1.25 | 0.98 | 0.63 | 1.33 | 1.07 | 1.01 |
| WEEK 4 | | | | | | | | | | |
| Total Score | 2.48 | 1.49 | 0.86 | 10.52 | 5.77 | 2.63 | 2.90 | 4.45 | 4.33 | 4.31 |
| Max 24 h | 1.33 | 0.84 | 0.45 | 6.10 | 2.45 | 1.43 | 1.34 | 2.24 | 2.33 | 2.28 |
| Aver. 24 h | 0.71 | 0.32 | 0.23 | 2.21 | 2.36 | 0.69 | 1.06 | 1.33 | 1.20 | 1.28 |
| Aver. Week | 0.44 | 0.33 | 0.18 | 2.22 | 0.96 | 0.51 | 0.50 | 0.88 | 0.81 | 0.76 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Brentan, B.; Zanfei, A.; Oberascher, M.; Sitzenfrei, R.; Izquierdo, J.; Menapace, A. Cascade Machine Learning Approach Applied to Short-Term Medium Horizon Demand Forecasting. Eng. Proc. 2024, 69, 42. https://doi.org/10.3390/engproc2024069042
