Efficient Battery Management and Workflow Optimization in Warehouse Robotics Through Advanced Localization and Communication Systems

Abstract

1. Introduction

2. Materials and Methods

2.1. System Functionalities

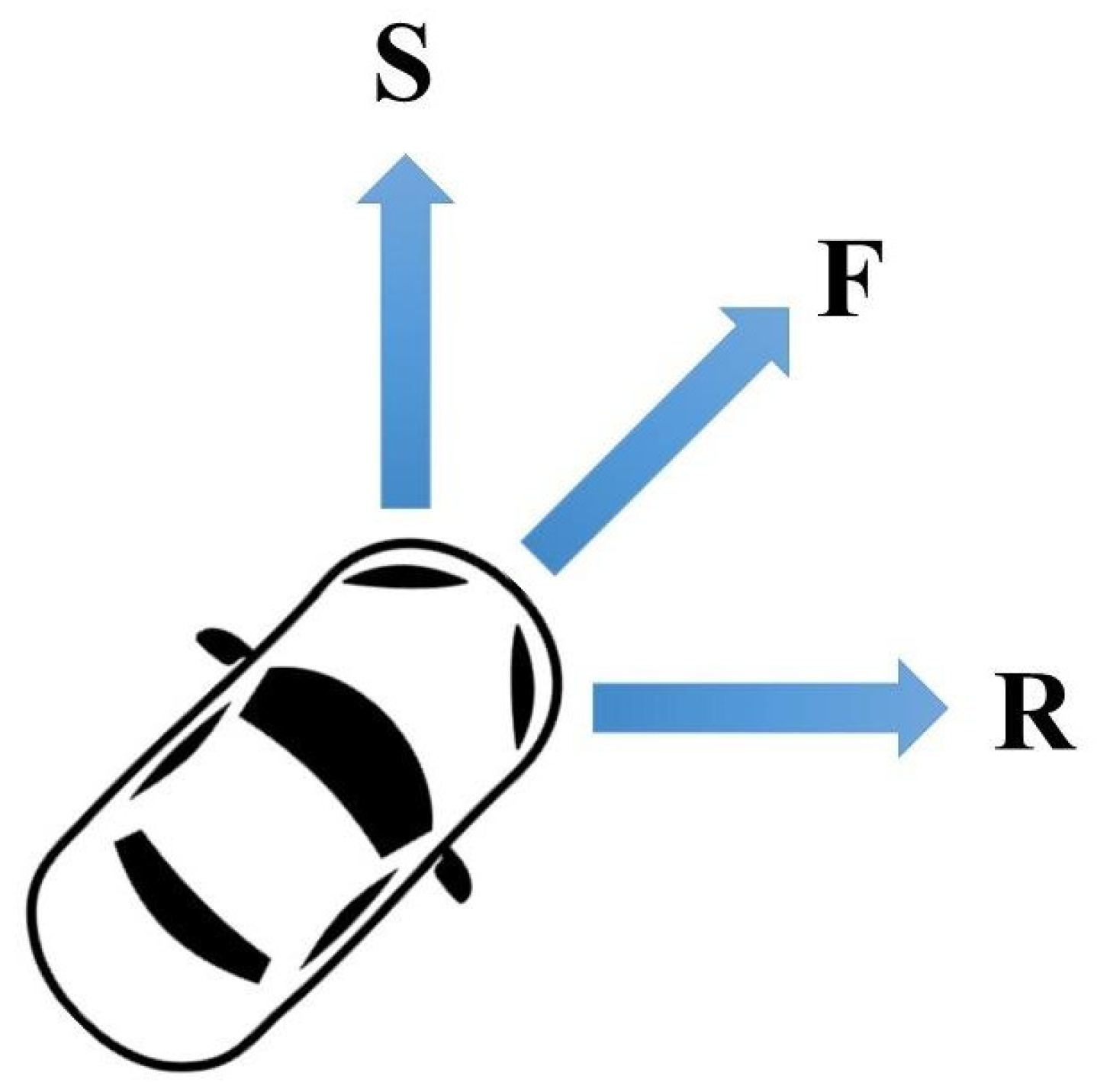

2.1.1. Main System Functionalities

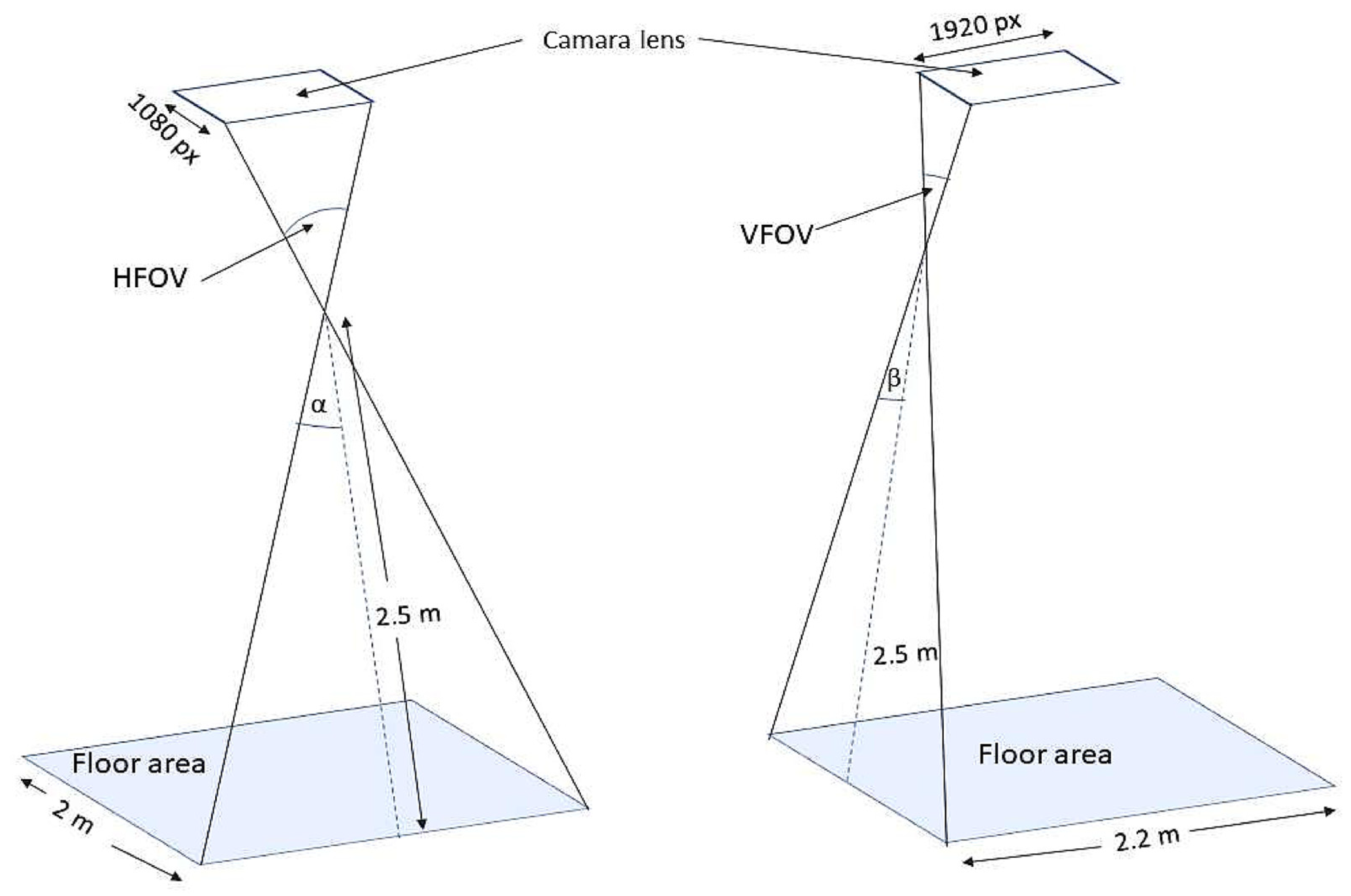

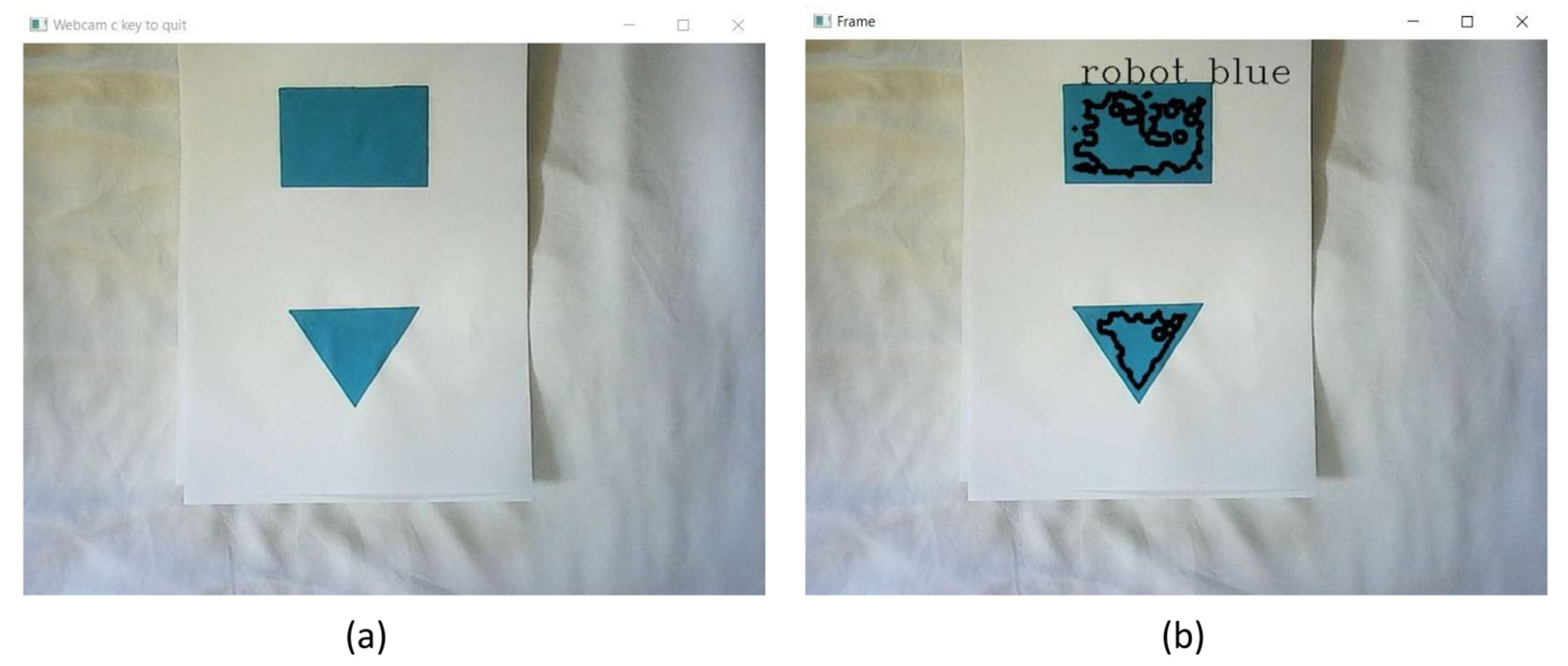

2.1.2. Mapping Functionality

2.1.3. Communication Functionalities

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AGV | Autonomous Guided Vehicle |
| UGV | Unmanned Ground Vehicle |
| GPS | Global Positioning System |
| Wi-Fi | Wireless Fidelity |
| IMU | Inertial Measurement Unit |
| GUI | Graphical User Interface |
| RAM | Random Access Memory |
| FOV | Field of View |
| VFOV | Vertical Field of View |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dhanushka, S.; Hasaranga, C.; Kahatapitiya, N.S.; Wijesinghe, R.E.; Wijethunge, A. Efficient Battery Management and Workflow Optimization in Warehouse Robotics Through Advanced Localization and Communication Systems. Eng. Proc. 2024, 82, 50. https://doi.org/10.3390/ecsa-11-20416