Generative Artificial Intelligence Image Tools among Future Designers: A Usability, User Experience, and Emotional Analysis

Abstract

1. Introduction

2. Literature Review

3. Method, Procedure, and Materials

3.1. Quantitative Experimental Design

3.2. Procedure

3.3. Participants of the Study

3.4. Data Gathering

- (a) The USE Questionnaire (USEQ) was chosen because it is a valid and reliable survey instrument [35] with 30 items that examine four dimensions of Usability: Usefulness, Ease of Use, Ease of Learning, and Satisfaction. Each item was rated on a seven-point Likert scale ranging from 1 = Strongly Disagree to 7 = Strongly Agree. This questionnaire evaluated the self-perceived Usability of GenAI image tools.

- (b)

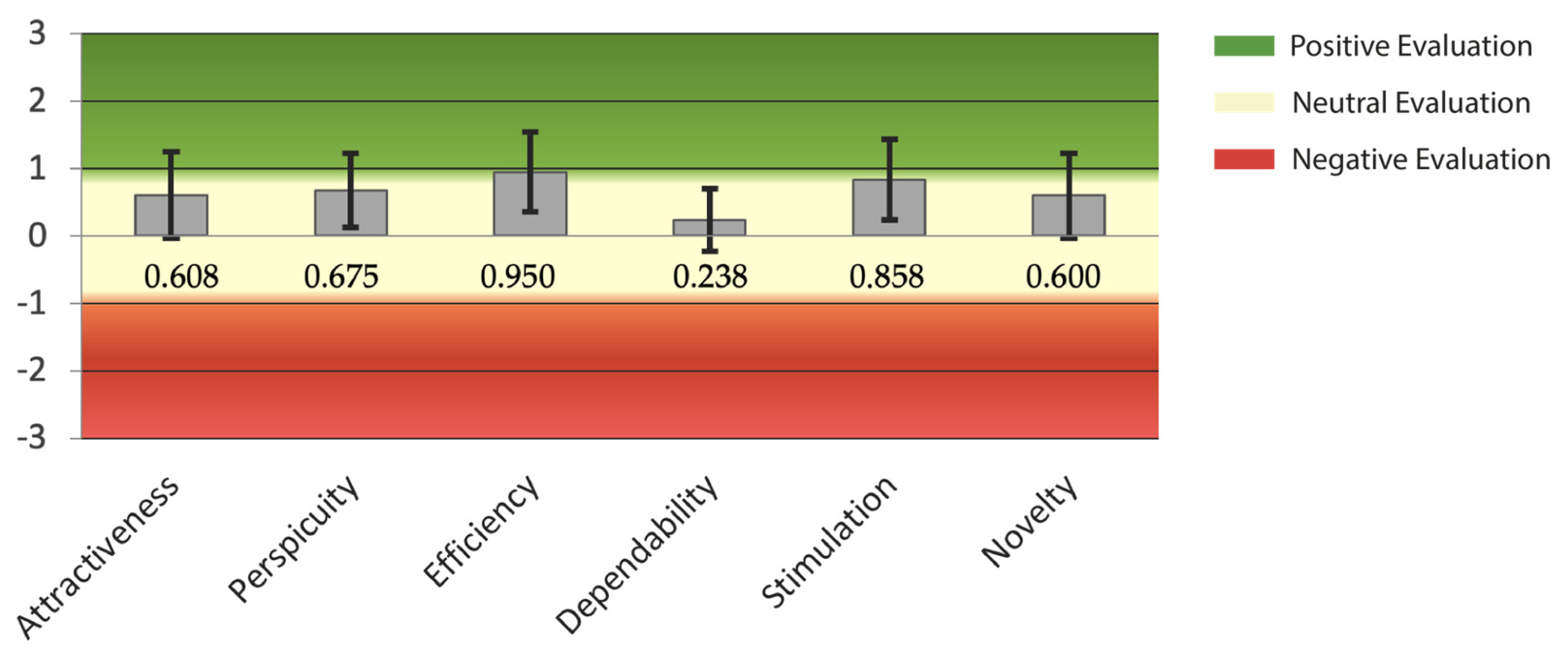

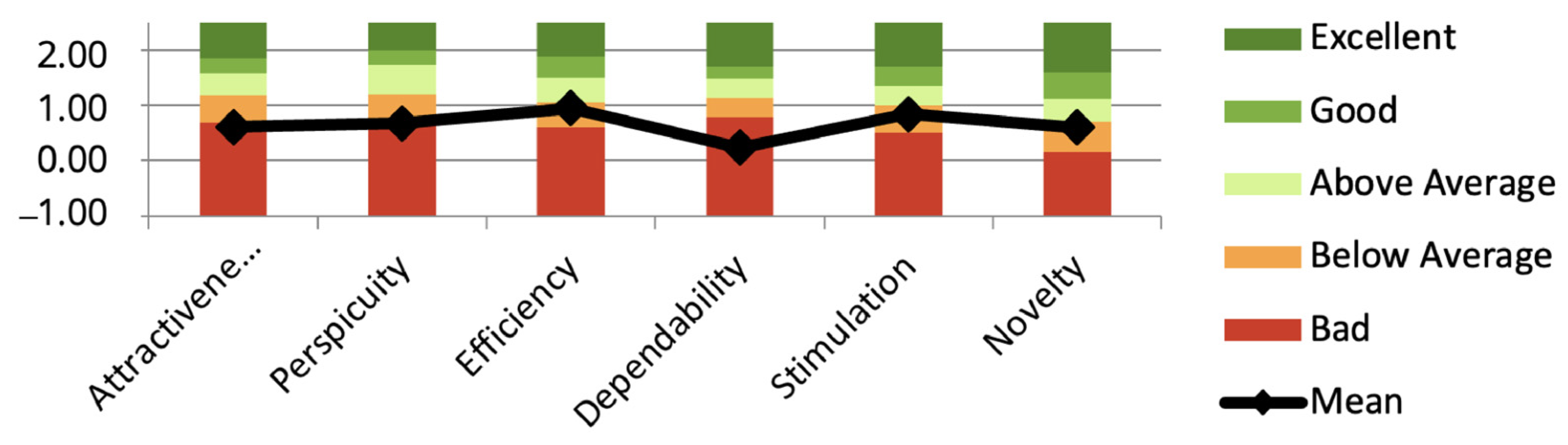

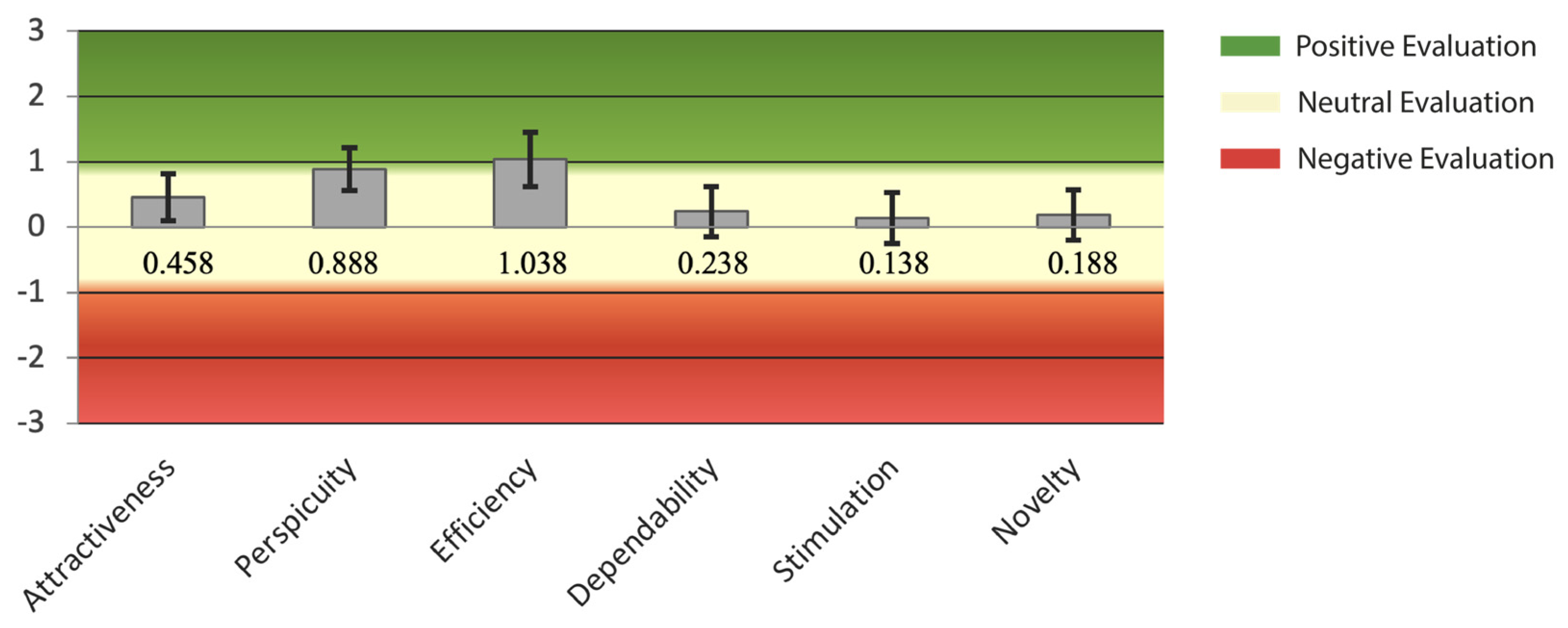

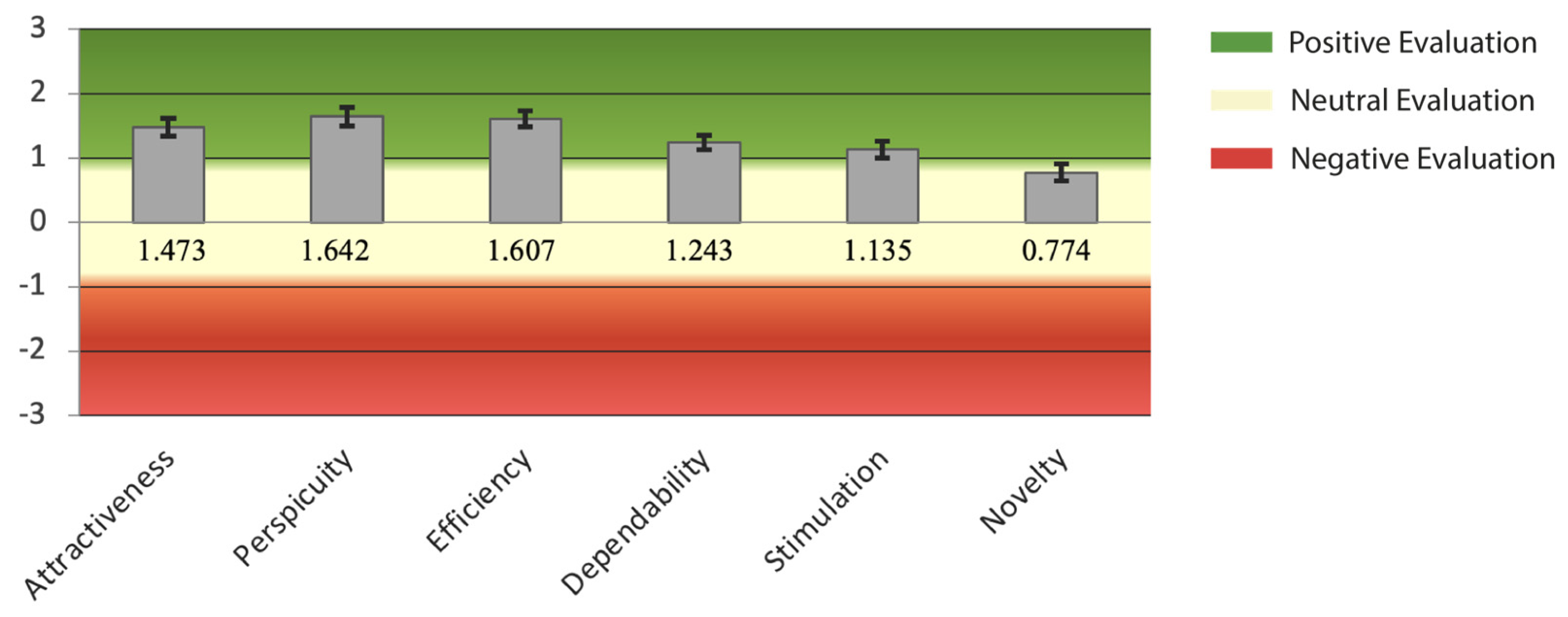

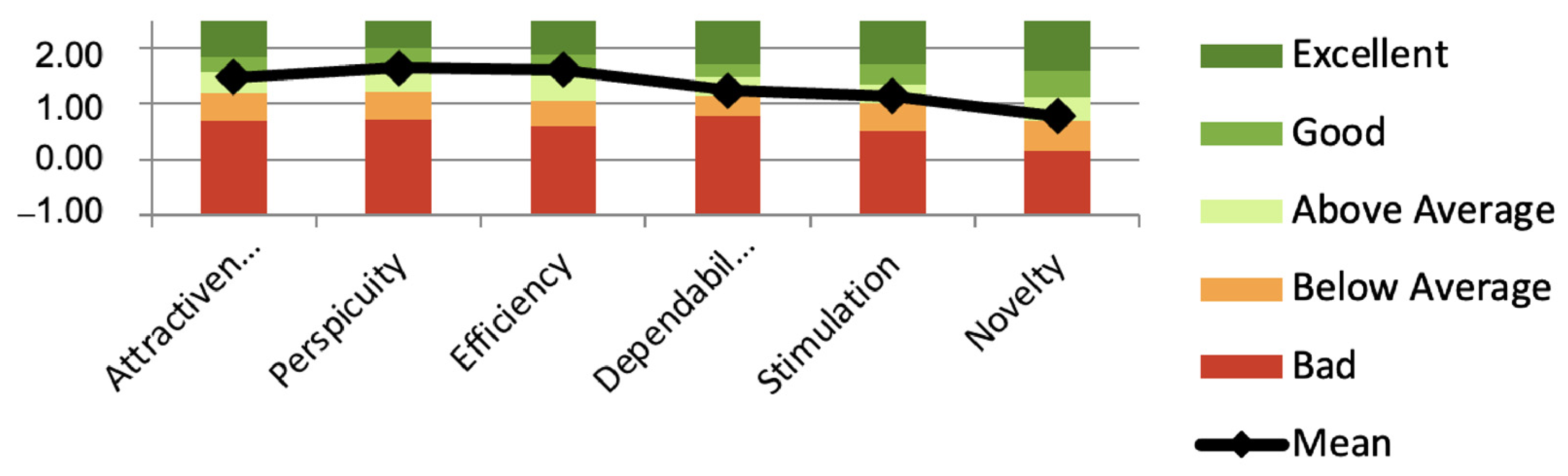

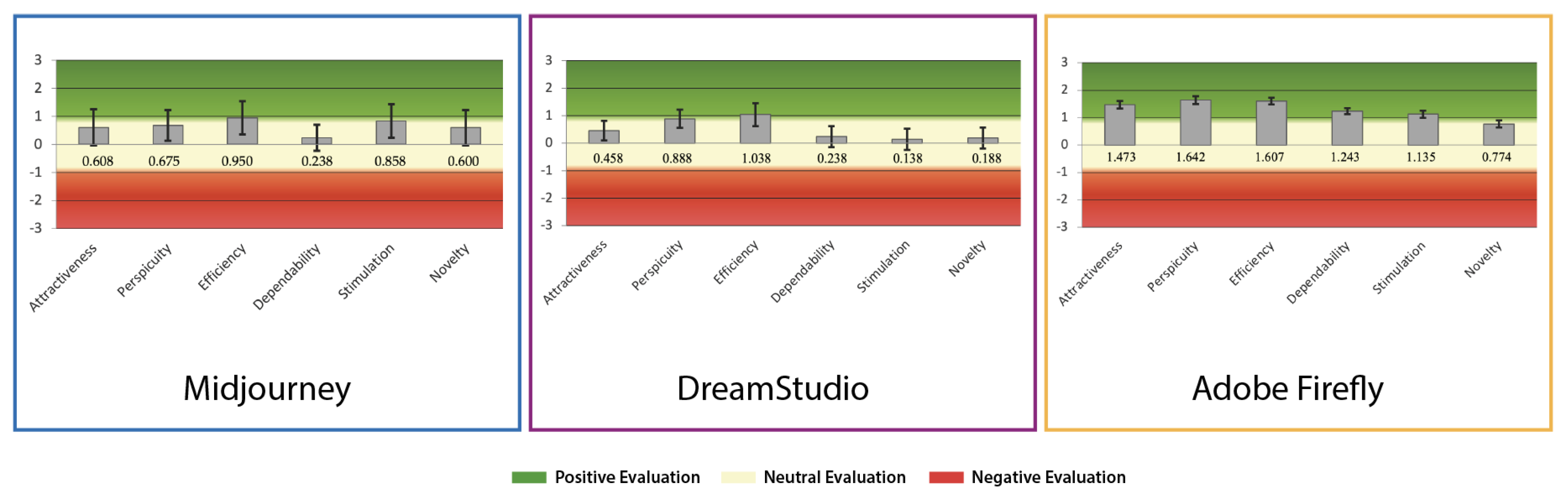

- The UEQ was used because it facilitated the analysis of the complete UX beyond mere Usability. The questionnaire scales encompass Usability elements such as Perspicuity, Efficiency, and Dependability as well as UX factors like Novelty and Stimulation. This comprehensive approach provides a holistic understanding of the UX across product/system touchpoints [32]. The UEQ consists of 26 items spread across six seven-point Likert-type scales including Perspicuity, Attractiveness, Stimulation, Dependability, Novelty, and Efficiency. Each item in the UEQ is structured as a semantic differential, with two opposing terms representing each item. The reliability and validity of the UEQ have undergone thorough scrutiny in numerous studies [32,36].

- (c) The PANAS is among the most frequently used scales for assessing mood or emotion. This brief scale comprises 20 items, half measuring positive affect (e.g., inspired and excited) and half measuring negative affect (e.g., afraid and upset). Each item uses a five-point Likert scale, from 1 = Very Slightly to 5 = Extremely, to measure the degree to which an emotion was experienced within a defined timeframe [7]. The scale can measure emotional responses to events, such as the experience with GenAI image tools. Emotions are an increasingly important factor in human–computer interaction, yet traditional Usability research has mostly ignored the affective side of the user and the user interface [37]. The PANAS can therefore complement Usability testing by adding a layer of information about the user’s affective state during testing.

- (d) A question about satisfaction with the results obtained with GenAI image tools was included and rated on a seven-point Likert scale.
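
As a minimal sketch of how the seven-point USE ratings described in (a) could be turned into dimension scores, the Python snippet below averages the items of each dimension. The per-dimension item counts (8, 11, 4, and 7) and the dimension-by-dimension item order are assumptions made for illustration; the text only states the total of 30 items and the four dimension names. Taking the overall score as the unweighted mean of the four dimension means is also an assumption, although it is consistent with the Total Usability values reported in the results table.

```python
from statistics import mean

# Assumed item counts per dimension (8 + 11 + 4 + 7 = 30). The text only
# gives the total number of items and the dimension names.
USE_DIMENSIONS = {
    "Usefulness": 8,
    "Ease of Use": 11,
    "Ease of Learning": 4,
    "Satisfaction": 7,
}


def score_use(responses):
    """Return the mean rating per dimension plus an overall score.

    `responses` holds one participant's 30 ratings (integers from 1 to 7),
    ordered dimension by dimension (an assumed layout).
    """
    if len(responses) != sum(USE_DIMENSIONS.values()):
        raise ValueError("expected 30 item ratings")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("ratings must lie on the 1-7 scale")

    scores = {}
    start = 0
    for name, n_items in USE_DIMENSIONS.items():
        scores[name] = mean(responses[start:start + n_items])
        start += n_items

    # Assumption: the overall score is the unweighted mean of the four
    # dimension means, not the mean of all 30 items.
    scores["Total Usability"] = mean(list(scores.values()))
    return scores
```

Averaging within a dimension keeps every score on the original 1 to 7 scale, so dimensions with different item counts remain directly comparable.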

3.5. Materials

- (1) Independent variables

- (2) Dependent variables

- (a) Usability: This variable was explored to ascertain the extent to which participants felt the system was easy to use and helped them obtain information or create a result. Four dimensions were used to evaluate this variable: Usefulness, Ease of Use, Ease of Learning, and Satisfaction, assessed through thirty statements and two open questions. These Usability components are as important for GenAI image tools as for other interfaces, but they are not sufficient on their own: GenAI image tools are not traditional interactive devices, their process is highly random and unexplainable, and the generated results may interfere with the Usability evaluation. For this reason, other variables were also considered in this study.

- (b) UX: UX focuses on the perceptions and behaviors of the user during their interactions with technical systems or products (ISO 9241-210:2019) [38]. Measuring UX means measuring both hedonic (non-goal-oriented) and pragmatic (goal-oriented) dimensions. Six dimensions were used to evaluate this variable: Efficiency (shows whether users can accomplish their tasks without undue effort), Attractiveness (indicates the overall impression of the product and gauges users’ preferences towards it), Perspicuity (shows whether users can quickly become acquainted with the product or grasp its usage), Stimulation (indicates whether using the product is stimulating and engaging), Dependability (demonstrates whether users perceive a sense of control during interaction), and Novelty (reflects the product’s level of innovation and creativity and its ability to capture the user’s interest). Attractiveness is a pure valence dimension. Efficiency, Perspicuity, and Dependability pertain to pragmatic quality, while Stimulation and Novelty relate to hedonic quality [39].

- (c) Emotional Induction: This variable was studied to determine the emotional impact exerted by the independent variable on participants. Positive emotions can influence participants’ motivation, ease of memorization, and ability to solve problems [40]. Some studies also report carry-over effects of affective states on Usability appraisals [41]. We used twenty items to assess the emotions experienced during the experiment, and participants were asked to what extent they felt each emotion. The positive emotion items were attentive, active, enthusiastic, alert, determined, excited, interested, inspired, strong, and proud. The negative emotion items were scared, afraid, jittery, nervous, irritable, hostile, ashamed, guilty, distressed, and upset.

- (d) Generated Results Satisfaction: Because users’ evaluations of GenAI image tools may be driven by the generated results, which could confound the other measured scales, we opted to measure users’ satisfaction with the generated results separately.
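
The scoring of the twenty emotion items listed under Emotional Induction can be sketched as follows. The snippet assumes the usual PANAS convention of summing the ten ratings of each group, which yields a score between 10 and 50 per group; the text does not state the aggregation rule, so this is an assumption, and the function and variable names are illustrative only.

```python
# Item names as listed in the text for each affect group.
POSITIVE_ITEMS = (
    "attentive", "active", "enthusiastic", "alert", "determined",
    "excited", "interested", "inspired", "strong", "proud",
)
NEGATIVE_ITEMS = (
    "scared", "afraid", "jittery", "nervous", "irritable",
    "hostile", "ashamed", "guilty", "distressed", "upset",
)


def score_panas(ratings):
    """Return (positive_score, negative_score) for one participant.

    `ratings` maps each of the 20 item names to a rating from
    1 (Very Slightly) to 5 (Extremely). Summing per group is an assumed
    scoring rule, giving a range of 10-50 for each score.
    """
    all_items = POSITIVE_ITEMS + NEGATIVE_ITEMS
    missing = [item for item in all_items if item not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    if any(not 1 <= ratings[item] <= 5 for item in all_items):
        raise ValueError("ratings must lie on the 1-5 scale")

    positive = sum(ratings[item] for item in POSITIVE_ITEMS)
    negative = sum(ratings[item] for item in NEGATIVE_ITEMS)
    return positive, negative
```

Keeping the two scores separate, rather than subtracting one from the other, preserves the distinction between positive and negative emotions that the variable is meant to capture.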

3.6. Data Analysis

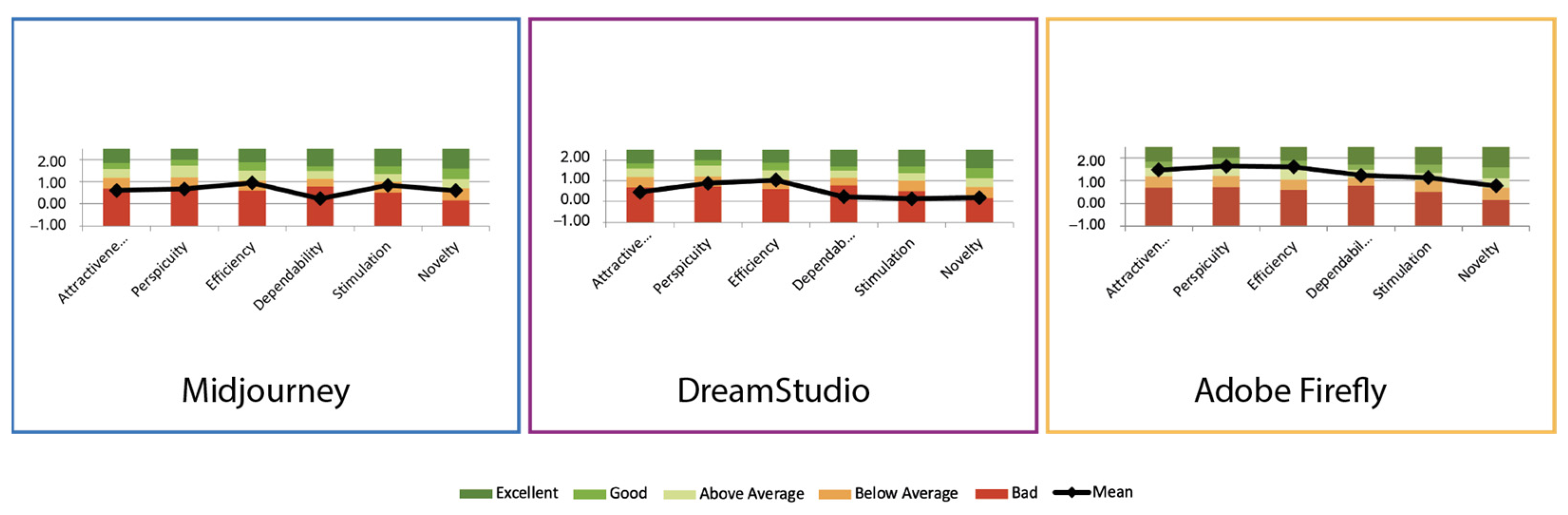

- Excellent: the evaluated product is among the best 10% of the benchmark results.

- Good: 10% of the benchmark results are better than the evaluated product, and 75% are worse.

- Above Average: 25% of the benchmark results are better than the evaluated product, and 50% are worse.

- Below Average: 50% of the benchmark results are better than the evaluated product, and 25% are worse.

- Bad: the evaluated product is among the worst 25% of the benchmark results.
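
The five categories above amount to comparing a scale mean against four percentile cut-offs of the benchmark data set. The sketch below illustrates that logic under stated assumptions: the cut-off values must come from the benchmark itself and are passed in by the caller, the key names (`p25`, `p50`, `p75`, `p90`) are hypothetical, and treating a value that falls exactly on a cut-off as belonging to the higher category is an assumption.

```python
def benchmark_category(scale_mean, cutoffs):
    """Classify a scale mean against benchmark percentile cut-offs.

    `cutoffs` maps the hypothetical keys "p25", "p50", "p75", and "p90" to
    the scale means found at those percentiles of the benchmark results.
    Values on a boundary are assigned to the higher category (an assumption).
    """
    if not cutoffs["p25"] <= cutoffs["p50"] <= cutoffs["p75"] <= cutoffs["p90"]:
        raise ValueError("cut-offs must be in ascending order")

    if scale_mean >= cutoffs["p90"]:
        return "Excellent"      # among the best 10% of results
    if scale_mean >= cutoffs["p75"]:
        return "Good"           # 10% better, 75% worse
    if scale_mean >= cutoffs["p50"]:
        return "Above Average"  # 25% better, 50% worse
    if scale_mean >= cutoffs["p25"]:
        return "Below Average"  # 50% better, 25% worse
    return "Bad"                # among the worst 25% of results
```

Because each scale has its own distribution in the benchmark, a separate set of cut-offs would be supplied for each of the six scales.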

4. Results

4.1. Impact upon Usability

4.2. Impact on UX

4.2.1. Midjourney GenAI Tool

4.2.2. DreamStudio GenAI Tool

4.2.3. Adobe Firefly GenAI Tool

4.2.4. UX Comparison between the Three GenAI Tools

4.3. Impact on Emotional Induction

4.4. Generated Results Satisfaction

5. Discussion

6. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lessard, J. Generative AI in Content Creation: Revolutionizing the Creative Process with Innovative Solutions. Medium. Available online: https://medium.com/@jacobylessard/generative-ai-in-content-creation-revolutionizing-the-creative-process-with-innovative-solutions-e5049f9ed292 (accessed on 29 January 2024).

- Suryadevara, C. Generating free images with OpenAI’s generative models. Int. J. Innov. Eng. Res. Technol. 2020, 7, 49–56.

- Nielsen, J. AI: First New UI Paradigm in 60 Years. Nielsen Norman Group. Available online: https://www.nngroup.com/articles/ai-paradigm/ (accessed on 29 January 2024).

- Yu, H.; Dong, Y.; Wu, Q. User-centric AIGC products: Explainable Artificial Intelligence and AIGC products. In Proceedings of the 1st International Workshop on Explainable AI for the Arts (XAIxArts), ACM Creativity and Cognition (C&C), Online, 19 June 2023; ACM: New York, NY, USA, 2023.

- Lund, A. Measuring Usability with the USE Questionnaire. STC Usability SIG Newsletter 2001, 8, 3–6.

- Cota, M.; Thomaschewski, J.; Schrepp, M.; Goncalves, R. Efficient Measurement of the User Experience. A Portuguese Version. Procedia Comput. Sci. 2014, 27, 491–498.

- Tran, V. Positive Affect Negative Affect Scale (PANAS). In Encyclopedia of Behavioral Medicine; Gellman, M., Turner, J., Eds.; Springer: New York, NY, USA, 2013; pp. 1508–1509.

- Watson, D.; Clark, L.; Tellegen, A. Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Personal. Soc. Psychol. 1988, 54, 1063–1070.

- Casais, M. Emotions as an Inspiration for Design. In Advances in Industrial Design; Shin, C., Di Bucchianico, G., Fukuda, S., Ghim, Y., Montagna, G., Carvalho, C., Eds.; AHFE 2021. Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2021; Volume 260, pp. 924–932.

- Huang, J.; Chen, Y.; Yip, D. Crossing of the Dream Fantasy: AI Technique Application for Visualizing a Fictional Character’s Dream. In Proceedings of the IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Brisbane, Australia, 10–14 July 2023; pp. 338–342.

- Liu, V.; Vermeulen, J.; Fitzmaurice, G.; Matejka, J. 3DALL-E: Integrating Text-to-Image AI in 3D Design Workflows. In Proceedings of the 2023 ACM Designing Interactive Systems Conference (DIS ’23), Pittsburgh, PA, USA, 10–14 July 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1955–1977.

- Brisco, R.; Hay, L.; Dhami, S. Exploring the role of text-to-image AI in concept generation. Proc. Des. Soc. 2023, 3, 1835–1844.

- Paananen, V.; Oppenlaender, J.; Visuri, A. Using text-to-image generation for architectural design ideation. arXiv 2023, arXiv:2304.10182.

- Oppenlaender, J. The Creativity of Text-to-Image Generation. In Proceedings of the 25th International Academic Mindtrek Conference (Academic Mindtrek ’22), Tampere, Finland, 16–18 November 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 192–202.

- Oppenlaender, J. The Cultivated Practices of Text-to-Image Generation. arXiv 2023, arXiv:2306.11393.

- Schetinger, V.; Di Bartolomeo, S.; El-Assady, M.; McNutt, A.; Miller, M.; Passos, J.; Adams, J. Doom or Deliciousness: Challenges and Opportunities for Visualization in the Age of Generative Models. Comput. Graph. Forum 2023, 42, 423–435.

- Ferreira, Â.; Casteleiro-Pitrez, J. Inteligência Artificial no Design de Comunicação em Portugal Estudo de Caso sobre as Perspetivas de 10 Designers Profissionais de Pequenas e Médias Empresas. ROTURA – Rev. Comun. Cult. Artes 2023, 3, 114–133.

- Lively, J.; Hutson, J.; Melick, E. Integrating AI-Generative Tools in Web Design Education: Enhancing Student Aesthetic and Creative Copy Capabilities Using Image and Text-Based AI Generators. J. Artif. Intell. Robot. 2023, 1, 23–33. Available online: https://digitalcommons.lindenwood.edu/faculty-research-papers/482 (accessed on 29 January 2024).

- Amer, S. AI Imagery and the Overton Window. arXiv 2023, arXiv:2306.00080.

- Martínez, G.; Watson, L.; Reviriego, P.; Hernández, J.; Juarez, M.; Sarkar, R. Towards Understanding the Interplay of Generative Artificial Intelligence and the Internet. arXiv 2023, arXiv:2306.06130.

- Samuelson, P. Generative AI meets copyright. Science 2023, 381, 158–161.

- Radford, A.; Kim, J.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

- Borji, A. Generated Faces in the Wild: Quantitative Comparison of Stable Diffusion, Midjourney and DALL-E 2. arXiv 2023, arXiv:2210.00586.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Adv. Neural Inf. Process. Syst. 2017, 30, 6626–6637.

- Betzalel, E.; Penso, C.; Navon, A.; Fetaya, E. A Study on the Evaluation of Generative Models. arXiv 2022, arXiv:2206.10935.

- Achterberg, J.; Arel, R.; Grinberg, T.; Chaibi, A.; Bach, J.; Tzagkarakis, N. Generative Image Model Benchmark for Reasoning and Representation (GIMBRR). In Proceedings of the AAAI 2023 Spring Symposium Series EDGeS, San Mateo, CA, USA, 27–29 March 2023.

- Shneiderman, B. Human Centered AI; Oxford University Press: Glasgow, UK, 2022.

- Amershi, S.; Weld, D.; Vorvoreanu, M.; Fourney, A.; Nushi, B.; Collisson, P.; ShamsiIqbal, J.; Bennett, P.; Inkpen, K. Guidelines for human-AI interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–13.

- Bubaš, G.; Čižmešija, A.; Kovačić, A. Development of an Assessment Scale for Measurement of Usability and User Experience Characteristics of Bing Chat Conversational AI. Future Internet 2024, 16, 4.

- Rossouw, A.; Smuts, H. Key Principles Pertinent to User Experience Design for Conversational User Interfaces: A Conceptual Learning Model. In Innovative Technologies and Learning; Huang, Y., Rocha, T., Eds.; ICITL 2023; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 14099, pp. 174–186.

- Shen, S.; Chen, Y.; Hua, M.; Ye, M. Measuring designers use of Midjourney on the Technology Acceptance Model. In Life-Changing Design; De Sainz Molestina, D., Galluzzo, L., Rizzo, F., Spallazzo, D., Eds.; IASDR 2023; IASDR: Milan, Italy, 2023; pp. 1–8.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Applying the User Experience Questionnaire (UEQ) in Different Evaluation Scenarios. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2014; Volume 8517 LNCS, pp. 383–392.

- Dantas, C.; Jegundo, A.; Quintas, J.; Martins, A.; Queirós, A.; Rocha, N. European Portuguese Validation of Usefulness, Satisfaction and Ease of Use Questionnaire (USE). In Recent Advances in Information Systems and Technologies; Rocha, Á., Correia, A., Adeli, H., Reis, L., Costanzo, S., Eds.; Springer: Cham, Switzerland, 2017; Volume 570, pp. 561–570.

- Kocaballi, A.; Laranjo, L.; Coiera, E. Understanding and Measuring User Experience in Conversational Interfaces. Interact. Comput. 2019, 31, 192–207.

- Gao, M.; Kortum, P.; Oswald, F. Psychometric Evaluation of the USE (Usefulness, Satisfaction, and Ease of use) Questionnaire for Reliability and Validity. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Philadelphia, PA, USA, 1–5 October 2018; pp. 1414–1418.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Design and Evaluation of a Short Version of the User Experience Questionnaire (UEQ-S). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 103–108.

- Zimmermann, P.; Gomez, P.; Danuser, B.; Schär, S. Extending usability: Putting affect into the user-experience. In Proceedings of the 4th Nordic Conference on Human-Computer Interaction, Oslo, Norway, 14–18 October 2006; pp. 27–32.

- ISO 9241-210:2019; Ergonomics of Human-System Interaction Part 210: Human-Centred Design for Interactive Systems. ISO: Geneva, Switzerland, 2019. Available online: https://www.iso.org/standard/77520.html (accessed on 17 March 2024).

- Laugwitz, B.; Schrepp, M.; Held, T. Construction and evaluation of a user experience questionnaire. In HCI and Usability for Education and Work; Holzinger, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 63–76.

- Isen, A.; Reeve, J. The influence of positive affect on intrinsic and extrinsic motivation: Facilitating enjoyment of play, responsible work behavior, and self-control. Motiv. Emot. 2005, 29, 297–325.

- Velazquez, M. Understanding the Effects of Positive and Negative Affect on Perceived Usability. Ph.D. Thesis, PennState University, State College, PA, USA, 2010.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Construction of a benchmark for the User Experience Questionnaire (UEQ). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 40–44.

- Skjuve, M.; Følstad, A.; Brandtzaeg, P. The User Experience of ChatGPT: Findings from a Questionnaire Study of Early Users. In Proceedings of the 5th International Conference on Conversational User Interfaces (CUI ’23), Eindhoven, The Netherlands, 19–21 July 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1–10.

- Baek, T.; Kim, M. Is ChatGPT scary good? How user motivations affect creepiness and trust in generative artificial intelligence. Telemat. Inform. 2023, 83, 102030.

- Mortazavi, A. Enhancing User Experience Design Workflow with Artificial Intelligence Tools. Master’s Thesis, Linköping University, Linköping, Sweden, 2023.

| | Midjourney (Mean) | Midjourney (%) | DreamStudio (Mean) | DreamStudio (%) | Adobe Firefly (Mean) | Adobe Firefly (%) |
|---|---|---|---|---|---|---|
| Total Usability | 4.72 | 67.43% | 4.12 | 58.86% | 4.36 | 62.29% |
| Usefulness | 4.32 | 61.71% | 3.19 | 45.57% | 3.82 | 54.57% |
| Ease of Use | 4.61 | 65.86% | 4.41 | 63.00% | 4.17 | 59.57% |
| Ease of Learning | 5.34 | 76.29% | 5.26 | 75.14% | 5.29 | 75.57% |
| Satisfaction | 4.62 | 66.00% | 3.63 | 51.86% | 4.16 | 59.43% |
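
The percentage columns in this table are consistent with expressing each mean as a share of the seven-point scale maximum (for example, 4.72 / 7 ≈ 67.43%). The short sketch below reproduces that conversion. The function name and the rounding to two decimals are illustrative choices, not a description of the author's actual computation.

```python
def likert_percentage(mean_score, scale_max=7):
    """Express a mean Likert rating as a percentage of the scale maximum.

    Assumes the percentage is simply mean / scale_max * 100, which matches
    the values reported in the table; results are rounded to two decimals.
    """
    if not 0 <= mean_score <= scale_max:
        raise ValueError("mean_score must lie between 0 and scale_max")
    return round(mean_score / scale_max * 100, 2)


# Usability means reported for Midjourney, taken from the table.
midjourney_means = {
    "Total Usability": 4.72,
    "Usefulness": 4.32,
    "Ease of Use": 4.61,
    "Ease of Learning": 5.34,
    "Satisfaction": 4.62,
}
midjourney_percentages = {
    name: likert_percentage(value) for name, value in midjourney_means.items()
}
```

Note that under this conversion the lowest possible rating of 1 maps to about 14.29% rather than 0%, so the percentages should be read as a share of the maximum score, not as a position within the 1 to 7 range.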

| | Midjourney | DreamStudio | Adobe Firefly |
|---|---|---|---|
| Positive | 29.455 | 19.600 | 25.800 |
| Negative | 15.954 | 16.200 | 18.100 |

| | Midjourney (Mean) | Midjourney (%) | DreamStudio (Mean) | DreamStudio (%) | Adobe Firefly (Mean) | Adobe Firefly (%) |
|---|---|---|---|---|---|---|
| Satisfaction with the results | 4.70 | 67.14% | 3.80 | 54.29% | 4.59 | 65.57% |

| GenAI Tool | Prompt/Image Upload/ID, Seed | Results |

|---|---|---|

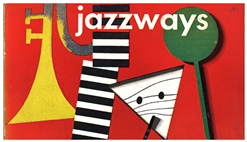

| Midjourney | /imagine prompt create a poster of a circus based on the work of the designer Paul Rand—v 5.2 Id: 933008ae-8519-4c48-8043-628c79c9191b |  |

| Midjourney | /imagine prompt contortionists and jugglers for a circus poster in the designer paul rand style—v 5.2 Id: 7d3a0416-1c5e-4368-869a-2bdc4324c57e |  |

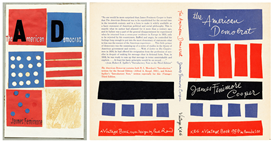

| DreamStudio | Prompt: Poster for a circus with the influence of Paul Rand, that is, with various geometric figures and color. The poster needs to contain objective elements of the circus. Seed: 554,335 Image:  |  |

| DreamStudio | Prompt: Colorful poster for a circus, white background, geometrical objects in primary colors, different texture, minimalistic design. Seed: 93,581 |  |

| Adobe Firefly | Prompt: Inside circus tent vector old retro vintage style of Paul Rand.  |  |

| Adobe Firefly | Prompt: Vintage circus host with big pointed black hat, two women seated and two circus tents on the background vector poster Paul Rand style.  |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Casteleiro-Pitrez, J. Generative Artificial Intelligence Image Tools among Future Designers: A Usability, User Experience, and Emotional Analysis. Digital 2024, 4, 316-332. https://doi.org/10.3390/digital4020016
