1. Introduction

Image data cubes are a new paradigm for providing access to large spatio-temporal Earth Observation (EO) data [1]. They represent a generic concept used to organize data with multiple dimensions, with EO imagery data cubes typically having three dimensions: latitude, longitude, and time [2]. This paradigm is being used in various national and global EO data repositories, such as the Swiss Data Cube [3], the Earth Observation Data Cube [4], the Earth System Data Cube [5], and Google Earth Engine [6]. Such EO repositories have recognized the need to enable data access through web-based workflows [7].

The disruptive changes brought by web-based workflows imply that data cubes also need to evolve in how they are physically stored, compressed, and made available using cloud-native access patterns based on the capabilities of the Hypertext Transfer Protocol (HTTP). This evolution can already be observed in the recent development of the Cloud Optimized GeoTIFF (COG), an “imagery format for cloud-native geospatial processing” [8]. COG is a capable format for web-optimized access to raster data: its particular internal organization of pixels enables clients to request portions of the file by issuing HTTP range requests. The COG internal organization is based on tiles (covering square areas of the main raster image), while compression is used for a “more efficient passage online” [8]. However, this state-of-the-art format is designed for single raster images.
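To make the range-request mechanism concrete, the following is a minimal sketch of how a client could fetch a single COG tile. It assumes the byte offset and length of each tile have already been read from the TIFF `TileOffsets` and `TileByteCounts` tags in the file header; the URL and the offset/count values are hypothetical. The function only builds the request and does not send it.

```python
from urllib.request import Request


def tile_range_request(url: str, tile_offsets: list[int],
                       tile_byte_counts: list[int], tile_index: int) -> Request:
    """Build (but do not send) an HTTP GET request for one COG tile."""
    start = tile_offsets[tile_index]
    # HTTP byte ranges are inclusive on both ends, hence the -1
    end = start + tile_byte_counts[tile_index] - 1
    return Request(url, headers={"Range": f"bytes={start}-{end}"})


# Hypothetical values, as they might be read from TileOffsets/TileByteCounts
req = tile_range_request("https://example.org/scene.tif", [1024, 5120], [4096, 3000], 1)
print(req.get_header("Range"))  # bytes=5120-8119
```

Passing the request to `urllib.request.urlopen` would retrieve only those bytes from a server that supports range requests, which is what allows COG clients to avoid downloading the whole file.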

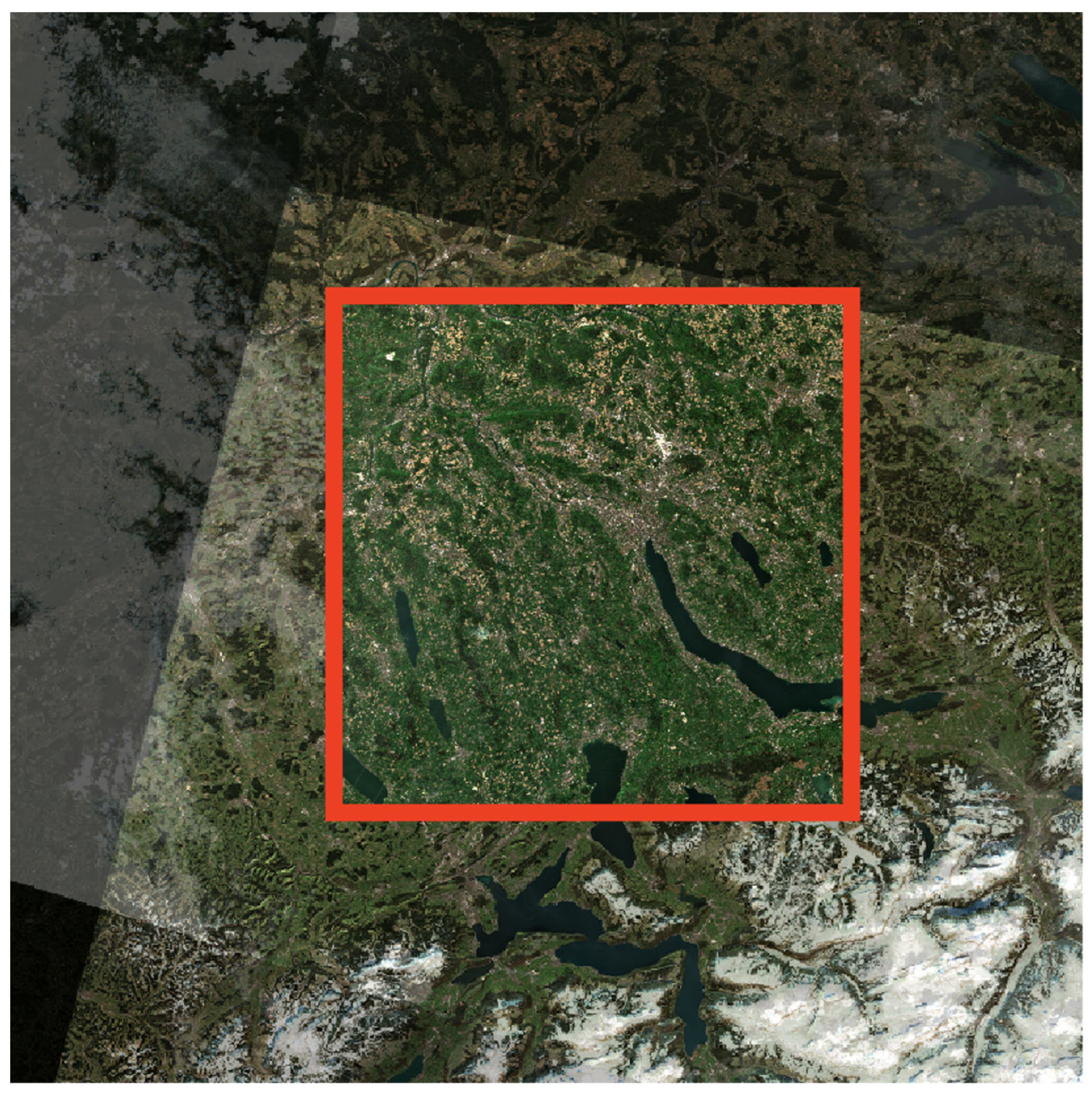

It remains challenging to make large environmental geodata sets available efficiently, especially when they take the form of time series. For example, scientists at the Swiss Federal Research Institute WSL aspire to provide open and easy access to modified Copernicus data for Switzerland (www.copernicus.eu, accessed on 10 August 2021), with a special interest in contemporary high-resolution data from the Sentinel missions [9]. Cloud-cleared composite reflectance or index products provided as analysis ready data (ARD) will relieve users from costly preprocessing steps [10,11]. The data volume produced by the Sentinel missions is substantial, with a single compressed (.zip) tile amounting to around 1 GB. This is because the Multi Spectral Instrument (MSI) of the Sentinel-2 (S2) mission produces high spatial resolution data (from 10 m to 60 m depending on the spectral band) in a total of 13 bands covering the visible to the short-wave infrared [12]. Climate model outputs are another example of large data volumes published by WSL scientists, such as the “High resolution climate data for Europe” [13] and the latest versions of the “Climatologies at high resolution for the Earth’s land surface areas” (CHELSA) [14]. These data sets contain climate model data such as temperature and precipitation patterns at high resolution for various time periods, in the form of gridded time series with thousands of layers for a single climate variable.

Environmental data publishing repositories need to undertake a substantial transformation towards managing and visualizing large datasets with web-based workflows. EnviDat (accessible at www.envidat.ch, accessed on 10 August 2021) is the environmental data portal of the Swiss Federal Research Institute WSL providing unified and managed access to environmental monitoring and research data. EnviDat actively promotes good practices for Open Science and Research Data Management (RDM) at WSL. It supports scientists with the formal publishing of environmental datasets from forest, landscape, biodiversity, natural hazards, and snow and ice research, according to FAIR (Findability, Accessibility, Interoperability, and Reusability) principles [15,16]. The scientists’ requirements are driving EnviDat’s forthcoming bottom-up evolution towards integrating comprehensive visualization functionalities, including semi-automated (meta)data visualization on interactive web maps for highly heterogeneous environmental data.

Visualizing and managing large environmental geodata sets in a data publishing repository is, however, not without challenges [17]. Even basic functionalities are quite demanding, for example: (1) automated display of the environmental data contents on an interactive 2D map and/or a 3D globe; (2) spatial, thematic, and temporal navigation through a very large number of environmental data layers of a time series; and (3) extracting and displaying data subsets over an entire time series for any interactively selected location on the map. Large environmental data layers cannot be efficiently configured and interactively delivered by traditional Spatial Data Infrastructures (SDIs) and conventional Web Map Services (WMS). Implementing and managing a local SDI requires substantial computing power and ample storage to support efficient web mapping, e.g., by creating tile caches. Furthermore, sampling, clipping, and subsetting smaller areas of interest from large time series would take a significant amount of time with any SDI’s standard geoprocessing services, thereby preventing the real-time parallel interactions of many concurrent users that interactive web applications require. Finally, existing web-mapping frameworks are not adapted to provide user-friendly navigation along a time axis for such large numbers of data layers [17].

The challenges of properly managing and visualizing large environmental time series were uncovered during an early proof-of-concept implementation of web-mapping requirements in EnviDat [17]. This proof-of-concept helped evaluate some of the features that could become part of EnviDat and highlighted the need for innovative concepts and technologies beyond the current state of the art [17]. Consequently, we conceptualized and implemented a new data hypercube format in order to provide user-friendly, fast, and efficient access to large time series of environmental raster data layers.

3. Results

3.1. Cloud Optimized Data Hypercube

The essential methods described in the previous section have allowed us to successfully define and implement a CORE data cube for storing a series of fifty full-size, non-tiled, S2 TCI raster layers. All results can be reproduced using free and open-source software (FOSS) libraries.

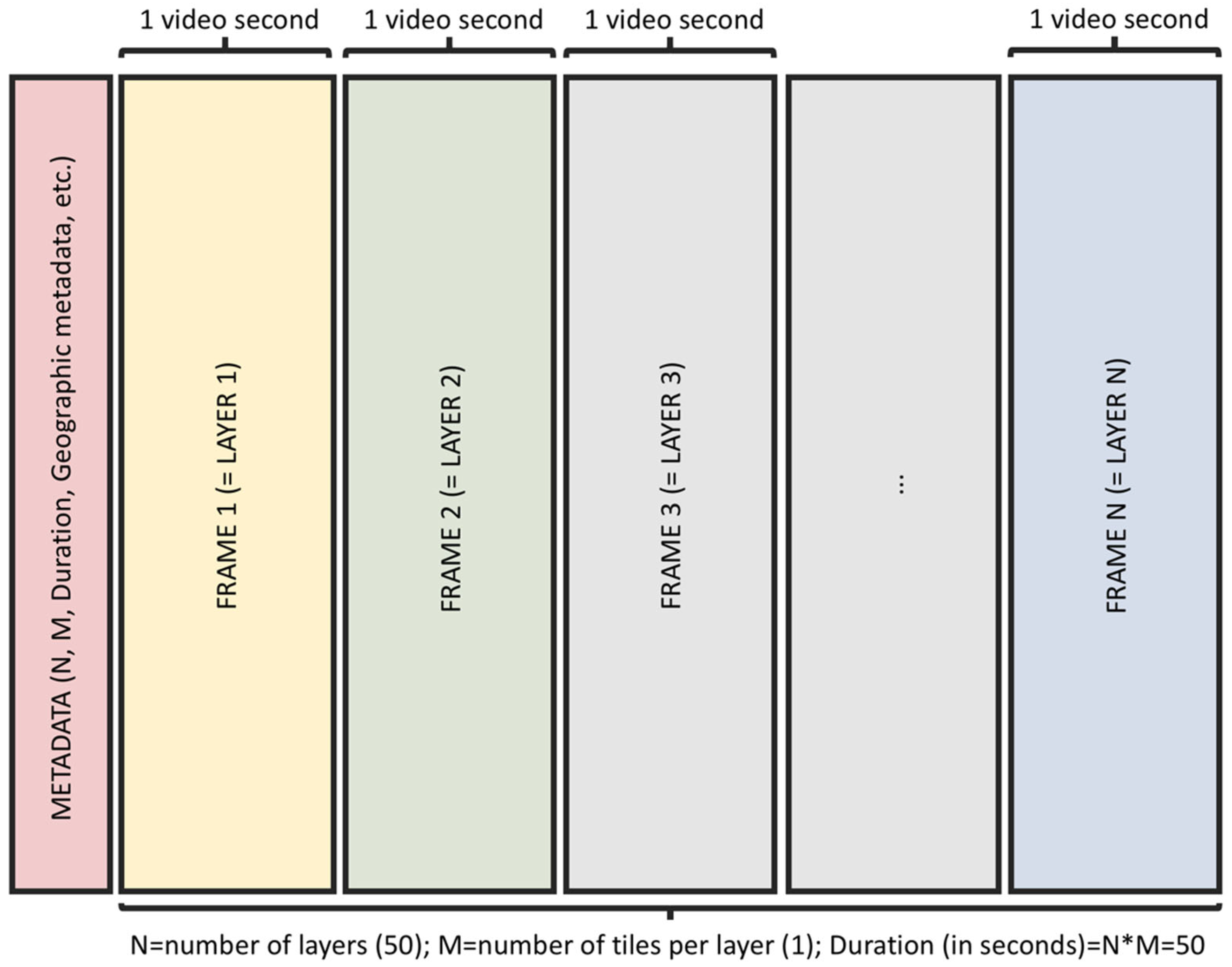

The CORE format combines multiple layers of a time series in streamable video files. CORE can be compared to an array of COGs, with all individual COGs compressed into one single video file. To introduce the CORE concept more easily, we have chosen a straightforward encoding of untiled layers. Consequently, in untiled CORE files, each layer is stored in one video frame at a frame rate of one frame per second (1 FPS), as sketched in Figure 3.
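As an illustration of untiled 1 FPS encoding, the sketch below builds an FFmpeg command line that turns a sequence of layer images into an H.264 MP4. This is an assumed workflow, not necessarily the exact command used in this study: the file-name pattern is hypothetical, and the default `yuv420p` pixel format is chosen for browser compatibility, which subsamples chroma (so even CRF 0 is then lossless only in YUV space, not relative to the RGB input).

```python
def untiled_core_command(frame_pattern: str, output: str, crf: int = 18,
                         pix_fmt: str = "yuv420p") -> list[str]:
    """Build an FFmpeg argument list for a hypothetical untiled CORE encode."""
    if not 0 <= crf <= 51:
        raise ValueError("libx264 CRF must be in 0..51 for 8-bit input")
    return [
        "ffmpeg",
        "-framerate", "1",          # read the image sequence at 1 FPS: one layer per second
        "-i", frame_pattern,        # e.g. layer_%03d.png, one image per layer in time order
        "-c:v", "libx264",          # H.264 encoder
        "-crf", str(crf),           # 0 = lossless (8-bit), higher = smaller and lossier
        "-pix_fmt", pix_fmt,        # yuv420p plays in most browsers but subsamples chroma
        "-movflags", "+faststart",  # move the moov atom to the start of the MP4 file
        output,
    ]


cmd = untiled_core_command("layer_%03d.png", "core_untiled.mp4", crf=23)
```

The list can be passed to `subprocess.run(cmd, check=True)` on a machine with an FFmpeg build that includes libx264. Building an argument list rather than a shell string avoids quoting problems with file names.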

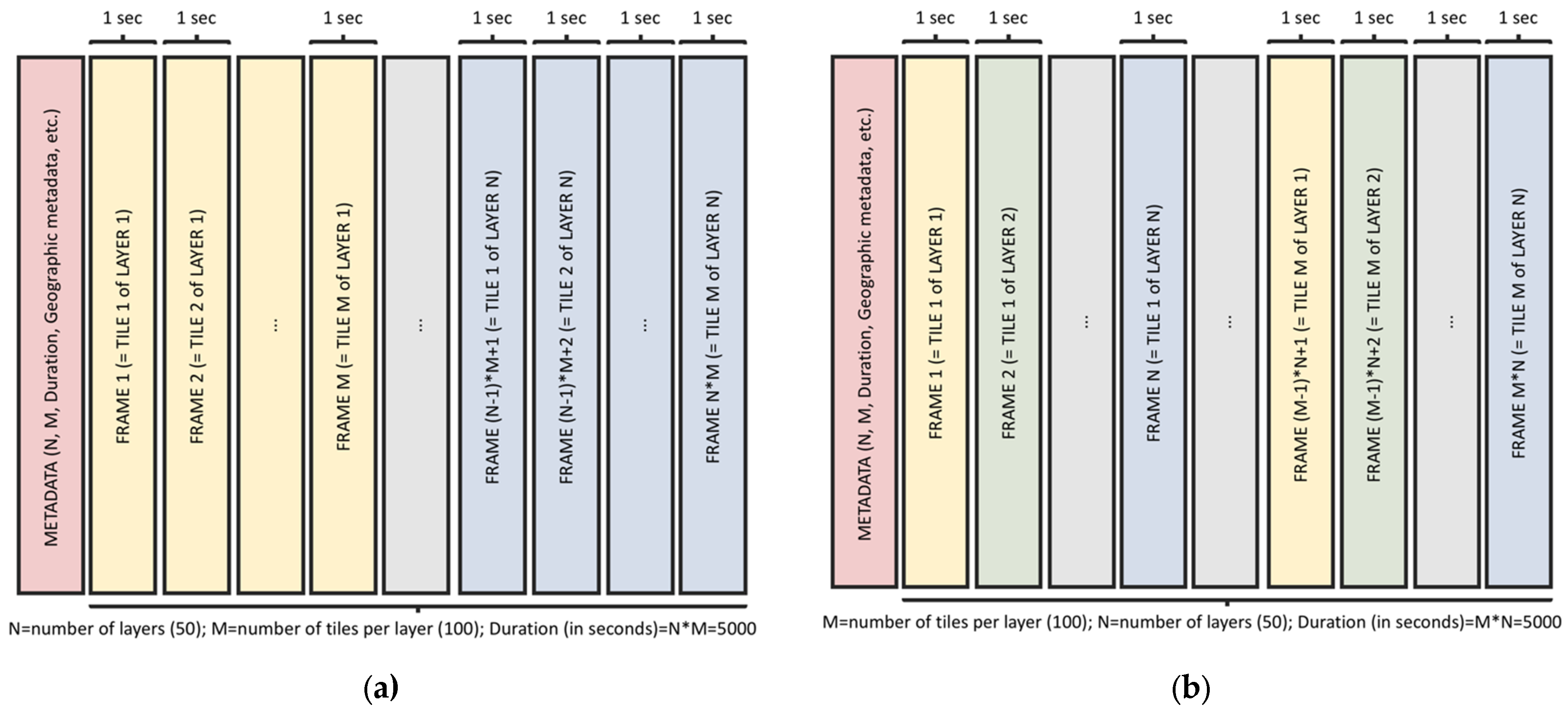

In production, the raster imagery should always be tiled, similarly to COG. The internal tile arrangement needs to support fast and efficient navigation inside the CORE video format. The order of tiles as video frames in CORE is determined by the main use case for accessing the data: either fast access to individual full layers or to specific subregions across all layers of the time series. This allows for an optimized contiguous retrieval of either layers or tiles.

Figure 4 sketches side-by-side the two modes of packing internal tiles in video frames: either layer contiguous (LC) or tile contiguous (TC).

Due to the 1 FPS encoding, the video duration in seconds equals the number of layers N multiplied by the number of tiles per layer M (N × M); hence, seeking and retrieving individual tiles or layers is straightforward. In our example S2A data, we have a total of 50 layers, with each layer segmented into 100 tiles. Therefore, in the S2A LC CORE example, the first 100 frames hold all the tiles of the first layer, the next 100 the second layer, and so on, with the last 100 frames of the video containing the tiles of the final layer of the time series. In the S2A TC CORE example, the first 50 frames hold the first tile of all 50 layers of the time series, followed by 50 frames containing the second tile of each of the 50 layers, and so on, with the last 50 frames containing the last tile over the entire time series.
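The frame ordering described above reduces to simple index arithmetic. The sketch below maps a (layer, tile) pair to its frame index, which at 1 FPS is also the seek time in seconds, and back again, for both the LC and TC modes. The defaults of 50 layers and 100 tiles follow the S2A example; the function names are illustrative.

```python
N_LAYERS = 50   # layers in the S2A example time series
M_TILES = 100   # internal tiles per layer in the S2A example


def frame_index(layer: int, tile: int, mode: str,
                n_layers: int = N_LAYERS, m_tiles: int = M_TILES) -> int:
    """Return the 0-based frame index; at 1 FPS this is also the timestamp in seconds."""
    if not (0 <= layer < n_layers and 0 <= tile < m_tiles):
        raise IndexError("layer or tile out of range")
    if mode == "LC":  # layer contiguous: all tiles of layer 0, then all tiles of layer 1, ...
        return layer * m_tiles + tile
    if mode == "TC":  # tile contiguous: tile 0 of every layer, then tile 1 of every layer, ...
        return tile * n_layers + layer
    raise ValueError(f"unknown tiling mode: {mode!r}")


def layer_and_tile(frame: int, mode: str,
                   n_layers: int = N_LAYERS, m_tiles: int = M_TILES) -> tuple[int, int]:
    """Inverse of frame_index: map a frame (or second, at 1 FPS) back to (layer, tile)."""
    if not 0 <= frame < n_layers * m_tiles:
        raise IndexError("frame out of range")
    if mode == "LC":
        return divmod(frame, m_tiles)  # (layer, tile)
    if mode == "TC":
        tile, layer = divmod(frame, n_layers)
        return layer, tile
    raise ValueError(f"unknown tiling mode: {mode!r}")
```

For example, `frame_index(1, 0, "LC")` returns 100, so the second layer of an LC file starts at 100 s. `frame_index(0, 1, "TC")` returns 50, so the second tile of the first layer in a TC file is found at 50 s. In both modes the last frame is 4999, matching the N × M = 5000 s duration.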

With the above CORE results, we confirm the initial Hypotheses H1 and H2, namely that a large raster time series can be organized as a hypercube in a single physical file (H1) that enables efficient web visualization through cloud-native access patterns (H2).

3.2. CORE Data Compression Gains

The third initial hypothesis concerns the possibility of achieving more efficient compression for raster time series (H3). Using the described reproducible methods, we generated a series of CORE files to analyze the compression of the entire input S2 TCI PNG time series (50 images). The CORE files were encoded with CRF values ranging from 0 (lossless) up to a maximum of 23, as represented in Figure 5.

In the lossless mode (CRF 0), the CORE file sizes range between 2.1 GB and 2.3 GB depending on the tiling schema, which is comparable to the input data size (2.3 GB). However, as visible in Figure 5, the CORE file sizes (in GB) decrease steadily with increasing CRF (from 0 to 23). The first significant compression gains are noted at CRF 1, which already halves the file sizes compared to the lossless versions. At CRF 17–18, the CORE files have become considerably smaller, at below one quarter of the corresponding CRF 0 versions. At CRF 23, the untiled CORE files have shrunk to almost one tenth of the size of the lossless versions. Furthermore, the file sizes of the LC variants are slightly larger than those of the TC variants, which are in turn marginally larger than those of the untiled (U) variants.

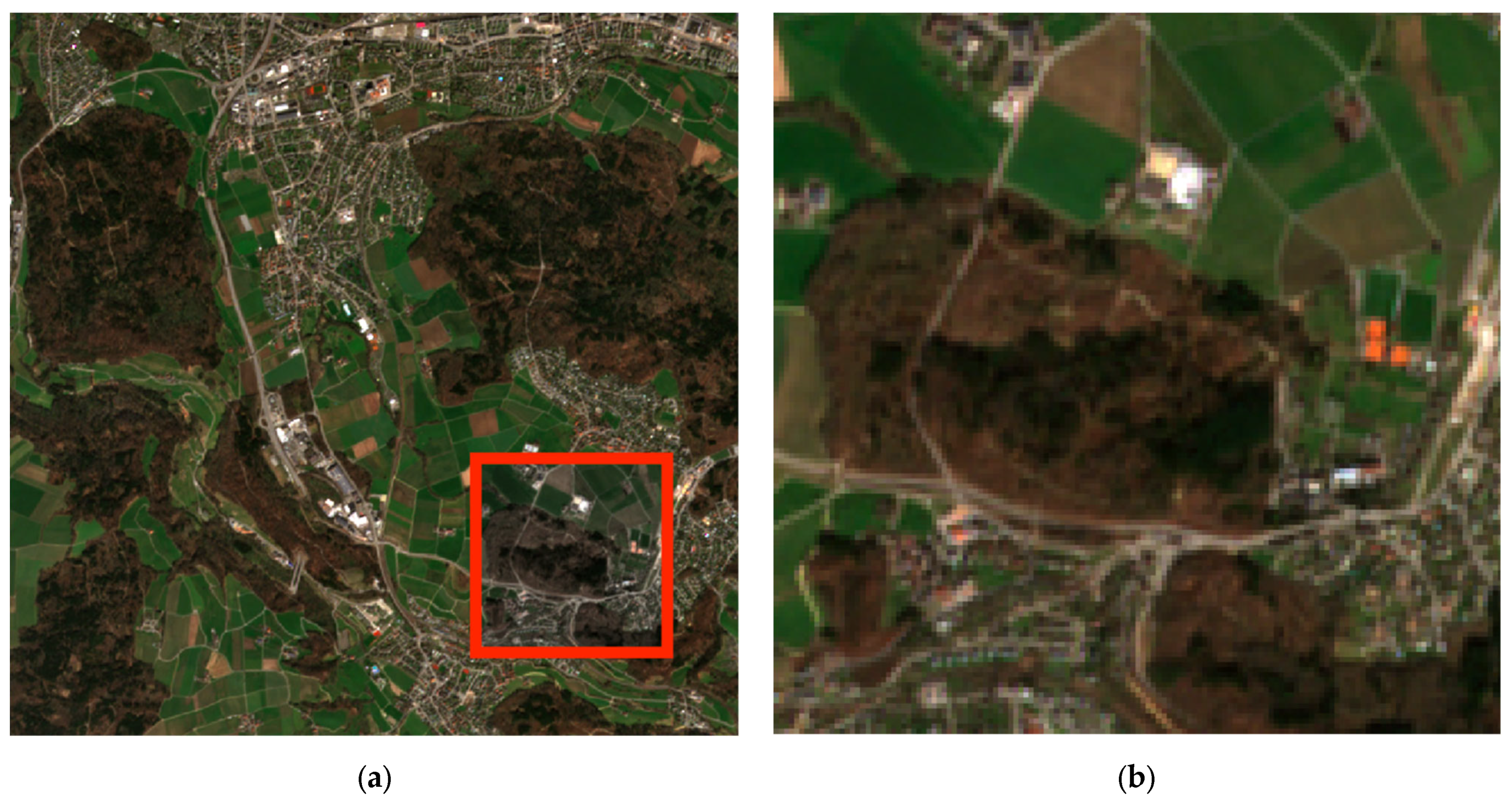

Since the file size reduction using the H.264 video codec was unexpectedly high, we exported the data from the corresponding CORE files in order to perform a visual data quality comparison with the original data. The ability of video encoding algorithms such as H.264 to preserve the perceived data quality is remarkable. A side-by-side visual comparison of selected highly zoomed regions is provided in Figure 6. CORE versions encoded with CRF 18 or less can be considered “visually lossless or nearly so” for human perception [22]. Even data layers encoded with CRF 23 still retain sufficient information to visually interpret the geographical features, as visible in Figure 6d.

Because the current work focuses on the definition of the CORE format, an in-depth appraisal of data quality is beyond its scope. However, a future quantitative analysis is necessary to fully assess the information loss corresponding to every CORE encoding level (CRF). The time-consuming evaluation of the fitness of CORE-encoded imagery requires the definition of objective indicators for quantifying the degradation of data quality for a variety of typical remote sensing applications.
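One widely used objective indicator that such an analysis could start from is the peak signal-to-noise ratio (PSNR) between original and decoded pixel values. The sketch below is only an illustration of that generic metric, not an indicator chosen in this study; it assumes 8-bit values flattened into equal-length sequences, and application-specific indicators would still be needed.

```python
import math
from typing import Sequence


def psnr(original: Sequence[int], decoded: Sequence[int], max_value: int = 255) -> float:
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(original) != len(decoded) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    # mean squared error over all pixel values
    mse = sum((a - b) ** 2 for a, b in zip(original, decoded)) / len(original)
    if mse == 0:
        return math.inf  # identical data, e.g. a truly lossless round trip
    return 10 * math.log10(max_value ** 2 / mse)
```

Computed per band and per layer for each CRF level, such a value would give a first, application-independent measure of the degradation visible in the exported layers.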

For the purpose of independently verifying the data quality degradation with increasing compression, we provide access to all data layers and tiles exported from the CORE files with CRF 00, CRF 01, CRF 18, and CRF 23 as georeferenced PNGs. The data are available in directories starting with “3_export_CORE_h264_” that correspond to a particular CRF and tiling mode (tile_contiguous, layer_contiguous, and untiled), for example “3_export_CORE_h264_18_untiled”. The lossless untiled export is directly accessible at https://envicloud.wsl.ch/#/?prefix=wsl/CORE_S2A/3_export_CORE_h264_00_untiled/ (accessed on 10 August 2021).
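For scripted access, the directory naming pattern described above can be enumerated programmatically. In this sketch, only the `00_untiled` URL is taken from the text; the other URLs assume that every combination of the four CRF levels and three tiling modes follows the same pattern under the same prefix.

```python
from itertools import product

# Prefix taken from the cited URL; other directories are assumed to follow the same pattern.
BASE_URL = "https://envicloud.wsl.ch/#/?prefix=wsl/CORE_S2A/"
CRF_LEVELS = (0, 1, 18, 23)
TILING_MODES = ("tile_contiguous", "layer_contiguous", "untiled")


def export_dir(crf: int, mode: str) -> str:
    """Directory name for one CRF level and tiling mode, e.g. 3_export_CORE_h264_18_untiled."""
    return f"3_export_CORE_h264_{crf:02d}_{mode}"


def export_urls() -> list[str]:
    """Expected export directory URLs (4 CRF levels x 3 tiling modes)."""
    return [f"{BASE_URL}{export_dir(crf, mode)}/"
            for crf, mode in product(CRF_LEVELS, TILING_MODES)]
```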

With the above results, we demonstrate that CORE files can compress RGB data to half of the lossless data size at near-lossless quality by using minimal lossy compression (CRF 1), thus supporting our initial hypothesis that more efficient compression can be achieved for raster time series (H3).

3.3. CORE Specifications

Based on the successful implementation of the new concept, we distill the following CORE format specifications:

A video digital multimedia container format such as MP4, WEBM, or any future video container playable as HTML video in major browsers;

Encoded using a free or open video compression codec such as H.264, AV1, or any future open-source/free video codec;

Encoded at a frame rate of one frame per second (1 FPS), with each data layer segmented into internal tiles, similar to COG, ordered according to the main use case for accessing the data (either LC or TC);

Optimized for streaming with the metadata placed at the beginning of the file;

Contains metadata tags for describing and using geographic raster data (similarly to the COG GeoTIFF) and CORE-specific metadata (number of layers, number of tiles, tiling schema, etc.);

Encodes similar source rasters, having the same image size and spatial resolution;

Provides lower resolution overviews for an optimized scale dependent visualization.
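Because the CORE-specific metadata is not yet standardized, the following is only a hypothetical sketch of how a reader or writer could represent and validate it. The field names are illustrative, not part of the specification; the checks encode the constraints listed above (1 FPS, a shared image size, an LC, TC, or untiled schema) and the resulting frame count and duration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreMetadata:
    """Hypothetical CORE-specific metadata; field names are illustrative, not standardized."""
    n_layers: int   # number of raster layers in the time series
    n_tiles: int    # internal tiles per layer (1 for untiled CORE files)
    tiling: str     # "LC", "TC", or "untiled"
    width: int      # pixel width shared by all source rasters
    height: int     # pixel height shared by all source rasters
    fps: int = 1    # CORE encodes one frame per second

    def __post_init__(self) -> None:
        if self.fps != 1:
            raise ValueError("CORE is encoded at 1 FPS")
        if self.tiling not in {"LC", "TC", "untiled"}:
            raise ValueError(f"unknown tiling schema: {self.tiling!r}")
        if self.n_layers < 1 or self.n_tiles < 1:
            raise ValueError("need at least one layer and one tile")
        if self.tiling == "untiled" and self.n_tiles != 1:
            raise ValueError("untiled CORE files store exactly one frame per layer")

    @property
    def n_frames(self) -> int:
        return self.n_layers * self.n_tiles

    @property
    def duration_seconds(self) -> int:
        # at 1 FPS the duration in seconds equals the number of frames
        return self.n_frames // self.fps
```

With 50 layers of 100 tiles each, as in the S2A example, `n_frames` and `duration_seconds` are both 5000.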

The above specifications are preliminary and subject to further improvement based on community feedback toward future standardization. To support full reproducibility, the CORE specifications and the data mentioned in this study are openly published in EnviDat under a Creative Commons 4.0–CC0 “No Rights Reserved” international license, accessible at https://doi.org/10.16904/envidat.230 [30], accessed on 10 August 2021.

4. Discussion and Outlook

Motivated by the challenges of managing and visualizing large raster time series in a future web-EGIS module of EnviDat, we developed and specified the CORE format. The CORE video files were created in web-native and streamable MP4 containers at 1 FPS. The 1 FPS frame rate is needed to avoid data loss caused by encoding optimizations at high frame rates, as well as to enable straightforward seeking of individual data frames. The CORE video data is made streamable by moving the metadata (moov atom) to the beginning of the MP4 file. Early access to metadata enables web browsers to access the video using cloud-native access patterns with HTTP range requests, without needing to download the entire file. Consequently, CORE supports fast data access when previewing and using geodata layers of a time series, a fast preview enabled by the inherent streaming capabilities of HTML5 video. H.264 is the fastest codec that we were able to apply in order to reliably create CORE files in a reasonable time. However, newer and improved open codecs exist, such as AV1, which is expected to achieve higher compression rates at the same perceptual quality compared with H.264 [28].
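Whether an MP4 file is streamable in this sense can be checked by listing its top-level boxes: in the ISO base media file format, each box starts with a 32-bit big-endian size (including the header) and a four-character type, where a size of 1 means a 64-bit size follows and a size of 0 means the box extends to the end of the file. The sketch below, a generic check rather than a CORE-specific tool, reports whether `moov` precedes `mdat`.

```python
import struct


def top_level_boxes(data: bytes) -> list[str]:
    """List the types of the top-level ISO BMFF (MP4) boxes in order."""
    boxes, pos = [], 0
    while pos + 8 <= len(data):
        size, box_type = struct.unpack(">I4s", data[pos:pos + 8])
        header = 8
        if size == 1:  # 64-bit "largesize" follows the type field
            if pos + 16 > len(data):
                break
            size = struct.unpack(">Q", data[pos + 8:pos + 16])[0]
            header = 16
        elif size == 0:  # box extends to the end of the file
            size = len(data) - pos
        if size < header:
            raise ValueError("corrupt box size")
        boxes.append(box_type.decode("latin-1"))
        pos += size
    return boxes


def is_streamable(data: bytes) -> bool:
    """True if the moov (metadata) box comes before the mdat (media data) box."""
    boxes = top_level_boxes(data)
    return "moov" in boxes and "mdat" in boxes and boxes.index("moov") < boxes.index("mdat")
```

For a small local file, `is_streamable(open("core.mp4", "rb").read())` is sufficient. For large files, a reader would instead read each 8- or 16-byte box header and seek past the box body.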

Further research has to address the limitations of the current work. First, the handling of internal overviews needs to be developed. Currently, the overviews are external, and the goal would be to chain overviews as lower resolution videos in the same MP4 container. Internal overviews will enable fast visualization of, and access to, a time series using a single CORE file, similarly to how COG works for single raster layers. Metadata encoding also needs to be improved, considering how best to encode the spatial extent, projection, layer names, and other geographical metadata. Properly defining the metadata for CORE files in future work is especially important for an appropriate implementation of the hypercube concept, where each of the layers of the time series (and, by extension, its corresponding tiles) may have a different geographical projection and/or theme. Additional research is also needed to apply CORE beyond RGB Byte datasets. For this purpose, we will need to define extended specifications for a standardized conversion of cell values from popular single-band GIS raster data types (such as Int16, UInt16, UInt32, Int32, and Float32) into multi-band red, green, blue, and alpha (RGBA) Byte values, and for reconstructing them back to the original data type within acceptable thresholds. For data types of at most 4 bytes, a standard translation to CORE is theoretically possible without information loss, given the evolution of modern video codecs towards encoding four-channel RGBA for web videos.
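One possible conversion of this kind splits each value's big-endian byte representation across the R, G, B, and A channels. The sketch below is a hypothetical scheme, not a CORE specification. It is exact only if the codec preserves all four 8-bit channels losslessly (e.g., CRF 0 without chroma subsampling and with alpha support). Also, Python floats that are not representable in Float32 are rounded when packed.

```python
import struct

# struct format codes for common single-band GIS raster types (big-endian)
FORMATS = {"Int16": ">h", "UInt16": ">H", "Int32": ">i", "UInt32": ">I", "Float32": ">f"}


def to_rgba(value, dtype: str) -> tuple[int, int, int, int]:
    """Split one cell value into four bytes for the R, G, B, and A channels."""
    raw = struct.pack(FORMATS[dtype], value)
    raw = raw.rjust(4, b"\x00")  # left-pad 2-byte types so that R and G are zero
    return tuple(raw)


def from_rgba(rgba, dtype: str):
    """Reassemble the original cell value from its RGBA bytes."""
    fmt = FORMATS[dtype]
    size = struct.calcsize(fmt)
    return struct.unpack(fmt, bytes(rgba)[4 - size:])[0]
```

For example, the Int16 value -123 becomes (0, 0, 255, 133), and the Float32 value 1.5 becomes (63, 192, 0, 0). Both decode back to the original values.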

In this study, fifty TCIs were selected to provide a realistic example of a data cube for time series, while keeping the processing time of subsequent steps at reasonable levels and enabling reproducibility on standard computing hardware. Future work will test the applicability of data hypercubes to the management of many thousands of original high-resolution RGB tiles for different products, noting that a High-Performance Computing (HPC) cluster will be needed to process and encode such a massive amount of imagery into CORE.

Finally, evaluating the fitness of CORE-encoded imagery for remote sensing applications is essential for future research and development of the CORE format. Lossless data compression with CRF 0 preserves the data values but forgoes significant compression gains. A slightly lossy encoding with CRF 1 cuts the data size in half, while compressing with higher CRF values reduces the CORE files to a fraction of the input. Consequently, we can obtain significant storage savings and faster network data transmission, but it is unknown how much lossy encoding various scientific applications can tolerate. We need objective indicators for assessing the information loss corresponding to different CORE CRF encoding levels, in order to accurately understand how compression-caused value errors will influence data or model uncertainty, especially for applications where error inflation in subsequent analysis is of concern.

5. Conclusions

User requirements related to mapping and visualization are transforming EnviDat into a next-generation environmental data-publishing portal that fuses publication repository functionalities with web-EGIS and EO data cube functionalities. The key CORE innovation is reusing and adapting COG principles for a collection of many layers in a time series. Time series of EO data or climate model outputs are especially suited for efficient encoding and publication in CORE, instead of in complex directory structures containing large numbers of individual files. The CORE format enables efficient storage and management of uniform gridded data by applying video encoding algorithms. The layers should be segmented into smaller internal tiles to enable faster access to the data, similarly to how COG works for single raster layers. The tiling schema should be adapted to the main data use case, by ordering the tiles according to a TC or LC approach. The CORE format has native support for data hypercubes: CORE can contain any number of raster data layers with uniform pixel size and image dimensions, irrespective of their theme or spatial extent. Another innovation is that CORE, unlike COG, specifically uses a web format container that can be decoded by major web browsers, hence enabling a web-EGIS to provide user-friendly, fast, and efficient access to CORE data hypercubes containing considerable numbers of raster data layers in a time series. The design of CORE is based on the COG principles for enabling more efficient workflows on the cloud, but adapted to solving web-EGIS visualization challenges for large environmental time series in geosciences.

The results obtained so far demonstrate a possible solution for the effective management and visualization of large environmental raster time series by significantly reducing their overall size at near-lossless quality. Consequently, CORE is suitable not only for faster web visualization but also for more efficient exchange and preservation of time series raster data in environmental data repositories such as EnviDat. Geospatial information has the potential to become a central element of any specialized environmental data portal. With the development of time series in CORE format, EnviDat will further improve its capabilities for the presentation, documentation, and exchange of scientific geoinformation. The CORE format specifications are openly available and can be used by other platforms to manage and visualize large environmental time series. We hope EnviDat can serve as a model for other FAIR platforms and repositories that are being specifically developed for the management and publication of environmental data.