The Impact of Artificial Intelligence on Future Aviation Safety Culture

Abstract

1. Introduction

1.1. Overview of Paper

1.2. Safety Culture—An Essential Ingredient of Aviation Safety

“The safety culture of an organization is the product of individual and group values, attitudes, perceptions, competencies, and patterns of behavior that determine the commitment to, and the status and proficiency of, an organization’s health and safety management.”

“Shared values (what is important) and beliefs (how things work) that interact with an organization’s structures and control systems to produce behavioral norms (the way we do things around here).”

1.3. The Emergence of a Safety Culture Evaluation Method in Aviation

1.4. Measuring Safety Culture

- Management commitment to safety

- Collaboration and involvement

- Just culture and reporting

- Communication and learning

- Colleague commitment to safety

- Risk handling

- Staff and equipment

- Procedures and training

1.5. Safety Culture and Future AI—An Unexplored Landscape

- The origins of AI;

- AI today;

- Generative AI;

- Narrow AI;

- Visions of future aviation human-AI systems;

- Trustworthy AI;

- Accountable AI;

- AI and just culture;

- Ethical AI;

- Maintaining human agency for safety;

- AI anthropomorphism and emotional AI;

- AI and safety governance.

2. The Developing Artificial Intelligence Landscape in Aviation

2.1. The Origins of Artificial Intelligence

2.2. AI Today

“…the broad suite of technologies that can match or surpass human capabilities, particularly those involving cognition”.

2.3. Generative AI

2.4. Narrow AI

- A lack of data: most AI systems have vast data appetites, which can also become a scalability issue when moving from research to wider industry. Even though aviation has a lot of data, much of it is not shared for reasons of commercial competition, and using ‘proxy’ or synthetically generated data risks diluting and distorting real operational experience;

- Business leaders lack even a basic understanding of the data science and the technological skills necessary to sustain operational applications of AI;

- A failure to develop the ‘social capital’ required to foster such a change, leading to users rejecting the AI tool’s implementation (for example, because it threatens job losses).

2.5. Visions of Future Human-AI Teaming Concepts in Aviation

- 1A—Machine learning support (existing today);

- 1B—Cognitive assistant (equivalent to advanced automation support);

- 2A—Cooperative agent, able to complete tasks as demanded by the operator;

- 2B—Collaborative agent, an autonomous agent that works with human colleagues, but which can take initiative and execute tasks, as well as being capable of negotiating with its human counterparts;

- 3A—AI executive agent: the AI is basically running the show, but there is human oversight, and the human can intervene (sometimes called management by exception);

- 3B—The AI is running everything, and the human cannot intervene.

- UC1—a cockpit IA to help a single pilot recover from a sudden event that may induce a ‘startle response’, directing the pilot to the instruments to focus on in order to resolve the emergency. This cognitive assistant is 1B in EASA’s categorization, and the pilot remains in charge throughout;

- UC2—a cockpit IA used to help flight crew re-route an aircraft to a new destination airport, for example because of deteriorating weather or an airport closure. The IA must consider a large number of factors, including category of aircraft, runway length, remaining fuel and distance to the airport, and the connections possible for individual passengers given their ultimate destinations. The flight crew remain in charge but communicate/negotiate with the AI to derive the optimal solution. This is category 2B;

- UC3—an IA that monitors and coordinates urban air traffic (drones and sky-taxis). The AI is an executive agent with a human overseer and is handling most of the traffic, with the human intervening only when necessary. This is category 3A;

- UC4—a digital assistant for remote tower operations, to ease the tower controller’s workload by carrying out repetitive tasks. The human monitors the situation and will intervene if there is a deviation from normal (e.g., a go-around situation, or an aircraft that fails to vacate the runway). This is therefore category 2A;

- UC5—a digital assistant to help airport safety staff deal with difficult incident patterns that are hard to eradicate, using data science techniques to analyze large, heterogeneous datasets. At the moment, this is a retrospective analysis approach, though if effective it could be made to operate in real-time, warning of impending incident occurrence or hotspots. This is currently 1A/1B, but could evolve to become 2A;

- UC6—a chatbot for passengers and airport staff to warn them in case of an outbreak of an airborne pathogen (such as COVID), telling passengers where to go in the airport to avoid contact with the pathogen. This is 1B.
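The pairing of use cases with EASA categories listed above can be captured as a small lookup structure. The following is a minimal Python sketch, not part of any EASA or HAIKU material: the names `AutonomyLevel`, `USE_CASES`, `highest_level`, `at_or_above` and `human_can_intervene` are hypothetical, the label given to 3B is illustrative wording (the list leaves 3B unnamed), and UC5 is recorded with its current categories only.

```python
from enum import Enum


class AutonomyLevel(Enum):
    """EASA human-AI teaming categories as summarized in the list above."""
    L1A = "Machine learning support"
    L1B = "Cognitive assistant"
    L2A = "Cooperative agent"
    L2B = "Collaborative agent"
    L3A = "AI executive agent (human can intervene)"
    L3B = "AI runs everything (human cannot intervene)"  # illustrative label


# Use case -> categories quoted in the text.
# UC5 is "currently 1A/1B"; its possible evolution to 2A is not encoded here.
USE_CASES = {
    "UC1": [AutonomyLevel.L1B],
    "UC2": [AutonomyLevel.L2B],
    "UC3": [AutonomyLevel.L3A],
    "UC4": [AutonomyLevel.L2A],
    "UC5": [AutonomyLevel.L1A, AutonomyLevel.L1B],
    "UC6": [AutonomyLevel.L1B],
}

# Enum members iterate in definition order, which here is also autonomy order.
ORDER = list(AutonomyLevel)


def highest_level(use_case: str) -> AutonomyLevel:
    """Return the most autonomous category assigned to a use case."""
    return max(USE_CASES[use_case], key=ORDER.index)


def at_or_above(level: AutonomyLevel) -> list[str]:
    """List the use cases whose highest category is at or above `level`."""
    threshold = ORDER.index(level)
    return sorted(
        uc for uc in USE_CASES
        if ORDER.index(highest_level(uc)) >= threshold
    )


def human_can_intervene(level: AutonomyLevel) -> bool:
    """Only 3B removes the human's ability to intervene."""
    return level is not AutonomyLevel.L3B
```

For instance, `at_or_above(AutonomyLevel.L2A)` returns `['UC2', 'UC3', 'UC4']`, the use cases categorized at 2A or higher.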

2.6. The Need for Trustworthy AI in Safety-Critical Systems

- Human agency and oversight;

- Robustness and safety;

- Privacy and data governance;

- Transparency;

- Diversity;

- Non-discrimination and fairness;

- Societal and environmental wellbeing;

- Accountability.

2.7. Accountability, Certification, and the Double-Bind

2.8. AI and Just Culture in Aviation

“Just Culture means a culture in which front-line operators or other persons are not punished for actions, omissions or decisions taken by them that are commensurate with their experience and training, but in which gross negligence, willful violations and destructive acts are not tolerated”.

“The burden of responsibility gravitates towards the organization to provide sufficient and appropriate training for air traffic controllers. If they are not well trained, it will be hard to blame them for actions, omissions or decisions arising from AI/ML situations…”.

“The functioning of AI challenges traditional tests of intent and causation, which are used in virtually every field of law”.

2.9. Ethical AI—Maintaining Meaningful Human Work

- Respect for human autonomy: AI systems should not subordinate, coerce, deceive, manipulate, condition or herd humans. AI systems should augment, complement and empower human cognitive, social and cultural skills, leave opportunity for human choice and secure human oversight over work processes, and support the creation of meaningful work;

- Prevention of harm: AI must not cause harm or adversely affect humans, and should protect human dignity, and not be open to malicious use or adverse effects because of information asymmetries or unequal balance of power;

- Fairness: This principle links to solidarity and justice, including redress against decisions made by AI or the companies operating/making them.

2.10. Human Agency for Safety—Maintaining Safety Citizenship

- Safety role ambiguity;

- Safety role conflict;

- Role overload;

- Job insecurity;

- Job characteristics;

- Interpersonal safety conflicts;

- Safety restrictions.

2.11. AI Anthropomorphism and Emotional AI

2.12. Governance of AI, and Organizational Leadership

“It seems counter-intuitive, then, to categorize the level of automation by degree of autonomous control gained over human control lost, when in practice both are needed to ensure safety.” [48]

“Machines are good at doing things right; humans are good at doing the right thing.”

- Where is AI the right solution?

- Do we have the right data?

- Do we have the right computing power?

- Do we have fit-for-purpose models?

“Failures in leadership and organizational safety culture led to the Nimrod incident where the aircraft developed serious technical failures, preceded by deficiencies in safety case and a lack of proper documentation and communication between the relevant organizations”.

On p. 474: “The ownership of risk is fragmented and dispersed, and there is a lack of clear understanding or guidance what levels of risk can be owned/managed/mitigated and by whom”.

And, p. 403: “These organizational failures were both failure of leadership and collective failures to keep safety and airworthiness at the top of the agenda despite the seas of change during the period”.

3. Safety Culture Evaluation of Future Human–AI Teaming in Aviation

3.1. Materials and Method

3.2. Methodological Shortcomings and Countermeasures

4. Results

- High impact: Colleague commitment to safety, just culture and reporting, risk handling;

- Medium impact: Staff and equipment, procedures and training, communication and learning, collaboration and involvement;

- Low impact: Management commitment to safety.

5. Discussion of Results

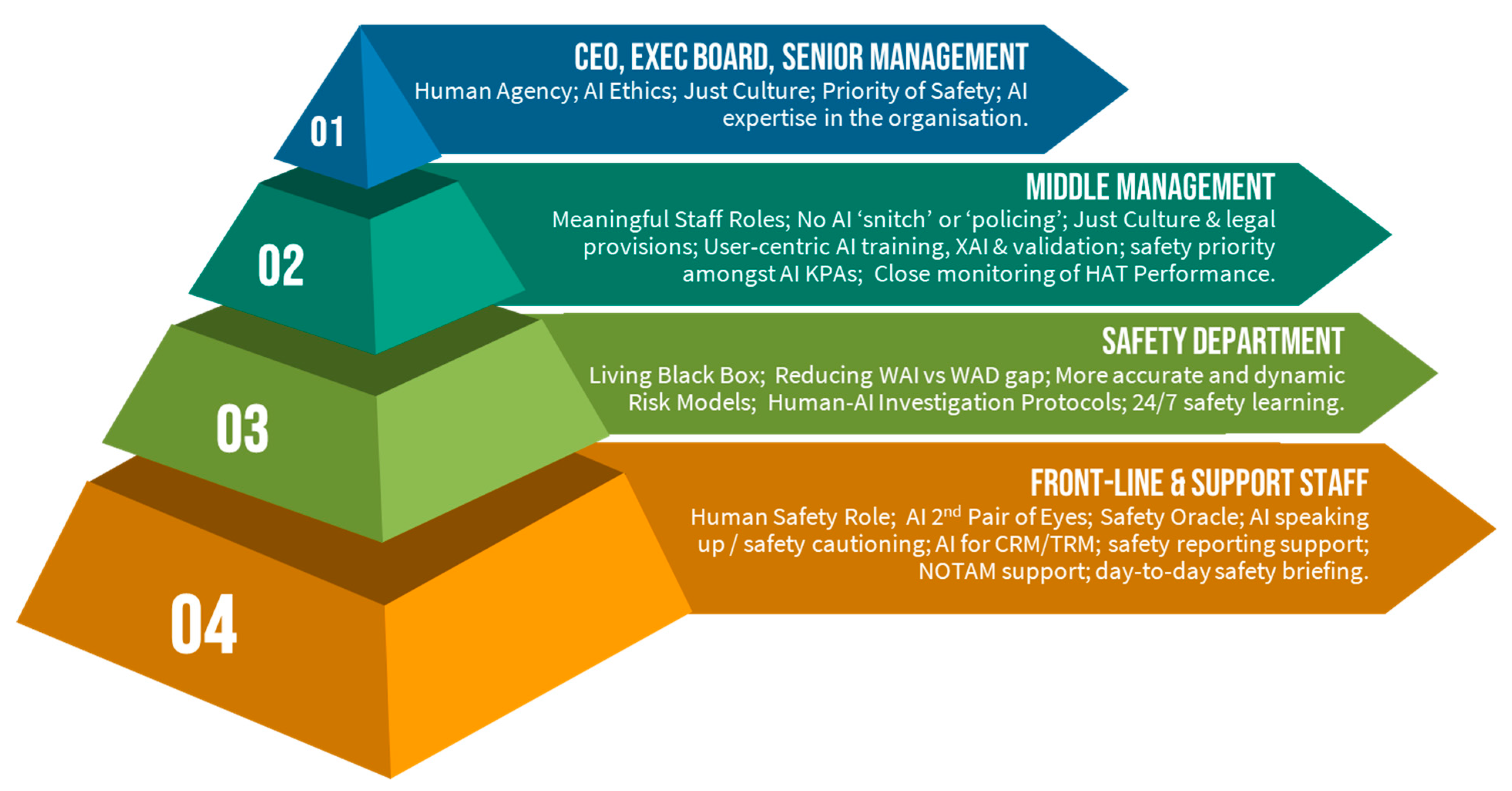

5.1. Safeguards and Organizational Risk Owners

5.2. Further Research Needed

- Just culture—if just culture is to be preserved, rationales and arguments need to be developed that will stand up in courts of law. These must protect crew and workers who made an honest (i.e., a priori reasonable) judgement about whether to follow AI advice, and whether to intervene, contravening AI autonomous actions seen as potentially dangerous. Such development of just culture argumentation and supporting principles regarding AI and human-AI teaming should include simulated test cases being run in ‘legal sandboxes’.

- Safety management systems (SMS), the key counterpart of safety culture in aviation, will also need to adapt to higher levels of AI autonomy, as is already being suggested in [31,33]. This will probably require new thinking and new approaches, for example with respect to the treatment of human-AI teaming in risk models, rather than simply producing ‘old wine in new bottles.’ SMS maturity models, such as those used in air traffic organizations around the globe [52], will also need to adapt to address advanced AI integration into operations.

- Human factors have a key role to play in the development of human-AI teaming [53], especially if such systems are truly intended to be human-centric. This will require co-development work between human factors practitioners/researchers and data scientists/AI developers, so that the human—who after all literally has ‘skin in the game’—is always fully represented in the determination of optimal solutions for explainability, team-working, shared situation awareness, supervised learning, human-AI interaction means and devices, and training strategies. Several new research projects are paving the way forward. This applied research focus needs to be sustained, and a clear method developed for assuring usable and trustworthy human-AI teaming arrangements.

- There are currently several human-AI teaming options on the table (e.g., from EASA’s 1B to 3A; see also [54]), with 2A, 2B and 3A offering the most challenges to the human’s agency for safety, and hence the most potential impacts on safety culture. Yet these are also the levels of AI autonomy that could bring significant safety advantages. It would be useful, therefore, to explore the actual relative safety advantages and concomitant risks of these and other AI autonomy levels, via risk evaluations of aviation safety-related use cases. Such analysis could lead to common and coordinated design philosophies and practices for aviation system manufacturers.

- Inter-sector collaboration will be beneficial, whether between human-AI teaming developments in different transport modalities (e.g., road, sea, and rail) or different industry sectors, including the military, who are likely to be most challenged with both ethical dilemmas and high intensity, high-risk human-AI team-working. This paper has already highlighted learning points from maritime and military domains for aviation, so closer collaboration is likely to be beneficial. At the least, collaboration between the transport domains makes sense, given that in the foreseeable future, AIs from different transport modes will likely be interacting with each other.

6. Limitations of the Study and Further Work

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- EUROSTAT. Air Safety Statistics in the EU. 2023. Available online: https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Air_safety_statistics_in_the_EU&oldid=587873 (accessed on 27 March 2024).

- Guldenmund, F. Understanding safety culture through models and metaphors: Taking stock and moving forward. In Safety Cultures, Safety Models; Gilbert, C., Journé, B., Laroche, H., Bieder, C., Eds.; Springer Open: Cham, Switzerland, 2018.

- Cox, S.; Flin, R. Safety Culture: Philosopher’s Stone or Man of Straw? Work Stress 1998, 12, 189–201.

- Zohar, D. Thirty years of safety climate research: Reflections and future directions. Accid. Anal. Prev. 2010, 42, 1517–1522.

- Reader, T.W.; Noort, M.C.; Kirwan, B.; Shorrock, S. Safety sans frontieres: An international safety culture model. Risk Anal. 2015, 35, 770–789.

- Advisory Committee on the Safety of Nuclear Installations (ACSNI) Study Group. Third Report: Organizing for Safety; H.M. Stationery Office: Sheffield, UK, 1993.

- IAEA. Safety Culture; Safety Series No. 75-INSAG-4; International Atomic Energy Agency: Vienna, Austria, 1991.

- Cullen, D. The Public Enquiry into the Piper Alpha Disaster; HMSO: London, UK, 1990.

- Hidden, A. Investigation into the Clapham Junction Railway Accident; HMSO: London, UK, 1989.

- Turner, R.; Pidgeon, N. Man-Made Disasters, 2nd ed.; Butterworth-Heinemann: Oxford, UK, 1997.

- Reason, J.T. Managing the Risks of Organizational Accidents; Ashgate: Aldershot, UK, 1997.

- AAIB. Report No: 4/1990. Report on the Accident to Boeing 737-400, G-OBME, near Kegworth, Leicestershire on 8 January 1989; Air Accident Investigation Board, Dept of Transport: Hampshire, UK, 1990. Available online: https://www.gov.uk/aaib-reports/4-1990-boeing-737-400-g-obme-8-january-1989 (accessed on 27 March 2024).

- Nunes, A.; Laursen, T. Identifying the factors that led to the Uberlingen mid-air collision: Implications for overall system safety. In Proceedings of the 48th Annual Chapter Meeting of the Human Factors and Ergonomics Society, New Orleans, LA, USA, 20–24 September 2004.

- ANSV. Accident Report 20A-1-04, Milan Linate Airport 8 October 2001. Agenzia Nazionale Per La Sicurezza Del Volo, 00156 Rome. 20 January 2004. Available online: https://skybrary.aero/bookshelf/ansv-accident-report-20a-1-04-milan-linate-ri (accessed on 27 March 2024).

- Mearns, K.; Kirwan, B.; Reader, T.W.; Jackson, J.; Kennedy, R.; Gordon, R. Development of a methodology for understanding and enhancing safety culture in Air Traffic Management. Saf. Sci. 2011, 53, 123–133.

- Noort, M.; Reader, T.W.; Shorrock, S.; Kirwan, B. The relationship between national culture and safety culture: Implications for international safety culture assessments. J. Occup. Organ. Psychol. 2016, 89, 515–538.

- Kirwan, B.; Shorrock, S.T.; Reader, T. The Future of Safety Culture in European ATM—A White Paper; EUROCONTROL: Brussels, Belgium, 2021; Available online: https://skybrary.aero/bookshelf/future-safety-culture-european-air-traffic-management-white-paper (accessed on 27 March 2024).

- Kirwan, B.; Reader, T.W.; Parand, A.; Kennedy, R.; Bieder, C.; Stroeve, S.; Balk, A. Learning Curve: Interpreting the Results of Four Years of Safety Culture Surveys; Aerosafety World, Flight Safety Foundation: Alexandria, VA, USA, 2019.

- Kirwan, B. CEOs on Safety Culture; A EUROCONTROL-FAA Action Plan 15 White Paper. October; EUROCONTROL: Brussels, Belgium, 2015.

- Zweifel, T.D.; Vyal, V. Crash: BOEING and the power of culture. J. Intercult. Manag. Ethics Issue 2021, 4, 13–26.

- Dias, M.; Teles, A.; Lopes, R. Could Boeing 737 Max crashes be avoided? Factors that undermined project safety. Glob. Sci. J. 2020, 8, 187–196.

- Turing, A.M.; Copeland, B.J. The Essential Turing: Seminal Writings in Computing, Logic, Philosophy, Artificial Intelligence, and Artificial Life Plus the Secrets of Enigma; Oxford University Press: Oxford, UK, 2004.

- Turing, A.M. Computing machinery and intelligence. Mind 1950, 59, 433–460.

- Pearl, J.; Mackenzie, D. The Book of Why: The New Science of Cause and Effect; Penguin: London, UK, 2018.

- European Commission. CORDIS Results Pack on AI in Air Traffic Management: A Thematic Collection of Innovative EU-Funded Research results. October 2022. Available online: https://www.sesarju.eu/node/4254 (accessed on 27 March 2024).

- DeCanio, S. Robots and Humans—Complements or substitutes? J. Macroecon. 2016, 49, 280–291.

- Kaliardos, W. Enough Fluff: Returning to Meaningful Perspectives on Automation; FAA, US Department of Transportation: Washington, DC, USA, 2023. Available online: https://rosap.ntl.bts.gov/view/dot/64829 (accessed on 27 March 2024).

- Wikipedia on ChatGPT. 2022. Available online: https://en.wikipedia.org/wiki/ChatGPT (accessed on 27 March 2024).

- Uren, V.; Edwards, J.S. Technology readiness and the organizational journey towards AI adoption: An empirical study. Int. J. Inf. Manag. 2023, 68, 102588.

- Dafoe, A. AI Governance—A Research Agenda. Future of Humanity Institute. 2017. Available online: https://www.fhi.ox.ac.uk/ai-governance/#1511260561363-c0e7ee5f-a482 (accessed on 27 March 2024).

- EASA. EASA Concept Paper: First Usable Guidance for Level 1 & 2 Machine Learning Applications. February 2023. Available online: https://www.easa.europa.eu/en/newsroom-and-events/news/easa-artificial-intelligence-roadmap-20-published (accessed on 27 March 2024).

- EU Project Description for HAIKU. Available online: https://cordis.europa.eu/project/id/101075332 (accessed on 27 March 2024).

- HAIKU Website. Available online: https://haikuproject.eu/ (accessed on 27 March 2024).

- SAFETEAM EU Project. 2023. Available online: https://safeteamproject.eu/ (accessed on 27 March 2024).

- Eurocontrol. Technical Interchange Meeting (TIM) on Human-Systems Integration; Eurocontrol Innovation Hub: Bretigny sur Orge, France, 2023; Available online: https://www.eurocontrol.int/event/technical-interchange-meeting-tim-human-systems-integration (accessed on 27 March 2024).

- Diaz-Rodriguez, N.; Ser, J.D.; Coeckelbergh, M.; de Pardo, M.L.; Herrera-Viedma, E.; Herrera, F. Connecting the dots in trustworthy AI: From AI principles, ethics and key requirements to responsible AI systems and Regulation. Inf. Fusion 2023, 99, 101896.

- Baumgartner, M.; Malakis, S. Just Culture and Artificial Intelligence: Do We Need to Expand the Just Culture Playbook? Hindsight 35, November; EUROCONTROL: Brussels, Belgium, 2023; pp. 43–45. Available online: https://skybrary.aero/articles/hindsight-35 (accessed on 27 March 2024).

- Kumar, R.S.S.; Snover, J.; O’Brien, D.; Albert, K.; Viljoen, S. Failure Modes in Machine Learning; Microsoft Corporation & Berkman Klein Center for Internet and Society at Harvard University: Cambridge, MA, USA, 2019.

- Franchina, F. Artificial Intelligence and the Just Culture Principle; Hindsight 35, November; EUROCONTROL: Brussels, Belgium, 2023; pp. 39–42. Available online: https://skybrary.aero/articles/hindsight-35 (accessed on 27 March 2024).

- Ramchurn, S.D.; Stein, S.; Jennings, N.R. Trustworthy human-AI partnerships. IScience 2021, 24, 102891.

- European Commission. Ethics Guidelines for Trustworthy AI. High Level Expert Group (HLEG) on Ethics and AI. 2019. Available online: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai (accessed on 27 March 2024).

- Lees, M.J.; Johnstone, M.C. Implementing safety features of Industry 4.0 without compromising safety culture. Int. Fed. Autom. Control (IFAC) Pap. Online 2021, 54, 680–685.

- Macey-Dare, R. How Soon Is Now? Predicting the Expected Arrival Date of AGI-Artificial General Intelligence. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4496418 (accessed on 27 March 2024).

- Schecter, A.; Hohenstein, J.; Larson, L.; Harris, A.; Hou, T.; Lee, W.; Lauharatanahirun, N.; DeChurch, L.; Contractor, N.; Jung, M. Vero: An accessible method for studying human-AI teamwork. Comput. Hum. Behav. 2023, 141, 107606.

- Zhang, G.; Chong, L.; Kotovsky, K.; Cagan, J. Trust in an AI versus a Human teammate: The effects of teammate identity and performance on Human-AI cooperation. Comput. Hum. Behav. 2023, 139, 107536.

- Ho, M.-T.; Mantello, P.; Ho, M.-T. An analytical framework for studying attitude towards emotional AI: The three-pronged approach. MethodsX 2023, 10, 102149.

- European Commission. Proposal for a Regulation Laying down Harmonised Rules on Artificial Intelligence. 21 April 2021. Available online: https://data.consilium.europa.eu/doc/document/ST-8115-2021-INIT/en/pdf (accessed on 27 March 2024).

- Veitch, E.; Alsos, O.A. A systematic review of human-AI interaction in autonomous ship design. Saf. Sci. 2022, 152, 105778.

- UK Ministry of Defence. Defence Artificial Intelligence Strategy. 2022. Available online: https://www.gov.uk/government/publications/defence-artificial-intelligence-strategy (accessed on 27 March 2024).

- Grote, G. Safety and autonomy—A contradiction forever? Saf. Sci. 2020, 127, 104709.

- Haddon-Cave, C. An Independent Review into the Broader Issues Surrounding the Loss of the RAF Nimrod MR2 Aircraft XV230 in Afghanistan in 2006; HMSO: London, UK, 2009; ISBN 9780102962659.

- CANSO. CANSO (Civil Air Navigation Services Organisation) Standard of Excellence in Safety Management Systems. 2023. Available online: https://canso.org/publication/canso-standard-of-excellence-in-safety-management-systems (accessed on 27 March 2024).

- CIEHF. The Human Dimension in Tomorrow’s Aviation System; Chartered Institute for Ergonomics and Human Factors (CIEHF): Loughborough, UK, 2020; Available online: https://ergonomics.org.uk/resource/tomorrows-aviation-system.html (accessed on 27 March 2024).

- Dubey, A.; Abhinav, K.; Jain, S.; Arora, V.; Puttaveerana, A. HACO: A framework for developing Human-AI Teaming. In Proceedings of the 13th Innovations in Software Engineering Conference (ISEC), Jabalpur, India, 27–29 February 2020; pp. 1–9.

| Questionnaire Item | Dimension | IA Impact | H/M/L |

|---|---|---|---|

| B01 My colleagues are committed to safety. | Colleague commitment to safety | The IA would effectively be a digital colleague. The IA’s commitment to safety would likely be judged according to the IA’s performance. Human-supervised training, using domain experts with the IA, would help engender trust. The concern is that humans might ‘delegate’ some of their responsibility to the IA. A key issue here is to what extent the IA sticks rigidly to ‘golden rules’, such as aircraft separation minima (5NM lateral separation and 1000 feet vertical separation) or is slightly flexible about them as controllers may (albeit rarely) need to be. The designer needs to decide whether to ‘hard code’ some of these rules or allow a little leeway (within limits); this determines whether the IA behaves like ‘one of the guys’ or never, ever breaks rules. | High |

| B04 Everyone I work with in this organization feels that safety is their personal responsibility. | Colleague commitment to safety | Since an IA cannot effectively take responsibility, someone else may be held accountable for an IA’s ‘actions’. If a supervisor fails to see an IA’s ‘mistake’, who will be blamed? HAIKU use cases may shed light on this, if there can be scenarios where the IA gives ‘poor’ or incorrect advice. If an IA is fully autonomous, this may affect the human team’s collective sense of responsibility, since in effect they can no longer be held responsible. | High |

| B07 I have confidence in the people that I interact with in my normal working situation. | Colleague commitment to safety | As for B01, this will be judged according to performance. Simulator training with IAs should help pilots and others ‘calibrate’ their confidence in the IA. This may overlap significantly with B01. | High |

| B02 Voicing concerns about safety is encouraged. | Just culture and reporting | The IA could ‘speak up’ if a key safety concern is not being discussed or has been missed. This could be integrated into crew resource management (CRM) and threat and error management (TEM) practices, and team resources management (TRM) in air traffic management. However, then the IA may be considered a ‘snitch’, a tool of management to check up on staff. This could also be a two-way street, so that the crew could report on the IA’s performance. | High |

| B08 People who report safety related occurrences are treated in a just and fair manner. | Just culture and reporting | The IA could monitor and record all events and interactions in real time and would be akin to a ‘living’ black box recorder. This could affect how humans behave and speak around the IA, if AI ‘testimony’ via data forensics was ever used against a controller in a disciplinary or legal prosecution case. | High |

| B12 We get timely feedback on the safety issues we raise. | Just culture and reporting | The IA could significantly increase reporting rates, depending on how its reporting threshold is set, and record and track how often a safety issue is raised. | Medium |

| B14 If I see an unsafe behavior by a colleague I would talk to them about it. | Just culture and reporting | [See also B02] The IA can ‘query’ behavior or decisions that may be unsafe. Rather than ‘policing’ the human team, the IA could possibly bring the risk to the human’s attention more sensitively, as a query. | High |

| B16 I would speak to my manager if I had safety concerns about the way that we work. | Just culture and reporting | If managers have full access to IA records, the IA might become a ‘snitch’ for management. This would most likely be a deal-breaker for honest team-working. | Low |

| C01 Incidents or occurrences that could affect safety are properly investigated. | Just culture and reporting | As for B08, the IA’s record of events could shed light on the human colleagues’ states of mind and decision-making. There needs to be safeguards around such use, however, so that it is only used for safety learning. | High |

| C06 I am satisfied with the level of confidentiality of the reporting and investigation process. | Just culture and reporting | As for B16, the use of IA recordings as information or even evidence during investigations needs to be considered. Just culture policies will need to adapt/evolve to the use of IAs in operational contexts. | High |

| C09 A staff member prosecuted for an incident involving a genuine error or mistake would be supported by the management of this organization. | Just culture and reporting | This largely concerns management attitudes to staff and provision of support. However, the term ‘genuine error or mistake’ needs to encompass the human choice between following IA advice which turns out to be wrong, and ignoring such advice which turns out to be right, since in either case there was no human intention to cause harm. This can be enshrined in just culture policies, but judiciaries (and the travelling public) may take an alternative viewpoint. In the event of a fatal accident, black-and-white judgements sharpened by hindsight may be made, which do not reflect the complexity of IA’s and human-AI teams’ operating characteristics and the local rationality at the time. | High |

| C13 Incident or occurrence reporting leads to safety improvement in this organization. | Just culture and reporting | This is partly administrative and depends on financial costs of safety recommendations. Nevertheless, the IA may be seen as adding dispassionate evidence and more balanced assessment of severity, and how close an event came to being an accident (e.g., via Bayesian and other statistical analysis techniques). It will be interesting to see if the credence given to the IA by management is higher than that given to its human counterparts. | High |

| C17 A staff member who regularly took unacceptable risks would be disciplined or corrected in this organization. | Just culture and reporting | As for C09, an IA may know an individual who takes more risks than others. However, there is a secondary aspect, linked to B07, that the IA may be trained by humans, and may be biased by their own level of risk tolerance and safety–productivity trade-offs. If an IA is offering solutions judged too risky, or conversely ‘too safe’, nullifying operational efficiency, the IA will need ‘re-training’ or re-coding. | High |

| B03 We have sufficient staff to do our work safely. | Staff and equipment | Despite many assurances that AI will not replace humans, many see strong commercial imperatives for doing exactly that (e.g., a shortage of commercial pilots and impending shortage of air traffic controllers, post-COVID low return-to-work rate at airports, etc.). | High |

| B23 We have support from safety specialists. | Staff and equipment | The IA could serve as a ‘safety encyclopedia’ for its team, with all safety rules, incidents and risk models stored in its knowledge base. | Medium |

| C02 We have the equipment needed to do our work safely. | Staff and equipment | The perceived safety value of IAs will depend on how useful the IA is for safety and will be a major question for the HAIKU use cases. One ‘wrong call’ could have a big impact on trust. | High |

| B05 My manager is committed to safety. | Management commitment to safety | The advent of IAs needs to be discussed with senior management, to understand if it affects their perception of who/what is keeping their organization safe. They may come to see the IA as a more manageable asset than people, one that can be ‘turned up or down’ with respect to safety. | High |

| B06 Staff have high trust in management regarding safety. | Management commitment to safety | Conversely, operational managers may simply be reluctant to allow the introduction of IAs into the system, because of both safety and operational concerns. | Medium |

| B10 My manager acts on the safety issues we raise. | Management commitment to safety | See C13 above. | Low |

| B19 Safety is taken seriously in this organization. | Management commitment to safety | Depends on how much the IA focuses on safety. The human team will watch the IA’s ‘behavior’ closely and judge for themselves whether the IA is there for safety or for other purposes. These could include profitability, but also a focus on environment issues. Ensuring competing AI priorities do not conflict may be challenging. | Medium |

| B22 My manager would always support me if I had a concern about safety. | Management commitment to safety | See B16, C09, C17. If the IA incorporates a dynamically updated risk model, concerns about safety could be rapidly assessed and addressed according to their risk importance (this is the long-term intent of Use Case 5 in HAIKU). | Low |

| B28 Senior management takes appropriate action on the safety issues that we raise. | Management commitment to safety | See B12. A further aspect is whether (and how quickly) the management supports getting the IA ‘fixed’ if its human teammates think it is not behaving safely. | Low |

| B09 People in this organization share safety related information. | Communication | The IA could become a source of safety information sharing, but this would still depend on the organization in terms of how the information would be shared and with whom. The IA could, however, share important day-to-day operational observations, e.g., from flight crew, who can pass on their insights to the next crew flying the same route, or from ground crew at an airport. Some airports already use a ‘community app’ for rapid sharing of such information. | Medium |

| B11 Information about safety related changes within this organization is clearly communicated to staff. | Communication | The IA could again be an outlet for information sharing, e.g., notices could be uploaded instantly, and the IA could ‘brief’ colleagues or inject new details as they become relevant during operations. The IA could also upload daily NOTAMs (Notices to Airmen) and safety briefings for controllers, and could distill the key safety points, or remind the team if they forget something from procedures/NOTAMs/briefing notes. | Medium |

| B17 There is good communication up and down this organization about safety. | Communication | An IA could reduce the reporting burden of operational staff if there could be an IA function to transmit details of concerns and safety observations directly to safety departments (though the ‘narrative’ should still be written by humans). An IA ‘network’ or hub could be useful for safety departments to assess safety issues rapidly and prepare messages to be cascaded down by senior/middle management. | Medium |

| B21 We learn lessons from safety-related incident or occurrence investigations. | Communication | The IA could provide useful and objective input for safety investigations, including inferences on causal and contributory factors. Use of Bayesian inference and other similar statistical approaches could avoid some typical human statistical biases, to help ensure the right lessons are learned and are considered proportionately to their level of risk. Alternatively, if information is biased or counterfactual evidence is not considered, the way the IA judges risk may be incorrect, leading to a lack of trust by operational people. It could also leave managers focusing on the wrong issues. | High |

| B24 I have good access to information regarding safety incidents or occurrences within the organization. | Communication | IAs or other AI-informed safety intelligence units could store a good deal of information on incidents and accidents, with live updates, possibly structured around risk models, and capture more contextual factors than are currently reported (this is the aim of HAIKU Use Case 5). Information can then be disseminated via an app or via the IA itself to various crews/staff. | High |

| B26 I know what the future plans are for the development of the services we provide. | Communication | The implementation and deployment of IAs into real operational systems needs careful and sensitive introduction, as there will be many concerns and practical questions. Failure to address such concerns may lead to very limited uptake of the IA. | Medium |

| C03 I read reports of incidents or occurrences that apply to our work. | Communication | The IA could store incidents, but this would require nothing so sophisticated as an IA. However, if the IA is used to provide concurrent (in situ) training, it could bring up past incidents related to the current operating conditions. | Low |

| C12 We are sufficiently involved in safety risk assessments. | Communication | Working with an IA might give the team a better appreciation of underlying risk assessments and their relevance to current operations. | Low |

| C15 We are sufficiently involved in changes to procedures. | Communication | The IA could build up evidence of procedures that regularly require workarounds or are no longer fit for purpose. The IA could highlight gaps between ‘work as designed’ and ‘work as done’. | Medium |

| C16 We openly discuss incidents or occurrences to learn from them. | Communication | [See C03] Unless this becomes an added function of the IA, it has low relevance. However, if a group learning review, or threat and error management is used in the cockpit following an event, the IA could provide a dispassionate and detailed account of the sequence of events and interactions. | Low |

| C18 Operational staff are sufficiently involved in system changes. | Communication | There is a risk that if the IA is a very good information collector, people at the sharp end might be gradually excluded from updates to system changes, as the system’s developers will consult data from the IA instead. | Medium |

| B13 My involvement in safety activities is sufficient. | Collaboration | As for C15 and C18. | Low |

| B15r People who raise safety issues are seen as troublemakers. | Collaboration | It needs to be seen whether an IA could itself be perceived as a troublemaker if it continually questions its human teammates’ decisions and actions. | Medium |

| B20 My team works well with the other teams within the organization. | Collaboration | The way different teams ‘do’ safety in the same job may vary (both inside companies, and between companies). The IA might need to be tailored to each team, or be able to vary/nuance its responses accordingly. If people move from one team or department to another, they may need to learn ‘the way the IA does things around here’. | Medium |

| B25r There are people who I do not want to work with because of their negative attitude to safety. | Collaboration | There could conceivably be a clash between an IA and a team member who, for example, was taking significant risks or continually overriding/ignoring safety advice, or an IA that was giving poor advice. If the IA is a continual learning system, its behavior may evolve over time, and diverge from optimum, even if it starts off safe when first implemented. | High |

| B27 Other people in this organization understand how my job contributes to safety. | Collaboration | The implementation of an IA in a particular work area (e.g., a cockpit, an air traffic ops room, an airport/airline operational control center) itself suggests safety criticality of human tasks in those areas. If an IA becomes an assimilator of all safety relevant information and activities, it may become clearer how different roles contribute to safety. | Medium |

| C05 Good communication exists between Operations and Engineering/Maintenance to ensure safety. | Collaboration | If engineering/maintenance ‘own’ the IA, i.e., are responsible for its maintenance and upgrades, then there will need to be good communication between these departments and ops/safety. A secondary aspect here is that IAs used in ops could transmit information to other departments concerning engineering and maintenance needs observed during operations. | Medium |

| C10 Maintenance always consults Operations about plans to maintain operational equipment. | Collaboration | It needs to be determined who can upgrade an IA’s system and performance characteristics, e.g., if a manual change is made to the IA to better account for an operational circumstance that has caused safety issues, who makes this change and who needs to be informed? | Medium |

| B18 Changes to the organization, systems and procedures are properly assessed for safety risk. | Risk handling | The IA could have a model of how things work and how safety is maintained, so any changes will need to be incorporated into that model, which may identify safety issues that may have been overlooked or played down. This is like current use of AIs for continuous validation and verification of operating systems, looking for bugs or omissions. Conversely, the IA may give advice that makes little sense to the human team or the organization, yet be unable to explain its rationale. Humans may find it difficult to adhere to such advice. | High |

| C07r We often have to deviate from procedures. | Risk handling | The IA will observe (and perhaps be party to) procedural deviation and can record associated reasons and frequencies (highlighting common ‘workarounds’). Such data could identify procedures that are no longer fit for purpose, or else inform retraining requirements if the procedures are in fact still fit for purpose. | High |

| C14r I often have to take risks that make me feel uncomfortable about safety. | Risk handling | The IA will likely be unaware of any discomfort on the human’s part (unless emotion detection is employed), but the human can probably use the IA’s advice to err on the side of caution. Conversely, a risk-taker, or someone who puts productivity first, may keep consulting an IA until they find a way around the rules (human ingenuity can be used for the wrong reasons). | High |

| C04 The procedures describe how I actually do my job. | Procedures and training | People know how to ‘fill in the gaps’ when procedures do not really fit the situation, and it is not clear how an IA will do this. [This was in part why the earlier expert systems movement failed to deliver, leading to the infamous ‘AI winter’]. Also, the IA could record ‘work as done’ and contrast it to ‘work as imagined’ (the procedures). This would, over time, create an evidence base on procedural adequacy (see also C07r). | High |

| C08 I receive sufficient safety-related refresher training. | Procedures and training | The IA could note human fluency with the procedures and how much support it has to give, thus gaining a picture of whether more refresher training might be beneficial. | Medium |

| C11 Adequate training is provided when new systems and procedures are introduced. | Procedures and training | As for C08. | Medium |

| C19 The procedures associated with my work are appropriate. | Procedures and training | When humans find themselves outside the procedures, e.g., in a flight upset situation in the cockpit, an IA could rapidly examine all sensor information and supply a course of action for the flight crew. | High |

| C20 I have sufficient training to understand the procedures associated with my work. | Procedures and training | As for C08 and C11. | Medium |
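
The entry for B21 above mentions Bayesian inference as a way of avoiding typical human statistical biases when judging risk. As a minimal sketch of that idea only, and not a description of any HAIKU use case, the Python below applies a Beta-Binomial update to a hypothetical escalation rate. The class name `BetaBelief`, the uniform prior, and the counts are all illustrative assumptions rather than data from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(alpha, beta) belief about the probability that an occurrence escalates.

    Hypothetical helper for illustration; not part of any real safety tool.
    """
    alpha: float
    beta: float

    def update(self, escalations: int, non_escalations: int) -> "BetaBelief":
        # Conjugate update: observed counts are added to the prior pseudo-counts.
        return BetaBelief(self.alpha + escalations, self.beta + non_escalations)

    @property
    def mean(self) -> float:
        # Posterior mean of the escalation probability.
        return self.alpha / (self.alpha + self.beta)


# Assumed uniform prior (no strong view before seeing data).
prior = BetaBelief(alpha=1.0, beta=1.0)

# Invented example counts: 50 similar occurrences, 2 of which escalated.
posterior = prior.update(escalations=2, non_escalations=48)

print(f"prior mean: {prior.mean:.3f}")
print(f"posterior mean: {posterior.mean:.3f}")
```

The posterior mean sits between the prior and the raw observed frequency, which is one way such an approach tempers over-reaction to a small number of vivid events. In practice the prior, and what counts as an ‘escalation’, would need to be agreed with operational and safety staff.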

| Safety Culture Concerns | Safety Culture Affordances |
|---|---|

| Humans may become less concerned with safety if the IA is seen as handling safety aspects. This is an extension of the ‘complacency’ issue with automation and may be expected to increase as the IA’s autonomy increases. | The IA could ‘speak up’ if it assesses a human course of action as unsafe. |

| Humans may perceive a double-bind; if they follow ‘bad’ IA advice or fail to follow ‘good’ advice, and there are adverse consequences, they might find themselves being prosecuted. This will lead to lack of trust in the IA. | The IA could be integrated into crew resource management practices, helping decision-making and post-event review in the cockpit or air traffic ops room. |

| If the IA reports on human error or human risk-taking or other ‘non-nominal behavior’ it could be considered a ‘snitch’ for management and may not be trusted. | The IA could serve as a living black box recorder, recording more decision-making rationales than is the case today. |

| If IA recordings are used by incident and accident investigators, just culture policies will need to address such usage both for ethical reasons and to the satisfaction of the human teams involved. Fatal accidents in which an IA was a part of the team are likely to raise new challenges for legal institutions. | If the IA can collect and analyze day-to-day safety occurrence information it may be seen as adding objective (dispassionate) evidence and a more balanced assessment of severity, as well as an unbiased evaluation of how close an event came to being an accident (e.g., via Bayesian analysis). |

| An IA that is human trained may adopt its human trainers’ level of risk tolerance, which may not always be optimal for safety. | The IA could significantly increase reporting rates, depending on how its reporting threshold is set, and could also record and track how often a safety-related issue is raised. |

| Introducing intelligent assistants may inexorably lead to fewer human staff. Although there are various ways to ‘sugar-coat’ this, e.g., current and predicted shortfalls in staffing across the aviation workforce, it may lead to resentment against IAs. This factor will likely be influenced by how society gets on with advanced AI and IAs. | The IA could serve as a safety encyclopedia, or oracle, able to give instant information on safety rules, risk assessments, hazards, etc. |

| If the IA queries humans too often, it may be perceived as policing them, or as a troublemaker. | The IA can upload all NOTAMs and briefings etc., so as to keep the human team current, or to advise them if they have missed something. |

| If the IA makes unsafe suggestions, trust will be eroded rapidly. | If the IA makes one notable ‘save’, its perceived utility and trustworthiness will increase. |

| The IA may have multiple priorities (e.g., safety, environment, efficiency/profit). This may lead to advice that humans find conflicted or confusing. | The IA could share important day-to-day operational observations, e.g., by flight crew, controllers, or ground crew, who can pass on their insights to the incoming crew. |

| Management may come to see the IA as a more manageable safety asset than people, one where they can either ‘turn up’ or ‘tone down’ the accent on safety. | The IA could reduce the reporting ‘burden’ of operational staff by transmitting details of human concerns and safety observations directly to safety departments. An IA ‘network’ or hub would allow safety departments to assess safety issues rapidly and prepare messages to be cascaded down by senior/middle management. |

| Operational managers may simply be reluctant to allow the introduction of IAs into the system, because of both safety and operational reservations. | The IA could provide objective input for safety investigations, including inferences on causal and contributory factors. Use of Bayesian inference and other similar statistical approaches could help avoid typical human statistical biases, ensuring the right lessons are learned and are considered proportionately to their level of risk. |

| If information is biased or counterfactual evidence is not considered, the way the IA judges risk may be incorrect, leading to a lack of trust by operational people. It could also have managers focusing on the wrong issues. | IAs could store information on incidents and associated (correlated) contextual factors, with live updates structured around risk models, and disseminate warnings of potential hazards on the day via an app or via the IA itself communicating with crews/staff. |

| There is a risk that, if the IA is a very good information collector, people at the sharp end are gradually excluded from updates to system changes, as the system’s developers will consult data from the IA instead. | The IA might serve as a bridge between the way operational people and safety analysts think about risks, via considering more contextual factors not normally encoded in risk assessments. |

| There could conceivably be a clash between an IA and a team member who, for example, was taking significant risks or continually overriding/ignoring safety advice, or, conversely, an IA that was giving bad advice. | The IA could build up evidence of procedures that regularly require workarounds or are no longer fit for purpose. The IA could highlight gaps between ‘work as designed’ and ‘work as done’. |

| IAs may need regular maintenance and fine-tuning, which may affect the perceived ‘stability’ of the IA by ops people, resulting in loss of trust or ‘rapport’. | IAs used in ops could transmit information to other departments concerning engineering and maintenance needs observed during operations. |

| The IA may give (good) advice that makes little sense to the human team or the organization, yet it cannot explain its rationale. Managers and operational staff may find it difficult to adhere to such advice. | The IA could have a model of how things work and how safety is maintained, so that any changes will need to be incorporated into the model, which may identify safety issues that have been overlooked or ‘played down’. This is like current use of AIs for continuous validation and verification of operating systems, looking for bugs or omissions. |

| A human risk-taker, or someone who puts productivity first, may consult (‘game’) an IA until they get around the rules. | The human can use the IA’s safety advice to err on the side of caution, if she or he feels pressured to cut safety corners either because of self, peer, or management pressure. |

| People know how to fill in the gaps when procedures don’t really fit the situation, and it is not clear how an IA will do this. The AI’s advice might not be so helpful unless it is human-supervisory trained. | When humans find themselves outside the procedures, e.g., in a flight upset situation in the cockpit, an IA could rapidly examine all sensor information and supply a course of action for the flight crew. |
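
Several affordances above rest on the IA building up evidence of procedures that regularly require workarounds. A minimal sketch of such a tally is given below, assuming the IA logs each deviation as a (procedure, reason) pair. The log entries, procedure identifiers, the function name `flag_procedures`, and the review threshold are hypothetical and are not drawn from the paper.

```python
from collections import Counter

# Hypothetical deviation log: (procedure identifier, stated reason).
deviation_log = [
    ("PROC-A", "checklist step not applicable"),
    ("PROC-A", "checklist step not applicable"),
    ("PROC-B", "time pressure"),
    ("PROC-A", "equipment mismatch"),
]


def flag_procedures(log, threshold):
    """Return procedures whose deviation count meets or exceeds the threshold."""
    counts = Counter(procedure for procedure, _reason in log)
    return {procedure: n for procedure, n in counts.items() if n >= threshold}


def reasons_for(log, procedure):
    """Count the stated reasons for deviating from one procedure."""
    return Counter(reason for proc, reason in log if proc == procedure)


# Assumed review threshold of three deviations.
flagged = flag_procedures(deviation_log, threshold=3)
print(flagged)
print(reasons_for(deviation_log, "PROC-A").most_common(1))
```

Whether a flagged procedure is no longer fit for purpose, or instead points to a retraining need, remains a human judgment; the counts only indicate where to look.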

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kirwan, B. The Impact of Artificial Intelligence on Future Aviation Safety Culture. Future Transp. 2024, 4, 349-379. https://doi.org/10.3390/futuretransp4020018
