Unmanned Agricultural Tractors in Private Mobile Networks

Abstract

1. Introduction

2. Requirements from Autonomy and Remote Control in Smart Farms

- Level 0—No Driving Automation

- Level 1—Driver Assistance

- Level 2—Partial Driving Automation (“hands off”)

- Level 3—Conditional Driving Automation (“eyes off”)

- Level 4—High Driving Automation (“mind off”)

- Level 5—Full Driving Automation

2.1. Requirements for Communications Networks

2.2. Requirements for Cyber and Network Security

3. Field Trialed System

3.1. Local Private Network

3.2. Remote Controlled Tractor

3.2.1. Remote Controlling Tunnel between the Tractor and the Remote Control Cabin

3.2.2. Filtering, Frame Aggregation, and Overhead Measurements

3.2.3. Remote View from the Tractor

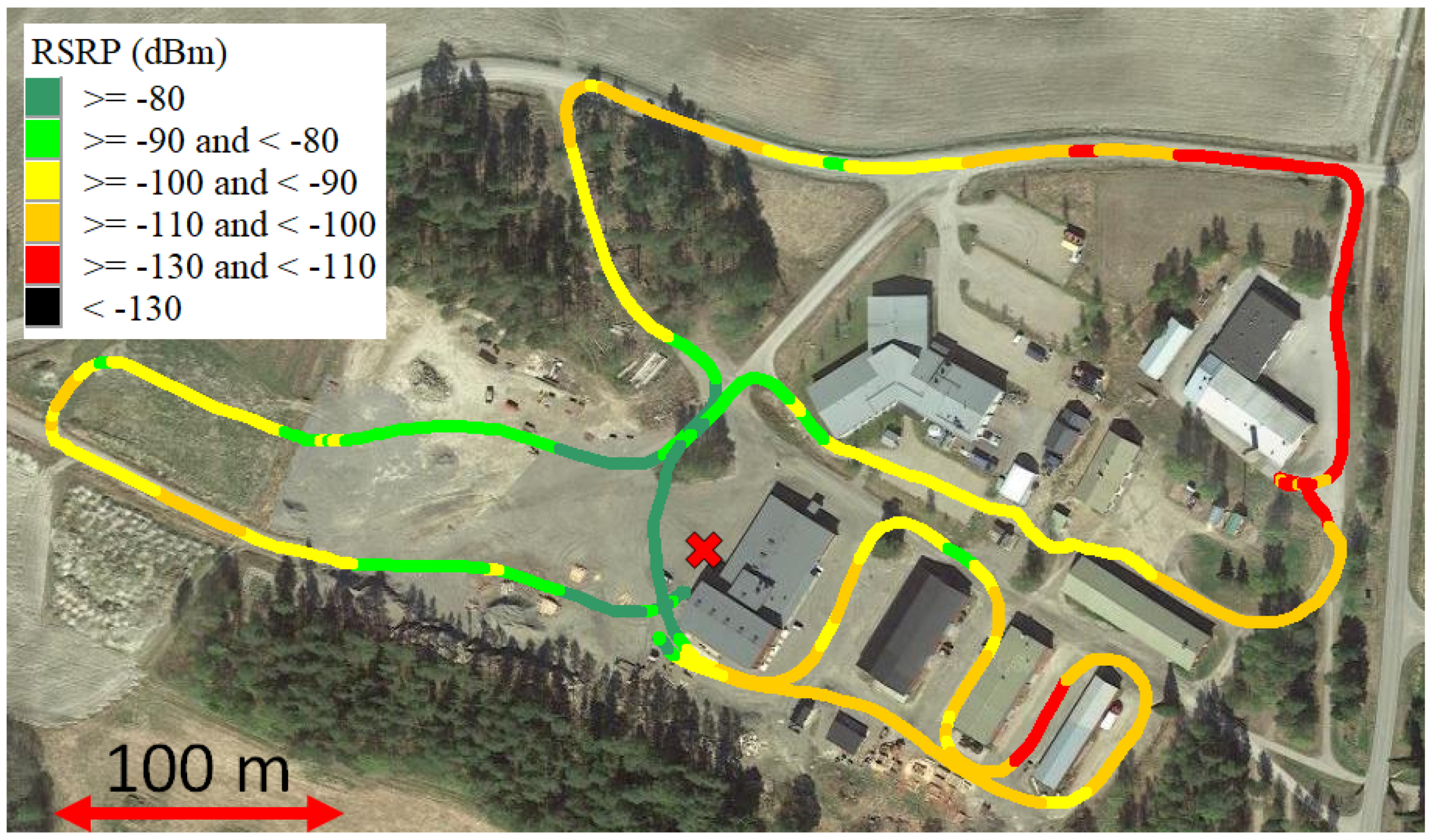

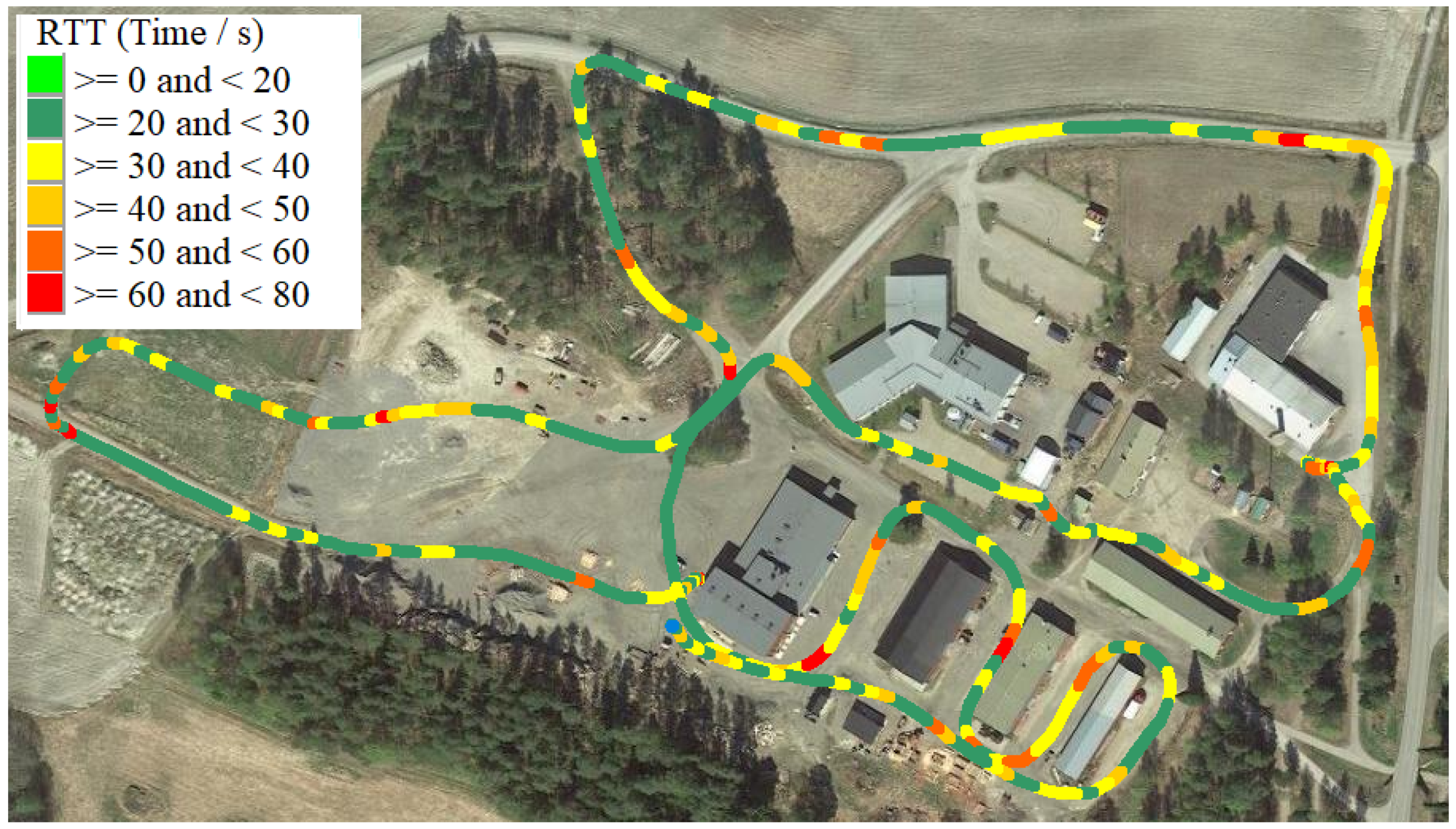

3.2.4. Network Quality Measurements

3.3. Cyber and Network Security

3.3.1. Access Control and Security Posture Management for the IoT

3.3.2. Resilience through a Satellite Link

4. Related Works

5. Conclusions and Future Research

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3GPP | Third Generation Partnership Project |
| CAN | Controller Area Network |
| DNS | Domain Name System |
| DDNS | Dynamic DNS |
| ECU | Electronic Control Unit |
| GEO | Geostationary |
| IP | Internet Protocol |
| IPsec | Internet Protocol Security |
| IoT | Internet of Things |
| LEO | Low Earth Orbit |
| LoRaWAN | Long Range Wide Area Network |
| MDPI | Multidisciplinary Digital Publishing Institute |
| NAT | Network Address Translation |
| OAuth | Open Authorization |
| QoS | Quality of Service |
| QR code | Quick Response code |
| RSRP | Reference Signal Received Power |
| RTT | Round Trip Time |
| SAE | Society of Automotive Engineers |
| SCTP | Stream Control Transmission Protocol |
| SF | Smart Farming |
| SIM | Subscriber Identity Module |
| SRTP | Secure Real-time Transport Protocol |
| SSH | Secure Shell |
| TCP | Transmission Control Protocol |
| TLS | Transport Layer Security |
| UDP | User Datagram Protocol |
| UE | User Equipment |
| V2X | Vehicle-to-Everything |
| VPN | Virtual Private Network |
| Wi-Fi | Wireless Fidelity |
| WPA | Wi-Fi Protected Access |

References

- Thomasson, J.; Baillie, C.; Antille, D.; Lobsey, C.; McCarthy, C. Autonomous Technologies in Agricultural Equipment: A Review of the State of the Art; ASABE Distinguished Lecture Series: Tractor Design; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2019; pp. 1–17. [Google Scholar]

- Zhang, X.; Geimer, M.; Noack, P.; Grandl, L. A semi-autonomous tractor in an intelligent master—Slave vehicle system. Intell. Serv. Robot. 2010, 3, 263–269. [Google Scholar] [CrossRef]

- Mehrabi, Z.; McDowell, M.; Ricciardi, V.; Levers, C.; Martinez, J.; Mehrabi, N.; Wittman, H.; Ramankutty, N.; Jarvis, A. The global divide in data-driven farming. Nat. Sustain. 2021, 4, 154–160. [Google Scholar] [CrossRef]

- Relf-Eckstein, J.; Ballantyne, A.T.; Phillips, P.W. Farming Reimagined: A case study of autonomous farm equipment and creating an innovation opportunity space for broadacre smart farming. Wagening. J. Life Sci. 2019, 90–91, 100307. [Google Scholar] [CrossRef]

- Ünal, I.; Topakci, M. Design of a Remote-Controlled and GPS-Guided Autonomous Robot for Precision Farming. Int. J. Adv. Robot. Syst. 2015, 12, 1. [Google Scholar] [CrossRef] [Green Version]

- Gonzalez-De-Santos, P.; Fernández, R.; Sepúlveda, D.; Navas, E.; Armada, M. Unmanned ground vehicles for smart farms. Agron.-Clim. Chang. Food Secur. 2020, 6, 73. [Google Scholar]

- Bonadies, S.; Lefcourt, A.; Gadsden, S.A. A survey of unmanned ground vehicles with applications to agricultural and environmental sensing. In Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping; International Society for Optics and Photonics: Washington, DC, USA, 2016; Volume 9866. [Google Scholar]

- Roldán, J.J.; del Cerro, J.; Garzón-Ramos, D.; Garcia-Aunon, P.; Garzón, M.; de León, J.; Barrientos, A. Robots in agriculture: State of art and practical experiences. Serv. Robot. 2018, 67–90. [Google Scholar] [CrossRef] [Green Version]

- Ni, J.; Hu, J.; Xiang, C. A review for design and dynamics control of unmanned ground vehicle. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2021, 235, 1084–1100. [Google Scholar] [CrossRef]

- International Organization for Standardization. Tractors and Machinery for Agriculture and Forestry—Serial Control and Communications Data Network—Part 1: General Standard for Mobile Data Communication; ISO 11783-1:2017 (E); International Organization for Standardization: Geneva, Switzerland, 2017. [Google Scholar]

- Standard SAE J1939-1; On-Highway Equipment Control and Communication Network. SAE International: Warrendale, PA, USA, 2021.

- Wang, H.; Noguchi, N. Autonomous maneuvers of a robotic tractor for farming. In Proceedings of the 2016 IEEE/SICE International Symposium on System Integration (SII), Sapporo, Japan, 13–15 December 2016; pp. 592–597. [Google Scholar] [CrossRef]

- Ditze, M.; Bernhardi-Grisson, R.; Kämper, G.; Altenbernd, P. Porting the Internet Protocol to the Controller Area Network. In Proceedings of the 2nd International Workshop on Real-Time LANs in the Internet Age (RTLIA 2003), Porto, Portugal, 2–4 July 2003. [Google Scholar]

- Lindgren, P.; Aittamaa, S.; Eriksson, J. IP over CAN, Transparent Vehicular to Infrastructure Access. In Proceedings of the 2008 5th IEEE Consumer Communications and Networking Conference, Las Vegas, NV, USA, 10–12 January 2008; pp. 758–759. [Google Scholar] [CrossRef] [Green Version]

- Bayilmis, C.; Erturk, I.; Ceken, C. Extending CAN segments with IEEE 802.11 WLAN. In Proceedings of the 3rd ACS/IEEE International Conference on Computer Systems and Applications, Cairo, Egypt, 6 January 2005. [Google Scholar] [CrossRef]

- Johanson, M.; Karlsson, L.; Risch, T. Relaying Controller Area Network Frames over Wireless Internetworks for Automotive Testing Applications. In Proceedings of the 2009 Fourth International Conference on Systems and Networks Communications, Porto, Portugal, 20–25 September 2009; pp. 1–5. [Google Scholar] [CrossRef]

- Reinhardt, D.; Güntner, M.; Kucera, M.; Waas, T.; Kühnhauser, W. Mapping CAN-to-ethernet Communication Channels within Virtualized Embedded Environments. In Proceedings of the 10th IEEE International Symposium on Industrial Embedded Systems (SIES), Siegen, Germany, 8–10 June 2015. [Google Scholar] [CrossRef]

- Socat—Multipurpose Relay. Available online: http://www.dest-unreach.org/socat/ (accessed on 24 November 2021).

- Tang, Y.; Dananjayan, S.; Hou, C.; Guo, Q.; Luo, S.; He, Y. A survey on the 5G network and its impact on agriculture: Challenges and opportunities. Comput. Electron. Agric. 2021, 180, 105895. [Google Scholar] [CrossRef]

- Wang, F. Technology Related to Agricultural Transformation and Development based on 5G Technology; Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2020; Volume 1574, p. 012015. [Google Scholar]

- Friha, O.; Ferrag, M.A.; Shu, L.; Maglaras, L.A.; Wang, X. Internet of Things for the Future of Smart Agriculture: A Comprehensive Survey of Emerging Technologies. IEEE CAA J. Autom. Sin. 2021, 8, 718–752. [Google Scholar] [CrossRef]

- Storck, C.R.; Duarte-Figueiredo, F. A survey of 5G technology evolution, standards, and infrastructure associated with vehicle-to-everything communications by internet of vehicles. IEEE Access 2020, 8, 117593–117614. [Google Scholar] [CrossRef]

- Chi, H.; Welch, S.; Vasserman, E.; Kalaimannan, E. A framework of cybersecurity approaches in precision agriculture. In Proceedings of the ICMLG2017 5th International Conference on Management Leadership and Governance, St. Petersburg, Russia, 16–17 March 2017; Academic Conference Publisher Int.: Reading, UK, 2017; pp. 90–95. [Google Scholar]

- Barreto, L.; Amaral, A. Smart farming: Cyber security challenges. In Proceedings of the 2018 International Conference on Intelligent Systems (IS), Funchal, Portugal, 25–27 September 2018; pp. 870–876. [Google Scholar]

- Ahmad, I.; Shahabuddin, S.; Kumar, T.; Okwuibe, J.; Gurtov, A.; Ylianttila, M. Security for 5G and beyond. IEEE Commun. Surv. Tutor. 2019, 21, 3682–3722. [Google Scholar] [CrossRef]

- Sontowski, S.; Gupta, M.; Chukkapalli, S.S.L.; Abdelsalam, M.; Mittal, S.; Joshi, A.; Sandhu, R. Cyber attacks on smart farming infrastructure. In Proceedings of the 2020 IEEE 6th International Conference on Collaboration and Internet Computing (CIC), Atlanta, GA, USA, 1–3 December 2020; pp. 135–143. [Google Scholar]

- Tange, K.; De Donno, M.; Fafoutis, X.; Dragoni, N. A systematic survey of industrial Internet of Things security: Requirements and fog computing opportunities. IEEE Commun. Surv. Tutor. 2020, 22, 2489–2520. [Google Scholar] [CrossRef]

- Lu, R.; Zhang, L.; Ni, J.; Fang, Y. 5G vehicle-to-everything services: Gearing up for security and privacy. Proc. IEEE 2019, 108, 373–389. [Google Scholar] [CrossRef]

- Airbus. Verde-Crop Imagery & Analytics for Precision Agriculture. Available online: https://oneatlas.airbus.com/service/verde (accessed on 28 November 2021).

- Heikkilä, M.; Koskela, P.; Suomalainen, J.; Lähetkangas, K.; Kippola, T.; Eteläaho, P.; Erkkilä, J.; Pouttu, A. Field Trial with Tactical Bubbles for Mission Critical Communications. Trans. Emerg. Telecommun. Technol. 2021, 32, e4385. [Google Scholar] [CrossRef]

- Standard J3016-202104; Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. SAE International: Warrendale, PA, USA, 2021.

- Basu, S.; Omotubora, A.; Beeson, M.; Fox, C. Legal framework for small autonomous agricultural robots. AI Soc. 2020, 35, 113–134. [Google Scholar] [CrossRef] [Green Version]

- International Organization for Standardization; ISO 11898-1:2015. Road Vehicles—Controller Area Network (CAN)—Part 1: Data Link Layer and Physical Signalling; International Organization for Standardization: Geneva, Switzerland, 2015. [Google Scholar]

- Vickers, J.N. Perception, Cognition, and Decision Training: The Quiet Eye in Action; Human Kinetics: Champaign, IL, USA, 2007. [Google Scholar]

- Lee, K.; Kim, J.; Park, Y.; Wang, H.; Hong, D. Latency of cellular-based V2X: Perspectives on TTI-proportional latency and TTI-independent latency. IEEE Access 2017, 5, 15800–15809. [Google Scholar] [CrossRef]

- 5GAA Automotive Association. C-V2X Use Cases and Service Level Requirements; Technical Report; Version 3; 5GAA: München, Germany, 2020; Volume I. [Google Scholar]

- 3GPP. Architecture Enhancements for 5G System (5GS) to Support Vehicle-to-Everything (V2X) Services; Technical Specification TS 23.287, Release 16; 3GPP: Valbonne, France, 2021. [Google Scholar]

- 3GPP. System Architecture for the 5G System (5GS); Technical Specification TS 23.501, Release 17; 3GPP: Valbonne, France, 2021. [Google Scholar]

- 3GPP. Security Architecture and Procedures for 5G System; Technical Specification, TS 33.501, Release 15; 3GPP: Valbonne, France, 2018. [Google Scholar]

- Valtra. Valtra N Series Tractors 135–201 HP. Available online: https://www.valtra.co.uk/products/nseries.html (accessed on 22 November 2021).

- Bittium. Tactical Wireless IP Network. Available online: https://www.bittium.com/tactical-communications/bittium-tactical-wireless-ip-network (accessed on 30 October 2021).

- Digita. LoRaWAN Technology. Available online: https://www.digita.fi/en/services/iot/lorawan-technology/#/ (accessed on 24 November 2021).

- LoRa Alliance. LoRaWAN 1.1 Specification. 2017. Available online: https://lora-alliance.org/resource_hub/lorawan-specification-v1-1/ (accessed on 30 October 2021).

- Wireless Soil Moisture Sensor for Agriculture. Available online: https://soilscout.com/applications/agriculture (accessed on 30 October 2021).

- Vehkaperä, M.; Hoppari, M.; Suomalainen, J.; Roivainen, J.; Rantala, S.J. Testbed for Local-Area Private Network with Satellite-Terrestrial Backhauling. In Proceedings of the International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Kuala Lumpur, Malaysia, 12–13 June 2021; pp. 1–6. [Google Scholar]

- 3GPP; 3GPP System Architecture Evolution (SAE). Security Architecture; Technical Specification TS 33.401, Release 16; 3GPP: Valbonne, France, 2020. [Google Scholar]

- Wi-Fi Alliance. Wi-Fi Protected Access—WPA 3, Specification, Version 3.0. 2020. Available online: https://www.wi-fi.org/download.php?file=/sites/default/files/private/WPA3_Specification_v3.0.pdf (accessed on 28 November 2021).

- Goodmill Systems. Goodmill Systems w24h-S Managed Multichannel Router. Data Sheet. Available online: https://goodmillsystems.com/application/files/2615/8860/4850/Goodmill_w24h-S_Datasheet.pdf (accessed on 28 November 2021).

- SocketCAN—Controller Area Network. Available online: https://www.kernel.org/doc/html/latest/networking/can.html (accessed on 24 November 2021).

- Hardt, D. The OAuth 2.0 Authorization Framework; RFC 6749; IETF: Fremont, CA, USA, 2012. [Google Scholar]

- Julku, J.; Suomalainen, J.; Markku, K. Delegated Device Attestation for IoT. In Proceedings of the 8th International Conference on Internet of Things: Systems, Management and Security (IoTSMS), Gandia, Spain, 6–9 December 2021. [Google Scholar]

- Wu, Z.; Shen, X.; Gao, M.; Zeng, Y. Design of Distributed Remote Data Monitoring System Based on CAN Bus. In Proceedings of the 2018 Eighth International Conference on Instrumentation & Measurement, Computer, Communication and Control (IMCCC), Harbin, China, 19–21 July 2018; pp. 1403–1406. [Google Scholar]

- Rohrer, R.A.; Pitla, S.K.; Luck, J.D. Tractor CAN bus interface tools and application development for real-time data analysis. Comput. Electron. Agric. 2019, 163, 104847. [Google Scholar] [CrossRef]

- Dhivya, M.; Devi, K.G.; Suguna, S.K. Design and Implementation of Steering Based Headlight Control System Using CAN Bus. In International Conference on Computer Networks and Inventive Communication Technologies; Springer: Berlin, Germany, 2019; pp. 373–383. [Google Scholar]

- Fugiglando, U.; Massaro, E.; Santi, P.; Milardo, S.; Abida, K.; Stahlmann, R.; Netter, F.; Ratti, C. Driving behavior analysis through CAN bus data in an uncontrolled environment. IEEE Trans. Intell. Transp. Syst. 2018, 20, 737–748. [Google Scholar] [CrossRef] [Green Version]

- Wang, H.; Niu, W.; Fu, W.; Ren, Y.; Hu, S.; Meng, Z. A Low-cost Tractor Navigation System with Changing Speed Adaptability. In Proceedings of the 2021 33rd Chinese Control and Decision Conference (CCDC), Kunming, China, 22–24 May 2021; pp. 96–102. [Google Scholar]

- Hu, S.; Fu, W.; Li, Y.; Cong, Y.; Shang, Y.; Meng, Z. Research on Automatic Steering Control System of Full Hydraulic Steering Tractor. In International Conference on Computer and Computing Technologies in Agriculture; Springer: Berlin, Germany, 2017; pp. 517–528. [Google Scholar]

- Isihibashi, R.; Tsubaki, T.; Okada, S.; Yamamoto, H.; Kuwahara, T.; Kavamura, K.; Wakao, K.; Moriyama, T.; Ospina, R.; Okamoto, H.; et al. Experiment of Integrated Technologies in Robotics, Network, and Computing for Smart Agriculture. IEICE Trans. Commun. 2021. [Google Scholar] [CrossRef]

- You, K.; Ding, L.; Zhou, C.; Dou, Q.; Wang, X.; Hu, B. 5G-based earthwork monitoring system for an unmanned bulldozer. Autom. Constr. 2021, 131, 103891. [Google Scholar] [CrossRef]

- Drenjanac, D.; Tomic, S.; Agüera, J.; Perez-Ruiz, M. Wi-fi and satellite-based location techniques for intelligent agricultural machinery controlled by a human operator. Sensors 2014, 14, 19767–19784. [Google Scholar] [CrossRef] [Green Version]

- Chukkapalli, S.S.L.; Mittal, S.; Gupta, M.; Abdelsalam, M.; Joshi, A.; Sandhu, R.; Joshi, K. Ontologies and artificial intelligence systems for the cooperative smart farming ecosystem. IEEE Access 2020, 8, 164045–164064. [Google Scholar] [CrossRef]

- Cathey, G.; Benson, J.; Gupta, M.; Sandhu, R. Edge Centric Secure Data Sharing with Digital Twins in Smart Ecosystems. arXiv 2021, arXiv:2110.04691. [Google Scholar]

- Raniwala, A.; Chiueh, T.C. Evaluation of a wireless enterprise backbone network architecture. In Proceedings of the 12th Annual IEEE Symposium on High Performance Interconnects, Stanford, CA, USA, 27 August 2004; pp. 98–104. [Google Scholar]

- Pawgasame, W.; Wipusitwarakun, K. Tactical wireless networks: A survey for issues and challenges. In Proceedings of the 2015 Asian Conference on Defence Technology (ACDT), Hua Hin, Thailand, 23–25 April 2015; pp. 97–102. [Google Scholar]

- Brewster, C.; Roussaki, I.; Kalatzis, N.; Doolin, K.; Ellis, K. IoT in agriculture: Designing a Europe-wide large-scale pilot. IEEE Commun. Mag. 2017, 55, 26–33. [Google Scholar] [CrossRef]

- Miles, B.; Bourennane, E.B.; Boucherkha, S.; Chikhi, S. A study of LoRaWAN protocol performance for IoT applications in smart agriculture. Comput. Commun. 2020, 164, 148–157. [Google Scholar] [CrossRef]

- Serizawa, K.; Mikami, M.; Moto, K.; Yoshino, H. Field trial activities on 5G NR V2V direct communication towards application to truck platooning. In Proceedings of the 2019 IEEE 90th Vehicular Technology Conference (VTC2019-Fall), Honolulu, HI, USA, 22–25 September 2019; pp. 1–5. [Google Scholar]

- Nikander, J.; Manninen, O.; Laajalahti, M. Requirements for cybersecurity in agricultural communication networks. Comput. Electron. Agric. 2020, 179, 105776. [Google Scholar] [CrossRef]

- Gupta, M.; Abdelsalam, M.; Khorsandroo, S.; Mittal, S. Security and privacy in smart farming: Challenges and opportunities. IEEE Access 2020, 8, 34564–34584. [Google Scholar] [CrossRef]

- Adkisson, M.; Kimmel, J.C.; Gupta, M.; Abdelsalam, M. Autoencoder-based Anomaly Detection in Smart Farming Ecosystem. arXiv 2021, arXiv:2111.00099. [Google Scholar]

- Sharma, V.; You, I.; Guizani, N. Security of 5G-V2X: Technologies, Standardization, and Research Directions. IEEE Netw. 2020, 34, 306–314. [Google Scholar] [CrossRef]

- Hussain, R.; Hussain, F.; Zeadally, S. Integration of VANET and 5G Security: A review of design and implementation issues. Future Gener. Comput. Syst. 2019, 101, 843–864. [Google Scholar] [CrossRef]

- Gupta, M.; Sandhu, R. Authorization framework for secure cloud assisted connected cars and vehicular internet of things. In Proceedings of the 23rd ACM on Symposium on Access Control Models and Technologies, Indianapolis, IN, USA, 13–15 June 2018; pp. 193–204. [Google Scholar]

- Stachowski, S.; Bielawski, R.; Weimerskirch, A. Cybersecurity Research Considerations for Heavy Vehicles; Report DOT HS 812 636; Technical Report; U.S. Department of Transportation: Washington, DC, USA, 2019.

- Bilbao-Arechabala, S.; Jorge-Hernandez, F. Security Architecture for Swarms of Autonomous Vehicles in Smart Farming. Appl. Sci. 2021, 11, 4341. [Google Scholar]

- Rahimi, H.; Zibaeenejad, A.; Rajabzadeh, P.; Safavi, A.A. On the security of the 5G-IoT architecture. In Proceedings of the International Conference on Smart Cities and Internet of Things, Mashhad, Iran, 26–27 September 2018; pp. 1–8. [Google Scholar]

- Suomalainen, J.; Julku, J.; Vehkaperä, M.; Posti, H. Securing Public Safety Communications on Commercial and Tactical 5G Networks: A Survey and Future Research Directions. IEEE Open J. Commun. Soc. 2021, 2, 1590–1615. [Google Scholar] [CrossRef]

- Gong, B.; Zhang, Y.; Wang, Y. A remote attestation mechanism for the sensing layer nodes of the Internet of Things. Future Gener. Comput. Syst. 2018, 78, 867–886. [Google Scholar] [CrossRef]

- Khodari, M.; Rawat, A.; Asplund, M.; Gurtov, A. Decentralized firmware attestation for in-vehicle networks. In Proceedings of the 5th on Cyber-Physical System Security Workshop, Auckland, New Zealand, 8 July 2019; pp. 47–56. [Google Scholar]

- Ali, T.; Nauman, M.; Amin, M.; Alam, M. Scalable, Privacy-Preserving Remote Attestation in and through Federated Identity Management Frameworks. In Proceedings of the 2010 International Conference on Information Science and Applications, Seoul, Korea, 21–23 April 2010; pp. 1–8. [Google Scholar] [CrossRef]

- Leicher, A.; Schmidt, A.U.; Shah, Y.; Cha, I. Trusted Computing enhanced OpenID. In Proceedings of the 2010 International Conference for Internet Technology and Secured Transactions, London, UK, 8–11 November 2010; pp. 1–8. [Google Scholar]

- Trusted Computing Group. Implicit Identity Based Device Attestation; Reference Version 1.0, Revision 0.93; Trusted Computing Group: Beaverton, OR, USA, 2018. [Google Scholar]

- Uitto, M.; Heikkinen, A. Exploiting and Evaluating Live 360° Low Latency Video Streaming Using CMAF. In Proceedings of the 2020 European Conference on Networks and Communications (EuCNC), Dubrovnik, Croatia, 15–18 June 2020; pp. 276–280. [Google Scholar]

- Uitto, M.; Heikkinen, A. Evaluation of Live Video Streaming Performance for Low Latency Use Cases in 5G. In Proceedings of the 2021 Joint European Conference on Networks and Communications & 6G Summit (EuCNC/6G Summit), Porto, Portugal, 8–11 June 2021; pp. 431–436. [Google Scholar]

- Erillisverkot. OneWeb and Erillisverkot Demonstrated Low Earth Orbit Satellite Connectivity to Finnish Government Agencies at Westcott Venture Park. Available online: https://www.erillisverkot.fi/en/oneweb-and-erillisverkot-demonstrated-low-earth-orbit-satellite-connectivity-to-finnish-government-agencies-at-westcott-venture-park/ (accessed on 24 November 2021).

| Services | Packet Delay Budget | Packet Error Rate |
|---|---|---|
| V2X messages, remote driving [37] | 5 ms | |
| Information sharing for automated driving between vehicles or between a vehicle and a roadside unit [38] | 100 ms | |
| Platooning informative exchange [38] | 20 ms | – |
| Sensor sharing [38] | 50–100 ms | |
| Video sharing [38] | 10–50 ms | |
| Cooperative collision avoidance [38] | 10 ms | |
| Notification of dangerous situations [36] | 10 ms | |
| Remote control of a tractor (15 km/h) | 34 ms | |
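To put the delay budgets above in physical terms, the following sketch computes how far a vehicle moves at constant speed while a control message is in transit (distance = speed × delay). It is plain arithmetic and is not a claim about how the 34 ms tractor budget was derived; the function names are illustrative.

```python
# Distance covered by a vehicle while a message is in flight.
# Illustrative arithmetic only; not the derivation of the 34 ms budget.


def kmh_to_ms(speed_kmh: float) -> float:
    """Convert a speed from km/h to m/s."""
    return speed_kmh * 1000.0 / 3600.0


def travel_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled at constant speed during a delay of delay_ms milliseconds."""
    return kmh_to_ms(speed_kmh) * delay_ms / 1000.0


if __name__ == "__main__":
    # Compare a few delay budgets from the table at the tractor speed of 15 km/h.
    for delay in (5, 10, 34, 100):
        d = travel_during_delay(15.0, delay)
        print(f"15 km/h, {delay:>3} ms -> {d * 100:.1f} cm")
```

At 15 km/h (about 4.2 m/s), the 34 ms budget corresponds to roughly 14 cm of travel, and a 100 ms delay to about 42 cm.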

| Security Objectives | Requirements and Potential Solutions |
|---|---|
| Communications security | Access-technology-specific confidentiality, authenticity, integrity, and replay protection solutions. 3GPP security and Wi-Fi security essentially cover only the wireless channels. The 3GPP specifications [39] extend protection to the backhaul and support application layer communication. |
| End-to-end security | Application layer solutions, including SSH, TLS, and SRTP, secure critical communications when the infrastructure cannot be completely trusted. In private networks where edge computing is used, for example, to accelerate computation or to provide interoperability, end-to-end security may not be feasible. In these cases, the security breakpoints at the edge must be trusted. |
| Network and subsystem authorizations | Practical access control requires easy ways to distribute credentials that authorize access to networked assets (services and data stored in the network). Fine-grained, service-specific access control mitigates potential insider and malware attacks. Firewalls, which enforce authorizations, are needed at network borders and in many hosts within the network. Network credentials, such as eSIMs, can be delivered, for example, through QR codes, and application credentials through delegated authorization, such as OAuth2. |
| Resilience | Solutions to assure availability under denial-of-service attacks or in congestion situations. Reactive security, access control, and traffic prioritization solutions, as well as sufficient and redundant capacity, such as satellite links, should be applied when possible. |
| Secure cooperation | Secure networked business models require trusted external partners. Access to partners’ external assets must be secure and integrity verified. External access to assets and functions in the smart farm should be restricted by time and capabilities to minimize insider threats. |
| Security posture of subsystems | Technical and procedural approaches to manage and verify that the different devices on the farm and in the tractors are secure, for example, that they enforce security policies and run trusted, up-to-date software. The network is monitored to detect security policy and integrity violations that indicate security breaches or malware. |
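The "Secure cooperation" objective asks that external access be restricted by time and capabilities. A minimal sketch of such a check follows, assuming a hypothetical grant record (`PartnerGrant`) and made-up capability strings; in a real deployment these claims would more likely travel inside delegated-authorization tokens such as OAuth2 access tokens, whose issuance and validation are not shown here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class PartnerGrant:
    """Hypothetical access grant for an external partner, e.g. a contractor."""
    partner_id: str
    capabilities: frozenset  # hypothetical strings such as "read:soil_moisture"
    not_before: datetime     # start of the validity window (inclusive)
    not_after: datetime      # end of the validity window (exclusive)


def is_allowed(grant: PartnerGrant, partner_id: str, capability: str, now: datetime) -> bool:
    """Allow only the named partner, only listed capabilities, only inside the window."""
    return (
        grant.partner_id == partner_id
        and capability in grant.capabilities
        and grant.not_before <= now < grant.not_after
    )


if __name__ == "__main__":
    start = datetime(2021, 6, 1, 8, 0, tzinfo=timezone.utc)
    grant = PartnerGrant("contractor-1", frozenset({"read:soil_moisture"}),
                         start, start + timedelta(hours=8))
    print(is_allowed(grant, "contractor-1", "read:soil_moisture", start + timedelta(hours=1)))
    print(is_allowed(grant, "contractor-1", "control:tractor", start + timedelta(hours=1)))
    print(is_allowed(grant, "contractor-1", "read:soil_moisture", start + timedelta(hours=9)))
```

Denying by default (every condition must hold) keeps a forgotten or expired grant from silently widening a partner's access.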

Technology Capabilities in Trialed Private Networks

| Characteristics | StandAlone LTE/Cow Shed Bubble | StandAlone LTE van + Field Bubbles | Satellite & Wi-Fi |
|---|---|---|---|
| Coverage (avg) | 1000 m | 500–1500 m | Global |
| Uplink capacity (avg/max) | 2 Mbps/8 Mbps | N/A/10 Mbps | 9.8 Mbps |
| Downlink capacity (avg/max) | 7 Mbps/108 Mbps | N/A/50 Mbps | 34.0 Mbps |
| Latency (median RTT) | 50–70 ms | 30–85 ms | 700 ms (geostationary) |
| Security | 3GPP [46] | 3GPP [46] | WPA [47] |
| Frequency | 2350 MHz | 2310 MHz | 26.5–40 GHz |
| Band | 40 | 40 | Ka |
| Bandwidth | 20 MHz | 20 MHz | N/A |
| Transmit power | 5 W | 1 W | N/A |
| Antenna height | 5 m | 10–21 m | 0.75 m |
| Rx antenna gain | Omni 2 dBi | Omni 2 dBi | N/A |
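Most of the 700 ms median RTT of the geostationary link can be attributed to propagation delay alone. The sketch below computes a lower bound under simplifying assumptions: a bent-pipe satellite, and terminal and gateway both directly below the satellite. Real slant ranges at high latitudes are longer, and processing and queuing add further delay.

```python
# Lower bound on round-trip time over a bent-pipe geostationary link.
# Assumes terminal and gateway sit directly below the satellite (shortest path).

SPEED_OF_LIGHT_KM_S = 299_792.458  # km/s, in vacuum
GEO_ALTITUDE_KM = 35_786.0         # geostationary altitude above the equator


def geo_min_rtt_ms(altitude_km: float = GEO_ALTITUDE_KM) -> float:
    """Request and response each go terminal -> satellite -> gateway (or back),
    so a round trip crosses the space segment four times: 4 x altitude."""
    return 4 * altitude_km / SPEED_OF_LIGHT_KM_S * 1000.0


if __name__ == "__main__":
    print(f"minimum GEO RTT ~ {geo_min_rtt_ms():.0f} ms")
```

The bound is about 477 ms, so roughly two thirds of the measured 700 ms is unavoidable propagation delay, which is why the satellite link suits backup connectivity better than latency-critical remote control.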

| CAN Messages | CAN Data (bytes) | SCTP (bytes) | TCP (bytes) | Total (bytes) | Extra Bytes | Overhead |
|---|---|---|---|---|---|---|
| 1 | 13 | 48 | 50 | 184 | 171 | 93% |
| 2 | 26 | 60 | 63 | 200 | 174 | 87% |
| 4 | 52 | 88 | 89 | 224 | 172 | 77% |
| 8 | 104 | 140 | 141 | 280 | 176 | 63% |
| 16 | 208 | 244 | 245 | 384 | 176 | 46% |
| 32 | 416 | 452 | 453 | 592 | 176 | 30% |
| 64 | 832 | 868 | 869 | 1008 | 176 | 17% |
| 96 | 1248 | 1284 | 1285 | 1424 | 176 | 12% |
| 100 | 1300 | 1336 | 1337 | 1472 | 172 | 12% |
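The table shows why frame aggregation pays off: the extra bytes stay roughly constant (171 to 176 bytes), so the overhead, computed as extra bytes divided by total bytes, shrinks as more CAN messages share one packet. The sketch below illustrates aggregation and reproduces the Extra Bytes and Overhead columns from the measured totals. The 13-byte record (4-byte identifier, 1-byte DLC, 8 data bytes) is an assumption chosen only because it matches the table's 13 bytes per message; it is not the authors' tunnel format, and `aggregate`/`split` are hypothetical helpers.

```python
import struct
from typing import Iterable, List, Tuple

# Hypothetical 13-byte record per classic CAN frame, network byte order,
# no alignment padding: 4-byte identifier, 1-byte DLC, 8 data bytes.
RECORD = struct.Struct("!IB8s")


def aggregate(frames: Iterable[Tuple[int, bytes]]) -> bytes:
    """Concatenate CAN frames into one payload so per-packet headers are paid once."""
    out = bytearray()
    for can_id, data in frames:
        if len(data) > 8:
            raise ValueError("classic CAN carries at most 8 data bytes")
        out += RECORD.pack(can_id, len(data), data)  # '8s' zero-pads short data
    return bytes(out)


def split(payload: bytes) -> List[Tuple[int, bytes]]:
    """Inverse of aggregate(): recover (identifier, data) pairs using the DLC."""
    return [(cid, raw[:dlc]) for cid, dlc, raw in RECORD.iter_unpack(payload)]


def overhead(can_bytes: int, total_bytes: int) -> float:
    """Share of transmitted bytes that are not CAN data, as in the table."""
    return (total_bytes - can_bytes) / total_bytes


# (CAN messages, total bytes) pairs taken from the table above.
MEASURED = [(1, 184), (2, 200), (4, 224), (8, 280), (16, 384),
            (32, 592), (64, 1008), (96, 1424), (100, 1472)]

if __name__ == "__main__":
    frames = [(0x18FEF100 + i, bytes([i] * 8)) for i in range(4)]  # made-up IDs
    assert split(aggregate(frames)) == frames
    for n, total in MEASURED:
        can = n * RECORD.size
        print(f"{n:>3} msgs: {total - can} extra bytes, {overhead(can, total):.0%} overhead")
```

The printed percentages match the Overhead column (93% for one message down to 12% for 96 and 100 messages); the trade-off is that waiting to fill a packet adds queuing delay, which counts against the latency budget.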

| Device | Name |
|---|---|
| Camera | AXIS P3935-LR |
| Camera | Hikvision DS-2CD2326G2-ISU/SL |
| Camera | Dahua IPC-HDW4231EM-ASE |
| Camera | Dahua IPC-B1B20P |
| Switch | Tenda TEF1105P PoE-switch |
| Router | Teltonika RUT955 |
| Router | Goodmill w24h-S |
| PC | HP ProDesk 600 G3 DM Mini PC, Core i5-7500T 2.7 GHz, 8 GB RAM, 256 GB SSD (SATA), Windows 10 Pro |
| Monitor | Lenovo C24-25 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Heikkilä, M.; Suomalainen, J.; Saukko, O.; Kippola, T.; Lähetkangas, K.; Koskela, P.; Kalliovaara, J.; Haapala, H.; Pirttiniemi, J.; Yastrebova, A.; et al. Unmanned Agricultural Tractors in Private Mobile Networks. Network 2022, 2, 1-20. https://doi.org/10.3390/network2010001


Heikkilä, M., Suomalainen, J., Saukko, O., Kippola, T., Lähetkangas, K., Koskela, P., Kalliovaara, J., Haapala, H., Pirttiniemi, J., Yastrebova, A., & Posti, H. (2022). Unmanned Agricultural Tractors in Private Mobile Networks. Network, 2(1), 1-20. https://doi.org/10.3390/network2010001