Approximating Solutions of Nonlinear Equations Using an Extended Traub Method

Abstract

1. Introduction

2. Convergence

- (H1)

- solves Equation (1) and is simple.

- (H2)

- ∃ a minimal positive solution of the following equation: where is some nondecreasing and continuous function such that the following is the case:

- (H3)

- ∃ functions continuous and nondecreasing such that and holds for all Define functions by the following: In particular, if define the following: and

- (H4)

- Equations have minimal solutions respectively. Define the following parameter: and

- (H5)

3. Numerical Experiments

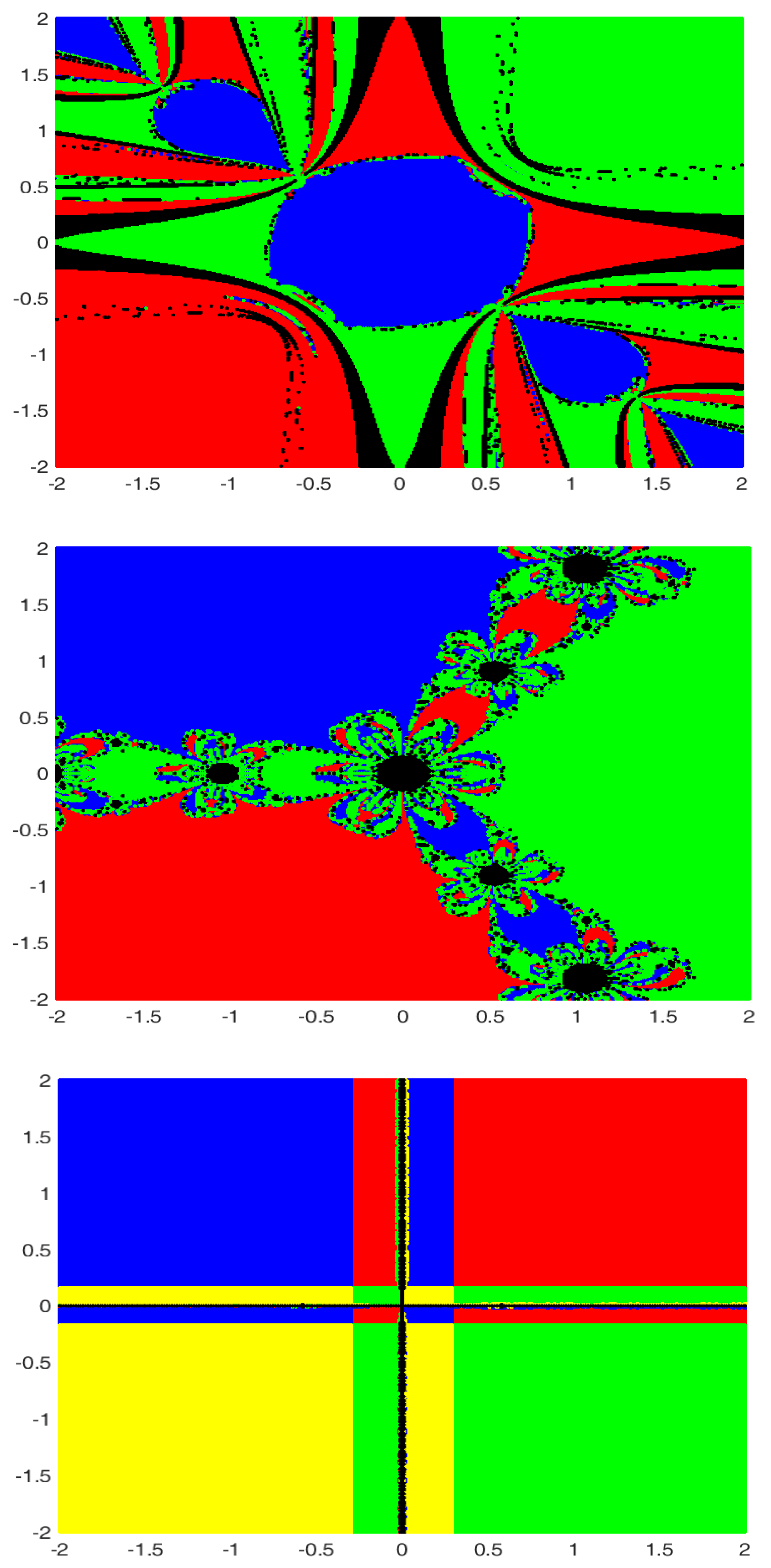

4. Basins of Attraction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables, Volume 30 of Classics in Applied Mathematics; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 2000.

- Argyros, I.K. Unified Convergence Criteria for Iterative Banach Space Valued Methods with Applications. Mathematics 2021, 9, 1942.

- Argyros, I.K. The Theory and Applications of Iteration Methods, 2nd ed.; Engineering Series; CRC Press: Boca Raton, FL, USA; Taylor and Francis Group: Abingdon, UK, 2022.

- Ostrowski, A.M. Solution of Equations and Systems of Equations, 2nd ed.; Pure and Applied Mathematics; Academic Press: New York, NY, USA; London, UK, 1966; Volume 9.

- Amat, S.; Busquier, S.; Bermudez, C.; Plaza, S. On two families of high order Newton type methods. Appl. Math. Lett. 2012, 25, 2209–2222.

- Magreñán, A.A.; Gutiérrez, J.M. Real dynamics for damped Newton’s method applied to cubic polynomials. J. Comput. Appl. Math. 2015, 275, 527–538.

- Behl, R.; Maroju, P.; Martinez, E.; Singh, S. A study of the local convergence of a fifth order iterative method. Indian J. Pure Appl. Math. 2020, 51, 439–455.

- Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint methods for solving nonlinear equations: A survey. Appl. Math. Comput. 2014, 226, 635–660.

- Traub, J.F. Iterative Methods for the Solution of Equations; Prentice Hall: Hoboken, NJ, USA, 1964.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

George, S.; Argyros, I.K.; Argyros, C.I.; Senapati, K. Approximating Solutions of Nonlinear Equations Using an Extended Traub Method. Foundations 2022, 2, 617-623. https://doi.org/10.3390/foundations2030042