Exploring 3D Object Detection for Autonomous Factory Driving: Advanced Research on Handling Limited Annotations with Ground Truth Sampling Augmentation

Abstract

1. Introduction

2. Related Work

2.1. 3D Object Detection with Infrastructural LiDAR Sensors

2.2. Data Augmentation

3. Methods

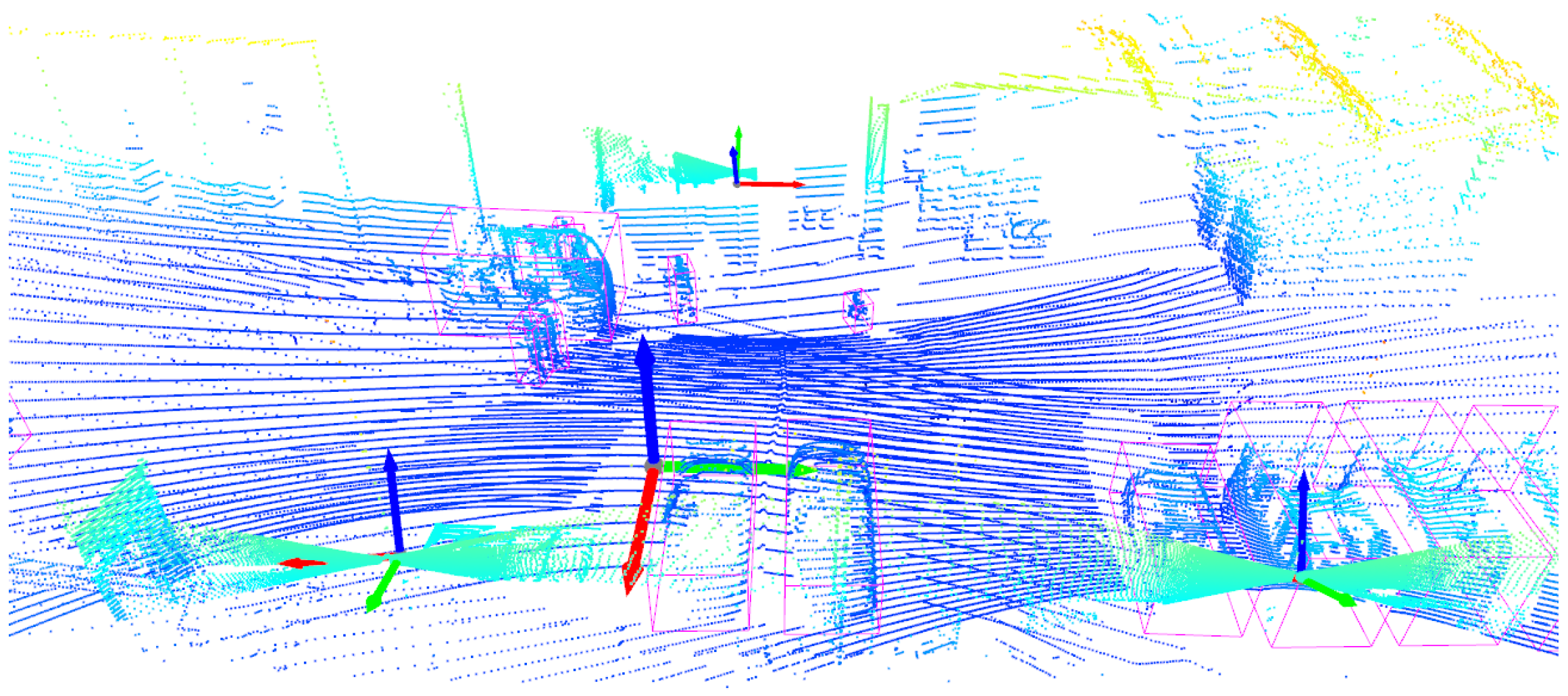

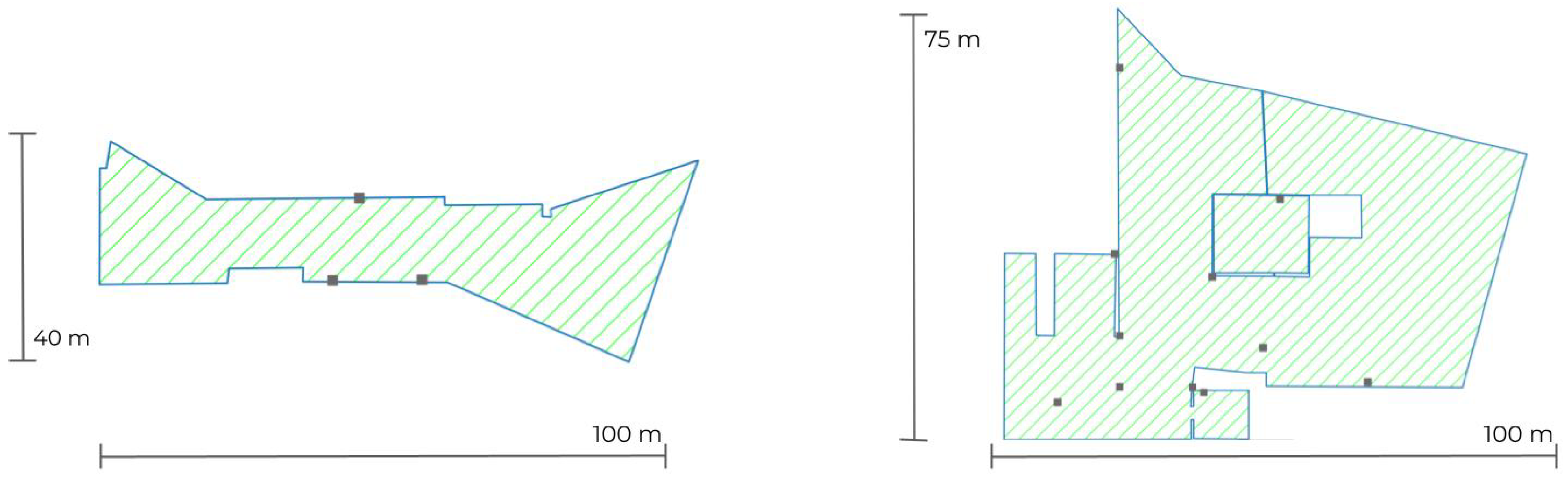

3.1. Sensor Setup & Data

3.2. 3D Object Detectors

3.3. Ground Truth Sampling

3.4. Evaluation Metrics

4. Experiments

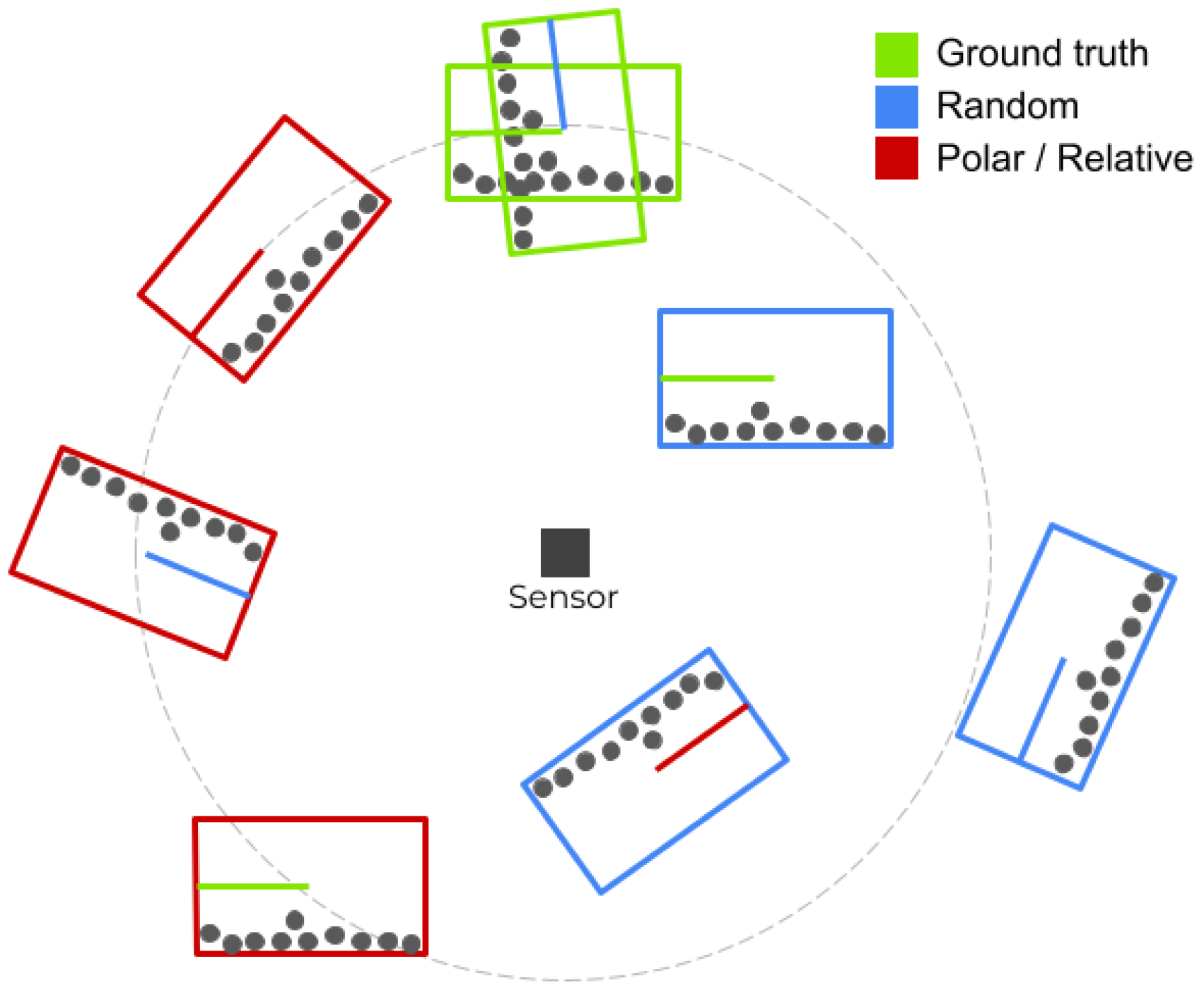

4.1. Ground Truth Sampling Methods

4.2. Reduction of Dataset Size

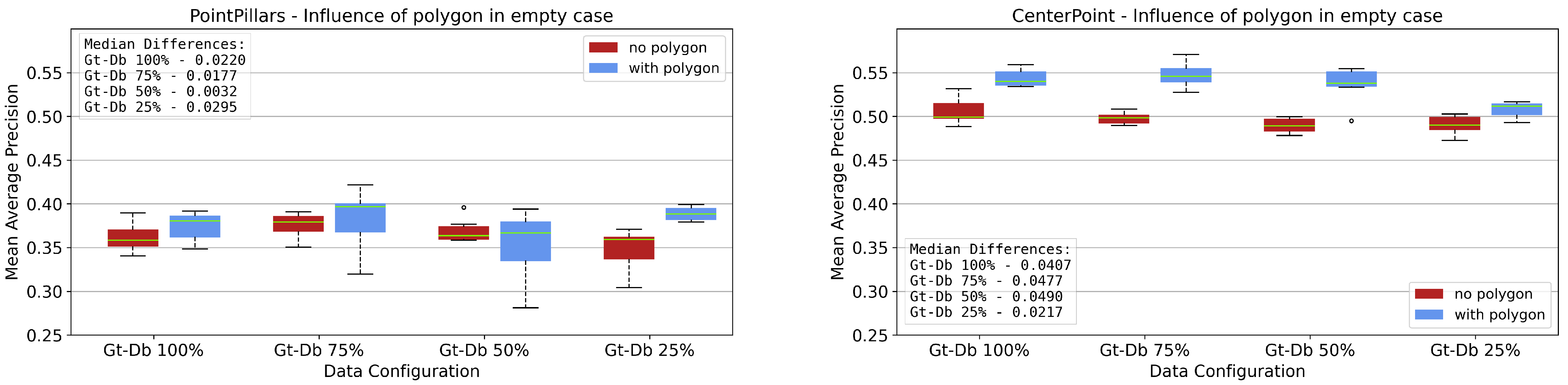

4.3. Empty Case Experiments

5. Conclusions & Further Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


mAP of Median in % ↑. Rows: position. Columns: orientation, grouped by detector.

| Position | PointPillars (62.82), Ground Truth | PointPillars (62.82), Random | PointPillars (62.82), Relative | CenterPoint (68.58), Ground Truth | CenterPoint (68.58), Random | CenterPoint (68.58), Relative |
|---|---|---|---|---|---|---|
| Ground truth | 63.31/63.54 | 63.92/64.26 | -/- | 71.78/70.71 | 72.04/72.01 | -/- |
| Random | 63.92/66.61 | 63.22/65.49 | 64.35/65.70 | 72.14/72.95 | 72.51/72.96 | 72.46/72.64 |
| Polar | 64.16/65.16 | 63.84/64.81 | 63.63/65.72 | 72.32/73.50 | 72.27/72.02 | 72.16/71.58 |

mAP of Median in % ↑ by share of training data used.

| Training Data | 100% | 75% | 50% | 25% |
|---|---|---|---|---|
| KITTI + GT Sampling | 51.90 | 51.08 | 47.35 | 45.25 |
| KITTI − GT Sampling | 48.18 | 46.95 | 42.45 | 34.48 |
| Difference | 3.73 | 4.13 | 4.90 | 10.78 |
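The Difference row in the table above can be recomputed from the two rows before it. The following is a minimal Python sketch, not part of the original evaluation; all variable and function names are illustrative assumptions. It works from the rounded values printed in the table, so a recomputed gap can deviate from the reported one by 0.01 (for example 3.72 versus 3.73 at 100%), presumably because the reported differences were derived from unrounded values.

```python
# Recompute the "Difference" row of the table from its rounded entries.
# All names are illustrative; the numbers are copied from the table.
fractions = ["100%", "75%", "50%", "25%"]
with_gt = [51.90, 51.08, 47.35, 45.25]     # row "KITTI + GT Sampling"
without_gt = [48.18, 46.95, 42.45, 34.48]  # row "KITTI - GT Sampling"
reported = [3.73, 4.13, 4.90, 10.78]       # row "Difference" as printed


def gaps(a, b):
    """Return the element-wise difference a - b, rounded to two decimals."""
    return [round(x - y, 2) for x, y in zip(a, b)]


recomputed = gaps(with_gt, without_gt)

# Print recomputed and reported gaps side by side for each data share.
for frac, new, old in zip(fractions, recomputed, reported):
    print(f"{frac:>4}: recomputed {new:.2f}, reported {old:.2f}")
```

Read this way, the gap between the two rows widens as the training share shrinks, from roughly 3.7 points at 100% to roughly 10.8 points at 25%.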

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Reuse, M.; Amende, K.; Simon, M.; Sick, B. Exploring 3D Object Detection for Autonomous Factory Driving: Advanced Research on Handling Limited Annotations with Ground Truth Sampling Augmentation. Comput. Sci. Math. Forum 2024, 9, 5. https://doi.org/10.3390/cmsf2024009005
