Computational Imaging and Sensing

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Sensing and Imaging".

Editors

Interests: imaging; single-pixel imaging; single-photon imaging; ultrafast imaging; quantum imaging


Interests: ultrafast imaging; photoacoustic imaging; laser beam/pulse shaping


Topical Collection Information

Dear Colleagues,

Computational imaging refers to techniques in which optical capture methods and computational algorithms are jointly designed within the imaging system, with the aim of producing images that carry more information. A distinguishing feature of computational imaging is that the captured data may not look like an image in the conventional sense; instead, they are transformed to better suit the algorithm layer, where the essential information is computed. Computational imaging therefore senses the required information about targets and/or scenes, blurring the boundary between imaging and sensing.

Benefiting from the ever-developing processor industry, computational imaging and sensing have begun to play an important role in imaging techniques, with applications in smartphone photography, 3D sensing and reconstruction, biological and medical imaging, synthetic aperture radar, remote sensing, and beyond. Much recent research has advanced the imaging and sensing performance of these applications.

This topical collection focuses on computational imaging approaches that obtain high-quality images and solve problems that cannot be solved by optical capture or post-processing alone, in order to improve the image quality, imaging speed, and functionality of physical or optical imaging and sensing devices. Its purpose is to broadly engage the optical imaging, image processing, and signal sensing communities, providing a forum for researchers and engineers in this rapidly developing field to share novel and original research on computational imaging and sensing. Survey papers on relevant topics are also welcome. Topics of interest include, but are not limited to:

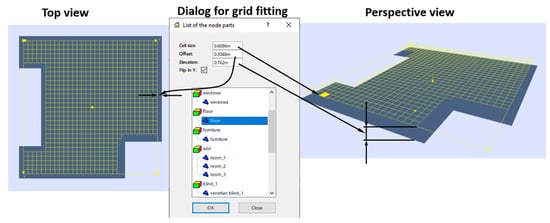

- Computational photography for 3D imaging;

- Depth estimation and 3D sensing;

- Biological imaging (FLIM/FPM/SIM)

- Medical imaging (CT/MRI/PET image reconstruction);

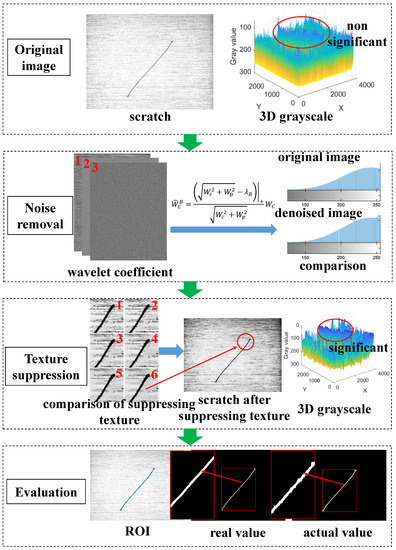

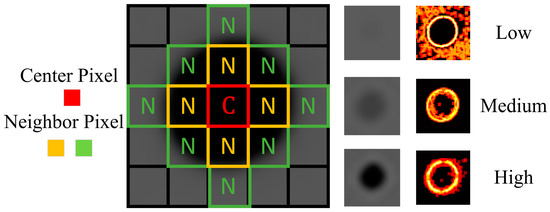

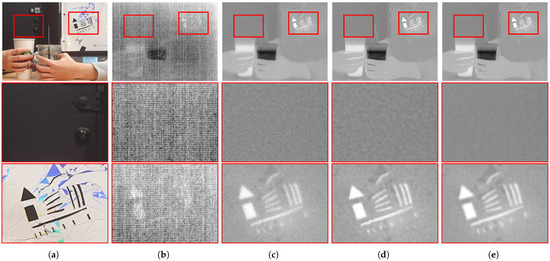

- Image restoration and denoising;

- Image registration and super-resolution imaging;

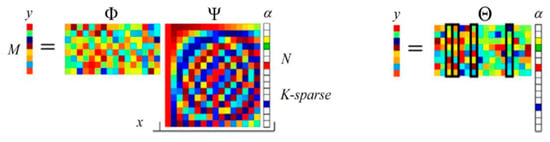

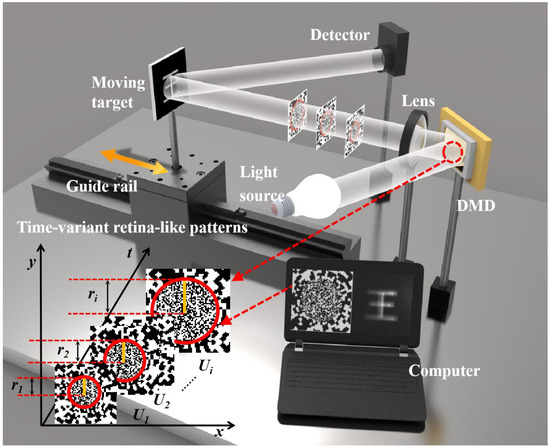

- Ghost imaging and single-pixel imaging;

- Photon counting and single-photon imaging;

- High-speed imaging systems and bandwidth reduction;

- Computational sensing for advanced driver assistance systems (ADAS);

- Synthetic aperture radar (SAR) imaging;

- Remote sensing;

- Ultrasound imaging;

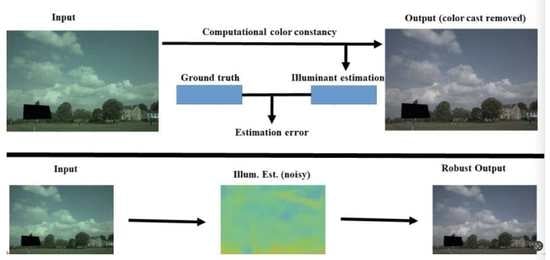

- Computational sensing for advanced image signal processor (ISP);

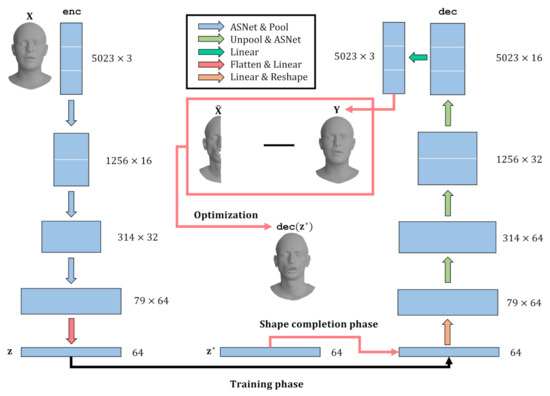

- Deep learning for image reconstruction;

- Remote sensing and UAV image processing;

- Under-water imaging and dehazing.

Prof. Dr. Ming-Jie Sun

Prof. Dr. Jinyang Liang

Collection Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once registered, authors can proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal as soon as they are accepted and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except as conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information on manuscript submission is available on the Instructions for Authors page. Sensors is an international, peer-reviewed, open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.