Development and Initial Validation of the in-Session Patient Affective Reactions Questionnaire (SPARQ) and the Rift In-Session Questionnaire (RISQ)

Abstract

1. Introduction

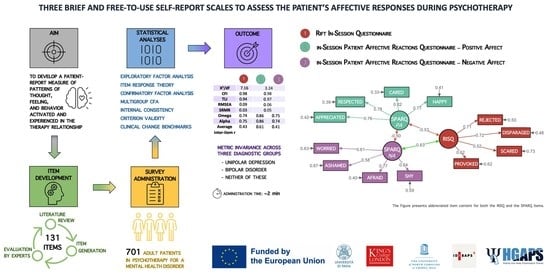

Aim

2. Materials and Methods

2.1. Procedures and Sample Characteristics

2.2. Item Generation

2.3. Additional Measures

2.4. Statistical Analyses

3. Results

3.1. Sample Characteristics

3.2. Preliminary Analyses

3.3. Item Pool Reduction—Iterative Exploratory Factor Analysis

3.4. Item Response Theory

3.5. Confirmatory Factor Analysis

3.6. Invariance Testing with Multigroup CFA

3.7. Internal Consistency and Score Precision

3.8. Criterion Validity

3.9. Sensitivity Analyses

4. Discussion

Strengths, Limitations, and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. in-Session Patient Affective Reactions Questionnaire (SPARQ)

| Item nr. | Item | Not at All | A Little | Somewhat | A Lot | Very Much |
|---|---|---|---|---|---|---|
| 1 | I felt happy to see my therapist. | 0 | 1 | 2 | 3 | 4 |
| 2 | I felt ashamed with my therapist about my fantasy, desires, mindset, behavior, or symptoms. | 0 | 1 | 2 | 3 | 4 |
| 3 | I felt worried my therapist couldn’t help me. | 0 | 1 | 2 | 3 | 4 |
| 4 | I felt shy, like I wanted to hide from my therapist or end the session early. | 0 | 1 | 2 | 3 | 4 |
| 5 | I felt afraid to speak my mind, for fear of being judged, criticized, disliked by my therapist. | 0 | 1 | 2 | 3 | 4 |
| 6 | I felt my therapist cared about me. | 0 | 1 | 2 | 3 | 4 |
| 7 | I felt respected by my therapist. | 0 | 1 | 2 | 3 | 4 |
| 8 | I felt appreciated by my therapist. | 0 | 1 | 2 | 3 | 4 |

Appendix A.2. Rift In-Session Questionnaire (RISQ)

| Item nr. | Item | | |
|---|---|---|---|
| 1 | I felt provoked or attacked by my therapist. | No | Yes |
| 2 | I felt scared, uneasy, like my therapist might harm me. | No | Yes |
| 3 | I felt rejected by my therapist. | No | Yes |
| 4 | I felt disparaged or belittled by my therapist. | No | Yes |
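
The two appendix forms can be scored with a few lines of code. The sketch below is illustrative only and rests on assumptions: subscale scores are simple sums of item responses, RISQ answers are coded No = 0 and Yes = 1, and the item-to-subscale assignment follows the factor groupings in the item parameter table (items 1, 6, 7, 8 for Positive Affect; items 2, 3, 4, 5 for Negative Affect). These assumptions agree with the reported potential ranges (0 to 16 per SPARQ subscale, 0 to 4 for the RISQ), but the function and constant names are hypothetical and not part of the published instruments.

```python
from typing import Dict, Mapping

# Assumed item-to-subscale mapping, read off the factor groupings
# in the item parameter table (not an official scoring key).
SPARQ_POSITIVE_ITEMS = (1, 6, 7, 8)
SPARQ_NEGATIVE_ITEMS = (2, 3, 4, 5)
RISQ_ITEMS = (1, 2, 3, 4)


def score_sparq(responses: Mapping[int, int]) -> Dict[str, int]:
    """Sum the 0-4 ratings into the two SPARQ subscale scores (0-16 each)."""
    for item in SPARQ_POSITIVE_ITEMS + SPARQ_NEGATIVE_ITEMS:
        if item not in responses:
            raise ValueError(f"missing SPARQ item {item}")
        if responses[item] not in (0, 1, 2, 3, 4):
            raise ValueError(f"SPARQ item {item} must be rated 0-4")
    return {
        "positive_affect": sum(responses[i] for i in SPARQ_POSITIVE_ITEMS),
        "negative_affect": sum(responses[i] for i in SPARQ_NEGATIVE_ITEMS),
    }


def score_risq(responses: Mapping[int, bool]) -> int:
    """Count the 'Yes' answers (assumed coding: Yes = 1, No = 0), range 0-4."""
    for item in RISQ_ITEMS:
        if item not in responses:
            raise ValueError(f"missing RISQ item {item}")
    return sum(1 for i in RISQ_ITEMS if responses[i])


if __name__ == "__main__":
    sparq = {1: 4, 2: 0, 3: 1, 4: 0, 5: 2, 6: 3, 7: 4, 8: 3}
    risq = {1: False, 2: True, 3: False, 4: True}
    print(score_sparq(sparq))
    print(score_risq(risq))
```

Keeping the item lists as named constants makes the assumed scoring key easy to audit and to change if the authors' official key differs.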

| Demographics | % (n) |
|---|---|
| Biological sex | |
| Male | 18% (126) |
| Female | 80% (564) |
| I prefer not to say | 2% (11) |
| Age (years) | |
| 18–29 | 40% (282) |
| 30–39 | 19% (131) |
| 40–49 | 15% (104) |
| 50–59 | 15% (105) |
| ≥60 | 11% (79) |
| Clinical Characteristics | |
| Average number of diagnoses, M (SD) | 2.55 (1.53) |
| Any anxiety disorder | 75% (529) |
| Any (unipolar) depressive disorder | 54% (378) |
| Any bipolar or related disorder | 17% (117) |
| Any personality disorder | 13% (93) |
| Any trauma- and stressor-related disorders | 30% (209) |
| Treatment Characteristics | |
| In psychotherapy from | |
| 0 to 3 months | 18% (124) |
| 4 to 6 months | 10% (72) |
| 7 to 12 months | 12% (86) |
| 13 to 24 months | 12% (84) |
| >24 months | 48% (335) |
| Session frequency | |
| ≤1 per month | 24% (171) |
| 2 to 3 per month | 35% (244) |
| 1 per week | 37% (256) |
| ≥2 per week | 4% (30) |
| Therapist’s biological sex: Female | 77% (539) |
| Patient–Therapist biological sex match: Same-sex | 74% (521) |

| Factor | Item Content | α | β1 | β2 | β3 | β4 |
|---|---|---|---|---|---|---|
| Factor 1: RISQ | I felt disparaged or belittled by my therapist | 3.05 | 1.46 | | | |
| | I felt rejected by my therapist | 4.73 | 1.28 | | | |
| | I felt provoked or attacked by my therapist | 2.31 | 1.84 | | | |
| | I felt scared, uneasy, like my therapist might harm me | 2.23 | 2.06 | | | |
| Factor 2: SPARQ Positive Affect | I felt respected by my therapist | 2.60 | −2.07 | −1.32 | −0.73 | 0.19 |
| | I felt my therapist cared about me | 2.98 | −1.95 | −1.04 | −0.40 | 0.51 |
| | I felt happy to see my therapist | 2.47 | −1.83 | −0.91 | −0.25 | 0.61 |
| | I felt appreciated by my therapist | 2.44 | −1.29 | −0.57 | 0.22 | 1.14 |
| Factor 3: SPARQ Negative Affect | I felt worried my therapist couldn’t help me | 1.42 | −0.51 | 0.68 | 1.54 | 2.32 |
| | I felt afraid to speak my mind, for fear of being judged, criticized, disliked by my therapist | 2.67 | 0.34 | 1.26 | 1.77 | 2.32 |
| | I felt ashamed with my therapist about my fantasy, desires, mindset, behavior, or symptoms | 1.73 | 0.37 | 1.32 | 2.12 | 2.95 |
| | I felt shy, like I wanted to hide from my therapist or end the session early | 1.96 | 0.54 | 1.48 | 2.09 | 2.79 |
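
To make the item parameters above more concrete, the following sketch turns one row of the table into response-category probabilities. It assumes the standard logistic graded response model, with α as the item discrimination and β1 to β4 as ordered category thresholds on the latent trait θ, and no additional scaling constant. Whether the reported values use exactly this parameterization is an assumption, and the function name is hypothetical. For the dichotomous RISQ items the same formula reduces to a two-parameter logistic model with a single threshold.

```python
import math
from typing import List, Sequence


def grm_category_probabilities(
    theta: float, alpha: float, betas: Sequence[float]
) -> List[float]:
    """Category probabilities under a logistic graded response model.

    P(X >= k | theta) = 1 / (1 + exp(-alpha * (theta - beta_k))),
    and P(X = k) is the difference between adjacent cumulative curves.
    Assumes betas are sorted in increasing order and alpha > 0.
    """
    cumulative = [1.0]  # P(X >= lowest category) is always 1
    for beta in betas:
        cumulative.append(1.0 / (1.0 + math.exp(-alpha * (theta - beta))))
    cumulative.append(0.0)  # nothing lies above the highest category
    return [cumulative[k] - cumulative[k + 1] for k in range(len(betas) + 1)]


if __name__ == "__main__":
    # "I felt respected by my therapist" (values copied from the table).
    respected = grm_category_probabilities(
        theta=0.0, alpha=2.60, betas=[-2.07, -1.32, -0.73, 0.19]
    )
    for rating, p in enumerate(respected):
        print(f"rating {rating}: {p:.3f}")

    # "I felt rejected by my therapist": one threshold, so two categories.
    rejected = grm_category_probabilities(theta=1.28, alpha=4.73, betas=[1.28])
    print(f"P(No) = {rejected[0]:.2f}, P(Yes) = {rejected[1]:.2f}")
```

Read this way, a threshold marks the trait level at which a respondent has a 50% chance of answering at or above that category, and a larger α means the probability changes more sharply around the threshold.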

| Statistic | RISQ | SPARQ Positive Affect | SPARQ Negative Affect |
|---|---|---|---|
| Descriptive statistics | | | |
| Potential Range | 0 to 4 | 0 to 16 | 0 to 16 |
| Observed Range | 0 to 4 | 0 to 16 | 0 to 16 |
| Mean, SD | 0.36 (0.87) | 10.45 (4.16) | 3.03 (3.11) |
| POMP, SD | 9.00 (21.75) | 65.31 (26.00) | 18.94 (19.44) |
| Skew | 2.67 | −0.58 | 1.33 |
| Kurtosis | 6.75 | −0.53 | 1.57 |
| Standard Error of Measurement (SEm) | 0.44 | 1.56 | 1.55 |
| Standard Error of Difference (SEd) | 0.63 | 2.20 | 2.50 |
| Internal consistency reliability | | | |
| χ²/df | 7.16 | 3.24 | |
| CFI | 0.98 | 0.98 | |
| TLI | 0.94 | 0.97 | |
| RMSEA | 0.09 | 0.06 | |
| SRMR | 0.03 | 0.05 | |
| Average inter-item r | 0.43 | 0.61 | 0.41 |
| Alpha | 0.75 | 0.86 | 0.74 |
| Omega total | 0.74 | 0.86 | 0.75 |
| Clinical change benchmarks | | | |
| 90% Critical Change | 1.02 | 3.63 | 3.63 |
| 95% Critical Change | 1.21 | 4.31 | 4.61 |
| Minimally important difference | 0.44 | 2.08 | 1.55 |
| Jacobson benchmark threshold (5% tail) | -- | LB: 2.30 | UB: 9.13 |
| Scale correlations | | | |
| SPARQ—Positive Affect | −0.45 * | 1 | |
| SPARQ—Negative Affect | 0.49 * | −0.40 * | 1 |
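
The precision and change benchmarks in this table can be related to the scale SD and reliability through standard psychometric formulas. The sketch below is a minimal illustration under stated assumptions: SEm = SD × √(1 − reliability), SEd = SEm × √2, critical change = z × SEd (z = 1.645 for 90%, 1.96 for 95%), minimally important difference = half an SD, and POMP as the percent of the maximum possible score. Applied to the Positive Affect column, these formulas come out close to the reported values, but the source does not confirm that they are the authors' exact computations, and the function names are hypothetical.

```python
import math


def pomp(score: float, scale_min: float, scale_max: float) -> float:
    """Percent of maximum possible: rescale a raw score to 0-100."""
    return 100.0 * (score - scale_min) / (scale_max - scale_min)


def standard_error_of_measurement(sd: float, reliability: float) -> float:
    """SEm = SD * sqrt(1 - reliability)."""
    return sd * math.sqrt(1.0 - reliability)


def standard_error_of_difference(sem: float) -> float:
    """SEd = SEm * sqrt(2), the error of a difference between two scores."""
    return sem * math.sqrt(2.0)


def critical_change(sed: float, z: float) -> float:
    """Smallest change exceeding measurement error at the given z value."""
    return z * sed


def minimally_important_difference(sd: float) -> float:
    """Half-SD rule of thumb (assumed here, not confirmed by the source)."""
    return 0.5 * sd


if __name__ == "__main__":
    # SPARQ Positive Affect inputs, copied from the table above.
    mean, sd, alpha = 10.45, 4.16, 0.86
    sem = standard_error_of_measurement(sd, alpha)
    sed = standard_error_of_difference(sem)
    print(f"POMP mean: {pomp(mean, 0, 16):.2f}")
    print(f"SEm: {sem:.2f}")
    print(f"SEd: {sed:.2f}")
    print(f"90% critical change: {critical_change(sed, 1.645):.2f}")
    print(f"95% critical change: {critical_change(sed, 1.96):.2f}")
    print(f"MID: {minimally_important_difference(sd):.2f}")
```

Because the table reports rounded SDs and reliabilities, results computed from these inputs may differ from the published figures in the second decimal place.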

| Criterion Variable | RISQ | SPARQ Positive Affect | SPARQ Negative Affect |
|---|---|---|---|
| Age | −0.04 | 0.05 | −0.15 *** |
| Sex | −0.06 | 0.04 | −0.06 |
| Average # of diagnoses | 0.04 | −0.07 | 0.17 *** |
| Any anxiety disorder | −0.10 * | −0.00 | 0.03 |
| Any bipolar disorder | 0.05 | −0.05 | 0.03 |
| Any depressive disorder | −0.14 *** | 0.06 | 0.02 |
| Any personality disorder | 0.20 *** | −0.11 * | 0.17 *** |
| Cluster A PD | 0.08 * | −0.08 | 0.08 * |
| Cluster B PD | 0.19 *** | −0.12 ** | 0.13 ** |
| Cluster C PD | 0.07 | −0.08 | 0.09 * |
| Any trauma- and stressor-related disorder | −0.02 | 0.04 | 0.05 |
| Therapy length | −0.11 * | 0.13 ** | −0.05 |
| Session frequency | 0.03 | 0.10 * | 0.06 |
| Therapist’s sex | −0.13 ** | 0.10 * | −0.07 |
| Patient–Therapist sex match | −0.10 * | 0.09 * | −0.12 ** |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Stefana, A.; Langfus, J.A.; Vieta, E.; Fusar-Poli, P.; Youngstrom, E.A. Development and Initial Validation of the in-Session Patient Affective Reactions Questionnaire (SPARQ) and the Rift In-Session Questionnaire (RISQ). J. Clin. Med. 2023, 12, 5156. https://doi.org/10.3390/jcm12155156
