Performance Evaluation of State of the Art Systems for Physical Activity Classification of Older Subjects Using Inertial Sensors in a Real Life Scenario: A Benchmark Study

Abstract

1. Introduction

- (i) Dataset: The nature of the datasets differs in terms of the population studied, how and where the activities of daily living (ADLs) are performed, and the types of ADLs included. Most of the existing PAC systems in the literature have been developed on datasets collected in a laboratory or another controlled environment with predefined sets of activities [13,14,17,18].

- (ii).

- (iii).

- (iv).

- (v).

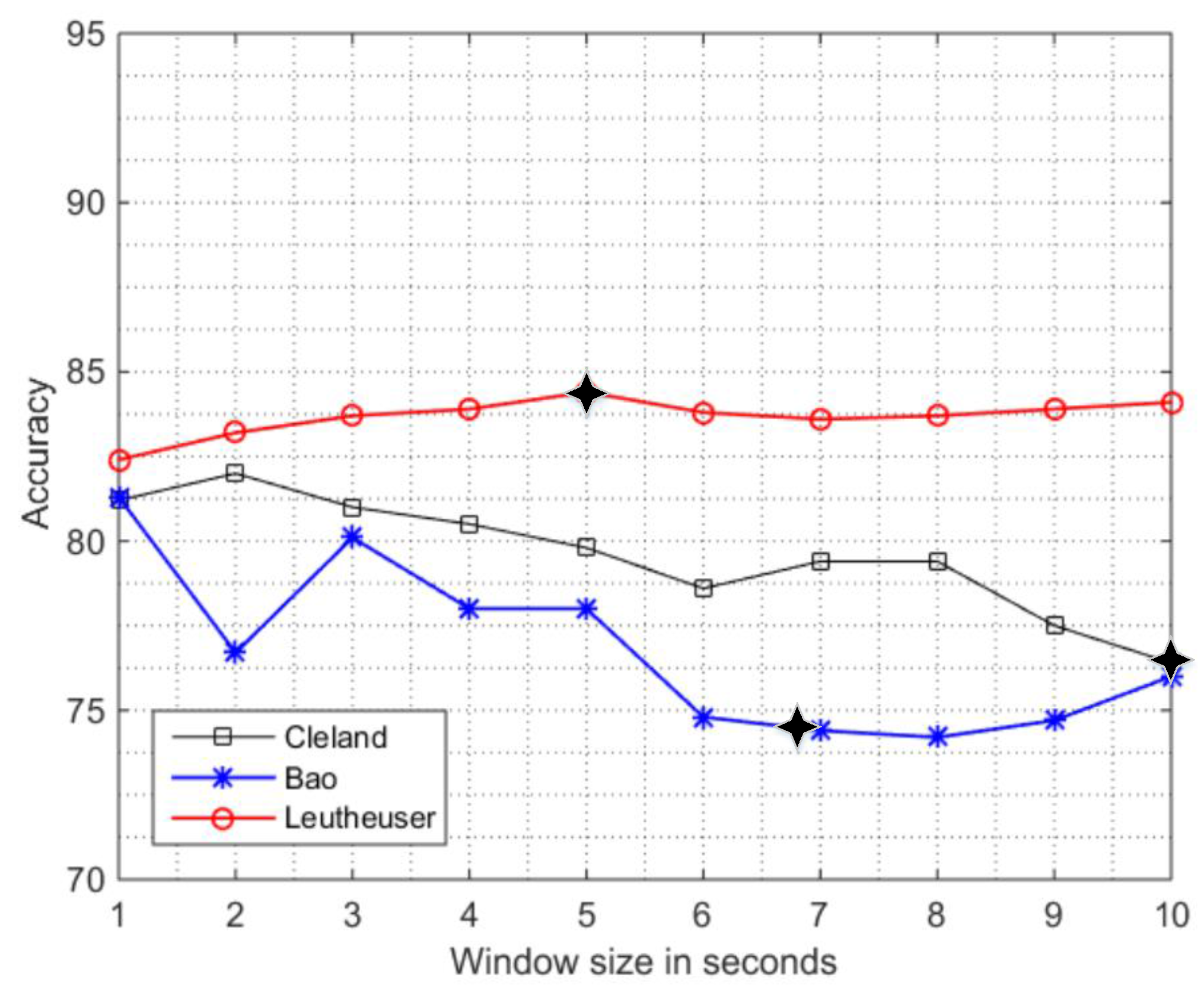

- Window size: Window size and overlapping intervals used for the feature computation vary and they may affect the performance of machine learning algorithms and classifiers. The window sizes largely differs across the PAC systems proposed in the literature: 2 s [4], 2.5 s [11], 5 s [5], 5.12 s [3], 6.7 s [2], and 10 s [9]. The overlapping interval used in most of the PAC systems is 50% of the window size [20].

- (vi) Classifier: Most PAC systems use a single classifier to discriminate among all the ADLs in the dataset. Common choices include a decision tree classifier [2], a support vector machine (SVM), an artificial neural network (ANN) [13], and K-nearest neighbors (KNN) [4]. Some systems, however, combine base-level classifiers, either by plurality voting [3] or through a hierarchical classification process that uses a different classifier for each subset of ADLs [6,10,15].
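The windowing step described in (v) can be sketched as follows. This is a minimal illustration rather than the code of any cited system: the helper name `sliding_windows` is hypothetical, and it assumes a single uniformly sampled channel and the 50% overlap reported as most common [20].

```python
import numpy as np


def sliding_windows(signal, fs, window_s, overlap=0.5):
    """Cut a 1-D signal into fixed-length, possibly overlapping windows.

    Hypothetical helper for illustration.
    signal   : samples of one sensor channel
    fs       : sampling frequency (Hz)
    window_s : window length (s)
    overlap  : fraction of each window shared with the next one (0.5 = 50%)
    Returns an array of shape (n_windows, samples_per_window); a trailing
    partial window is discarded.
    """
    signal = np.asarray(signal, dtype=float)
    win = int(round(window_s * fs))                   # samples per window
    step = max(1, int(round(win * (1.0 - overlap))))  # hop between window starts
    if len(signal) < win:
        return np.empty((0, win))
    starts = range(0, len(signal) - win + 1, step)
    return np.stack([signal[s:s + win] for s in starts])


# 60 s of a 100 Hz channel, 2 s windows, 50% overlap
x = np.arange(6000)
w = sliding_windows(x, fs=100, window_s=2.0, overlap=0.5)
print(w.shape)  # (59, 200)
```

Re-running the same feature extraction with different `window_s` values (e.g., 2, 5, and 10 s) is one simple way to probe how sensitive a classifier is to the window size.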

- (1) To compare the performance of existing PAC systems on a common dataset of activities of older subjects in an unbiased way (i.e., with the same subjects, sensors, sampling frequency, window size, and cross-validation procedure), and to investigate the effect of varying the window size on the systems' performance.

- (2) To validate and compare the performance of the PAC systems in real-life scenarios against an in-lab setting, in order to check whether these systems are transferable to real-life settings.

- (3) To evaluate the impact of the number of sensors on the performance in the analyses in (1) and (2) using a reductionist approach (i.e., analyzing only the sensing unit worn at the lower back instead of the multi-sensor setup). The lower back was chosen because it is a common sensor location with no major drawbacks for monitoring the activities of older subjects.

2. Materials and Methods

2.1. Data Collection in Real-Life Scenarios

2.2. Implementation of the SOA Systems for PACs Using Their Original Framework

2.3. Implementation of the SOA Systems for PAC Using a Reductionist Framework

3. Results and Discussion

3.1. Performance Comparison of the PAC Systems in the In-Lab Setting Using Their Original Framework and Sensitivity Analysis to the Window Size

3.2. Performance of the PAC Systems in Real-Life Scenarios

3.2.1. In-Lab vs. Out-of-Lab

3.2.2. In-Lab Training/Out-Lab Testing

- (i) Most of the existing PAC systems were developed using a standardized protocol that does not include ADLs performed under real-life conditions.

- (ii) In real life, the order and manner in which the activities are performed differ, since they take place in a more natural environment that is quite different from a laboratory.

3.3. Computational Complexity in the Real-Life Setting

3.4. Performance Comparison of the PAC Systems in the In-Lab Setting Using a Reductionist Approach and Sensitivity Analysis to the Window Size

3.5. Performance of the PAC Systems in Real-Life Scenarios Using a Reductionist Approach

3.5.1. In-Lab vs. Out-of-Lab

3.5.2. In-Lab Training/Out-Lab Testing

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A. Computation of Accuracy and Sensitivity by Class in the In-Lab Training/Out-Lab Testing Scenario of All SOA for PAC

| Stand | Walk | Sit | Lie | ← Classified as |
|---|---|---|---|---|
| 9214 | 571 | 4 | 0 | stand |
| 2329 | 4000 | 2 | 9 | walk |
| 24 | 16 | 19,260 | 197 | sit |
| 233 | 0 | 2 | 278 | lie |
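The per-class figures can be computed from such a confusion matrix in a one-vs-rest fashion: for each class, sensitivity is TP/(TP + FN) and per-class accuracy is (TP + TN)/N, while overall accuracy is the sum of the diagonal over N. The sketch below assumes rows are actual classes and columns are predicted classes, as in the WEKA-style matrix above; the function name `per_class_metrics` is hypothetical. Applied to this matrix it gives 90.6% overall accuracy and the per-class accuracy (91.3, 91.9, 99.3, 98.8%) and sensitivity (94.1, 63.1, 98.8, 54.2%) reported for Bao et al.

```python
import numpy as np

CLASSES = ["stand", "walk", "sit", "lie"]

# Rows: actual class; columns: predicted class (matrix above).
cm = np.array([
    [9214,  571,     4,   0],
    [2329, 4000,     2,   9],
    [  24,   16, 19260, 197],
    [ 233,    0,     2, 278],
])


def per_class_metrics(cm):
    """Overall accuracy plus one-vs-rest accuracy and sensitivity per class."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp   # actually class c, predicted as another class
    fp = cm.sum(axis=0) - tp   # predicted as class c, actually another class
    tn = n - tp - fn - fp
    overall = tp.sum() / n
    accuracy = (tp + tn) / n
    sensitivity = tp / (tp + fn)
    return overall, accuracy, sensitivity


overall, acc, sens = per_class_metrics(cm)
print(f"overall accuracy: {100 * overall:.1f}%")
for name, a, s in zip(CLASSES, acc, sens):
    print(f"{name:>5}: accuracy {100 * a:.1f}%, sensitivity {100 * s:.1f}%")
```

The same function applied to the matrices of Cleland et al. and Leutheuser et al. reproduces their rows of the summary table as well.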

Appendix B. Detailed Description of the Training and Classification Process Used

| Authors | Classifier Used | Cross-Validation Procedure |
|---|---|---|
| Cleland et al. | SVM classifier (Pearson VII function-based universal kernel, complexity parameter of 100, WEKA libraries) | Leave-one-subject-out cross-validation |
| Bao et al. | Decision tree classifier (J48 with default parameters, WEKA libraries) | Leave-one-subject-out cross-validation |
| Leutheuser et al. | Hierarchical classification (KNN and SVM, WEKA libraries) | Leave-one-subject-out cross-validation |
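Leave-one-subject-out cross-validation holds out all windows of one subject per fold and trains on the windows of everyone else, so no subject contributes to both training and testing within a fold. A minimal sketch of the fold generation is shown below; the generator name `leave_one_subject_out` is hypothetical (the study used WEKA, and scikit-learn offers `LeaveOneGroupOut` for the same purpose).

```python
import numpy as np


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_idx, test_idx), one fold per subject.

    subject_ids gives, for every feature window, the subject it came from.
    """
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        test_mask = subject_ids == subject
        yield subject, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)


# Toy example: 7 feature windows from 3 subjects
subjects = ["S1", "S1", "S2", "S2", "S2", "S3", "S3"]
folds = list(leave_one_subject_out(subjects))
for subject, train_idx, test_idx in folds:
    print(subject, train_idx.tolist(), test_idx.tolist())
```

The classifier is retrained from scratch in each fold, and the per-fold predictions are pooled into a single confusion matrix.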

References

- World Health Organization for Europe: Policies and Priority Interventions for Healthy Ageing. Available online: http://www.euro.who.int/__data/assets/pdf_file/0006/161637/WHD-Policies-and-Priority-Interventions-for-Healthy-Ageing.pdf?ua=1 (accessed on 18 July 2016).

- Bao, L.; Intille, S.S. Activity recognition from user-annotated acceleration data. In International Conference on Pervasive Computing; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1–17.

- Ravi, N.; Dandekar, N.; Mysore, P.; Littman, M.L. Activity recognition from accelerometer data. In Proceedings of the Twentieth National Conference on Artificial Intelligence, Pittsburgh, PA, USA, 9–13 July 2005; pp. 1541–1546.

- Preece, S.J.; Goulermas, J.Y.; Kenney, L.P.; Howard, D. A comparison of feature extraction methods for the classification of dynamic activities from accelerometer data. IEEE Trans. Biomed. Eng. 2009, 56, 871–879.

- Altun, K.; Barshan, B.; Tuncel, O. Comparative study on classifying human activities with miniature inertial and magnetic sensors. Pattern Recognit. 2010, 43, 3605–3620.

- Khan, A.M.; Lee, Y.K.; Lee, S.Y.; Kim, T.S. A triaxial accelerometer-based physical-activity recognition via augmented-signal features and a hierarchical recognizer. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 1166–1172.

- Mannini, A.; Sabatini, A.M. Machine learning methods for classifying human physical activity from on-body accelerometers. Sensors 2010, 10, 1154–1175.

- Liu, S.; Gao, R.; Freedson, P. Computational methods for estimating energy expenditure in human physical activities. Med. Sci. Sports Exerc. 2012, 44, 2138–2146.

- Cleland, I.; Kikhia, B.; Nugent, C.; Boytsov, A.; Hallberg, J.; Synnes, K.; McClean, S.; Finlay, D. Optimal placement of accelerometers for the detection of everyday activities. Sensors 2013, 13, 9183–9200.

- Leutheuser, H.; Schuldhaus, D.; Eskofier, B.M. Hierarchical, multi-sensor based classification of daily life activities: Comparison with state-of-the-art algorithms using a benchmark dataset. PLoS ONE 2013, 8, e75196.

- Rosario, M.B.D.; Wang, K.; Wang, J.; Liu, Y.; Brodie, M.; Delbaere, K.; Lovell, N.H.; Lord, S.R.; Redmond, S.J. A comparison of activity classification in younger and older cohorts using a smartphone. Physiol. Meas. 2014, 35, 2269–2286.

- Ellis, K.; Kerr, J.; Godbole, S.; Lanckriet, G.; Wing, D.; Marshall, S. A random forest classifier for the prediction of energy expenditure and type of physical activity from wrist and hip accelerometers. Physiol. Meas. 2014, 35, 2191–2203.

- Gao, L.; Bourke, A.; Nelson, J. Evaluation of accelerometer based multi-sensor versus single-sensor activity recognition systems. Med. Eng. Phys. 2014, 36, 779–785.

- Guiry, J.J.; Van, P.; Nelson, J.; Warmerdam, L.; Riper, H. Activity recognition with smartphone support. Med. Eng. Phys. 2014, 36, 670–675.

- Zheng, Y. Human activity recognition based on the hierarchical feature selection and classification framework. J. Electr. Comput. Eng. 2015, 2015.

- Kamada, M.; Shiroma, E.J.; Harris, T.B.; Lee, I.M. Comparison of physical activity assessed using hip-and wrist-worn accelerometers. Gait Posture 2016, 44, 23–28.

- Trabelsi, D.; Mohammed, S.; Chamroukhi, F.; Oukhellou, L.; Amirat, Y. An unsupervised approach for automatic activity recognition based on hidden Markov model regression. IEEE Trans. Autom. Sci. Eng. 2013, 10, 829–835.

- Torres, A.M.; Leuenberger, K.; Gonzenbach, R.; Luft, A.; Gassert, R. Activity classification based on inertial and barometric pressure sensors at different anatomical locations. Physiol. Meas. 2014, 35, 1245–1263.

- Aminian, K.; Robert, P.; Buchser, E.; Rutschmann, B.; Hayoz, D.; Depairon, M. Physical activity monitoring based on accelerometry: Validation and comparison with video observation. Med. Biol. Eng. Comput. 1999, 37, 304–308.

- Figo, D.; Diniz, P.C.; Ferreira, D.R.; Cardoso, J.M. Preprocessing techniques for context recognition from accelerometer data. Pers. Ubiquitous Comput. 2010, 14, 645–662.

- Fida, B.; Bernabucci, I.; Bibbo, D.; Conforto, S.; Schmid, M. Varying behavior of different window sizes on the classification of static and dynamic physical activities from a single accelerometer. Med. Eng. Phys. 2015, 37, 705–711.

- Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors 2016, 16, 426.

- Najafi, B.; Aminian, K.; Ionescu, A.P.; Loew, F.; Bula, C.J.; Robert, P. Ambulatory system for human motion analysis using a kinematic sensor: Monitoring of daily physical activity in the elderly. IEEE Trans. Biomed. Eng. 2003, 50, 711–723.

- Lyons, G.; Culhane, K.; Hilton, D.; Grace, P.; Lyons, D. A description of an accelerometer-based mobility monitoring technique. Med. Eng. Phys. 2005, 27, 497–504.

- Godfrey, A.; Bourke, A.; Olaighin, G.; Ven, P.; Nelson, J. Activity classification using a single chest mounted tri-axial accelerometer. Med. Eng. Phys. 2011, 33, 1127–1135.

- Ganea, R.; Ionescu, A.P.; Aminian, K. Detection and classification of postural transitions in real-world conditions. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 688–696.

- Awais, M.; Mellone, S.; Chiari, L. Physical activity classification meets daily life: Review on existing methodologies and open challenges. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 5050–5053.

- Lindemann, U.; Zijlstra, W.; Aminian, K.; Chastin, S.F.; Bruin, E.D.D.; Helbostad, J.L.; Bussman, B.J. Recommendations for standardizing validation procedures assessing physical activity of older persons by monitoring body postures and movements. Sensors 2014, 14, 1267–1277.

- Mannini, A.; Intille, S.S.; Rosenberger, M.; Sabatini, A.M.; Haskell, W. Activity recognition using a single accelerometer placed at the wrist or ankle. Med. Sci. Sports Exerc. 2013, 45, 2193–2203.

- Arif, M.; Bilal, M.; Kattan, A.; Ahamed, S.I. Better physical activity classification using smartphone acceleration sensor. J. Med. Syst. 2014, 38, 1–10.

- Bourke, A.K.; Ihlen, E.A.F.; Bergquist, R.; Wik, P.B.; Helbostad, J.L. Validation of Existing Systems and Development of New Algorithms for Body-Worn Activity Classification Systems for Independent Living Older Adults-Description of the Study Protocol and a Reference Dataset. Sensors 2016. (accepted).

- Hall, M.; Frank, E.; Holmes, G.; Pfahringer, B.; Reutemann, P.; Witten, I.H. The WEKA data mining software: An update. ACM SIGKDD Explor. Newsl. 2009, 11, 10–18.

) specifies the window size used in the original PAC system by the authors.


| Sensor Type | Location | Sampling Frequency | Measured Signals |
|---|---|---|---|
| uSense | Thigh | 100 Hz | 3D Accelerometer, 3D Gyroscope |
| uSense | L5 | 100 Hz | 3D Accelerometer, 3D Gyroscope |
| ActiGraph | Waist | 100 Hz | 3D Accelerometer |
| uSense | Chest | 100 Hz | 3D Accelerometer, 3D Gyroscope |
| Shimmer | Wrist | 200 Hz | 3D Accelerometer, 3D Gyroscope |
| uSense | Feet * | 100 Hz | 3D Accelerometer, 3D Gyroscope |
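Because the wrist unit samples at 200 Hz while the other units sample at 100 Hz, and the original systems were designed around yet other rates (e.g., 51.2 Hz and 204.8 Hz in the table of SOA systems), signals must share a common rate before the systems can be compared with the same sampling frequency. The sketch below assumes plain linear interpolation with `numpy.interp`; the resampling method actually used in the study is not stated in this section, the helper name `resample_linear` is hypothetical, and a low-pass anti-aliasing filter should precede any downsampling.

```python
import numpy as np


def resample_linear(signal, fs_in, fs_out):
    """Resample a uniformly sampled 1-D signal to fs_out by linear interpolation.

    Illustrative assumption: no anti-aliasing filter is applied here, so the
    signal should be low-pass filtered beforehand whenever fs_out < fs_in.
    """
    signal = np.asarray(signal, dtype=float)
    t_in = np.arange(len(signal)) / fs_in                # input sample times (s)
    duration = t_in[-1]                                  # time of the last input sample (s)
    n_out = int(np.floor(duration * fs_out + 1e-9)) + 1  # output samples inside the record
    t_out = np.arange(n_out) / fs_out
    return np.interp(t_out, t_in, signal)


# 2 s of a 200 Hz wrist channel brought onto the 100 Hz grid of the other units
wrist = np.sin(2 * np.pi * np.arange(400) / 200)
wrist_100 = resample_linear(wrist, fs_in=200, fs_out=100)
print(len(wrist_100))  # 200
```

For example, 10 s of a 100 Hz channel resampled to 51.2 Hz yields 512 samples, the window length implied by the 10 s windows of Cleland et al.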

| ADL | Total (h) | Occurrences | Mean (s) | STD (s) | Min (s) | Max (s) | Range (s) |
|---|---|---|---|---|---|---|---|
| sitting | 1.67 | 708 | 8.50 | 18.90 | 0.03 | 267.36 | 267.33 |
| standing | 2.67 | 1319 | 7.28 | 16.40 | 0.03 | 296.97 | 296.94 |
| walking | 0.90 | 613 | 5.29 | 2.79 | 0.96 | 20.07 | 19.11 |
| lying | 0.28 | 187 | 5.47 | 9.87 | 0.13 | 113.23 | 113.10 |

| ADL | Total (h) | Occurrences | Mean (s) | STD (s) | Min (s) | Max (s) | Range (s) |
|---|---|---|---|---|---|---|---|
| sitting | 13.45 | 497 | 97.44 | 200.74 | 0.04 | 2075.64 | 2075.60 |
| standing | 6.52 | 4304 | 5.45 | 12.27 | 0.03 | 388.52 | 388.49 |
| walking | 4.10 | 2617 | 5.64 | 8.75 | 0.28 | 139.56 | 139.28 |
| lying | 0.36 | 12 | 106.69 | 154.02 | 3.48 | 583.84 | 580.36 |
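The per-bout columns in the two tables above are consistent with bout durations in seconds: for sitting in the first table, 1.67 h × 3600 / 708 ≈ 8.49 s, against a reported mean of 8.50 s (the total is itself rounded), and each Range equals Max minus Min. The sketch below shows how such a summary could be derived from a list of bout durations. The helper `bout_summary` and the example durations are hypothetical, and the sample standard deviation is an assumption since the estimator is not stated.

```python
import statistics


def bout_summary(durations_s):
    """Summarize the bouts of one ADL from their durations in seconds.

    Total time is returned in hours; per-bout statistics in seconds.
    The sample standard deviation is assumed (estimator not stated in the text).
    """
    return {
        "total_h": sum(durations_s) / 3600.0,
        "occurrences": len(durations_s),
        "mean_s": statistics.mean(durations_s),
        "std_s": statistics.stdev(durations_s) if len(durations_s) > 1 else 0.0,
        "min_s": min(durations_s),
        "max_s": max(durations_s),
        "range_s": max(durations_s) - min(durations_s),
    }


# Hypothetical bout durations (s), not data from this study
summary = bout_summary([4.0, 6.5, 12.0, 3.5])
print(summary)

# Consistency check on the reported in-lab sitting row: mean ~ total / occurrences
print(round(1.67 * 3600 / 708, 2))  # 8.49
```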

| Author | SIN | SOUT |
|---|---|---|
| Cleland et al. [9] | Chest, L5, Wrist, Waist, Thigh, Foot | Chest, L5, Wrist, Waist, Thigh |
| Bao et al. [2] | L5, Wrist, Thigh, Foot | L5, Wrist, Thigh |
| Leutheuser et al. [10] | Wrist, L5, Chest, Foot | Wrist, L5, Chest |

| Author | Fs in Hz (Window) | SO (Sensor Locations) | Experiment Setting (Population) | Features | Activities | Accuracy Reported |
|---|---|---|---|---|---|---|
| Cleland et al. [9] | 51.2 (10 s) | Chest, lower back, wrist, hip, thigh, foot | Laboratory setting (8 young adults, 26.25 ± 2.86 years) | Mean, standard deviation, skewness, kurtosis, energy, and correlation of axes (separately and averaged over 3 axes) | Walking, jogging on a treadmill, sitting, lying, standing, walking up stairs, walking down stairs | 97.26% (SVM) |
| Bao et al. [2] | 76.25 (6.7 s) | Hip, wrist, arm, thigh, ankle | Semi-naturalistic conditions (20 subjects, age not reported) | Mean, energy, frequency-domain entropy, correlation between the acceleration signals | Walking, sitting, standing, eating or drinking, watching TV, reading, running, bicycling, stretching, strength-training, scrubbing, vacuuming, folding laundry, lying, brushing, climbing stairs, riding elevator, riding escalator | 84% (decision tree) |
| Leutheuser et al. [10] | 204.8 (5 s) | Wrist, hip, chest, ankle | Laboratory setting (23 young adults, 27 ± 7 years) | Minimum, maximum, mean and variance, spectral centroid, bandwidth, energy, gravitational component | Sitting, lying, standing, washing dishes, vacuuming, sweeping, walking, running, stair climbing, bicycling, rope jumping | 89.6% (hierarchical classifier) |
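To make the feature column more concrete, the sketch below computes features of the kind listed for Bao et al. [2] (mean, energy, frequency-domain entropy, and correlation) on one window of two acceleration axes. It is written in the spirit of that description, not as a reproduction of the original system: the helper name `bao_style_features` is hypothetical, and the choices of a one-sided FFT with the DC bin removed, normalization of energy by the window length, and Shannon entropy in bits are assumptions. The original system also correlates axes across different sensors, which is omitted here.

```python
import numpy as np


def bao_style_features(x, y):
    """Per-window features in the spirit of Bao et al. [2] for two axes.

    Assumptions (illustrative, may differ from the original): energy and
    entropy use the one-sided FFT with the DC bin removed, energy is
    normalized by the window length, and entropy is the Shannon entropy
    (bits) of the normalized FFT magnitudes.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    feats = {}
    for name, s in (("x", x), ("y", y)):
        mag = np.abs(np.fft.rfft(s))[1:]  # drop the DC component (captured by the mean)
        feats[f"mean_{name}"] = s.mean()
        feats[f"energy_{name}"] = np.sum(mag ** 2) / len(s)
        total = mag.sum()
        p = mag / total if total > 0 else np.zeros_like(mag)
        p = p[p > 0]                      # treat 0 * log(0) as 0
        feats[f"entropy_{name}"] = float(-np.sum(p * np.log2(p)))
    feats["corr_xy"] = np.corrcoef(x, y)[0, 1]  # correlation between the two axes
    return feats


# One 512-sample window (about 6.7 s at 76.25 Hz) with a tone on an exact FFT bin
n = np.arange(512)
x = 2.0 + np.sin(2 * np.pi * 8 * n / 512)
y = np.cos(2 * np.pi * 8 * n / 512)
f = bao_style_features(x, y)
print({k: round(float(v), 3) for k, v in f.items()})
```

A pure tone concentrates all non-DC energy in one frequency bin, so its entropy is close to zero; broadband movements such as walking spread the spectrum and raise it.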

(a) Bao et al. (rows: actual class; columns: predicted class)

| Stand | Walk | Sit | Lie | ← Classified as |
|---|---|---|---|---|
| 9214 | 571 | 4 | 0 | stand |
| 2329 | 4000 | 2 | 9 | walk |
| 24 | 16 | 19,260 | 197 | sit |
| 233 | 0 | 2 | 278 | lie |

(b) Cleland et al. (rows: actual class; columns: predicted class)

| Stand | Walk | Sit | Lie | ← Classified as |
|---|---|---|---|---|
| 9712 | 73 | 4 | 0 | stand |
| 2474 | 3857 | 9 | 0 | walk |
| 1 | 1 | 19,492 | 3 | sit |
| 0 | 0 | 234 | 279 | lie |

(c) Leutheuser et al. (rows: actual class; columns: predicted class)

| Stand | Walk | Sit | Lie | ← Classified as |
|---|---|---|---|---|
| 7423 | 350 | 1572 | 16 | stand |
| 395 | 5397 | 94 | 0 | walk |
| 5289 | 107 | 13,950 | 0 | sit |
| 0 | 0 | 15 | 480 | lie |

| Authors | Accuracy (%) | Accuracy by Class: Stand (%) | Accuracy by Class: Walk (%) | Accuracy by Class: Sit (%) | Accuracy by Class: Lie (%) | Sensitivity by Class: Stand (%) | Sensitivity by Class: Walk (%) | Sensitivity by Class: Sit (%) | Sensitivity by Class: Lie (%) |
|---|---|---|---|---|---|---|---|---|---|
| Bao et al. | 90.6 | 91.3 | 91.9 | 99.3 | 98.8 | 94.1 | 63.1 | 98.8 | 54.2 |
| Cleland et al. | 92.3 | 92.9 | 92.9 | 99.3 | 99.3 | 99.2 | 60.8 | 100.0 | 54.4 |
| Leutheuser et al. | 77.7 | 78.3 | 97.3 | 79.8 | 99.9 | 79.3 | 91.7 | 72.1 | 97.0 |

| Author | Feature Computation Mean ± Std (s) | Testing Out-of-Lab Mean ± Std (s) |
|---|---|---|
| Bao et al. | 337.07 ± 3.10 | 25.27 ± 0.95 |
| Cleland et al. | 458.79 ± 6.57 | 738.21 ± 1.09 |
| Leutheuser et al. | 772.41 ± 11.99 | 957.83 ± 18.38 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Awais, M.; Palmerini, L.; Bourke, A.K.; Ihlen, E.A.F.; Helbostad, J.L.; Chiari, L. Performance Evaluation of State of the Art Systems for Physical Activity Classification of Older Subjects Using Inertial Sensors in a Real Life Scenario: A Benchmark Study. Sensors 2016, 16, 2105. https://doi.org/10.3390/s16122105
