Reliability of 3D Depth Motion Sensors for Capturing Upper Body Motions and Assessing the Quality of Wheelchair Transfers

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

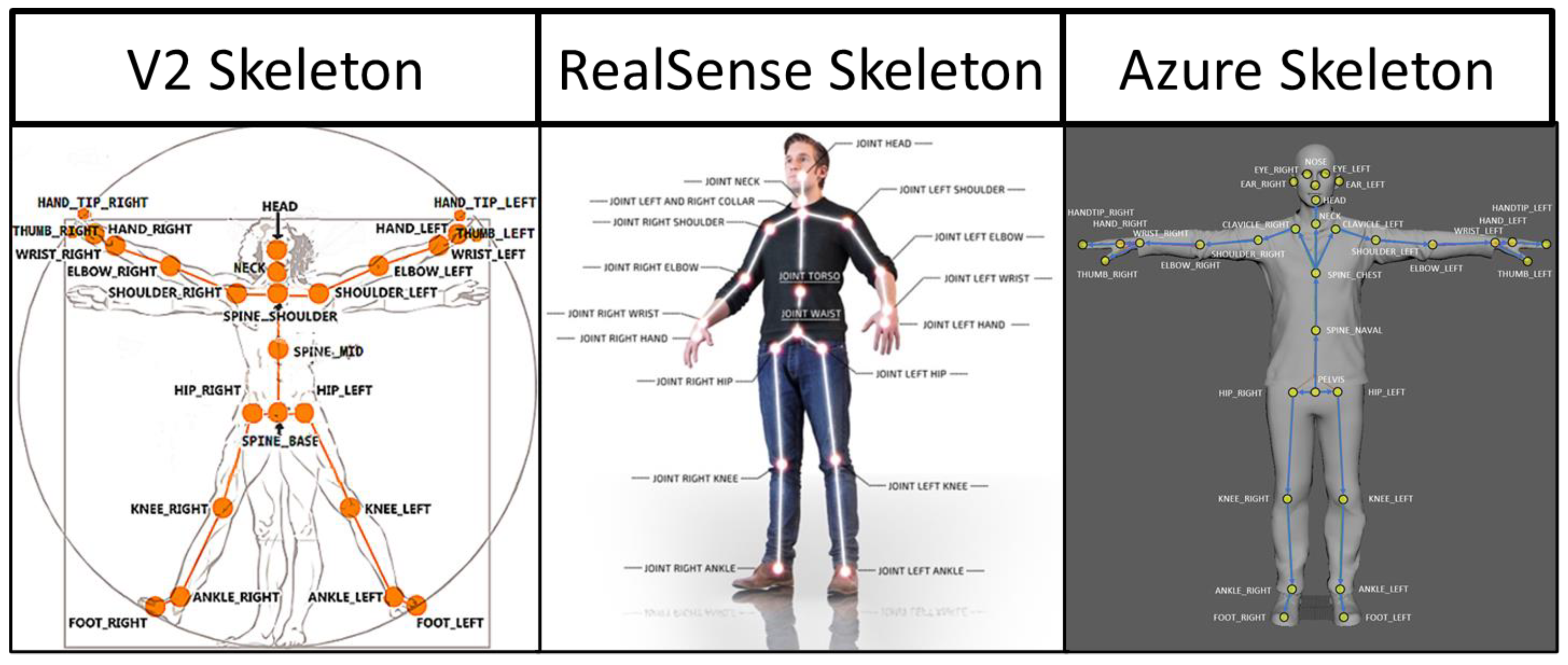

2.2. Equipment

2.3. Experimental Setup

2.4. Data Collection Protocol

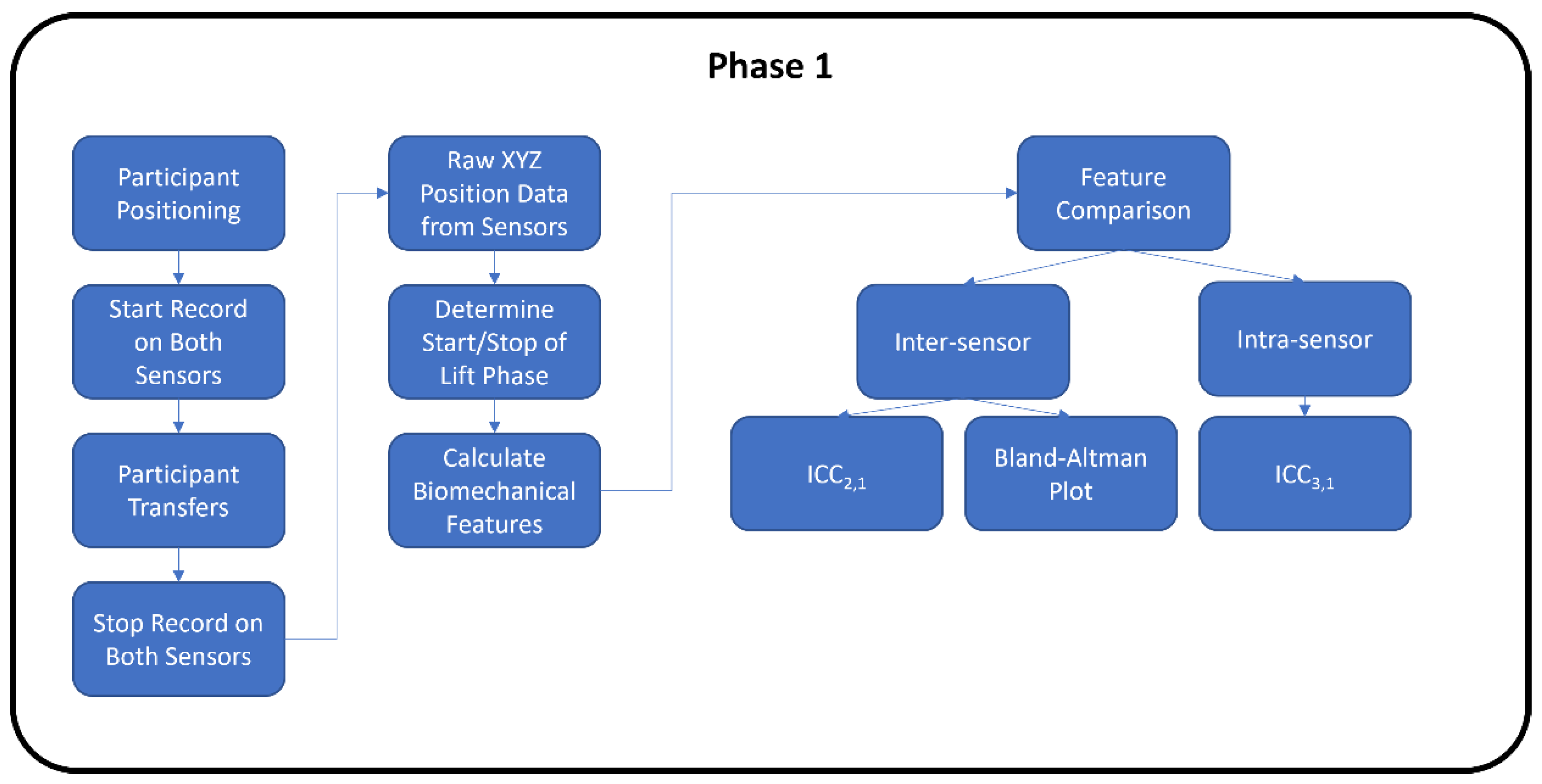

2.4.1. Phase 1 RealSense vs. V2

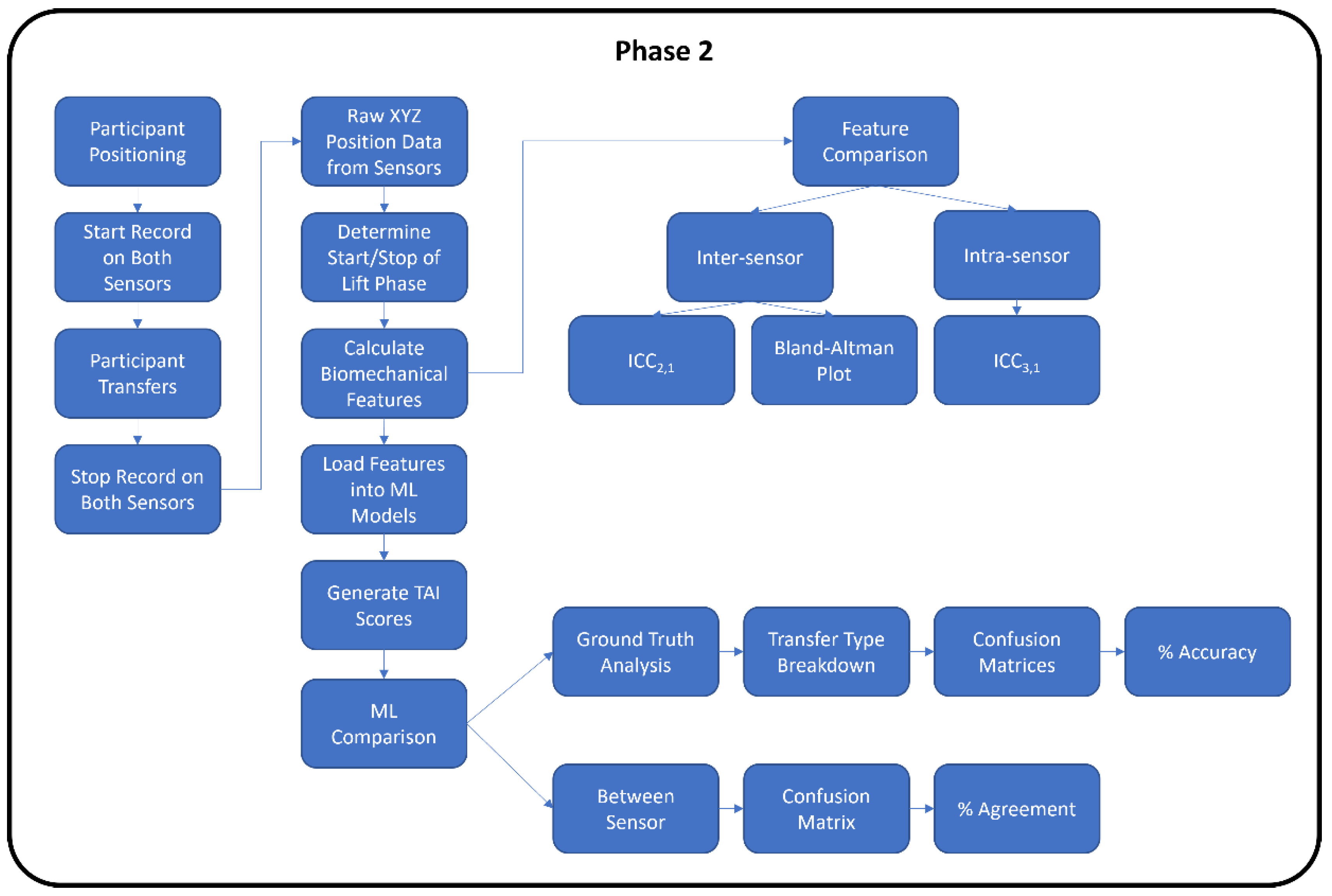

2.4.2. Phase 2 Azure vs. V2

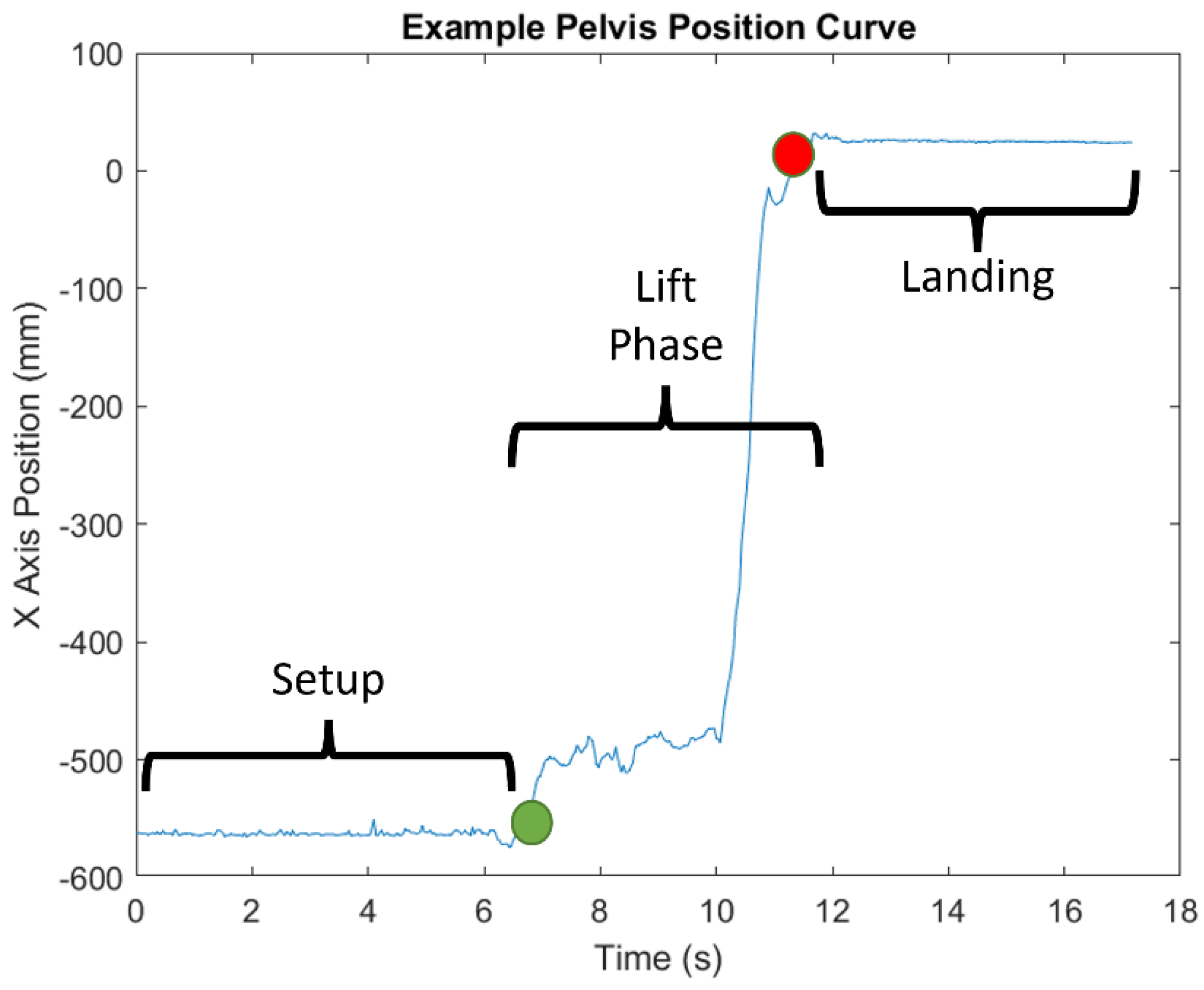

2.5. Data Processing

- DSWP: the displacement of the spine base/waist/pelvis joint center along the x-axis, calculated as the final position minus the initial position. This value is obtained in millimeters and then converted to centimeters.

- LPOE: the average plane of elevation angle of the leading-side shoulder (the left side). This angle is calculated between two vectors: the normal vector to the chest and the upper arm vector (left shoulder to left elbow), projected onto the transverse plane. The normal vector is the cross product of the trunk vector (e.g., Azure pelvis to upper spine marker) and the vector across the shoulders (left to right shoulder markers). This value is given in degrees.

- LE: the average elevation angle of the leading-side shoulder (the left side). This angle is calculated between two vectors: the trunk vector and the upper arm vector. This value is given in degrees.

- TF: the average flexion angle of the trunk, calculated as the angle between the trunk vector and the vertical y-axis. This value is given in degrees.
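The four features defined above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' processing code: the function names (`angle_deg`, `transfer_features`), the array layout (one `(n_frames, 3)` array per joint, in millimeters), and the choice to take the transverse plane as the global x-z plane and project both LPOE vectors onto it are assumptions made for the example.

```python
import numpy as np

def angle_deg(u, v):
    """Per-frame angle in degrees between two (n_frames, 3) vector arrays."""
    cos = np.sum(u * v, axis=1) / (
        np.linalg.norm(u, axis=1) * np.linalg.norm(v, axis=1)
    )
    # Clip guards against tiny floating-point overshoot outside [-1, 1].
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))

def transfer_features(pelvis, spine_upper, l_shoulder, r_shoulder, l_elbow):
    """Hypothetical helper: each argument is an (n_frames, 3) array in mm."""
    trunk = spine_upper - pelvis            # trunk vector
    across = r_shoulder - l_shoulder        # left-to-right shoulder vector
    upper_arm = l_elbow - l_shoulder        # left shoulder to left elbow
    chest_normal = np.cross(trunk, across)  # normal vector to the chest

    # Assumption: the transverse plane is the global x-z plane, so
    # projecting onto it means zeroing the vertical (y) component.
    drop_y = np.array([1.0, 0.0, 1.0])
    vertical = np.broadcast_to([0.0, 1.0, 0.0], trunk.shape)

    return {
        # Final minus initial x position, converted from mm to cm.
        "DSWP_cm": (pelvis[-1, 0] - pelvis[0, 0]) / 10.0,
        "LPOE_deg": angle_deg(chest_normal * drop_y, upper_arm * drop_y).mean(),
        "LE_deg": angle_deg(trunk, upper_arm).mean(),
        "TF_deg": angle_deg(trunk, vertical).mean(),
    }
```

If a projected vector has zero length in some frame (for example, an upper arm hanging exactly vertical), the LPOE for that frame is undefined and this sketch returns NaN; a real pipeline would need to decide how to handle such frames.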

2.6. Statistical Analysis

3. Results

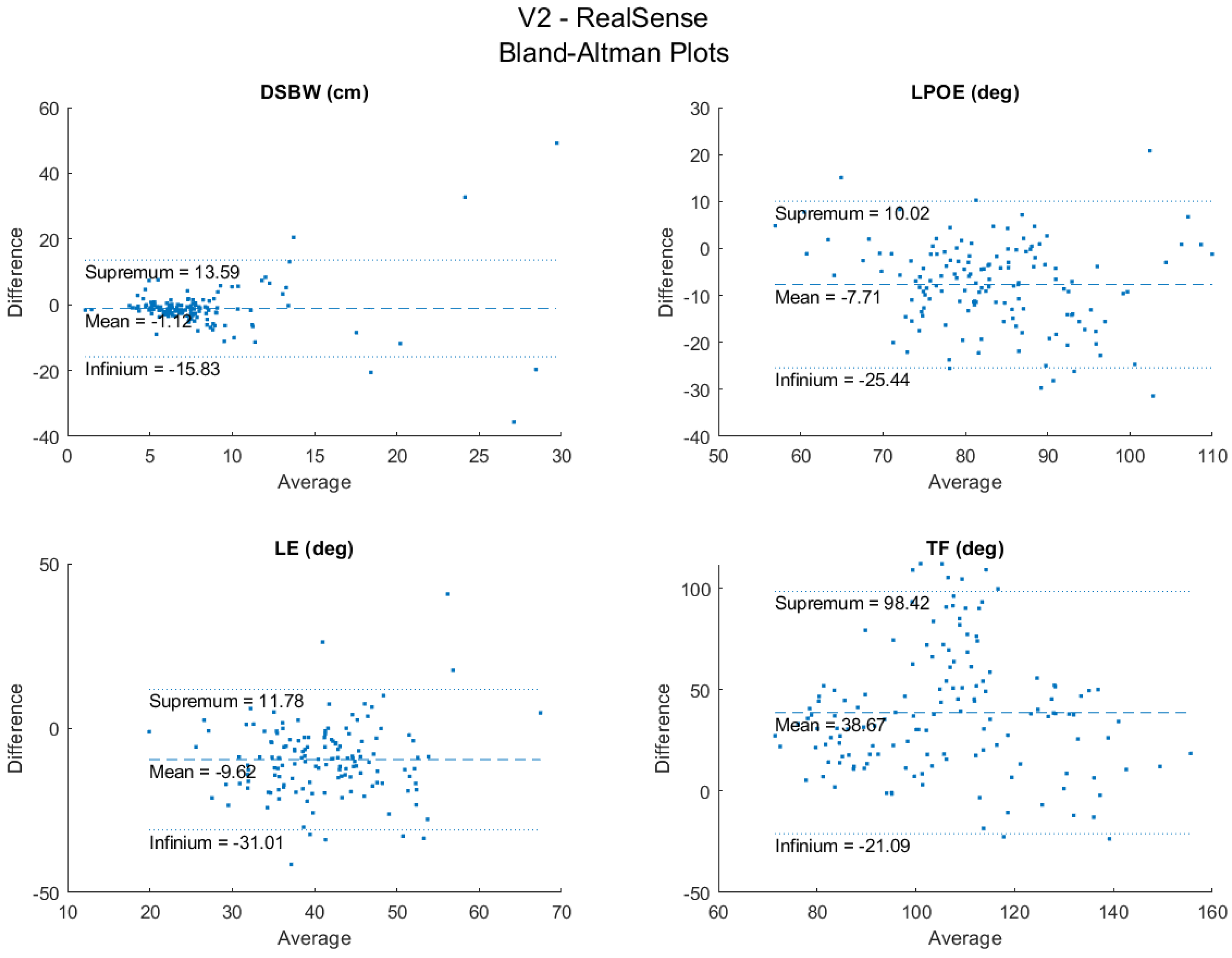

3.1. RealSense vs. V2 Reliability and Agreement

3.2. Azure vs. V2 Reliability and Agreement

3.3. ML Predicted TAI Scores for the Azure and V2

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sensor | Depth Camera Resolution (pixels) | Ideal Operating Range (m) | Sampling Frequency (Hz) | Overall Dimensions (mm) |
|---|---|---|---|---|
| Kinect V2 | 512 × 424 | 0.5–4.5 | ≤30 | 249 × 66 × 67 |
| Kinect Azure | 640 × 576 | 0.5–4 | ≤30 | 103 × 39 × 126 |
| Intel RealSense | 1280 × 720 | 0.3–3 | ≤90 | 90 × 25 × 25 |

| Feature | Description | Sensor | Relevant Joint Centers |
|---|---|---|---|
| DSWP | Displacement of the SpineBase, waist, or pelvis along the x-axis, measured in millimeters. | V2 | SpineBase |
| | | RealSense | Waist |
| | | Azure | Pelvis |
| LPOE | Average joint angle between a normal vector orthogonal to the trunk and shoulder vector projected onto the transverse plane, measured in degrees. | V2 | SpineBase, SpineShoulder, LeftShoulder, RightShoulder, LeftElbow |
| | | RealSense | Waist, left and right collar, LeftShoulder, RightShoulder, LeftElbow |
| | | Azure | Pelvis, SpineUpper *, ShoulderLeft, ShoulderRight, ElbowLeft |
| LE | Average joint angle between the trunk and the upper arm, measured in degrees. | V2 | SpineBase, SpineShoulder, LeftShoulder, LeftElbow |
| | | RealSense | Waist, left and right collar, LeftShoulder, LeftElbow |
| | | Azure | Pelvis, SpineUpper *, ShoulderLeft, ElbowLeft |
| TF | Average joint angle between the trunk and the vertical y-axis, measured in degrees. | V2 | SpineBase, SpineShoulder |
| | | RealSense | Waist, left and right collar |
| | | Azure | Pelvis, SpineUpper * |

| Feature | RealSense ICC | RealSense Confidence Interval Lower Bound | RealSense Confidence Interval Upper Bound | V2 ICC | V2 Confidence Interval Lower Bound | V2 Confidence Interval Upper Bound | Diff. |
|---|---|---|---|---|---|---|---|
| DSWP | 0.25 | 0.11 | 0.44 | 0.82 | 0.72 | 0.90 | 0.57 |
| LPOE | 0.60 | 0.44 | 0.75 | 0.81 | 0.71 | 0.89 | 0.21 |
| LE | 0.38 | 0.22 | 0.57 | 0.60 | 0.44 | 0.75 | 0.22 |
| TF | 0.70 | 0.56 | 0.82 | 0.75 | 0.63 | 0.85 | 0.05 |

| Feature | ICC | Confidence Interval Lower Bound | Confidence Interval Upper Bound | Sensor | Mean | Std |
|---|---|---|---|---|---|---|
| DSWP (cm) | 0.25 | 0.11 | 0.55 | RealSense | 75.11 | 59.59 |
| | | | | V2 | 86.31 | 54.18 |
| LPOE (deg) | 0.57 | 0.07 | 0.84 | RealSense | 79.28 | 9.96 |
| | | | | V2 | 86.99 | 11.51 |
| LE (deg) | 0.13 | 0.10 | 0.40 | RealSense | 36.15 | 9.27 |
| | | | | V2 | 45.77 | 8.67 |
| TF (deg) | 0.63 | 0.06 | 0.85 | RealSense | 21.86 | 8.22 |
| | | | | V2 | 27.79 | 10.51 |

| Feature | Azure ICC | Azure Confidence Interval Lower Bound | Azure Confidence Interval Upper Bound | V2 ICC | V2 Confidence Interval Lower Bound | V2 Confidence Interval Upper Bound | Diff. |
|---|---|---|---|---|---|---|---|
| DSWP | 0.92 | 0.79 | 0.98 | 0.84 | 0.58 | 0.97 | 0.08 |
| LPOE | 0.92 | 0.78 | 0.98 | 0.82 | 0.54 | 0.96 | 0.09 |
| LE | 0.92 | 0.79 | 0.98 | 0.89 | 0.71 | 0.98 | 0.03 |
| TF | 0.92 | 0.78 | 0.98 | 0.92 | 0.79 | 0.98 | 0.01 |

| Feature | ICC | Confidence Interval Lower Bound | Confidence Interval Upper Bound | Sensor | Mean | Std |
|---|---|---|---|---|---|---|
| DSWP (cm) | 0.91 | 0.51 | 0.98 | Azure | 47.75 | 5.38 |
| | | | | V2 | 49.76 | 6.63 |
| LPOE (deg) | 0.67 | −0.16 | 0.94 | Azure | 84.38 | 5.49 |
| | | | | V2 | 90.74 | 4.92 |
| LE (deg) | 0.63 | −0.89 | 0.94 | Azure | 44.83 | 5.20 |
| | | | | V2 | 47.41 | 7.29 |
| TF (deg) | 0.75 | −0.67 | 0.96 | Azure | 30.58 | 7.35 |
| | | | | V2 | 31.54 | 7.49 |

| TAI Items | Description | AZ = V2 | AZ ≠ V2 | Percent Agreement |
|---|---|---|---|---|
| 1 | Distance Transferred | 135 | 15 | 90.0 |
| 2 | Angle of Approach | 149 | 1 | 99.3 |
| 7 | Feet Position | 89 | 61 | 59.3 |
| 8 | Scoot Forward | 135 | 15 | 90.0 |
| 9 | Leading Arm Before Transfer | 117 | 33 | 78.0 |
| 10 | Push-off Hand Grip | 133 | 17 | 88.7 |
| 11 | Leading Hand Grip | 107 | 43 | 71.3 |
| 12 | Leading Arm After Transfer | 119 | 31 | 79.3 |
| 13 | Trunk Lean | 134 | 16 | 89.3 |
| 14 | Smooth Transfer | 149 | 1 | 99.3 |
| 15 | Stable Landing | 150 | 0 | 100.0 |
| Average | | 128.8 | 21.2 | 85.9 |
| STD | | 19.2 | 19.2 | 12.8 |
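The Percent Agreement column and the Average and STD rows of the table above can be reproduced from the per-item counts. The sketch below assumes that percent agreement is the agreeing count divided by the total of the two count columns (150 per item), and that STD is the sample standard deviation; both assumptions are inferred from the tabulated numbers rather than stated in the text, and the variable and function names are illustrative.

```python
from statistics import mean, stdev

# (agree, disagree) counts per TAI item, copied from the table above.
counts = {
    1: (135, 15), 2: (149, 1), 7: (89, 61), 8: (135, 15), 9: (117, 33),
    10: (133, 17), 11: (107, 43), 12: (119, 31), 13: (134, 16),
    14: (149, 1), 15: (150, 0),
}

def percent_agreement(agree, disagree):
    """Share of paired predictions on which the two sensors agree, in percent."""
    return 100.0 * agree / (agree + disagree)

percents = {item: percent_agreement(a, d) for item, (a, d) in counts.items()}
agree_counts = [a for a, _ in counts.values()]

summary = {
    "mean_agree": mean(agree_counts),
    "std_agree": stdev(agree_counts),            # sample standard deviation
    "mean_percent": mean(list(percents.values())),
    "std_percent": stdev(list(percents.values())),
}

for name, value in summary.items():
    print(f"{name}: {value:.1f}")
```

Rounded to one decimal place, these values match the Average and STD rows; using the population standard deviation instead would not reproduce the tabulated 19.2.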

| Items | Good, V2 | Good, AZ | Improper: Feet, V2 | Improper: Feet, AZ | Improper: Trunk, V2 | Improper: Trunk, AZ | Improper: Arm, V2 | Improper: Arm, AZ | Improper: Fist, V2 | Improper: Fist, AZ |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 100.0 | 93.3 | 100.0 | 96.7 | 100.0 | 86.7 | 100.0 | 90.0 | 100.0 | 83.3 |
| 2 | 100.0 | 100.0 | 100.0 | 96.7 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| 7 | 90.0 | 80.0 | 10.0 | 23.3 | 83.3 | 60.0 | 93.3 | 63.3 | 80.0 | 73.3 |
| 8 | 96.7 | 100.0 | 70.0 | 100.0 | 93.3 | 96.7 | 96.7 | 100.0 | 100.0 | 96.7 |
| 9 | 80.0 | 96.7 | 86.7 | 100.0 | 100.0 | 100.0 | 66.7 | 20.0 | 80.0 | 100.0 |
| 10 | 96.7 | 96.7 | 93.3 | 93.3 | 100.0 | 83.3 | 96.7 | 93.3 | 90.0 | 100.0 |
| 11 | 83.3 | 83.3 | 96.7 | 63.3 | 73.3 | 90.0 | 90.0 | 100.0 | 23.3 | 20.0 |
| 12 | 76.7 | 96.7 | 80.0 | 100.0 | 100.0 | 100.0 | 33.3 | 0.0 | 76.7 | 100.0 |
| 13 | 100.0 | 96.7 | 100.0 | 93.3 | 0.0 | 40.0 | 100.0 | 100.0 | 100.0 | 96.7 |
| 14 | 100.0 | 100.0 | 96.7 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| 15 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Koontz, A.M.; Neti, A.; Chung, C.-S.; Ayiluri, N.; Slavens, B.A.; Davis, C.G.; Wei, L. Reliability of 3D Depth Motion Sensors for Capturing Upper Body Motions and Assessing the Quality of Wheelchair Transfers. Sensors 2022, 22, 4977. https://doi.org/10.3390/s22134977
