1. Introduction

Anomalous diffusion processes differ from regular diffusion in that the dispersion of particles proceeds faster (superdiffusion) or slower (subdiffusion) than in the regular case. Physical anomalous diffusion processes are observed, for instance, in porous media [1,2], in biological tissues [3,4,5], or in chemical systems [6]. Other examples are superdiffusive processes such as target search (for instance foraging of albatrosses) [7,8,9] or turbulent diffusion [10,11]. To describe anomalous diffusive processes analytically, a number of different evolution equations based on fractional derivatives [12,13,14,15,16,17,18,19,20] have been developed. They describe the spreading of the probability to find a particle at a certain distance from the origin of the diffusive process. While some descriptions use non-linear dependencies on the probability density functions (PDF) (for instance see E. K. Lenzi [21,22]), we here focus on the space-fractional diffusion equation

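$$\frac{\partial}{\partial t}P(x,t) = D\,\frac{\partial^{\alpha}}{\partial x^{\alpha}}P(x,t), \qquad (1)$$

where the PDF $P(x,t)$ is defined on $x\in\mathbb{R}$ and $t\ge 0$, and $D$ is the diffusion constant.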

Here we focus on the space-fractional diffusion equation not as a modeling tool for a highly interesting class of superdiffusion processes with remarkable features [23,24], but as a bridge linking the usually unrelated regular diffusion equation (a paradigm for fully irreversible processes) to the wave equation (a paradigm for completely reversible processes). To this end the parameter α varies between 1 (the (half) wave case) and 2 (the diffusion case).

This bridge thus provides not only a continuous mapping between diffusion and waves but also a continuously ordered sequence of equations between them. This in turn implies a continuous sequence of PDFs running from the Gaussian density to the delta function limit of the wave equation. That sequence provides an inherent ordering in which we can compare any two members of the bridging PDF family and say which one is “closer” to pure diffusion. In that sense we can say which PDF is “closer” to a Gaussian. Such an ordering is the most rudimentary but essential property of the notion of a distance, without specifying any metrical property. We will refer to this ordering as the “bridge ordering”.

We have been exploring this specific bridging domain between irreversible and reversible processes and found that it has some surprising properties with broader implications. One such property is known as the entropy production paradox [23,24,25,26]. Contrary to the ordering implied by the fractional exponent α (the bridge ordering), the entropy production does not decrease as the reversible end of the bridging regime is approached. The same behavior was observed for a different one-parameter family of fractional differential equations, based on time-fractional diffusion equations, which also connects the diffusion equation to the wave equation [25,26]. Over several papers [23,24,25,26] it became increasingly clear that both distinct families exhibit remarkably comparable behaviors from a “thermodynamic” viewpoint. In particular, entropies (classical, Tsallis, and Rényi) and their rates exhibited common features, suggesting that the bridge ordering of the fractional diffusion equations is not compatible with the increase or decrease of these entropies.

We thus turned to the problem of ordering on a more fundamental basis. Ordering is not an inherent property of functions or vectors. One can of course impose an ordering, but generally there is no absolute ranking of functions or finite-dimensional vectors. Any imposed ordering can be altered simply by changing how the functions or vectors are mapped to the real numbers. Under one mapping scheme a given pair of functions is ranked one way, while under another the ranking can just as easily be reversed. This reversal can be illustrated with two row vectors whose ranking in terms of one ℓ-norm is the opposite of their ranking in terms of another ℓ-norm. The ordering is reversed without altering the vectors in any way, simply by changing the ℓ-norm. Generally, if some exterior criterion imposes a particular mapping scheme, then the ordering may only make sense in terms of that particular mapping scheme.
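The reversal is easy to reproduce numerically. The sketch below uses two hypothetical vectors of our own choosing (they are not the example vectors of the original text) and compares their ℓ1, ℓ2, and ℓ∞ norms; `lp_norm` is a helper written only for this illustration.

```python
def lp_norm(v, p):
    """Return the l_p norm of a finite vector; p = float('inf') gives the max norm."""
    if p == float("inf"):
        return max(abs(x) for x in v)
    return sum(abs(x) ** p for x in v) ** (1.0 / p)


# Hypothetical vectors, chosen only to illustrate the reversal.
a = (1.0, 1.0)
b = (1.5, 0.0)

for p in (1, 2, float("inf")):
    norm_a, norm_b = lp_norm(a, p), lp_norm(b, p)
    verdict = "a > b" if norm_a > norm_b else "a < b"
    print(f"p = {p}: |a| = {norm_a:.3f}, |b| = {norm_b:.3f} -> {verdict}")
```

Under the ℓ1 norm the first vector ranks above the second (2.000 versus 1.500), whereas under the ℓ2 and ℓ∞ norms the ranking is reversed, although neither vector has changed.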

Ordering may also be used as a rudimentary form of distance, serving as an alternative to discussing “near” and “far” by recognizing that things lying between an object and a common reference are, due to the ordering, “closer” to the reference, independent of any metrical structure. Of course metric structure, like ordering, is not inherent between functions or vectors either. But ordering will often serve to discuss distance in a broader context, as frequently happens in physical applications. For example, in thermodynamical applications one may speak of physical systems being near or far from equilibrium, or of one process being more “irreversible” than another. Obviously these vague statements need specific frameworks to avoid unanticipated reversals arising from changes in context.

In this paper we analyze the ordering implied by the Kullback–Leibler entropy, or relative entropy, and by its extension in the non-extensive framework, the Tsallis relative entropy. In practice we compute the relative entropy and its Tsallis generalization as a function of the bridging parameter α. If the resulting graphs show no maxima or minima over the bridging interval, then the ordering is consistent between the relative entropy picture and the bridging picture. Tsallis relative entropies come into play because the Kullback–Leibler entropy can diverge when Gaussian PDFs are compared to the fat-tailed distributions emerging in the bridging regime. This problem does not arise for Tsallis relative entropies, provided the parameter q is restricted to a suitable range. Moreover, the broader class of relative entropies can convey robustness in terms of the basic notion of entropy. Furthermore, insofar as the various relative entropies are physically consistent, one should expect them to bear on the physically meaningful question of irreversibility versus reversibility.
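The consistency criterion can be phrased as a simple test on a sampled curve: if a relative entropy tabulated on a grid of α values has no interior maximum or minimum, it ranks the PDFs in the same (or exactly the reversed) order as α. The following Python sketch implements that test. The helper names and the sample numbers are hypothetical and are not results of this paper.

```python
def is_strictly_monotone(values):
    """True if consecutive differences all share one sign (no interior extremum)."""
    diffs = [b - a for a, b in zip(values, values[1:])]
    return all(d > 0 for d in diffs) or all(d < 0 for d in diffs)


def ordering_consistent(alphas, measure):
    """Check whether ranking by `measure` reproduces the bridge ordering by alpha
    (possibly reversed): sort the samples by alpha and test for monotonicity."""
    ordered = [m for _, m in sorted(zip(alphas, measure))]
    return is_strictly_monotone(ordered)


# Hypothetical sampled curves (illustrative numbers only).
alphas = [1.2, 1.4, 1.6, 1.8, 2.0]
decreasing = [0.9, 0.6, 0.35, 0.15, 0.0]   # no extremum: ordering consistent
with_maximum = [0.2, 0.5, 0.7, 0.6, 0.4]   # interior maximum: ordering broken

print(ordering_consistent(alphas, decreasing))
print(ordering_consistent(alphas, with_maximum))
```

Sorting by α first means the samples may be supplied in any order; only the shape of the curve along α matters.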

The strategy of this paper is to specify the equations of the bridging regime and the resulting PDFs. We limit the discussion to the space-fractional case for simplicity and brevity. The relevant properties of the family of PDFs, including their ordering with respect to the parameter α, will be presented. We define the relative entropy and its Tsallis generalization, and then set up the ordering in terms of the relative entropy using the irreversible (Gaussian) case as the reference PDF. This is the only sensible choice, because at the wave limit the family of bridging PDFs goes to a delta function, which cannot serve as a reference density. The cases where the relative entropy diverges and where it converges are outlined. Finally, the graphs comparing the orderings are presented.

2. Introduction to Relative Entropies

What is now known as the Kullback entropy [27], Kullback–Leibler entropy, relative entropy, or information loss is a measure to compare two probability distributions given on the same domain. While it is an information-theoretic concept, it is also intimately connected to properties of thermodynamic systems [28]. The relative entropy is used, for instance, in reliability analysis [29], which is important in robust dynamic pricing problems [30] and keystroke dynamics [31], to compare dynamical systems [32] and Markov models [33], and to measure their complexity, called thermodynamic depth [34,35]. Furthermore, the relative entropy is an important measure in quantum information theory [36,37], quantum mechanics [38,39], computer graphics [40], and ecology [41].

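For a probability distribution $p$ and a reference distribution $p^R$, the Kullback–Leibler entropy $K[p,p^R]$ is here defined as [27,28,42]

$$K[p,p^R] = \int p(x)\,\ln\frac{p(x)}{p^R(x)}\,dx = \int p(x)\ln p(x)\,dx - \int p(x)\ln p^R(x)\,dx,$$

where the first term is the (negative) Shannon entropy of $p$ and $-\int p(x)\ln p^R(x)\,dx$ represents the cross entropy. It is a (non-negative) measure for the information gain possible if a probability distribution $p$ is described by an encoding optimal for $p$ rather than for the reference distribution $p^R$.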

In 1998 C. Tsallis introduced a generalization of the Kullback–Leibler entropy in the framework of non-extensive thermodynamics [42,43,44], the Tsallis relative entropy or q-relative entropy, which for a distribution $p$ and a reference distribution $p^R$ is given as

$$K_q[p,p^R] = \int p(x)\,\frac{\left(p(x)/p^R(x)\right)^{q-1}-1}{q-1}\,dx.$$

For uniform $p^R$ the Tsallis relative entropy reduces to the (negative) Tsallis entropy $S_q$; for details see [24].

Reference [45] indicates that both relative entropies, the Kullback–Leibler and the Tsallis relative entropy, are useful for finding approximate time-dependent solutions of fractional diffusion (or Fokker–Planck) equations. In the limit q → 1 the Tsallis relative entropy becomes the Kullback–Leibler entropy. Neither relative entropy is symmetric: exchanging the distribution and the reference distribution in general changes the value of the Kullback–Leibler entropy as well as that of the Tsallis relative entropy.
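For readers who want to experiment, both definitions can be evaluated for discrete distributions, where the integrals become sums. This is a minimal sketch with hypothetical three-point distributions and function names of our own; it illustrates the asymmetry of the measures and the approach of the Tsallis relative entropy to the Kullback–Leibler entropy as q → 1.

```python
import math


def kullback_leibler(p, r):
    """K[p, r] = sum_i p_i ln(p_i / r_i); terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log(pi / ri) for pi, ri in zip(p, r) if pi > 0)


def tsallis_relative(p, r, q):
    """K_q[p, r] = sum_i p_i ((p_i / r_i)**(q - 1) - 1) / (q - 1) for q != 1.

    Terms with p_i = 0 are skipped, which is exact for q > 0."""
    if q == 1:
        raise ValueError("q = 1 is the Kullback-Leibler limit")
    total = sum(pi * ((pi / ri) ** (q - 1) - 1) for pi, ri in zip(p, r) if pi > 0)
    return total / (q - 1)


p = [0.5, 0.3, 0.2]   # hypothetical distribution
r = [0.4, 0.4, 0.2]   # hypothetical reference distribution

print(kullback_leibler(p, r), kullback_leibler(r, p))  # the two values differ
for q in (0.5, 0.9, 0.99, 0.999):
    print(q, tsallis_relative(p, r, q))  # approaches kullback_leibler(p, r)
```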

3. Space-Fractional Diffusion Equation and Stable Distributions

In the one-dimensional space-fractional diffusion equation (1), the fractional differential operator $\partial^{\alpha}/\partial x^{\alpha}$ is defined via the Fourier transformation.

Choosing the initial distribution to be the δ-function,

$$P(x,0) = \delta(x), \qquad (5)$$

we can determine the solution of the space-fractional diffusion equation (1) in terms of a stable distribution $S(x;\alpha,\beta,\gamma,\delta;n)$, using the definition given in [46], and represent it as a stable distribution (6) whose scale parameter γ grows with time while the remaining parameters are chosen appropriately.

We stress that (6) is a solution to the fractional diffusion equation (1) only for this particular choice of parameters. In the fully asymmetric case of interest here, with β = 1, we find in the limit α → 1 that the scale parameter (dispersion indicator) of the stable distribution vanishes, while its mode (i.e., the maximum of the distribution) moves linearly in time. This represents a δ-function moving in time, which is the one-sided solution of the wave equation with the initial distribution (5).

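The stable distribution $S(x;\alpha,\beta,\gamma,\delta;n)$ is defined on the whole real axis with $0<\alpha\le 2$, $-1\le\beta\le 1$, $\gamma>0$, and $\delta\in\mathbb{R}$ [46]. Its definition is based on its characteristic function via a Fourier transformation. In the literature different definitions exist for the same choice of the parameters. These are indexed by n [46]; here we will use only those with n = 0 and n = 1. For α ≠ 1 the characteristic function is given in the parametrization n = 1 by

$$\mathrm{E}\left[e^{iuX}\right] = \exp\left(-\gamma^{\alpha}|u|^{\alpha}\left[1 - i\beta\tan\frac{\pi\alpha}{2}\,\mathrm{sign}(u)\right] + i\delta u\right), \qquad (11)$$

while for n = 0 the characteristic function is

$$\mathrm{E}\left[e^{iuX}\right] = \exp\left(-\gamma^{\alpha}|u|^{\alpha}\left[1 + i\beta\tan\frac{\pi\alpha}{2}\,\mathrm{sign}(u)\left(|\gamma u|^{1-\alpha}-1\right)\right] + i\delta u\right). \qquad (12)$$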

In these definitions the characteristic exponent α (or index of stability) and the skewness parameter β determine the form of the distribution. The scale parameter γ is a measure for the dispersion of the distribution, and finally, the location parameter δ is related to the position of the mean and the mode.
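Because stable densities are defined through their characteristic functions, they can be evaluated by numerical Fourier inversion. The sketch below assumes that [46] follows Nolan's conventions and implements the n = 1 characteristic function for α ≠ 1, $\exp(-\gamma^{\alpha}|u|^{\alpha}[1-i\beta\tan(\pi\alpha/2)\,\mathrm{sign}\,u] + i\delta u)$, together with the inversion formula $f(x)=\pi^{-1}\int_0^\infty \mathrm{Re}[\varphi(u)e^{-iux}]\,du$. The function names, the truncation point, and the Simpson grid are illustrative choices, not the numerical scheme used for the figures of this paper.

```python
import cmath
import math


def char_fn_s1(u, alpha, beta, gamma=1.0, delta=0.0):
    """E[exp(iuX)] in Nolan's S1 (n = 1) parametrization, valid for alpha != 1."""
    if u == 0:
        return 1.0 + 0.0j
    sign_u = 1.0 if u > 0 else -1.0
    skew = 1.0 - 1j * beta * math.tan(math.pi * alpha / 2) * sign_u
    return cmath.exp(-((gamma * abs(u)) ** alpha) * skew + 1j * delta * u)


def stable_pdf(x, alpha, beta, gamma=1.0, delta=0.0, n=4000):
    """Stable density via f(x) = (1/pi) * int_0^inf Re[phi(u) exp(-iux)] du.

    The integral is truncated where |phi(u)| = exp(-40) and evaluated with
    the composite Simpson rule on n (even) panels."""
    if alpha == 1:
        raise ValueError("this sketch covers alpha != 1 only")
    if n % 2:
        n += 1
    u_max = 40.0 ** (1.0 / alpha) / gamma
    h = u_max / n

    def integrand(u):
        return (char_fn_s1(u, alpha, beta, gamma, delta) * cmath.exp(-1j * u * x)).real

    s = integrand(0.0) + integrand(u_max)
    s += 4 * sum(integrand((2 * k - 1) * h) for k in range(1, n // 2 + 1))
    s += 2 * sum(integrand(2 * k * h) for k in range(1, n // 2))
    return s * h / (3 * math.pi)


# alpha = 2: Gaussian with variance 2 gamma^2, density 1/(2 sqrt(pi)) at x = 0.
print(stable_pdf(0.0, 2.0, 0.0), 1 / (2 * math.sqrt(math.pi)))
# Fully skewed case beta = 1: heavy right tail, light left tail.
print(stable_pdf(20.0, 1.5, 1.0), stable_pdf(-20.0, 1.5, 1.0))
```

For α = 2 the result agrees with the Gaussian density of variance 2γ², and for β = 1 the density at x = 20 clearly exceeds that at x = −20, reflecting the heavy right tail.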

In the parametrizations n = 0 and n = 1 the parameters α, β, and γ are the same, but the location parameter δ differs. In order to distinguish between the two parametrizations, we supplement the location parameter δ with the index n. Then, for α ≠ 1, we have the relation

$$\delta_0 = \delta_1 + \beta\gamma\tan\frac{\pi\alpha}{2},$$

which allows one to switch between the two parametrizations.
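The conversion between the two parametrizations reduces to a one-line shift. This sketch assumes Nolan's relation δ_0 = δ_1 + βγ tan(πα/2) for α ≠ 1; the function names are hypothetical.

```python
import math


def delta0_from_delta1(delta1, alpha, beta, gamma):
    """Location parameter for n = 0 from that for n = 1 (alpha != 1):
    delta_0 = delta_1 + beta * gamma * tan(pi * alpha / 2)."""
    if alpha == 1:
        raise ValueError("alpha = 1 requires a different (logarithmic) relation")
    return delta1 + beta * gamma * math.tan(math.pi * alpha / 2)


def delta1_from_delta0(delta0, alpha, beta, gamma):
    """Inverse map: location parameter for n = 1 from that for n = 0 (alpha != 1)."""
    if alpha == 1:
        raise ValueError("alpha = 1 requires a different (logarithmic) relation")
    return delta0 - beta * gamma * math.tan(math.pi * alpha / 2)


# Fully skewed example: alpha = 1.5, beta = 1, gamma = 1 and delta_1 = 0.
d0 = delta0_from_delta1(0.0, 1.5, 1.0, 1.0)
print(d0)  # tan(3 pi / 4) = -1, so delta_0 is -1 up to rounding
```

In this example the n = 0 location lies one scale unit to the left of the n = 1 location, which is where the mean sits; for β = 0 both parametrizations coincide.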

Furthermore, the following useful mathematical properties of stable distributions are known [24,46]:

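The stable distribution rescales as $S(x;\alpha,\beta,\gamma,\delta;n) = \gamma^{-1}\,S\left((x-\delta)/\gamma;\alpha,\beta,1,0;n\right)$, where for n = 1 this requires α ≠ 1.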

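In both parametrizations, stable distributions satisfy the reflection property [47]

$$S(-x;\alpha,\beta,\gamma,\delta;n) = S(x;\alpha,-\beta,\gamma,-\delta;n). \qquad (15)$$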

For α > 1, the position of the mean μ of the distribution is given by

$$\mu = \delta_1 = \delta_0 - \beta\gamma\tan\frac{\pi\alpha}{2}. \qquad (16)$$

Stable distributions are unimodal. The mode depends on the parametrization and on the location parameter. For n = 0 the mode differs from the location parameter δ_0 by γ times a function of α and β (17); for the case of interest below, β = 1, this function stays bounded between 0 and 1 and can be determined numerically.

For α < 2, stable distributions do not have a finite second moment, i.e., a finite variance.

From (16) and (17) we find that in the n = 1 parametrization the location parameter indicates the mean of the distribution, whereas in the n = 0 parametrization it indicates where the mode, and thus the bulk of the probability, is located. Note that for β = 0 the location parameter gives the mode.

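Although the inverse Fourier transforms of (11) and (12) are in general not known in closed form, it is possible to give the asymptotic tail behavior [46]. In general, for α < 2 and −1 < β < 1, the left and the right tail follow asymptotic power laws:

$$S(x;\alpha,\beta,\gamma,\delta;n) \simeq \alpha\,\gamma^{\alpha}c_{\alpha}\,(1\pm\beta)\,|x|^{-(1+\alpha)} \quad \text{for } x\to\pm\infty, \qquad (18)$$

with $c_\alpha = \sin(\pi\alpha/2)\,\Gamma(\alpha)/\pi$ and Γ being the Gamma-function. In the fully asymmetric cases, the left tail for β = 1 and the right tail for β = −1 show a different behavior. Here we give the tail behavior for the case β = 1, as this case represents the solution of the space-fractional diffusion equation (1): the right tail still follows (18) with the prefactor $2\alpha\gamma^{\alpha}c_\alpha$, whereas the left tail decays faster than any power law, as $A\,|x|^{(2-\alpha)/(2(\alpha-1))}\exp\left(-B\,|x|^{\alpha/(\alpha-1)}\right)$ for $x\to-\infty$, with constants A and B which are both positive. The β = −1 case can be obtained via the reflection property (15).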
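The power-law tail is straightforward to evaluate. The sketch below assumes Nolan's form of the asymptotic density tail, αγ^α c_α (1 + β) x^{−(1+α)} for x → +∞ with c_α = sin(πα/2)Γ(α)/π. It does not model the faster-than-power-law left tail of the β = 1 case, whose constants are not given here; the function names are our own.

```python
import math


def c_alpha(alpha):
    """Tail constant c_alpha = sin(pi * alpha / 2) * Gamma(alpha) / pi."""
    return math.sin(math.pi * alpha / 2) * math.gamma(alpha) / math.pi


def power_law_tail(x, alpha, beta, gamma=1.0):
    """Leading term alpha * gamma**alpha * c_alpha * (1 + beta) * x**-(1 + alpha)
    of a stable density for x -> +inf (alpha < 2, -1 < beta <= 1).
    The left tail follows by reflection: beta -> -beta, x -> -x."""
    return alpha * gamma ** alpha * c_alpha(alpha) * (1 + beta) * x ** (-(1 + alpha))


print(c_alpha(1.5))                    # equals 1 / (2 sqrt(2 pi)) for alpha = 3/2
print(c_alpha(2.0))                    # vanishes up to rounding: no power-law tail
print(power_law_tail(20.0, 1.5, 1.0))  # fully skewed case beta = 1, right tail
```

The vanishing of c_α at α = 2 is consistent with the Gaussian limit, and for β = 1 the left-tail power law (factor 1 − β) vanishes as well, which is why a different, lighter left tail appears.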

4. Reference Distribution

The aim of this paper is to analyze the Kullback–Leibler entropy and its Tsallis generalization as a means to establish an ordering of the solutions $P_\alpha(x,t)$ of (1) for different α, and in particular to study its compatibility with the bridge ordering expressed by α. As a reference we use the solution to the α = 2 regular diffusion case, for which the PDF is the well-known Gaussian. For α = 2 the space-fractional diffusion equation (1) reduces to the normal diffusion equation. Correspondingly, the stable distribution becomes the (Gaussian) normal distribution

$$S(x;2,\beta,\gamma,\delta;n) = \mathcal{N}(\delta,\,2\gamma^{2}),$$

where $\mathcal{N}(\mu,\sigma^{2})$ is the normal distribution with mean μ and variance σ²; here μ = δ and σ² = 2γ², thus the standard deviation is σ = √2 γ [46].

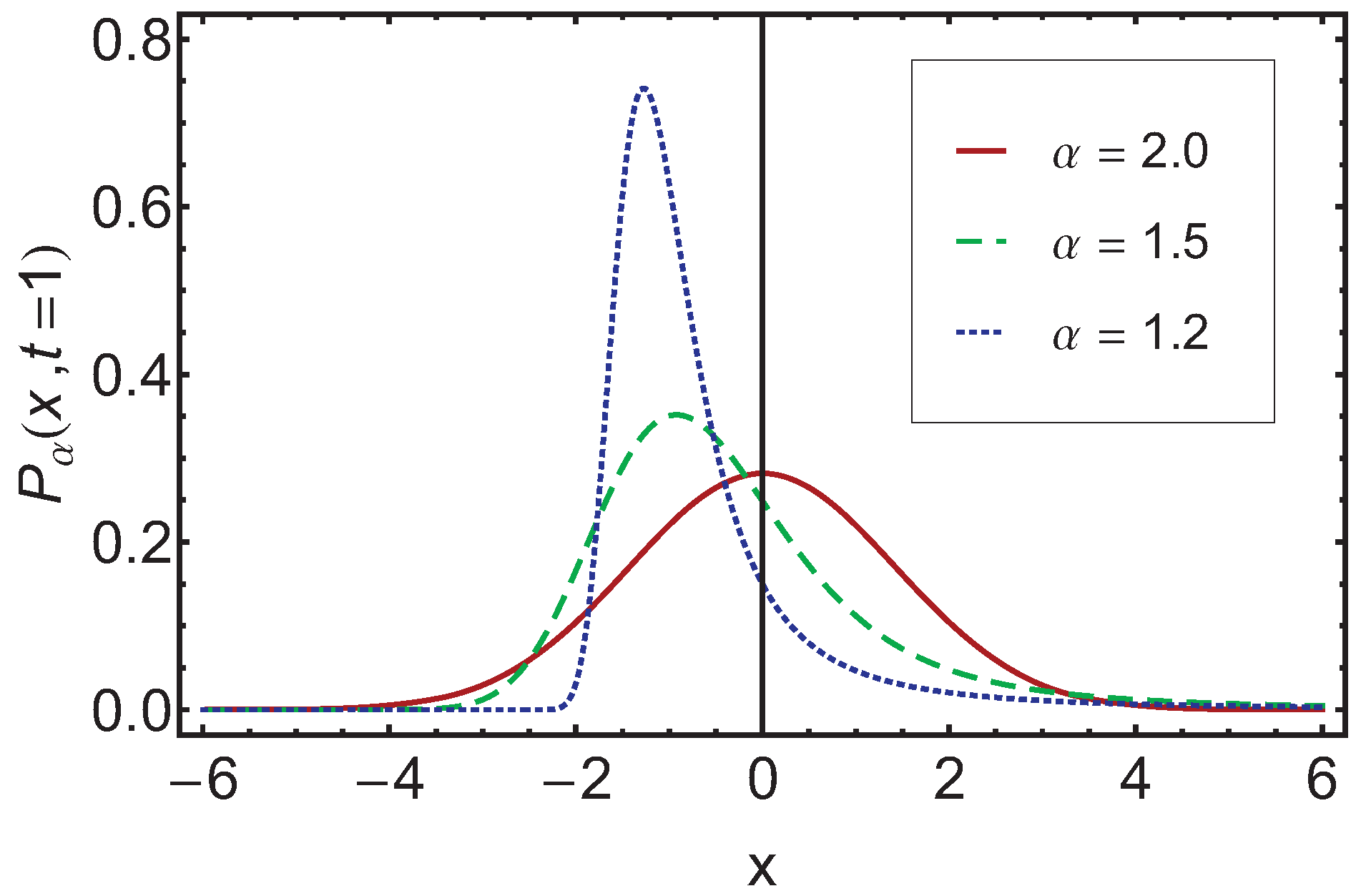

In Figure 1 a comparison plot is given, in which the stable distribution is depicted over x for different values of α. Note that fixed values of the diffusion constant D and of the time t are chosen.

Figure 1.

In this graph the solution is shown over x for different values of α. Note that for α = 2, i.e., the Gaussian case, a shorthand notation is used in the following.


5. Kullback–Leibler Entropy

In this section we use the Kullback–Leibler entropy as a comparison measure for the solutions of the space-fractional diffusion equation for different α. We thus aim to calculate the Kullback–Leibler entropy of the stable solution relative to the Gaussian reference, and vice versa.

While this poses no problem for standard distributions falling off fast enough as x approaches ±∞, we are here concerned with heavy-tailed distributions, for which we already know that higher moments do not exist. Thus, we first have to address the question whether the Kullback–Leibler entropy exists at all. We discuss the convergence question using a generic Gaussian and a generic stable distribution with an as yet unspecified scale parameter γ. At the end we replace γ by the time-dependent scale parameter of the solution.

As our aim is to establish an ordering of the distributions obtained for different α, we are interested in the Kullback–Leibler entropy $K[P_\alpha,P_2]$ of the stable solution $P_\alpha$ relative to the Gaussian $P_2$ as well as in $K[P_2,P_\alpha]$. We start with $K[P_\alpha,P_2]$ and find

$$K[P_\alpha,P_2] = \int P_\alpha\ln P_\alpha\,dx - \int P_\alpha\ln P_2\,dx. \qquad (22)$$

The first integral of Equation (22) converges as shown in [24], whereas we will show below that the second integral does not converge, and thus $K[P_\alpha,P_2]$ does not exist. We mention here that the use of escort distributions provides an interesting route to the analysis of heavy-tailed distributions in the context of the Kullback–Leibler and the q-relative entropies; for details see [48]. Their implications for the ordering of the distributions and its comparison to the bridge ordering are beyond the scope of this paper, but certainly provide an interesting starting point for further research.

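For this and the following analysis we divide the integrals into three parts: the left tail, the middle part, and the right tail. Due to the known asymptotic tail behavior (18)–(20), the left and right tail integrals can be analyzed analytically, whereas the middle part is always finite and has to be determined numerically. For an arbitrary integrand f we split the integration domain as follows:

$$\int_{-\infty}^{\infty} f(x)\,dx = \int_{-\infty}^{x_l} f(x)\,dx + \int_{x_l}^{x_r} f(x)\,dx + \int_{x_r}^{\infty} f(x)\,dx, \qquad (23)$$

where $x_l < x_r$ are chosen such that, for the numerical treatment, the stable distributions are well approximated by their asymptotic tail behavior beyond them. Accordingly, the Kullback–Leibler entropy decomposes as

$$K = K^{\mathrm{left}} + K^{\mathrm{mid}} + K^{\mathrm{right}}, \qquad (24)$$

with the three contributions defined by the corresponding integration ranges in (23).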
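The three-part split can be sketched in a few lines: the middle part is integrated numerically, while the two tails are added from closed-form integrals of the asymptotic behavior. As a stand-in we use the Cauchy density, itself the symmetric stable law with α = 1, because the integral of its asymptotic tail, 1/(π|x|), is elementary. This illustrates the splitting only; it is not the numerical code behind the figures, and all names are hypothetical.

```python
import math


def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = f(a) + f(b)
    s += 4 * sum(f(a + (2 * k - 1) * h) for k in range(1, n // 2 + 1))
    s += 2 * sum(f(a + 2 * k * h) for k in range(1, n // 2))
    return s * h / 3


def split_integral(f, x_l, x_r, left_tail, right_tail, n=2000):
    """Integral over the real line as analytic left tail (-inf, x_l]
    + numerical middle part [x_l, x_r] + analytic right tail [x_r, inf)."""
    return left_tail(x_l) + simpson(f, x_l, x_r, n) + right_tail(x_r)


def cauchy_pdf(x):
    """Cauchy density: the symmetric stable law with alpha = 1, gamma = 1."""
    return 1.0 / (math.pi * (1.0 + x * x))


def cauchy_tail_integral(x_cut):
    """Integral of the asymptotic tail 1 / (pi x^2) beyond |x| = |x_cut|."""
    return 1.0 / (math.pi * abs(x_cut))


total = split_integral(cauchy_pdf, -100.0, 100.0,
                       cauchy_tail_integral, cauchy_tail_integral, n=20000)
print(total)  # close to 1, the normalization of the density
```

Moving the cut points outward reduces the error of the asymptotic tail approximation at the price of a longer numerical middle part, which is the trade-off behind the choice of x_l and x_r.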

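We start with the Kullback–Leibler entropy of the stable solution relative to the Gaussian. Taking the logarithm of the Gaussian $P_2$ with mean μ and variance σ², we find

$$\ln P_2(x) = -\tfrac{1}{2}\ln\left(2\pi\sigma^{2}\right) - \frac{(x-\mu)^{2}}{2\sigma^{2}},$$

which, together with the power-law right tail of the stable solution, $P_\alpha \simeq C\,x^{-(1+\alpha)}$ with C > 0, leads to

$$-\int_{x_r}^{\infty}P_\alpha\ln P_2\,dx \simeq C\int_{x_r}^{\infty}x^{-(1+\alpha)}\left[\tfrac{1}{2}\ln\left(2\pi\sigma^{2}\right) + \frac{(x-\mu)^{2}}{2\sigma^{2}}\right]dx \qquad (26)$$

for the right tail integral. The range of α here is 1 < α < 2; therefore, the exponent of x in the leading term of the integrand in (26) is 1 − α > −1, and thus the integral does not converge. This is compatible with the non-existence of the second moment for the stable distributions discussed here. As a result, we cannot use this Kullback–Leibler entropy as a basis for defining a distance measure on the distributions in the transition regime between reversible and irreversible processes.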
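The divergence can be made tangible by evaluating the partial integral of the leading term x^{1−α} up to a growing cutoff X. The sketch below drops all constant prefactors (the tail amplitude and 1/(2σ²)) and uses α = 1.5 and x_r = 10 as illustrative values; the function name is our own.

```python
def tail_second_moment_partial(alpha, x_r, upper):
    """Closed-form partial integral of x**(1 - alpha) from x_r to upper (1 < alpha < 2).

    This is the leading behaviour of the right-tail contribution of
    -int P_alpha ln P_2 dx: a power-law tail x**-(1 + alpha) times the
    quadratic term of the Gaussian logarithm, constant prefactors dropped."""
    if not 1.0 < alpha < 2.0:
        raise ValueError("expects 1 < alpha < 2")
    return (upper ** (2 - alpha) - x_r ** (2 - alpha)) / (2 - alpha)


alpha, x_r = 1.5, 10.0
partials = [tail_second_moment_partial(alpha, x_r, X) for X in (1e2, 1e4, 1e6, 1e8)]
print(partials)  # keeps growing like X**(2 - alpha): no finite limit
```

Each hundredfold increase of the cutoff multiplies the partial integral by roughly 100^{1/2} = 10, so no finite value is approached.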

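As the Kullback–Leibler entropy is an asymmetric measure, the non-existence of the Kullback–Leibler entropy of the stable solution relative to the Gaussian does not imply the non-existence of the reverse quantity, the Kullback–Leibler entropy of the Gaussian $P_2$ relative to the stable solution $P_\alpha$, which we now analyze:

$$K[P_2,P_\alpha] = \int P_2\ln P_2\,dx - \int P_2\ln P_\alpha\,dx. \qquad (27)$$

The first integral yields the negative Shannon entropy of a Gaussian with variance σ², $-\tfrac{1}{2}\ln\left(2\pi e\sigma^{2}\right)$, which is finite.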

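For the further discussion below we recall that

$$\int_a^\infty x^{n}\,e^{-b x^{m}}\,dx < \infty \qquad (29)$$

for any given $n\in\mathbb{R}$, $b>0$, and $m>0$, with $a>0$ arbitrary but fixed. The logarithm $\ln P_\alpha$ within the second integral of (27) is obtained from the asymptotic tail behavior. For the right tail we get $\ln P_\alpha \simeq \ln C - (1+\alpha)\ln x$, with C > 0 the amplitude of the power-law tail, so that the right tail integral of $P_2\ln P_\alpha$ converges according to (29), since $\ln x < x$. For the left tail we have $\ln P_\alpha \simeq -B|x|^{\alpha/(\alpha-1)}$ with B > 0, up to logarithmic corrections. Multiplied with the Gaussian $P_2$, one finds from (29) that the integrals converge for all α in the bridging regime 1 < α < 2.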
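The fact that a stretched-exponential factor e^{−bx^m} beats any power x^n can be checked numerically for a representative case. The sketch below takes a = 0 and n > −1, where the closed form Γ((n+1)/m)/(m b^{(n+1)/m}) is available, and shows that truncated Simpson integrals settle at that value. The parameter values and names are illustrative.

```python
import math


def simpson(f, a, b, n=4000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = f(a) + f(b)
    s += 4 * sum(f(a + (2 * k - 1) * h) for k in range(1, n // 2 + 1))
    s += 2 * sum(f(a + 2 * k * h) for k in range(1, n // 2))
    return s * h / 3


def power_times_stretched_exp(n_pow, b_coef, m_exp):
    """Closed form of int_0^inf x**n_pow * exp(-b_coef * x**m_exp) dx
    for n_pow > -1, b_coef > 0, m_exp > 0."""
    k = (n_pow + 1) / m_exp
    return math.gamma(k) / (m_exp * b_coef ** k)


n_pow, b_coef, m_exp = 5.0, 1.0, 2.0   # illustrative: x**5 times a Gaussian factor


def integrand(x):
    return x ** n_pow * math.exp(-b_coef * x ** m_exp)


exact = power_times_stretched_exp(n_pow, b_coef, m_exp)   # Gamma(3) / 2 = 1
for upper in (2.0, 4.0, 8.0):
    print(upper, simpson(integrand, 0.0, upper), exact)
```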

As a result

and thus

can provide a new basis of comparison between the probability distributions for different

α. In

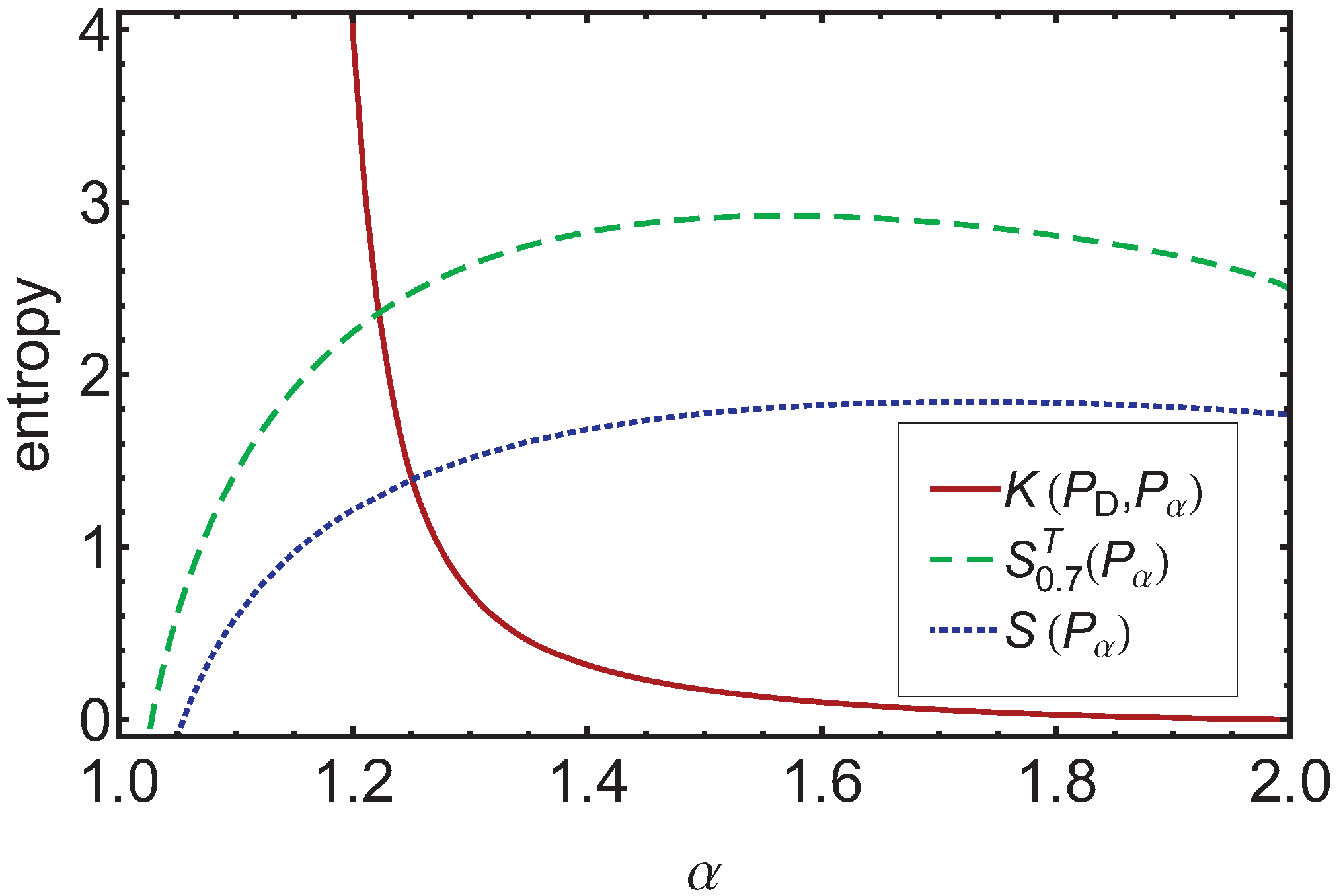

Figure 2 we show

as a function of

α for time

. The monotonous dependence indicates that

provides the same ordering of the diffusion processes in the bridging regime as does the entropy production rate, albeit in reversed order. The

-ordering is fully compatible with the ordering obtained by using the Shannon entropy and the Tsallis entropy as a means of comparison, when the appropriate times are chosen for the different processes, such that the internal quickness is separated out [

24]. If one does not separate out the internal quickness, then the Shannon entropy

as well as the Tsallis entropy

, see [

24], provides an ordering not compatible with the bridge ordering. The corresponding curves for

are shown in

Figure 2, too.

Figure 2.

Here the Kullback–Leibler entropy of the Gaussian relative to the stable distribution, the Tsallis entropy, and the Shannon entropy are plotted over α at a fixed time. The Kullback–Leibler entropy decreases monotonically with increasing α, whereas the Tsallis and the Shannon entropy exhibit a maximum. Thus, the Kullback–Leibler entropy is an appropriate ordering measure for the bridging regime even where other candidate measures are not monotonic.


6. Tsallis Relative Entropy

Now we turn to the Tsallis relative entropy. In this section we analyze for which values of the non-extensivity parameter q the integrals converge. For this analysis we again assume a generic Gaussian and a generic stable distribution. Afterwards, we present the numerical results.

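We start with the Tsallis relative entropy of the stable solution $P_\alpha$ relative to the Gaussian $P_2$, for which we have

$$K_q[P_\alpha,P_2] = \int P_\alpha\,\frac{\left(P_\alpha/P_2\right)^{q-1}-1}{q-1}\,dx = \frac{1}{q-1}\left(\int P_\alpha^{\,q}\,P_2^{\,1-q}\,dx - 1\right).$$

In analogy to the analysis of the Kullback–Leibler entropy, (23) and (24), we split the Tsallis relative entropy integral into three parts: left tail, middle part, and right tail. Due to the asymptotic tail behavior of the stable distribution given in (18) and (20), we can investigate the left and the right tail analytically. For the Tsallis relative entropy we thus have

$$K_q[P_\alpha,P_2] = \frac{1}{q-1}\left(\int_{-\infty}^{x_l} P_\alpha^{\,q}P_2^{\,1-q}\,dx + \int_{x_l}^{x_r} P_\alpha^{\,q}P_2^{\,1-q}\,dx + \int_{x_r}^{\infty} P_\alpha^{\,q}P_2^{\,1-q}\,dx - 1\right). \qquad (33)$$

Writing the left tail of the stable distribution as $P_\alpha \simeq A|x|^{\rho}\exp\left(-B|x|^{\alpha/(\alpha-1)}\right)$ with A, B > 0, and taking the Gaussian to have mean μ and variance σ², the left tail integral behaves, up to constant and power-law prefactors, as

$$\int_{-\infty}^{x_l}\exp\left(-qB|x|^{\alpha/(\alpha-1)} - (1-q)\frac{(x-\mu)^{2}}{2\sigma^{2}}\right)dx. \qquad (34)$$

First, we note that the constant B is positive and increases monotonically with α over the bridging regime. Also, the exponent α/(α−1) lies within the range (2, ∞) for 1 < α < 2. Thus, the first term in the exponent of the exponential dominates the second one, and the integral converges if the prefactor −qB of that term is negative, i.e., for q > 0, whereas for q = 0 the second term takes over and again ensures convergence. This sets q ≥ 0 as a first requirement.

For the right tail integral, the integrand behaves as $x^{-q(1+\alpha)}\exp\left(-(1-q)\frac{(x-\mu)^{2}}{2\sigma^{2}}\right)$ up to a constant factor, which according to (29) converges for q < 1 and diverges for q > 1. This sets the second requirement on q. Overall, the right tail integral, and thus $K_q[P_\alpha,P_2]$, exist for 0 ≤ q < 1.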
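The dominant-balance argument can be probed numerically. The sketch below assumes the standard β = 1 asymptotics, a light left tail ln P_α ≈ −B|x|^{α/(α−1)} and a power-law right tail ln P_α ≈ −(1+α) ln x, together with ln P_2 ≈ −x²/(2σ²); B = σ = 1 and the sample points are hypothetical choices, and power-law prefactors are dropped. It estimates the local log-log slope of P_α^q P_2^{1−q} far out in each tail and reports the tail as integrable when the slope is steeper than −1. This is a heuristic check, not a proof.

```python
import math


def log_integrand_forward(x, q, alpha, B=1.0, sigma=1.0):
    """Leading-order ln[P_alpha(x)**q * P_2(x)**(1 - q)] far out in a tail.

    Assumed asymptotics (B and sigma are hypothetical, prefactors dropped):
      x < 0: ln P_alpha ~ -B |x|**(alpha / (alpha - 1))   light left tail (beta = 1)
      x > 0: ln P_alpha ~ -(1 + alpha) ln x              power-law right tail
      ln P_2 ~ -x**2 / (2 sigma**2)                      Gaussian reference"""
    if x < 0:
        ln_p_alpha = -B * abs(x) ** (alpha / (alpha - 1))
    else:
        ln_p_alpha = -(1 + alpha) * math.log(x)
    ln_p_2 = -x * x / (2 * sigma ** 2)
    return q * ln_p_alpha + (1 - q) * ln_p_2


def tail_decays_forward(q, alpha, side, x_far=50.0):
    """Heuristic: local log-log slope of the integrand between |x| = x_far/2 and
    x_far; a slope steeper than -1 indicates an integrable tail."""
    sign = -1.0 if side == "left" else 1.0
    near = log_integrand_forward(sign * x_far / 2, q, alpha)
    far = log_integrand_forward(sign * x_far, q, alpha)
    slope = (far - near) / math.log(2.0)
    return slope < -1.0


for q in (-0.5, 0.0, 0.5, 1.5):
    print(q, tail_decays_forward(q, 1.5, "left"), tail_decays_forward(q, 1.5, "right"))
```

The left tail fails only for negative q and the right tail only for q above 1, mirroring the two requirements derived in the text.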

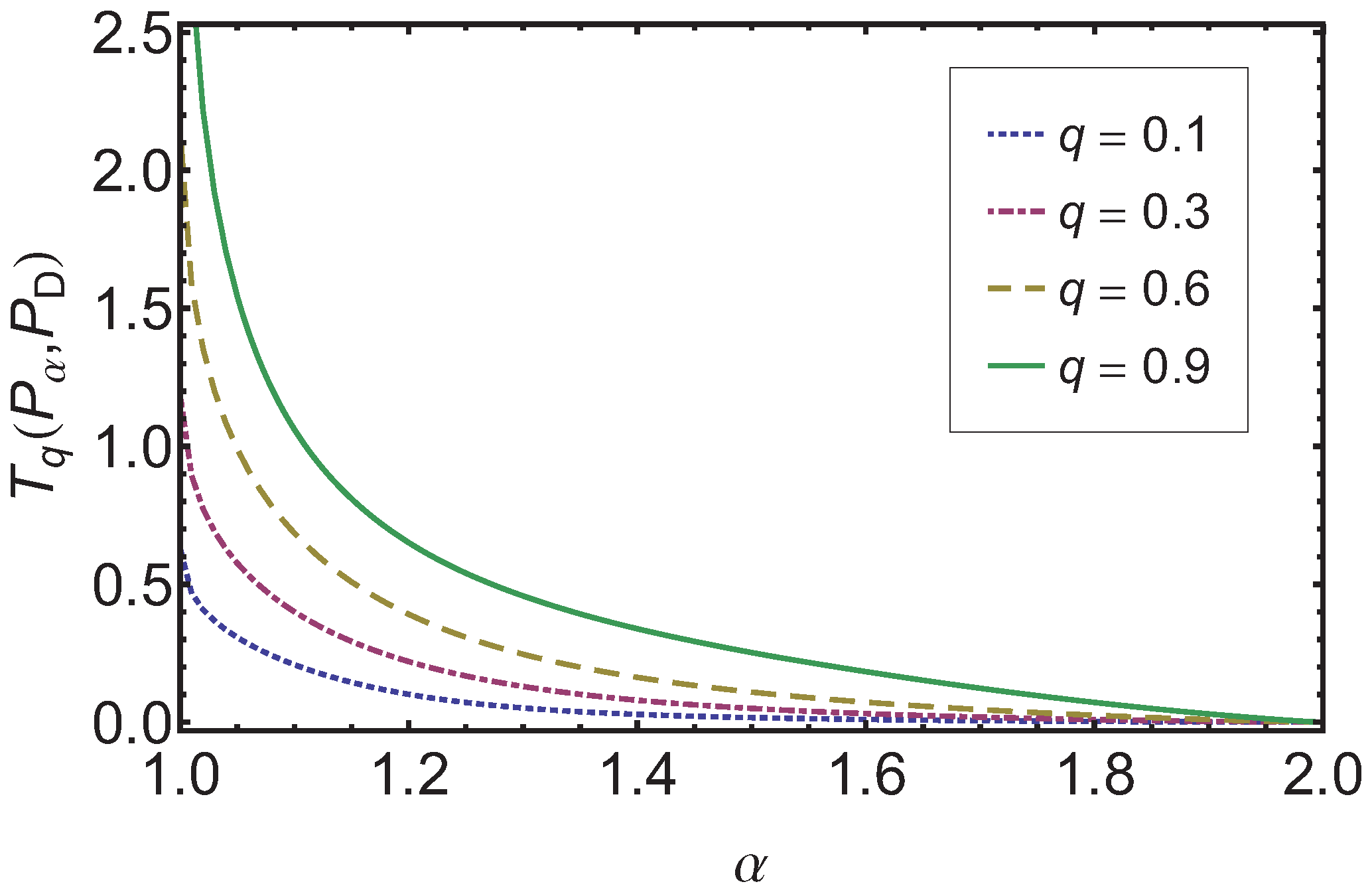

In Figure 3 the dependence of the Tsallis relative entropy of the stable solution relative to the Gaussian on α is shown. Again one finds an ordering compatible with the original order established by α, the bridge ordering. This holds independent of the value of q, as long as q lies within the range for which the Tsallis relative entropy exists. We note that the larger q is, the easier it is to separate two processes with different α. Also, as expected, the measure goes to zero for α → 2, independent of q.

Figure 3.

The results for the Tsallis relative entropy are shown over α for four different values of q. As expected, it goes to zero as α approaches 2, independent of q.


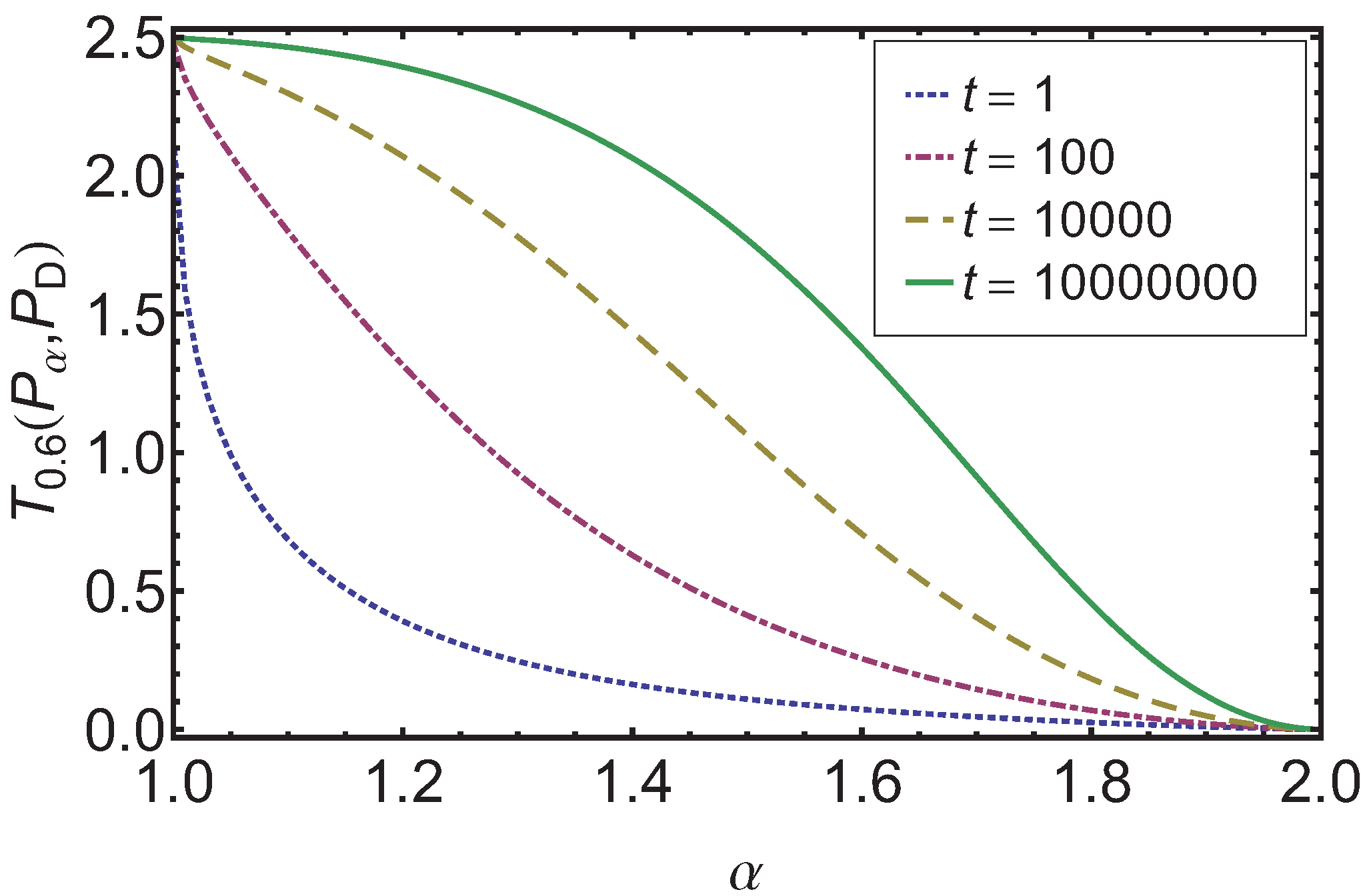

While a complete discussion of the time development of the Tsallis relative entropy is beyond the scope of this paper, we show in Figure 4 how the Tsallis relative entropy behaves for different times at a fixed value of q. One sees that the monotonic behavior is preserved. An interesting question for future research is whether an extension of the H-theorem can be formulated for this measure.

Figure 4.

For a fixed value of q, the Tsallis relative entropy is shown over α for different times t. One can observe that the monotonically decreasing behavior is preserved with increasing time.


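Finally, we analyze for which values of q the Tsallis relative entropy of the Gaussian $P_2$ relative to the stable solution $P_\alpha$ exists. It is given as

$$K_q[P_2,P_\alpha] = \frac{1}{q-1}\left(\int P_2^{\,q}\,P_\alpha^{\,1-q}\,dx - 1\right). \qquad (36)$$

Again we have to investigate the tail integrals based on the asymptotic tail behaviors given in (20) and (18). We split our integral into three parts as explained in (33), and in the case of (36) we obtain a left tail integral which behaves, up to constant and power-law prefactors, as

$$\int_{-\infty}^{x_l}\exp\left(-q\frac{(x-\mu)^{2}}{2\sigma^{2}} - (1-q)B|x|^{\alpha/(\alpha-1)}\right)dx,$$

with B > 0 the constant of the left tail of the stable distribution and μ, σ² the mean and variance of the Gaussian. In order to find the requirement on q we proceed in analogy to the discussion for (34). Here the second term of the exponent dominates the first term for large enough |x|. Thus convergence requires the prefactor (1 − q)B of the dominating term to be positive, i.e., q < 1; if it vanishes (q = 1), the first term takes over. Since the Tsallis relative entropy is not defined for q = 1, the requirement is q < 1.

For the right tail integral, the integrand behaves as $x^{-(1-q)(1+\alpha)}\exp\left(-q\frac{(x-\mu)^{2}}{2\sigma^{2}}\right)$, which converges for all q ≥ 0 when 1 < α < 2 (for q = 0 only the integrable power law $x^{-(1+\alpha)}$ remains). Therefore, q has to be in the range 0 ≤ q < 1 in order to ensure that the Tsallis relative entropy $K_q[P_2,P_\alpha]$ has a finite value.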
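The same kind of heuristic check applies with the roles of the two densities exchanged. The sketch below again assumes the standard β = 1 asymptotics, ln P_α ≈ −B|x|^{α/(α−1)} on the left and ln P_α ≈ −(1+α) ln x on the right, with ln P_2 ≈ −x²/(2σ²); B = σ = 1 and the sample points are hypothetical, and prefactors are dropped. A local log-log slope steeper than −1 is read as an integrable tail; this is an illustration, not a proof.

```python
import math


def log_integrand_reverse(x, q, alpha, B=1.0, sigma=1.0):
    """Leading-order ln[P_2(x)**q * P_alpha(x)**(1 - q)] far out in a tail.

    Assumed asymptotics (B and sigma are hypothetical, prefactors dropped):
      x < 0: ln P_alpha ~ -B |x|**(alpha / (alpha - 1))   light left tail (beta = 1)
      x > 0: ln P_alpha ~ -(1 + alpha) ln x              power-law right tail
      ln P_2 ~ -x**2 / (2 sigma**2)                      Gaussian reference"""
    if x < 0:
        ln_p_alpha = -B * abs(x) ** (alpha / (alpha - 1))
    else:
        ln_p_alpha = -(1 + alpha) * math.log(x)
    ln_p_2 = -x * x / (2 * sigma ** 2)
    return q * ln_p_2 + (1 - q) * ln_p_alpha


def tail_decays_reverse(q, alpha, side, x_far=50.0):
    """Heuristic: local log-log slope of the integrand between |x| = x_far/2 and
    x_far; a slope steeper than -1 indicates an integrable tail."""
    sign = -1.0 if side == "left" else 1.0
    near = log_integrand_reverse(sign * x_far / 2, q, alpha)
    far = log_integrand_reverse(sign * x_far, q, alpha)
    slope = (far - near) / math.log(2.0)
    return slope < -1.0


for q in (-0.5, 0.0, 0.5, 1.5):
    print(q, tail_decays_reverse(q, 1.5, "left"), tail_decays_reverse(q, 1.5, "right"))
```

Here it is the left tail that fails for q above 1 and the right tail that fails for negative q; at q = 0 the right-tail slope is exactly −(1 + α), still steeper than −1.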

In Figure 5 we show the dependence of the Tsallis relative entropy of the Gaussian relative to the stable distribution on α. The ordering of the superdiffusion processes in the bridging regime induced by this measure is again in full agreement with the bridge ordering by α.

Figure 5.

Here, the Tsallis relative entropy of the Gaussian relative to the stable distribution is depicted over α for different values of q. It goes down to zero as α approaches 2, independent of q. Note that for q = 1 the corresponding Kullback–Leibler entropy is shown.


As both relative entropies, the Kullback–Leibler

as well as the Tsallis relative entropy

, preserve the ordering of the processes in the superdiffusion regime as induced by

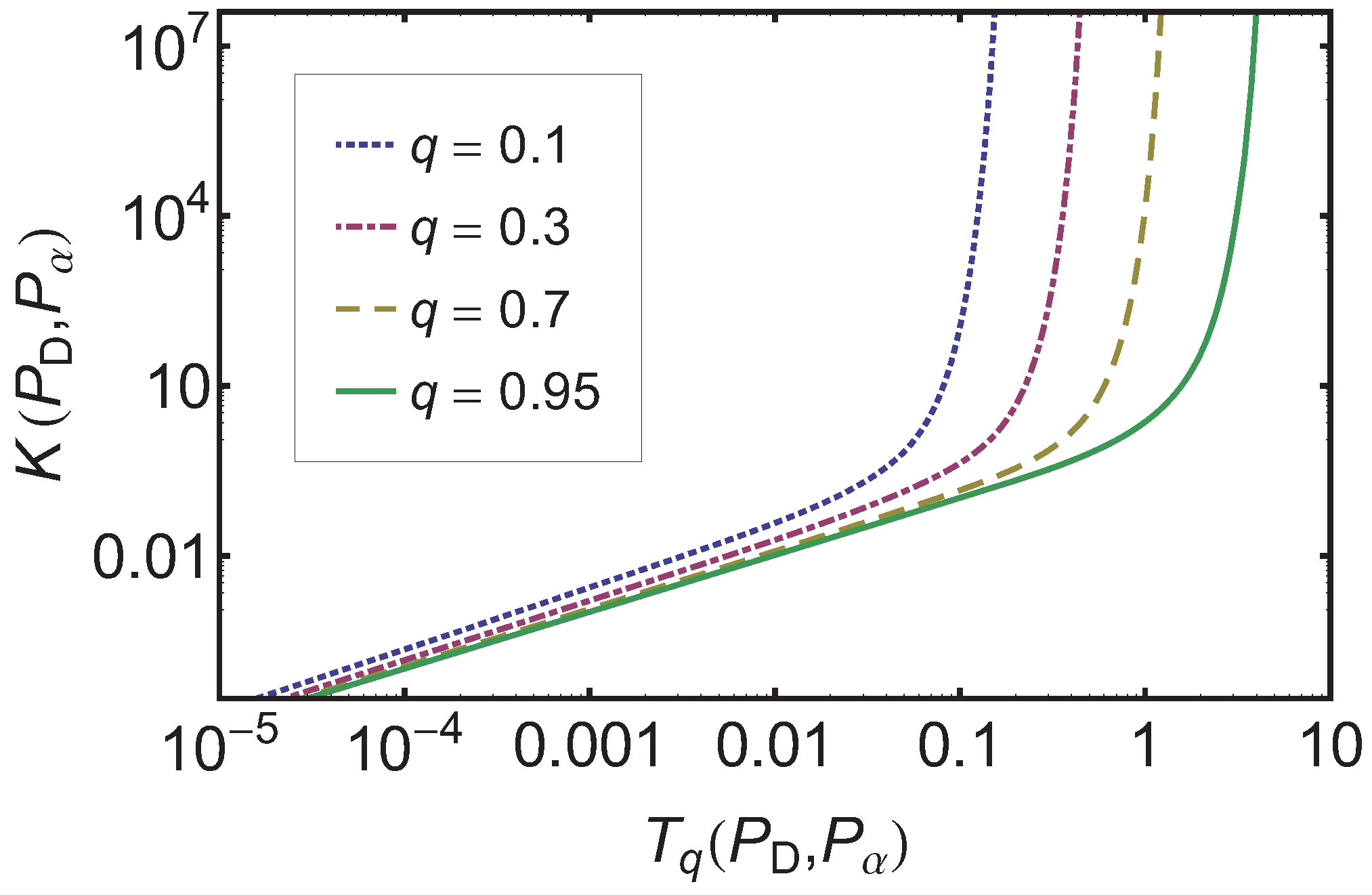

α, it is interesting to study their relative behavior. In

Figure 6 a log-log-plot of

versus is shown for different

q and identical

and

. The graph shows a clear monotonic relationship independent of the

q value used. As

approaches

in the limit

the close to straight line for

is expected. Interestingly, the curves for the other

q values also show this feature for small values of the relative entropies.

Figure 6.

The log-log plot of the Kullback–Leibler entropy over the Tsallis relative entropy is given for different q-values. A clear monotonic relationship is observed independent of q.
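A second-order expansion offers a plausible reason for the straight-line behavior at small relative entropies; the expansion is our own addition, not part of the original analysis. Writing $p = p^R(1+\varepsilon)$ with $\int p^R\varepsilon\,dx = 0$ (normalization) and expanding to second order in ε gives $K_q \approx \tfrac{q}{2}\int p^R\varepsilon^2\,dx$ and $K \approx \tfrac{1}{2}\int p^R\varepsilon^2\,dx$, so that $K_q \approx qK$ and $\log K \approx \log K_q - \log q$: a line of slope one, shifted by a q-dependent offset. The sketch checks $K_q/K \approx q$ for a hypothetical, slightly perturbed discrete distribution.

```python
import math


def kullback_leibler(p, r):
    """K[p, r] = sum_i p_i ln(p_i / r_i) for strictly positive discrete distributions."""
    return sum(pi * math.log(pi / ri) for pi, ri in zip(p, r))


def tsallis_relative(p, r, q):
    """K_q[p, r] = sum_i p_i ((p_i / r_i)**(q - 1) - 1) / (q - 1), q != 1."""
    return sum(pi * ((pi / ri) ** (q - 1) - 1) for pi, ri in zip(p, r)) / (q - 1)


r = [0.25, 0.25, 0.25, 0.25]                                  # hypothetical reference
eps = 1e-3
p = [0.25 + eps, 0.25 - eps, 0.25 + eps / 2, 0.25 - eps / 2]  # small perturbation

k = kullback_leibler(p, r)
ratios = {q: tsallis_relative(p, r, q) / k for q in (0.2, 0.5, 0.8)}
print(ratios)  # each ratio is close to q
```

For larger deviations the higher-order terms differ between K and K_q, which is consistent with the curves departing from a straight line away from small values.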


7. Summary and Discussion

The bridge ordering by α of the space-fractional diffusion equations and of the corresponding PDFs had previously shown a structure not compatible with an ordering based on the Shannon, the Tsallis, and the Rényi entropies and their respective rates. While we were able to resolve this behavior, known as the entropy production paradox, by an in-depth analysis of the intricate coupling of internal quickness to the form change of the PDFs, we here found that the Kullback or Kullback–Leibler entropy and its generalization, the Tsallis relative entropy, naturally establish an ordering on the PDFs that is consistent with the bridge ordering. We did this by calculating the Kullback entropy and the Tsallis relative entropy with respect to the solution of the regular diffusion equation, i.e., the α = 2 case of the space-fractional diffusion equation.

We found that the Kullback–Leibler entropy of a stable distribution relative to a general Gaussian distribution does not exist, as the integrals diverge. The reason for this divergence is rooted in the heavy tails of the stable distributions. These heavy tails lead to the non-existence of higher moments, and since the logarithm of a Gaussian is quadratic in x, so that its expectation involves the second moment, the divergence of the Kullback–Leibler entropy is not surprising.

The Tsallis relative entropy, a non-extensive extension of the Kullback–Leibler entropy depending on a non-extensivity parameter q, does exist, however, and thus proves to be a highly useful generalization of the Kullback–Leibler entropy. In particular, we showed that the Tsallis relative entropy can be determined for q-values in the range 0 ≤ q < 1. Within that range, the Tsallis relative entropy of the stable distribution with respect to the Gaussian as well as the Tsallis relative entropy of the Gaussian with respect to the stable distribution show, for all q, an ordering of the PDFs consistent with the bridge ordering induced by α.