1. Introduction

As a simple and effective controller, the proportional-integral-derivative (PID) controller offers strong robustness, high reliability and good dynamic response, and it has been widely used in industrial control systems. Although it still attracts many engineers' attention, the parameters of the traditional PID controller cannot be adjusted adaptively, so satisfactory control quality often cannot be obtained in the presence of the inevitable nonlinearity and disturbances in real processes. Motivated by the structure and function of cerebral neural networks, artificial neural networks (ANN) were originally developed by McCulloch and Pitts in 1943. Owing to their distinctive ability in nonlinear adaptive information processing, ANNs have been researched extensively by many scholars and engineers to deal with modern complex nonlinear systems [1,2,3]. It is well known that most multi-layer neural networks adopt S-type (sigmoid) functions, which increase the amount of calculation. In order to meet the requirements of fast neural control processes, the single neuron adaptive control strategy was proposed in [4]. Besides its self-learning and self-adaptation abilities, the single neuron adaptive control strategy is suitable for real-time use owing to its rapid numerical calculation. Because of its simple structure, the model-free adaptive control system formed by a single neuron has been studied, and some results on learning algorithms and applications can be found (see, e.g., [5,6]).

Stochastic systems are a reasonable description of practical industrial processes because randomness in such processes is inevitable. Under the assumption that the noises obey a Gaussian distribution, some mature control strategies have been established, such as minimum variance control and linear quadratic Gaussian (LQG) control. However, the disturbances in practical systems are not necessarily Gaussian; moreover, nonlinearity in stochastic control systems could lead to non-Gaussian randomness even if the disturbances obey a Gaussian distribution. For this case, the stochastic distribution control (SDC) method was proposed by Wang [7], where the shape of the output probability density function (PDF), rather than the output itself, was considered. Recently, a linear-matrix-inequality (LMI)-based convex optimization algorithm was used for output PDF control, filtering and fault detection for non-Gaussian systems by Guo and Wang in [8], where a B-spline was utilized to approximate the output PDF. As such, the PDF was characterized by the weights and the selected B-splines. Accordingly, the evolution of the PDF can be represented by the state equation of the weights [9,10,11]. However, not all the PDFs of the outputs are measurable. Several new methods were introduced to overcome this problem by investigating the closed-loop performance characterized by the entropy of the output tracking error [12,13,14,15]. Since exact models are difficult to obtain in practical industrial processes, a promising approach is to use only the statistical information of the system's input and output data. In [16,17,18], the α-order Renyi's entropy was estimated directly from on-line output data. In [19], by using this data-driven method, a novel run-to-run control methodology was proposed for semiconductor processes with uncertain metrology delay.

Motivated by the above results, in this paper a single neuron adaptive controller, which is a model-free controller, is developed for stochastic systems with non-Gaussian disturbances. The proposed control strategy is based on an improved minimum entropy criterion for closed-loop stochastic systems. Our contributions are twofold. First, we present a simple control algorithm that does not require an exact system model. Second, an improved non-Gaussian performance index is formulated to train the weights of the single neuron. With the optimal weights, the control algorithm can drive the tracking error toward zero with small deviation.

The remainder of the paper is organized as follows. The structure of the single neuron controller is illustrated in Section 2. In Section 3, an improved performance index including the information potential of the closed-loop tracking error, the mean value of the squared tracking error and constraints on the control input energy is introduced, and the index is then obtained using a non-parametric estimate of the PDF of the tracking errors. In Section 4, the weights of the single neuron are updated by minimizing the proposed performance index using the gradient descent method; in addition, a mean-square convergence condition of the proposed control algorithm is formulated. Comparative simulation results are given in Section 5 to illustrate the efficiency and validity of the proposed method.

2. Structure of Single Neuron Adaptive Controller

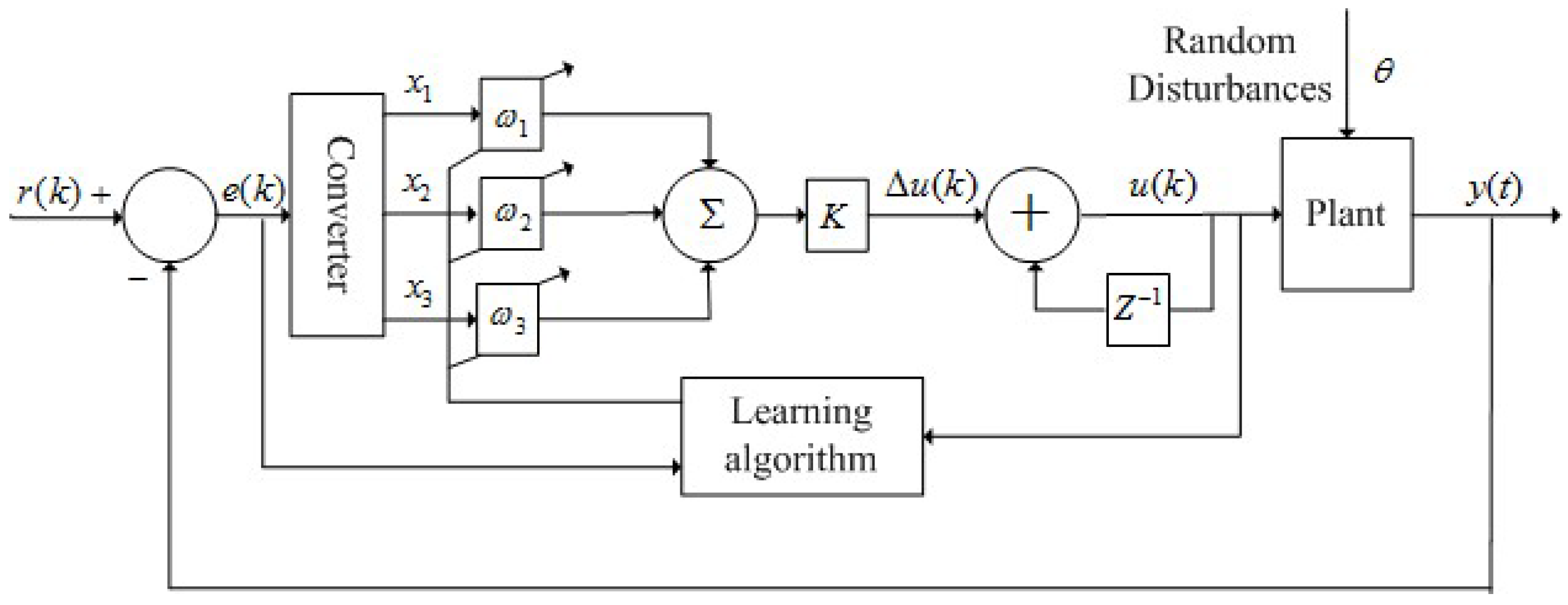

The general scheme of the single neuron adaptive control system is illustrated as

Figure 1.

Figure 1.

The schematic diagram of a single neuron adaptive control system.

Figure 1.

The schematic diagram of a single neuron adaptive control system.

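In Figure 1, $\omega_k$ is the non-Gaussian random disturbance involved in the system, $r_k$ and $y_k$ are the set-point and the controlled variable, respectively, and $e_k = r_k - y_k$ is the tracking error in the closed-loop control system. The control input $u_k$ can be expressed as:

$$u_k = u_{k-1} + K \sum_{i=1}^{3} w_i(k)\, x_i(k) \qquad (1)$$

where $K > 0$ is the proportional coefficient of the neuron, $w_i(k)$ ($i = 1, 2, 3$) stands for the weight corresponding to each input, and $x_i(k)$ is the input of the neuron coming from the tracking error $e_k$, which can be defined as follows:

$$x_1(k) = e_k, \quad x_2(k) = e_k - e_{k-1}, \quad x_3(k) = e_k - 2e_{k-1} + e_{k-2} \qquad (2)$$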

Due to the non-Gaussian disturbances involved in the system, it is necessary to utilize an appropriate performance index to characterize the randomness of the tracking error. Accordingly, the main task is to train the weights by minimizing the given performance function so as to drive the tracking error toward zero with small randomness.
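The control law described in this section can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: it assumes the incremental form u(k) = u(k-1) + K Σ w_i x_i(k) and the inputs commonly used for single neuron controllers (the error, its first difference and its second difference). The class name, the gain and the weight values are hypothetical, and the weights are held fixed here rather than trained.

```python
from collections import deque


class SingleNeuronController:
    """Incremental single neuron control law, u(k) = u(k-1) + K * sum_i w_i * x_i(k)."""

    def __init__(self, gain, weights):
        self.gain = gain                  # proportional coefficient K of the neuron
        self.weights = list(weights)      # w_1, w_2, w_3 (held fixed in this sketch)
        self.past_errors = deque([0.0, 0.0], maxlen=2)  # [e(k-1), e(k-2)]
        self.previous_input = 0.0         # u(k-1)

    def neuron_inputs(self, error):
        """Assumed inputs: x_1 = e(k), x_2 = e(k) - e(k-1), x_3 = e(k) - 2e(k-1) + e(k-2)."""
        e1, e2 = self.past_errors
        return [error, error - e1, error - 2.0 * e1 + e2]

    def step(self, setpoint, output):
        """Compute the control input u(k) from the set-point and the measured output."""
        error = setpoint - output
        x = self.neuron_inputs(error)
        u = self.previous_input + self.gain * sum(w * xi for w, xi in zip(self.weights, x))
        self.past_errors.appendleft(error)  # the oldest error drops off the right end
        self.previous_input = u
        return u


controller = SingleNeuronController(gain=0.5, weights=[0.2, 0.3, 0.1])  # hypothetical values
u1 = controller.step(setpoint=1.0, output=0.0)
u2 = controller.step(setpoint=1.0, output=0.4)
print(u1, u2)
```

In an adaptive setting, the entries of `weights` would be updated at every step by the learning rule instead of staying constant.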

3. Improved Minimum Entropy Criterion

Since the disturbances in most control systems are not necessarily Gaussian, the variance of the output tracking error is not sufficient to characterize the performance of the tracking error dynamics. Therefore, an alternative measure of uncertainty, entropy, is used to construct the performance index for measuring the dispersion of the stochastic system. Ideally, a small entropy of the tracking error means that the error has a narrow and sharp PDF. Owing to the computational efficiency of its non-parametric estimator, Renyi's quadratic entropy ($H_\alpha$, $\alpha = 2$) is selected here. It can be expressed by the following definition:

$$H_2(e) = -\log \int_{-\infty}^{\infty} \gamma_e^2(x)\, dx = -\log V(e) \qquad (3)$$

where $\gamma_e(x)$ denotes the PDF of the tracking error and $V(e) = \int_{-\infty}^{\infty} \gamma_e^2(x)\, dx$ is defined as the information potential [17]. It is clear from Equation (3) that Renyi's quadratic entropy is a monotonically decreasing function of the information potential, so minimizing the entropy is equivalent to minimizing the inverse of the information potential. Hence, in order to reduce the computational complexity, the inverse of the quadratic information potential is used instead of Renyi's entropy.
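As a quick check of the monotonic relation in Equation (3), the sketch below uses a zero-mean Gaussian PDF, for which the information potential has the closed form 1/(2s√π), with s the standard deviation. The Gaussian case and the function names are illustrative assumptions only; the tracking error in this paper is not assumed to be Gaussian.

```python
import math


def gaussian_information_potential(std):
    """Integral of the squared zero-mean Gaussian PDF with standard deviation std."""
    return 1.0 / (2.0 * std * math.sqrt(math.pi))


def renyi_quadratic_entropy(potential):
    """Renyi's quadratic entropy as the negative logarithm of the information potential."""
    return -math.log(potential)


rows = []
for std in (2.0, 1.0, 0.5):
    v = gaussian_information_potential(std)
    h2 = renyi_quadratic_entropy(v)
    rows.append((std, v, h2, 1.0 / v))
    print(f"std = {std}: V = {v:.4f}, H2 = {h2:.4f}, 1/V = {1.0 / v:.4f}")
```

As the PDF becomes narrower, the information potential grows while both the entropy and the inverse information potential shrink, which is why the inverse potential can stand in for the entropy in the performance index.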

In order to make the tracking error approach zero, its mean value should be considered. Furthermore, since the tracking error may be positive or negative, the mean value of the squared error should also be included in the performance index:

$$E(e_k^2) = \int_{-\infty}^{\infty} x^2 \gamma_e(x)\, dx \qquad (4)$$

Since control inputs in practical control systems are always constrained by the physical limitations of actuators, the magnitude of the control input should be minimized too. Overall, the whole performance function can be formulated as the weighted sum of the inverse of the information potential of the tracking error, the mean of the squared tracking error and the constraint on the control input:

$$J = \frac{R_1}{V(e)} + R_2 E(e_k^2) + R_3 u_k^2 \qquad (5)$$

where $R_1$, $R_2$ and $R_3$ are the weights.

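In order to calculate the performance index, it is necessary to estimate the PDF of the closed-loop tracking error $e_k$ from the error sequence $\{e_1, e_2, \ldots, e_N\}$ within a receding window whose width is $N$. The PDF of the tracking error can be estimated by the Parzen windowing technique:

$$\hat{\gamma}_e(x) = \frac{1}{N} \sum_{i=1}^{N} \kappa(x - e_i, \sigma^2) \qquad (6)$$

where $\kappa(x, \sigma^2) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{x^2}{2\sigma^2}\right)$ is the Gaussian kernel function, and $\sigma^2$ and $N$ represent the variance of the symmetric kernel and the total number of data, respectively. Thus the quadratic Renyi's entropy can be obtained as follows:

$$\hat{H}_2(e) = -\log \left( \frac{1}{N^2} \sum_{i=1}^{N} \sum_{j=1}^{N} \kappa(e_i - e_j, 2\sigma^2) \right) \qquad (7)$$

Subsequently, the quadratic information potential and the mathematical expectation of the squared tracking error can be formulated from the samples within the receding window:

$$\hat{V}(e) = \frac{1}{N^2} \sum_{i=1}^{N} \sum_{j=1}^{N} \kappa(e_i - e_j, 2\sigma^2) \qquad (8)$$

$$\hat{E}(e_k^2) = \frac{1}{N} \sum_{i=1}^{N} e_i^2 \qquad (9)$$

Finally, the performance index can be calculated in the following way:

$$\hat{J} = \frac{R_1}{\hat{V}(e)} + R_2 \hat{E}(e_k^2) + R_3 u_k^2 \qquad (10)$$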
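The sample-based quantities of this section can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes a Gaussian kernel parameterized by its variance, uses the fact that the integral of the product of two Gaussian kernels of variance σ² is a Gaussian kernel of variance 2σ², and takes the index as the weighted sum of the inverse information potential, the mean squared error and the squared control input. All function names, the weights `r1`, `r2`, `r3`, the kernel variance and the two error windows are hypothetical.

```python
import math


def gaussian_kernel(x, variance):
    """Zero-mean Gaussian kernel evaluated at x."""
    return math.exp(-x * x / (2.0 * variance)) / math.sqrt(2.0 * math.pi * variance)


def parzen_pdf(x, errors, variance):
    """Parzen estimate of the error PDF at x from the samples in the window."""
    return sum(gaussian_kernel(x - e, variance) for e in errors) / len(errors)


def information_potential(errors, variance):
    """Sample quadratic information potential; pairwise kernels have variance 2 * variance."""
    n = len(errors)
    total = sum(gaussian_kernel(ei - ej, 2.0 * variance) for ei in errors for ej in errors)
    return total / (n * n)


def performance_index(errors, control, variance, r1, r2, r3):
    """Assumed index: r1 / V + r2 * mean(e^2) + r3 * u^2."""
    mean_square = sum(e * e for e in errors) / len(errors)
    return r1 / information_potential(errors, variance) + r2 * mean_square + r3 * control ** 2


narrow = [0.02, -0.01, 0.00, 0.01, -0.02]   # hypothetical error window
wide = [0.9, -1.1, 0.2, 1.3, -0.6]          # hypothetical error window
for window in (narrow, wide):
    v = information_potential(window, variance=0.25)
    j = performance_index(window, control=0.1, variance=0.25, r1=1.0, r2=1.0, r3=0.1)
    print(f"V = {v:.4f}, H2 = {-math.log(v):.4f}, J = {j:.4f}")
```

The narrow window yields the larger information potential and the smaller index, which matches the intent of the criterion: errors concentrated near zero are rewarded. The double sum costs O(N²) per evaluation, so the window width trades estimation quality against computation.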

5. A Numerical Example

In order to illustrate the feasibility and efficiency of the presented optimal control algorithm, let us consider the following nonlinear non-Gaussian stochastic system described by:

The PDF of random variable

is defined by:

where α = 1 and

. The set-point of system Equation (26) is set to

. The sampling period is

and learning factor

. The weights in Equation (10) are

,

and

respectively.

The advantage of the proposed method is shown by comparing with a PID controller whose transfer function is

. The optimal PID parameters are tuned using the Matlab NCD toolbox:

,

and

. The comparative results are shown in Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6.

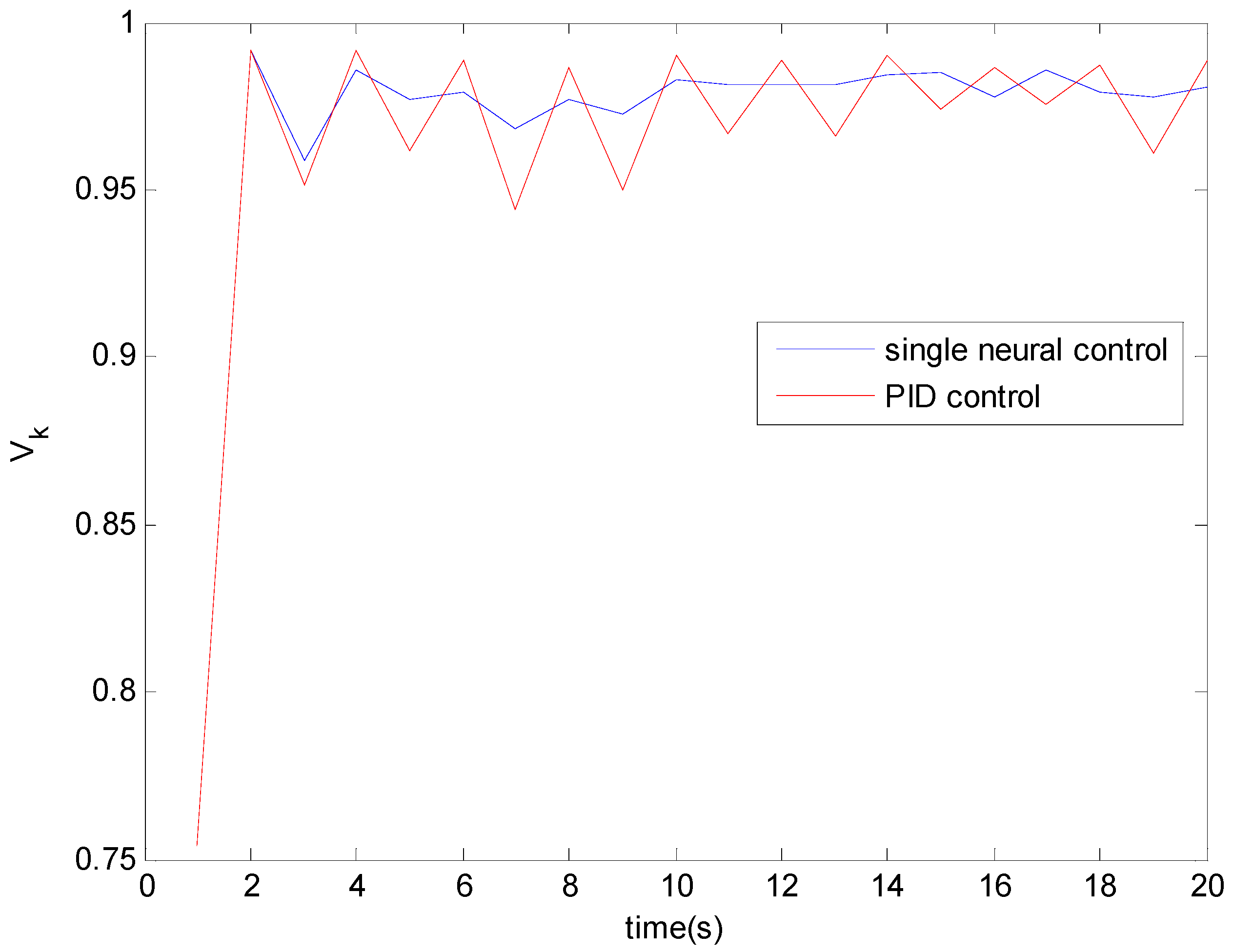

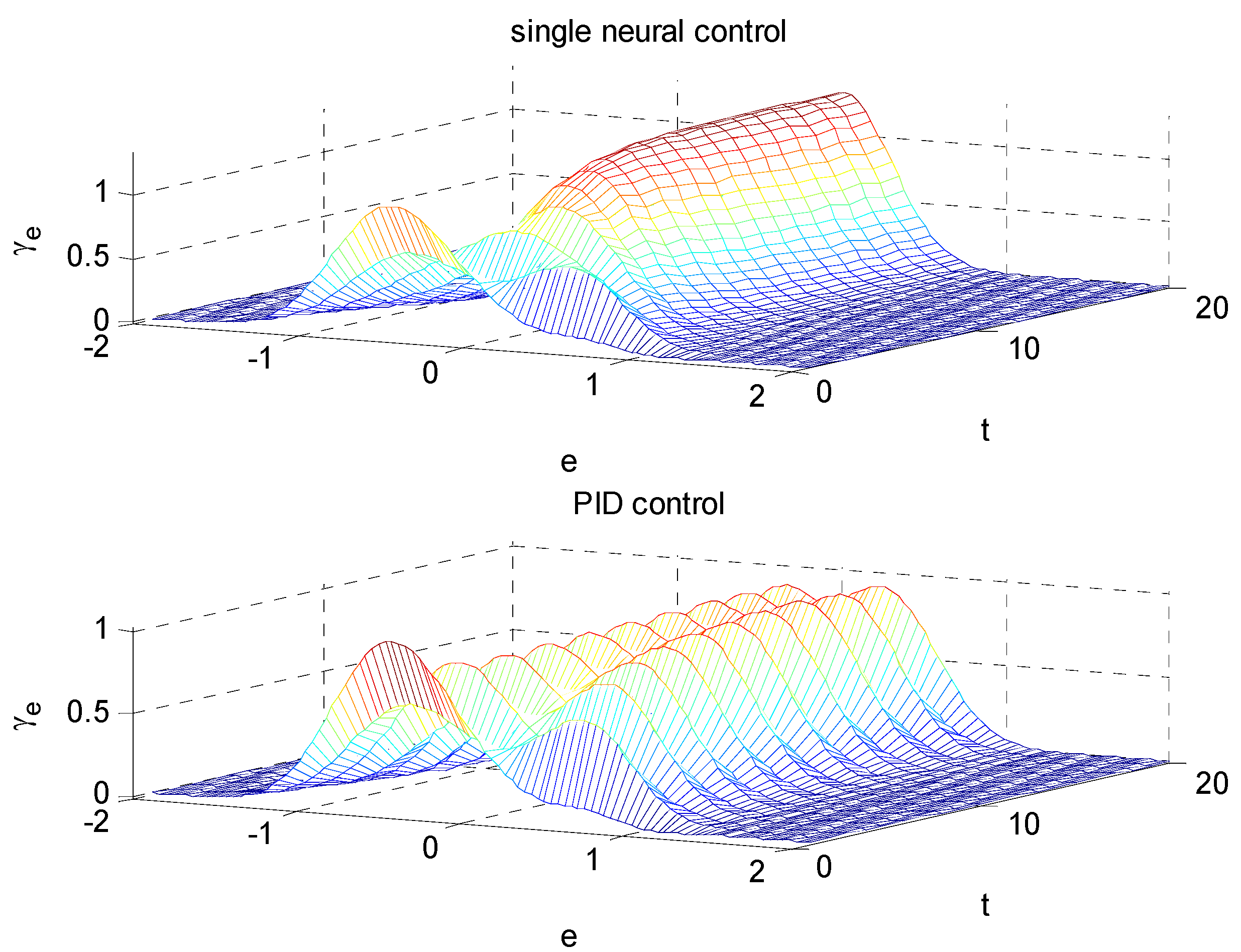

It can be seen from

Figure 2 and

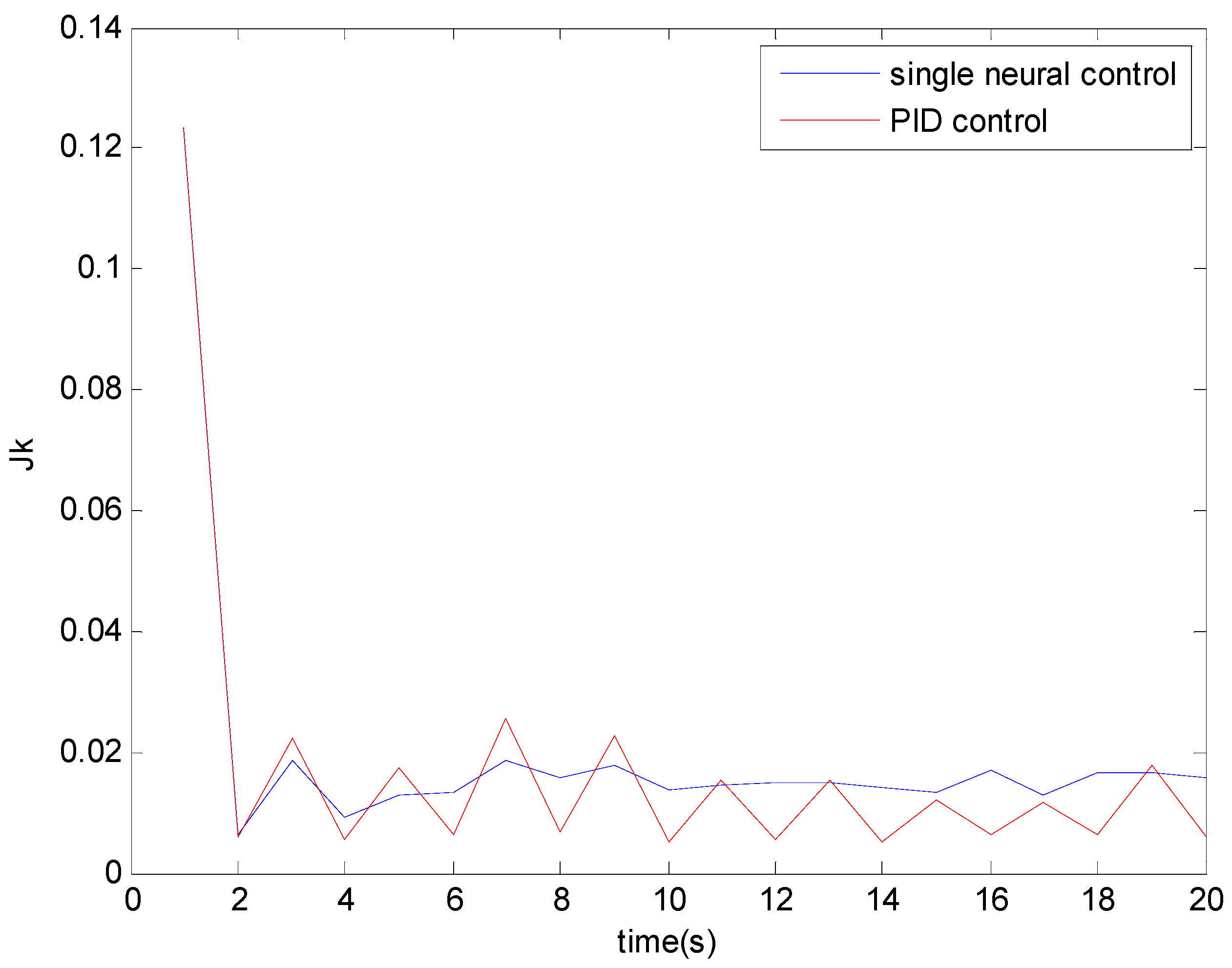

Figure 3 that the information potentials under PID control and proposed single-neuron adaptive control both increase with time, while the performance indices

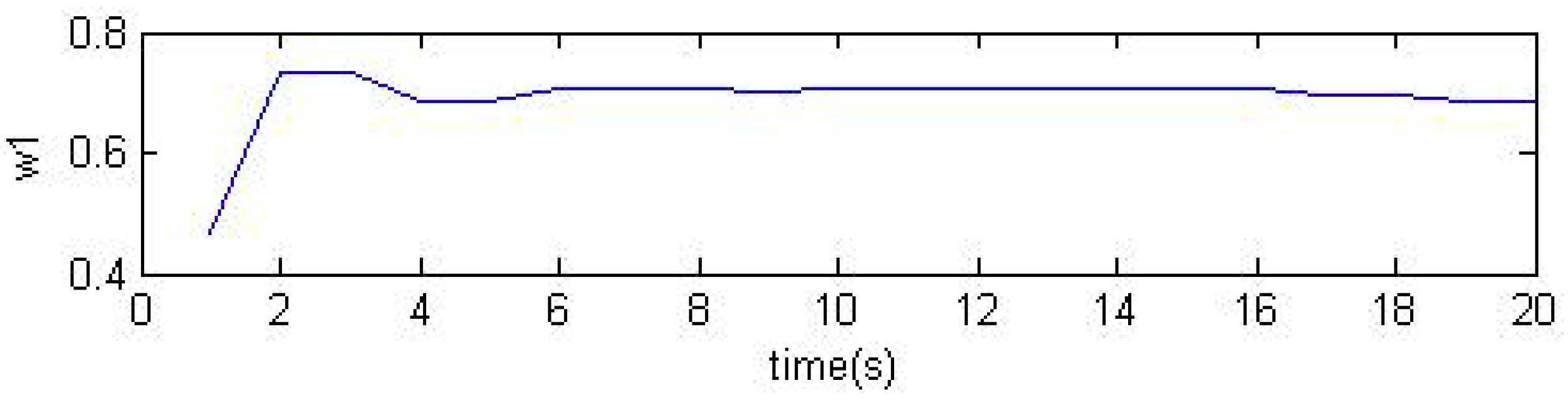

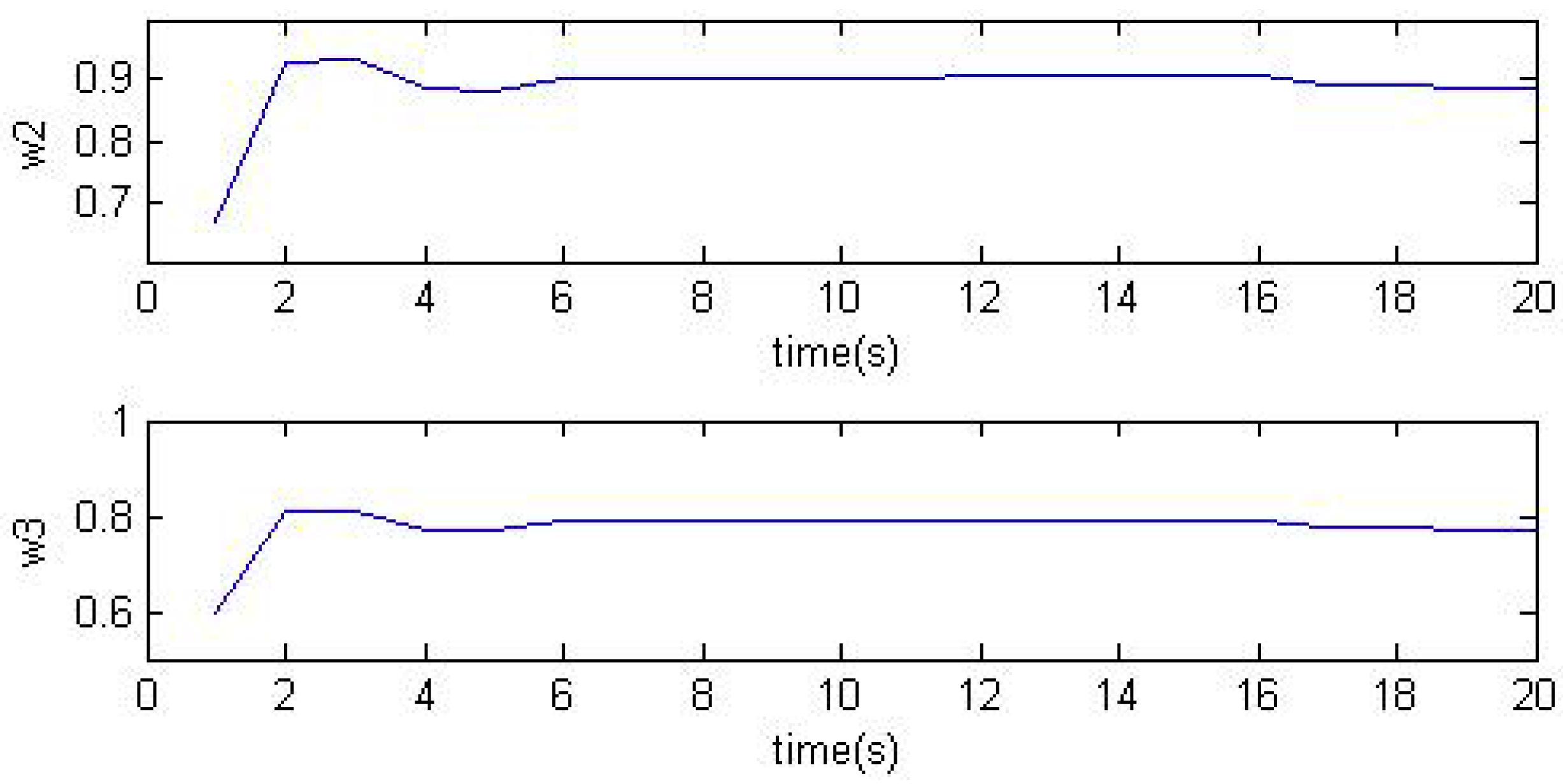

decrease with time. The variances of the single neuron weights tuned by the proposed performance index are presented in

Figure 4. In

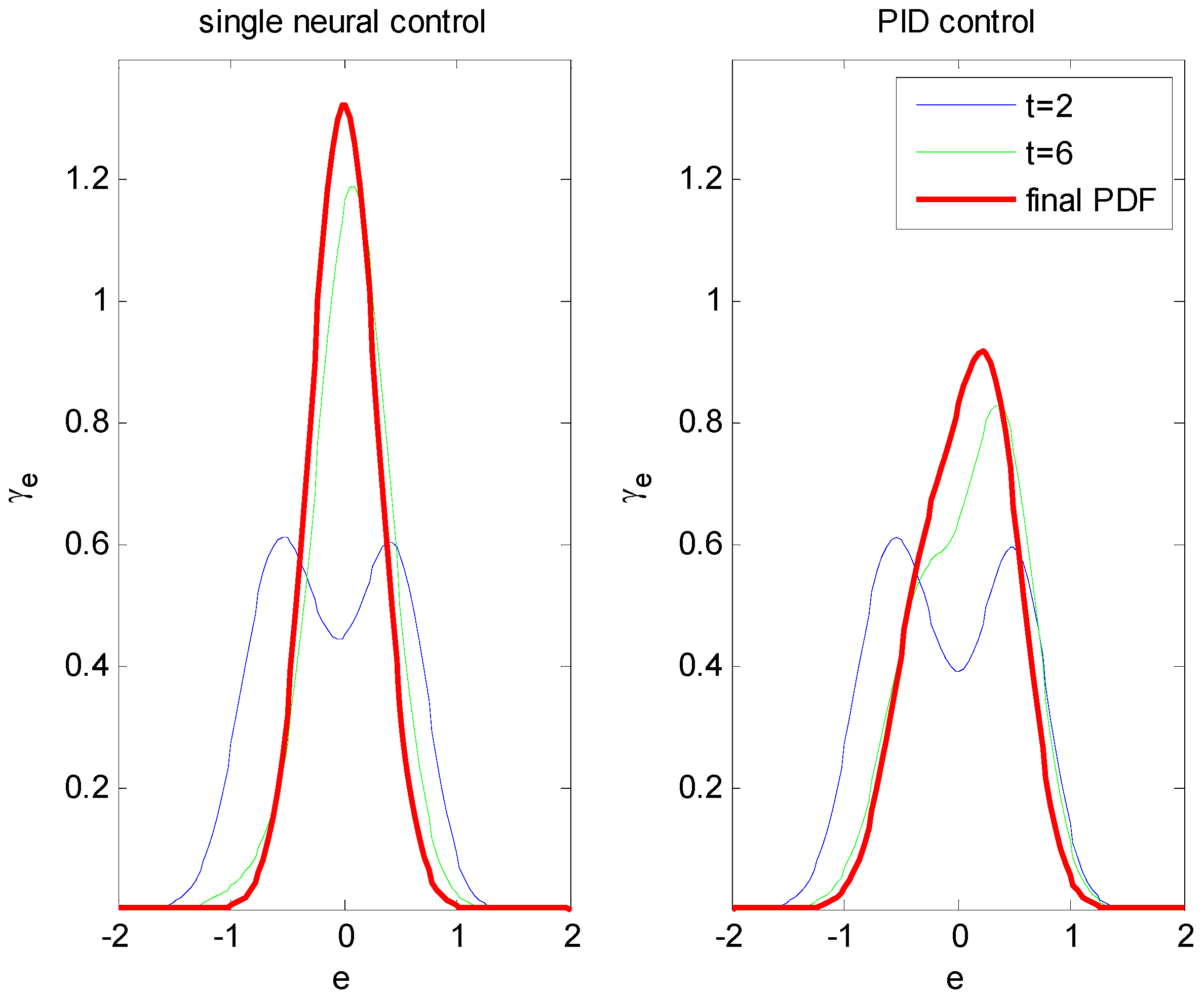

Figure 5, both the range of tracking errors and PDFs of tracking errors are given. It can be seen that the shapes of PDFs of the tracking errors become narrower along with the increasing of the time. In order to clarify the improvements, the PDFs at several typical instants are shown in

Figure 6. It can be shown from

Figure 5 and

Figure 6 that the proposed single neural adaptive controller can drive the tracking errors toward a smaller randomness. Conversely, the PID control strategy cannot minimize the randomness effectively. From these comparative results, it is obvious that the proposed strategy has better randomness rejection ability than PID control law. Therefore, the proposed control strategy is more suitable for nonlinear stochastic systems with non-Gaussian noises.

Figure 2. Information potentials.

Figure 3. Performance indices.

Figure 4. Weights of the single neuron.

Figure 5. PDFs of tracking error.

Figure 6. PDFs at typical instants.