Abstract

Thermodynamic modeling of extensive systems usually implicitly assumes the additivity of entropy. Furthermore, if this modeling is based on the concept of Shannon entropy, additivity of the latter function must also be guaranteed. In this case, the constituents of a thermodynamic system are treated as subsystems of a compound system, and the Shannon entropy of the compound system must be subjected to constrained maximization. The scope of this paper is to clarify prerequisites for applying the concept of Shannon entropy and the maximum entropy principle to thermodynamic modeling of extensive systems. This is accomplished by investigating how the constraints of the compound system have to depend on mean values of the subsystems in order to ensure additivity. Two examples illustrate the basic ideas behind this approach, comprising the ideal gas model and condensed phase lattice systems as limiting cases of fluid phases. The paper is the first step towards developing a new approach for modeling interacting systems using the concept of Shannon entropy.

1. Introduction

In his basic work, Shannon [1] defines a function H which measures the amount of information of a system that can reside in any of m possible states by means of the probabilities $p_i$ of the states:

$$H = -K \sum_{i=1}^{m} p_i \log p_i \qquad (1)$$

Both the constant K and the base of the logarithm are arbitrary, as they merely account for a scaling of H. The set of all $p_i$ can be written as a probability distribution P:

$$P = \{p_1, p_2, \ldots, p_m\}$$

with the normalization condition

$$\sum_{i=1}^{m} p_i = 1 \qquad (2)$$

In the following we set $K = 1$ and choose the natural logarithm. When building the sum over all states, the limits of the summation ($i = 1, \ldots, m$) can be omitted and H can formally be written as a function of the probability distribution:

$$H(P) = -\sum_i p_i \ln p_i \qquad (3)$$

Previous papers [1,2,3,4,5,6,7,8] worked out that this measure has all the properties of thermodynamic entropy as introduced by statistical physics. Throughout this paper we call $H(P)$ as defined in Equation (3) the Shannon entropy of the system under consideration, in order to distinguish it from thermodynamic entropy.

The range of $H(P)$ is given by $0 \le H(P) \le \ln m$. The value zero is attained for a distribution in which one of the $p_i$ equals 1 and, because of the normalization condition (2), all other $p_i$ are zero. The maximum value is attained for uniformly distributed probabilities [6]:

$$p_i = \frac{1}{m} \qquad (4a)$$

$$H_{\max} = \ln m \qquad (4b)$$

1.1. Compound Systems

We consider a compound system composed of N subsystems, each characterized by its individual probability distribution:

$$P_1, P_2, \ldots, P_N$$

The state of the compound system is defined by the states of the subsystems. We therefore write the probability distribution of the compound system as

$$P_c = \{p_{i_1 i_2 \ldots i_N}\} \qquad (5)$$

where $p_{i_1 i_2 \ldots i_N}$ is the probability of the compound state in which subsystem 1 is in the state $i_1$, subsystem 2 is in the state $i_2$, and so on. It is not necessary for the subsystems to have identical probability distributions.

Generally the probability of the compound state $(A, B)$, comprising the states A and B of two subsystems, is given by

$$p(A, B) = p(A|B)\, p(B)$$

where $p(A|B)$ is the probability of subsystem 1 to be in state A, given that subsystem 2 is in state B. If the subsystems are statistically independent, i.e., $p(A|B) = p(A)$, then it follows (cf. [9,10]):

$$p(A, B) = p(A)\, p(B) \qquad (6)$$

If all N subsystems comprising the considered compound system are statistically independent, straightforward application of Equation (6) to the probability distribution (5) gives:

$$p_{i_1 i_2 \ldots i_N} = p_{1,i_1}\, p_{2,i_2} \cdots p_{N,i_N}$$

where $p_{j,i_j}$ denotes the probability of subsystem j to be in its state $i_j$. With this probability distribution the Shannon entropy of the compound system is:

$$H_c = H(P_c) = \sum_{j=1}^{N} H(P_j) \qquad (7)$$

Hence the Shannon entropy of independent subsystems is additive. In the special case of N equal and statistically independent subsystems, i.e.,

$$P_1 = P_2 = \ldots = P_N = P_s$$

the homogeneity of the Shannon entropy of the compound system follows, with $H_s = H(P_s)$:

$$H_c = N\, H_s \qquad (8)$$

Throughout this paper the index s is used for single systems and c for compound systems.
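The additivity of the Shannon entropy for statistically independent subsystems can be checked numerically. The following is a minimal sketch, not part of the original derivation: the three example distributions and the helper names `shannon_entropy` and `joint_distribution` are chosen here purely for illustration.

```python
import itertools
import math

def shannon_entropy(probs):
    """Shannon entropy with K = 1 and natural logarithm; p = 0 terms contribute 0."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def joint_distribution(distributions):
    """Probabilities of all compound states of statistically independent subsystems.

    Each compound probability is the product of the subsystem probabilities.
    """
    return [math.prod(combo) for combo in itertools.product(*distributions)]

# Three illustrative subsystems with different numbers of states (assumed values).
subsystems = [[0.5, 0.5], [0.2, 0.3, 0.5], [0.1, 0.9]]

joint = joint_distribution(subsystems)
h_compound = shannon_entropy(joint)
h_sum = sum(shannon_entropy(p) for p in subsystems)

print(f"H(compound) = {h_compound:.12f}")
print(f"sum of H(subsystems) = {h_sum:.12f}")
```

The two printed values should agree up to floating-point rounding, and the subsystems do not need identical distributions for this to hold.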

1.2. The Bridge to Thermodynamic Entropy

Considering a compound system composed of N equal and statistically independent subsystems, all subsystems are characterized by the probability distribution $P_s$, and homogeneity, Equation (8), is guaranteed. For a large number of subsystems, i.e., $N \to \infty$, the probabilities $p_i$ can be expressed as relative occupation numbers, with $N_i$ designating the number of subsystems residing in the state i:

$$p_i = \frac{N_i}{N} \qquad (9)$$

with

$$\sum_i N_i = N$$

the total number of subsystems within the compound system, playing the role of the normalization condition (2). Expressing the Shannon entropy of a single subsystem with the occupation numbers in Equation (9) gives

$$H_s = -\sum_i \frac{N_i}{N} \ln \frac{N_i}{N}$$

and because of homogeneity, Equation (8), the Shannon entropy of the compound system is:

$$H_c = N H_s = N \ln N - \sum_i N_i \ln N_i \qquad (10)$$

The right hand side of Equation (10) has the same form as the logarithm of the thermodynamic probability W known from classical statistical mechanics [11]:

$$\ln W = \ln \frac{N!}{\prod_j N_j!} \approx N \ln N - \sum_j N_j \ln N_j \qquad (11)$$

The variables $N_j$ in Equation (11) give the number of particles in cell j of the μ-space, and have the very same meaning as the occupation numbers $N_i$ in Equation (10), i.e., the numbers of particles residing in the (mechanical) state i. The set $\{N_j\}$ is called the occupation of the μ-space, and the thermodynamic probability W is the number of microstates realizing the given occupation. Hence, the left hand sides of Equations (10) and (11) stand for the same measure and can be combined to:

$$H_c = \ln W$$

Table 1 compares the concepts behind the two measures. Because of

$$S = k_B \ln W$$

where S is the thermodynamic entropy of the system and $k_B$ is the Boltzmann constant, we get the result:

$$S = k_B H_c$$

This equation reveals the equivalence between Shannon entropy and thermodynamic entropy related by the Boltzmann constant.
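How closely N times the Shannon entropy of a single subsystem approaches the logarithm of the thermodynamic probability can be illustrated numerically. The sketch below assumes the usual multinomial form W = N! / (N_1! N_2! ...) and evaluates ln W exactly via the log-gamma function; the occupation ratio 2:3:5 and the function names are illustrative assumptions.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy with K = 1 and natural logarithm."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def log_thermodynamic_probability(occupation):
    """ln W = ln(N! / prod_j N_j!), evaluated without Stirling's formula."""
    n_total = sum(occupation)
    return math.lgamma(n_total + 1) - sum(math.lgamma(n_j + 1) for n_j in occupation)

def relative_deviation(scale):
    """Relative difference between N * H and ln W for the occupation 2:3:5 times scale."""
    occupation = [2 * scale, 3 * scale, 5 * scale]
    n_total = sum(occupation)
    probs = [n_j / n_total for n_j in occupation]
    n_times_h = n_total * shannon_entropy(probs)
    ln_w = log_thermodynamic_probability(occupation)
    return abs(n_times_h - ln_w) / n_times_h

for scale in (10, 1000, 100000):
    print(f"N = {10 * scale:>7d}: relative deviation = {relative_deviation(scale):.2e}")
```

The deviation shrinks as the number of subsystems grows, which is the regime in which Stirling's formula is applied to N and to all occupation numbers.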

Table 1. Comparison between Shannon entropy H and thermodynamic probability W.

| Shannon entropy H | Thermodynamic probability W |
|---|---|
| Probability distribution: $P = \{p_i\}$ | Occupation: $\{N_j\}$ |
| Assumption: equal, statistically independent subsystems | Assumption: Stirling’s formula applied to N and to all $N_j$ |

1.3. Additivity of Shannon Entropy: Its Significance for Thermodynamic Modeling

Homogeneity of a compound system, Equation (8), is the starting point for thermodynamic modeling based on the states of its constituents. On the one hand, as shown in section 1.2, it builds the bridge between Shannon entropy and the classical thermodynamic entropy. On the other hand, the crucial property of additivity enables the calculation of thermodynamic entropy simply by calculating the Shannon entropy of a single subsystem. Subsequently, the compound system’s entropy can be expressed by the sum of entropies of the constituting subsystems.

Applied to a gas or fluid, this means deriving the Shannon entropy of one atom or molecule based on its respective states. When speaking of molecular or discrete states we do not necessarily mean the quantum-mechanical states of atoms or molecules; the mechanical, continuous states of atoms or molecules are also possible candidates. However, when using the discrete formulation of Shannon entropy, Equation (1), a discretization of the continuous states is helpful.

When applying lattice models for describing condensed phase systems, the goal reduces to deriving the Shannon entropy of a single lattice site. However, when deriving the Shannon entropy of a compound system by utilizing homogeneity, Equation (8), we made the following assumptions:

The first is the assumption of statistically independent subsystems, which may be plausible for many thermodynamic systems as long as the subsystems (the particles) are ‘not too strongly correlated in some nonlocal sense’ [10].

We did not emphasize the second assumption, because it seems to be self-evident: We used the probability distributions as given system variables, as if they were properties of the subsystems, which - for statistically independent systems - stay constant. But as known from classical thermodynamics, the entropy of a system depends on system variables like internal energy, temperature, pressure and so on. In addition, entropy is not predefined directly by these variables, but underlies a maximization principle, stating that a system in thermodynamic equilibrium resides in a state where entropy is a maximum, with respect to the constraints given by the system variables. This means that we cannot deal a priori with given probability distributions, but we have to determine the very probability distribution which maximizes entropy with respect to the constraints. Hence, if we ask for validity of additivity, Equation (8), we have to investigate the maximized Shannon entropies of a compound system and its constituting single systems separately, as shown in the following section.

2. Probability Distributions with Maximum Entropy

2.1. Maximization of Unconstrained Systems

As can be seen from Equation (4b), the maximum value of the Shannon entropy of unconstrained systems, i.e., of uniformly distributed states, depends solely on the number of their possible states. For a single system with m possible states we get $H_s = \ln m$. In the case of a compound system consisting of N single systems, each with m possible states, the number of possible states is $m^N$, resulting in a Shannon entropy of

$$H_c = \ln m^N = N \ln m$$

i.e., N times the Shannon entropy of the single system (cf. Equation (4b)). So for unconstrained systems homogeneity, Equation (8), is guaranteed.

2.2. Constrained Maximization of a Single System

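Now a single system with m possible states is considered, each of them characterized by the value $f_i$ of a random variable F. Let one constraint be given by the mean value $\langle F \rangle$ of the random variable F:

$$\langle F \rangle = \sum_i p_i f_i \qquad (12)$$

Many probability distributions may result in the same mean value $\langle F \rangle$. Among these probability distributions we are looking for the one yielding the maximum value for the Shannon entropy. The maximizing probability distribution and the resulting Shannon entropy will depend on the exact choice for $\langle F \rangle$, so that both can be expressed as functions of this constraint:

$$P_s = P_s(\langle F \rangle), \qquad H_s = H_s(\langle F \rangle)$$

The maximizing probability distribution considering constraint (12) and the normalization condition (2) can be found by applying Lagrange’s method of constrained extremalization [12]. This method introduces the Lagrange function

$$L = H(P) - \lambda_0 \left( \sum_i p_i - 1 \right) - \lambda_1 \left( \sum_i p_i f_i - \langle F \rangle \right) \qquad (13)$$

where $\lambda_0$ and $\lambda_1$ are the Lagrangian multipliers. L has to be maximized by equating the derivatives with respect to all $p_i$ to zero:

$$\frac{\partial L}{\partial p_i} = 0$$

With $H(P)$ from Equation (3) and performing all derivatives we get:

$$p_i = e^{-1-\lambda_0}\, e^{-\lambda_1 f_i} \qquad (14)$$

Inserting Equation (14) into the normalization condition (2) yields:

$$e^{-1-\lambda_0} \sum_i e^{-\lambda_1 f_i} = 1 \qquad (15)$$

Inserting Equation (14) into constraint (12) results in:

$$e^{-1-\lambda_0} \sum_i f_i\, e^{-\lambda_1 f_i} = \langle F \rangle \qquad (16)$$

Using the abbreviation $X = e^{-\lambda_1}$, combination of Equations (15) and (16) yields:

$$\sum_i \left( f_i - \langle F \rangle \right) X^{f_i} = 0 \qquad (17)$$

This equation can be solved numerically for X, which can now be used to express the maximizing probability distribution. For that purpose, Equations (14) and (15) can be rewritten as:

$$p_i = e^{-1-\lambda_0}\, X^{f_i}, \qquad e^{-1-\lambda_0} = \frac{1}{\sum_j X^{f_j}}$$

Combining these equations yields the maximizing probability distribution

$$p_i = \frac{X^{f_i}}{\sum_j X^{f_j}} \qquad (18)$$

Inserting (18) into definition (3) results in:

$$H_s = -\sum_i \frac{X^{f_i}}{\sum_j X^{f_j}} \ln \frac{X^{f_i}}{\sum_j X^{f_j}}$$

The denominator of the first factor does not depend on the index i of the outer sum and can be put in front of the sum. The logarithm of the fraction is now written as a sum of two terms:

$$H_s = -\frac{1}{\sum_j X^{f_j}} \sum_i X^{f_i} \left( f_i \ln X - \ln \sum_j X^{f_j} \right)$$

Further rearrangement finally yields:

$$H_s = \ln \sum_i X^{f_i} - \langle F \rangle \ln X \qquad (19)$$

This value represents the maximum value of the Shannon entropy among all probability distributions compatible with the normalization condition and the constraint, Equation (12).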
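The constrained maximization of a single system can be sketched numerically. The example below assumes one common sign convention, in which the maximizing probabilities are proportional to exp(-lam * f_i) with a single multiplier `lam`, so that X = exp(-lam); the convention, the bisection solver and all names are assumptions made for illustration, not taken from the derivation above. The sample values f = 0, 1, 2, 3 and the mean value 1 are likewise arbitrary.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy with K = 1 and natural logarithm."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def maxent_distribution(values, target_mean, iterations=200):
    """Return (probs, lam) with probs[i] proportional to exp(-lam * values[i])
    such that the mean of values under probs equals target_mean."""
    if not min(values) < target_mean < max(values):
        raise ValueError("target_mean must lie strictly between min and max of values")

    def distribution(lam):
        exponents = [-lam * v for v in values]
        top = max(exponents)                      # subtract the maximum for stability
        weights = [math.exp(e - top) for e in exponents]
        total = sum(weights)
        return [w / total for w in weights]

    def mean(lam):
        return sum(p * v for p, v in zip(distribution(lam), values))

    # The mean decreases monotonically with lam, so a bracket can be found by doubling.
    lo, hi = -1.0, 1.0
    while mean(lo) < target_mean:
        lo *= 2.0
    while mean(hi) > target_mean:
        hi *= 2.0
    for _ in range(iterations):                   # plain bisection on lam
        mid = 0.5 * (lo + hi)
        if mean(mid) > target_mean:
            lo = mid
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return distribution(lam), lam

values = [0.0, 1.0, 2.0, 3.0]
probs, lam = maxent_distribution(values, target_mean=1.0)
entropy = shannon_entropy(probs)

# Closed form under the assumed convention: ln(sum_i X^f_i) - <F> ln X with X = exp(-lam).
x = math.exp(-lam)
closed_form = math.log(sum(x ** f for f in values)) - 1.0 * math.log(x)
print(probs, entropy, closed_form)
```

Any other distribution with the same mean, for instance (0.4, 0.3, 0.2, 0.1), should give a smaller entropy than the value computed here.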

2.3. Constrained Maximization of a Compound System

Given a compound system composed of N single systems as mentioned in the last section, each of which can reside in either of m possible states and with each state i being assigned a value of a discrete random variable F, the number of possible states is . With the probabilities of the compound system, where k denotes the state, when the single system 1 is in state , single system 2 is in state and so on: , the probabilities can be rewritten as:

with

Let G be a random variable related to the states of the compound system, and be the according value of G in the state k. is chosen in such a way, that it represents the sum of the random variables F of the single systems:

with the value of F of particle 1 in state and so on. The mean value of G, which will act as constraint for the compound system, is (cf. Equation (12)):

The mean value of the compound system is the sum of the mean values of the single systems. If the single systems are equal, their mean value is the same:

resulting in

Now we are looking for the probability distribution which fulfills the normalization condition, guarantees the mean value given by the constraint, and yields the maximum value for the Shannon entropy. The result will depend on the constraint :

Again applying Lagrange’s method, the solutions (17), (18) and (19) of the single system can be reused by replacing , , and considering that the number of states is now . With being the solution of (cf. Equation (17))

the probabilities of the compound system are (cf. Equation (18))

and the Shannon entropy of the compound system is (cf. Equation (19)):

Inserting Equation (21) into Equation (28) results in:

as explicitly derived in the supplementary material. Taking into account Equation (26) we get:

By comparing Equation (32) with Equation (17) one can see that the solutions and fulfill the same equations; they are equal and their subscripts can therefore be omitted:

Now we again use Equation (21) and insert it into Equation (30). The result is:

with intermediate steps given in the supplementary material. The same expression can be obtained when Equations (20) and (21) are inserted into the probabilities, Equation (29), resulting in

and using this for calculating Shannon entropy by means of Equation (3). This alternative derivation is also included in the supplementary material.

Because of the equivalence of and , Equation (33), we can rewrite Equations (19) and (34):

Comparing both these equations yields the result:

Equation (36) illustrates that homogeneity is also fulfilled for compound systems underlying an extremalization principle with respect to one constraint. The crucial assumption we made is that the constraint of the subsystems and the constraint of the compound system obey equation (21).
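The homogeneity under one constraint can be checked by brute force for a small compound system. The following sketch enumerates all compound states of N = 3 equal subsystems with m = 4 states each, assigns each compound state the sum of the subsystem values, and constrains the compound mean to N times the single-system mean. The sign convention (probabilities proportional to exp(-lam * value)), the solver and the numbers are illustrative assumptions.

```python
import itertools
import math

def shannon_entropy(probs):
    """Shannon entropy with K = 1 and natural logarithm."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def solve_maxent(values, target_mean, iterations=200):
    """Maximum-entropy probabilities proportional to exp(-lam * value) with the given mean."""
    if not min(values) < target_mean < max(values):
        raise ValueError("target_mean must lie strictly between min and max of values")

    def distribution(lam):
        exponents = [-lam * v for v in values]
        top = max(exponents)                      # subtract the maximum for stability
        weights = [math.exp(e - top) for e in exponents]
        total = sum(weights)
        return [w / total for w in weights]

    def mean(lam):
        return sum(p * v for p, v in zip(distribution(lam), values))

    lo, hi = -1.0, 1.0
    while mean(lo) < target_mean:                 # mean decreases with lam
        lo *= 2.0
    while mean(hi) > target_mean:
        hi *= 2.0
    for _ in range(iterations):                   # bisection on lam
        mid = 0.5 * (lo + hi)
        if mean(mid) > target_mean:
            lo = mid
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return distribution(lam), lam

f_values = [0.0, 1.0, 2.0, 3.0]   # values of F in the m = 4 states of a single system
mean_f = 1.0                      # constraint of the single system
n_subsystems = 3

# Single system.
p_single, lam_single = solve_maxent(f_values, mean_f)

# Compound system: one value of G per compound state, the sum of the subsystem values.
g_values = [sum(combo) for combo in itertools.product(f_values, repeat=n_subsystems)]
p_compound, lam_compound = solve_maxent(g_values, n_subsystems * mean_f)

h_single = shannon_entropy(p_single)
h_compound = shannon_entropy(p_compound)
print(h_compound, n_subsystems * h_single, lam_single, lam_compound)
```

Both systems should yield the same multiplier, and the compound entropy should equal N times the single-system entropy up to rounding, although the compound maximization was carried out over all 64 compound states without assuming independence beforehand.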

2.4. Systems Underlying Several Constraints

To be more general we consider systems under several constraints, again beginning with a single system. Let α be the number of random variables as constraints, and m the number of possible states. The value of in the state i is , the according value of is etc. The constraints are given by the mean values of the random variables, with

Straightforward application of Lagrange’s method of constrained extremalization results [1.0] in (cf. Equation (18)):

with the abbreviation

and the being the solutions of the following system of equations (cf. Equation (17)):

Calculating the Shannon entropy with the probabilities given by Equation (37) yields (cf. Equation (19)):

We consider a compound system composed of N of these single systems, with α random variables , which are associated to the random variables of the single systems in the same way indicated by Equations (22), (23), (24), (25) and (26). The value of in the state k is , the according value of is and so on, and they are related to the random variables of the single system by (cf. Equation (21)):

The constraints are given as the mean values of the random variables, with

Straightforward application of Lagrange’s method of constrained extremalization results in (cf. Equations (18) and (37)):

with the abbreviation

and with the being the solutions of the following system of equations (cf. Equations (17) and (39)):

Calculating the Shannon entropy with the probabilities given by Equation (43) yields (cf. Equations (19) and (40)):

According to Equation (42) we replace the right hand side of the first equation of system (45) with ,

and all other equations of system (45) with the according expressions. In the definition of the we replace the exponents according to Equation (41) and insert the expression into Equation (47). The evaluation yields:

with defined similarly to for the single system, Equation (38), but now with the factors :

The system of equations for for the single system, Equation (39), is the same as for the for the compound system, Equation (48), resulting in:

Now, replacing all Y-factors in the definition of the , (44), with the according X-factors results in:

Inserting these expressions into the Shannon entropy of the compound system, Equation (46), we get the result:

and comparing with Equation (40) we have

It is therefore proven that the Shannon entropy of compound systems underlying several constraints is homogeneous if the constraints behave according to Equations (41) and (42).

Equations (21) and (26) for systems underlying one constraint as well as Equations (41) and (42) for systems underlying several constraints reveal that homogeneity of Shannon entropy is guaranteed, if the constraints behave homogeneously, i.e., in a linear dependence of the number of subsystems:

We can therefore conclude that the assumption of independent subsystems and the assumption of a compound system subject to a maximization principle with respect to additive constraints both lead to the same important result: the homogeneity of the Shannon entropy of the compound system, hence the homogeneity of the modeled thermodynamic entropy. Both assumptions also act as prerequisites for homogeneity and can be regarded as complementary views of the same property of a compound system; neither of those aspects is preferred to the other.

3. Application to Thermodynamic Modeling of Fluid Phases

Complementing the previous sections, where considerations were established for single and compound systems in general, this section discusses the application to thermodynamic modeling of fluid phases. These models describe the systems under consideration from the viewpoint of their possible states. For this purpose we consider the limiting cases of fluid phases: the ideal gas model and the condensed phase lattice system.

3.1. Ideal Gas

The ideal gas can be considered to be a compound system, consisting of a huge number of ideal and equal particles with no interactions among them. These particles are treated as the subsystems of the compound system. The mechanical state of a particle in the sense of classical mechanics is given by its position and velocity vectors, so the state is described by 6 random variables. The kinetic state of the ideal particle does not depend on its position and vice versa, so the position and velocity vectors are independent random variables. Consequently, as discussed in section 2.4, this leads to two statistically independent probability distributions, and the Shannon entropy splits in a kinetic and a positional term:

Therefore, maximizing H can be split by maximizing and separately. We restrict our considerations to the maximization of the kinetic term, which still comprises three random variables: one for the velocity and two for the direction of the movement. Assuming isotropy for the ideal gas means that the distribution of the kinetic energy does not depend on the direction of the movement. Hence we can again argue that the velocity is independent from the two other random variables, and we restrict our considerations to the kinetic states of the particles, defined only by the mean of their velocity, equivalent to their kinetic energy e. The kinetic energy is in fact a continuous variable. But in order to use the discrete formulation of Shannon entropy, Equation (1), we can discretize it by introducing an arbitrarily small energy quantum :

The discretized energy is meant only as a mathematical artifice. In the limit all possible continuous states can be represented. The kinetic, discrete state k of the ideal gas is then defined by the kinetic states of the particles: . Obviously, the kinetic energy of the whole system in the state k is the sum of the kinetic energies of the single particles:

cf. Equations (21) and (41), and the additivity of the kinetic energy acting as constraint follows immediately as

in accordance with Equations (26) and (42), and therefore additivity of the Shannon entropy of the kinetic term is guaranteed, cf. with Equation (36):

This result is the basis for the discrete modeling of the ideal gas, to be presented in a subsequent paper.

3.2. Condensed Phase Lattice Systems

Lattice systems are mostly applied to strongly interacting condensed phases where molecular distances correspond to the liquid or solid state. Many engineering models used in process simulators for chemical-engineering purposes, such as activity coefficient models or equations of state, are originally based on lattice models [13,14,15]. One of the reasons for this is that such models can easily be verified by Monte-Carlo simulations, which facilitates model development and verification. Therefore, in the following the peculiarities of lattice systems shall be discussed from the viewpoint of Shannon entropy.

A lattice system provides fixed sites. In the simplest case, each site is occupied by one molecule, which interacts with its closest neighbors. In the following we apply the maximization presented in section 2 to an exemplary, one-dimensional lattice, comprising molecules of two types.

In terms of section 2, in the following the whole lattice is considered as the compound system, composed of sites which represent the subsystems.

3.2.1. The concept of subsystems applied to a lattice system

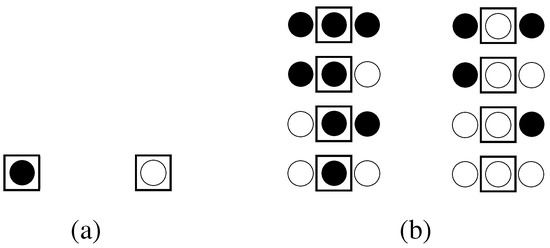

The simplest way to define a subsystem in terms of section 1.1 for a lattice is to use a single lattice site isolated from its adjacent neighbors. In a linear lattice system with two components such an isolated site has 2 possible discrete states, as illustrated in Figure 1(a).

Figure 1. All possible discrete states of a single lattice site as subsystem of a linear lattice system when observed (a) isolated from its z nearest neighbors and (b) associated with its neighbors. In a linear lattice, considering only the nearest neighbors, $z = 2$.

However, the concept of an isolated lattice site which does not take its nearest, interacting neighbors into account does not allow for the formulation of constraints including interaction energies between sites, even though such constraints are essential for model development. For this reason, we introduce the concept of a single lattice site associated with its z nearest neighbors as subsystem, z representing the coordination number. The subsystem comprises ($z + 1$) sites, as illustrated in Figure 1(b). In this concept, the discrete state of a lattice site is determined not only by its own molecule type but also by the type and arrangement of the z nearest neighbors contributing to energetic interactions. Here it is assumed that molecular interactions are confined to the nearest neighbors of the central molecule. If molecules beyond the direct neighbors also contribute to interactions, the concept of associated sites can be extended accordingly.

3.2.2. The unconstrained system

Shannon entropy of a subsystem: Without consideration of any constraints, it follows from Equation (4a) that both of the two possible states shown in Figure 1(a) have the same probability, $p_i = 1/2$, corresponding to a system with an equal number of black and white sites. Equation (4b) for an isolated site with 2 possible states yields

$$H_s = \ln 2 \qquad (51)$$

For an associated site shown in Figure 1(b), consisting of ($z + 1$) sites, again according to Equation (4a), all possible states are equally probable. The number of possible states is now $2^{z+1}$, yielding the maximum Shannon entropy of

$$H_s = \ln 2^{z+1} = (z + 1) \ln 2 \qquad (52)$$

which is the ($z + 1$)-fold of the Shannon entropy of the isolated site given by (51).

In a next step, the possibility of expressing the Shannon entropy of a compound lattice system by the Shannon entropy of its constituting subsystems shall be examined.

Shannon entropy of a compound system: When distributing two types of molecules over a lattice comprising N sites, the number of possible states is given by $2^N$, yielding the maximum Shannon entropy of

$$H_c = \ln 2^N = N \ln 2 \qquad (53)$$

which is the N-fold of the maximum Shannon entropy of an isolated site, cf. Equation (51). When considering associated sites as subsystems, where a subsystem consists of ($z + 1$) sites, the number of subsystems is

$$N_s = \frac{N}{z + 1}$$

Now the number of states of the compound system is $2^{(z+1) N_s}$, and the according Shannon entropy yields

$$H_c = \ln 2^{(z+1) N_s} = N_s\, (z + 1) \ln 2 \qquad (54)$$

which is the $N_s$-fold of the maximum Shannon entropy of an associated site, cf. Equation (52).

Equations (52)-(54) reveal the homogeneity of the Shannon entropy of unconstrained lattice systems, cf. Equation (8):

$$H_c = N H_s$$

where now $H_c$ denotes the Shannon entropy of the whole lattice as compound system, $H_s$ is the Shannon entropy of the considered subsystem, i.e., isolated or associated site, and N is the number of subsystems.
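The state counting for the unconstrained lattice can be reproduced by explicit enumeration. The sketch below assumes a linear lattice with coordination number 2 and 12 sites, partitioned into non-overlapping associated sites of 3 sites each; the lattice size, the partitioning and the helper name `count_states` are illustrative assumptions.

```python
import itertools
import math

def count_states(n_sites, n_types=2):
    """Count all unconstrained occupations of n_sites lattice sites by enumeration."""
    return sum(1 for _ in itertools.product(range(n_types), repeat=n_sites))

z = 2                                   # coordination number of a linear lattice
n_sites = 12                            # total number of sites, a multiple of z + 1
n_associated = n_sites // (z + 1)       # number of associated-site subsystems

h_isolated = math.log(count_states(1))          # one isolated site
h_associated = math.log(count_states(z + 1))    # one associated site with z + 1 sites
h_lattice = math.log(count_states(n_sites))     # whole lattice as compound system

print(h_lattice, n_sites * h_isolated, n_associated * h_associated)
```

All three printed values should coincide up to rounding, whichever of the two subsystem definitions is used.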

3.2.3. System considering constraints

There are basically three types of constraints to be considered in a lattice system: Energy, composition and the equivalence of contact pairs between molecules of different types.

Energy: As mentioned at the beginning of section 3.2, the intended purpose of lattice systems is the consideration of interaction energies between adjacent lattice sites. Therefore, the concept of a single lattice site associated with its nearest neighbors was introduced in section 3.2.1. to be used as subsystem. Based on this concept, constraints considering interaction energies can be formulated generically in the form

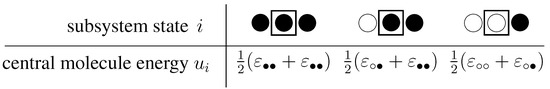

which is in line with (12), designating the energy assigned to the central molecule of an associated site, its probability of residing in state i and the mean value of energy. Figure 2 illustrates this nomenclature.

Figure 2. Examples for states and related energies of an associated lattice site as subsystem. ε denotes the interaction energy between two sites, where each site is assigned half of it.

To formulate the energy assigned to a compound lattice consisting of N associated sites as subsystems, we use the index k to denote the state of the compound system which is determined by the states of its subsystems: . With this nomenclature, the energy of a compound system in state k is , where

which is analogous to (21). Recalling equations (22) to (25) for the mean values of energies, it follows that

Because all single subsystems are of the same kind and no single subsystem is preferred to another, their mean value is the same, resulting in

which is analogous to (26). Using (56) as the only constraint aside from the normalization of probabilities, maximization of the Shannon entropy analogous to (13) requires solution of the Lagrange function

Application of the maximization principle to (57) in line with (27) - (34) finally results in

as Shannon entropy of the compound lattice system, analogously to Equation (36). Equation (58) reveals that the Shannon entropy of a constrained lattice system can also be expressed through the Shannon entropy of subsystems, whereupon subsystems are single lattice sites associated with their respective nearest neighbors. As shown in section 2.4, several functions with the generic form of equation (55) can also be considered as constraints in the maximization principle.

Composition: In the simplest case of a binary system, there are molecules of two types constituting the lattice. The constraint for the compound system is simply , the total number of 1-molecules. can be interpreted in two ways: as relative fraction of 1-molecules in the system, or as probability to find a 1-molecule at a given site. Hence, if we consider compound systems of identical composition, behaves according to Equation (49), fulfilling the prerequisites for constraints that enable homogeneity of Shannon entropy. The same holds for which is related to by the normalization condition. This can easily be extended to systems comprising an arbitrary number of components.

Equivalence of contact pairs: Contact pairs designate the number of contacts between molecules of different types in the system, e.g. (read ’2 around 1’) the number of all 2-molecules around all molecules of type 1, and the number of all 1-molecules around all molecules of type 2. As the number of contacts between molecules of different types must not depend on the viewpoint, the equivalence has to be fulfilled in lattice systems generally, independent of the respective size. The according constraint for the maximization principle is

This equating to zero is a homogeneous function in terms of Equation (49).
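That the equivalence of contact pairs holds independently of the configuration can be verified by enumeration on a small lattice. The sketch below assumes a linear lattice with periodic boundary conditions (a ring), so that every site has exactly two neighbors; the names `n_21` and `n_12` for the two contact counts and the function `contact_counts` are hypothetical labels introduced for this example.

```python
import itertools

def contact_counts(lattice):
    """Count unlike contacts on a periodic linear lattice (z = 2).

    Returns (n_21, n_12): the number of type-2 neighbors around all type-1
    molecules, and the number of type-1 neighbors around all type-2 molecules.
    """
    n = len(lattice)
    n_21 = n_12 = 0
    for site, molecule in enumerate(lattice):
        for neighbor in (lattice[(site - 1) % n], lattice[(site + 1) % n]):
            if molecule == 1 and neighbor == 2:
                n_21 += 1
            elif molecule == 2 and neighbor == 1:
                n_12 += 1
    return n_21, n_12

# Check every configuration of an 8-site ring with molecules of types 1 and 2.
all_equal = all(
    contact_counts(config)[0] == contact_counts(config)[1]
    for config in itertools.product((1, 2), repeat=8)
)
print(all_equal)
```

Each unlike contact is seen once from the type-1 side and once from the type-2 side, which is why the two counts agree for every configuration and every lattice size.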

In summary, all three types of constraints are homogeneous in terms of Equation (49), ensuring that after maximization the Shannon entropy of a compound system is also homogeneous. The complete Lagrange function finally comprises the mean values of energy, composition and equivalence of contact pairs as constraints to be considered in a lattice model. Practical application will be shown in a subsequent paper.

4. Conclusions

The scope of this paper was to clarify prerequisites for applying the concept of Shannon entropy and maximum entropy principle to thermodynamic modeling of extensive systems. The main criterion for applicability of this kind of modeling is the additivity of the Shannon entropy. It was shown that this additivity is guaranteed, provided that the additivity of the constraints is given. If a thermodynamic model comprises additive constraints, this prerequisite is fulfilled, and the method is explicitly applicable to systems of interacting components, i.e., real fluids. This was shown for two limiting cases of fluid phases, the ideal gas model and condensed phase lattice systems.

The main benefit of thermodynamic modeling based on Shannon entropy is that it makes the equilibrium distribution of discrete states available. This establishes new possibilities for thermodynamic and mass transport models as it allows consideration of a more detailed picture of physical behavior of matter on a molecular basis, beyond the scope of traditional modeling methods. This will be exploited in subsequent papers.

Supplementary Materials

Supplementary File 1

Acknowledgments

The authors gratefully acknowledge support from NAWI Graz.

Author Contributions

M.P. contributed section 2 and section 3.1; T.W. contributed section 3.1; section 1 and section 4 were joint contributions of M.P. and T.W. The paper was read and complemented by A.P.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Shannon, C.E. A Mathematical Theory of Communication. Bell Sys. Techn. Journ. 1948, 27, 379–423, 623–656.

2. Jaynes, E.T. Information Theory and Statistical Mechanics. Phys. Rev. 1957, 106, 620–630.

3. Jaynes, E.T. Information Theory and Statistical Mechanics. II. Phys. Rev. 1957, 108, 171–189.

4. Jaynes, E.T. Gibbs vs Boltzmann Entropies. Am. Journ. Phys. 1965, 33, 391–398.

5. Wehrl, A. General Properties of Entropy. Rev. Mod. Phys. 1978, 50, 221–260.

6. Guiasu, S.; Shenitzer, A. The Principle of Maximum Entropy. Math. Intell. 1985, 7, 42–48.

7. Ben-Naim, A. Entropy Demystified; World Scientific Publishing: Singapore, 2008.

8. Ben-Naim, A. A Farewell to Entropy: Statistical Thermodynamics Based in Information; World Scientific Publishing: Singapore, 2008.

9. Curado, E.; Tsallis, C. Generalized Statistical Mechanics: Connection with Thermodynamics. J. Phys. A 1991, 24, L69–L72.

10. Tsallis, C. Nonadditive Entropy: The Concept and its Use. Europ. Phys. Journ. A 2009, 40, 257–266.

11. Maczek, A. Statistical Thermodynamics; Oxford University Press: Oxford, UK, 1998.

12. Wilde, D.J.; Beightler, C.S. Foundations of Optimization; Prentice-Hall: Englewood Cliffs, NJ, USA, 1967.

13. Fowler, R.H.; Kapitza, P.; Mott, N.F.; Bullard, E.C. Mixtures - The Theory of the Equilibrium Properties of Some Simple Classes of Mixtures, Solutions and Alloys; Oxford at the Clarendon Press, 1952.

14. Abrams, D.S.; Prausnitz, J.M. Statistical Thermodynamics of Liquid Mixtures: A New Expression for the Excess Gibbs Energy of Partly or Completely Miscible Systems. AIChE J. 1975, 21, 116–128.

15. Bronneberg, R.; Pfennig, A. MOQUAC, a New Expression for the Excess Gibbs Energy based on Molecular Orientations. Fluid Phase Equilib. 2013, 338, 67–77.

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/3.0/).