1. Introduction

There is a famous analogy between statistical mechanics and quantum mechanics. In statistical mechanics, a system can be in any state, but its probability of being in a state with energy E is proportional to exp(−E/T), where T is the temperature in units where Boltzmann’s constant is one. In quantum mechanics, a system can move along any path, but its amplitude for moving along a path with action S is proportional to exp(−S/iħ), where ħ is Planck’s constant. Therefore, we have an analogy, where making the replacements:

$$E \mapsto S, \qquad T \mapsto i\hbar$$

formally turns the probabilities for states in statistical mechanics into the amplitudes for paths, or “histories”, in quantum mechanics. In statistical mechanics, the strength of thermal fluctuations is governed by T. In quantum mechanics, the strength of quantum fluctuations is governed by ħ.

In statistical mechanics, the probabilities exp(−E/T ) arise naturally from maximizing entropy subject to a constraint on the expected value of energy. Following the analogy, we might guess that the amplitudes exp(−S/iħ) arise from maximizing some quantity subject to a constraint on the expected value of action. This quantity deserves a name, so let us tentatively call it “quantropy”.

In fact, Lisi [5] and Munkhammar [7] have already treated quantum systems as interacting with a “heat bath” of action and sought to derive quantum mechanics from a principle of maximum entropy with amplitudes (or as they prefer to put it, complex probabilities) replacing probabilities. However, seeking to derive amplitudes for paths in quantum mechanics from a maximum principle is not quite correct. Quantum mechanics is rife with complex numbers, and it makes no sense to maximize a complex function. Still, a complex function can have stationary points, where its first derivative vanishes. Therefore, a less naive program is to derive the amplitudes in quantum mechanics from a “principle of stationary quantropy”. We do this for a class of discrete systems and then illustrate the idea with the example of a free particle, discretizing time.

Carrying this out rigorously is not completely trivial. In the simplest case, entropy is defined as a sum involving logarithms. Moving to quantropy, each term in the sum involves a logarithm of a complex number. Making each term well defined requires a choice of branch cut; it is not immediately clear that we can do this and obtain a differentiable function as the result. Additional complications arise when we consider the continuum limit of the free particle. Our treatment handles all these issues.

We begin by reviewing the main variational principles in physics and pointing out the conceptual gap that quantropy fills. In Section 2, we introduce quantropy along with two related quantities: the free action and the expected action. In Section 3, we develop tools for computing all of these quantities. In Section 4, we illustrate our methods with the example of a free particle, and address some of the conceptual questions raised by our results. We conclude by mentioning some open issues in Section 5.

1.1. Statics

Static systems at temperature zero obey the principle of minimum energy. In classical mechanics, energy is often the sum of kinetic and potential energy:

$$E = K + V$$

where the potential energy V depends only on the system’s position, while the kinetic energy K also depends on its velocity. Often, though not always, the kinetic energy has a minimum at velocity zero. In classical mechanics, this lets us minimize energy in a two-step way. First, we minimize K by setting the velocity to zero. Then, we minimize V as a function of position.

While familiar, this is actually somewhat noteworthy. Usually, minimizing the sum of two things involves an interesting tradeoff. In quantum physics, a tradeoff really is required, thanks to the uncertainty principle. We cannot know the position and velocity of a particle simultaneously, so we cannot simultaneously minimize potential and kinetic energy. This makes minimizing their sum much more interesting. However, in classical mechanics, in situations where K has a minimum at velocity zero, statics at temperature zero is governed by a principle of minimum potential energy.

The study of static systems at nonzero temperature deserves to be called “thermostatics”, though it is usually called “equilibrium thermodynamics”. In classical or quantum equilibrium thermodynamics at any fixed temperature, a system is governed by the principle of minimum free energy. Instead of our system occupying a single definite state, it will have different probabilities of occupying different states, and these probabilities will be chosen to minimize the free energy:

$$F = \langle E \rangle - TS$$

Here, 〈E〉 is the expected energy, T is the temperature and S is the entropy. Note that the principle of minimum free energy reduces to the principle of minimum energy when T = 0.

Where does the principle of minimum free energy come from? One answer is that free energy F is the amount of “useful” energy: the expected energy 〈E〉 minus the amount in the form of heat, TS. For some reason, systems in equilibrium minimize this.

Boltzmann and Gibbs gave a deeper answer in terms of entropy. Suppose that our system has some space of states X and that the energy of the state x ∈ X is E(x). Suppose that X is a measure space with some measure dx, and assume that we can describe the equilibrium state using a probability distribution, a function p: X → [0, ∞) with:

$$\int_X p(x)\, dx = 1$$

Then, the entropy is:

$$S = -\int_X p(x) \ln p(x)\, dx$$

while the expected value of the energy is:

$$\langle E \rangle = \int_X E(x)\, p(x)\, dx$$

Now, suppose our system maximizes entropy subject to a constraint on the expected value of energy. Using the method of Lagrange multipliers, this is the same as maximizing S − β〈E〉, where β is a Lagrange multiplier. When we maximize this, we see the system chooses a Boltzmann distribution:

$$p(x) = \frac{\exp(-\beta E(x))}{\int_X \exp(-\beta E(x))\, dx}$$

One could call β the “coolness”, since, working in units where Boltzmann’s constant equals one, it is just the reciprocal of the temperature. Therefore, when the temperature is positive, maximizing S − β〈E〉 is the same as minimizing the free energy:

$$F = \langle E \rangle - TS$$

In summary, every minimum or maximum principle in statics can be seen as a special case or a limiting case of the principle of maximum entropy, as long as we admit that sometimes we need to maximize entropy subject to constraints. This is quite satisfying, because as noted by Jaynes, the principle of maximum entropy is a general principle for reasoning in situations of partial ignorance [4]. Therefore, we have a kind of “logical” explanation for the laws of statics.

1.2. Dynamics

Now, suppose things are changing as time passes, so we are doing dynamics instead of statics. In classical mechanics, we can imagine a system tracing out a path q(t) as time passes from t = t0 to t = t1. The action of this path is often the integral of the kinetic minus potential energy:

$$A(q) = \int_{t_0}^{t_1} \bigl( K(t) - V(t) \bigr)\, dt$$

where K(t) and V(t) depend on the path q. To keep things from getting any more confusing than necessary, we are calling action A instead of the more usual S, since we are already using S for entropy.

The principle of least action says that if we fix the endpoints of this path, that is, the points q(t0) and q(t1), the system will follow the path that minimizes the action subject to these constraints. This is a powerful idea in classical mechanics. In fact, however, the system sometimes merely chooses a stationary point of the action. The Euler–Lagrange equations can be derived just from this assumption. Therefore, it is better to speak of the principle of stationary action.

This principle governs classical dynamics. To generalize it to quantum dynamics, Feynman [2] proposed that instead of our system following a single definite path, it can follow any path, with an amplitude a(q) of following the path q. He proposed this formula for the amplitude:

$$a(q) = \frac{\exp(-A(q)/i\hbar)}{\int \exp(-A(q)/i\hbar)\, Dq}$$

where ħ is Planck’s constant. He showed, in a nonrigorous but convincing way, that when we integrate a(q) over all paths starting at a point x0 at time t0 and ending at a point x1 at time t1, we obtain a result proportional to the amplitude for a particle to go from the first point to the second. He also gave a heuristic argument showing that as ħ → 0, this prescription reduces to the principle of stationary action.

Unfortunately, the integral over all paths is hard to make rigorous, except in certain special cases. This is a bit of a distraction for our discussion now, so let us talk more abstractly about “histories” instead of paths and consider a system whose possible histories form some space X with a measure dx. We will look at an example later.

Suppose the action of the history x ∈ X is A(x). Then, Feynman’s sum over histories formulation of quantum mechanics says the amplitude of the history x is:

$$a(x) = \frac{\exp(-A(x)/i\hbar)}{\int_X \exp(-A(x)/i\hbar)\, dx}$$

This looks very much like the Boltzmann distribution:

$$p(x) = \frac{\exp(-E(x)/T)}{\int_X \exp(-E(x)/T)\, dx}$$

Indeed, the only serious difference is that we are taking the exponential of an imaginary quantity instead of a real one. This suggests deriving Feynman’s formula from a stationary principle, just as we can derive the Boltzmann distribution by maximizing entropy subject to a constraint. This is where quantropy enters the picture.

2. Quantropy

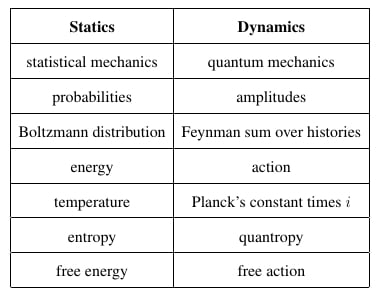

We have described statics and dynamics and a well-known analogy between them. However, we have seen that there are some missing items in the analogy:

| Statics | Dynamics |
|---|---|
| statistical mechanics | quantum mechanics |
| probabilities | amplitudes |
| Boltzmann distribution | Feynman sum over histories |
| energy | action |
| temperature | Planck’s constant times i |
| entropy | ??? |
| free energy | ??? |

Our goal now is to fill in the missing entries in this chart. Since the Boltzmann distribution:

$$p(x) = \frac{\exp(-E(x)/T)}{\int_X \exp(-E(x)/T)\, dx}$$

comes from the principle of maximum entropy, one might hope Feynman’s sum over histories formulation of quantum mechanics:

$$a(x) = \frac{\exp(-A(x)/i\hbar)}{\int_X \exp(-A(x)/i\hbar)\, dx}$$

comes from a maximum principle, as well.

Unfortunately Feynman’s sum over histories involves complex numbers, and it does not make sense to maximize a complex function. So, let us try to derive Feynman’s prescription from a principle of stationary quantropy.

Suppose we have a set of histories, X, equipped with a measure dx. Suppose there is a function a: X → ℂ assigning to each history x ∈ X a complex amplitude a(x). We assume these amplitudes are normalized, so that:

$$\int_X a(x)\, dx = 1$$

since that is what Feynman’s normalization actually achieves. We define the “quantropy” of a by:

$$Q = -\int_X a(x) \ln a(x)\, dx$$

One might fear this is ill-defined when a(x) = 0, but that is not the worst problem; in the study of entropy, we typically set 0 ln 0 = 0. The more important problem is that the logarithm has different branches: we can add any multiple of 2πi to our logarithm and get another equally good logarithm. To deal with this, let us suppose that as we vary the numbers a(x), they do not go through zero. In the end, a(x) will be defined in terms of the exponential of the action, so it will be nonzero. If we only vary it slightly, it will remain nonzero, so we can choose a specific logarithm ln(a(x)) for each number a(x), which varies smoothly as a function of a(x).

To formalize this, we could treat quantropy as depending not on the amplitudes a(x), but on some function b: X → ℂ, such that exp(b(x)) = a(x). In this approach, we require:

$$\int_X e^{b(x)}\, dx = 1$$

and define the quantropy by:

$$Q = -\int_X e^{b(x)}\, b(x)\, dx$$

Then, the problem of choosing branches for the logarithm does not come up. However, we shall take the informal approach where we express quantropy in terms of amplitudes and choose a specific branch for ln a(x), as described above.

Next, let us seek amplitudes a(x) that give a stationary point of the quantropy Q subject to a constraint on the “expected action”:

$$\langle A \rangle = \int_X A(x)\, a(x)\, dx$$

The term “expected action” is a bit odd, since the numbers a(x) are amplitudes rather than probabilities. While one could try to justify this term from how expected values are computed in Feynman’s formalism, we are mainly using it because 〈A〉 is analogous to the expected value of the energy, 〈E〉, which we saw earlier.

Let us look for a stationary point of Q subject to a constraint on 〈A〉, say 〈A〉 = α. To do this, one would be inclined to use Lagrange multipliers and to look for a stationary point of:

$$Q - \lambda \langle A \rangle$$

However, there is another constraint, namely:

$$\int_X a(x)\, dx = 1$$

Therefore, let us write:

$$\langle 1 \rangle = \int_X a(x)\, dx$$

and look for stationary points of Q subject to the constraints:

$$\langle A \rangle = \alpha, \qquad \langle 1 \rangle = 1$$

To do this, the Lagrange multiplier recipe says we should find stationary points of:

$$Q - \lambda \langle A \rangle - \mu \langle 1 \rangle$$

where λ and μ are Lagrange multipliers. The Lagrange multiplier λ is the more interesting one. It is analogous to the “coolness” β = 1/T, so our analogy chart suggests that we should take:

$$\lambda = \frac{1}{i\hbar}$$

We shall see that this is correct. When λ becomes large, our system becomes close to classical, so we call λ the “classicality” of our system.

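Following the usual Lagrange multiplier recipe, we seek amplitudes for which:

$$\frac{\partial}{\partial a(x)} \bigl( Q - \lambda \langle A \rangle - \mu \langle 1 \rangle \bigr) = 0$$

holds, along with the constraint equations. We begin by computing the derivatives we need:

$$\frac{\partial Q}{\partial a(x)} = -(1 + \ln a(x)), \qquad \frac{\partial \langle A \rangle}{\partial a(x)} = A(x), \qquad \frac{\partial \langle 1 \rangle}{\partial a(x)} = 1$$

Thus, we need:

$$-(1 + \ln a(x)) - \lambda A(x) - \mu = 0$$

or:

$$a(x) = \frac{\exp(-\lambda A(x))}{\exp(\mu + 1)}$$

The constraint:

$$\int_X a(x)\, dx = 1$$

then forces us to choose:

$$\exp(\mu + 1) = \int_X \exp(-\lambda A(x))\, dx$$

so we have:

$$a(x) = \frac{\exp(-\lambda A(x))}{\int_X \exp(-\lambda A(x))\, dx}$$

This is precisely Feynman’s sum over histories formulation of quantum mechanics if λ = 1/iħ!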

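Note that the final answer does two equivalent things in one blow:

- It gives a stationary point of quantropy subject to the constraints that the amplitudes integrate to 1 and the expected action takes some fixed value.
- It gives a stationary point of the “free action”, 〈A〉 − iħQ, subject to the constraint that the amplitudes integrate to 1.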

In case the second point is puzzling, note that the “free action” plays the same role in quantum mechanics that the free energy 〈E〉 − TS plays in statistical mechanics. It completes the analogy chart at the beginning of this section. It is widely used in the effective action approach to quantum field theory, though not under the name “free action”: as we shall see, it is simply −iħ times the logarithm of the partition function.

It is also worth noting that when ħ → 0, the free action reduces to the action. Thus, in this limit, the principle of stationary free action reduces to the principle of stationary action in classical dynamics.
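The stationarity claim of this section can be checked numerically on a toy system. The following is a minimal sketch, assuming a finite set of five histories with counting measure, units with ħ = 1, and made-up action values; all names are illustrative. It perturbs the Feynman amplitudes along a direction that preserves both constraints and compares the change in quantropy with the second-order term −(t²/2) Σ δ(x)²/a(x) obtained by Taylor-expanding −a ln a.

```python
import numpy as np

hbar = 1.0                                   # illustrative units (assumption)
lam = 1.0 / (1j * hbar)                      # classicality, lambda = 1/(i*hbar)
A = np.array([0.0, 0.7, 1.3, 2.1, 3.4])      # made-up real actions of 5 histories

# Feynman amplitudes, with the branch ln a(x) = -lam*A(x) - ln Z
Z = np.sum(np.exp(-lam * A))
a = np.exp(-lam * A) / Z
log_a = -lam * A - np.log(Z)

# A direction preserving both constraints: sum(delta) = 0 and sum(A*delta) = 0.
# It is the least-squares residual of an arbitrary vector r against [1, A].
r = np.array([1.0, -2.0, 0.5, 3.0, -1.0])
B = np.column_stack([np.ones_like(A), A])
delta = r - B @ np.linalg.lstsq(B, r, rcond=None)[0]

def quantropy(t):
    """Q at a + t*delta, continuing the chosen branch of the logarithm smoothly."""
    shifted = a + t * delta
    log_shifted = log_a + np.log(1.0 + t * delta / a)   # valid for small t
    return -np.sum(shifted * log_shifted)

def quantropy_change(t):
    """Change in quantropy when moving a distance t along delta."""
    return quantropy(t) - quantropy(0.0)

def second_order_prediction(t):
    """Leading term of the change if the first-order term vanishes."""
    return -0.5 * t**2 * np.sum(delta**2 / a)

if __name__ == "__main__":
    for t in (1e-2, 1e-3, 1e-4):
        print(t, quantropy_change(t), second_order_prediction(t))
```

If the first-order variation did not vanish, the change would scale like t rather than t²; in this sketch, shrinking t by a factor of 10 shrinks the change by roughly a factor of 100.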

3. Computing Quantropy

In thermodynamics, there is a standard way to compute the entropy of a system in equilibrium starting from its partition function. We can use the same techniques to compute quantropy. It is harder to get the integrals to converge in interesting examples. But we can worry about that later, when we do an example.

First, recall how to compute the entropy of a system in equilibrium starting from its partition function. Let X be the set of states of the system. We assume that X is a measure space and that the system is in a mixed state given by some probability distribution p: X → [0, ∞), where, of course:

$$\int_X p(x)\, dx = 1$$

We assume each state x has some energy E(x) ∈ ℝ. Then, the mixed state maximizing the entropy:

$$S = -\int_X p(x) \ln p(x)\, dx$$

with a constraint on the expected energy:

$$\langle E \rangle = \int_X E(x)\, p(x)\, dx$$

is the Boltzmann distribution:

$$p(x) = \frac{e^{-\beta E(x)}}{Z}$$

for some value of the coolness β, where Z is the partition function:

$$Z = \int_X e^{-\beta E(x)}\, dx$$

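To compute the entropy of the Boltzmann distribution, we can thus take the formula for entropy and substitute the Boltzmann distribution for p(x), getting:

$$S = \int_X p(x) \bigl( \beta E(x) + \ln Z \bigr)\, dx = \beta \langle E \rangle + \ln Z$$

Reshuffling this, we obtain a formula for the free energy:

$$F = \langle E \rangle - TS = -T \ln Z$$

Of course, we can also write the free energy in terms of the partition function and β:

$$F = -\frac{1}{\beta} \ln Z$$

We can do the same for the expected energy:

$$\langle E \rangle = \frac{1}{Z} \int_X E(x)\, e^{-\beta E(x)}\, dx = -\frac{1}{Z} \frac{dZ}{d\beta} = -\frac{d}{d\beta} \ln Z$$

This, in turn, gives:

$$S = \ln Z - \beta \frac{d}{d\beta} \ln Z$$

In short, if we know the partition function of a system in thermal equilibrium as a function of β, we can easily compute its entropy, expected energy and free energy.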
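As a quick illustration of this recipe, here is a minimal sketch, assuming a finite state space with counting measure and made-up energy levels (the function names are illustrative). It computes the entropy, expected energy and free energy from ln Z and its β-derivative (approximated by a central difference) and compares them with the values computed directly from the Boltzmann distribution.

```python
import numpy as np

def log_Z(beta, E):
    """ln Z for a finite set of states with energies E (counting measure)."""
    return np.log(np.sum(np.exp(-beta * E)))

def thermo_from_Z(beta, E, h=1e-6):
    """Entropy, expected energy and free energy from ln Z alone."""
    dlogZ = (log_Z(beta + h, E) - log_Z(beta - h, E)) / (2 * h)
    mean_E = -dlogZ                       # <E> = -d(ln Z)/d(beta)
    S = log_Z(beta, E) + beta * mean_E    # S = ln Z - beta * d(ln Z)/d(beta)
    F = -log_Z(beta, E) / beta            # F = -(1/beta) ln Z
    return S, mean_E, F

def thermo_direct(beta, E):
    """The same three quantities computed from the Boltzmann distribution."""
    p = np.exp(-beta * E)
    p = p / p.sum()
    S = -np.sum(p * np.log(p))
    mean_E = np.sum(p * E)
    return S, mean_E, mean_E - S / beta   # F = <E> - T*S with T = 1/beta

if __name__ == "__main__":
    E = np.array([0.0, 1.0, 2.5, 4.0])    # made-up energy levels
    print(thermo_from_Z(0.8, E))
    print(thermo_direct(0.8, E))
```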

Similarly, if we know the partition function of a quantum system as a function of λ = 1/iħ, we can compute its quantropy, expected action and free action. Let X be the set of histories of some system. We assume that X is a measure space and that the amplitudes for histories are given by a function a: X → ℂ obeying:

$$\int_X a(x)\, dx = 1$$

We also assume each history x has some action A(x) ∈ ℝ. In the last section, we saw that to obtain a stationary point of quantropy:

$$Q = -\int_X a(x) \ln a(x)\, dx$$

with a constraint on the expected action:

$$\langle A \rangle = \int_X A(x)\, a(x)\, dx$$

we must use Feynman’s prescription for the amplitudes:

$$a(x) = \frac{e^{-\lambda A(x)}}{Z}$$

for some value of the classicality λ = 1/iħ, where Z is the partition function:

$$Z = \int_X e^{-\lambda A(x)}\, dx$$

As mentioned, the formula for quantropy here is a bit dangerous, since we are taking the logarithm of the complex-valued function a(x), which requires choosing a branch. Luckily, the ambiguity is greatly reduced when we use Feynman’s prescription for a, because in this case, a(x) is defined in terms of an exponential. Therefore, we can choose this branch of the logarithm:

$$\ln a(x) = -\lambda A(x) - \ln Z$$

Once we choose a logarithm for Z, this formula defines ln a(x).

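Inserting this formula for ln a(x) into the formula for quantropy, we obtain:

$$Q = \int_X a(x) \bigl( \lambda A(x) + \ln Z \bigr)\, dx$$

We can simplify this a bit, since the integral of a is 1:

$$Q = \lambda \langle A \rangle + \ln Z$$

We thus obtain:

$$-i\hbar \ln Z = \langle A \rangle - i\hbar Q$$

This quantity is what we called the “free action” in the previous section. Let us denote it by the letter Φ:

$$\Phi = -i\hbar \ln Z$$

In terms of λ, we have:

$$\Phi = -\frac{1}{\lambda} \ln Z$$

Now, we can compute the expected action just as we computed the expected energy in thermodynamics:

$$\langle A \rangle = \frac{1}{Z} \int_X A(x)\, e^{-\lambda A(x)}\, dx = -\frac{d}{d\lambda} \ln Z$$

This gives:

$$Q = \ln Z - \lambda \frac{d}{d\lambda} \ln Z$$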

The following chart shows where our analogy stands now.

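| Statistical Mechanics | Quantum Mechanics |
|---|---|
| states: x ∈ X | histories: x ∈ X |
| probabilities: p: X → [0, ∞) | amplitudes: a: X → ℂ |
| energy: E: X → ℝ | action: A: X → ℝ |
| temperature: T | Planck’s constant times i: iħ |
| coolness: β = 1/T | classicality: λ = 1/iħ |
| partition function: $Z = \int_X e^{-\beta E(x)}\, dx$ | partition function: $Z = \int_X e^{-\lambda A(x)}\, dx$ |
| Boltzmann distribution: $p(x) = e^{-\beta E(x)}/Z$ | Feynman sum over histories: $a(x) = e^{-\lambda A(x)}/Z$ |
| entropy: $S = -\int_X p(x) \ln p(x)\, dx$ | quantropy: $Q = -\int_X a(x) \ln a(x)\, dx$ |
| expected energy: $\langle E \rangle = \int_X p(x) E(x)\, dx$ | expected action: $\langle A \rangle = \int_X a(x) A(x)\, dx$ |
| free energy: $F = \langle E \rangle - TS$ | free action: $\Phi = \langle A \rangle - i\hbar Q$ |
| $\langle E \rangle = -\frac{d}{d\beta} \ln Z$ | $\langle A \rangle = -\frac{d}{d\lambda} \ln Z$ |
| $F = -\frac{1}{\beta} \ln Z$ | $\Phi = -\frac{1}{\lambda} \ln Z$ |
| $S = \ln Z - \beta \frac{d}{d\beta} \ln Z$ | $Q = \ln Z - \lambda \frac{d}{d\lambda} \ln Z$ |
| principle of maximum entropy | principle of stationary quantropy |
| principle of minimum energy (in T → 0 limit) | principle of stationary action (in ħ → 0 limit) |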
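The quantum column of this chart can be exercised on a toy system. The sketch below assumes a finite set of histories with counting measure, made-up real actions, and units with ħ = 1; the function names are illustrative. It computes quantropy, expected action and free action once from ln Z (using the principal branch of the logarithm and a central difference in λ) and once directly from the amplitudes with the branch ln a(x) = −λA(x) − ln Z.

```python
import numpy as np

def log_Z(lam, A):
    """Principal-branch ln Z for a finite set of histories with actions A."""
    return np.log(np.sum(np.exp(-lam * A)))

def from_partition_function(lam, A, h=1e-6):
    """Quantropy, expected action and free action from ln Z alone."""
    d = (log_Z(lam + h, A) - log_Z(lam - h, A)) / (2 * h)   # d(ln Z)/d(lambda)
    mean_A = -d
    Q = log_Z(lam, A) - lam * d
    Phi = -log_Z(lam, A) / lam
    return Q, mean_A, Phi

def from_amplitudes(lam, A):
    """The same quantities from the amplitudes a(x) = exp(-lam*A(x))/Z."""
    Z = np.sum(np.exp(-lam * A))
    a = np.exp(-lam * A) / Z
    log_a = -lam * A - np.log(Z)          # chosen branch of ln a(x)
    Q = -np.sum(a * log_a)
    mean_A = np.sum(a * A)
    Phi = mean_A - Q / lam                # <A> - i*hbar*Q, since 1/lam = i*hbar
    return Q, mean_A, Phi

if __name__ == "__main__":
    hbar = 1.0                            # illustrative units (assumption)
    lam = 1.0 / (1j * hbar)
    A = np.array([0.0, 0.7, 1.3, 2.1, 3.4])   # made-up actions
    print(from_partition_function(lam, A))
    print(from_amplitudes(lam, A))
```

Both routes use the same logarithm of Z, which is why they agree; a different branch for ln Z would shift Q by a multiple of 2πi in both.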

4. The Quantropy of a Free Particle

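Let us illustrate these ideas with an example: a free particle. Suppose we have a free particle on a line tracing out some path as time goes by:

$$q : [t_0, t_1] \to \mathbb{R}$$

Then, its action is just the time integral of its kinetic energy:

$$A(q) = \int_{t_0}^{t_1} \frac{m\, v(t)^2}{2}\, dt$$

where v(t) = dq(t)/dt and m is the mass of the particle. The partition function is then:

$$Z = \int e^{-\lambda A(q)}\, Dq$$

where we integrate an exponential involving the action over the space of all paths.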

Unfortunately, the space of all paths is infinite-dimensional, so Dq is ill-defined: there is no “Lebesgue measure” on an infinite-dimensional vector space. Thus, we start by treating time as discrete, a trick going back to Feynman’s original work [2]. We consider n time intervals of length Δt. We say the position of our particle at the i-th time step is qi ∈ ℝ, and require that the particle keeps a constant velocity vi between the (i − 1)-st and i-th time steps:

$$v_i = \frac{q_i - q_{i-1}}{\Delta t}$$

Then, the action, defined as an integral, reduces to a finite sum:

$$A(q) = \sum_{i=1}^{n} \frac{m\, v_i^2}{2}\, \Delta t$$

We consider histories of the particle where its initial position is q0 = 0, but its final position qn is arbitrary. If we do not “nail down” the particle at some particular time in this way, our path integrals will diverge. Therefore, our space of histories is:

$$X = \{ (q_0, q_1, \dots, q_n) : q_0 = 0 \} \cong \mathbb{R}^n$$

and we are ready to apply the formulas in the previous section.

We start with the partition function. Naively, it is:

$$Z = \int_X e^{-\lambda A(q)}\, Dq$$

where:

$$A(q) = \sum_{i=1}^{n} \frac{m\, (q_i - q_{i-1})^2}{2\, \Delta t}$$

But this means nothing until we define the measure Dq. Since the space of histories is just ℝⁿ with coordinates q1, …, qn, an obvious guess for a measure would be:

$$Dq = dq_1 \cdots dq_n$$

However, the partition function should be dimensionless. The quantity λA(q) and its exponential are dimensionless, so the measure had better be dimensionless, too. However, dq1 ⋯ dqn has units of lengthⁿ. Therefore, to make the measure dimensionless, we introduce a length scale, Δx, and use the measure:

$$Dq = \frac{dq_1 \cdots dq_n}{(\Delta x)^n}$$

It should be emphasized that despite the notation Δx, space is not discretized, just time. This length scale Δx is introduced merely in order to make the measure on the space of histories dimensionless.

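Now, let us compute the partition function. For starters, we have:

$$Z = \int_X e^{-\lambda A(q)}\, Dq = \frac{1}{(\Delta x)^n} \int e^{-\lambda \sum_{i=1}^{n} m\, \Delta t\, v_i^2 / 2}\, dq_1 \cdots dq_n$$

Since q0 is fixed, we can express the positions q1, …, qn in terms of the velocities v1, …, vn. Since:

$$dq_1 \cdots dq_n = (\Delta t)^n\, dv_1 \cdots dv_n$$

this change of variables gives:

$$Z = \left( \frac{\Delta t}{\Delta x} \right)^{n} \int e^{-\lambda \sum_{i=1}^{n} m\, \Delta t\, v_i^2 / 2}\, dv_1 \cdots dv_n$$

However, this n-tuple integral is really just a product of n integrals over one variable, all of which are equal. Thus, we get some integral to the n-th power:

$$Z = \left( \frac{\Delta t}{\Delta x} \int_{-\infty}^{\infty} e^{-\lambda m\, \Delta t\, v^2 / 2}\, dv \right)^{n}$$

Now, when α is positive, we have:

$$\int_{-\infty}^{\infty} e^{-\alpha x^2 / 2}\, dx = \sqrt{\frac{2\pi}{\alpha}}$$

but we will apply this formula to compute the partition function, where the constant playing the role of α is imaginary. This makes some mathematicians nervous, because when α is imaginary, the function being integrated is no longer Lebesgue integrable. However, when α is imaginary, we get the same answer if we impose a cutoff and then let it go to infinity:

$$\lim_{M \to \infty} \int_{-M}^{M} e^{-\alpha x^2 / 2}\, dx = \sqrt{\frac{2\pi}{\alpha}}$$

or damp the oscillations and then let the amount of damping go to zero:

$$\lim_{\epsilon \downarrow 0} \int_{-\infty}^{\infty} e^{-(\alpha + \epsilon) x^2 / 2}\, dx = \sqrt{\frac{2\pi}{\alpha}}$$

So we shall proceed unabashed and claim:

$$Z = \left( \frac{\Delta t}{\Delta x} \sqrt{\frac{2\pi}{\lambda m\, \Delta t}} \right)^{n} = \left( \frac{2\pi\, \Delta t}{\lambda m\, (\Delta x)^2} \right)^{n/2}$$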

Given this formula for the partition function, we can compute everything we care about: the expected action, free action and quantropy. Let us start with the expected action:

$$\langle A \rangle = -\frac{d}{d\lambda} \ln Z = -\frac{n}{2} \frac{d}{d\lambda} \ln \left( \frac{2\pi\, \Delta t}{\lambda m\, (\Delta x)^2} \right) = \frac{n}{2} \frac{d}{d\lambda} \ln \lambda = \frac{n}{2\lambda} = n\, \frac{i\hbar}{2}$$

This formula says that the expected action of our freely moving quantum particle is proportional to n, the number of time steps. Each time step contributes iħ/2 to the expected action. The mass of the particle, the time step Δt and the length scale Δx do not matter at all; they disappear when we take the derivative of the logarithm containing them. Indeed, our action could be any function of this sort:

$$A : \mathbb{R}^n \to \mathbb{R}, \qquad A(x) = \sum_{i=1}^{n} \frac{c_i x_i^2}{2}$$

where ci are positive numbers, and we would still get the same expected action:

$$\langle A \rangle = n\, \frac{i\hbar}{2}$$

Since we can diagonalize any positive definite quadratic form, we can state this fact more generally: whenever the action is a positive definite quadratic form on an n-dimensional vector space of histories, the expected action is n times iħ/2. For example, consider a free particle in three-dimensional Euclidean space, and discretize time into n steps as we have done here. Then the action is a positive definite quadratic form on a 3n-dimensional vector space, so the expected action is 3n times iħ/2.

We can try to interpret this as follows. In the path integral approach to quantum mechanics, a system can trace out any history it wants. If the space of histories is an n-dimensional vector space, it takes n real numbers to determine a specific history. Each number counts as one “decision”. In the situation we have described, where the action is a positive definite quadratic form, each decision contributes iħ/2 to the expected action.
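The claim that each “decision” contributes iħ/2, independently of the coefficient in the quadratic form, can be probed numerically. The sketch below is an illustration under stated assumptions rather than part of the derivation: it treats a single variable with action A(v) = c v²/2, damps the oscillations by replacing λ with λ + ε for a small ε > 0 (the damping regularization described for the Gaussian integral), and evaluates the two integrals with a hand-written trapezoid rule. The names, the grid spacing and the cutoff are illustrative choices.

```python
import numpy as np

def trapezoid(f, h):
    """Trapezoid rule for samples f on a uniform grid of spacing h."""
    return h * (f.sum() - 0.5 * (f[0] + f[-1]))

def expected_action_one_step(c, hbar, eps, h=0.002):
    """<A> for A(v) = c*v**2/2 with classicality lambda = 1/(i*hbar) + eps."""
    lam = 1.0 / (1j * hbar) + eps          # eps > 0 damps the oscillations
    L = np.sqrt(80.0 / (eps * c))          # damping factor is exp(-40) at |v| = L
    v = np.arange(-L, L + h, h)
    A = 0.5 * c * v**2
    w = np.exp(-lam * A)                   # unnormalized amplitude density
    return trapezoid(A * w, h) / trapezoid(w, h)

def damped_closed_form(hbar, eps):
    """Exact value 1/(2*(lambda + eps)) of the damped ratio; tends to i*hbar/2."""
    return 1.0 / (2.0 * (1.0 / (1j * hbar) + eps))

if __name__ == "__main__":
    for eps in (0.1, 0.03, 0.01):
        print(eps, expected_action_one_step(1.0, 1.0, eps),
              expected_action_one_step(3.0, 1.0, eps))
```

In this sketch the numerical ratio does not depend on c, mirroring the way m, Δt and Δx drop out of the expected action, and it approaches iħ/2 as the damping ε is reduced.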

There are some questions worth answering:

Why is the expected action imaginary? The action A is real. How can its expected value be imaginary? The reason is that we are not taking its expected value with respect to a probability measure, but instead, with respect to a complex-valued measure. Recall that:

$$\langle A \rangle = \frac{\int_X A(x)\, e^{-\lambda A(x)}\, dx}{\int_X e^{-\lambda A(x)}\, dx}$$

The action A is real, but λ = 1/iħ is imaginary; so, it is not surprising that this “expected value” is complex-valued.

Why does the expected action diverge as n → ∞? We have discretized time in our calculation. To take the continuum limit, we must let n → ∞, while simultaneously letting Δt → 0 in such a way that nΔt stays constant. Some quantities will converge when we take this limit, but the expected action will not: it will go to infinity. What does this mean?

This phenomenon is similar to how the expected length of the path of a particle undergoing Brownian motion is infinite. In fact, the free quantum particle is just a Wick-rotated version of Brownian motion, where we replace time by imaginary time; so, the analogy is fairly close. The action we are considering now is not exactly analogous to the arc length of a path:

$$\int_{t_0}^{t_1} \left| \frac{dq}{dt} \right| dt$$

Instead, it is proportional to this quadratic form:

$$\int_{t_0}^{t_1} \left| \frac{dq}{dt} \right|^2 dt$$

However, both of these quantities diverge when we discretize Brownian motion and then take the continuum limit. The reason is that for Brownian motion, with probability 1, the path of the particle is non-differentiable, with Hausdorff dimension > 1 [6]. We cannot apply probability theory to the quantum situation, but we are seeing that the “typical” path of a quantum free particle has infinite expected action in the continuum limit.

Why does the expected action of the free particle resemble the expected energy of an ideal gas? For a classical ideal gas with n particles in three-dimensional space, the expected energy is:

$$\langle E \rangle = \frac{3}{2}\, n T$$

in units where Boltzmann’s constant is one. For a free quantum particle in three-dimensional space, with time discretized into n steps, the expected action is:

$$\langle A \rangle = \frac{3}{2}\, n\, i\hbar$$

Why are the answers so similar?

The answers are similar because of the analogy we are discussing. Just as the action of the free particle is a positive definite quadratic form on ℝⁿ, so is the energy of the ideal gas. Thus, computing the expected action of the free particle is just like computing the expected energy of the ideal gas, after we make these replacements:

$$E \mapsto A, \qquad T \mapsto i\hbar$$

The last remark also means that the formulas for the free action and quantropy of a quantum free particle will be analogous to those for the free energy and entropy of a classical ideal gas, except missing the factor of 3 when we consider a particle on a line. For the free particle on a line, we have seen that:

$$Z = \left( \frac{2\pi\, \Delta t}{\lambda m\, (\Delta x)^2} \right)^{n/2}$$

Setting:

$$K = \frac{2\pi\, \Delta t}{m\, (\Delta x)^2}$$

we can write this more compactly as:

$$Z = \left( \frac{K}{\lambda} \right)^{n/2}$$

We thus obtain the following formula for the free action:

$$\Phi = -\frac{1}{\lambda} \ln Z = -\frac{n}{2\lambda} \ln \frac{K}{\lambda}$$

Note that the ln K term dropped out when we computed the expected action by differentiating ln Z with respect to λ, but it shows up in the free action.

The presence of this ln K term is surprising, since the constant K is not part of the usual theory of a free quantum particle. A completely analogous surprise occurs when computing the partition function of a classical ideal gas. The usual textbook answer involves a term of type ln K, where K is proportional to the volume of the box containing the gas divided by the cube of the thermal de Broglie wavelength of the gas molecules [8]. Curiously, the latter quantity involves Planck’s constant, despite the fact that we are considering a classical ideal gas! Indeed, we are forced to introduce a quantity with dimensions of action to make the partition function of the gas dimensionless, because the partition function is an integral of a dimensionless quantity over position-momentum pairs, and dp dq has units of action. Nothing within classical mechanics forces us to choose this quantity to be Planck’s constant; any choice will do. Changing our choice only changes the free energy by an additive constant. Nonetheless, introducing Planck’s constant has the advantage of removing this ambiguity in the free energy of the classical ideal gas, in a way that is retroactively justified by quantum mechanics.

Analogous remarks apply to the length scale Δx in our computation of the free action of a quantum particle. We introduced it only to make the partition function dimensionless. It is mysterious, much as Planck’s constant was mysterious when it first forced its way into thermodynamics. We do not have a theory or experiment that chooses a favored value for this constant. All we can say at present is that it appears naturally when we push the analogy between statistical mechanics and quantum mechanics to its logical conclusion, or, a skeptic might say, to its breaking point.

Finally, the quantropy of the free particle on a line is:

$$Q = \ln Z - \lambda \frac{d}{d\lambda} \ln Z = \frac{n}{2} \left( \ln \frac{K}{\lambda} + 1 \right)$$

Again, the answer depends on the constant K: if we do not choose a value for this constant, we only obtain the quantropy up to an additive constant. An analogous problem arises for the entropy of a classical ideal gas: without introducing Planck’s constant, we can only compute this entropy up to an additive constant.
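The dependence on K can be made concrete with a few lines of code. This is a minimal sketch, assuming the compact partition function Z = (K/λ)^(n/2) for the free particle on a line, the principal branch for ln(K/λ), and illustrative values of n, K and ħ; the function name is hypothetical. It evaluates the expected action, free action and quantropy and shows that changing K leaves 〈A〉 untouched while shifting Q by the additive constant (n/2) ln(K′/K).

```python
import numpy as np

def free_particle_quantities(n, K, hbar):
    """Expected action, free action and quantropy from Z = (K/lam)**(n/2)."""
    lam = 1.0 / (1j * hbar)                # classicality
    log_Z = 0.5 * n * np.log(K / lam)      # principal branch of ln(K/lam)
    mean_A = n / (2 * lam)                 # -d(ln Z)/d(lam) = n*i*hbar/2
    Phi = -log_Z / lam                     # free action
    Q = log_Z + lam * mean_A               # quantropy = (n/2)*(ln(K/lam) + 1)
    return mean_A, Phi, Q

if __name__ == "__main__":
    n, hbar = 4, 1.0                       # illustrative values (assumption)
    for K in (2.0, 5.0):
        print(K, free_particle_quantities(n, K, hbar))
```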

5. Conclusions

There are many questions left to tackle. The biggest is: what is the meaning of quantropy? Unfortunately it seems hard to attack this directly. It may be easier to work out more examples and develop more of an intuition for this concept. There are, however, some related puzzles worth keeping in mind.

As emphasized by Lisi [5], it is rather peculiar that in the path-integral approach to quantum mechanics, we normalize the complex numbers a(x) associated with paths, so that they integrate to 1:

$$\int_X a(x)\, dx = 1$$

It clearly makes sense to normalize probabilities so that they sum to 1. However, starting from the wavefunction of a quantum system, we obtain probabilities only after taking the absolute value of the wavefunction and squaring it. Thus, for wavefunctions, we impose:

$$\int_X |\psi(x)|^2\, dx = 1$$

rather than:

$$\int_X \psi(x)\, dx = 1$$

For this reason, Lisi calls the numbers a(x) “complex probabilities” rather than amplitudes. However, the meaning of complex probabilities remains mysterious, and this is tied to the mysterious nature of quantropy. Feynman’s essay on the interpretation of negative probabilities could provide some useful clues [1].

It is also worth keeping in mind another analogy: “coolness as imaginary time”. Here, we treat β as analogous to it/ħ, rather than 1/iħ. This is widely used to convert quantum mechanics problems into statistical mechanics problems by means of Wick rotation, which essentially means studying the unitary group exp(−itH/ħ) by studying the semigroup exp(−βH) and then analytically continuing β to imaginary values. Wick rotation plays an important role in Hawking’s computation of the entropy of a black hole, nicely summarized in his book with Penrose [3]. The precise relation of this other analogy to the one explored here remains unclear and is worth exploring. Note that the quantum Hamiltonian H shows up on both sides of this other analogy.