An Entropy-Based Adaptive Hybrid Particle Swarm Optimization for Disassembly Line Balancing Problems

Abstract

1. Introduction

- (1)

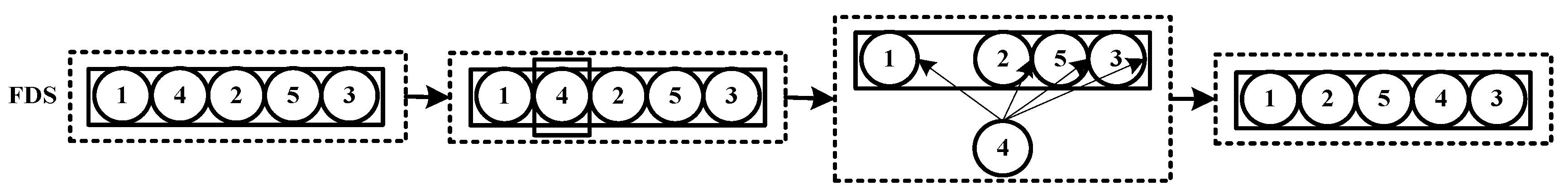

- The disassembly hierarchy information graph (DHIG) is introduced into the solution representation to make the real values apply on the permutation of tasks, which makes the DLBP transform into a problem of searching optimum path in the directed and weighted graph.

- (2) In the proposed algorithm, the selection of the particle with good diversity, the crossover rate, and the mutation rate all depend on the change in entropy. This can increase the number of optimal solutions found and improve the speed of convergence.

- (3)

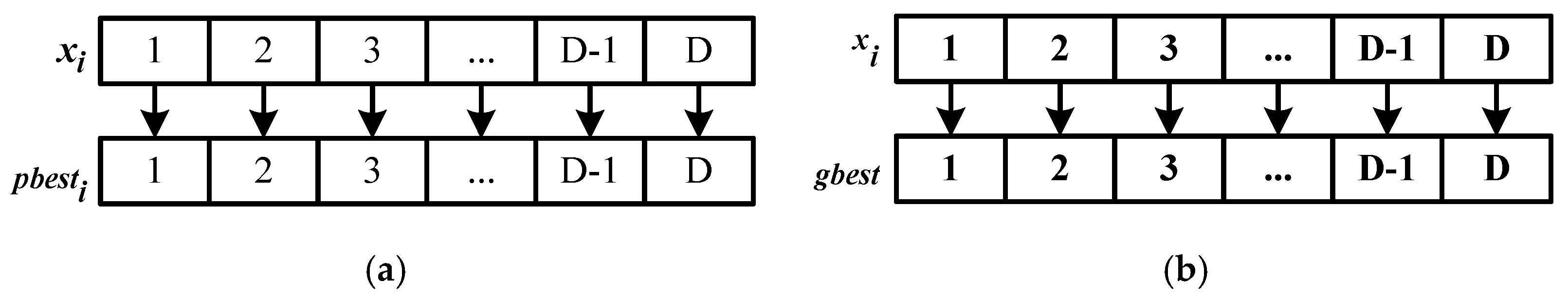

- The particle with good diversity is added to standard particle swarm velocity update formula, and we propose an improved comprehensive learning strategy, called dimension learning. The particle learns from the gbest of the swarm, its own pbest and the particle with good diversity. In this version, some dimensions are randomly chosen to learn from the gbest. Some of the remaining dimensions are randomly chosen to learn from the particle with good diversity and the remaining dimensions learn from its own pbest, thus making the particles diverse enough for getting the optimal solutions.
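The dimension-wise assignment described in contribution (3) can be illustrated with a short Python sketch. It is not the paper's velocity update (Equation (10) is not reproduced in this text): it folds the three guides into a single exemplar vector, and the names `build_exemplar`, `move`, `share_gbest`, `share_diverse`, `inertia`, and `learning`, as well as their default values and the clamping of positions to [0, 1], are assumptions made only for illustration.

```python
import random


def build_exemplar(pbest, gbest, diverse,
                   share_gbest=0.3, share_diverse=0.3, rng=random):
    """Choose, dimension by dimension, which guide the particle learns from.

    A random subset of dimensions copies gbest, a random subset of the
    remaining dimensions copies the diverse particle, and the rest keep
    the particle's own pbest. The two share parameters are hypothetical.
    """
    n = len(pbest)
    dims = list(range(n))
    rng.shuffle(dims)  # random order decides which dimensions go where
    n_gbest = round(share_gbest * n)
    n_diverse = round(share_diverse * (n - n_gbest))
    from_gbest = set(dims[:n_gbest])
    from_diverse = set(dims[n_gbest:n_gbest + n_diverse])

    exemplar = []
    for d in range(n):
        if d in from_gbest:
            exemplar.append(gbest[d])
        elif d in from_diverse:
            exemplar.append(diverse[d])
        else:
            exemplar.append(pbest[d])
    return exemplar


def move(position, velocity, exemplar, inertia=0.7, learning=1.5, rng=random):
    """Simplified one-term velocity update toward the exemplar (assumed form)."""
    new_velocity = [inertia * v + learning * rng.random() * (e - x)
                    for x, v, e in zip(position, velocity, exemplar)]
    # Positions are kept in [0, 1] because they act as task priorities here.
    new_position = [min(1.0, max(0.0, x + v))
                    for x, v in zip(position, new_velocity)]
    return new_position, new_velocity
```

With ten dimensions and the default shares, three dimensions follow gbest, two follow the diverse particle, and five follow pbest. How many dimensions each guide receives in the actual algorithm is not stated in this text.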

2. Notation

| Symbol | Description |
|---|---|
| CT | Working cycle of the workstation. |
| ti | Removal time of the ith part. |
| | Total part removal time requirement in the ith workstation. |
| n | Number of parts for removal. |
| m | Number of workstations. |
| m* | Minimum value of m. |
| i, j | Part identification, part count (1, …, n). |
| k | Workstation count (1, …, m). |
| N | The set of natural numbers. |
| PSi | ith part in a solution sequence. |
| di | Demand; quantity of part i requested. |
| hi | Binary value; 1 if the part is hazardous, 0 otherwise. |
| IP | Set (i, j) of parts such that part i must precede part j. |
| | Immediately preceding matrix. |
| | Distribution matrix. |

3. DLBP Definition and Formulation

4. Proposed Algorithm for Solving DLBP

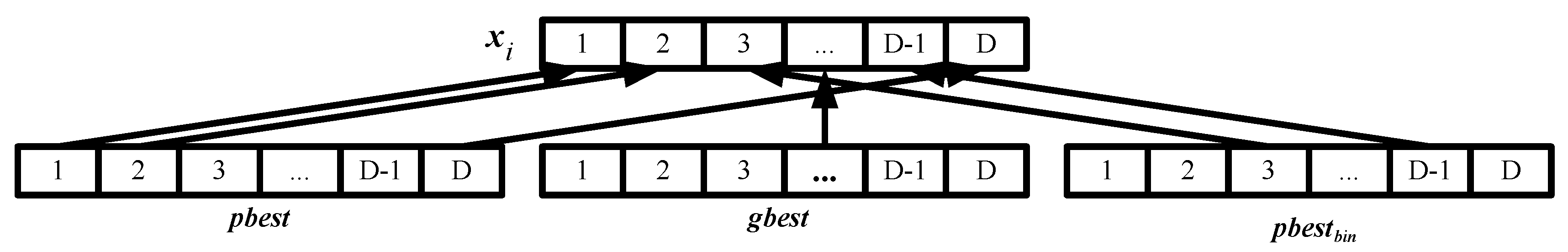

4.1. Solution Representation

- Step 1: Generate one random number in (0, 1) for each task (five in this example).
- Step 2: Based on the precedence graph, select the tasks with zero in-degree (as in topological sorting) as the candidate set.
- Step 3: If the candidate task set is empty, go to step 5.
- Step 4: Select the task with the highest disassembly priority from the candidate task set for disassembly and remove it from the precedence graph; go back to step 2.
- Step 5: Output the FDS.
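The five steps above amount to a priority-guided topological sort. The following Python sketch shows one way to write it, assuming that the random numbers from step 1 serve as the task priorities. The function name `decode`, the dictionary-based inputs, and the five-task precedence graph in the usage example are hypothetical and are not taken from the paper.

```python
def decode(priorities, predecessors):
    """Turn real-valued task priorities into a precedence-feasible sequence.

    priorities:   dict mapping task -> number in (0, 1)
    predecessors: dict mapping task -> set of tasks that must be removed first
    """
    # Work on a copy so the caller's precedence data is left untouched.
    remaining = {task: set(preds) for task, preds in predecessors.items()}
    sequence = []
    while remaining:
        # Step 2: candidates are the tasks with no unremoved predecessor.
        candidates = [task for task, preds in remaining.items() if not preds]
        if not candidates:
            raise ValueError("precedence graph contains a cycle")
        # Step 4: take the candidate with the highest priority ...
        chosen = max(candidates, key=lambda task: priorities[task])
        sequence.append(chosen)
        # ... and remove it from the precedence graph.
        del remaining[chosen]
        for preds in remaining.values():
            preds.discard(chosen)
    # Steps 3 and 5: no tasks remain, so output the sequence.
    return sequence


# Hypothetical example: tasks 1 and 2 precede 3; task 3 precedes 4 and 5.
example_predecessors = {1: set(), 2: set(), 3: {1, 2}, 4: {3}, 5: {3}}
example_priorities = {1: 0.2, 2: 0.8, 3: 0.5, 4: 0.1, 5: 0.9}
print(decode(example_priorities, example_predecessors))  # [2, 1, 3, 5, 4]
```

Because every selection is restricted to tasks whose predecessors have already been removed, any real-valued priority vector decodes to a feasible sequence, which is what lets a continuous optimizer search over permutations.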

4.2. Introduction of Entropy

4.3. Dimension Learning

4.4. Self-Adaptive Crossover and Mutation Operator

4.5. The Regeneration of the Particles

4.6. Algorithm Procedure for DLBP

- Step 1: Set the algorithm parameters.
- Step 2: Build the DHIG in order to calculate the individual-dimension-entropy and the population-distribution-entropy by Equations (8) and (9), respectively. Then select the particle with good diversity and calculate the crossover rate and mutation rate.
- Step 3: Update the position of the particles by Equation (10).
- Step 4: Evaluate the objective functions.
- Step 5: Apply the self-adaptive crossover and mutation operator to create better solutions.
- Step 6: Update the external archive.
- Step 7: Convert the non-dominated solutions to the continuous representation and use them to replace pbest for the next generation.
- Step 8: Determine the position of gbest according to the values of CD and GD.
- Step 9: If T > Tmax, go to step 10; else, go back to step 2.
- Step 10: Output the optimal disassembly solutions.
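Step 6 relies on keeping only non-dominated solutions in the external archive. A minimal Python sketch of such an update follows, assuming all objectives are minimized. The function names `dominates` and `update_archive` are hypothetical, and the truncation to the archive size limit (100 in the parameter table) is deliberately left out because the rule used for it is not given in this text.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def update_archive(archive, candidate):
    """Return a new archive after offering one candidate objective vector.

    The candidate is rejected if an archived vector dominates or equals it;
    otherwise it is added and every vector it dominates is dropped.
    """
    if any(dominates(kept, candidate) or kept == candidate for kept in archive):
        return list(archive)
    survivors = [kept for kept in archive if not dominates(candidate, kept)]
    return survivors + [candidate]


# Usage with (f1, f2, f3, f4) tuples such as those in the comparison table.
archive = []
for objectives in [(9, 9, 82, 868), (9, 9, 80, 857), (9, 9, 81, 853)]:
    archive = update_archive(archive, objectives)
print(archive)  # [(9, 9, 80, 857), (9, 9, 81, 853)]
```

In the usage example the first vector is removed because the second is no worse in every objective and better in two, while the second and third remain because each is better than the other in one objective.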

5. Numerical Results

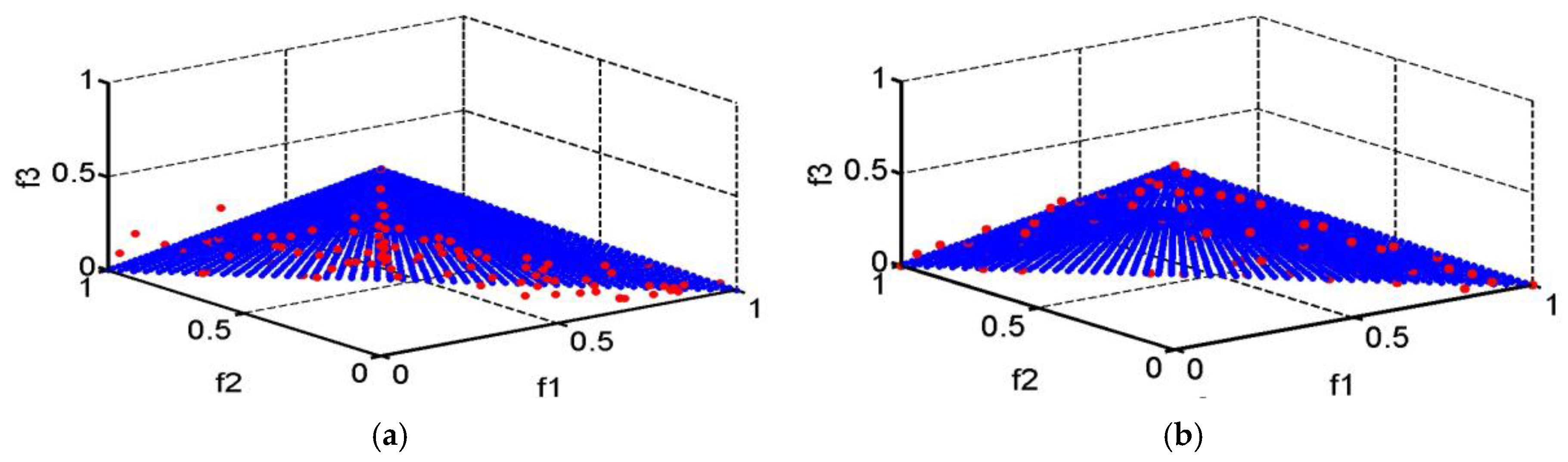

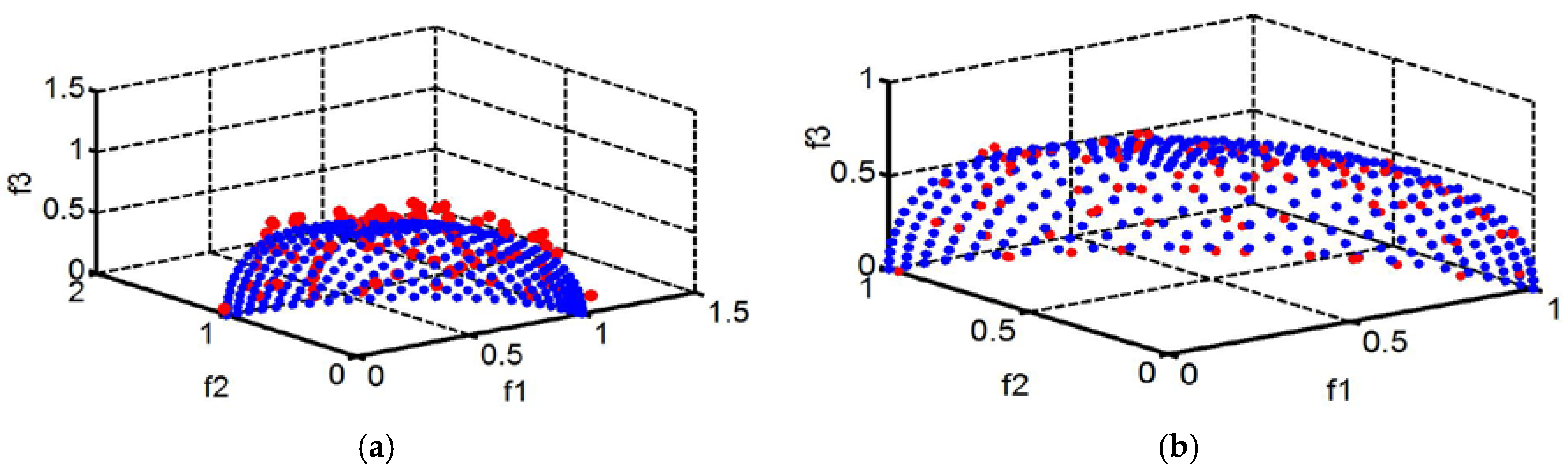

5.1. Test for Benchmark Functions

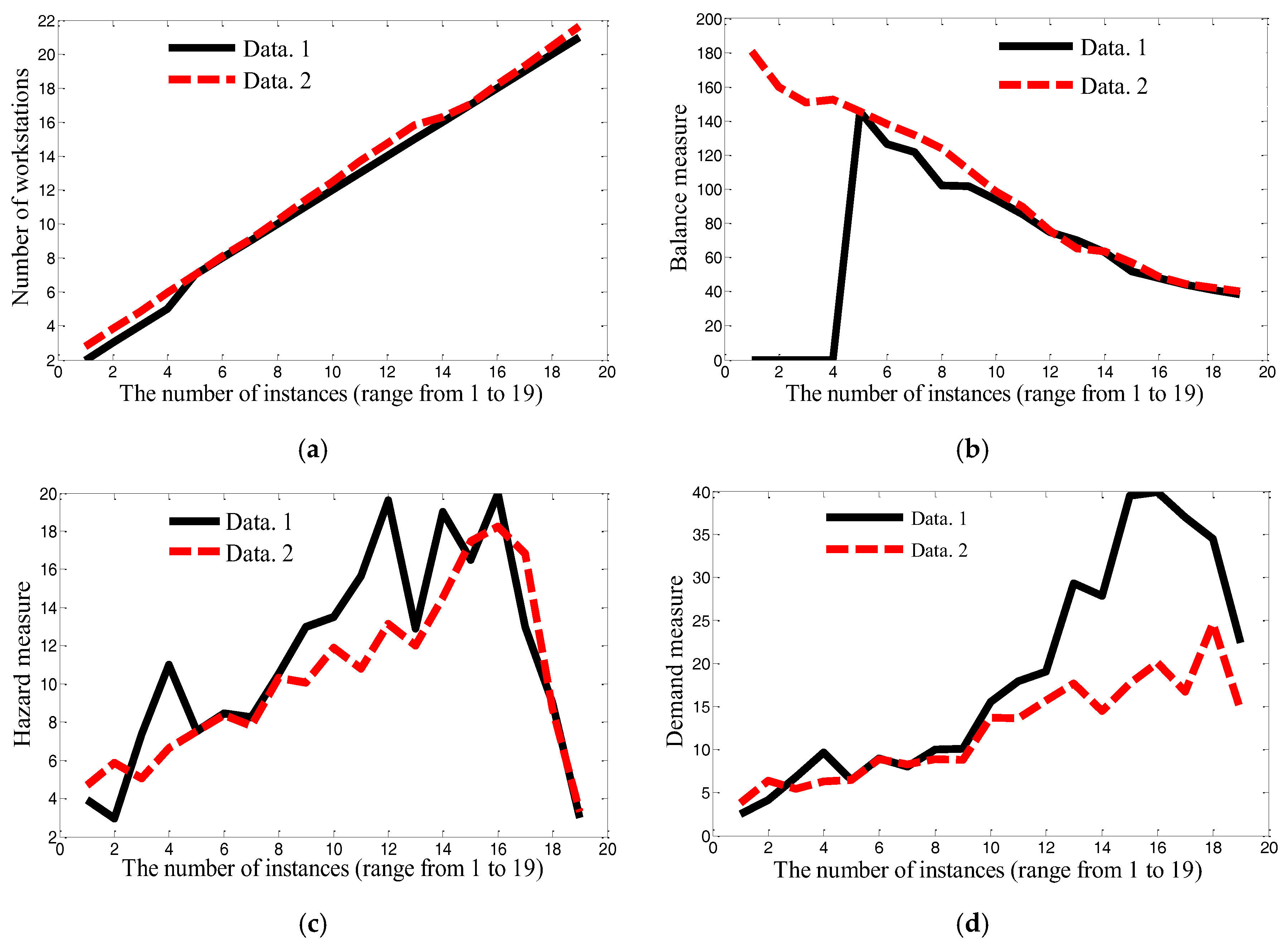

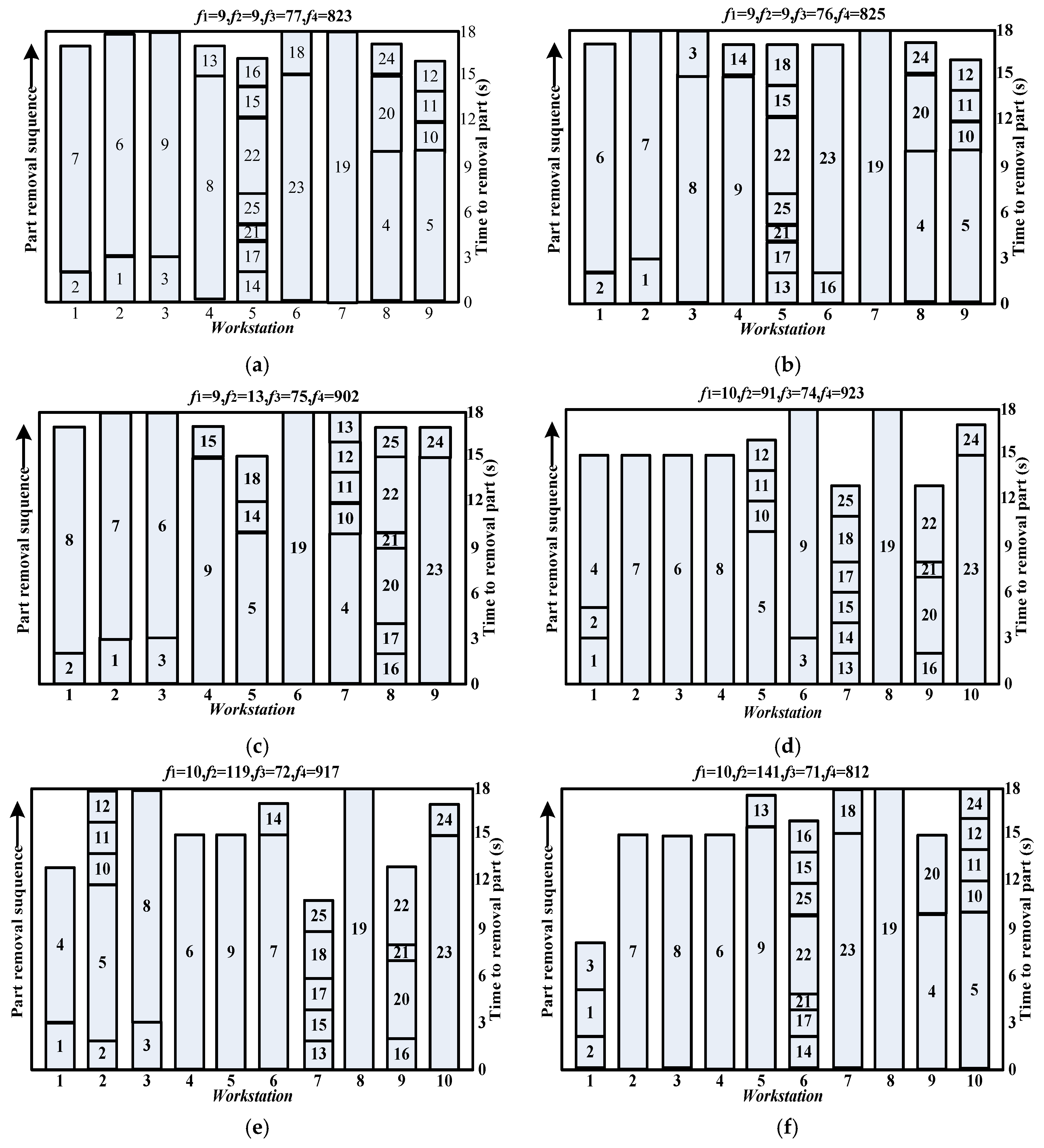

5.2. Applied Examples

- (1) Prioritize the multiple objectives.
- (2) Optimize the objectives simultaneously.

- The removal times are set to

- The hazard values are quantified to

- Demands are set to

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Güngör, A.; Gupta, S.M. Disassembly line in product recovery. Int. J. Prod. Res. 2002, 40, 2569–2589.
2. McGovern, S.M.; Gupta, S.M. A balancing method and genetic algorithm for disassembly line balancing. Eur. J. Oper. Res. 2007, 179, 692–708.
3. Özceylan, E.; Paksoy, T. Fuzzy mathematical programming approaches for reverse supply chain optimization with disassembly line balancing problem. J. Intell. Fuzzy Syst. 2014, 26, 1969–1985.
4. Mete, S.; Çil, Z.A.; Özceylan, E.; Ağpak, K. Resource constrained disassembly line balancing problem. IFAC-PapersOnLine 2016, 49, 921–925.
5. Altekin, F.T.; Kandiller, L.; Ozdemirel, N.E. Profit-oriented disassembly-line balancing. Int. J. Prod. Res. 2008, 46, 2675–2693.
6. McGovern, S.M.; Gupta, S.M. Ant colony optimization for disassembly sequencing with multiple objectives. Int. J. Adv. Manuf. Technol. 2006, 30, 481–496.
7. Ding, L.P.; Feng, Y.X.; Tan, J.R.; Gao, Y.C. A new multi-objective ant colony algorithm for solving the disassembly line balancing problem. Int. J. Adv. Manuf. Technol. 2010, 48, 761–771.
8. Kalayci, C.B.; Gupta, S.M.; Nakashima, K. A Simulated Annealing Algorithm for Balancing a Disassembly Line. In Design for Innovative Value towards a Sustainable Society; Springer: Berlin, Germany, 2012.
9. Kalayci, C.B.; Gupta, S.M. A particle swarm optimization algorithm for solving disassembly line balancing problem. In Proceedings for the Northeast Region Decision Sciences Institute; Springer: Berlin, Germany, 2012.
10. Kalayci, C.B.; Polat, O.; Gupta, S.M. A variable neighborhood search algorithm for disassembly lines. J. Manuf. Technol. Manag. 2014, 26, 182–194.
11. Kalayci, C.B.; Gupta, S.M.; Nakashima, K. Bees colony intelligence in solving disassembly line balancing problem. In Proceedings of the 2011 Asian Conference of Management Science and Applications, Sanya, China, 21–23 December 2011; pp. 21–22.
12. Aydemir-Karadag, A.; Turkbey, O. Multi-objective optimization of stochastic disassembly line balancing with station paralleling. Comput. Ind. Eng. 2013, 65, 413–425.
13. Paksoy, T.; Güngör, A.; Özceylan, E.; Hancilar, A. Mixed model disassembly line balancing problem with fuzzy goals. Int. J. Prod. Res. 2013, 51, 6082–6096.
14. Kalayci, C.B.; Gupta, S.M. Ant colony optimization for sequence-dependent disassembly line balancing problem. J. Manuf. Technol. Manag. 2013, 24, 413–427.
15. Kalayci, C.B.; Gupta, S.M. A particle swarm optimization algorithm with neighborhood-based mutation for sequence-dependent disassembly line balancing problem. Int. J. Adv. Manuf. Technol. 2013, 69, 197–209.
16. Kalayci, C.B.; Gupta, S.M. Artificial bee colony algorithm for solving sequence-dependent disassembly line balancing problem. Expert Syst. Appl. 2013, 40, 7231–7241.
17. Kalayci, C.B.; Polat, O.; Gupta, S.M. A hybrid genetic algorithm for sequence-dependent disassembly line balancing problem. Ann. Oper. Res. 2016, 242, 321–354.
18. Turky, A.M.; Abdullah, S. A multi-population harmony search algorithm with external archive for dynamic optimization problems. Inf. Sci. 2014, 272, 84–95.
19. Hu, W.; Liang, H.; Peng, C.; Du, B.; Hu, Q. A hybrid chaos-particle swarm optimization algorithm for the vehicle routing problem with time window. Entropy 2013, 15, 1247–1270.
20. Nasir, M.; Das, S.; Maity, D.; Sengupta, S.; Halder, U.; Suganthan, P.N. A dynamic neighborhood learning based particle swarm optimizer for global numerical optimization. Inf. Sci. 2012, 209, 16–36.
21. Dou, J.; Su, C.; Li, J. A discrete particle swarm optimization algorithm for assembly line balancing problem of type 1. IEEE Comput. Soc. 2011, 1, 44–47.
22. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the 1995 IEEE International Conference on Neural Networks, Perth, Western Australia, 27 November–1 December 1995; pp. 1942–1948.
23. Van den Bergh, F.; Engelbrecht, A.P. A cooperative approach to particle swarm optimization. IEEE Trans. Evol. Comput. 2004, 8, 225–238.
24. De Paula, L.; Soares, A.S.; de Lima, T.W.; Coelho, C.J. Feature Selection using Genetic Algorithm: An analysis of the bias-property for one-point crossover. In Proceedings of the 2016 on Genetic and Evolutionary Computation Conference Companion, New York, NY, USA, 20–24 July 2016; pp. 1461–1462.
25. Samuel, R.K.; Venkumar, P. Some novel methods for flow shop scheduling. Int. J. Eng. Sci. Technol. 2011, 3, 8395–8403.
26. Cicirello, V.A. Non-wrapping order crossover: An order preserving crossover operator that respects absolute position. In Proceedings of the Genetic and Evolutionary Computation Conference, GECCO 2006, Seattle, WA, USA, 8–12 July 2006; pp. 1125–1132.
27. Raquel, C.R.; Naval, P.C. An effective use of crowding distance in multi-objective particle swarm optimization. In Proceedings of the 7th Annual Conference on Genetic and Evolutionary Computation, Washington, DC, USA, 25–29 July 2005; pp. 257–264.
28. Deb, K.; Pratap, A. A fast and elitist multi-objective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2004, 8, 256–279.
29. McGovern, S.M.; Gupta, S.M. Uninformed and probabilistic distributed agent combinatorial searches for the unary NP-complete disassembly line balancing problem. In Proceedings of the SPIE-The International Society for Optical Engineering, Bellingham, WA, USA, 4 November 2005; Volume 5997.

| Type | AHPSO without Entropy | AHPSO with Entropy |
|---|---|---|
| The particle with good diversity | Randomly selected one from the other particles’ | |
| Crossover rate and mutation rate | According to Equations (11) and (12) | Empirical value: , |
| Inertia weight | | |
| Learning factor | | |
| The size of external archive | 100 | 100 |
| | 100 | 100 |
| / | , | , |
| | 30 | 30 |
| | 300 | 300 |

| Test Function | Performance Metric | AHPSO without Entropy | AHPSO with Entropy |
|---|---|---|---|
| DTLZ1 | GD | 5.1445 × 10⁻⁴ | 1.3342 × 10⁻⁴ |
| DTLZ1 | SP | 0.0055 | 0.0042 |
| DTLZ1 | MS | 0.9878 | 1 |
| DTLZ2 | GD | 3.701 × 10⁻⁴ | 2.403 × 10⁻⁴ |
| DTLZ2 | SP | 0.0063 | 0.0045 |
| DTLZ2 | MS | 0.9842 | 0.9967 |

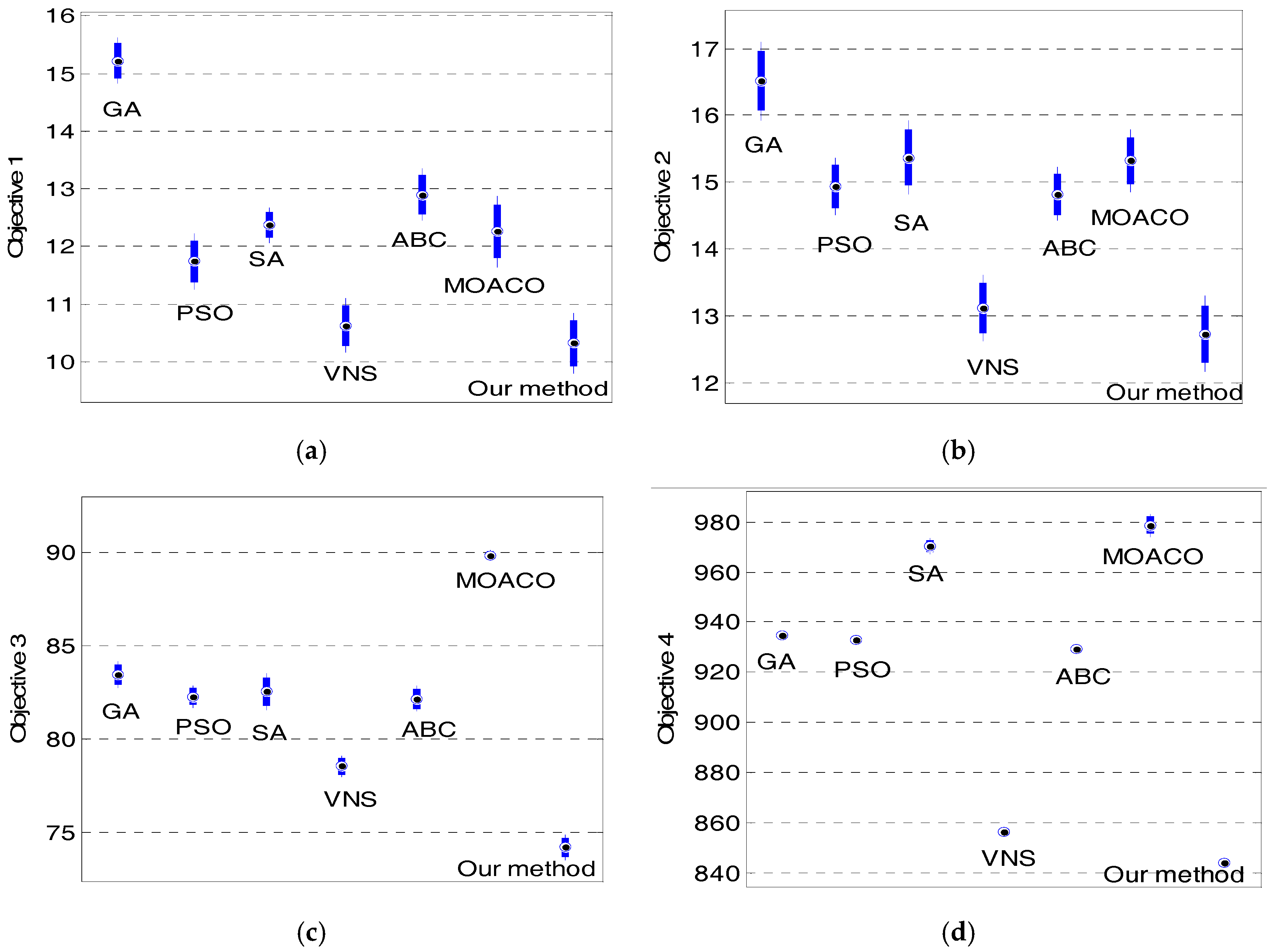

| Publication | Method | f1 | f2 | f3 | f4 |
|---|---|---|---|---|---|
| McGovern and Gupta, 2007 [2] | GA | 9 | 9 | 82 | 868 |
| Kalayci and Gupta, 2012 [9] | PSO | 9 | 9 | 80 | 857 |
| Kalayci and Gupta, 2012 [8] | SA | 9 | 9 | 81 | 853 |
| Kalayci et al., 2014 [10] | VNS | 9 | 9 | 76 | 825 |
| Kalayci et al., 2011 [11] | ABC | 9 | 9 | 81 | 853 |
| Ding et al., 2010 [7] | MOACO (No. 1) | 9 | 9 | 87 | 927 |
| Ding et al., 2010 [7] | MOACO (No. 2) | 9 | 11 | 85 | 898 |

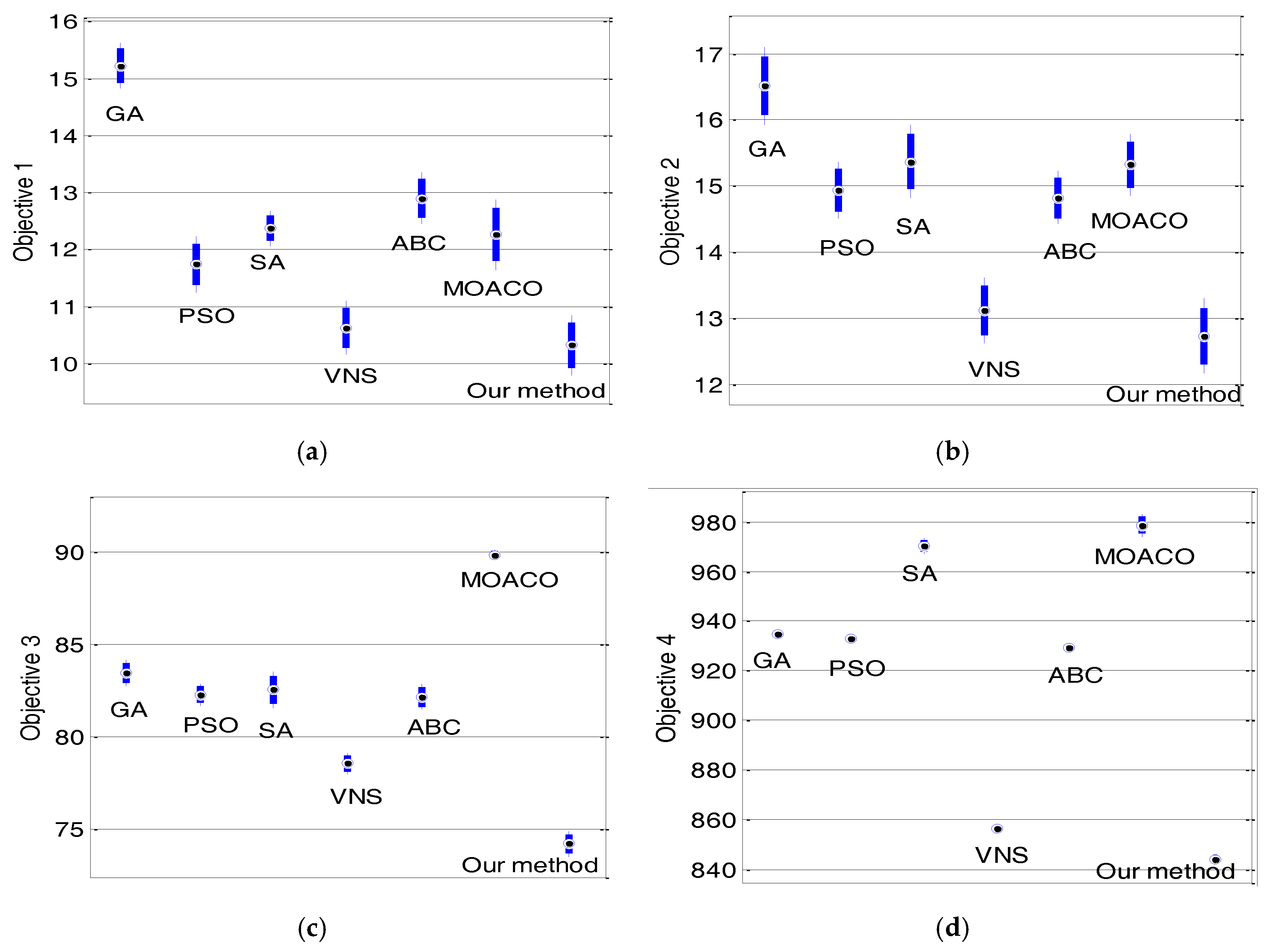

| Objective | Method | Average | Standard Deviation | Confidence Interval (Lower) | Confidence Interval (Upper) |
|---|---|---|---|---|---|
| f1 | GA | 15.22 | 1.34 | 14.82 | 15.62 |
| f1 | PSO | 11.74 | 1.64 | 11.25 | 12.23 |
| f1 | SA | 12.37 | 1.01 | 12.07 | 12.67 |
| f1 | VNS | 10.63 | 1.56 | 10.16 | 11.10 |
| f1 | ABC | 12.9 | 1.52 | 12.44 | 13.35 |
| f1 | MOACO | 12.26 | 2.07 | 11.64 | 12.88 |
| f1 | entropy-based AHPSO | 10.32 | 1.72 | 9.80 | 10.84 |
| f2 | GA | 16.51 | 1.98 | 15.92 | 17.10 |
| f2 | PSO | 14.94 | 1.43 | 14.51 | 15.37 |
| f2 | SA | 15.37 | 1.87 | 14.81 | 15.93 |
| f2 | VNS | 13.12 | 1.64 | 12.63 | 13.61 |
| f2 | ABC | 14.82 | 1.34 | 14.42 | 15.22 |
| f2 | MOACO | 15.32 | 1.55 | 14.85 | 15.79 |
| f2 | entropy-based AHPSO | 12.74 | 1.89 | 12.17 | 13.30 |
| f3 | GA | 83.46 | 2.37 | 82.75 | 84.17 |
| f3 | PSO | 82.27 | 1.98 | 81.68 | 82.86 |
| f3 | SA | 82.53 | 3.35 | 81.52 | 83.54 |
| f3 | VNS | 78.55 | 1.93 | 77.97 | 79.13 |
| f3 | ABC | 82.15 | 2.30 | 81.46 | 82.84 |
| f3 | MOACO | 89.83 | 0.93 | 89.55 | 90.11 |
| f3 | entropy-based AHPSO | 74.2 | 2.31 | 73.51 | 74.89 |
| f4 | GA | 934.64 | 6.72 | 932.62 | 936.66 |
| f4 | PSO | 932.81 | 5.38 | 931.19 | 934.43 |
| f4 | SA | 970.35 | 10.54 | 967.18 | 973.52 |
| f4 | VNS | 856.29 | 4.87 | 854.83 | 857.75 |
| f4 | ABC | 929.43 | 5.09 | 927.90 | 930.96 |
| f4 | MOACO | 978.67 | 15.02 | 974.16 | 983.18 |
| f4 | entropy-based AHPSO | 843.82 | 5.96 | 842.03 | 845.61 |
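The text does not state how the confidence intervals in the table above were computed. As a hedged reading aid, the Python sketch below shows the standard normal-approximation interval, mean ± z·s/√n. The sample size of 30 runs and the 90% level are assumptions chosen because they reproduce the tabulated bounds to within about 0.01; neither value is confirmed by the source, and the function name `normal_interval` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def normal_interval(mean, std, runs=30, level=0.90):
    """Two-sided normal-approximation confidence interval for a mean.

    runs and level are assumed values, not taken from the paper.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.645 for 90%
    half_width = z * std / sqrt(runs)
    return mean - half_width, mean + half_width


# Entropy-based AHPSO on f4: average 843.82, standard deviation 5.96.
low, high = normal_interval(843.82, 5.96)
print(round(low, 2), round(high, 2))  # 842.03 845.61
```

Other combinations of sample size and confidence level give nearly the same half-widths, so the match supports the form of the calculation rather than these particular settings.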

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xiao, S.; Wang, Y.; Yu, H.; Nie, S. An Entropy-Based Adaptive Hybrid Particle Swarm Optimization for Disassembly Line Balancing Problems. Entropy 2017, 19, 596. https://doi.org/10.3390/e19110596