Strategic Information Processing from Behavioural Data in Iterated Games

Abstract

1. Introduction

2. Materials and Methods

2.1. Game Theory: Matching Pennies

| | | agent | |
|---|---|---|---|
| | | Left | Right |
| agent i | Left | | |
| | Right | | |
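The payoff cells of the Matching Pennies table can be illustrated with a minimal sketch. It assumes the conventional zero-sum payoffs, with agent i receiving +1 when both agents choose the same side and -1 otherwise. This sign convention and the names `PAYOFF_I`, `expected_payoffs` and `indifference_probability` are illustrative assumptions. The sketch checks that when the other agent plays Left with probability 1/2, agent i is indifferent between its two actions. This indifference is what makes 50/50 mixing the equilibrium of the game.

```python
from fractions import Fraction

# Assumed payoff matrix for agent i: rows = agent i plays Left/Right,
# columns = the other agent plays Left/Right. Agent i wins (+1) on a match.
PAYOFF_I = [[1, -1],
            [-1, 1]]

def expected_payoffs(q_left):
    """Expected payoff of each pure action of agent i when the other agent
    plays Left with probability q_left."""
    q = [q_left, 1 - q_left]
    return [sum(p * w for p, w in zip(row, q)) for row in PAYOFF_I]

def indifference_probability(payoff):
    """Probability on the first column that makes the row player indifferent
    between its two rows (2x2 game with an interior solution)."""
    (a, b), (c, d) = payoff
    # Solve q*a + (1-q)*b == q*c + (1-q)*d for q.
    return Fraction(d - b, a - b - c + d)
```

With exact `Fraction` arithmetic, `expected_payoffs(Fraction(1, 2))` gives zero for both of agent i's actions. Any bias of the other agent away from 1/2, such as `Fraction(7, 10)`, makes one of agent i's actions strictly better and so exploitable.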

2.2. Probabilities and Information Theory
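The following is a minimal sketch of the basic quantities, assuming discrete behavioural data, for example each trial's choice coded as Left or Right. It uses simple plug-in (frequency-count) estimates of Shannon entropy and mutual information, in bits. This is an assumption; the study may have used a different or bias-corrected estimator. The function names are illustrative.

```python
from collections import Counter
from math import log2

def entropy(samples):
    """Plug-in (maximum-likelihood) Shannon entropy, in bits, of a sequence
    of hashable outcomes."""
    counts = Counter(samples)
    n = len(samples)
    return -sum((c / n) * log2(c / n) for c in counts.values())

def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) = H(X) + H(Y) - H(X,Y), in bits."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))
```

Plug-in mutual information is biased upward on short sequences. In practice it is therefore usually compared against a baseline computed from shuffled data.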

2.3. The Experimental Set-up

3. Results

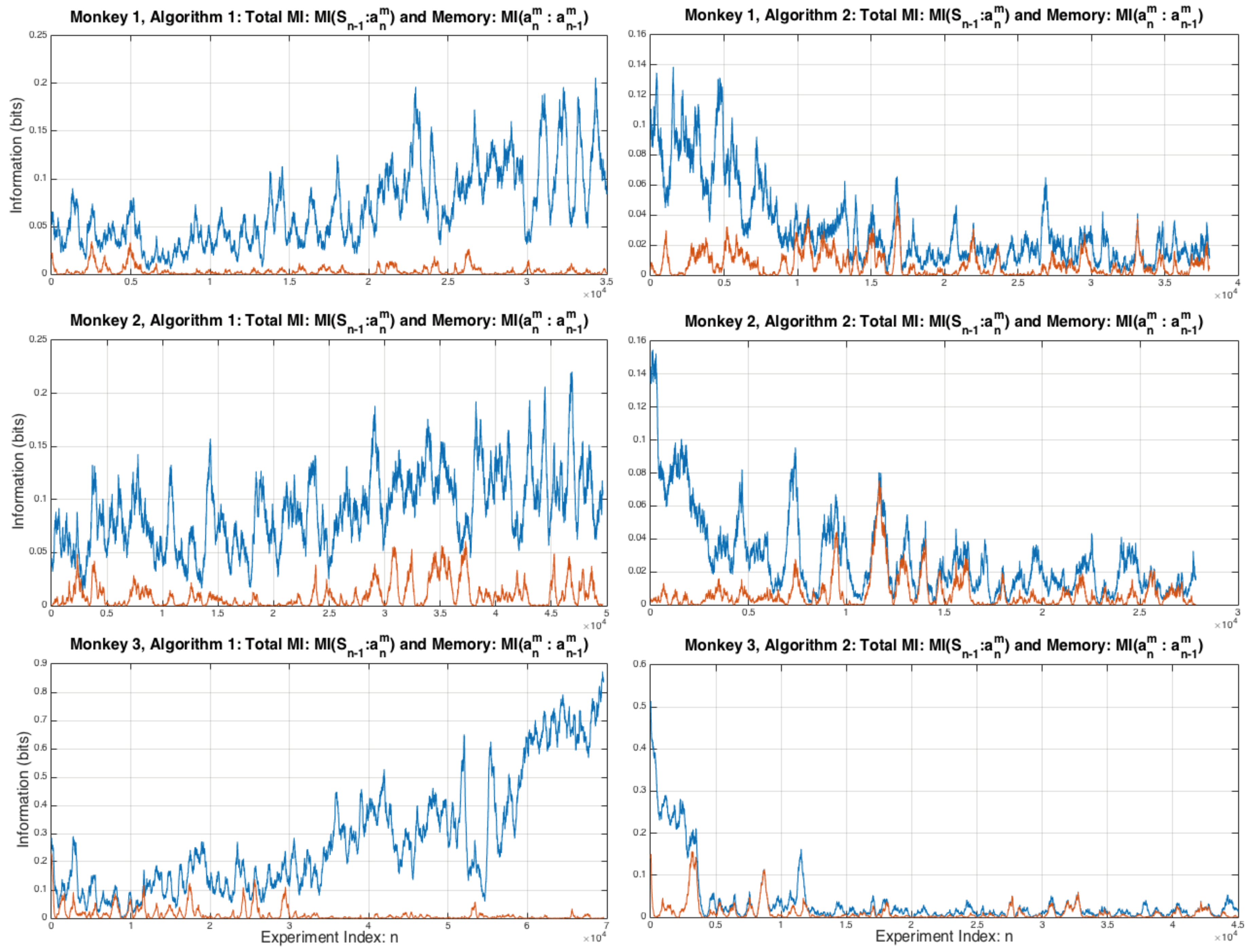

3.1. 1-Step Monkey Memory and Total Information

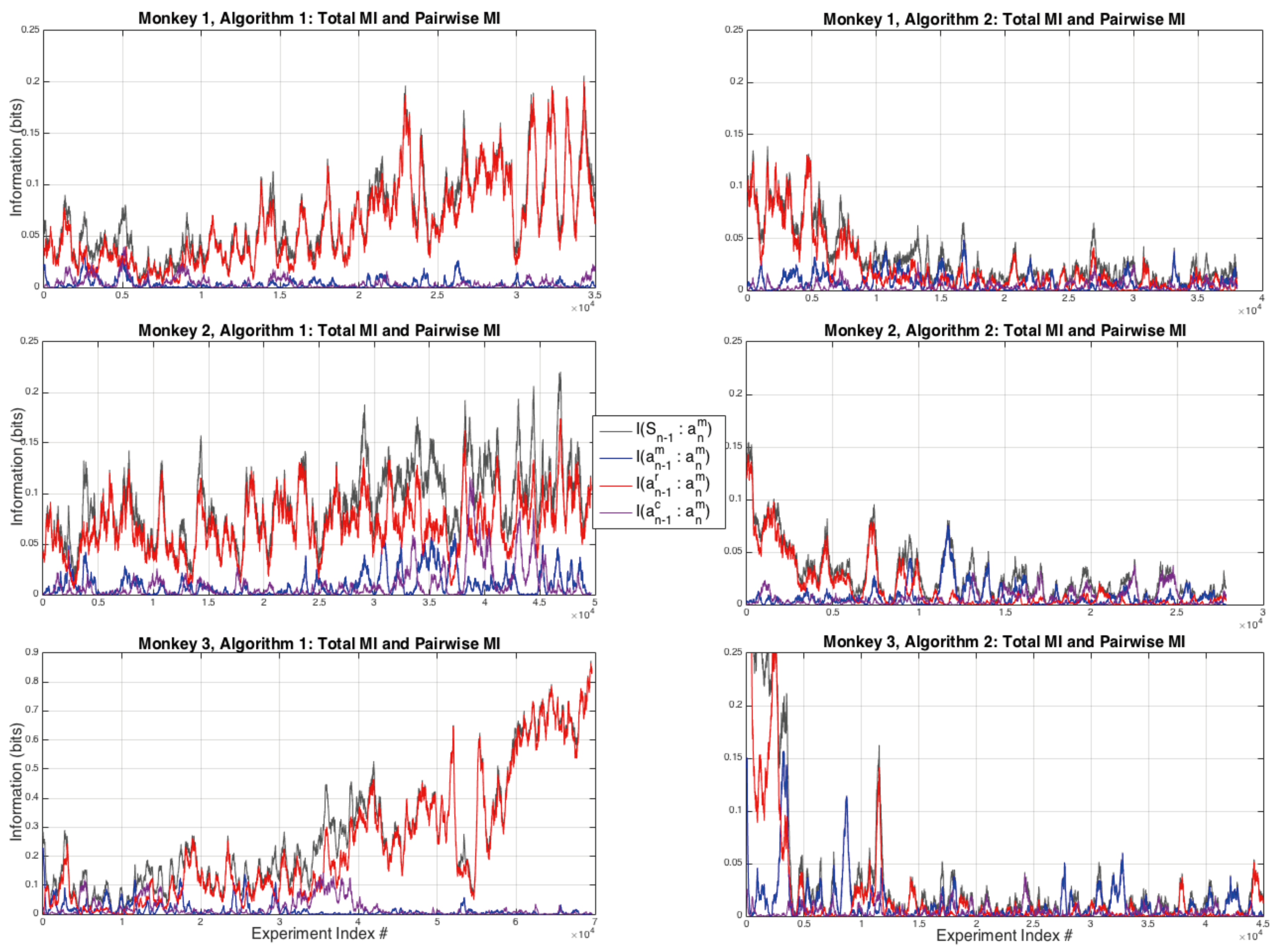

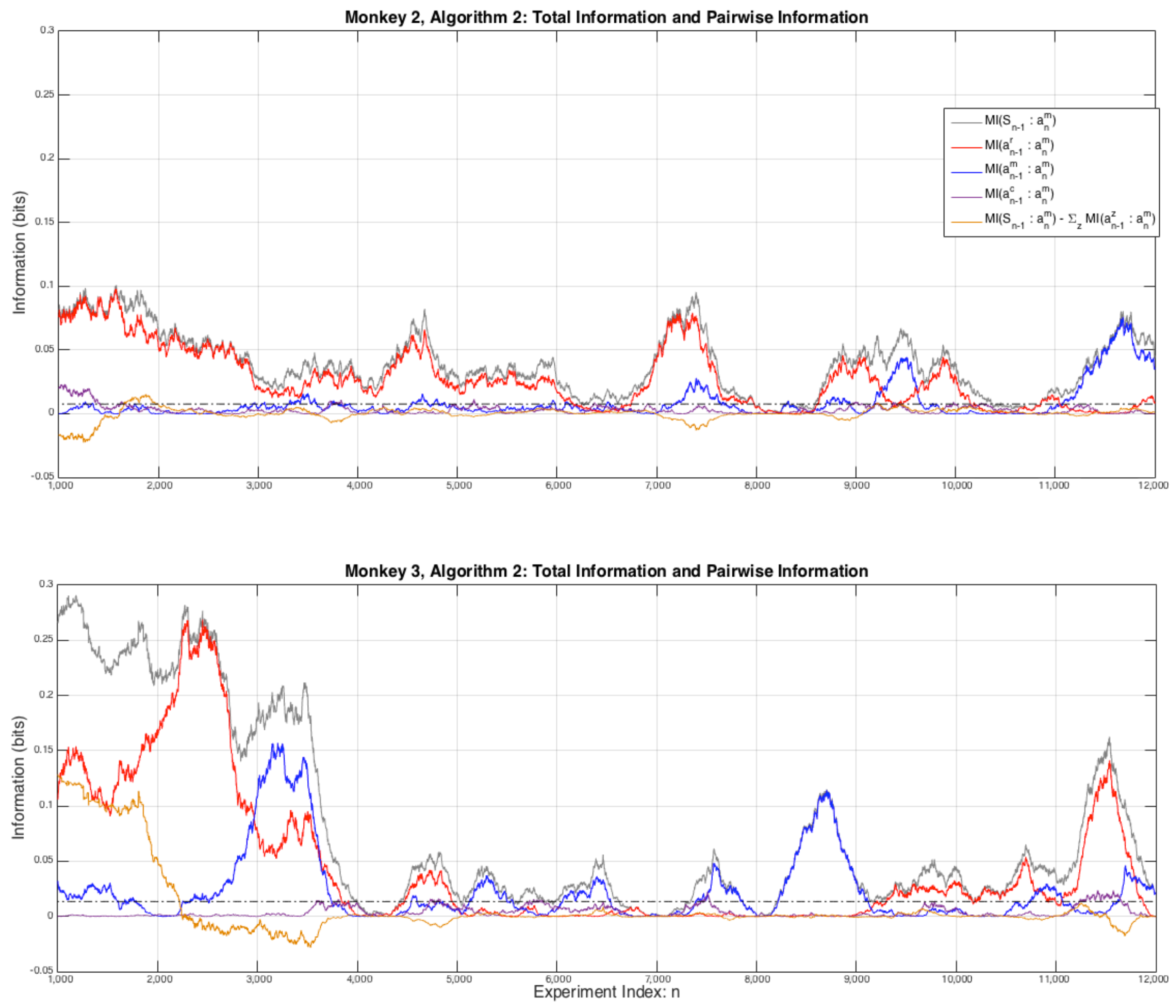

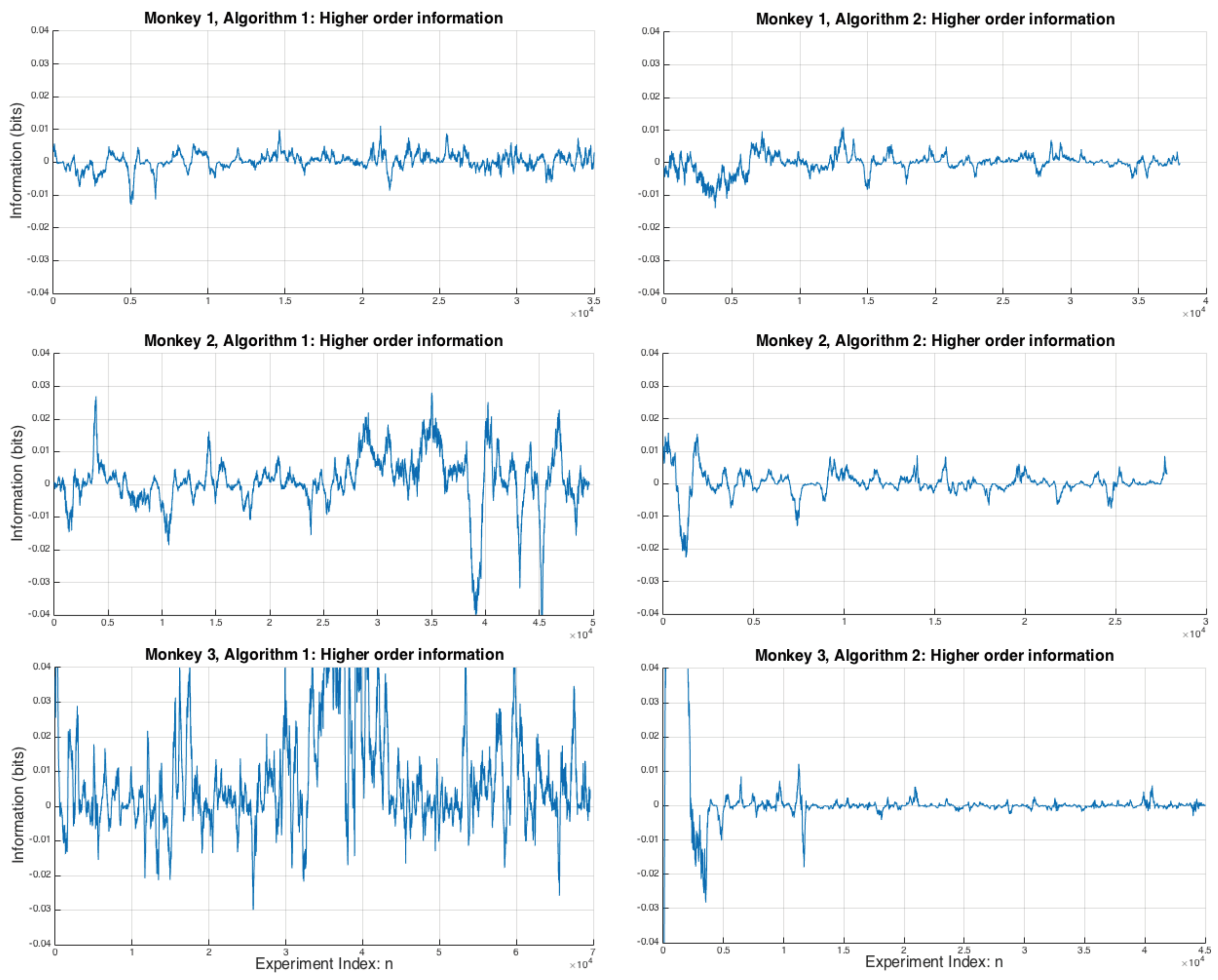
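One plausible formalisation of one-step memory is assumed here: the "total information" is the information that the previous pair of choices (A_{t-1}, B_{t-1}) carries about the monkey's current choice A_t. Here A is the monkey's choice sequence and B is the opponent's. The sketch below estimates this quantity with plug-in entropies. The function name and the choice of predictors are illustrative assumptions.

```python
from collections import Counter
from math import log2

def entropy(samples):
    """Plug-in Shannon entropy in bits."""
    n = len(samples)
    return -sum((c / n) * log2(c / n) for c in Counter(samples).values())

def one_step_total_information(own, other):
    """Plug-in estimate of I(A_t ; (A_{t-1}, B_{t-1})) in bits, where `own`
    is the monkey's choice sequence A and `other` the opponent's sequence B.
    Assumption: 'total information' is what the joint previous pair carries
    about the current choice."""
    if len(own) != len(other) or len(own) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    target = own[1:]                          # A_t
    past = list(zip(own[:-1], other[:-1]))    # (A_{t-1}, B_{t-1})
    joint = list(zip(target, past))
    return entropy(target) + entropy(past) - entropy(joint)
```

If the current choice is fully determined by the previous pair, for instance when the monkey simply copies the opponent's last move, this estimate equals the entropy of the current choice. If the choice is independent of the previous pair, the estimate is close to zero.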

3.2. Pairwise and Higher Order Interactions

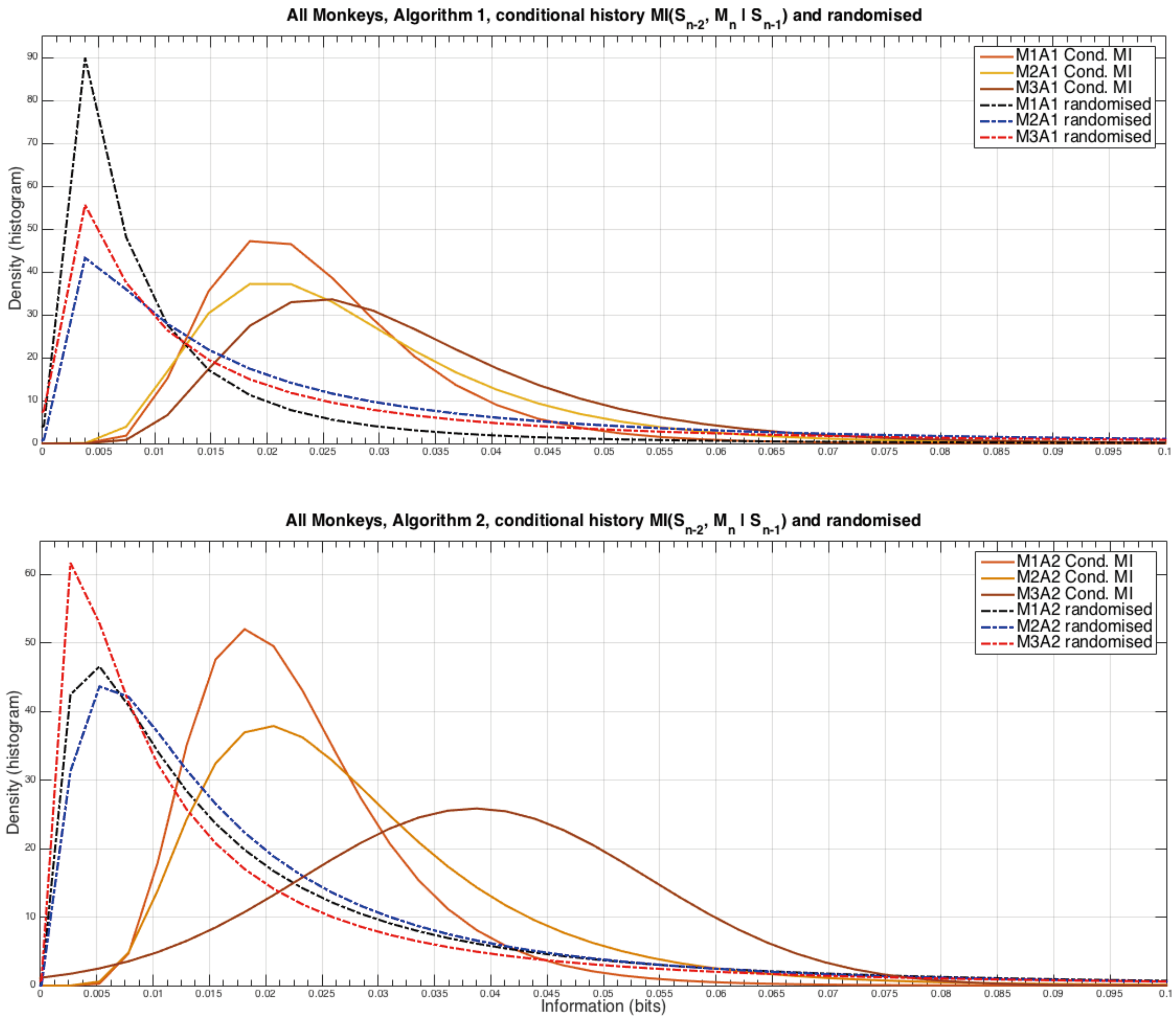
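The gap between pairwise and higher-order interactions can be shown with a "whole-minus-sum" quantity, I(X1,X2;Y) - I(X1;Y) - I(X2;Y). This is a simple net synergy/redundancy measure and not a full partial information decomposition. Its use here is an illustrative assumption, as are the function names. In the XOR toy example, each input alone carries no information about the output, yet the pair determines it completely.

```python
from collections import Counter
from itertools import product
from math import log2

def entropy(samples):
    """Plug-in Shannon entropy in bits."""
    n = len(samples)
    return -sum((c / n) * log2(c / n) for c in Counter(samples).values())

def mi(xs, ys):
    """Plug-in mutual information I(X;Y) in bits."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))

def whole_minus_sum(x1, x2, y):
    """I(X1,X2;Y) - I(X1;Y) - I(X2;Y) in bits. Positive values indicate net
    synergy (information only available from the pair jointly); negative
    values indicate net redundancy."""
    joint = list(zip(x1, x2))
    return mi(joint, y) - mi(x1, y) - mi(x2, y)

# Toy check: all four equally likely input pairs with Y = X1 XOR X2.
pairs = list(product([0, 1], repeat=2))
x1 = [a for a, _ in pairs]
x2 = [b for _, b in pairs]
y_xor = [a ^ b for a, b in pairs]
```

For the XOR data the pairwise terms `mi(x1, y_xor)` and `mi(x2, y_xor)` are zero and the whole-minus-sum value is 1 bit. When both inputs are copies of the output, the value is -1 bit, which is pure redundancy.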

3.3. Two-Step Monkey Memory and Conditional Mutual Information
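Two-step memory can be framed as a conditional mutual information: I(A_t ; A_{t-2} | A_{t-1}) asks what the choice two trials back adds about the current choice beyond the previous trial. This formalisation is assumed here for illustration. The sketch uses the identity I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z) with plug-in entropies. The function names are hypothetical.

```python
from collections import Counter
from math import log2

def entropy(samples):
    """Plug-in Shannon entropy in bits."""
    n = len(samples)
    return -sum((c / n) * log2(c / n) for c in Counter(samples).values())

def conditional_mi(xs, ys, zs):
    """Plug-in I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z), in bits."""
    return (entropy(list(zip(xs, zs))) + entropy(list(zip(ys, zs)))
            - entropy(list(zip(xs, ys, zs))) - entropy(zs))

def two_step_memory(own):
    """I(A_t ; A_{t-2} | A_{t-1}) for a single choice sequence: what the choice
    two trials back adds about the current choice beyond the previous one."""
    if len(own) < 3:
        raise ValueError("need at least three trials")
    return conditional_mi(own[2:], own[:-2], own[1:-1])
```

A strictly alternating sequence gives zero, because the previous choice already fixes everything. The repeating pattern 0, 0, 1, 1 gives 1 bit, because the current choice can only be predicted by looking two trials back.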

4. Discussion

| XNOR logic gate for win-stay, lose-switch strategy of agent i |
|---|
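The following minimal sketch shows why win-stay, lose-switch can be written as an XNOR gate. It assumes agent i's choices are coded as bits (for example Left = 0, Right = 1) and the previous outcome is coded as 1 for a win and 0 for a loss. This encoding and the function names are illustrative assumptions.

```python
from itertools import product

def xnor(a, b):
    """Logical XNOR on bits: 1 when the inputs are equal, else 0."""
    return 1 - (a ^ b)

def wsls_next_choice(prev_choice, won):
    """Win-stay, lose-switch: repeat the previous choice after a win,
    switch after a loss."""
    return prev_choice if won else 1 - prev_choice

# Under the assumed encoding, the WSLS rule coincides with XNOR(prev_choice, won).
# Each row: (prev_choice, won, WSLS next choice, XNOR output).
truth_table = [(c, w, wsls_next_choice(c, w), xnor(c, w))
               for c, w in product([0, 1], repeat=2)]
for row in truth_table:
    print(row)
```

Under this coding, WSLS is a purely synergistic function of its two inputs. When the previous choice and the outcome are independent and uniformly distributed, neither one alone carries information about the next choice; only the pair determines it.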

Acknowledgments

Conflicts of Interest

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Harré, M.S. Strategic Information Processing from Behavioural Data in Iterated Games. Entropy 2018, 20, 27. https://doi.org/10.3390/e20010027