Maximum Entropy Analysis of Flow Networks: Theoretical Foundation and Applications

Abstract

1. Introduction

2. Network Specifications

- (1) A set of N vertices (nodes), with index i or j.

- (2) A set of internal edges (including loop edges) between pairs of nodes, commonly represented by an adjacency matrix, in which each element indicates the connectivity between nodes i and j. On an undirected graph, each element is Boolean (0 or 1), indicating connectivity (hence the matrix is symmetric with a zero diagonal); the double sum of its elements is then fixed by the number of edges for undirected simple graphs, and by the number of edges and the number of self-loops for undirected loop-graphs. On a digraph, each element refers strictly to the connection from node i to j, thus incorporating the flow direction (so the matrix can be asymmetric). On a multigraph, each element indicates the number of edges from node i to j, with corresponding double sums for undirected multigraphs, undirected loop multigraphs and multidigraphs. For weighted graphs, each element represents the weight of the corresponding edge. Double summation then gives the corresponding totals for undirected, undirected loop and directed weighted graphs, expressed in terms of the total weight and the weight of self-loops.

- (3) A set of external edges (links) to nodes, represented by an N-dimensional external adjacency vector, in which each element indicates the number of external links to node i. For undirected graphs, digraphs and multigraphs, the elements sum to the total number of external links P. (While not implemented here, the external links could alternatively be represented by P connections to a fictitious external node, allowing all links to be united into an augmented adjacency matrix [24,33].) For a weighted graph, if the external edges are also weighted, the elements instead sum to the total weight of the external edges.

- (4) A set of M internal flow rates of some countable quantity B from nodes i to j along the ijth edge, measured in units of B s⁻¹. In general, the flow rates will be functions of time and/or the graph ensemble. For a simple graph, they can be grouped into a flow rate matrix, with nonexistent edges handled by assigning a designated placeholder value. (Alternatively, the admissible flow rates can be stacked into a flow rate vector, in which the nth entry is linked to the ijth edge by a lookup table [32,33].) In addition:

- (a) On an undirected graph, the non-diagonal flow rates are antisymmetric (the flow rate from i to j is the negative of that from j to i) and can reverse direction. In contrast, on a digraph they are strictly nonnegative, and the flow rates in the two directions need not be correlated. We recognize that in all networks the flows are quantized (especially significant for transport networks), but for maximum generality we here adopt the continuum assumption with real-valued flow rates.

- (b) A graph with self-loops can have nonzero diagonal flow rates, i.e., flows from a node to itself. Such flows are incompatible with potential flow networks, but can be realized in other systems such as transport networks.

- (c) On a multigraph, there may be several parallel connections from node i to j, with flow rates labelled by an additional index k. These can be united into a third-order matrix, again assigning a designated placeholder value for nonexistent edges. If summed along the kth direction, this gives a total internal flow rate matrix, whose entries are the total flow rates from node i to j.

- (d) For networks with alternating electrical currents, it is convenient to consider complex flow rates (phasors), commonly expanded in polar form in terms of the current amplitude, the phase and the imaginary unit.

- (e) For multidimensional flows, we define a separate flow rate for each species c on the ijth edge, where C is the total number of species (e.g., cars, trucks and buses on a road network, or independent chemical species on a chemical reaction network). These can be collated into a fourth-order matrix, which if summed along the kth direction gives the total internal flow rate matrix. For brevity, we refer to these as flows of a C-dimensional vector quantity, with one component per species.

- (f) In some flow networks, a given edge into a node may be connected only to some outward edges (e.g., certain traffic junctions in cities, or protected nodes in electrical networks). Such nodes must be treated as graphical objects in their own right, leading to embedded networks-of-networks. Such complications, while important, are not examined further here.

- (5) A set of P external flow rates, indexed by node i and external link m, each denoting the flow on the mth external link to node i, here defined positive if an inward flow. These flow rates are also measured in units of B s⁻¹, and can be grouped into a matrix, again assigning a designated placeholder value for nonexistent external links. Furthermore:

- (a) For networks with a maximum of one external link to each node, we need only consider the N-vector of external flow rates.

- (b) For alternating current networks, we consider complex external flow rates (phasors), expressed in terms of the external current amplitude and the phase.

- (c) For multidimensional flows, we consider the external flow rate of each species c, which can be assembled into the external flow rate matrix. This can be summed along the m direction to give the total external flow rate matrix, whose entries are the total external flow rates of each species at each node.

- (6) For potential flow systems: a set of N potentials, one at each node i, united into an N-dimensional vector. For alternating current networks, we consider complex potentials (phasors), commonly written in polar form in terms of the electrical potential amplitude and the phase. For multidimensional flows, we consider the independent potentials of each species at each node, assembled into a matrix.

- (7) For potential flow systems: a set of M potential losses (negative differences), given on a simple graph by the potential at node i minus the potential at node j for the ijth edge. These are generally interpreted as driving forces for the flow rates, measured in units of a difference or gradient in the intensive variable conjugate to B. Examples include losses in pressure, electrical potential, temperature (or reciprocal temperature) and chemical potential (or chemical potential divided by temperature). For simple graphs, these can be assembled into a matrix. In a multigraph, the potential losses are independent of the subindex k (all parallel edges from node i to j share the same potential loss), but it is useful computationally to retain all terms in a third-order matrix. For multicomponent flows, the potential losses of all species are collected into a higher-order matrix.

- (8) For potential flow systems: a set of M resistance or constitutive relations, one for each edge, which for purely local dependencies relate the potential loss on the ijth edge to the flow rate on that edge through the ijth resistance relation (3). For example, in electrical circuits, (3) give linear relations (Ohm’s law), in which the coefficient is the resistance (for direct currents) or impedance (for alternating currents) of the ijth edge. In pipe flow networks, (3) are often written as power law relations (Blasius’ law), defined by an exponent parameter and a coefficient [49,50], or can be expressed in more complicated forms such as the Colebrook equation [51]. Some resistance relations may not be functions: i.e., they may allow multiple solutions. For multidimensional flows, (3) applied to the cth pair of flow rates and potential losses gives an analogous relation for each species (4). In multidimensional flows, there is also the possibility of cross-phenomenological transport processes, e.g., thermodiffusive, thermoelectric, electrokinetic, electroosmotic or galvanomagnetic phenomena [52,53,54,55,56]. These are usually represented by linear Onsager relations (5), valid close to equilibrium, in which the phenomenological resistances satisfy reciprocity. However, in general these will require nonlinear relations (6), in which each phenomenological resistance becomes a function. The connections between reciprocal functions may be complicated. Equations (3)–(6) can be assembled into a single function defined by a resistance operator, which in general may contain non-local effects, cross-phenomenological effects and other dependencies.
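The specifications (1)–(8) above can be illustrated by a minimal Python sketch on a hypothetical three-node undirected simple graph. All names (`A`, `P`, `dP`, `Q`, `R`, `power_law_loss`) and all numerical values are assumptions chosen for illustration, not the notation or data of the cited works; the power-law exponent and coefficient are likewise placeholders.

```python
# Hypothetical 3-node undirected simple graph (a triangle); values are illustrative.
N = 3
edges = [(0, 1), (1, 2), (0, 2)]  # M = 3 internal edges

# Item (2): Boolean adjacency matrix, symmetric with a zero diagonal.
A = [[0] * N for _ in range(N)]
for i, j in edges:
    A[i][j] = A[j][i] = 1

# Item (6): one potential per node.
P = [10.0, 6.0, 4.0]

# Item (7): potential loss from i to j is the negative difference P[i] - P[j].
dP = [[(P[i] - P[j]) if A[i][j] else 0.0 for j in range(N)] for i in range(N)]

# Item (8): linear (Ohm-type) resistance relation dP = R * Q on each edge.
R = {(0, 1): 2.0, (1, 2): 1.0, (0, 2): 3.0}

def resistance(i, j):
    """Resistance of the undirected edge between nodes i and j."""
    return R[(min(i, j), max(i, j))]

# Item (4): flow rate matrix; nonexistent edges are assigned zero here.
Q = [[dP[i][j] / resistance(i, j) if A[i][j] else 0.0 for j in range(N)]
     for i in range(N)]

def power_law_loss(q, k=1.0, n=1.75):
    """Sign-preserving power-law loss k * q * |q|**(n - 1); k and n are placeholders."""
    return k * q * abs(q) ** (n - 1)

print(sum(map(sum, A)))   # double sum of the adjacency matrix
print(Q[0][1], Q[1][0])   # antisymmetric flow rates on an undirected graph
```

In this sketch the double sum of the adjacency matrix equals twice the number of edges, and the flow rates inherit their antisymmetry from the potential losses, as described in items (2) and (4)(a).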

3. Maximum Entropy Analysis

3.1. Overview

- (1) Definition of a joint probability and/or probability density function (pdf), to quantify all uncertainties in the specification of a given system;

- (2) Definition of a relative entropy function for the network, based on this probabilistic representation;

- (3) Incorporation of information about the a priori distribution of the system over its parameter space, in the form of a prior probability and/or pdf;

- (4) Encoding of other background information, for example any physical laws or known parameter values, in the form of constraints;

- (5) Maximization of the relative entropy function, subject to the prior and constraints, to predict the state of the system.
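The five steps above can be sketched on a toy discrete problem. The example below is an illustrative assumption rather than the network formulation itself: a single variable takes a few discrete values `x`, the prior is `q`, and the only constraint besides normalization is a prescribed mean. For this case the maximizer of the relative entropy has the well-known exponential form, and the Lagrange multiplier `lam` is found here by simple bisection. The function names are hypothetical.

```python
import math

def maxent_discrete(x, q, target_mean, lo=-50.0, hi=50.0, tol=1e-12):
    """Maximize -sum p ln(p/q) subject to sum p = 1 and sum p*x = target_mean.

    The solution has the form p_i = q_i * exp(-lam * x_i) / Z; the multiplier
    lam is located by bisection, since the mean decreases as lam increases.
    """
    def dist(lam):
        w = [qi * math.exp(-lam * xi) for xi, qi in zip(x, q)]
        z = sum(w)  # partition function
        return [wi / z for wi in w]

    def mean(lam):
        return sum(pi * xi for pi, xi in zip(dist(lam), x))

    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean(mid) > target_mean:
            lo = mid   # mean too large: increase lam
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return dist(lam), lam

def relative_entropy(p, q):
    """Relative entropy -sum p ln(p/q); its maximum value of zero occurs at p = q."""
    return -sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Steps (1)-(3): states, probabilities and a uniform prior (illustrative values).
x = [1, 2, 3, 4, 5, 6]
q = [1.0 / 6.0] * 6
# Steps (4)-(5): impose a mean of 4.5 and maximize the relative entropy.
p, lam = maxent_discrete(x, q, 4.5)
print([round(pi, 4) for pi in p], round(lam, 4))
```

The inferred distribution shifts weight towards larger values to satisfy the mean constraint, while remaining as close to the prior as the constraint allows.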

3.2. Uncertainty and Probabilistic Representation

3.3. Entropy and Prior Probabilities

3.4. Moment Constraints

- (1) Kirchhoff’s first law: Applied in the mean, this states that the net mean flow rate of each species c through each node i will be zero at steady state. For an undirected graph, this can be written as a balance of the sums of inflows and outflows at each node (27). On a directed graph, the flow paths into and out of each node must be counted separately (28). Reversing the sum and expectation operators, (27)–(28) can be assembled into the matrix equation (29), which is written in terms of vectors or matrices of 0s or 1s of appropriate dimension, the transpose ⊤, a coefficient that distinguishes undirected graphs from directed graphs (for which it equals 1), and an additional operator. Equation (29) makes use of the k-summed flow rate matrices, with its bracketed term accounting for the row and column sums respectively.

- (2) Kirchhoff’s second law: Applied in the mean, this states that at steady state, the mean potential losses must be in balance around each connected loop, or equivalently that the mean potentials at each node must be constant. For a flow network with multidimensional potentials, the first definition gives an expression (30) for each loop (cycle) ℓ on the network, in which the potential losses are added in the assigned direction of ℓ. In a multigraph, (30) applies to each parallel edge, while in a directed graph, the two loop orientations (clockwise or anticlockwise) can be independent. A search algorithm is required to identify a maximal set of independent loops, each expressed by a loop adjacency matrix whose elements indicate the presence and orientation of each edge in the loop. These can be stacked into a single loop adjacency matrix. Equation (30) can then be rewritten as the matrix equation (31), using a contraction product ⊘ over the common indices of two matrices, given by the sum of their element-wise products, which subsumes the vector scalar product (dot product) for vectors and the tensor scalar product (double dot product) for second order tensors. In some networks, additional terms (e.g., reservoir potentials, pump heads, electrical source potentials, etc.) may also appear in (30)–(31).
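As a minimal sketch of how the two Kirchhoff laws act as constraints on mean values, the Python functions below compute node and loop residuals for a hypothetical three-node undirected network. The sign conventions (flow rates positive from i to j, external flows positive inward), the function names and all numbers are assumptions made for illustration; they are not the matrix forms (29) and (31).

```python
def node_residuals(Q, theta):
    """Kirchhoff's first law at each node: external inflow minus net internal outflow.

    Q[i][j] is the (mean) flow rate from node i to j, antisymmetric on an
    undirected graph; theta[i] is the (mean) external inflow to node i.
    """
    n = len(Q)
    return [theta[i] - sum(Q[i][j] for j in range(n)) for i in range(n)]

def loop_residual(dP, loop):
    """Kirchhoff's second law: sum of (mean) potential losses around a directed loop."""
    return sum(dP[i][j] for i, j in zip(loop, loop[1:] + loop[:1]))

# Hypothetical mean values on a triangle network (illustrative only).
Q_mean = [[0.0, 2.0, 2.0],
          [-2.0, 0.0, 2.0],
          [-2.0, -2.0, 0.0]]
theta_mean = [4.0, 0.0, -4.0]
dP_mean = [[0.0, 4.0, 6.0],
           [-4.0, 0.0, 2.0],
           [-6.0, -2.0, 0.0]]

node_res = node_residuals(Q_mean, theta_mean)   # all zero when the node law holds
loop_res = loop_residual(dP_mean, [0, 1, 2])    # zero when the loop law holds
print(node_res, loop_res)
```

In a MaxEnt analysis, residuals of this kind would be required to vanish for the expected values rather than for every individual realization.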

3.5. Extended MaxEnt Algorithm

3.6. Role of Time

4. Applications

- (1)

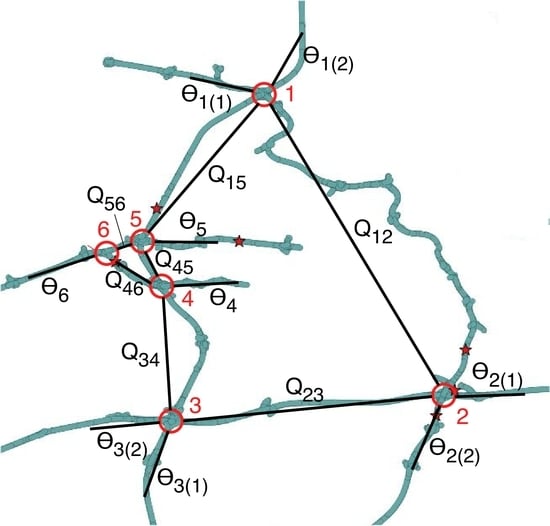

- Analyses of pipe flow networks used for water distribution systems, incorporating constraints on normalization (19), linear and nonlinear global expectation values (20) and (22), Kirchhoff’s node and loop laws (29) and (31), and resistance functions (32) [32,33,73,74,79,80,81,82,83,84,85]. This includes development of the analytical formulation, iterative numerical methods to handle nonlinear constraints, and rapid semi-analytical (quadratic programming) schemes for partition function integration. More recently, this work was extended by the use of reduced parameter basis sets to ensure consistency regardless of network representation [33,73,81]. Research also extended to comparative analyses of different prior probability functions [32,33,73,74,79], the use of priors to encode “soft constraints” [73,74,79], and formulation of an alternative linear algebra solution method for nonlinear systems with Gaussian priors [73,74,79]. The method was also demonstrated by application to a 1123-node, 1140-pipe water distribution network from Torrens, ACT, Australia [32,33,73]. An example set of inferred water flow rates on this network is illustrated in Figure 2a; further details of this analysis are given in [32].

- (2)

- Analyses of electrical networks, incorporating constraints on normalization (19), global expectation values (20) and (22), Kirchhoff’s node and loop laws (29) and (31), and resistance or impedance functions (32) [73,86]. This includes analysis of a 400-node electrical network in Campbell, ACT, Australia, subject to solar forcing from distributed household photovoltaic systems. An example set of inferred electrical power flows on this network is illustrated in Figure 2b, showing flow reversals due to high solar forcing; further details of this analysis are given in [73,86].

- (3)

- Analyses of transport networks, reformulated in terms of trip flow rates (from origins to destinations) rather than link flow rates, and incorporating constraints on normalization (19), global expectation values (20) and (22), various forms of Kirchhoff’s node laws (29) and various cost constraints [73,87]. These include different formulations using the gravity model of transport flows, or for route selection by proportional assignment or equilibrium assignment (cost minimization) methods.

- (4) Derivation of graph priors for several graph ensembles [88], invoking a relative entropy defined over graph macrostates, in terms of the posterior and prior probabilities of each macrostate within the graph ensemble. This is used in place of the Shannon entropy typically used in MaxEnt analyses of networks, e.g., [6,7,9,10,28,29,30], which is defined over the probabilities of individual graphs. The use of graph priors enables a simplified accounting over graph macrostates (taking advantage of the most important feature of statistical mechanics), thereby simplifying the analysis of networks with uncertainty in the network structure.
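The relation between the two entropies in item (4) can be illustrated numerically. The sketch below is a toy example under stated assumptions, not the derivation of [88]: the ensemble is all undirected simple graphs on three labelled nodes, macrostates are grouped by edge count, graphs within a macrostate are taken as equiprobable, and the prior over macrostates is proportional to the number of graphs in each. Under these assumptions the Shannon entropy over individual graphs equals the relative entropy over macrostates plus a constant. All names are hypothetical.

```python
import math

n_pairs = 3  # number of node pairs (possible edges) on 3 labelled nodes

# Number of individual graphs in each macrostate (edge count m = 0, 1, 2, 3).
g = [math.comb(n_pairs, m) for m in range(n_pairs + 1)]
total = sum(g)                     # total number of graphs in the ensemble
prior = [gm / total for gm in g]   # graph prior over macrostates

def relative_entropy(p, q):
    """Relative entropy -sum p ln(p/q) over graph macrostates."""
    return -sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def shannon_over_graphs(p_macro, degeneracy):
    """Shannon entropy over individual graphs, each with probability p_m / g_m."""
    return -sum(gm * (pm / gm) * math.log(pm / gm)
                for pm, gm in zip(p_macro, degeneracy) if pm > 0)

# An arbitrary posterior over macrostates (illustrative values).
p = [0.1, 0.2, 0.3, 0.4]

h_rel = relative_entropy(p, prior)
h_sh = shannon_over_graphs(p, g)
print(h_rel, h_sh, h_rel + math.log(total))
```

Because the two quantities differ only by the constant logarithm of the ensemble size in this toy case, maximizing either gives the same macrostate probabilities, while the relative entropy requires a sum over four macrostates rather than eight individual graphs.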

5. Comparison to Bayesian Inference

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Barabasi, A.L.; Albert, R. Emergence of scaling in random networks. Science 1999, 286, 509–512.

2. Strogatz, S.H. Exploring complex networks. Nature 2001, 410, 268–276.

3. Dorogovtsev, S.N.; Mendes, J.F.F. Evolution of networks. Adv. Phys. 2002, 51, 1079–1187.

4. Albert, R.; Barabási, A.-L. Statistical mechanics of complex networks. Rev. Mod. Phys. 2002, 74, 47–97.

5. Newman, M.E.J. The structure and function of complex networks. SIAM Rev. 2003, 45, 167–256.

6. Park, J.; Newman, M.E.J. Statistical mechanics of networks. Phys. Rev. E 2004, 70, 066117.

7. Barrat, A.; Barthélemy, M.; Vespignani, A. Dynamical Processes on Complex Networks; Cambridge University Press: Cambridge, UK, 2008.

8. Castellano, C.; Fortunato, S.; Loreto, V. Statistical physics of social dynamics. Rev. Mod. Phys. 2009, 81, 591–646.

9. Newman, M.E.J. Networks: An Introduction; Oxford University Press: Oxford, UK, 2010.

10. Squartini, T.; Garlaschelli, D. Maximum-Entropy Networks: Pattern Detection, Network Reconstruction and Graph Combinatorics; Springer: Cham, Switzerland, 2017.

11. Barabási, A.-L.; Albert, R.; Jeong, H. Scale-free characteristics of random networks: the topology of the world-wide web. Phys. A 2000, 281, 69–77.

12. Fu, F.; Liu, L.; Wang, L. Empirical analysis of online social networks in the age of Web 2.0. Phys. A 2008, 387, 675–684.

13. Caamaño-Martín, E.; Laukamp, H.; Jantsch, M.; Erge, T.; Thornycroft, J.; De Moor, H.; Cobben, S.; Suna, D.; Gaiddon, B. Interaction between photovoltaic distributed generation and electricity networks. Prog. Photovolt. Res. Appl. 2008, 16, 629–643.

14. Buldyrev, S.V.; Parshani, R.; Paul, G.; Stanley, H.E.; Havlin, S. Catastrophic cascade of failures in interdependent networks. Nature 2010, 464, 1025–1028.

15. Wilson, A.G. A statistical theory of spatial distribution models. Transp. Res. 1967, 1, 253–269.

16. Willumsen, L.G. Estimation of an O-D Matrix from Traffic Counts: A Review; Working Paper 99; Institute of Transport Studies, University of Leeds: Leeds, UK, 1978.

17. Guimera, R.; Mossa, S.; Turtschi, A.; Amaral, L.A.N. The worldwide air transportation network: Anomalous centrality, community structure, and cities’ global roles. Proc. Natl. Acad. Sci. USA 2005, 102, 7794–7799.

18. de Ortúzar, J.D.; Willumsen, L.G. Modelling Transport, 4th ed.; Wiley: New York, NY, USA, 2011.

19. Barthélemy, M. Spatial networks. Phys. Rep. 2011, 499, 1–101.

20. Arenas, A.; Díaz-Guilera, A.; Kurths, J.; Moreno, Y.; Zhou, C. Synchronization in complex networks. Phys. Rep. 2008, 469, 93–153.

21. George, B.; Kim, S. Spatio-Temporal Networks; Springer: Heidelberg, Germany, 2013.

22. Albantakis, L.; Marshall, W.; Hoel, E.; Tononi, G. What caused what? A quantitative account of actual causation using dynamical causal networks. Entropy 2019, 21, 459.

23. Jeong, H.; Tombor, B.; Albert, R.; Oltvai, Z.N.; Barabasi, A.L. The large-scale organization of metabolic networks. Nature 2000, 407, 651–654.

24. Famili, I.; Palsson, B.O. The convex basis of the left null space of the stoichiometric matrix leads to the definition of metabolically meaningful pools. Biophys. J. 2003, 85, 16–26.

25. Guimera, R.; Amaral, L.A.N. Functional cartography of complex metabolic networks. Nature 2005, 433, 895.

26. Reichstein, M.; Falge, E.; Baldocchi, D.; Papale, D.; Aubinet, M.; Berbigier, P.; Bernhofer, C.; Buchmann, N.; Gilmanov, T.; Granier, A.; et al. On the separation of net ecosystem exchange into assimilation and ecosystem respiration: review and improved algorithm. Glob. Chang. Biol. 2005, 11, 1424–1439.

27. Donges, J.F.; Zou, Y.; Marwan, N.; Kurths, J. Complex networks in climate dynamics: Comparing linear and nonlinear network construction methods. Eur. Phys. J. Spec. Top. 2009, 174, 157–179.

28. Bianconi, G. Statistical mechanics of multiplex networks: Entropy and overlap. Phys. Rev. E 2013, 87, 062806.

29. Menichetti, G.; Remondini, D.; Bianconi, G. Correlations between weights and overlap in ensembles of weighted multiplex networks. Phys. Rev. E 2014, 90, 062817.

30. Boccaletti, S.; Bianconi, G.; Criado, R.; del Genio, C.I.; Gómez-Gardeñes, J.; Romance, M.; Sendiña-Nadal, I.; Wang, Z.; Zanin, M. The structure and dynamics of multilayer networks. Phys. Rep. 2014, 544, 1–122.

31. Kivelä, M.; Arenas, A.; Barthélemy, M.; Gleeson, J.P.; Moreno, Y.; Porter, M.A. Multilayer networks. J. Complex Netw. 2014, 2, 203–271.

32. Waldrip, S.H.; Niven, R.K.; Abel, M.; Schlegel, M. Maximum entropy analysis of hydraulic pipe flow networks. J. Hydraul. Eng. ASCE 2016, 142, 04016028.

33. Waldrip, S.H.; Niven, R.K.; Abel, M.; Schlegel, M. Reduced-parameter method for maximum entropy analysis of hydraulic pipe flow networks. J. Hydraul. Eng. ASCE 2018, 144, 04017060.

34. Perra, N.; Gonçalves, B.; Pastor-Satorras, R.; Vespignani, A. Activity driven modeling of time varying networks. Sci. Rep. 2012, 2, 469.

35. Keeling, M.J.; Eames, K.T.D. Networks and epidemic models. J. R. Soc. Interface 2005, 2, 295–307.

36. Jaynes, E.T. Information theory and statistical mechanics. Phys. Rev. 1957, 106, 620–630.

37. Jaynes, E.T. Information theory and statistical mechanics. In Brandeis University Summer Institute, Lectures in Theoretical Physics, Vol. 3: Statistical Physics; Ford, K.W., Ed.; Benjamin-Cummings Publ. Co.: San Francisco, CA, USA, 1963; pp. 181–218. Also in Papers on Probability, Statistics and Statistical Physics; Rosenkrantz, R.D., Ed.; D. Reidel Publ. Co.: Dordrecht, Holland, 1983; pp. 39–76.

38. Jaynes, E.T. Probability Theory: The Logic of Science; Bretthorst, G.L., Ed.; Cambridge U.P.: Cambridge, UK, 2003.

39. Tribus, M. Information theory as the basis for thermostatics and thermodynamics. J. Appl. Mech. Trans. ASME 1961, 28, 1–8.

40. Tribus, M. Thermostatics and Thermodynamics; D. Van Nostrand Co. Inc.: Princeton, NJ, USA, 1961.

41. Kapur, J.N.; Kesavan, H.K. Entropy Optimization Principles with Applications; Academic Press, Inc.: Boston, MA, USA, 1992.

42. Gzyl, H. The Method of Maximum Entropy; World Scientific: Singapore, 1995.

43. Wu, N. The Maximum Entropy Method; Springer: Berlin, Germany, 1997.

44. Boltzmann, L. Über die Beziehung zwischen dem zweiten Hauptsatze der Mechanischen Wärmetheorie und der Wahrscheinlichkeitsrechnung, respektive den Sätzen über das Wärmegleichgewicht. Wien. Ber. 1877, 76, 373–435. English translation: Le Roux, J., 2002. Available online: http://users.polytech.unice.fr/~leroux/boltztrad.pdf (accessed on 1 June 2019).

45. Planck, M. Über das Gesetz der Energieverteilung im Normalspektrum. Annalen der Physik 1901, 4, 553–563.

46. Ellis, R.S. Entropy, Large Deviations, and Statistical Mechanics; Springer: New York, NY, USA, 1985.

47. Kullback, S.; Leibler, R.A. On information and sufficiency. Annals Math. Stat. 1951, 22, 79–86.

48. Sanov, I.N. On the probability of large deviations of random variables. Mat. Sbornik 1957, 42, 11–44. (In Russian)

49. Schlichting, H.; Gersten, K. Boundary Layer Theory, 8th ed.; Springer: New York, NY, USA, 2001.

50. Niven, R.K. Simultaneous extrema in the entropy production for steady-state fluid flow in parallel pipes. J. Non-Equilib. Thermodyn. 2010, 35, 347–378.

51. Colebrook, C.F. Turbulent Flow in Pipes, With Particular Reference to the Transition Region Between the Smooth and Rough Pipe Laws. J. ICE 1939, 11, 133–156.

52. De Groot, S.R.; Mazur, P. Non-Equilibrium Thermodynamics; Dover Publications: New York, NY, USA, 1984.

53. Kreuzer, H.J. Nonequilibrium Thermodynamics and Its Statistical Foundations; Clarendon Press: Oxford, UK, 1981.

54. Demirel, Y. Nonequilibrium Thermodynamics; Elsevier: New York, NY, USA, 2002.

55. Bird, R.B.; Stewart, W.E.; Lightfoot, E.N. Transport Phenomena, 2nd ed.; John Wiley & Sons: New York, NY, USA, 2002.

56. Kondepudi, D.; Prigogine, I. Modern Thermodynamics: From Heat Engines to Dissipative Structures, 2nd ed.; John Wiley & Sons: Chichester, UK, 2015.

57. Tolman, R.C. The Principles of Statistical Mechanics; Oxford University Press: London, UK, 1938.

58. Davidson, N. Statistical Mechanics; McGraw-Hill: New York, NY, USA, 1962.

59. Hill, T.L. Statistical Mechanics: Principles and Selected Applications; McGraw-Hill: New York, NY, USA, 1956.

60. Callen, H.B. Thermodynamics and an Introduction to Thermostatistics, 2nd ed.; John Wiley: New York, NY, USA, 1985.

61. Niven, R.K. Steady state of a dissipative flow-controlled system and the maximum entropy production principle. Phys. Rev. E 2009, 80, 021113.

62. Niven, R.K.; Noack, B.R. Control volume analysis, entropy balance and the entropy production in flow systems. In Beyond the Second Law: Entropy Production and Non-Equilibrium Systems; Dewar, R.C., Lineweaver, C., Niven, R.K., Regenauer-Lieb, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2014; pp. 129–162.

63. Niven, R.K.; Ozawa, H. Entropy production extremum principles. In Handbook of Applied Hydrology, 2nd ed.; Singh, V., Ed.; McGraw-Hill: New York, NY, USA, 2016; Chapter 32.

64. Bayes, T. (presented by Price, R.). An essay towards solving a problem in the doctrine of chances. Philos. Trans. R. Soc. Lond. 1763, 53, 370–418.

65. Laplace, P. Mémoire sur la probabilité des causes par les évènements. l’Académie Royale des Sciences 1774, 6, 621–656.

66. Polya, G. Mathematics and Plausible Reasoning, Vol II, Patterns of Plausible Inference; Princeton U.P.: Princeton, NJ, USA, 1954.

67. Polya, G. Mathematics and Plausible Reasoning, Vol II, Patterns of Plausible Inference, 2nd ed.; Princeton U.P.: Princeton, NJ, USA, 1968.

68. Cox, R.T. The Algebra of Probable Inference; Johns Hopkins Press: Baltimore, MD, USA, 1961.

69. Zwillinger, D. CRC Standard Mathematical Tables and Formulae; Chapman & Hall/CRC Press: Boca Raton, FL, USA, 2003.

70. Monin, A.S.; Yaglom, A.M. Statistical Fluid Mechanics: Mechanics of Turbulence, Vol. I; Dover Publ.: Mineola, NY, USA, 1971.

71. Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

72. Favretti, M. Lagrangian submanifolds generated by the maximum entropy principle. Entropy 2005, 7, 1–14.

73. Waldrip, S.H. Probabilistic Analysis of Flow Networks using the Maximum Entropy Method. Ph.D. Thesis, The University of New South Wales, Canberra, Australia, 2017.

74. Waldrip, S.H.; Niven, R.K. Comparison between Bayesian and maximum entropy analyses of flow networks. Entropy 2017, 19, 58.

75. Dewar, R.C. Information theory explanation of the fluctuation theorem, maximum entropy production and self-organized criticality in non-equilibrium stationary states. J. Phys. A Math. Gen. 2003, 36, 631–641.

76. Dewar, R.C. Maximum entropy production and the fluctuation theorem. J. Phys. A Math. Gen. 2005, 38, L371–L381.

77. Jaynes, E.T. The minimum entropy production principle. Ann. Rev. Phys. Chem. 1980, 31, 579–601.

78. Wang, Q.A. Maximum entropy change and least action principle for nonequilibrium systems. Astrophys. Space Sci. 2006, 305, 273–281.

79. Waldrip, S.H.; Niven, R.K. Bayesian and Maximum Entropy Analyses of Flow Networks with Gaussian or Non-Gaussian Priors, and Soft Constraints. In Proceedings of the 37th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering (MaxEnt 2017), Sao Paulo, Brazil, 9–14 July 2017. Springer Proc. Math. Stat., 2018, 239, 285–294.

80. Niven, R.K.; Waldrip, S.H.; Abel, M.; Schlegel, M. Probabilistic modelling of water distribution networks, extended abstract. In Proceedings of the 22nd International Congress on Modelling and Simulation (MODSIM2017), Hobart, Tasmania, Australia, 3–8 December 2017.

81. Waldrip, S.H.; Niven, R.K.; Abel, M.; Schlegel, M. Consistent maximum entropy representations of pipe flow networks. In Proceedings of the 36th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering (MaxEnt 2016), Ghent, Belgium, 10–15 July 2016. AIP Conf. Proc. 1853, Melville NY USA, 2017, 070004.

82. Waldrip, S.H.; Niven, R.K.; Abel, M.; Schlegel, M.; Noack, B.R. MaxEnt analysis of a water distribution network in Canberra, ACT, Australia. In Proceedings of the 34th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering (MaxEnt 2014), Amboise, France, 21–26 September 2014. AIP Conf. Proc. 1641, Melville NY USA, 2015, 479–486.

83. Niven, R.K.; Abel, M.; Waldrip, S.H.; Schlegel, M. Maximum entropy analysis of flow and reaction networks. In Proceedings of the 34th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering (MaxEnt 2014), Amboise, France, 21–26 September 2014. AIP Conf. Proc. 1641, Melville NY USA, 2015, 271–278.

84. Niven, R.K.; Abel, M.; Schlegel, M.; Waldrip, S.H. Maximum entropy analysis of flow networks. In Proceedings of the 33rd International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering (MaxEnt 2013), Canberra, Australia, 15–20 December 2013. AIP Conf. Proc. 1636, Melville NY USA, 2014, 159–164.

85. Waldrip, S.H.; Niven, R.K.; Abel, M.; Schlegel, M. Maximum entropy analysis of hydraulic pipe networks. In Proceedings of the 33rd International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering (MaxEnt 2013), Canberra, Australia, 15–20 December 2013. AIP Conf. Proc. 1636, Melville NY USA, 2014, 180–186.

86. Niven, R.K.; Waldrip, S.H.; Abel, M.; Schlegel, M. Probabilistic modelling of energy networks, extended abstract. In Proceedings of the 22nd International Congress on Modelling and Simulation (MODSIM2017), Hobart, Tasmania, Australia, 3–8 December 2017.

87. Waldrip, S.H.; Niven, R.K.; Abel, M.; Schlegel, M. MaxEnt analysis of transport networks. In Proceedings of the 36th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering (MaxEnt 2016), Ghent, Belgium, 10–15 July 2016. AIP Conf. Proc. 1853, Melville NY USA, 2017, 070003.

88. Niven, R.K.; Abel, M.; Waldrip, S.H.; Schlegel, M.; Guimera, R. Maximum entropy analysis of flow networks with structural uncertainty (graph ensembles). In Proceedings of the 37th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering (MaxEnt 2017), Sao Paulo, Brazil, 9–14 July 2017. Springer Proc. Math. Stat. 2018, 239, 261–274.

89. Skilling, J.; Gull, S. Bayesian maximum entropy image reconstruction. In Spatial Statistics and Imaging: Papers from the Research Conference on Image Analysis and Spatial Statistics, Bowdoin College, Brunswick, Maine, Summer 1988; Possolo, A., Ed.; Institute of Mathematical Statistics: Hayward, CA, USA, 1991; pp. 341–367.

90. Mohammad-Djafari, A.; Giovannelli, J.F.; Demoment, G.; Idier, J. Regularization, maximum entropy and probabilistic methods in mass spectrometry data processing problems. Int. J. Mass Spectrom. 2002, 215, 175–193.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Niven, R.K.; Abel, M.; Schlegel, M.; Waldrip, S.H. Maximum Entropy Analysis of Flow Networks: Theoretical Foundation and Applications. Entropy 2019, 21, 776. https://doi.org/10.3390/e21080776
