Tsallis Entropy for Loss Models and Survival Models Involving Truncated and Censored Random Variables

Abstract

1. Introduction

2. Preliminaries

2.1. The Exponential Distribution

2.2. The Weibull Distribution

2.3. The Distribution

2.4. The Gamma Distribution

2.5. The Tsallis Entropy

3. Tsallis Entropy Approach for Loss Models

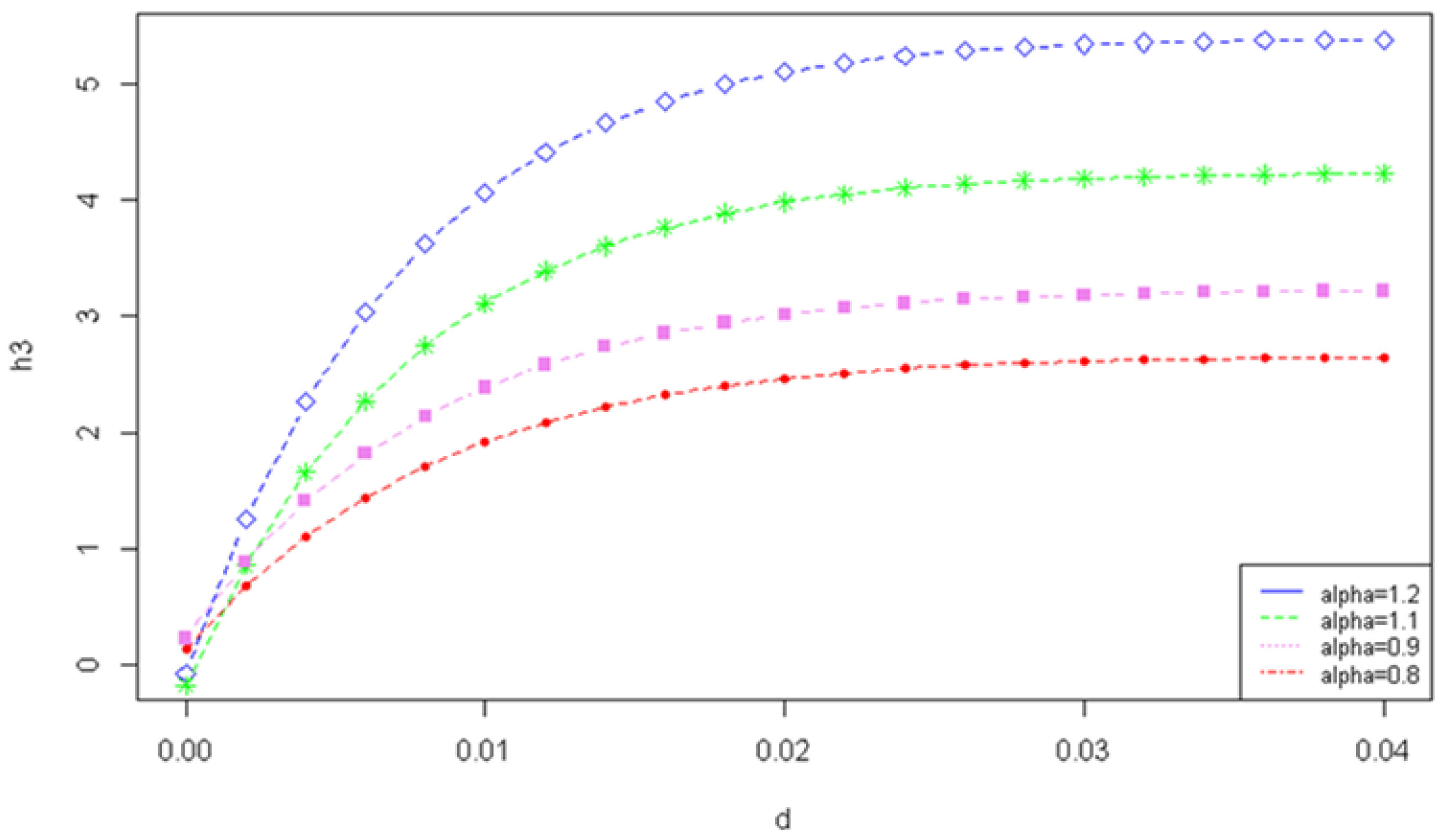

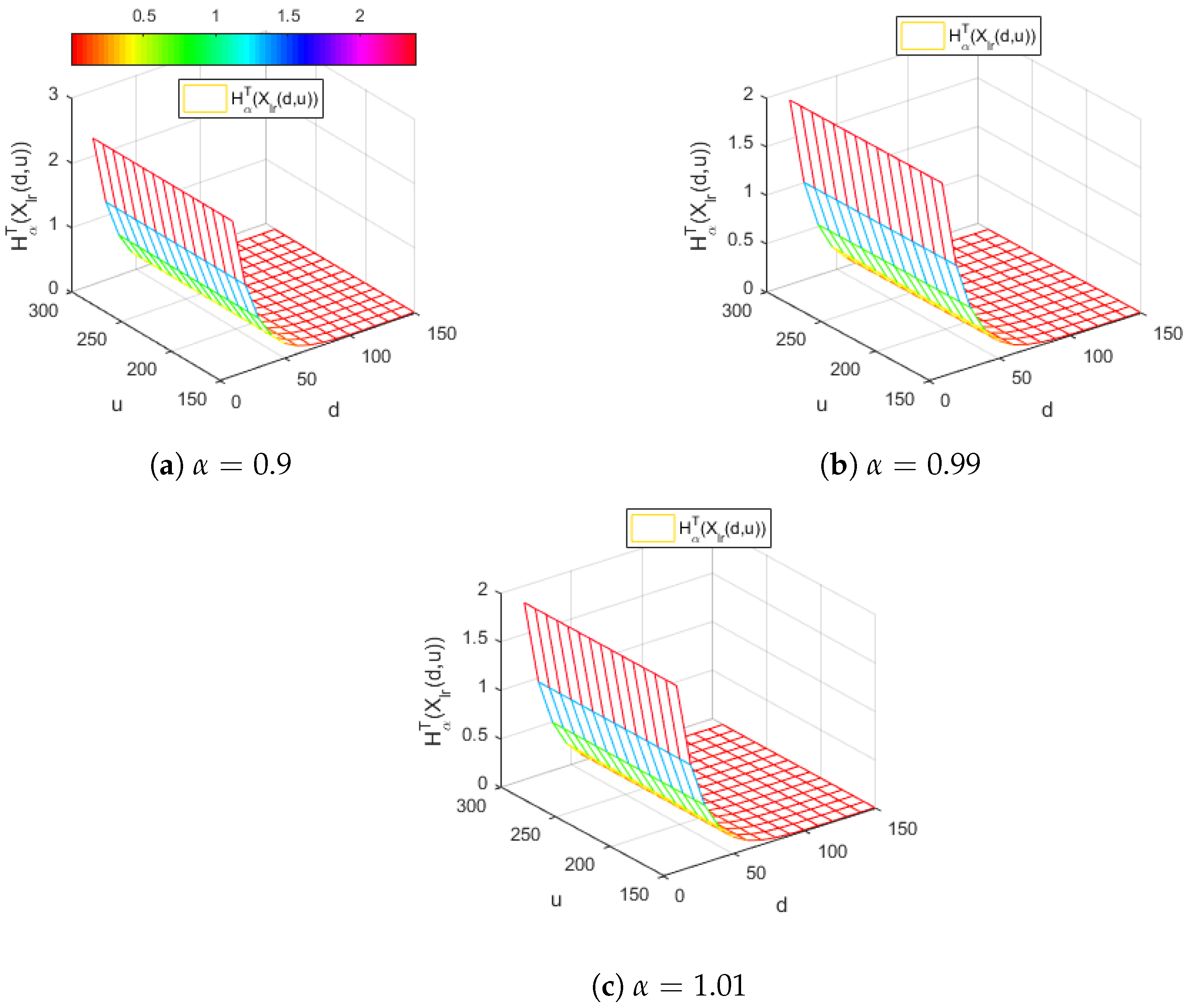

3.1. Loss Models Involving Truncation or Censoring from Below

3.2. Loss Models Involving Truncation or Censoring from Above

3.3. Loss Models Involving Truncation from Above and from Below

3.4. Loss Models under Inflation

4. Tsallis Entropy Approach for Survival Models

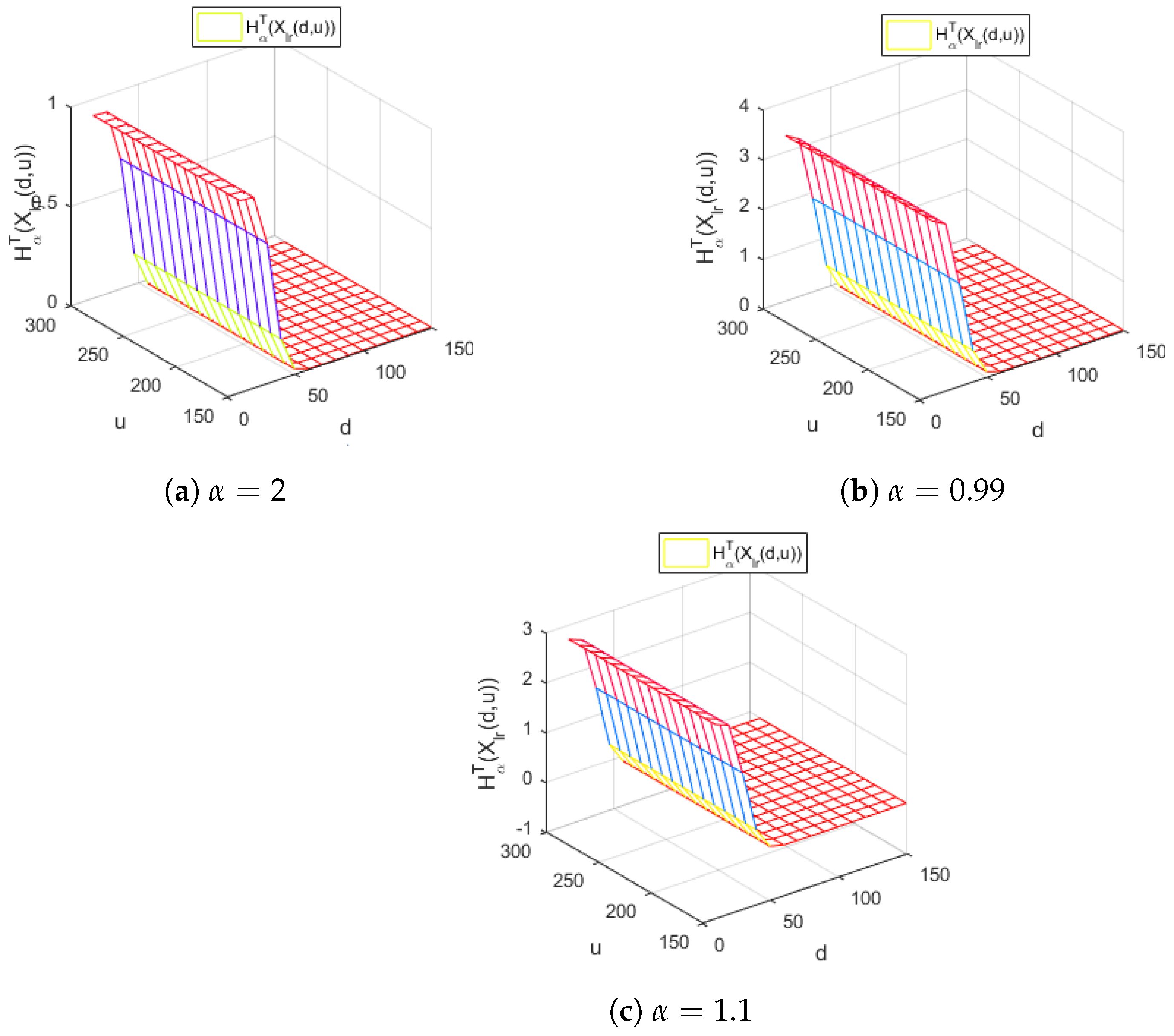

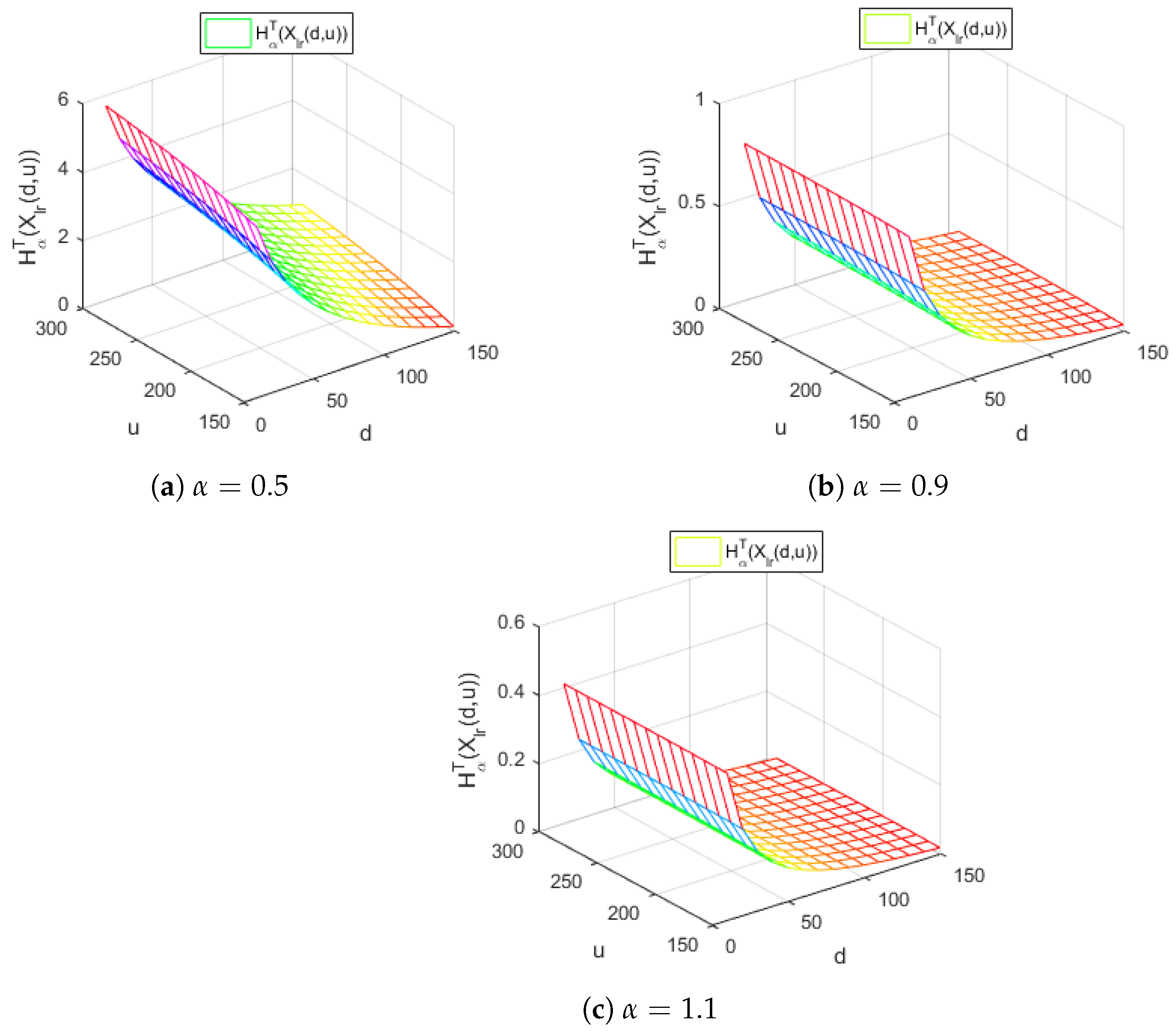

4.1. The Proportional Hazard Rate Model

4.2. The Proportional Reversed Hazard Rate Model

5. Applications

- The Tsallis entropy corresponding to the random variable X which models the loss;

- The Tsallis entropy of the left-truncated loss and, respectively, of the censored loss random variable corresponding to the per-payment risk model with a deductible d;

- The Tsallis entropy of the right-truncated loss and, respectively, of the censored loss random variable corresponding to the per-payment risk model with a policy limit u;

- The Tsallis entropy of the right-truncated loss random variable corresponding to the per-loss risk model with a deductible d and a policy limit u.
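The truncated quantities listed above can be illustrated with a minimal sketch. It assumes an exponentially distributed loss and the common convention $H_q(X) = \frac{1}{q-1}\left(1 - \int f^q(x)\,dx\right)$ for $q \neq 1$; the function name, the rate 0.5 and the values of d and u are hypothetical choices made for illustration and are not taken from the tables of this paper. Censored losses are left out because they carry a point mass and need a separate treatment.

```python
import math


def tsallis_entropy_truncated_exp(rate, q, lower=0.0, upper=math.inf):
    """Tsallis entropy of an Exp(rate) loss conditioned on lower < X < upper.

    Illustrative helper (hypothetical name). Assumes the convention
    H_q = (1 - integral of g**q) / (q - 1), valid for q != 1.
    """
    if rate <= 0 or q <= 0 or q == 1:
        raise ValueError("requires rate > 0, q > 0 and q != 1")
    if not 0 <= lower < upper:
        raise ValueError("requires 0 <= lower < upper")
    # Probability mass kept by the truncation: P(lower < X < upper).
    mass = math.exp(-rate * lower) - math.exp(-rate * upper)
    # Closed form of the integral of f(x)**q over (lower, upper),
    # where f(x) = rate * exp(-rate * x).
    integral_fq = rate ** (q - 1) * (
        math.exp(-q * rate * lower) - math.exp(-q * rate * upper)
    ) / q
    # The truncated density is f / mass, so its q-th power
    # integrates to integral_fq / mass**q.
    return (1.0 - integral_fq / mass ** q) / (q - 1.0)


# Hypothetical parameters: rate 0.5, q = 2, deductible d = 3, limit u = 10.
rate, q, d, u = 0.5, 2.0, 3.0, 10.0
print("loss X:               ", tsallis_entropy_truncated_exp(rate, q))
print("left-truncated at d:  ", tsallis_entropy_truncated_exp(rate, q, lower=d))
print("right-truncated at u: ", tsallis_entropy_truncated_exp(rate, q, upper=u))
print("truncated to (d, u):  ", tsallis_entropy_truncated_exp(rate, q, lower=d, upper=u))
```

Under this exponential assumption the left-truncated entropy coincides with that of the original loss, a consequence of the memoryless property, while the right-truncated entropy approaches it as u grows. Other loss distributions would need their own integral, computed in closed form or numerically.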

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

|  | u |  |  |  |  |  |  |
|---|---|---|---|---|---|---|---|
| 0.5 | 10 | 5.434 | 5.5005 | 5.3837 | 3.7994 | 4.1067 | 4.0564 |
|  | 15 |  |  |  | 4.5725 | 4.7622 | 4.712 |
|  | 20 |  |  |  | 4.9845 | 5.09140 | 5.0411 |
|  | 25 |  |  |  | 5.2006 | 5.2582 | 5.2079 |
| 0.9 | 10 | 2.5446 | 2.5778 | 2.5156 | 2.2220 | 2.3504 | 2.3214 |
|  | 15 |  |  |  | 2.4306 | 2.488 | 2.4591 |
|  | 20 |  |  |  | 2.505432 | 2.52792 | 2.4989660524 |
|  | 25 |  |  |  | 2.5156 | 2.5314 | 2.5396 |
| 1 | 10 | 2.20865 | 2.2369 | 1.091 | 2.316 | 2.0827 | 2.0792 |
|  | 15 |  |  |  | 2.2534 | 2.1767 | 2.1732 |
|  | 20 |  |  |  | 2.22484 | 2.20041 | 2.1969 |
|  | 25 |  |  |  | 2.2140 | 2.2064 | 2.203 |
| 1.5 | 10 | 1.26474 | 1.278 | 1.2521 | 1.2094 | 1.24799 | 1.2353 |
|  | 15 |  |  |  | 1.2503 | 1.2626 | 1.2499 |
|  | 20 |  |  |  | 1.2609475715 | 1.2644 | 1.2518 |
|  | 25 |  |  |  | 1.2637 | 1.2647 | 1.2525 |
| 2 | 10 | 0.8459 | 0.8524 | 0.8396 | 0.8279 | 0.8433 | 0.837 |
|  | 15 |  |  |  | 0.8415 | 0.8457 | 0.83944 |
|  | 20 |  |  |  | 0.8448 | 0.8459 | 0.8395 |
|  | 25 |  |  |  | 0.8456 | 0.8459 | 0.8396 |

|  | u |  |  |  |  |  |  |
|---|---|---|---|---|---|---|---|
| 0.5 | 10 | 5.434 | 5.5043 | 5.33 | 3.7994 | 4.1067 | 4.0027 |
|  | 15 |  |  |  | 4.5725 | 4.7622 | 4.6583 |
|  | 20 |  |  |  | 4.98457 | 5.091 | 4.9874 |
|  | 25 |  |  |  | 5.2006 | 5.2582 | 5.1542 |
| 0.9 | 10 | 2.5446 | 2.5796 | 2.4829 | 2.222 | 2.35 | 2.2887 |
|  | 15 |  |  |  | 2.43 | 2.488 | 2.4264 |
|  | 20 |  |  |  | 2.5054 | 2.5279 | 2.4662 |
|  | 25 |  |  |  | 2.5314 | 2.5396 | 2.4779 |
| 1 | 10 | 2.20865 | 2.2384 | 1.0621 | 2.31601 | 2.0827 | 2.09548 |
|  | 15 |  |  |  | 2.2534 | 2.1767 | 2.1894 |
|  | 20 |  |  |  | 2.2248 | 2.2004 | 2.2131 |
|  | 25 |  |  |  | 2.214 | 2.2064 | 2.2192 |
| 1.5 | 10 | 1.26474 | 1.2786 | 1.2364 | 1.2094 | 1.2479 | 1.2197 |
|  | 15 |  |  |  | 1.2503 | 1.2626 | 1.2343 |
|  | 20 |  |  |  | 1.2609 | 1.2644 | 1.2362 |
|  | 25 |  |  |  | 1.26373 | 1.2647 | 1.2364 |
| 2 | 10 | 0.8459 | 0.85275 | 0.8311 | 0.82792 | 0.8433 | 0.8285 |
|  | 15 |  |  |  | 0.8415 | 0.8457 | 0.8309 |
|  | 20 |  |  |  | 0.8448 | 0.8459 | 0.8395 |
|  | 25 |  |  |  | 0.8448 | 0.8459 | 0.8311 |

|  | u |  |  |  |  |  |  |
|---|---|---|---|---|---|---|---|
| 0.5 | 10 | 5.434 | 5.508 | 5.2754 | 3.7994 | 4.1067 | 3.9481 |
|  | 15 |  |  |  | 4.5725 | 4.7622 | 4.6036 |
|  | 20 |  |  |  | 4.9845 | 5.0914 | 4.9328 |
|  | 25 |  |  |  | 5.2006 | 5.2582 | 5.0996 |
| 0.9 | 10 | 2.5446 | 2.5812 | 2.4491 | 2.222 | 2.3504 | 2.2549 |
|  | 15 |  |  |  | 2.4306 | 2.488 | 2.3926 |
|  | 20 |  |  |  | 2.5054 | 2.5279 | 2.4324 |
|  | 25 |  |  |  | 2.5314 | 2.5396 | 2.4441 |
| 1 | 10 | 2.2086 | 2.2398 | 1.03212 | 2.316 | 2.08275 | 2.11294 |
|  | 15 |  |  |  | 2.2534 | 2.1767 | 2.20691 |
|  | 20 |  |  |  | 2.2248 | 2.2004 | 2.23 |
|  | 25 |  |  |  | 2.214 | 2.2064 | 2.2366 |
| 1.5 | 10 | 1.2647 | 1.2792 | 1.2199 | 1.2094 | 1.2479 | 1.2031 |
|  | 15 |  |  |  | 1.2503 | 1.2626 | 1.2178 |
|  | 20 |  |  |  | 1.2609 | 1.2644 | 1.2196 |
|  | 25 |  |  |  | 1.2637 | 1.2647 | 1.2199 |
| 2 | 10 | 0.8459 | 0.853 | 0.8219 | 0.8279 | 0.8433 | 0.8193 |
|  | 15 |  |  |  | 0.8415 | 0.8457 | 0.8218 |
|  | 20 |  |  |  | 0.8448 | 0.8459 | 0.8219 |
|  | 25 |  |  |  | 0.8456 | 0.8459 | 0.8219 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Preda, V.; Dedu, S.; Iatan, I.; Cernat, I.D.; Sheraz, M. Tsallis Entropy for Loss Models and Survival Models Involving Truncated and Censored Random Variables. Entropy 2022, 24, 1654. https://doi.org/10.3390/e24111654
