Effective Visual Tracking Using Multi-Block and Scale Space Based on Kernelized Correlation Filters

Abstract

1. Introduction

2. Related Work

2.1. Correlation Filter-Based Tracking

2.2. Part-Based Tracking

3. Proposed Method

3.1. The KCF Tracker

3.2. Scale Estimation Strategy via Scale Space Filter

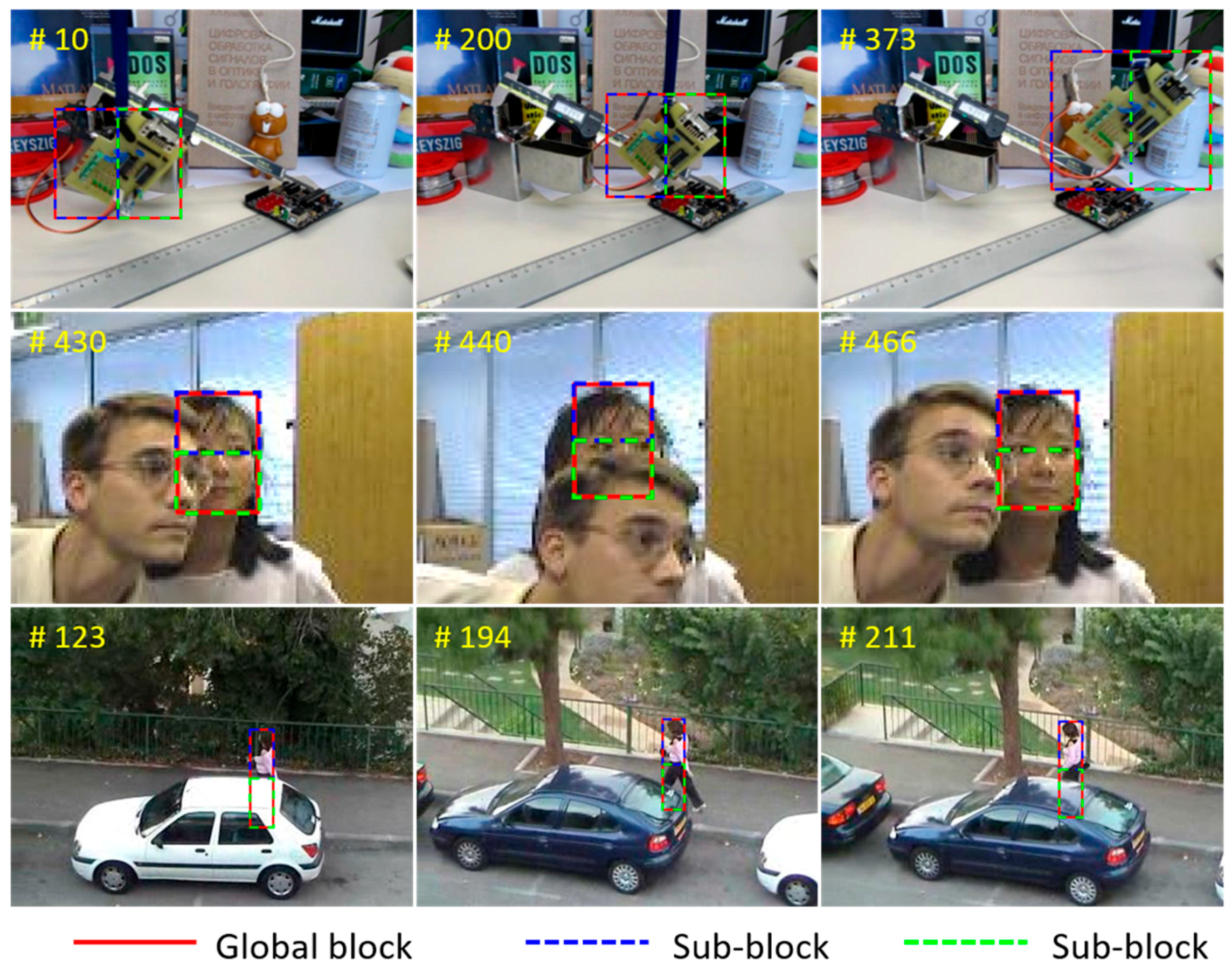

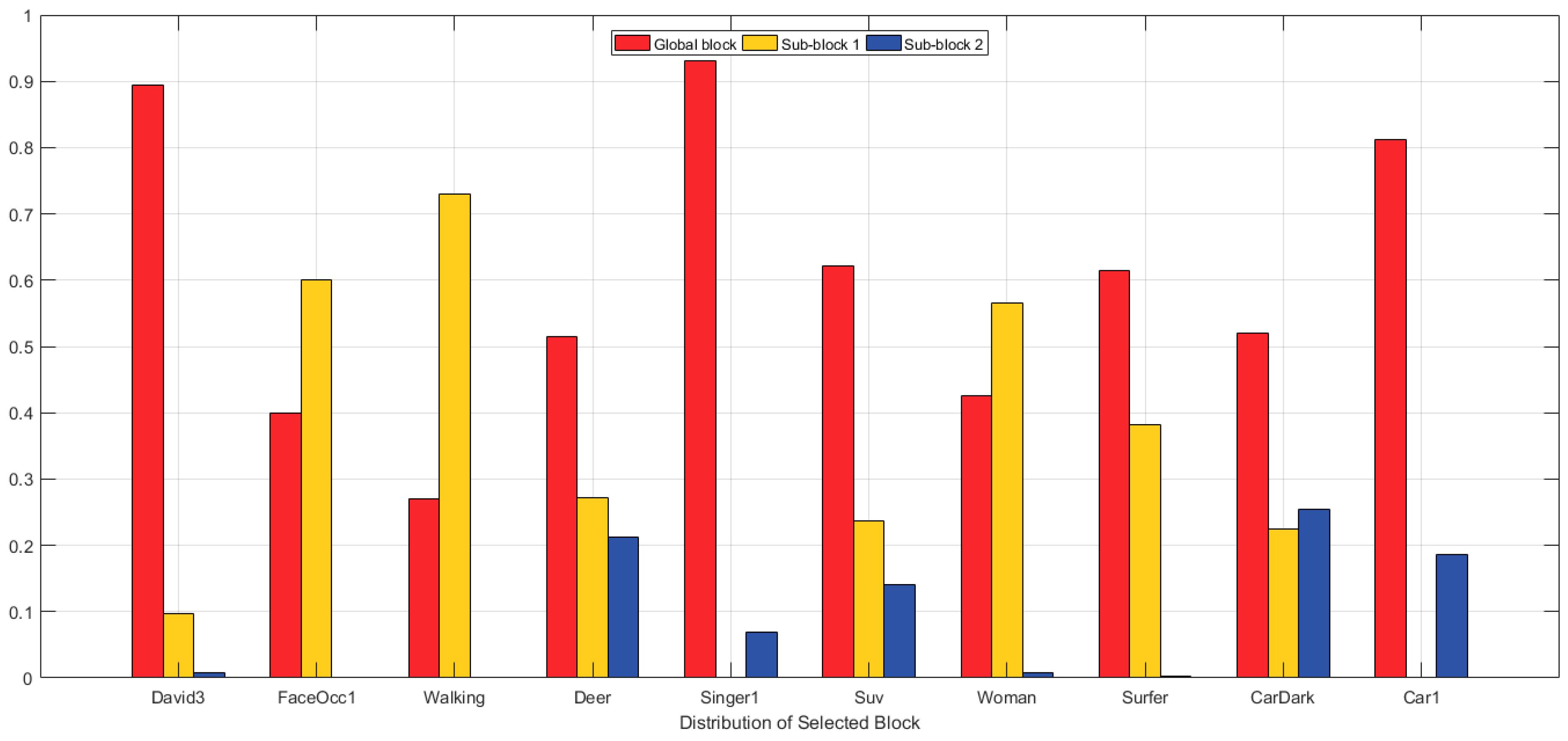

3.3. Multi-Block Scheme for Partial Occlusion

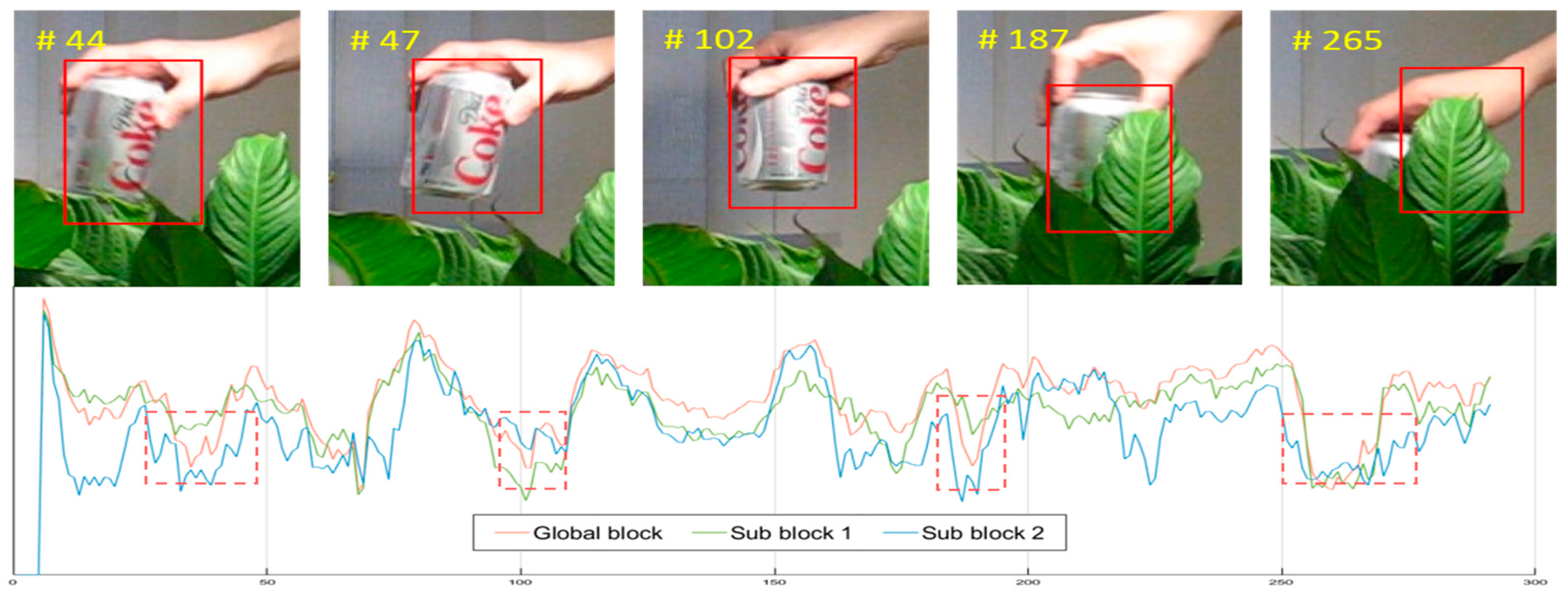

3.4. Adaptive Update Model Using PSR
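
As a rough illustration of the peak-to-sidelobe ratio (PSR) named in this heading, the sketch below uses the common definition from Bolme et al. (listed in the references): the peak of the correlation response minus the mean of the sidelobe, divided by the sidelobe's standard deviation, where the sidelobe is everything outside a small window around the peak. The function name, the `exclude` half-width (5, giving an 11×11 window), and the synthetic response maps are assumptions made for illustration; this is not the authors' implementation, and the rule by which PSR gates the model update is not reproduced here.

```python
import numpy as np

def peak_to_sidelobe_ratio(response, exclude=5):
    """PSR of a 2-D correlation response map.

    Assumes the map is larger than the excluded window around the peak,
    so that the sidelobe region is non-empty.
    """
    response = np.asarray(response, dtype=float)
    # Locate the peak of the response map.
    r, c = np.unravel_index(np.argmax(response), response.shape)
    peak = response[r, c]
    # Mask out a (2 * exclude + 1)-wide square window centred on the peak.
    mask = np.ones(response.shape, dtype=bool)
    mask[max(0, r - exclude):r + exclude + 1,
         max(0, c - exclude):c + exclude + 1] = False
    sidelobe = response[mask]
    std = sidelobe.std()
    return (peak - sidelobe.mean()) / std if std > 0 else float("inf")

# Synthetic example (hypothetical data): a sharp peak versus a weak one.
rng = np.random.default_rng(0)
sharp = rng.normal(0.0, 0.01, size=(32, 32))
sharp[16, 16] = 1.0
weak = rng.normal(0.0, 0.01, size=(32, 32))
weak[16, 16] = 0.05

psr_sharp = peak_to_sidelobe_ratio(sharp)
psr_weak = peak_to_sidelobe_ratio(weak)
```

A confident detection yields a much larger PSR than a weak one, which is why the value is often used as a reliability score for deciding whether to update an appearance model.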

4. Experiments

4.1. Experimental Setup

4.2. Features and Parameters

4.3. Evaluation Methodology

4.4. Results

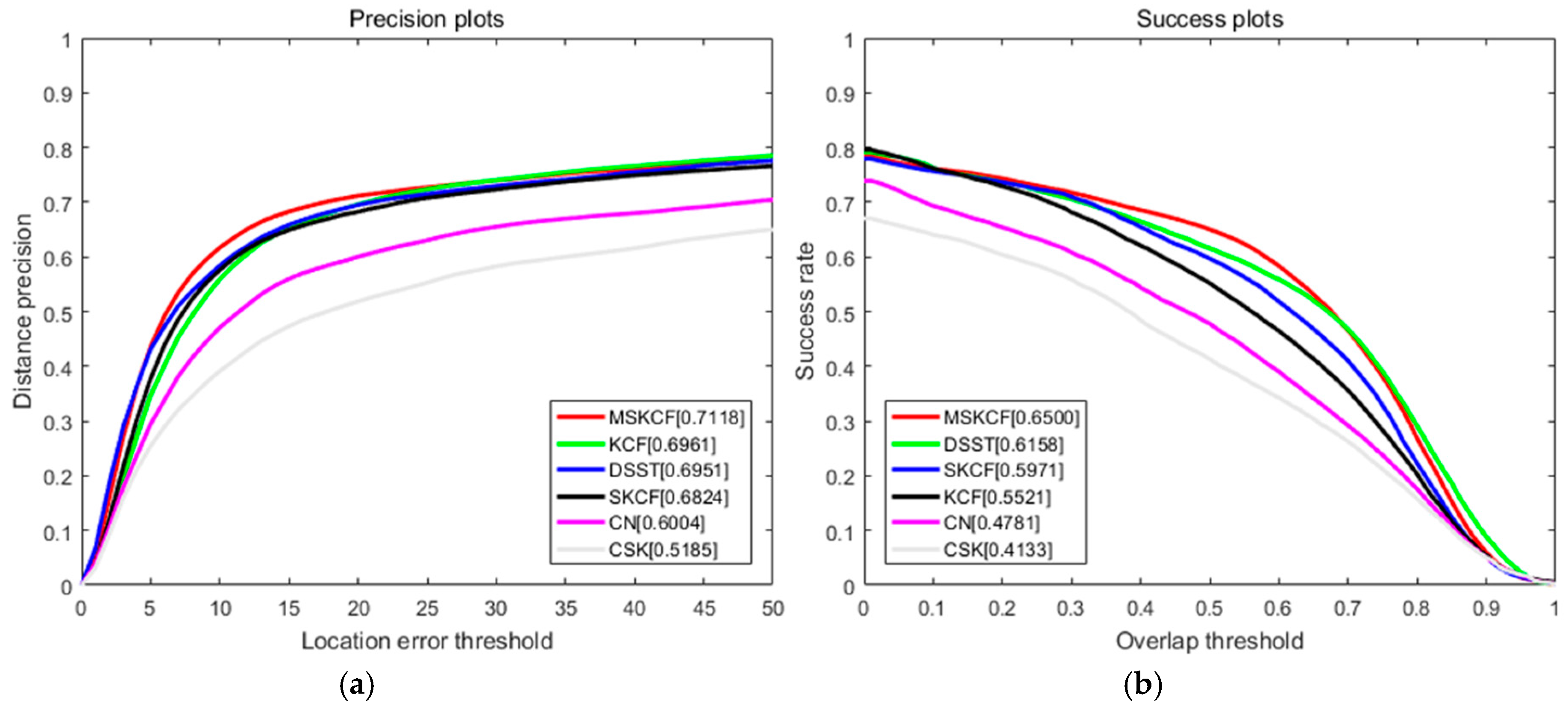

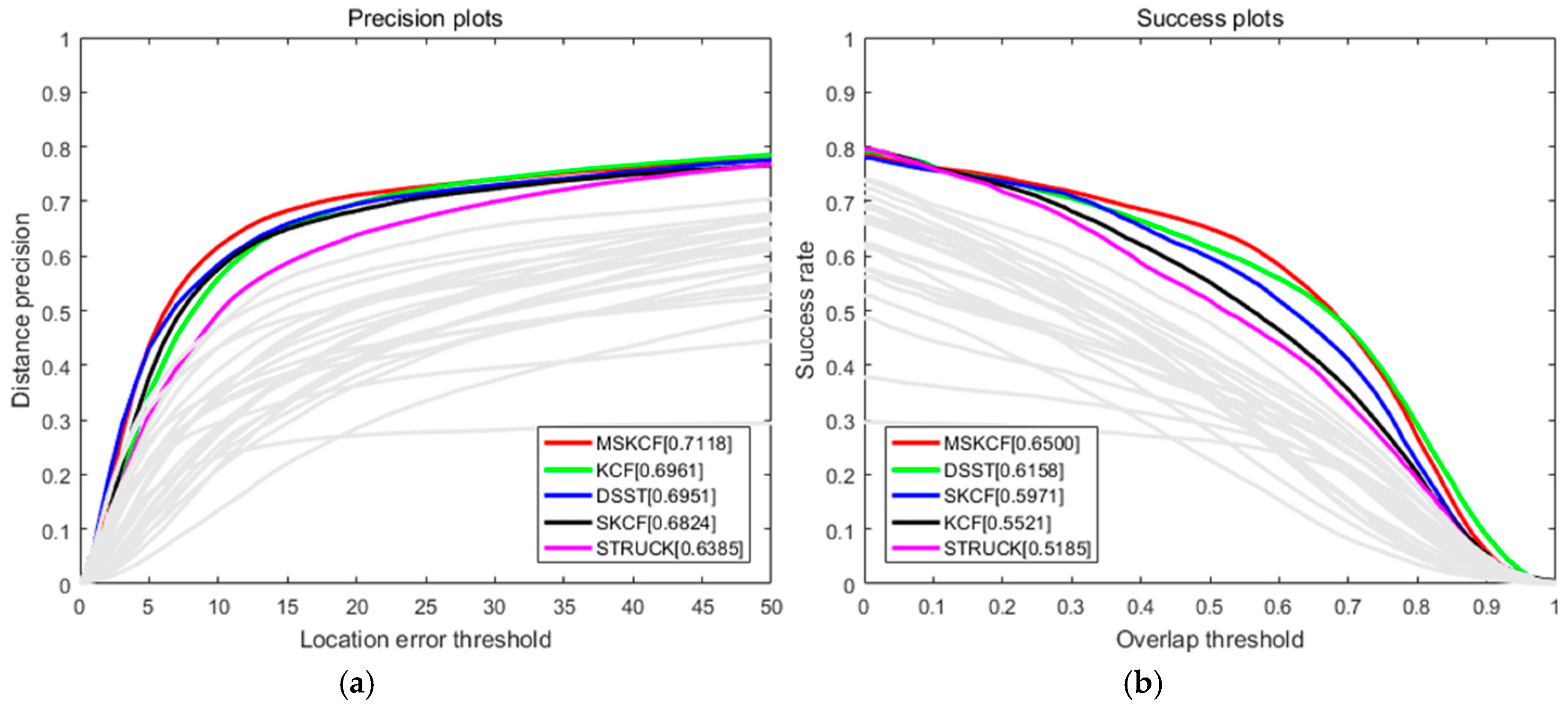

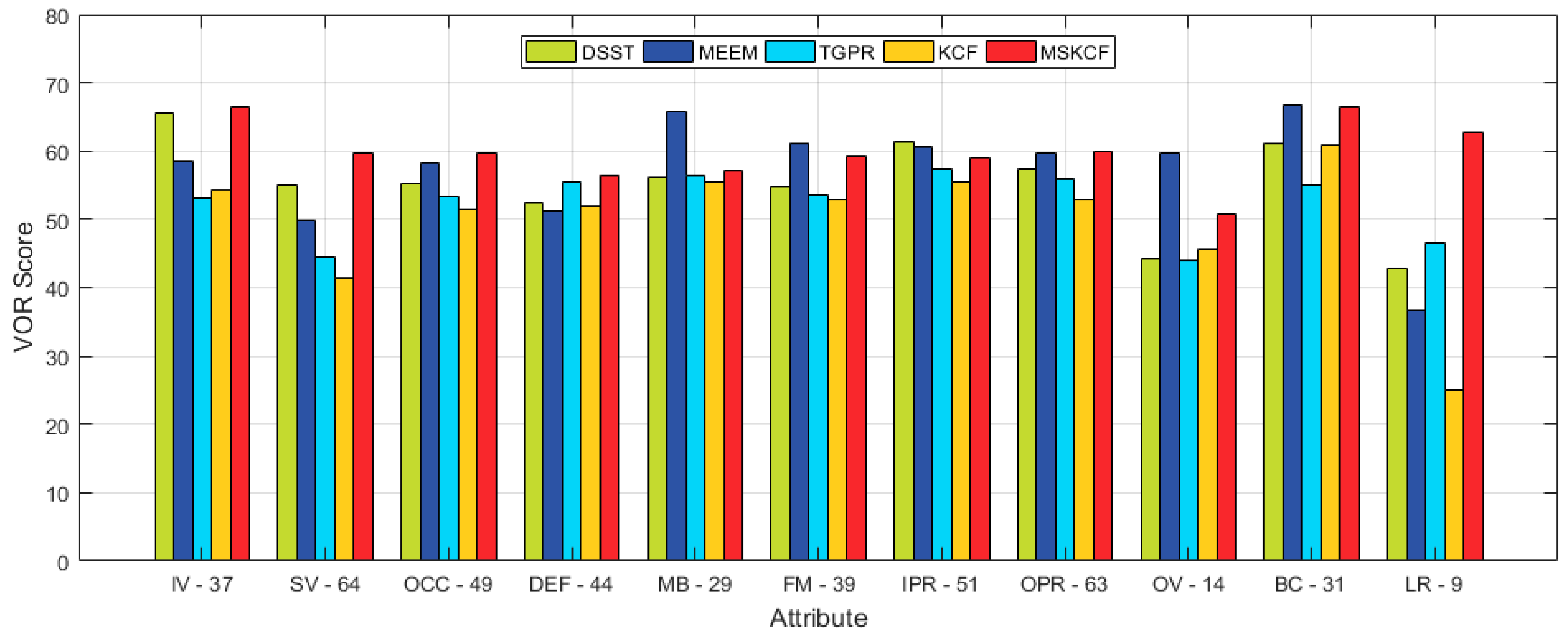

4.4.1. Quantitative Evaluation

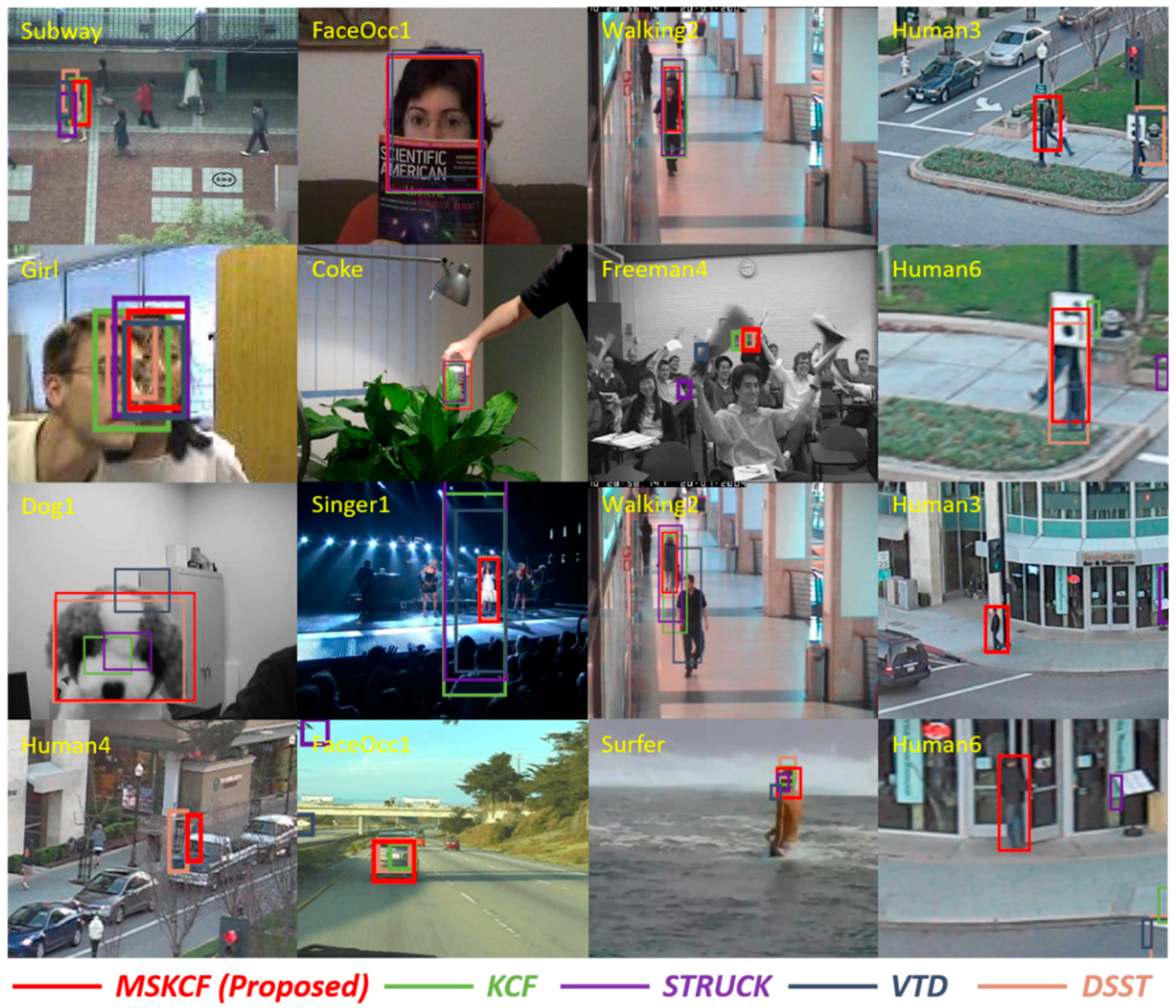

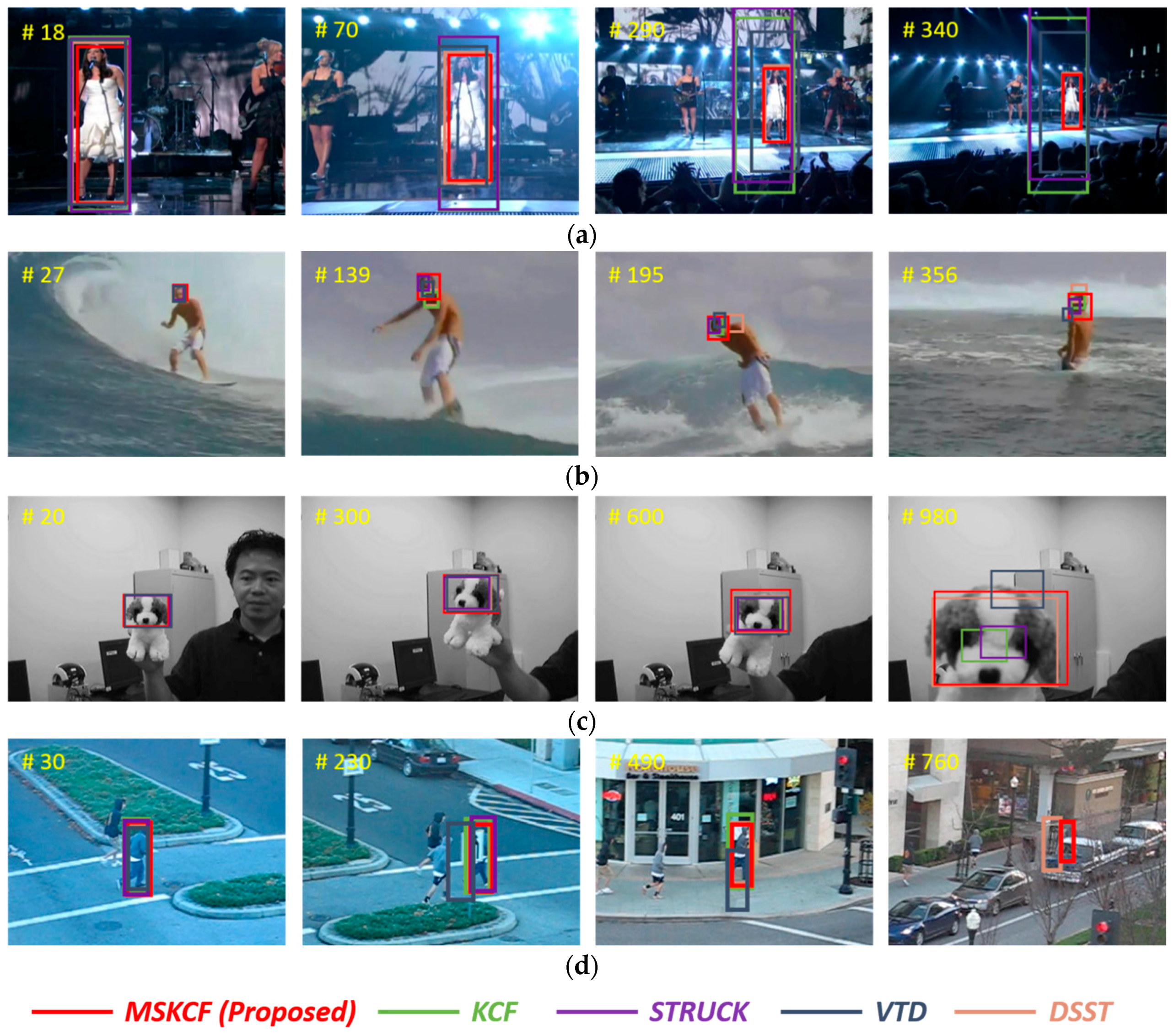

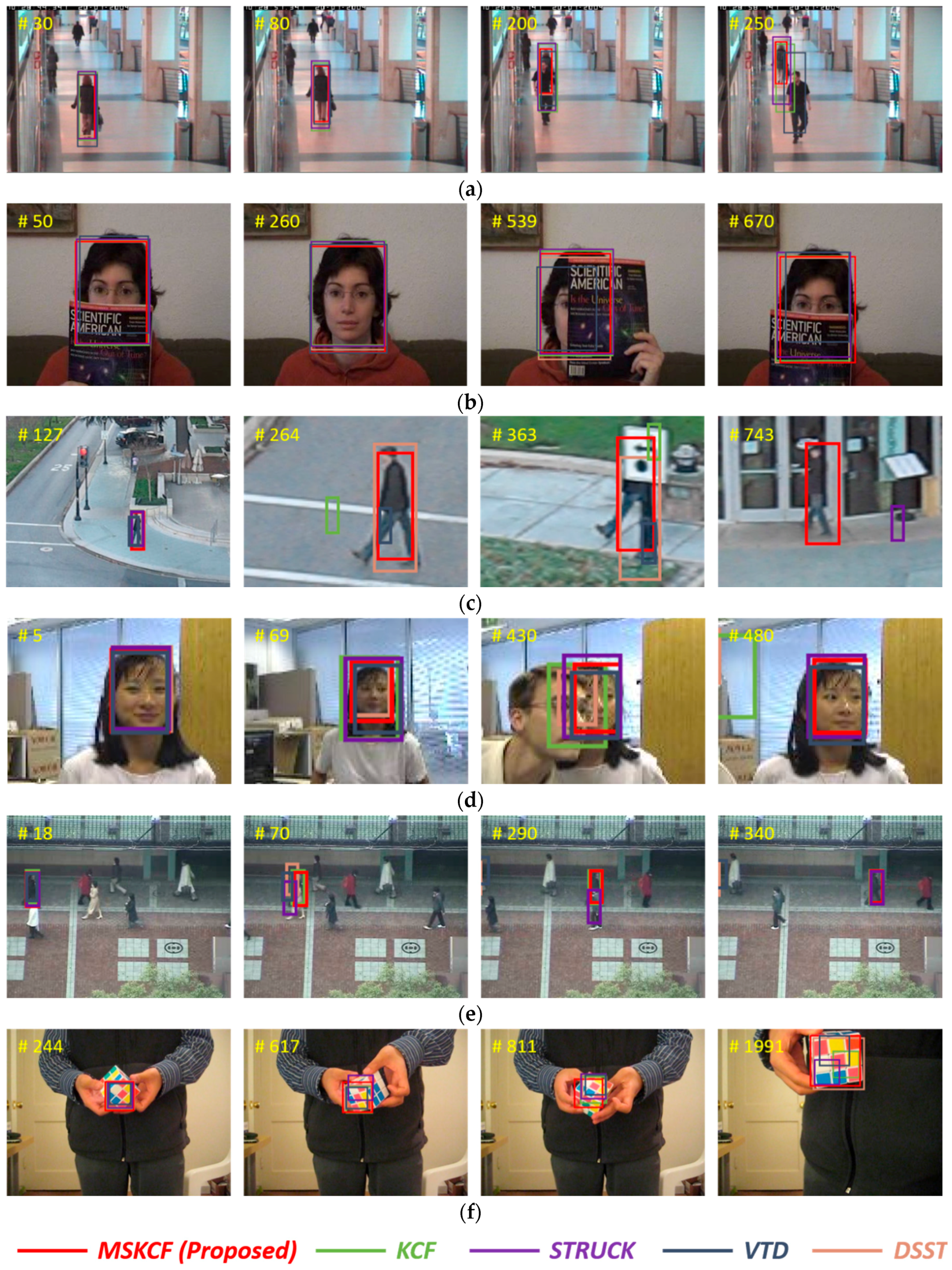

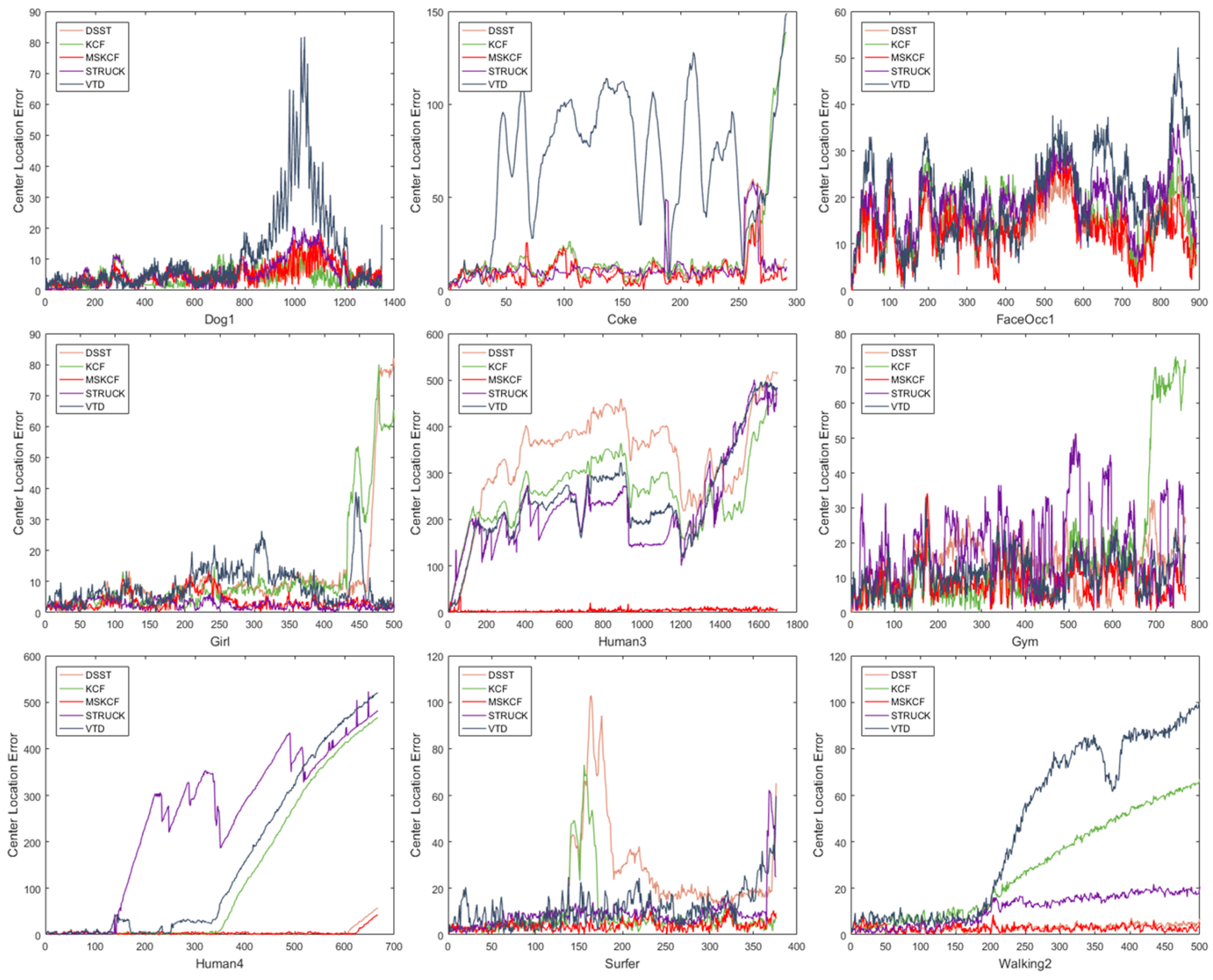

4.4.2. Qualitative Evaluation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Ross, D.A.; Lim, J.; Lin, R.; Yang, M. Incremental learning for robust visual tracking. IJCV 2008, 77, 125–141.

- Wang, D.; Lu, H. Visual Tracking via Probability Continuous Outlier Model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Kwon, J.; Lee, K.M. Visual tracking decomposition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010.

- Mei, X.; Ling, H. Robust Visual Tracking using L1 Minimization. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1436–1443.

- Adam, A.; Rivlin, E.; Shimshoni, I. Robust Fragments-Based Tracking Using the Integral Histogram. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006.

- Zhang, T.; Ghanem, B.; Liu, S.; Ahuja, N. Robust Visual Tracking via Multi-task Sparse Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Zhang, T.; Ghanem, B.; Liu, S.; Ahuja, N. Low-rank sparse learning for robust visual tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012.

- Zhang, T.; Bibi, A.; Ghanem, B. In defense of sparse tracking: Circulant sparse tracker. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3880–3888.

- Avidan, S. Ensemble Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 261–271.

- Grabner, H.; Grabner, M.; Bischof, H. Real-Time tracking via on-line boosting. In Proceedings of the British Machine Vision Conference, Edinburgh, UK, 4–7 September 2006.

- Saffari, A.; Leistner, C.; Santner, J.; Godec, M.; Bischof, H. On-line random forests. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Miami, FL, USA, 20–25 June 2009.

- Hare, S.; Saffari, A.; Torr, P.H.S. Struck: Structured Output Tracking with Kernels. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011.

- Babenko, B.; Yang, M.-H.; Belongie, S. Visual Tracking with Online Multiple Instance Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.

- Kalal, Z.; Matas, J.; Mikolajczyk, K. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

- Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Accurate scale estimation for robust visual tracking. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the Circulant Structure of Tracking-by-Detection with Kernels. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012.

- Danelljan, M.; Khan, F.S.; Felsberg, M.; van de Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Li, Y.; Zhu, J. A scale adaptive kernel correlation filter tracker with feature integration. In Lecture Notes in Computer Science; Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics; Springer: Berlin, Germany, 2015; Volume 8926, pp. 254–265.

- Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 58–66.

- Ruan, Y.; Wei, Z. Real-Time Visual Tracking through Fusion Features. Sensors 2016, 16, 949.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1401–1409.

- Shu, G.; Dehghan, A.; Oreifej, O.; Hand, E.; Shah, M. Part-based multiple-person tracking with partial occlusion handling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Zhang, T.; Jia, K.; Xu, C.; Ma, Y.; Ahuja, N. Partial occlusion handling for visual tracking via robust part matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Zhang, K.; Song, H. Real-time visual tracking via online weighted multiple instance learning. Pattern Recognit. 2013, 46, 397–411.

- Akın, O.; Mikolajczyk, K. Online Learning and Detection with Part-based Circulant Structure. In Proceedings of the IEEE International Conference on Pattern Recognition (ICPR), Washington, DC, USA, 24–28 August 2014.

- Liu, T.; Wang, G.; Yang, Q. Real-time part-based visual tracking via adaptive correlation filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Yao, R.; Xia, S.; Shen, F.; Zhou, Y.; Niu, Q. Exploiting Spatial Structure from Parts for Adaptive Kernelized Correlation Filter Tracker. IEEE Signal Process. Lett. 2016, 23, 658–662.

- Zhang, K.; Liu, Q.; Wu, Y.; Yang, M.-H. Robust Visual Tracking via Convolutional Networks without Training. IEEE Trans. Image Process. 2016, 25, 1779–1792.

- Gao, J.; Ling, H.; Hu, W.; Xing, J. Transfer learning based visual tracking with gaussian processes regression. In Lecture Notes in Computer Science; Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics; Springer: Berlin, Germany, 2015; Volume 8926, pp. 188–203.

- Zhang, J.; Ma, S.; Sclaroff, S. MEEM: Robust tracking via multiple experts using entropy minimization. In Lecture Notes in Computer Science; Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics; Springer: Berlin, Germany, 2015; Volume 8926, pp. 188–203.

- Van De Weijer, J.; Schmid, C.; Verbeek, J.; Larlus, D. Learning color names for real-world applications. IEEE Trans. Image Process. 2009, 18, 1512–1523.

- Rifkin, R.; Yeo, G.; Poggio, T. Regularized least-squares classification. Nato Sci. Ser. Sub Series III Comput. Syst. Sci. 2003, 190, 131–154.

- Scholkopf, B.; Smola, A.J. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; The MIT Press: Cambridge, MA, USA, 2002.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

- Wu, Y.; Lim, J.; Yang, M.H. Online Object Tracking: A Benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013.

- Piotr’s Toolbox. Available online: https://pdollar.github.io/toolbox/ (accessed on 3 November 2016).

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. IJCV 2010, 88, 303–338.

- Jia, X.; Lu, H.; Yang, M.-H. Visual Tracking via Adaptive Structural Local Sparse Appearance Model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Stalder, S.; Grabner, H.; Van Gool, L. Beyond semi-supervised tracking: Tracking should be as simple as detection, but not simpler than recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Miami, FL, USA, 20–25 June 2009.

- Pérez, P.; Hue, C.; Vermaak, J.; Gangnet, M. Color-based probabilistic tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Copenhagen, Denmark, 28–31 May 2002.

- Zhang, K.; Zhang, L.; Yang, M.H. Fast compressive tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 2002–2015.

- Dinh, T.B.; Vo, N.; Medioni, G. Context tracker: Exploring supporters and distracters in unconstrained environments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011.

- Sevilla-Lara, L.; Learned-Miller, E. Distribution fields for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Comaniciu, D.; Ramesh, V.; Meer, P. Kernel-based object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 564–577.

- Oron, S.; Bar-Hillel, A.; Levi, D.; Avidan, S. Locally orderless tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Collins, R.T.; Liu, Y.; Leordeanu, M. Online selection of discriminative tracking features. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1631–1643.

- Zhong, W.; Lu, H.; Yang, M.H. Robust object tracking via sparsity-based collaborative model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Kwon, J.; Lee, K.M. Tracking by sampling trackers. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011.

| Tracker | Precision (%) | CLE (pixels) | VOR | VOR (AUC) | FPS |
|---|---|---|---|---|---|
| CSK [18] | 51.84 | 304.60 | 0.4133 | 0.3817 | 455 |
| CN [19] | 60.04 | 82.48 | 0.4781 | 0.4220 | 220 |
| DSST [15] | 69.50 | 48.31 | 0.6158 | 0.5248 | 34 |
| KCF [16] | 69.60 | 44.73 | 0.5521 | 0.4782 | 260 |
| SKCF | 68.23 | 46.11 | 0.5970 | 0.5010 | 72 |
| MSKCF | 71.17 | 46.30 | 0.6500 | 0.5290 | 52 |

| Tracker | Precision (%) | CLE (pixels) | VOR | VOR (AUC) |
|---|---|---|---|---|
| STRUCK [12] | 63.84 | 47.07 | 0.5189 | 0.4618 |
| SCM [49] | 56.80 | 62.02 | 0.4322 | 0.3982 |
| VTD [3] | 51.19 | 61.77 | 0.3915 | 0.3536 |
| CXT [44] | 55.24 | 67.42 | 0.4326 | 0.3887 |
| CSK [18] | 51.84 | 304.60 | 0.4133 | 0.3817 |
| OAB [10] | 47.95 | 70.30 | 0.4031 | 0.3618 |
| IVT [1] | 43.17 | 88.11 | 0.3419 | 0.3076 |
| FRAG [5] | 42.44 | 80.66 | 0.3586 | 0.3308 |
| ASLA [40] | 51.14 | 68.10 | 0.3863 | 0.3600 |
| MSKCF | 71.17 | 46.30 | 0.6500 | 0.5290 |
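
The metrics tabulated above can be illustrated with a minimal sketch, assuming the conventions of the online tracking benchmark and the PASCAL VOC overlap criterion (both listed in the references): center location error (CLE) is the Euclidean distance in pixels between predicted and ground-truth box centers, the overlap ratio is intersection over union, and precision is the percentage of frames whose CLE falls within a pixel threshold. The function names, the `(x, y, w, h)` box layout, the 20-pixel default threshold, and the sample boxes are assumptions made for illustration, not values taken from the paper.

```python
import math

def center_location_error(box_a, box_b):
    """Euclidean distance in pixels between the centers of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    return math.hypot((ax + aw / 2) - (bx + bw / 2),
                      (ay + ah / 2) - (by + bh / 2))

def overlap_ratio(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def precision_at(errors, threshold=20.0):
    """Percentage of frames whose center error is at most `threshold` pixels."""
    return 100.0 * sum(e <= threshold for e in errors) / len(errors)

# Hypothetical three-frame sequence: exact hit, small drift, complete miss.
predicted = [(10, 10, 20, 20), (12, 10, 20, 20), (60, 60, 20, 20)]
ground_truth = [(10, 10, 20, 20), (10, 10, 20, 20), (10, 10, 20, 20)]

errors = [center_location_error(p, g) for p, g in zip(predicted, ground_truth)]
overlaps = [overlap_ratio(p, g) for p, g in zip(predicted, ground_truth)]
mean_cle = sum(errors) / len(errors)
precision = precision_at(errors)
```

A single badly lost frame inflates the mean CLE far more than it lowers the precision or the overlap ratio, which is one reason a tracker can have a very large CLE while its other scores remain comparable to its peers.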

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jeong, S.; Kim, G.; Lee, S. Effective Visual Tracking Using Multi-Block and Scale Space Based on Kernelized Correlation Filters. Sensors 2017, 17, 433. https://doi.org/10.3390/s17030433
