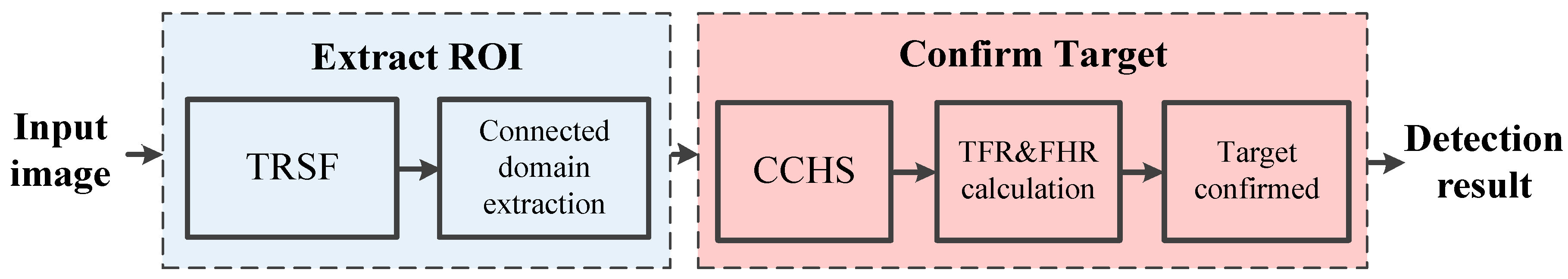

2.2. ROI Extraction

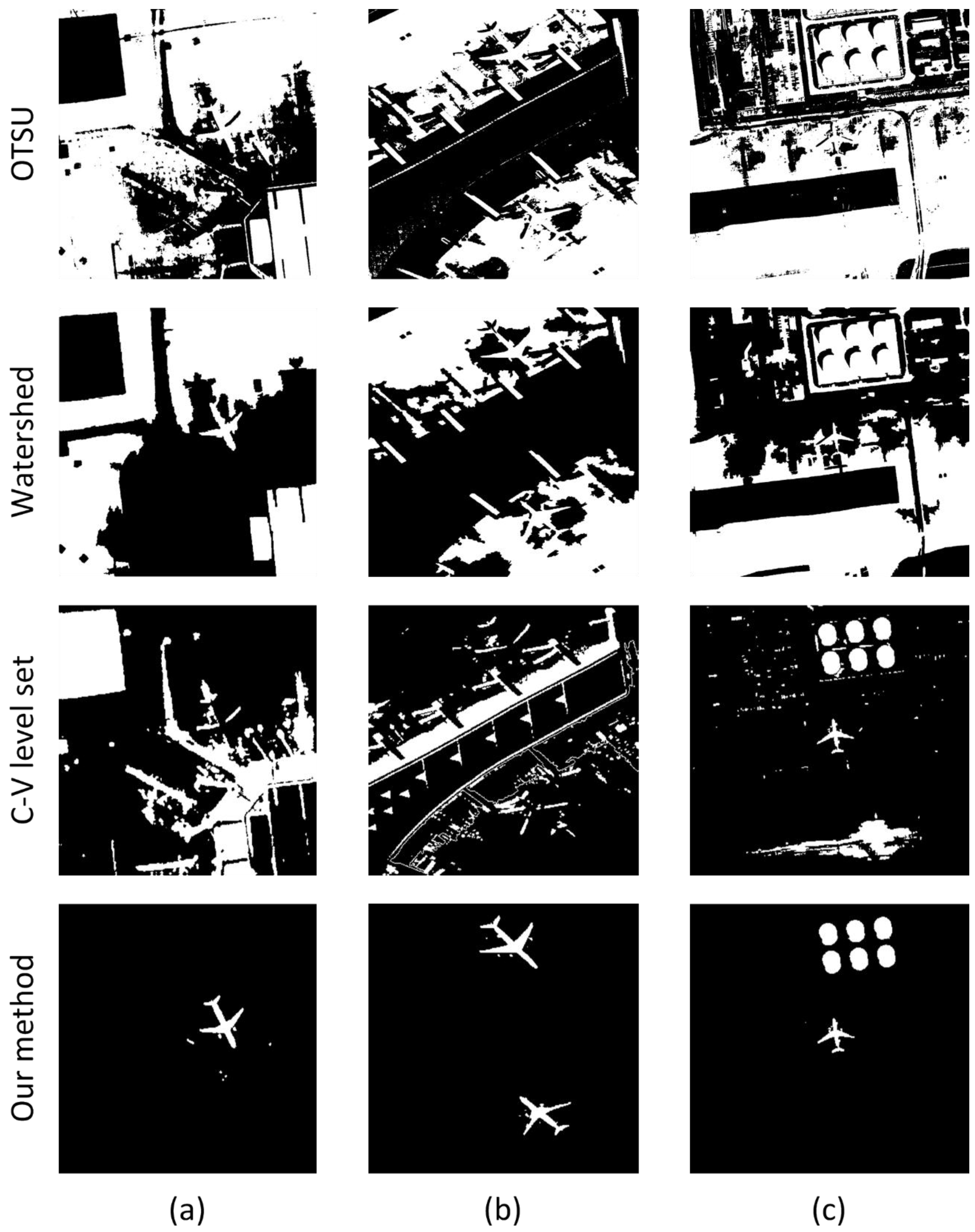

Binarization of the input image is a necessary step before extracting connected regions. Otsu’s algorithm obtains the segmentation threshold from the gray histogram using statistical information. It is simple, stable and effective, and it ensures good segmentation quality when the background is simple, so it is usually regarded as one of the most popular threshold segmentation algorithms. However, because a remote sensing image contains numerous gray levels, it is difficult to extract the ROI from such a complex image directly with Otsu’s algorithm. Observation shows that the aircraft luminance is usually very high, so the suspected aircraft area can be obtained through a further gray histogram segmentation applied to the Otsu pre-segmentation. Active contour algorithms, such as the C-V model [17], can effectively segment a target with a weak boundary. However, the conventional C-V model needs many iterations, which makes it computationally inefficient, and it cannot handle images with many gray levels. By moving the contour toward the target boundary through its energy function, the Region-Scalable Fitting (RSF) model [18] can segment an image with uneven intensity distribution. However, this model is sensitive to the contour line, and the initial contour affects both the number of iterations and convergence. By combining the merits of Otsu’s algorithm and RSF, this paper proposes an improved segmentation algorithm, TRSF, for target segmentation and extraction.

Otsu’s algorithm characterizes the separability of the targets and the background through the interclass variance, which is defined as:

$$\sigma^2 = \omega_A (\mu_A - \mu)^2 + \omega_B (\mu_B - \mu)^2 \qquad (1)$$

where $\sigma^2$ is the variance between the two classes, $\omega_A$ is the proportion of Class A in the image, $\mu_A$ is the gray average of Class A, $\omega_B$ is the proportion of Class B in the image, $\mu_B$ is the gray average of Class B, and $\mu$ is the overall gray average of the image. The threshold $T$ that maximizes the interclass variance $\sigma^2$, searched between the minimum and maximum gray values, is the segmentation threshold. The segmented result is defined as the level set function $\phi$ as follows:

$$\phi(x) = \begin{cases} 1, & x \in R_t \\ -1, & x \in R_b \end{cases} \qquad (2)$$

where $R_t$ is the segmented quasi-target region, and $R_b$ is the quasi-background region. The energy function of the RSF model in the level set formulation is:

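$$E(\phi, f_1, f_2) = \sum_{i=1}^{2} \lambda_i \int \left( \int K_\sigma(x - y)\, |I(y) - f_i(x)|^2\, M_i(\phi(y))\, dy \right) dx + \nu L(\phi) + \gamma P(\phi) \qquad (3)$$

Equation (3) is composed of three terms, where $I$ is the image intensity, $L(\phi) = \int |\nabla H(\phi(x))|\, dx$ is the length of the closed contour line, $P(\phi) = \int \frac{1}{2} (|\nabla \phi(x)| - 1)^2\, dx$ is the regularization term of the level set, and $\lambda_1$, $\lambda_2$, $\nu$ and $\gamma$ are non-negative constants representing the weighting coefficients of the energy terms. The membership functions are $M_1(\phi) = H(\phi)$ and $M_2(\phi) = 1 - H(\phi)$. The function $H$ is approximated by the smoothed Heaviside function:

$$H_\varepsilon(z) = \frac{1}{2} \left[ 1 + \frac{2}{\pi} \arctan\left( \frac{z}{\varepsilon} \right) \right]$$

The introduced non-negative kernel function $K_\sigma$ is defined as:

$$K_\sigma(u) = \frac{1}{2\pi\sigma^2} \exp\left( -\frac{|u|^2}{2\sigma^2} \right)$$

where σ is a positive scale parameter.

When the closed boundary $C$ does not lie on the boundary between the two homogeneous regions, the minimum of $E$ cannot be achieved; $E$ takes its minimal value only when the contour line is on that boundary. Therefore, two fitting functions $f_1(x)$ and $f_2(x)$ are adopted to fit the image intensity of the areas on both sides of the boundary $C$. They are defined as:

$$f_i(x) = \frac{K_\sigma(x) * [M_i(\phi(x))\, I(x)]}{K_\sigma(x) * M_i(\phi(x))}, \quad i = 1, 2$$

where $*$ denotes convolution.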

The kernel function controls the scale of the region around the boundary from which intensity information is drawn, so the model makes full use of the local intensity information of an image. It can segment images with intensity inhomogeneity and achieves relatively good results for some objects with weak boundaries.
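To make the role of the kernel and the two fitting functions concrete, the following is a minimal sketch under stated assumptions, not the implementation used in this paper. It works on a 1-D signal instead of a 2-D image for brevity, uses the arctan-smoothed Heaviside function of the standard RSF formulation, and the names `heaviside`, `gaussian_kernel` and `fitting_functions` are hypothetical.

```python
import math


def heaviside(z, eps=1.0):
    """Smoothed Heaviside function H_eps (arctan form, an assumption)."""
    return 0.5 * (1.0 + (2.0 / math.pi) * math.atan(z / eps))


def gaussian_kernel(u, sigma):
    """1-D Gaussian kernel K_sigma(u); an image would use the 2-D form."""
    return math.exp(-u * u / (2.0 * sigma * sigma)) / (math.sqrt(2.0 * math.pi) * sigma)


def fitting_functions(signal, phi, sigma=2.0, eps=1.0):
    """Return (f1, f2): kernel-weighted local means on each side of the contour."""
    n = len(signal)
    f1, f2 = [], []
    for x in range(n):
        num1 = den1 = num2 = den2 = 0.0
        for y in range(n):
            k = gaussian_kernel(x - y, sigma)
            m1 = heaviside(phi[y], eps)   # membership of the region phi > 0
            m2 = 1.0 - m1                 # membership of the region phi < 0
            num1 += k * m1 * signal[y]
            den1 += k * m1
            num2 += k * m2 * signal[y]
            den2 += k * m2
        f1.append(num1 / den1)
        f2.append(num2 / den2)
    return f1, f2


# Toy signal: dark background followed by a bright target.
signal = [50.0] * 10 + [200.0] * 10
phi = [-1.0] * 10 + [1.0] * 10
f1, f2 = fitting_functions(signal, phi, sigma=2.0, eps=0.1)
```

When the sign of `phi` matches the true regions, `f1` stays close to the bright intensity and `f2` close to the dark one, which is the situation in which the fitting energy is small. A larger `sigma` averages over a wider neighborhood.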

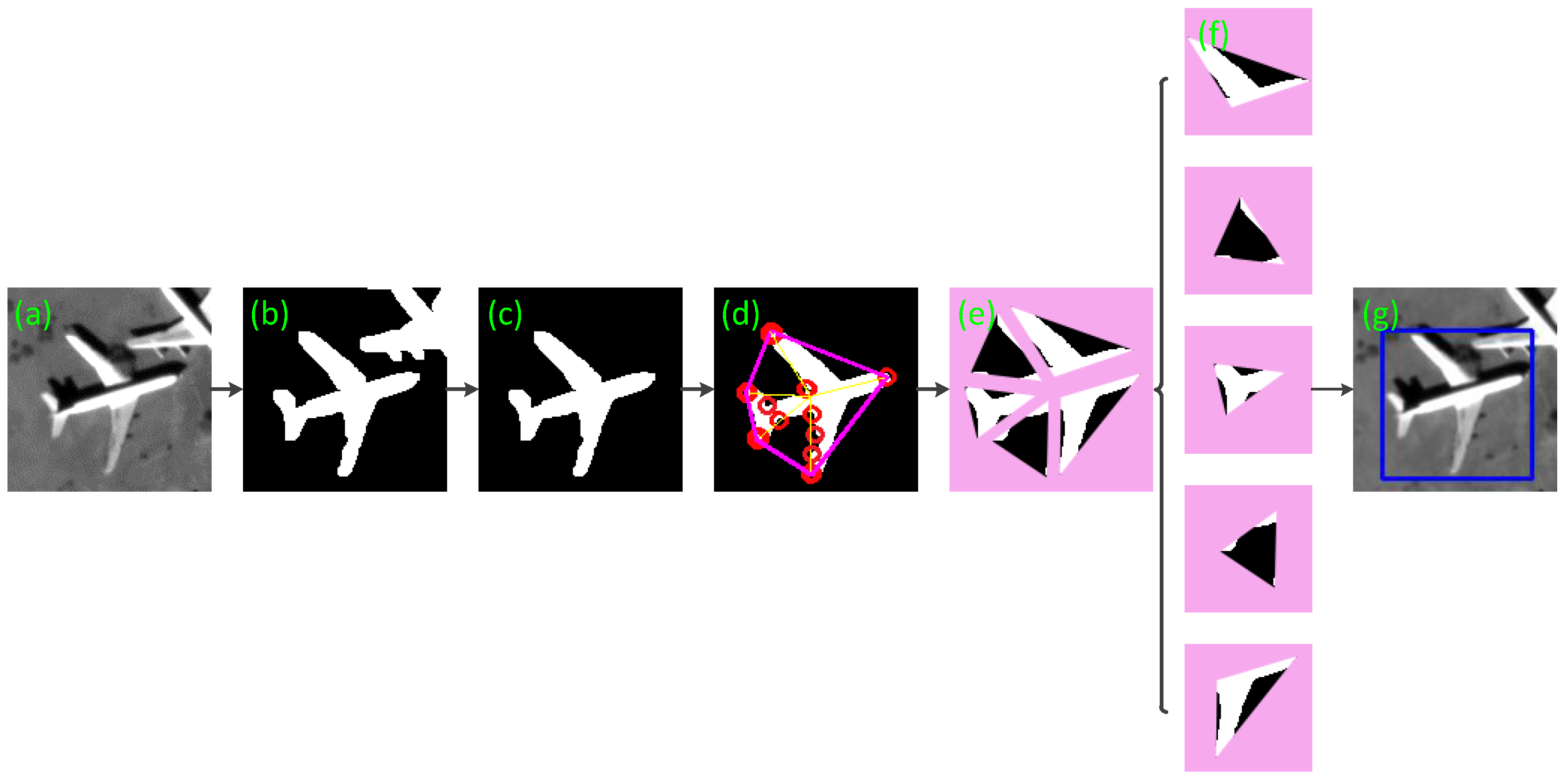

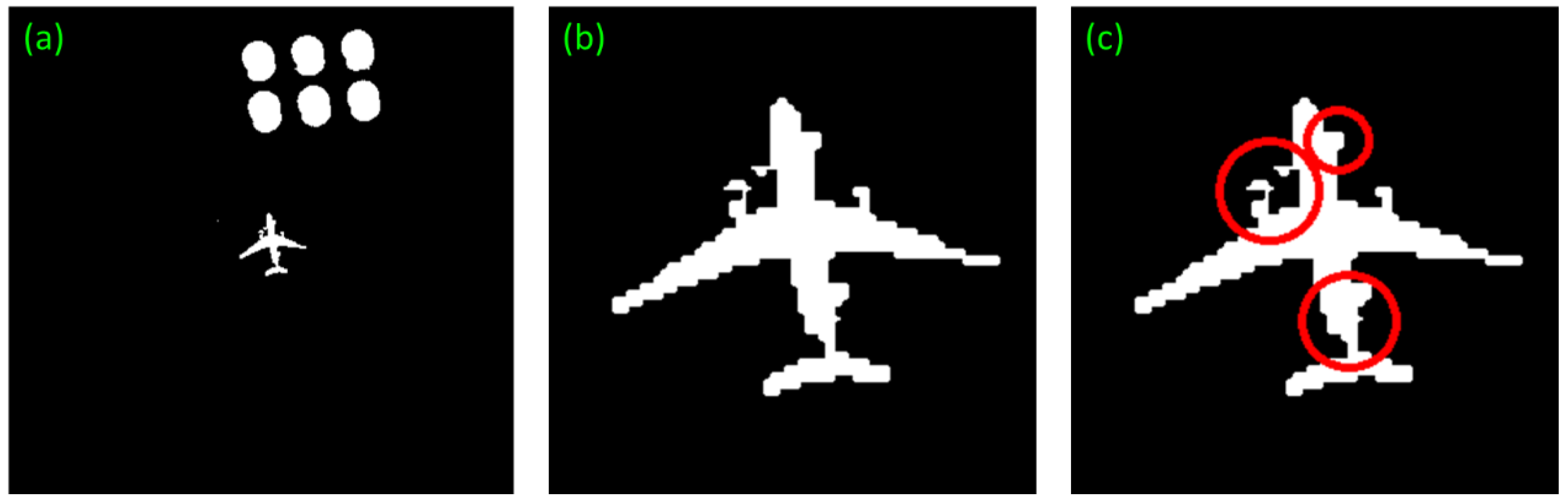

This paper extracts the ROIs in a coarse-to-fine fashion. Figure 3 shows the process, which consists of the following steps:

(a) Downsample the original image by a factor of 4, and then apply the initial Otsu’s algorithm segmentation to the low-resolution image. This reduces the computational complexity.

(b) After Otsu’s algorithm completes, first weed out small areas, and then process the segmented region through secondary segmentation and morphological processing at a high gray level (10%). At this point, the aircraft area is roughly located.

(c) Take the segmented binary image as the local region and initial condition of the active contour, define the initial level set function $\phi$ as a piecewise function taking only two values, namely 1 and −1, and use this function to guide the curve evolution in the image.

(d) Evolve the level set function through the energy functional by searching over the level set $\phi$. If changing the sign of $\phi$ decreases the energy functional, set $\phi = -\phi$; otherwise, keep $\phi$ unchanged.

(e) Repeat steps (c) and (d), and stop when the energy functional no longer changes. The segmentation result is then obtained.

(f) Process the segmented binary image morphologically, and weed out small areas.
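The downsampling, Otsu thresholding and level set initialization steps can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's code: the image is a list of rows of integer gray values in 0..255, downsampling simply keeps every fourth pixel, the bright side of the threshold is taken as the quasi-target (sign +1), and the secondary 10% segmentation and the morphological steps are omitted. The function names are hypothetical.

```python
def otsu_threshold(gray, levels=256):
    """Return the threshold T that maximizes the interclass variance."""
    hist = [0] * levels
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    gray_sum = sum(i * h for i, h in enumerate(hist))
    mean_all = gray_sum / total

    best_t, best_var = 0, -1.0
    count_a, sum_a = 0, 0            # Class A: gray values <= t
    for t in range(levels - 1):
        count_a += hist[t]
        sum_a += t * hist[t]
        count_b = total - count_a
        if count_a == 0 or count_b == 0:
            continue                 # one class is empty, variance undefined
        w_a, w_b = count_a / total, count_b / total
        mu_a = sum_a / count_a
        mu_b = (gray_sum - sum_a) / count_b
        var = w_a * (mu_a - mean_all) ** 2 + w_b * (mu_b - mean_all) ** 2
        if var > best_var:
            best_t, best_var = t, var
    return best_t


def downsample(gray, factor=4):
    """Keep every `factor`-th pixel in both directions (assumed scheme)."""
    return [row[::factor] for row in gray[::factor]]


def initial_level_set(gray, t):
    """Two-valued initial level set: +1 on the quasi-target, -1 elsewhere."""
    return [[1 if v > t else -1 for v in row] for row in gray]


# Toy 16x16 image: dark background (20) with a bright 8x8 block (220).
image = [[220 if 4 <= y < 12 and 4 <= x < 12 else 20 for x in range(16)]
         for y in range(16)]
small = downsample(image, 4)
T = otsu_threshold(small)
phi = initial_level_set(small, T)
```

Running Otsu on the downsampled image keeps the histogram pass cheap, and the resulting two-valued `phi` is what the subsequent curve evolution would start from.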

For subsequent processing, we employ the searching algorithm for 8-neighborhood connected regions to extract the connected regions from the binary image [19]. Suppose the image function is $f(x, y)$; the $(p+q)$-order moment is given as:

$$m_{pq} = \sum_{x} \sum_{y} x^p y^q f(x, y)$$

The area is the zero-order moment $m_{00}$, $(\bar{x}, \bar{y})$ is the centroid of a connected region, and $\bar{x} = m_{10}/m_{00}$, $\bar{y} = m_{01}/m_{00}$.
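As an illustration of the region extraction and moment computation, the sketch below labels 8-connected regions with a breadth-first search and derives the area and centroid from the moments. The search strategy and the function names are assumptions made for this example; reference [19] may use a different labeling scheme.

```python
from collections import deque


def connected_regions(binary):
    """Return the 8-connected regions of 1-pixels as lists of (x, y) points."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] != 1 or seen[r][c]:
                continue
            queue, pixels = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((x, y))
                # Visit all eight neighbours of the current pixel.
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and binary[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            regions.append(pixels)
    return regions


def moment(pixels, p, q):
    """(p+q)-order moment of a binary region, where f(x, y) = 1 on the region."""
    return sum((x ** p) * (y ** q) for x, y in pixels)


def area_and_centroid(pixels):
    """Area m00 and centroid (m10/m00, m01/m00) of a region."""
    m00 = moment(pixels, 0, 0)
    return m00, (moment(pixels, 1, 0) / m00, moment(pixels, 0, 1) / m00)


binary = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 1, 0],
]
regions = connected_regions(binary)
features = [area_and_centroid(region) for region in regions]
```

The two diagonal pixels in the lower right form a single region here because diagonal neighbours count under 8-connectivity; with 4-connectivity they would be separate.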

2.3. ROI Analysis to Confirm Target

In this section, a corner-convex-hull based segmentation (CCHS) algorithm is given. Furthermore, two new features, TFR and FHR, are introduced to judge the true targets.

2.3.1. Corner-Convex-Hull Based Segmentation Algorithm

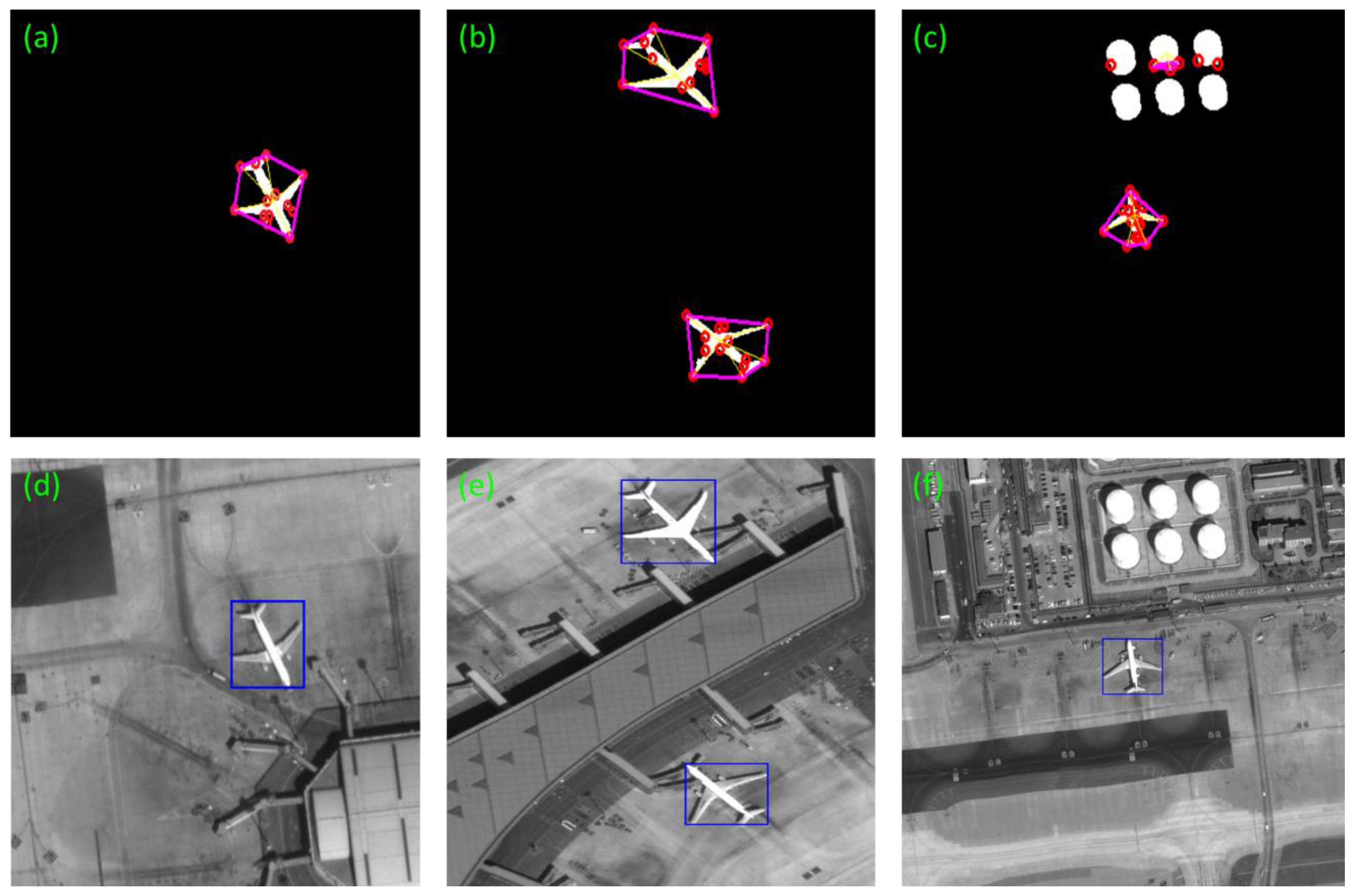

After the last step, many of the connected regions, which include the connected regions of the aircraft target, have been extracted by TRSF algorithm. Then we would exclude the false targets in these connected regions. The aircraft targets should be filtered in accordance with the aircraft features that we presented below. The most fundamental features, such as area and aspect ratio of the minimum external moment, are used to eliminate a large number of regions. Since the aircraft contour takes on typical geometric features, its feature points are available for target screening. With a small calculation burden and steady results, the Harris corner operator [

20] is adopted. Corners are important local features of a target because they cannot only keep important shape information of a target, but also reduce redundant data of the target effectively to relieve the computation burden. Besides, corners remain invariant during transition, zooming and rotation. Thus, corner feature is the crucial basis for aircraft detection method proposed by this paper. The response value

R of Harris corner points is:

where

, in which

,

, and

are the partial derivatives and second-order mixed partial derivative of the gray value of image point

in the

and

directions;

is an empirical value, usually between 0.04~0.06 and we set 0.04. By setting the threshold

, a point whose response value

is bigger than

is considered as Harris corner. The detection result of target corners is shown in the

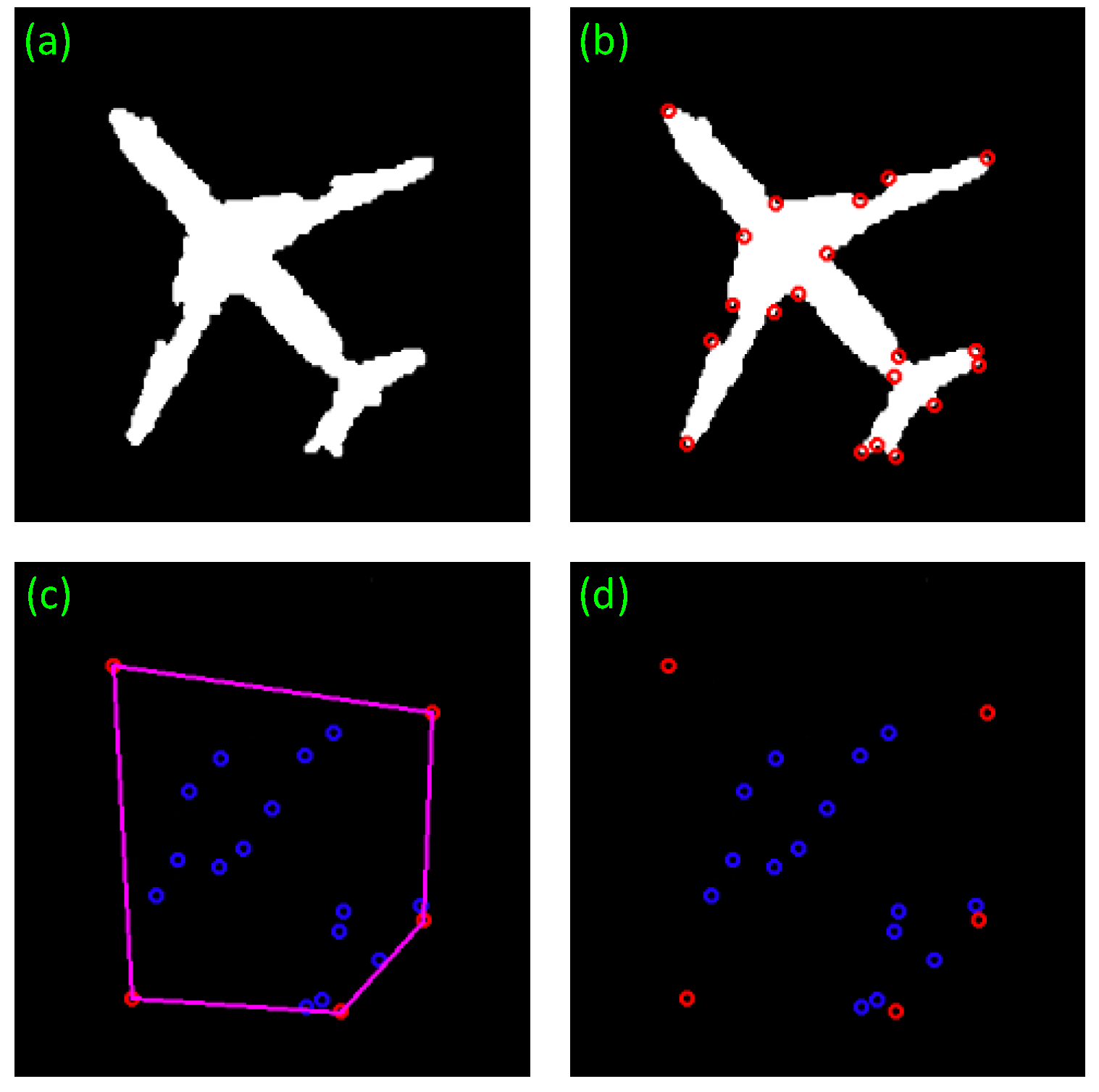

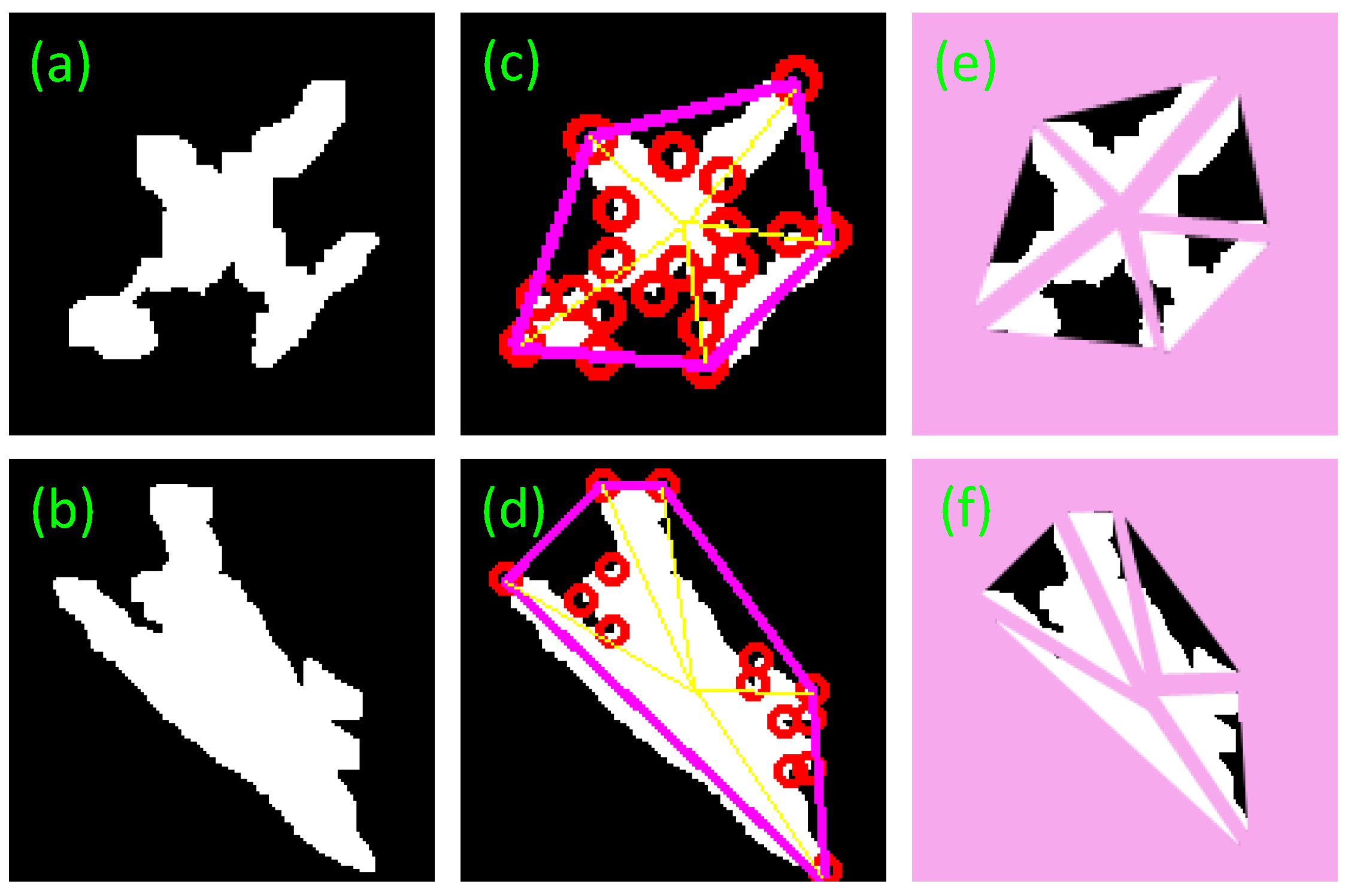

Figure 4b.
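The Harris response can be sketched in a few lines. This is an illustrative simplification, not the operator of [20] as used in the paper: gradients are central differences, the entries of $M$ are summed over an unweighted 3×3 window instead of a Gaussian window, the off-diagonal entry is taken as the windowed product of the two first derivatives, and the threshold value is chosen only for the toy image. The function names are hypothetical.

```python
def harris_response(img, k=0.04):
    """Harris response R = det(M) - k * trace(M)^2 at every interior pixel."""
    rows, cols = len(img), len(img[0])
    # Central-difference gradients; the one-pixel border is left at zero.
    ix = [[0.0] * cols for _ in range(rows)]
    iy = [[0.0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    resp = [[0.0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            a = b = c = 0.0          # window sums of Ix^2, Iy^2 and Ix*Iy
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    a += gx * gx
                    b += gy * gy
                    c += gx * gy
            resp[y][x] = (a * b - c * c) - k * (a + b) ** 2
    return resp


def harris_corners(img, threshold, k=0.04):
    """Return the (x, y) points whose response exceeds the threshold."""
    resp = harris_response(img, k)
    return [(x, y) for y, row in enumerate(resp)
            for x, r in enumerate(row) if r > threshold]


# Toy binary image: an 8x8 square of ones inside a 16x16 frame.
square = [[1 if 4 <= y < 12 and 4 <= x < 12 else 0 for x in range(16)]
          for y in range(16)]
corners = harris_corners(square, threshold=0.6)
```

On this square the response is positive at the four corner pixels, negative along the straight edges and zero in flat areas, which is why a single threshold on $R$ separates corners from edges.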

After the corners are extracted from a segmented binary image, it is found that an aircraft has five salient corners, located at the aircraft nose, wings and tail. The convex hull [21] of the aircraft corners is a pentagon whose only five vertexes are the corners at the nose, wings and tail, while the other corners are located inside the convex hull, as shown in Figure 4c. Some defective corners caused by segmentation are also found inside the convex hull. Hence, such defects arising from threshold segmentation have no effect on the extraction of the convex hull. Extracting the corners from a binary image also shortens the operation time.

When multiple adjacent corners overlap, similar corners can be merged according to their Euclidean distance to prevent one feature point from being detected as several corners. In this way, the pentagon corners of an aircraft become very clear. Using the extracted corner set instead of the whole connected region greatly reduces the calculation burden of convex hull detection.
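One simple way to merge nearby corners by Euclidean distance is a greedy clustering, sketched below. The paper does not specify the merging rule, so the greedy strategy, the mean-position replacement and the `min_dist` value are assumptions made for illustration.

```python
import math


def merge_close_corners(corners, min_dist=3.0):
    """Greedily merge corners closer than `min_dist` into their mean position."""
    clusters = []                      # each cluster is a list of (x, y) points
    for p in corners:
        for cluster in clusters:
            cx = sum(q[0] for q in cluster) / len(cluster)
            cy = sum(q[1] for q in cluster) / len(cluster)
            if math.hypot(p[0] - cx, p[1] - cy) < min_dist:
                cluster.append(p)      # close to an existing cluster: merge
                break
        else:
            clusters.append([p])       # far from every cluster: start a new one
    return [(sum(q[0] for q in c) / len(c), sum(q[1] for q in c) / len(c))
            for c in clusters]


# Three detections of the same feature point plus one distinct corner.
merged = merge_close_corners([(10, 10), (11, 10), (10, 11), (40, 40)])
```

The result depends on the order of the input points, which is acceptable when duplicate detections are tightly grouped relative to the spacing of true corners.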

The process of convex hull detection in this section is given as follows (Algorithm 1):

| Algorithm 1 |
| --- |
| ![Sensors 17 01047 i001]() |

Points in the result will be listed in counter-clockwise order.
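Since the listing of Algorithm 1 is not available as text here, the sketch below uses Andrew's monotone chain, a standard convex hull method that also returns the vertexes in counter-clockwise order. It is offered as an assumed stand-in, not as a transcription of Algorithm 1 or of the method in [21].

```python
def cross(o, a, b):
    """Cross product of OA and OB; positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Convex hull by Andrew's monotone chain, in counter-clockwise order.

    The orientation refers to a y-up coordinate system; in image coordinates
    (y pointing down) the same sequence appears clockwise.
    """
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other one.
    return lower[:-1] + upper[:-1]


# Aircraft-like corner set: nose, two wing tips, two tail tips, interior corners.
corners = [(5, 0), (0, 5), (10, 5), (3, 10), (7, 10), (5, 5), (4, 4), (6, 6)]
hull = convex_hull(corners)
# hull == [(0, 5), (5, 0), (10, 5), (7, 10), (3, 10)]
```

The three interior corners are discarded and only the five salient corners remain as hull vertexes, which mirrors the pentagon described for aircraft targets.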

2.3.2. New Features for Target Confirmation

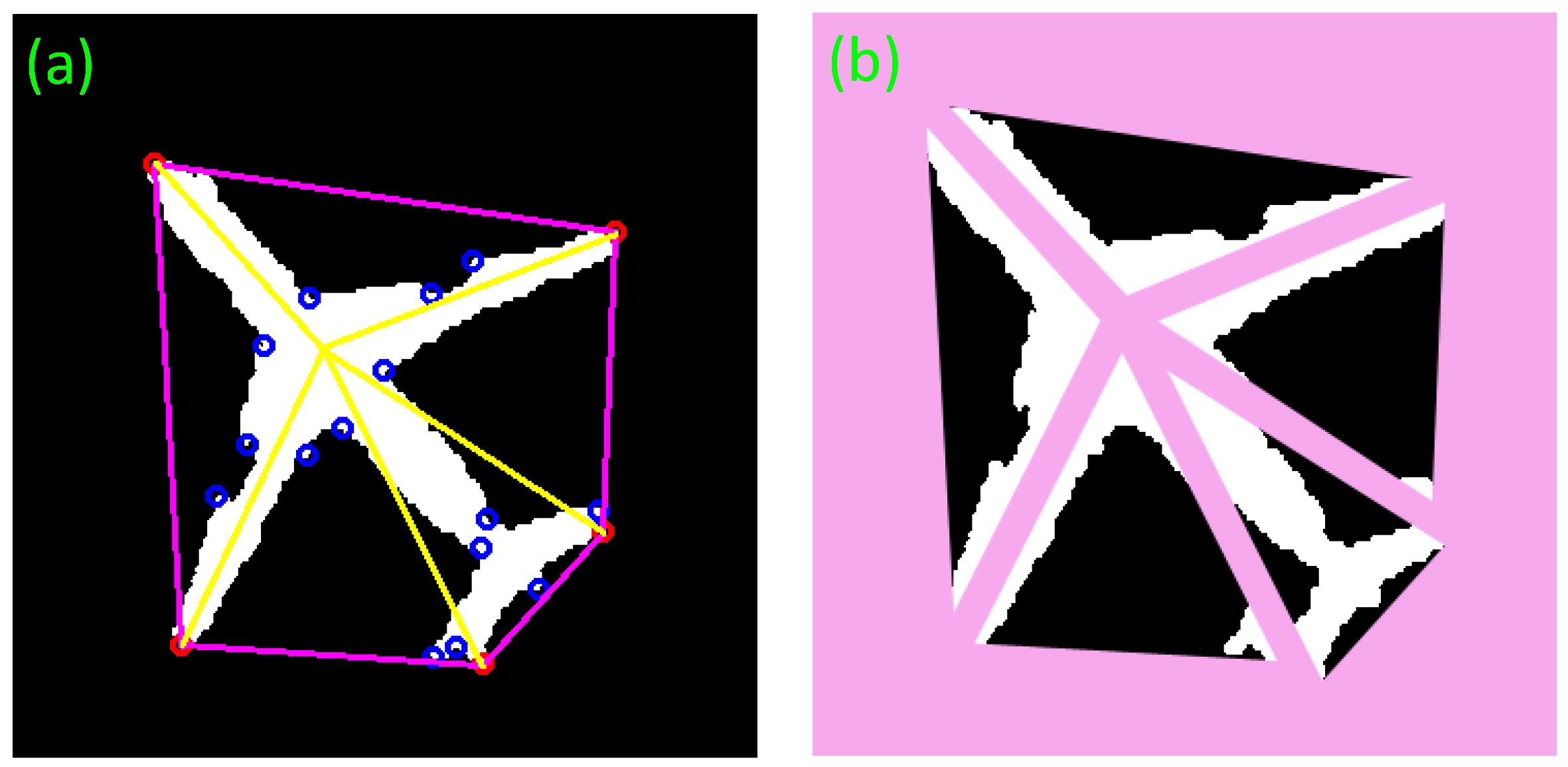

After drawing the convex hull of the ROIs, there are still some false candidates with a pentagon shape that need to be further weeded out. Here, we propose a segmentation approach that divides a connected region into several parts so that every slice can be analyzed independently to remove the false targets.

In this section, the centroid of a connected region is connected to the vertexes of the convex hull to segment the region into five fragments. Each fragment is a triangle that contains a part of the connected region and a part of the convex hull background. The new feature TFR describes the ratio of the area of the target part in each fragment to the area of that triangle, and the other feature FHR describes the ratio of the area of each triangle to the area of the whole hull. The definitions are as follows:

$$\mathrm{TFR}_i = \frac{N_{t,i}}{N_{f,i}}, \qquad \mathrm{FHR}_i = \frac{N_{f,i}}{N_h}$$

where $N_{t,i}$ is the number of pixels in fragment $i$ whose value is 1, $N_{f,i}$ is the number of pixels in the whole fragment $i$, and $N_h$ is the number of pixels in the whole convex hull.
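The two features can be computed by counting pixels per triangular fragment, as in the following sketch. It rests on several assumptions made for illustration: pixels are sampled at integer coordinates, a pixel lying on a shared edge is assigned to the first fragment that contains it, the hull pixel count is the sum over all fragments, and the function names are hypothetical.

```python
def _side(p, a, b):
    """Signed area test telling on which side of line AB the point P lies."""
    return (p[0] - b[0]) * (a[1] - b[1]) - (a[0] - b[0]) * (p[1] - b[1])


def in_triangle(p, a, b, c):
    """True if P is inside triangle ABC or on its boundary."""
    d1, d2, d3 = _side(p, a, b), _side(p, b, c), _side(p, c, a)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


def fragment_features(mask, hull, centroid):
    """Return (TFR, FHR) lists, one value per centroid-to-hull triangle."""
    n = len(hull)
    target = [0] * n                  # pixels with value 1 in each fragment
    total = [0] * n                   # all pixels in each fragment
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            for i in range(n):
                if in_triangle((x, y), centroid, hull[i], hull[(i + 1) % n]):
                    total[i] += 1
                    if v == 1:
                        target[i] += 1
                    break             # each pixel belongs to one fragment only
    hull_pixels = sum(total)
    tfr = [t / f if f else 0.0 for t, f in zip(target, total)]
    fhr = [f / hull_pixels for f in total]
    return tfr, fhr


# Toy example: a square hull around a 5x5 mask whose top row is background.
mask = [[0] * 5] + [[1] * 5 for _ in range(4)]
tfr, fhr = fragment_features(mask, [(0, 0), (4, 0), (4, 4), (0, 4)], (2, 2))
```

For an aircraft, `hull` would hold the five hull vertexes and `centroid` the region centroid, giving five TFR values and five FHR values as the feature vector of the candidate.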

The result of fragment segmentation is shown in Figure 5. Moreover, Table 1 gives the features of aircraft fragments. Through the five features, an aircraft target can be identified.

According to Table 1, fragments 2 and 4 have a very small TFR of around 10%, followed by fragments 1 and 5, whose TFR is about 70%. The TFR of fragment 3 is more than 80%, the highest among them. Some regularities can also be found in the distribution of FHR. By adopting these features, false alarms can be rejected.

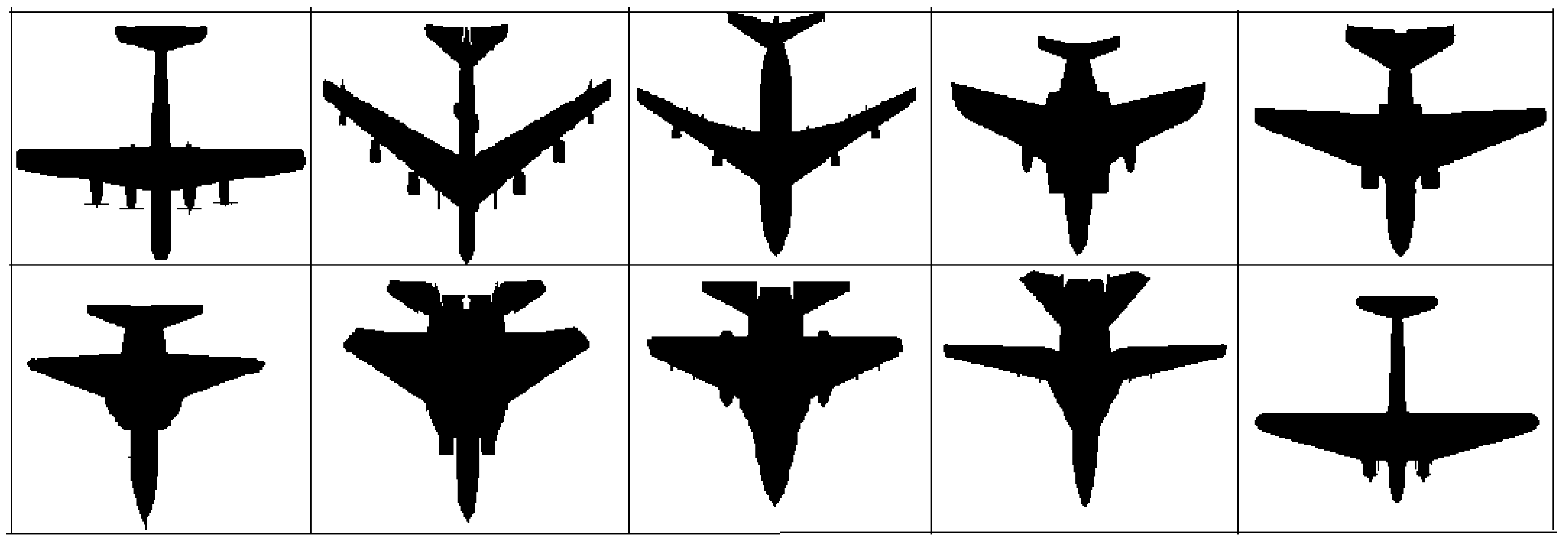

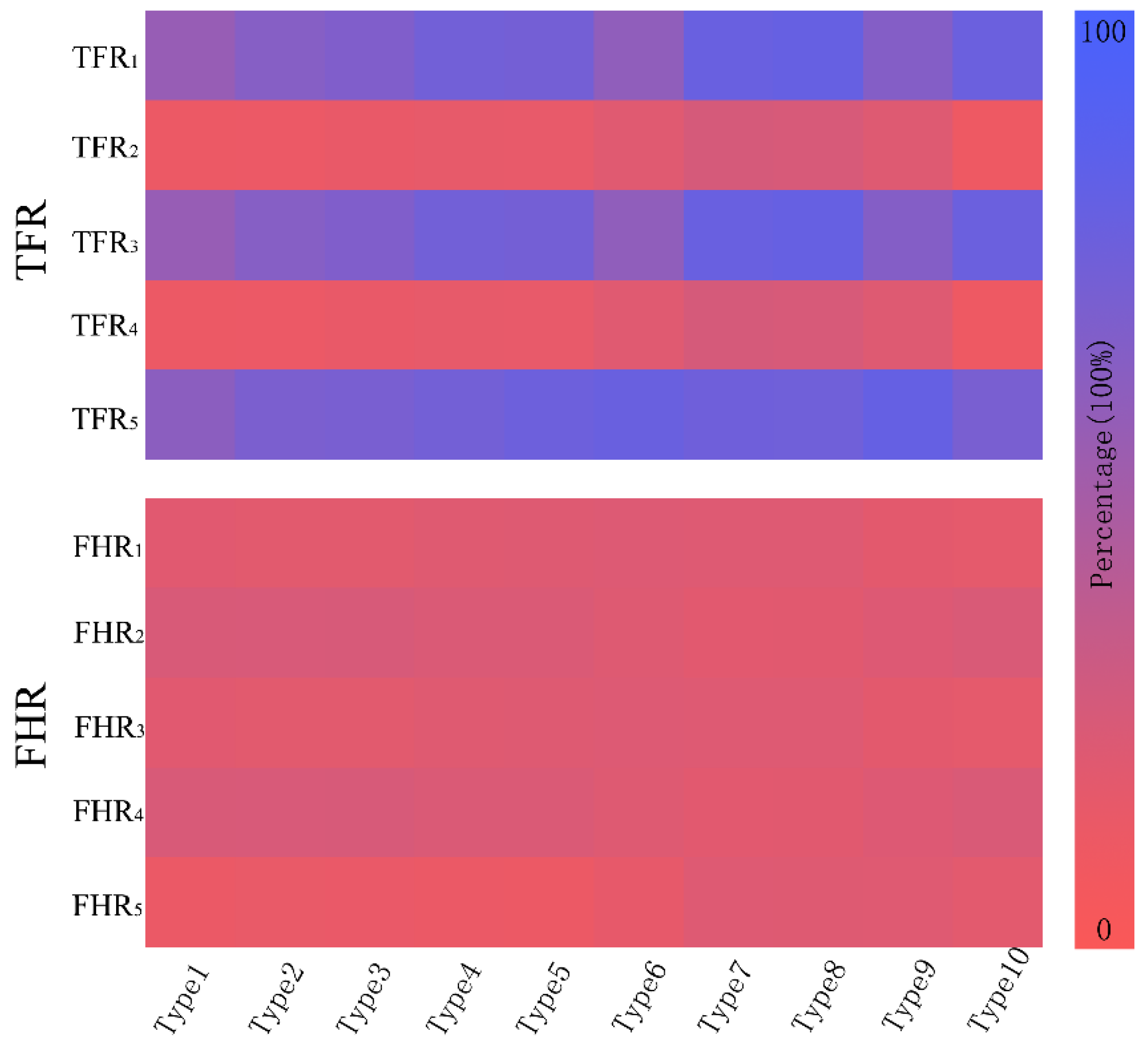

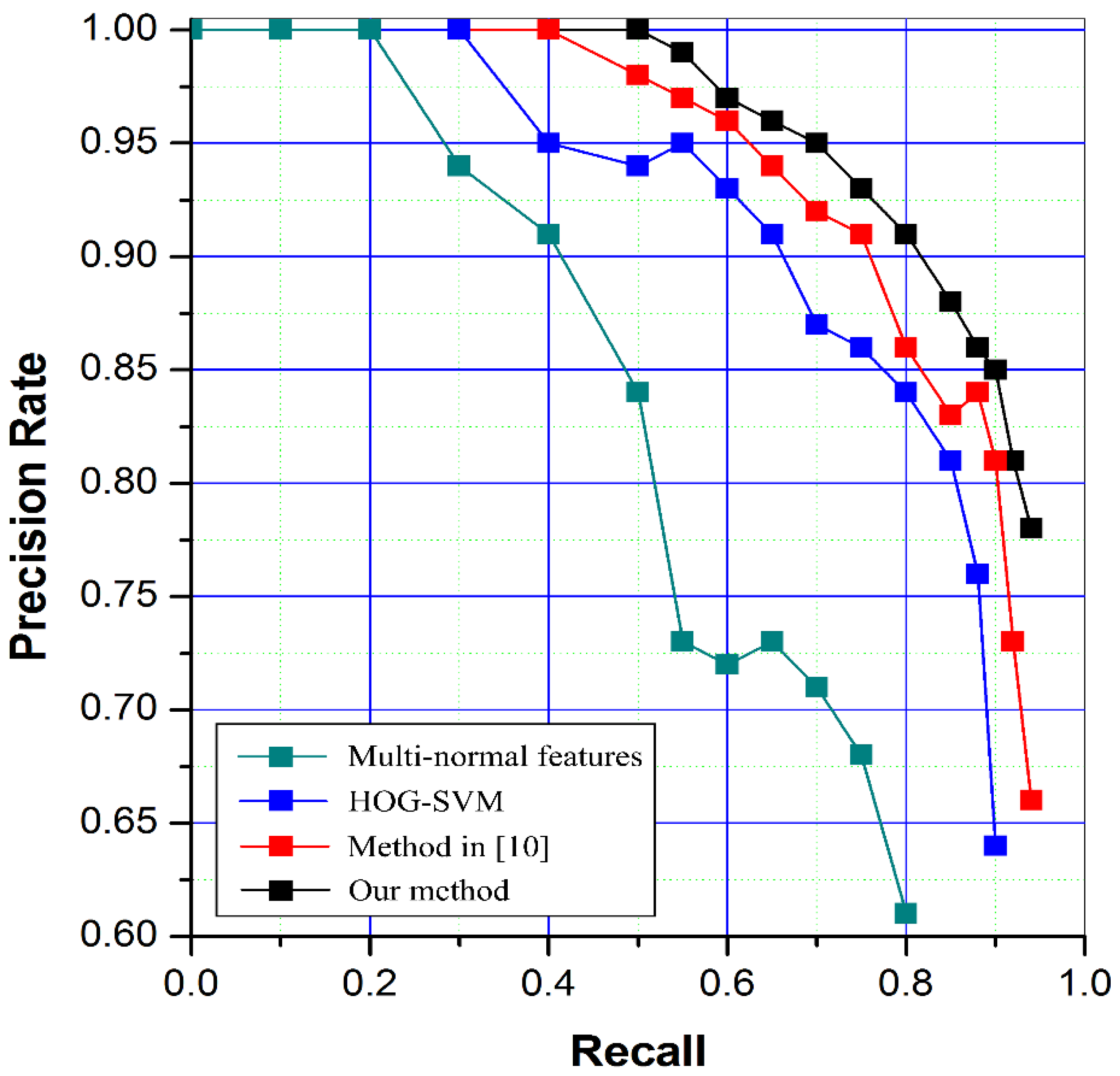

The fragment features are applied to the different aircraft models [22] shown in Figure 6, and the corresponding data are shown in Table 2. The two features show some regularity across the different aircraft models, which the pseudo color map in Figure 7 shows more clearly.

If the extracted aircraft is irregular, as shown in Figure 8a, the invariant moment based on symmetry is no longer valid. The features proposed in this paper can cope with this problem. Figure 8b illustrates that a false alarm can be rejected even though it also has five vertexes.

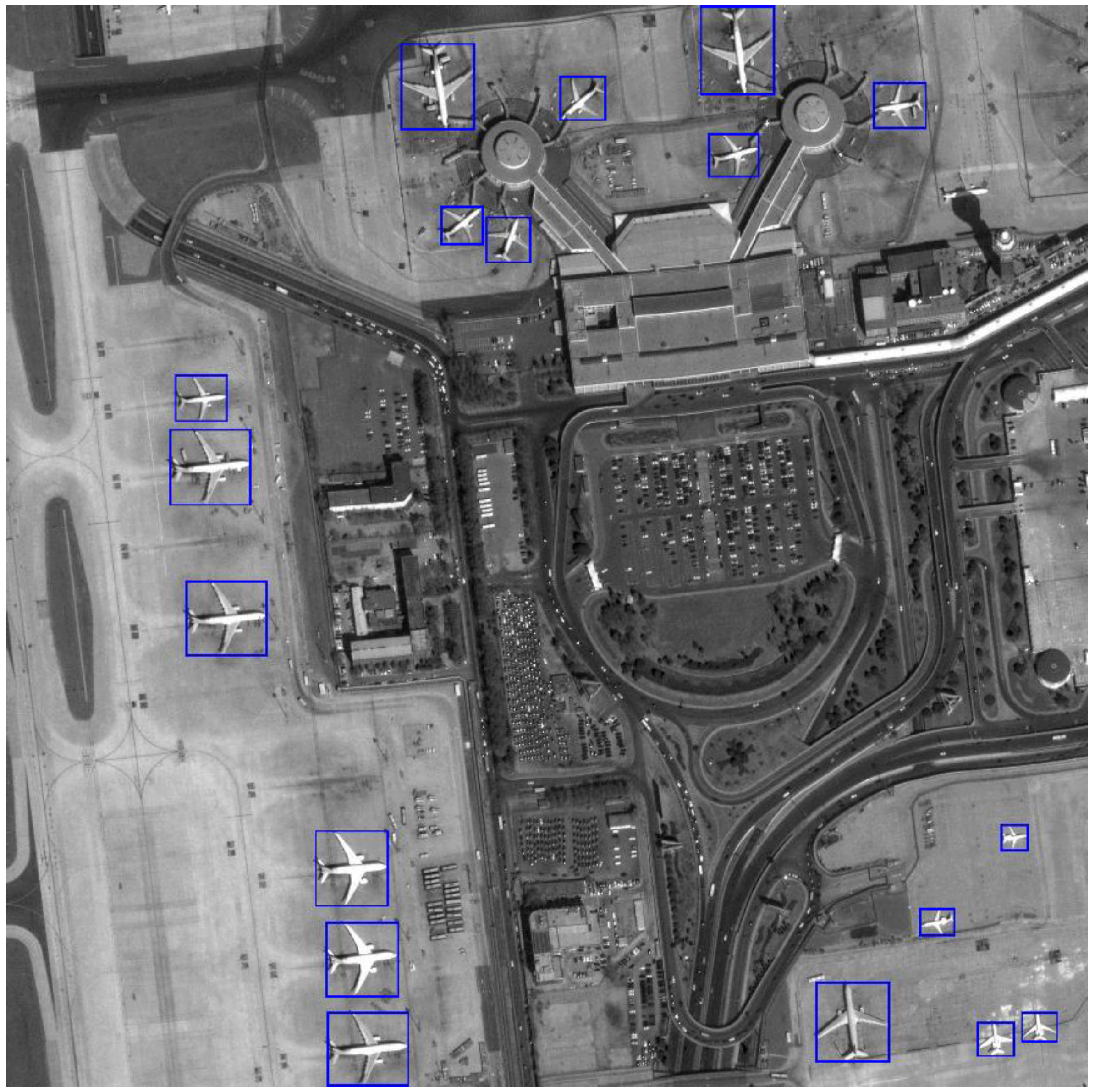

Through SVM learning and training on a large number of remote sensing aircraft image slices, the targets can be judged accurately [23].