1. Introduction

A large remote sensing image with both a wide field of view and high resolution is required for many applications, such as map-making, disaster management, and military reconnaissance [1,2]. However, a wide field of view and high resolution cannot be captured at the same time because the sensor size of the aerial camera is limited. Image mosaicking solves this problem effectively: it produces a remote sensing image that meets the requirements for both field of view and resolution from a series of images with overlapping areas. Ideally, the transition in the overlapping region from one image to another should be invisible. In practice, because of differences in illumination, exposure settings, depth of field, shooting field, and other factors, the overlapping area inevitably shows uneven brightness and geometric misalignment. The brightness unevenness in the mosaicked image can be effectively removed by color correction, smoothing [3,4,5], and image fusion [6,7,8]. However, the apparent parallax caused by geometric misalignment cannot be solved by these methods. An effective remedy is to find an optimal seamline in the overlapping region and then take the image content from a different input image on each side of it. Optimal seamline detection seeks the path along which the difference between the two images in the overlapping area is minimal, e.g., the intensity difference, gradient difference, and color difference. In this way the visible geometric misalignment can be eliminated, and two images can be mosaicked into a large field-of-view image without apparent parallax.

Since Milgram [9] proposed computer mosaicking technology, finding the optimal seamline to improve the quality of the mosaicked image has become an important research direction, and many methods have been proposed to determine the location of the seamline. Yang et al. [10] smoothed artificial edges effectively through a two-dimensional seam point search strategy. Afek and Brand [11] completed the geometric correction of the image by adding a feature-matching algorithm to the optimal seamline detection process. Fernandez et al. [12] proposed a bottleneck shortest path algorithm that draws the aerial photography map using the absolute value of pixel differences in the overlapping region. Fernandez and Marti [13] subsequently optimized the bottleneck shortest path with a greedy randomized adaptive search procedure (GRASP) to obtain a superior image. Kerschner [14] constructed an energy function based on color and texture similarity, and then used the twin snakes detection algorithm to detect the position of the optimal seamline. A snake is a contour that moves inside the image, changing its shape until its own energy function is minimal [15]. The twin snakes algorithm creates start points for two contours on opposite sides of the overlapping area; the contours pass through the overlapping area and constantly change shape until they merge into a single one. Soille [16] proposed a mosaicking algorithm based on morphology and marker-controlled segmentation, which renders the seamline along salient structures to reduce the visibility of the joints in the mosaicked image. Chon et al. [17] used the normalized cross correlation (NCC) to construct a new objective function that effectively evaluates mismatching between two input images. This model determines the horizontal expectations of the largest difference in the overlapping region, and then detects the position of the best seamline using Dijkstra’s algorithm. The resulting seamline may be longer, but it contains fewer high-energy pixels. Yu et al. [18] constructed a combined energy function from multiple image similarity measures, including pixel-based similarity (color, edge, texture), region-based similarity (saliency), and a location constraint, and then determined the seamline by dynamic programming (DP) [19]. Li et al. [20] extracted the histogram of oriented gradient (HOG) feature to construct an energy function, and then detected the seamline by graph cuts.

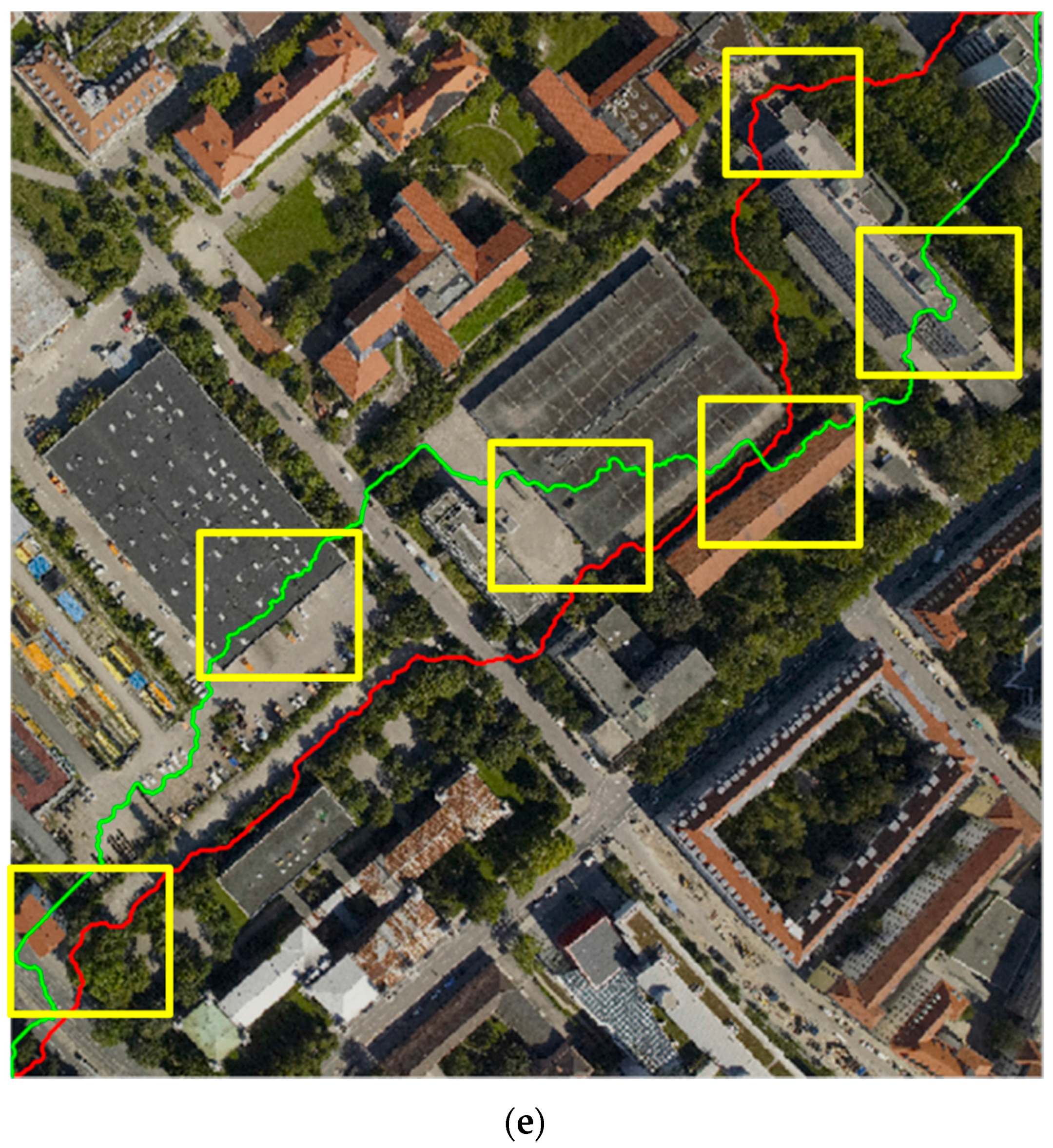

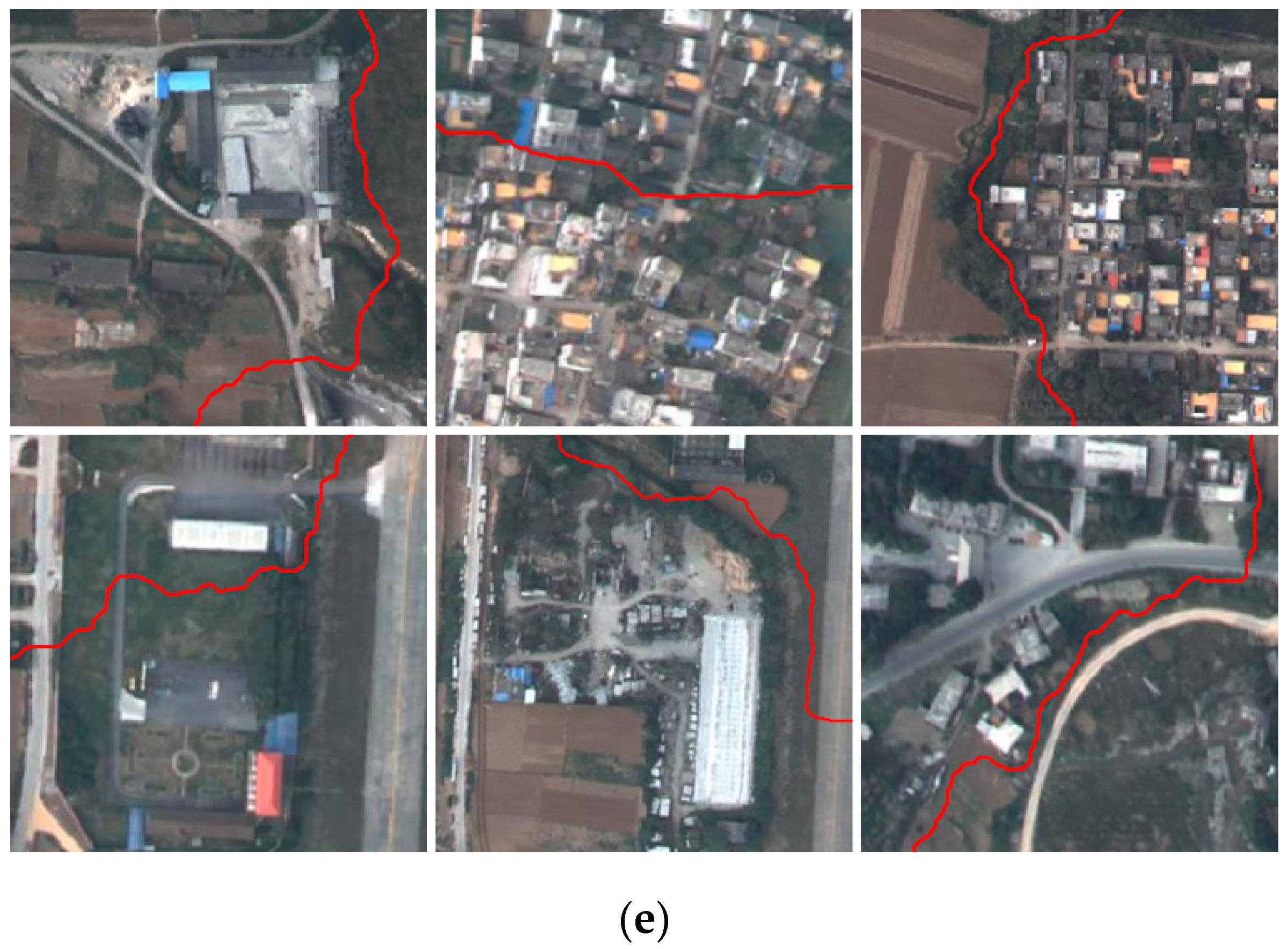

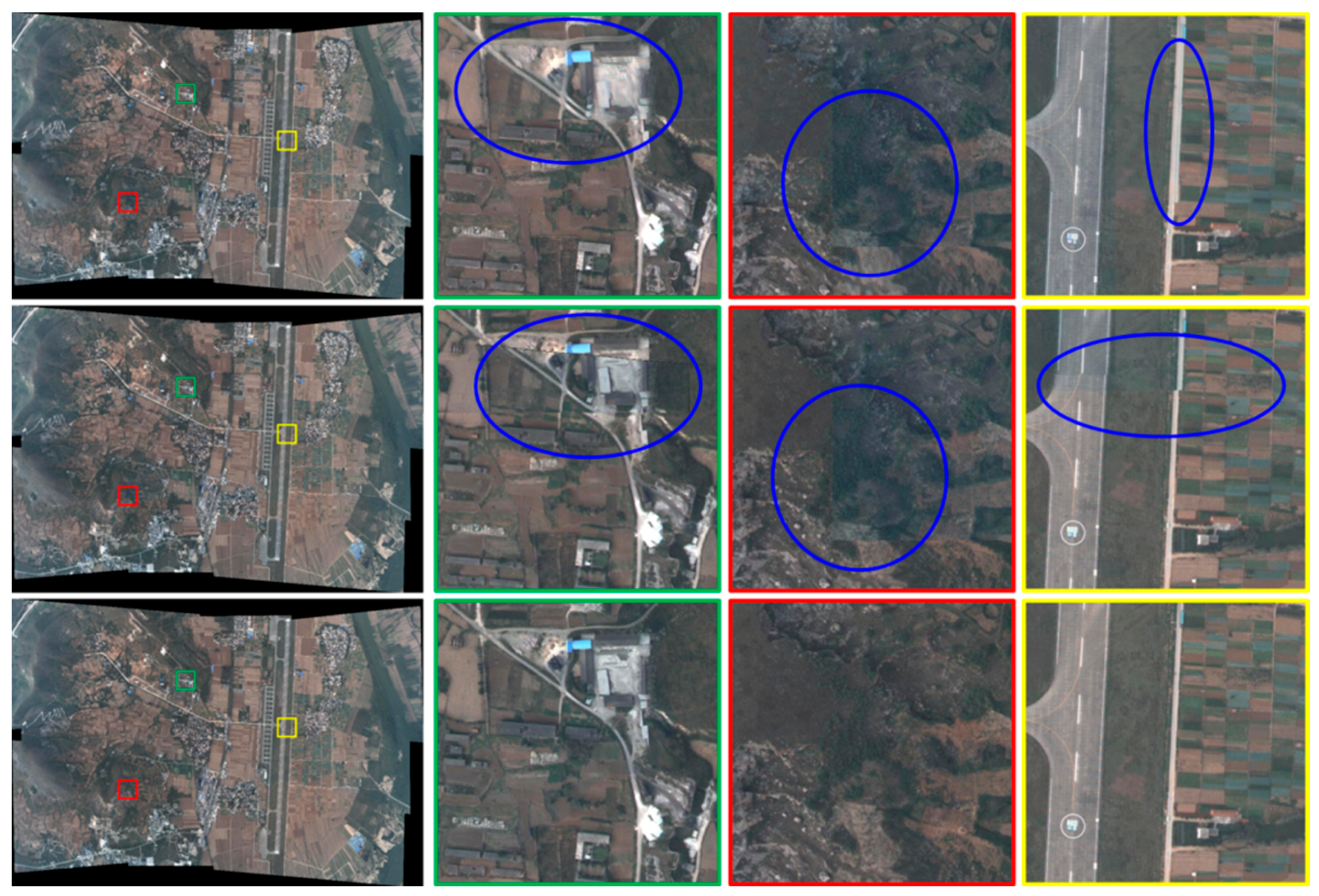

Many of the methods described above treat seamline detection as an energy optimization problem, characterizing the difference between the input images in the overlapping region by a specially constructed energy function (cost). The cost should capture the image information comprehensively, e.g., the color, the gradient, the texture, and the edge strength, and the optimal solution is then found by an optimization algorithm such as dynamic programming, Dijkstra’s algorithm [21], the snake model [15], or graph cuts [22,23]. The core issue is how to keep the seamline from passing through obvious objects in the overlapping area. Owing to the differences between the input images, pixel misalignment and color differences appear near the seamline in the mosaicked image when the seamline passes through obvious objects. This manifests as a visible “seam” that can compromise the integrity of objects. Therefore, the seamline should pass through regions of smooth texture, i.e., background regions such as roads, rivers, and grass, and bypass obvious objects such as buildings or cars. To keep the seamline away from obvious objects, it helps to extract the pixel positions of those objects accurately; in other words, segmentation of the input image is necessary.

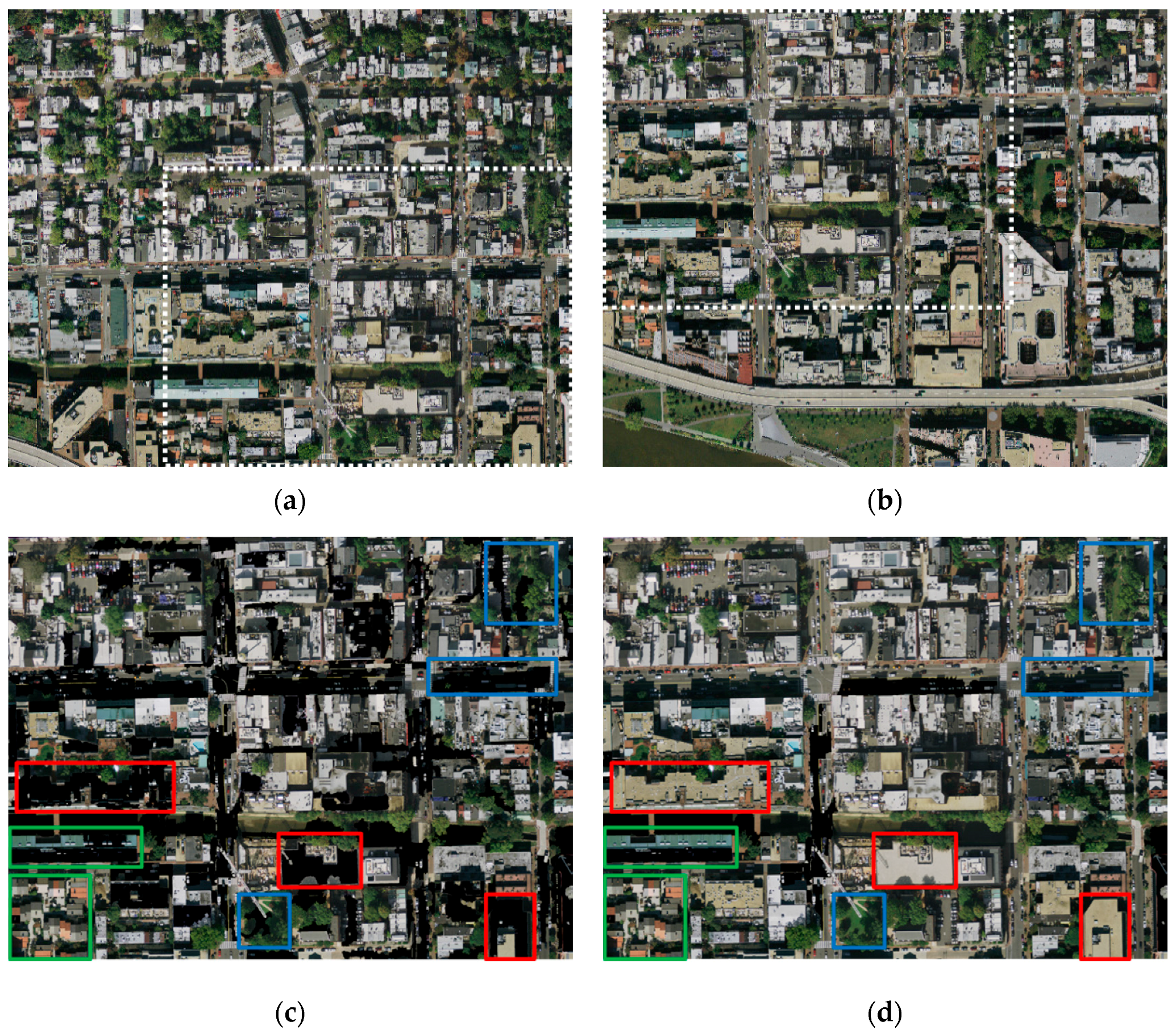

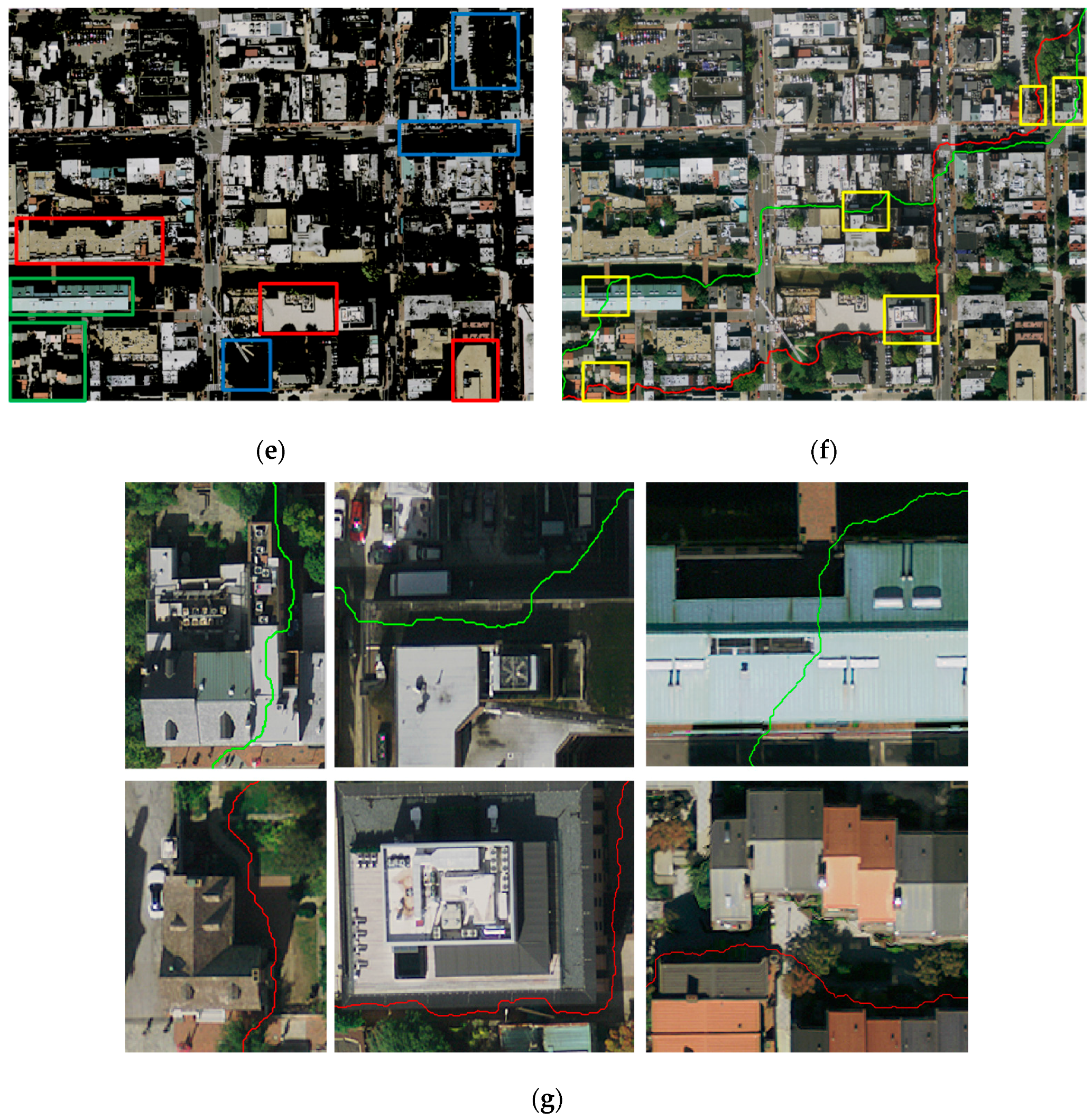

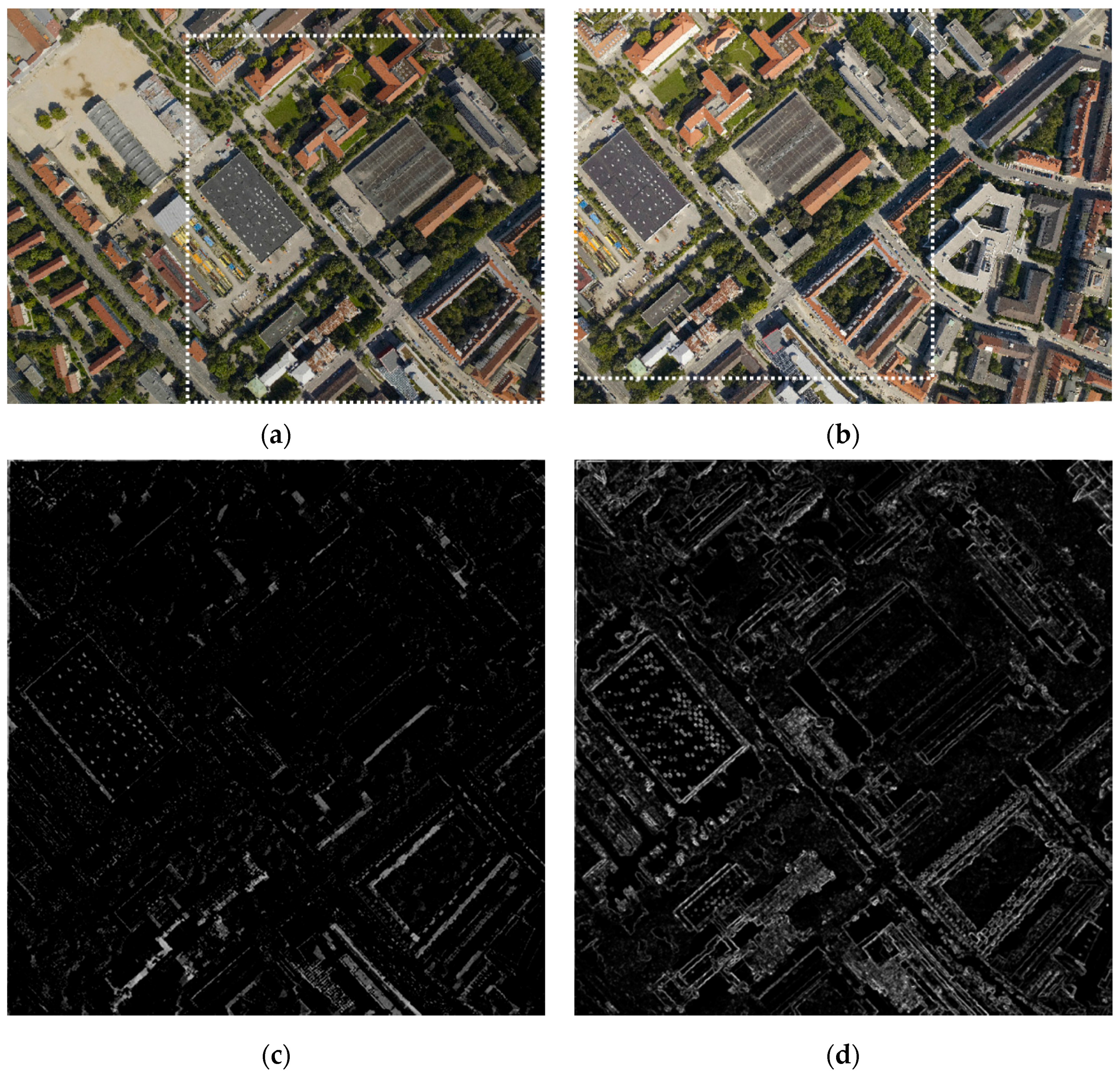

Pan et al. [24] proposed an urban image mosaicking method based on segmentation, which determined preferred regions by the mean-shift (MS) algorithm and used the color difference as the cost. First, the input images were segmented by the mean-shift algorithm and the span of every segmented region was computed. Then, preferred regions were determined with a given span threshold that was consistent with the size of the largest obvious object in the overlapping region. Ideally, most obvious objects are smaller than the grass or street areas and are segmented into smaller regions, so that the selected preferred regions do not contain obvious objects such as buildings and cars. In practice, the method is difficult to apply. The segmentation result depends strongly on the parameters of the mean-shift algorithm, such as the bandwidth. In addition, the span threshold is tied to the size of the objects in the overlapping region, so it cannot be set automatically and must be given manually for each image. Furthermore, obvious objects are not always smaller than the streets or grass, so preferred regions free of obvious objects cannot always be extracted accurately. Finally, the cost map is too simple: it considers only the color difference and ignores other aspects such as texture or edges. Saito et al. [25] proposed a seamline determination method based on semantic segmentation with a trained convolutional neural network (CNN). The model can effectively avoid buildings and obtains a good mosaic. However, it needs large-scale image datasets for training, which is very time consuming.

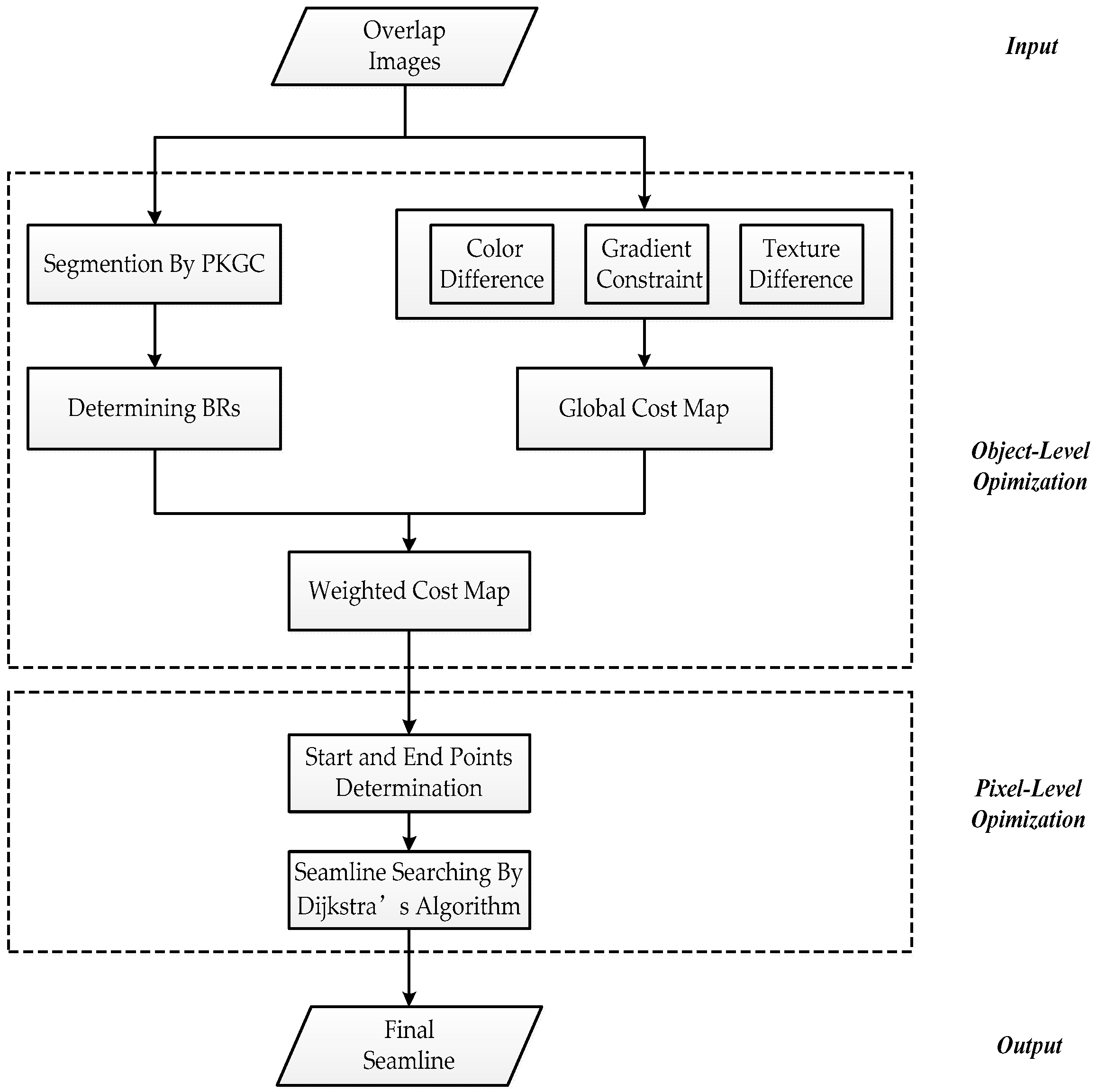

In this paper, a novel seamline determination method for remote sensing image mosaicking is presented, based on the parametric kernel graph cuts (PKGC) segmentation algorithm [26]. We determine the seamline via a two-level optimization strategy. Object-level optimization is executed first: the cost map, which contains the color difference, the gradient constraint, and the texture difference, is weighted by the background regions (BRs) determined from the results of the PKGC segmentation. Then pixel-level optimization by Dijkstra’s algorithm is carried out to determine the seamline in the weighted cost map. This paper is organized as follows:

Section 2 describes the novel method of this paper.

Section 3 presents the experimental results and the discussion.

Section 4 summarizes this paper.

2. Methods

Considering the integrity of the mosaicked image, the seamline should pass through areas of flat texture, such as rivers and meadows, and bypass obvious objects, such as buildings. Therefore, we use an image segmentation method to separate the background regions (BRs) from the obvious regions (ORs). The seamline should preferentially pass through BRs and go around ORs.

The correspondence between the overlapping areas of the input images is determined after preprocessing and registration of the input images [27]. Then the seamline can be detected in the overlapping region. First, we determine BRs based on the segmentation by the PKGC algorithm. Then we construct the global cost from the color difference, the multi-scale morphological gradient (MSMG) constraint, and the texture difference. Finally, we determine the pixel positions of the seamline by Dijkstra’s algorithm based on the weighted cost map.

Figure 1 shows the flowchart of this method.

2.1. BR Determination

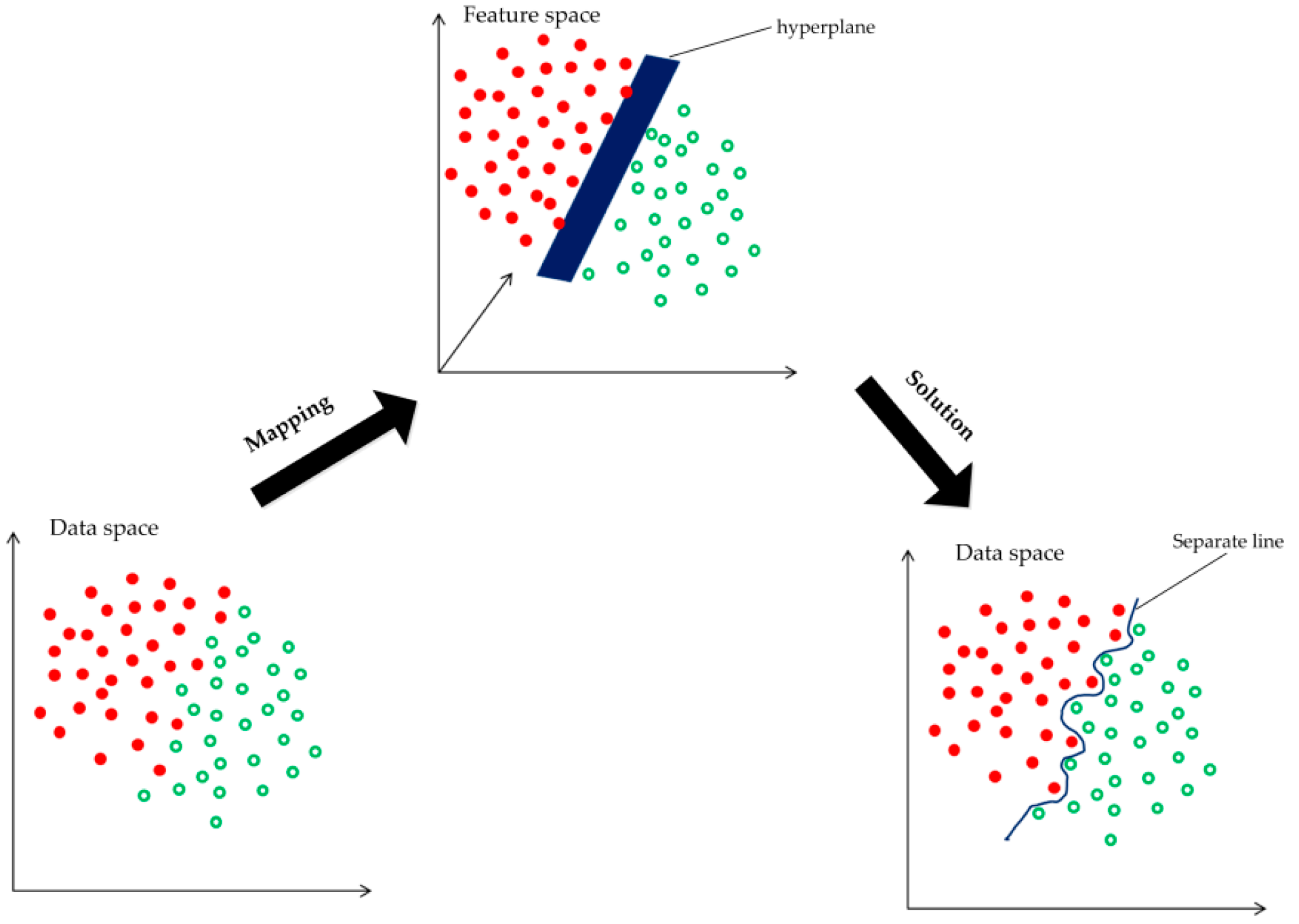

Segmentation by PKGC: The PKGC algorithm borrows from the idea of kernel k-means, and a kernel function $K$ is introduced in the segmentation. The image data are implicitly mapped into a high-dimensional feature space. This makes it possible to highlight slight differences between the image data, so that data which cannot be separated in the original space become linearly (or approximately linearly) separable, as Figure 2 shows. This helps to construct the piecewise constant model (PCM), which contains only dot product operations, and the unsupervised segmentation function. By Mercer’s theorem, any continuous, symmetric, and positive semi-definite kernel function can be expressed as a dot product in a higher dimensional space without knowing the mapping explicitly.

The kernel graph cuts model first sets $N_{reg}$ labels for the $N_{reg}$ regions, and then every pixel of the image is assigned a label. Finally, the region to which each pixel belongs is determined according to its label. Segmentation by graph cuts in the kernel-induced space is thus transformed into finding a label assignment that minimizes the energy function. The energy function contains two terms: the first is the kernel-induced distance term, which estimates the deviation between the mapped data in each region and the PCM model, and the second is the smoothing term, which smooths adjacent pixels. The energy function is as follows:

$$F_K(\{\mu_l\}, \lambda) = \sum_{l=1}^{N_{reg}} \sum_{p \in R_l} \left\| \phi(\mu_l) - \phi(I_p) \right\|^2 + \alpha \sum_{\{p,q\} \in \mathcal{N}} r(\lambda(p), \lambda(q)) \qquad (1)$$

where $\left\| \phi(\mu_l) - \phi(I_p) \right\|^2$ is the non-Euclidean distance between the region’s parameter and the observations. $\mu_l$ is the PCM parameter of region $R_l$, which can be acquired by the k-means clustering algorithm. $\lambda$ is the indexing function assigning a label to the pixel. $l$ is the label of the segmentation region. $N_{reg}$ is the number of segmentation regions. $R_l$ means the region of label $l$. $\phi$ is the nonlinear mapping from image space $I$ to the higher dimensional feature space $J$. The commonly-used function is the radial basis function (RBF), $K(y, z) = \exp\left(-\|y - z\|^2 / \sigma^2\right)$. $p$ and $q$ represent two adjacent pixels, and $\mathcal{N}$ is the set of adjacent pixel pairs. $r(\lambda(p), \lambda(q))$ is the smoothing function, $r(\lambda(p), \lambda(q)) = \min\left(c, \left|\mu_{\lambda(p)} - \mu_{\lambda(q)}\right|^2\right)$, where $c$ is constant. $\alpha$ is a non-negative factor used to weigh the two terms. Then introducing the kernel function:

$$K(y, z) = \phi(y)^T \cdot \phi(z), \quad \forall (y, z) \in I^2 \qquad (2)$$

where “$\cdot$” is the dot product in the feature space. The non-Euclidean distance of the feature space can be expressed as follows:

$$J_K(I_p, \mu) = \left\| \phi(I_p) - \phi(\mu) \right\|^2 = K(I_p, I_p) + K(\mu, \mu) - 2K(I_p, \mu), \quad \mu \in \{\mu_l\}_{1 \le l \le N_{reg}} \qquad (3)$$

Then, substitution of Equation (3) into Equation (1) results in the expression:

$$F_K(\{\mu_l\}, \lambda) = \sum_{l=1}^{N_{reg}} \sum_{p \in R_l} J_K(I_p, \mu_l) + \alpha \sum_{\{p,q\} \in \mathcal{N}} r(\lambda(p), \lambda(q)) \qquad (4)$$

Clearly, the solution of Equation (4) depends only on the regional parameters $\{\mu_l\}$ and the indexing function $\lambda$. An iterative two-step optimization method is used to minimize the function. First, fix the labeling (image segmentation) and update the region parameters for the given kernel function. Then, search for the optimal labeling (image segmentation) by graph cuts iterations based on the region parameters obtained above.
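The kernel-induced distance in the data term can be evaluated with kernel calls alone, without ever computing the mapping. The following is a minimal Python sketch under stated assumptions: pixels are scalar gray values, the kernel is an RBF of the form exp(-(y - z)^2 / sigma^2), and the function names and the bandwidth `sigma=0.5` are illustrative rather than taken from the paper. The labeling step shown is a plain nearest-parameter assignment on the data term only; it is not the graph cuts step, which also accounts for the smoothing term.

```python
import math

def rbf_kernel(y, z, sigma=0.5):
    """RBF kernel for scalar inputs: exp(-(y - z)^2 / sigma^2) (assumed form)."""
    return math.exp(-((y - z) ** 2) / sigma ** 2)

def kernel_distance(y, mu, sigma=0.5):
    """Squared feature-space distance written only with kernel evaluations:
    K(y, y) + K(mu, mu) - 2 K(y, mu)."""
    return (rbf_kernel(y, y, sigma) + rbf_kernel(mu, mu, sigma)
            - 2.0 * rbf_kernel(y, mu, sigma))

def assign_labels(pixels, region_params, sigma=0.5):
    """Data term only: give each pixel the label of the closest region parameter.
    The smoothing term and the graph cuts optimization are deliberately omitted."""
    return [
        min(range(len(region_params)),
            key=lambda l: kernel_distance(p, region_params[l], sigma))
        for p in pixels
    ]

pixels = [0.10, 0.15, 0.80, 0.90]  # toy gray values in [0, 1]
labels = assign_labels(pixels, region_params=[0.1, 0.85])
```

In a full two-step iteration, this assignment would be replaced by a graph cuts solver and alternated with an update of the region parameters.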

Determining BRs: Each image can be segmented into foreground regions containing obvious objects and background regions. The two input images are segmented independently using the PKGC algorithm. The BRs are determined as the intersection of the background segmentation results of the left image and the right image. The remaining regions of the overlapping area are regarded as ORs, i.e., the union of the foreground segmentation results.
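The intersection and union described here reduce to pixelwise logic on two masks. A small Python sketch, assuming each PKGC result has already been reduced to a boolean background mask over the overlapping region (the mask representation and function names are illustrative):

```python
def background_regions(bg_left, bg_right):
    """Pixelwise AND of two boolean background masks given as nested lists."""
    return [[a and b for a, b in zip(row_l, row_r)]
            for row_l, row_r in zip(bg_left, bg_right)]

def obvious_regions(br_mask):
    """Everything in the overlap that is not a background region."""
    return [[not v for v in row] for row in br_mask]

bg_left = [[True, True, False],
           [True, False, False]]
bg_right = [[True, False, False],
            [True, True, False]]
br = background_regions(bg_left, bg_right)
ors = obvious_regions(br)
```

A pixel is kept as background only if both images agree, so an object that appears in just one image (for example because of parallax) still ends up in the ORs.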

2.2. Constructing the Energy Function

We construct the energy function in two steps: first we calculate the global energy function, and then we weight it by the BRs to obtain the weighted cost. Let the compound image I be the overlapping region of the left and right input images. The global energy function of each pixel contains the following terms:

2.2.1. Color Difference

Color difference is the most common energy function in seamline detection for image mosaicking. We calculate the difference in the HSV (hue, saturation, value) color space instead of the common RGB space. The color difference

is defined as follows:

where

and

is the intensity values of pixel

in the

and

channels of the HSV space of the left image

. Weight coefficient

is used to balance the effects of

and

channels, and equals 0.95 in this paper. Similarly,

and

express analogous meaning.
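As an illustration of a weighted two-channel HSV difference, the sketch below assumes that the two channels are V and S and that the difference has the form w * |V1 - V2| + (1 - w) * |S1 - S2| with w = 0.95. The channel choice and the exact formula are assumptions made for this example; only the weight value 0.95 and the use of two HSV channels come from the text. RGB inputs are floats in [0, 1].

```python
import colorsys

def color_difference(rgb_left, rgb_right, omega=0.95):
    """Weighted absolute difference of the V and S channels of two pixels.
    The channel choice (V and S) is an assumption of this sketch."""
    _, s1, v1 = colorsys.rgb_to_hsv(*rgb_left)
    _, s2, v2 = colorsys.rgb_to_hsv(*rgb_right)
    return omega * abs(v1 - v2) + (1.0 - omega) * abs(s1 - s2)

same = color_difference((0.2, 0.4, 0.6), (0.2, 0.4, 0.6))
gray_vs_white = color_difference((0.5, 0.5, 0.5), (1.0, 1.0, 1.0))
```

With a weight close to 1, the term is dominated by the brightness channel, and the second channel only breaks ties between pixels of similar brightness.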

2.2.2. MSMG Constraint

In morphological image processing, the structural element is a common tool for feature extraction, and structural elements of different shapes extract different image features. Furthermore, by changing the size of the element, the analysis can be extended to a multi-scale space [28]. The gradient can represent the sharpness of an image [29,30], and a comprehensive gradient feature can be extracted by a multi-scale morphological gradient operator [31,32]. In this paper, we propose a novel multi-angle linear structural element to extract the multi-scale morphological gradient (MSMG): the multi-scale gradient is extracted at each angle and the results are then combined into the MSMG, as Figure 3 shows. The details of this method are given as follows:

Firstly, construct the multi-scale element:

where

is the basic linear structural element with length

and angle

, and

is the sum of scales.

Then the gradient feature

with scale

and angle

will be extracted by the above operator. Let image

.

where

and

is the morphological dilation and erosion respectively, which are defined as:

where

is the coordinate of the pixel in the image, and

is the coordinate of the structural element.

According to the above definition, the maximum and minimum gray value of the local image region can be obtained by dilation and erosion operators, respectively. The morphological gradient is defined as the difference of the dilation and erosion, which can extract the local information effectively. Meanwhile, we can obtain more comprehensive information by changing the scale and angle of the linear structural element. The large scale indicates the gradient information within long distances, while the gradient information with short distances is indicated by the small scale. Angle 0° indicates the horizontal gradient information, and angle 90° indicates the vertical gradient information.
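The behavior described above can be checked on a toy image. This Python sketch assumes a flat linear structural element (so dilation and erosion reduce to the local maximum and minimum along a line), handles only the angles 0°, 45°, 90°, and 135°, and ignores offsets that fall outside the image; these choices and the function names are illustrative.

```python
def line_offsets(half_length, angle_deg):
    """Offsets (dy, dx) of a flat linear element centered at the origin.
    Only 0, 45, 90 and 135 degrees are supported in this sketch."""
    step = {0: (0, 1), 45: (-1, 1), 90: (1, 0), 135: (1, 1)}[angle_deg]
    return [(k * step[0], k * step[1])
            for k in range(-half_length, half_length + 1)]

def morphological_gradient(img, offsets):
    """Dilation minus erosion with a flat element: local max minus local min."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[y + dy][x + dx] for dy, dx in offsets
                    if 0 <= y + dy < h and 0 <= x + dx < w]
            out[y][x] = max(vals) - min(vals)
    return out

# Vertical step edge: columns 0-1 are dark, columns 2-3 are bright.
img = [[0, 0, 9, 9] for _ in range(4)]
g_horizontal = morphological_gradient(img, line_offsets(1, 0))
g_vertical = morphological_gradient(img, line_offsets(1, 90))
```

The 0° element responds to the step edge while the 90° element does not, which is why several angles are needed; increasing `half_length` corresponds to a larger scale.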

Finally, gradients of all scales and all angles are integrated into the multi-scale morphological gradient

MSMG.

where

m is the number of angle

, and

in this paper, i.e.,

.

is the numbers of scales, and

in this paper.

is the weight of gradient in scale

,

.

The MSMG constraint

of pixel

is defined as:

where

and

are the multi-scale morphological gradients of the pixel

in the left

image and the right image

.

2.2.3. Texture Difference

In this paper, we calculate the image entropy of the 3 × 3 neighborhood of the pixel to represent the local texture features of the image. We iterate through all pixels using the entropy filter of size 3 in the implementation. Image entropy is the measure of data randomness in an image’s gray histogram, which is calculated by:

where

is the total number of histograms of image

. The detail texture information cannot be represented by image entropy due to only being based on the frequency of neighborhood data regardless of the intensity contrast. Considering the amalgamating of the variations of illumination and contrast in the image, it is considered adequate to regard image entropy as the coarse representation of texture features. The texture difference

is defined as following.

where

is the image entropy of the 3 × 3 neighborhood of the pixel

in the image, similar to

.
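A local entropy of this kind can be computed directly from the gray-value counts in each 3 × 3 window. The Python sketch below assumes integer gray values, a base-2 logarithm, and windows clipped at the image border; taking the absolute difference of the two entropies as the texture difference is also an assumption of this example.

```python
import math
from collections import Counter

def local_entropy(img, y, x):
    """Shannon entropy (base 2) of the gray values in the 3x3 neighborhood
    of (y, x). Neighbors outside the image are skipped."""
    h, w = len(img), len(img[0])
    vals = [img[j][i]
            for j in range(max(0, y - 1), min(h, y + 2))
            for i in range(max(0, x - 1), min(w, x + 2))]
    n = len(vals)
    return -sum((c / n) * math.log2(c / n) for c in Counter(vals).values())

def texture_difference(left, right, y, x):
    """Assumed form: absolute difference of the two local entropies."""
    return abs(local_entropy(left, y, x) - local_entropy(right, y, x))

flat = [[7, 7, 7], [7, 7, 7], [7, 7, 7]]
busy = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
d = texture_difference(flat, busy, 1, 1)
```

A flat window has zero entropy, while a window with nine distinct values reaches the maximum of log2(9) for this window size, regardless of how far apart the values are.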

The global cost

is combined by the above three terms.

Then we weight

by the BRs obtained in

Section 2.1 to obtain the weighted cost

.

where

is the weight coefficient of BRs,

. The seamline should preferentially pass through the BRs around the obvious objects, so we give a small weight value for the BRs.
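The object-level weighting can be sketched as a pixelwise scaling of the global cost. In this Python example, the multiplicative form and the weight value 0.1 are assumptions chosen for illustration; the text only states that BR pixels receive a small weight.

```python
def weight_cost(cost, br_mask, br_weight=0.1):
    """Scale the global cost by a small factor inside background regions
    and leave it unchanged elsewhere (assumed multiplicative form)."""
    return [[c * br_weight if is_br else c
             for c, is_br in zip(cost_row, mask_row)]
            for cost_row, mask_row in zip(cost, br_mask)]

cost = [[2.0, 4.0],
        [3.0, 1.0]]
br_mask = [[True, False],
           [False, True]]
weighted = weight_cost(cost, br_mask)
```

After weighting, a path search prefers BR pixels even where their raw cost is comparable to that of nearby object pixels.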

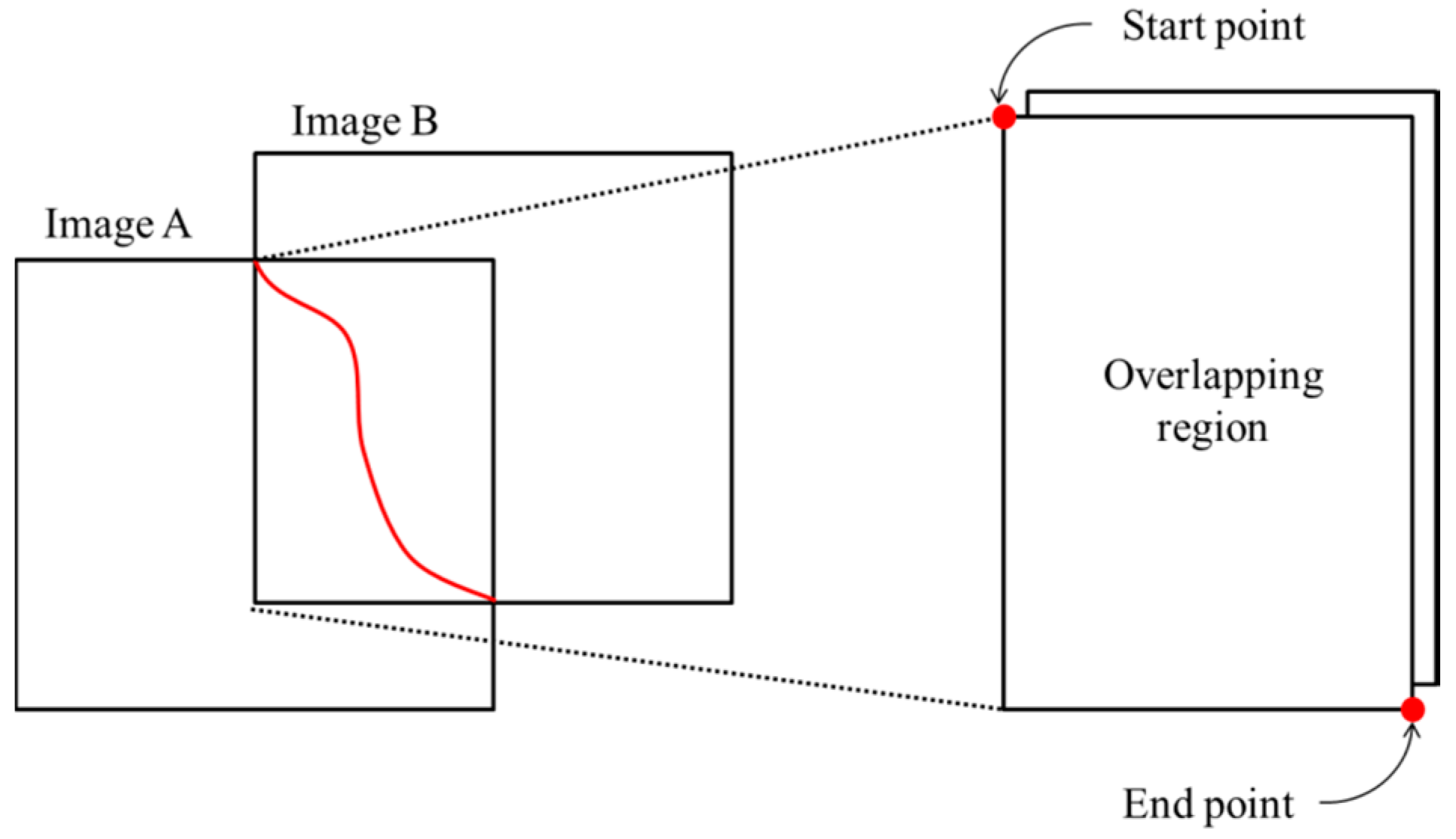

2.3. Pixel-Level Optimization

The purpose of the pixel-level optimization is to refine the location of the seamline locally. As shown in Figure 4, the overlap area is obtained once the geometric relation of the input images is determined, and the intersections of the input image edges are taken as the start and end points of the seamline. Then we detect the shortest path from the start point to the end point based on the weighted cost.

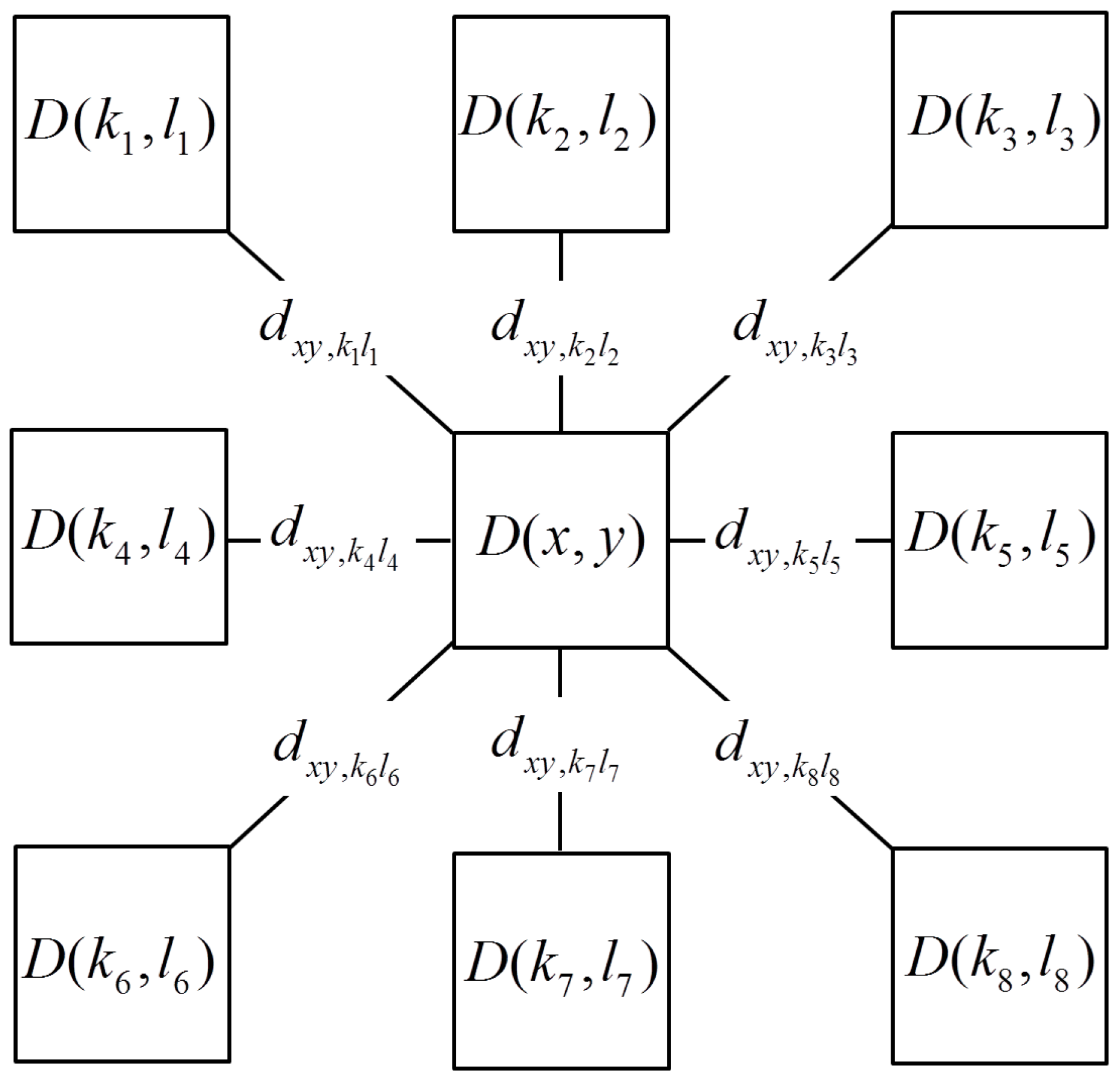

Dijkstra’s algorithm is a global optimization technique that finds the shortest path between two nodes in a graph. Each pixel in the overlapping region is regarded as one node with eight neighbor nodes. As shown in Figure 5, the local cost is calculated from the cost difference of neighbor nodes when detecting the shortest path using Dijkstra’s algorithm. Let

be one node and

be a neighbor node of this node. The local cost of these two nodes is defined as:

where

and

are the weighted cost of pixel

and

. Let

be all adjacent nodes of the node

.

and

represent the global minimum cost from the start node to pixel

and

, respectively.
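The shortest-path search over an 8-connected pixel grid can be sketched with a binary heap. In this Python example the local cost between two neighbor nodes is passed in as a function; the default used here (the sum of the two weighted costs) is an assumption for illustration and is not the paper's local cost definition. Costs are assumed to be non-negative.

```python
import heapq

def seamline(cost, start, end, local_cost=lambda a, b: a + b):
    """Dijkstra's algorithm on an 8-connected grid of weighted costs.
    Returns the list of (row, col) nodes from start to end."""
    h, w = len(cost), len(cost[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, (y, x) = heapq.heappop(heap)
        if (y, x) == end:
            break
        if d > dist[(y, x)]:
            continue  # stale heap entry
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if (dy or dx) and 0 <= ny < h and 0 <= nx < w:
                    nd = d + local_cost(cost[y][x], cost[ny][nx])
                    if nd < dist.get((ny, nx), float("inf")):
                        dist[(ny, nx)] = nd
                        prev[(ny, nx)] = (y, x)
                        heapq.heappush(heap, (nd, (ny, nx)))
    path = [end]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]

# The two 9s stand for an obvious object between the start and end points.
cost = [[1, 9, 1],
        [1, 9, 1],
        [1, 1, 1]]
path = seamline(cost, start=(0, 0), end=(0, 2))
```

The returned path detours around the high-cost pixels through the bottom row, which is the behavior wanted from a seamline that bypasses obvious objects.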

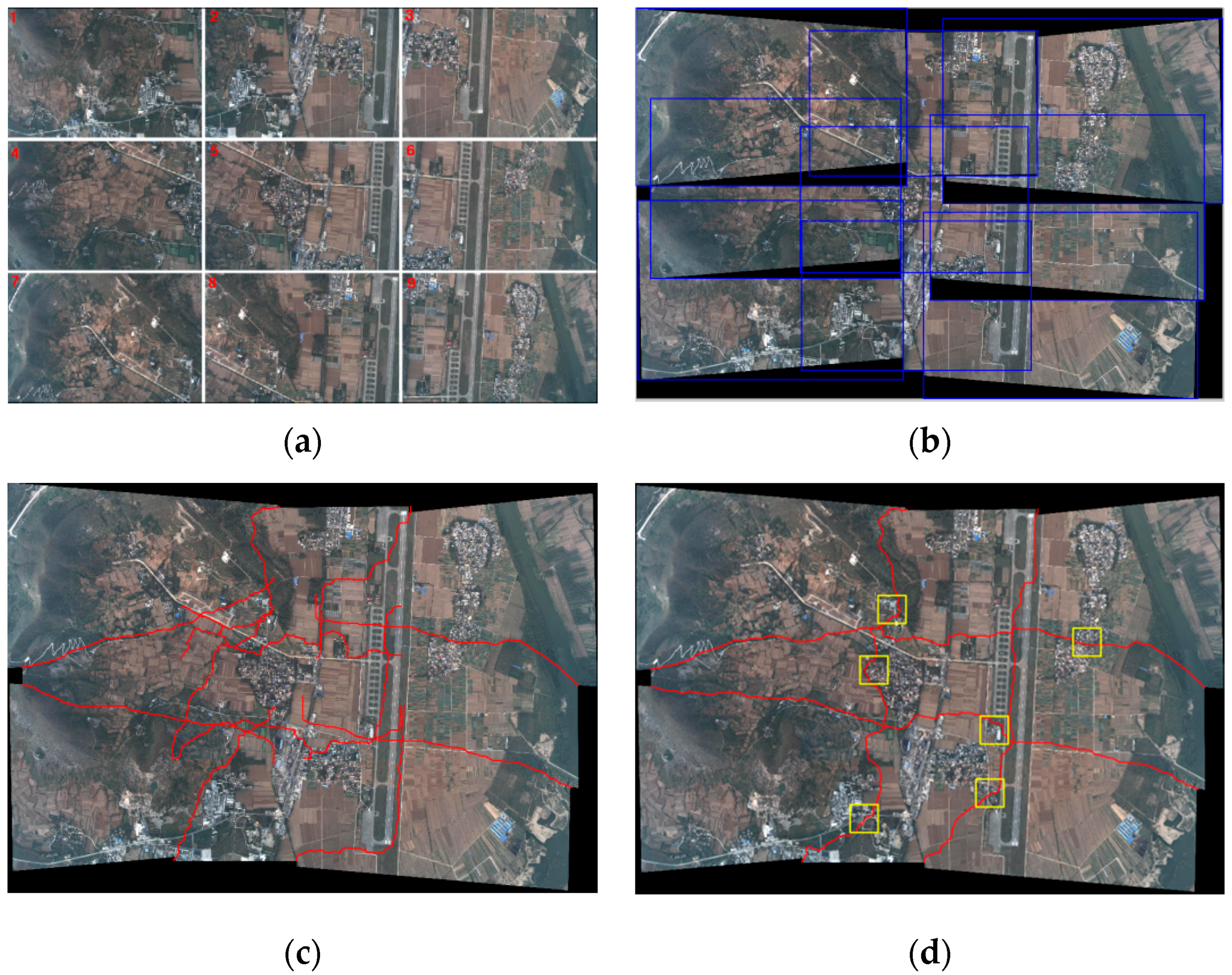

2.4. Multi-Image Seamline Detection

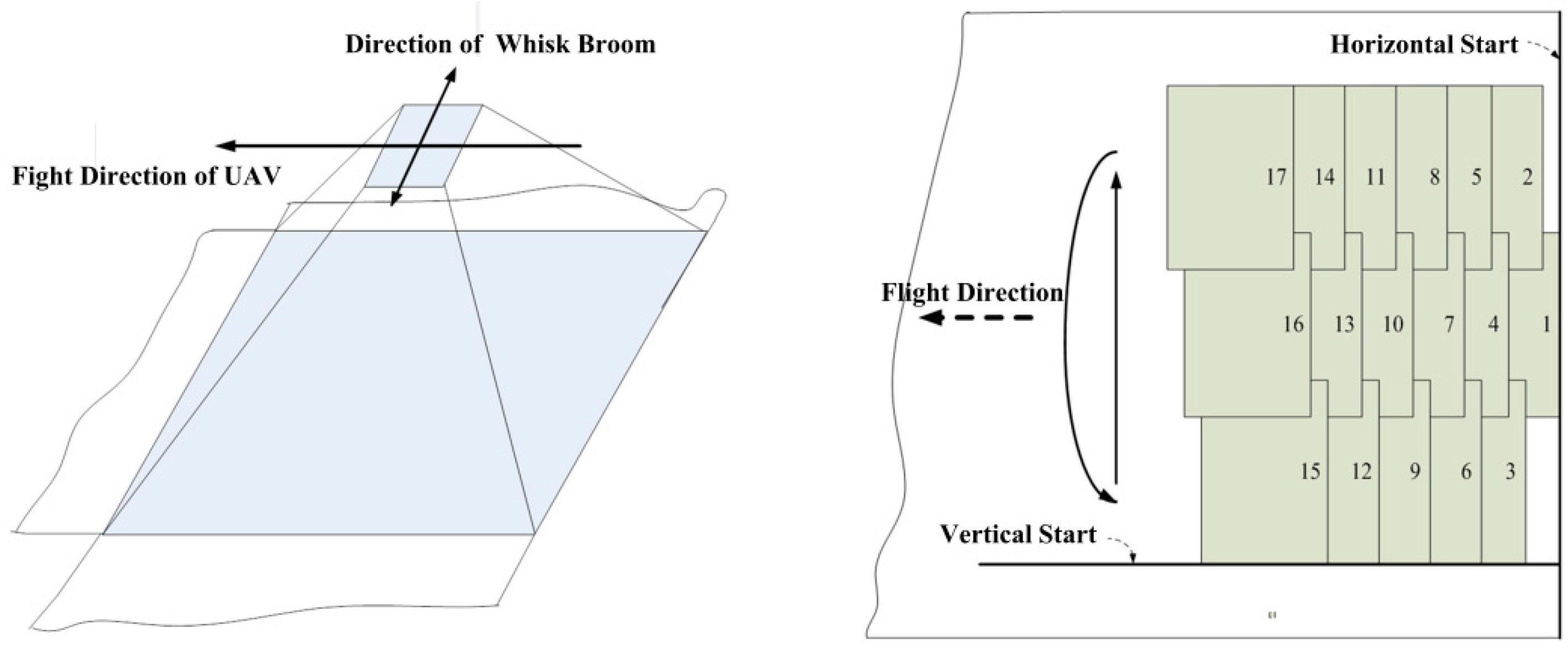

The method above determines the seamline in a two-image overlapping region; by mosaicking a set of images with it, a panoramic image with a wide field of view and high resolution can be obtained. As shown in Figure 6a, in multi-image mosaicking we would like every overlapping region to be covered by exactly two images. In practical applications, however, regions covered by more than two images almost always occur. Figure 6b shows an illustrative example in which the overlapping region is covered by three input images A, B, and C. The traditional method, named frame-to-frame [33], detects the seamline only between each pair of images. To mosaic multiple images more accurately, we propose a new optimization strategy to detect seamlines for multi-image overlap. First, we find the point with the minimum weighted cost inside a preset rectangle. The center of this rectangle is the center of the multi-image overlapping region, and its length and height are a quarter of those of the overlapping region. Then we detect the seamlines from this point to the joint points of AB, AC, and BC. Finally, the panoramic image is mosaicked based on the optimal seamlines.
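The search for the common starting point of the three seamlines can be sketched as a minimum over a centered window. This Python example assumes the multi-image overlapping region is represented by its bounding rectangle as a 2D cost grid, and that "a quarter" refers to each side length; both are interpretations made for illustration.

```python
def junction_point(cost):
    """Return (row, col) of the minimum weighted cost inside a centered
    rectangle whose height and width are a quarter of the grid's."""
    h, w = len(cost), len(cost[0])
    rh, rw = max(1, h // 4), max(1, w // 4)
    top, left = (h - rh) // 2, (w - rw) // 2
    candidates = [(cost[y][x], y, x)
                  for y in range(top, top + rh)
                  for x in range(left, left + rw)]
    _, y, x = min(candidates)
    return y, x

cost = [[5] * 8 for _ in range(8)]
cost[0][0] = 0   # lower cost, but outside the central rectangle
cost[4][3] = 1   # lowest cost inside the central rectangle
point = junction_point(cost)
```

Restricting the search to the central rectangle keeps the junction away from the borders of the overlap, so each of the three seamlines has room to reach its joint point.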