Automatic Leaf Segmentation for Estimating Leaf Area and Leaf Inclination Angle in 3D Plant Images

Abstract

1. Introduction

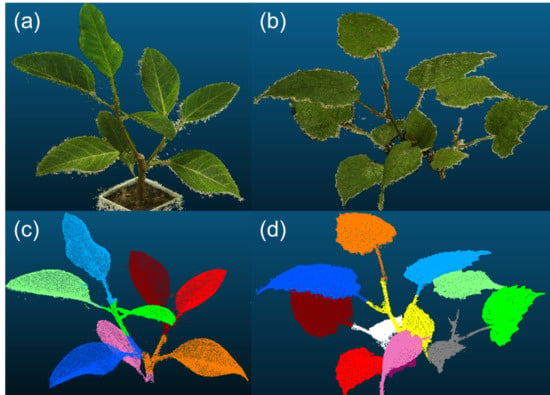

2. Materials and Methods

2.1. Plant Material

2.2. 3D Reconstruction of Plants

2.3. Automatic Leaf Segmentation and Its Evaluation

2.3.1. Conversion into Voxel Coordinate
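
Converting to voxel coordinates generally means snapping each reconstructed 3D point to the index of a fixed-size cubic voxel. The minimal sketch below assumes the plant is available as a list of (x, y, z) points and takes a hypothetical cubic voxel edge length `voxel_size` in the same units. It illustrates the general technique and is not necessarily the authors' implementation.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def to_voxels(points: Iterable[Point], voxel_size: float) -> Set[Voxel]:
    """Map 3D points to the integer indices of the cubic voxels containing them.

    Indices are measured from the minimum corner of the point cloud, so they
    start at 0. Points that fall in the same voxel collapse into one
    occupied voxel.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    pts = list(points)
    if not pts:
        return set()
    # Minimum corner of the axis-aligned bounding box of the cloud.
    x0 = min(p[0] for p in pts)
    y0 = min(p[1] for p in pts)
    z0 = min(p[2] for p in pts)
    return {
        (
            math.floor((x - x0) / voxel_size),
            math.floor((y - y0) / voxel_size),
            math.floor((z - z0) / voxel_size),
        )
        for x, y, z in pts
    }


# Three points, two of which share the same 1-unit voxel.
print(sorted(to_voxels([(0.0, 0.0, 0.0), (0.5, 0.2, 0.1), (2.5, 0.0, 0.0)], 1.0)))
# [(0, 0, 0), (2, 0, 0)]
```

Storing the occupied voxels in a set keeps membership tests constant-time, which suits the neighbour lookups in the later steps.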

2.3.2. Generation of Top-View Binary 2D Image from Voxel-Based 3D Model
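
A top-view binary image can be obtained by projecting the voxel model onto the horizontal (x-y) plane: a pixel is foreground if any voxel occupies that column at any height. The sketch below assumes non-negative voxel indices, such as those produced by voxelizing from the minimum corner. It treats z as the vertical axis, which is also an assumption.

```python
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]


def top_view_image(voxels: Set[Voxel]) -> List[List[int]]:
    """Project occupied voxels onto the x-y plane, as seen from above.

    Row index = y and column index = x. A pixel is 1 when at least one voxel
    occupies that (x, y) column at any height z; otherwise it is 0.
    """
    if not voxels:
        return []
    width = max(x for x, _, _ in voxels) + 1
    height = max(y for _, y, _ in voxels) + 1
    image = [[0] * width for _ in range(height)]
    for x, y, _z in voxels:
        image[y][x] = 1
    return image


# Two voxels stacked in the same column produce a single foreground pixel.
for row in top_view_image({(0, 0, 0), (0, 0, 3), (2, 1, 1)}):
    print(row)
```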

2.3.3. Generation of Seed Regions for 3D Leaf Segmentation
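
One common way to obtain one seed per leaf from a top-view mask is to compute each foreground pixel's distance to the background and keep only pixels deep inside the mask. Thin junctions between touching leaves then drop out, and each remaining connected core is labelled as a seed. The sketch below assumes that approach. It uses a city-block (4-neighbour) distance computed by breadth-first search as a simpler stand-in for an exact Euclidean distance transform, and treats the threshold `min_dist` as a hypothetical tuning parameter. It is not necessarily the procedure used in this study.

```python
from collections import deque
from typing import List, Tuple

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-neighbourhood


def distance_to_background(img: List[List[int]]) -> List[List[int]]:
    """City-block distance from each foreground pixel to the background.

    Background pixels get 0; pixels outside the image count as background.
    """
    h, w = len(img), len(img[0])
    dist = [[0] * w for _ in range(h)]
    queue = deque()
    for r in range(h):
        for c in range(w):
            if img[r][c] and any(
                not (0 <= r + dr < h and 0 <= c + dc < w) or not img[r + dr][c + dc]
                for dr, dc in STEPS
            ):
                dist[r][c] = 1  # foreground pixel touching the background
                queue.append((r, c))
    while queue:  # breadth-first search inwards from the boundary
        r, c = queue.popleft()
        for dr, dc in STEPS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and img[nr][nc] and dist[nr][nc] == 0:
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist


def seed_regions(img: List[List[int]], min_dist: int) -> Tuple[List[List[int]], int]:
    """Label 4-connected cores of pixels at least `min_dist` from the background."""
    if min_dist < 1:
        raise ValueError("min_dist must be >= 1")
    dist = distance_to_background(img)
    h, w = len(img), len(img[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if dist[r][c] >= min_dist and labels[r][c] == 0:
                count += 1  # start a new seed and flood-fill its core
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:
                    cr, cc = queue.popleft()
                    for dr, dc in STEPS:
                        nr, nc = cr + dr, cc + dc
                        if (0 <= nr < h and 0 <= nc < w
                                and dist[nr][nc] >= min_dist and labels[nr][nc] == 0):
                            labels[nr][nc] = count
                            queue.append((nr, nc))
    return labels, count


# Two 3x3 "leaves" joined by a one-pixel bridge in the middle row.
mask = [
    [1, 1, 1, 0, 1, 1, 1],
    [1, 1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1, 1],
]
labels, n = seed_regions(mask, min_dist=2)
print(n, labels[1])  # 2 [0, 1, 1, 0, 2, 2, 0]
```

With `min_dist=1` the bridge is kept and both blobs merge into a single seed. This is why a threshold (or, equivalently, an erosion) is needed to separate touching leaves.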

2.3.4. Automatic Leaf Segmentation by Expanding the Seed Region
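
Once each seed has a label, the seeds can be grown outward to cover the rest of the plant. The minimal sketch below assumes the seeds have already been mapped to labelled voxels. It uses plain multi-source breadth-first region growing over 26-connected neighbours, a simplification for which marker-controlled watershed is a common alternative. Each unlabelled occupied voxel takes the label of the first seed front to reach it, and voxels not connected to any seed stay unlabelled. This illustrates the general idea and is not necessarily the authors' expansion rule.

```python
from collections import deque
from itertools import product
from typing import Dict, Set, Tuple

Voxel = Tuple[int, int, int]

# All 26 offsets to the face-, edge- and corner-adjacent voxels.
NEIGHBOURS_26 = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]


def grow_seeds(voxels: Set[Voxel], seeds: Dict[Voxel, int]) -> Dict[Voxel, int]:
    """Assign leaf labels to occupied voxels by multi-source BFS from labelled seeds.

    Each voxel takes the label of the first seed front that reaches it; ties
    at equal distance are broken by queue order. Voxels not connected to any
    seed are left out of the result.
    """
    labels = {v: lab for v, lab in seeds.items() if v in voxels}
    queue = deque(labels)
    while queue:
        x, y, z = queue.popleft()
        current = labels[(x, y, z)]
        for dx, dy, dz in NEIGHBOURS_26:
            nb = (x + dx, y + dy, z + dz)
            if nb in voxels and nb not in labels:
                labels[nb] = current
                queue.append(nb)
    return labels


# A straight row of voxels with a seed at each end, plus one isolated voxel.
row = {(x, 0, 0) for x in range(5)} | {(10, 0, 0)}
out = grow_seeds(row, {(0, 0, 0): 1, (4, 0, 0): 2})
print([out.get((x, 0, 0)) for x in (0, 1, 2, 3, 4, 10)])  # [1, 1, 1, 2, 2, None]
```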

2.4. Automatic Leaf Area and Leaf Inclination Angle Estimation from Segmented Leaves
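
For a segmented leaf that is roughly planar, the inclination angle θ can be taken as the angle between the fitted plane's normal and the vertical. The leaf area A can then be recovered from the top-view (projected) area as A = A_proj / cos θ. The sketch below assumes that planar model. It fits z = a·x + b·y + c by least squares, so it breaks down for near-vertical leaves whose footprint degenerates to a line. It counts occupied (x, y) columns to obtain the projected area and takes a hypothetical voxel edge length `voxel_size` as input. The voxel-based area calculation used in the study may differ.

```python
import math
from typing import Iterable, Sequence, Tuple

Voxel = Tuple[int, int, int]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(voxels: Iterable[Voxel]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c through voxel indices (Cramer's rule).

    Voxels are assumed cubic, so slopes computed on indices equal metric slopes.
    """
    pts = list(voxels)
    n = float(len(pts))
    sx = sum(x for x, _, _ in pts)
    sy = sum(y for _, y, _ in pts)
    sz = sum(z for _, _, z in pts)
    sxx = sum(x * x for x, _, _ in pts)
    syy = sum(y * y for _, y, _ in pts)
    sxy = sum(x * y for x, y, _ in pts)
    sxz = sum(x * z for x, _, z in pts)
    syz = sum(y * z for _, y, z in pts)
    # Normal equations: M @ [a, b, c] = rhs
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    det = _det3(m)
    if abs(det) < 1e-9:
        raise ValueError("degenerate leaf: footprint is a line or too few voxels")
    coeffs = []
    for col in range(3):
        # Cramer's rule: replace column `col` of M with the right-hand side.
        mc = [[rhs[r] if c == col else m[r][c] for c in range(3)] for r in range(3)]
        coeffs.append(_det3(mc) / det)
    return coeffs[0], coeffs[1], coeffs[2]


def leaf_area_and_inclination(voxels: Iterable[Voxel], voxel_size: float) -> Tuple[float, float]:
    """Return (leaf area, inclination angle in degrees) for one segmented leaf."""
    pts = list(voxels)
    a, b, _c = fit_plane(pts)
    theta = math.atan(math.hypot(a, b))  # angle of normal (-a, -b, 1) from vertical
    columns = {(x, y) for x, y, _ in pts}  # occupied pixels in the top view
    projected_area = len(columns) * voxel_size ** 2
    return projected_area / math.cos(theta), math.degrees(theta)


# A 4 x 4 patch tilted at 45 degrees (z rises one voxel per voxel in x).
leaf = {(x, y, x) for x in range(4) for y in range(4)}
area, angle = leaf_area_and_inclination(leaf, voxel_size=0.5)
print(round(area, 3), round(angle, 1))  # 5.657 45.0
```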

2.5. Evaluation of the Accuracy of Automatic Leaf Segmentation and Leaf Area and Leaf Inclination Angle Estimation
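
The results table reports two accuracy measures: the absolute leaf area estimation error and the segmentation success rate. A minimal way to compute them is sketched below. It assumes the error is averaged over leaves that have a reference (for example, manually measured) area, and that the success rate is the percentage of leaves segmented correctly. The exact definitions used in the study may differ, and the numbers in the usage lines are made up for illustration.

```python
from typing import Sequence


def mean_absolute_error(estimated: Sequence[float], measured: Sequence[float]) -> float:
    """Mean of |estimated - measured| over paired leaves (same units, e.g. cm^2)."""
    if len(estimated) != len(measured) or not estimated:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(e - m) for e, m in zip(estimated, measured)) / len(estimated)


def success_rate(correctly_segmented: int, total_leaves: int) -> float:
    """Percentage of leaves that were segmented correctly."""
    if total_leaves <= 0:
        raise ValueError("total_leaves must be positive")
    return 100.0 * correctly_segmented / total_leaves


print(mean_absolute_error([10.0, 12.0], [9.0, 12.5]))  # 0.75
print(round(success_rate(9, 11), 1))  # 81.8
```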

3. Results

3.1. Leaf Segmentation

3.2. Leaf Area and Leaf Inclination Angle Estimation from Segmented Leaves

4. Discussion

4.1. Leaf Segmentation

4.2. Voxel-Based Leaf Area Calculation and Leaf Inclination Angle Estimation

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Plant | Average Leaf Number | Leaf Area (cm²) | Absolute Leaf Area Estimation Error (cm²) | Success Rate (%) |
|---|---|---|---|---|
| Dwarf schefflera | 5 | 8.06 | 0.06 | 100 |
| Kangaroo vine | 11 | 13.74 | 0.67 | 82 |
| Pothos | 4 | 20.67 | 0.29 | 100 |
| Hydrangea | 4 | 41.48 | 1.66 | 100 |
| Council tree | 5.3 | 59.68 | 3.44 | 75 |
| Dwarf schefflera | 5 | 73.48 | 2.35 | 90 |
| All samples | 5.7 | 36.2 | 1.73 | 86.9 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Itakura, K.; Hosoi, F. Automatic Leaf Segmentation for Estimating Leaf Area and Leaf Inclination Angle in 3D Plant Images. Sensors 2018, 18, 3576. https://doi.org/10.3390/s18103576