Sparse Ultrasound Imaging via Manifold Low-Rank Approximation and Non-Convex Greedy Pursuit

Abstract

1. Introduction

2. Model-Based Imaging and Regularization

3. Off-Grid Events and Dictionary Expansion

4. Rank-K Approximation of Local Manifolds

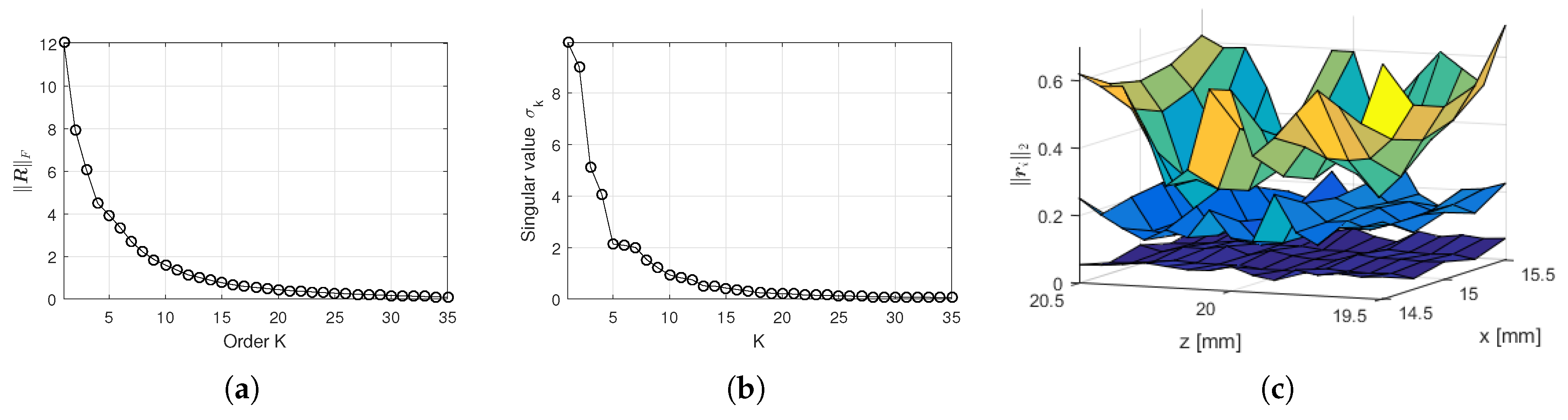

4.1. Highly Coherent Discrete Local Manifolds

4.2. SVD Expansion
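
As a minimal sketch of the idea named in this heading, assume a local manifold is sampled as the columns of a matrix. A truncated SVD then gives the best rank-K approximation of that matrix in the Frobenius norm (the Eckart-Young result). The toy pulse, the shift range, and the function names `sample_local_manifold` and `rank_k_approximation` are hypothetical choices made for illustration and are not taken from the article.

```python
import numpy as np

def sample_local_manifold(center, half_width, n_samples, t):
    """Columns are shifted copies of a toy pulse (hypothetical atom model)."""
    shifts = np.linspace(center - half_width, center + half_width, n_samples)
    # Gaussian-windowed cosine used as a stand-in for an ultrasound echo.
    return np.stack(
        [np.exp(-((t - s) ** 2) / 0.02) * np.cos(40.0 * (t - s)) for s in shifts],
        axis=1,
    )

def rank_k_approximation(M, k):
    """Best rank-k approximation of M in the Frobenius norm (Eckart-Young)."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    basis = U[:, :k]                   # k orthonormal vectors spanning the approximation
    coeffs = s[:k, None] * Vt[:k, :]   # coordinates of each sampled atom in that basis
    return basis, coeffs, s

t = np.linspace(0.0, 1.0, 400)
M = sample_local_manifold(center=0.5, half_width=0.01, n_samples=21, t=t)
basis, coeffs, s = rank_k_approximation(M, k=3)
rel_err = np.linalg.norm(M - basis @ coeffs) / np.linalg.norm(M)
print(f"relative error with K=3: {rel_err:.2e}")
```

In this sketch the K columns of `basis` would take the place of the many highly coherent sampled atoms, and the approximation error equals the energy in the discarded singular values.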

5. Reconstruction Algorithm

5.1. Limitations of Conic Constraints

5.2. Non-Convex Constraints

5.3. OMP for Expanded Dictionaries

Algorithm 1. OMP for Expanded Dictionaries (OMPED)
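
For orientation, a minimal sketch of plain orthogonal matching pursuit (OMP), the standard greedy scheme this section's algorithm is named after, is given below. It is not the OMPED algorithm: it operates on an ordinary dictionary matrix, and the function name `omp`, its parameters, and the random test dictionary are assumptions made for illustration.

```python
import numpy as np

def omp(A, y, n_nonzero, tol=1e-10):
    """Plain OMP: greedy atom selection followed by a joint least-squares refit."""
    y = np.asarray(y, dtype=float)
    col_norms = np.linalg.norm(A, axis=0)
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        # Pick the atom most correlated with the current residual.
        corr = np.abs(A.T @ residual) / col_norms
        if support:
            corr[support] = 0.0  # never select the same atom twice
        support.append(int(np.argmax(corr)))
        # Refit all selected atoms jointly (the "orthogonal" step).
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
        if np.linalg.norm(residual) <= tol * np.linalg.norm(y):
            break
    x = np.zeros(A.shape[1])
    if support:
        x[support] = coef
    return x, support

# Illustrative run on a random dictionary (an assumption, not the article's data).
rng = np.random.default_rng(0)
A = rng.standard_normal((64, 128))
x_true = np.zeros(128)
x_true[[5, 40, 90]] = [1.0, -2.0, 1.5]
x_hat, support = omp(A, A @ x_true, n_nonzero=3)
print("selected atoms:", sorted(support))
```

The refit over the whole support at every iteration is what separates OMP from basic matching pursuit: the residual stays orthogonal to all atoms chosen so far.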

5.4. Recovery of Locations and Amplitudes

6. Empirical Results

6.1. Simulated Acquisition Set

6.2. Recovery Accuracy

6.3. Estimation of Residual and Stop Criterion

6.4. Reconstructed Images: Examples

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rigo Passarin, T.A.; Wüst Zibetti, M.V.; Rodrigues Pipa, D. Sparse Ultrasound Imaging via Manifold Low-Rank Approximation and Non-Convex Greedy Pursuit. Sensors 2018, 18, 4097. https://doi.org/10.3390/s18124097
