1. Introduction

Vehicle localization is one of the key components of both autonomous driving and advanced driver assistance systems (ADAS). Accurate localization results can improve performances of other key components such as perception, planning, and control. The most widely used vehicle localization method is global navigation satellite systems (GNSS). This method provides global positions and its error is not accumulated, but produces inaccurate results when the GNSS signal is reflected or blocked. To alleviate this problem, GNSS has often been fused with dead reckoning (DR). This method is robust against the status of the GNSS signal and provides accurate results in a short period of time, but its error can accumulate over time. Recently, to overcome these drawbacks, localization methods that utilize a perception sensor and digital map have been widely researched [

1]. These methods localize the ego-vehicle by matching the landmarks detected by the perception sensor and the landmarks stored in the digital map.

Various types of landmarks have been used, and road markings are one of the most widely used landmarks for vehicle localization. In terms of the perception sensor, road markings are relatively easy to detect because their shapes are predetermined by regulations and their color and reflectivity are significantly different from those of the road surface. In terms of the digital map, road markings can be stored at low capacity because their shapes are relatively simple compared with other road structures. Among road markings, lane markings are most widely used for vehicle localization. Lane markings provide abundant information on lateral position, but lack information on longitudinal position. To alleviate the drawback of lane markings, methods that utilize stop lines, crosswalks, arrows, and letters have been suggested. Those road markings are useful in urban situations because they frequently appear on urban roads. However, in highway situations, stop lines and crosswalks rarely exist, and arrows and letters seldom appear.

Figure 1 shows the results of analyzing sequential images taken from approximately 40 km of highway from the viewpoint of road markings. First of all, this highway does not include any stop lines and crosswalks. In general, there are no stop lines and crosswalks on Korean highways. Red points in

Figure 1 indicate the locations where arrows and letters were captured by the front camera. The arrows and letters were observed only in approximately 3% of the entire images. Furthermore, the arrows and letters are concentrated in specific areas such as intersections. The roads where the arrows and letters are not continuously observed reach up to 7.8 km. This analysis clearly shows that stop lines, crosswalks, arrows, and letters have limitations of improving longitudinal positioning accuracy in highway situations.

To overcome the limitations of the abovementioned road markings, lane endpoints can be used as landmarks to improve longitudinal positioning accuracy. The lane endpoint indicates the location where a lane marking starts or ends, so this paper classifies it into a lane starting point and a lane ending point.

Figure 2a shows lane endpoints taken in a highway. Red and blue crosses indicate the starting and ending points, respectively. As a landmark for vehicle localization in highway situations, the lane endpoint has the following advantages: (1) Since it represents a specific point, it provides information on both lateral and longitudinal positions; (2) Since it has a simple and distinct shape, it can effectively be detected using a conventional automotive front camera; (3) Since it can be captured at a close distance, its position accuracy is guaranteed; (4) It frequently appears in highway situations. In

Figure 1, blue points indicate the locations where the lane endpoints were captured by the front camera while driving on approximately 40 km of highway. The lane endpoints were observed in approximately 88% of the entire images, and the roads where the lane endpoints are not continuously observed reach at most to 1.7 km in a tunnel. According to Korean regulations, the length of a single dashed lane marking is 8.0 m, longitudinal distance between two dashed lane markings is 12.0 m, and the width of the driving lane is at least 3.5 m as shown in

Figure 2b. This means that the lane endpoints of the same type (staring or ending point) appear every 20.0 m in the longitudinal direction and every 3.5 m in the lateral direction. Because there is enough space between adjacent lane endpoints, there is little confusion between adjacent lane endpoints when matching them with those stored in the digital map. The effect of using a specific location of a road marking for vehicle localization has been verified in [

2] where arrow endpoints are used instead of lane endpoints. Note that this paper suggests that the lane endpoints are used as one of several landmarks, rather than suggesting the use of lane endpoints alone.

This paper proposes two methods concerning the use of the lane endpoints as landmarks for vehicle localization. First, it proposes a method to efficiently detect the lane endpoints using a vehicle-mounted monocular forward-looking (front) camera, which is the most widely installed perception sensor. Second, it proposes a method to reliably measure the position accuracy of the lane endpoints detected from images taken while the ego-vehicle is moving at high speed.

Since the front camera module is responsible for detecting various objects such as lane markings, vehicles, pedestrians, traffic signs, etc., an algorithm added to this module should require less computational costs. Thus, this paper proposes a method that can efficiently detect lane endpoints by combining simple algorithms. The proposed method first extracts lane pixels using a top-hat filter, and detects left and right lanes based on RANSAC. Once the lanes are detected, profiles of the top-hat filter response are generated along the detected lanes. Lane endpoint candidates are generated by finding local minima and maxima from differentiation results of the profiles. The lane endpoint candidates are verified by a two-class classifier after converting them into bird’s-eye view images. Finally, the positions of the lane endpoints from the camera are calculated based on the camera’s intrinsic and extrinsic parameters.

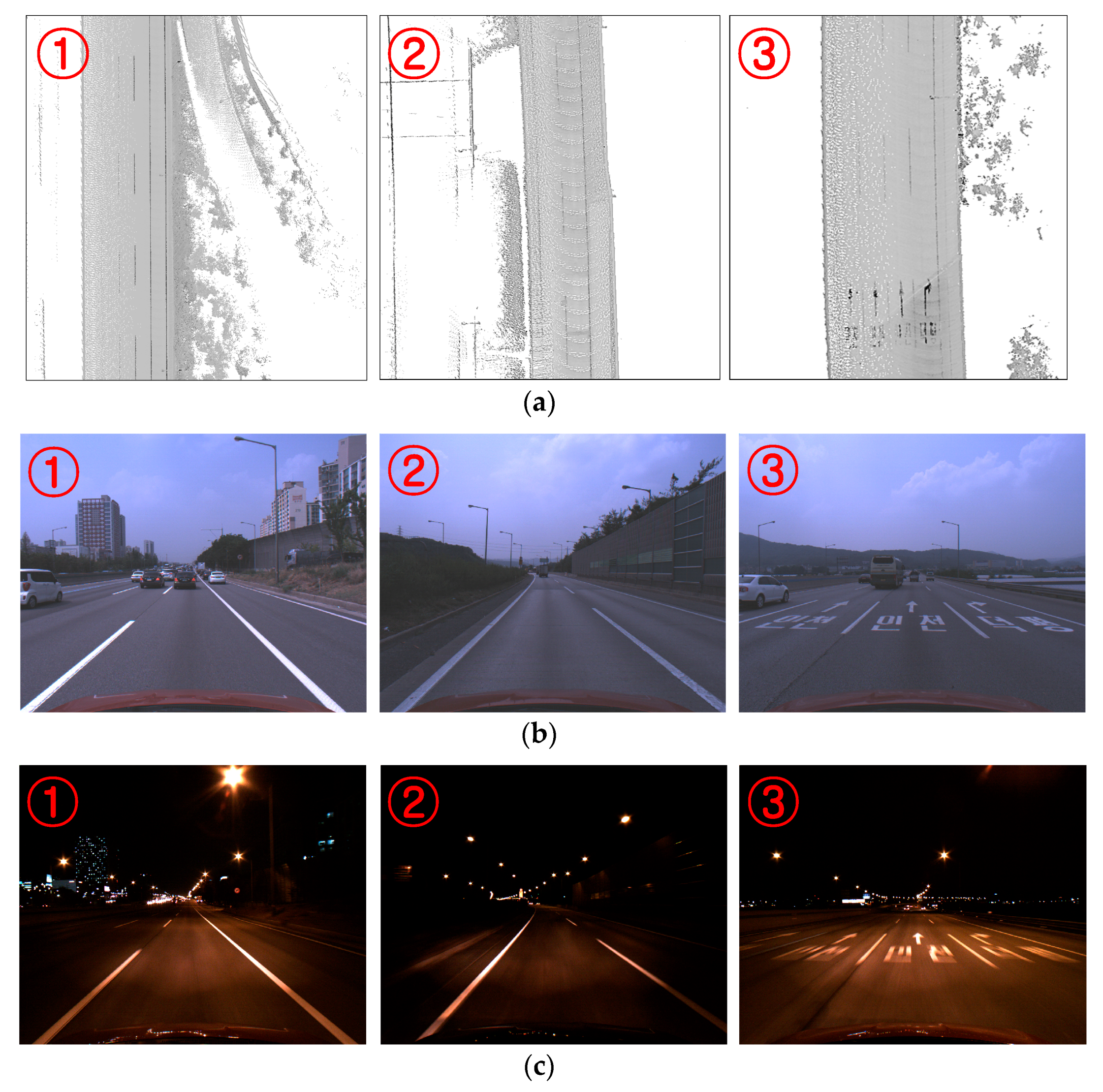

In general, the landmark-based localization system estimates the position of the ego-vehicle by comparing the positions of the detected landmarks from the ego-vehicle and those stored in the digital map. This means the position accuracy of the detected landmark is directly related to the performance of the vehicle localization system. In addition, it is necessary to have a distribution of the position error of the detected landmark in order to effectively apply extended Kalman or particle filtering methods. However, it is quite difficult to precisely measure the position accuracy of the landmarks, which are detected from images taken while the ego-vehicle is moving on a highway at high speed. According to our literature review, there has been no previous work attempting it. To reliably measure the position accuracy of the landmark, this paper proposes a method that uses a mobile mapping system (MMS) after examining various approaches. Since the MMS consists of high-precision positioning equipment and high performance LIDARs, it produces highly accurate positions and dense 3D points with reflectivities. If the front camera is attached to the MMS, 3D locations of the detected lane endpoints from the front camera can be accurately obtained while the ego-vehicle is moving at high speed by using the precise positions and dense 3D points produced by the MMS. Thus, this paper reliably measures the position accuracy of the lane endpoints by comparing its position obtained from the camera and the corresponding position obtained from the MMS.

In the experiment, the proposed method was evaluated based on a dataset acquired while driving on approximately 80 km of highway. Half of the dataset was acquired during daytime and the other half at night. The proposed lane endpoint detection method shows 96.1% recall and 99.7% precision in daytime, and 94.7% recall and 100% precision at night. As a result of the MMS-based position accuracy evaluation, the detected lane endpoints show 7.8 cm and 21.6 cm average position errors in lateral and longitudinal directions during daytime, and 8.2 cm and 48.2 cm average position errors in lateral and longitudinal directions at night. In terms of execution time, this method requires only 4.35 ms per image so that it can handle 230 frames per second.

The rest of this paper is organized as follows:

Section 2 explains related research.

Section 3 and

Section 4 describe the proposed lane endpoint detection method and the MMS-based position accuracy evaluation method, respectively.

Section 5 presents experimental results and analyses. Finally, this paper is concluded with future works in

Section 6.

2. Related Research

The perception sensor and digital map-based vehicle location methods can be categorized based on the information acquired by the perception sensor and stored in the digital map. According to this criterion, previous methods can be categorized into range data-based, feature point-based, and road marking-based approaches. Since the proposed system is categorized into the road marking-based approach, the other two approaches are briefly introduced in this section.

Figure 3 shows the taxonomy of the vehicle localization methods.

The range data-based approach acquires range data using perception sensors and matches them with range information stored in the digital map. Since this approach mostly utilizes active sensors such as LIDARs and Radars, it is robust against illumination conditions and textures of surrounding objects. However, its performance can be degraded in cases where a few fixed objects and many moving obstacles are presented on a broad road. The methods in this approach recognize specific objects (e.g., curbs [

3], building facades [

4], pole-like objects [

5], or guardrails [

6]), or directly utilize 3D point clouds [

7] to match them with the digital map.

The feature point-based approach extracts feature points mostly from images and matches them with feature points stored in the digital map by comparing their descriptors. Because this approach utilizes a large number of feature points on various surrounding objects, it can achieve high localization accuracy. However, its performance can be affected by appearance and position changes of obstacles. In addition, it requires a large storage volume to store numerous feature points along with their high-dimensional descriptors in the digital map. The methods in this approach utilize various types of interest point detectors and feature descriptors: maximally stable extremal regions (MSER) with moment invariants [

8], difference of Gaussians (DoG) with scale-invariant feature transform (SIFT), fast Hessian detector with speeded up robust features (SURF) [

9], and DIRD (Dird is an illumination robust descriptor) [

10].

The road marking-based approach extracts markings on road surfaces and matches them with road markings stored in the digital map. Since road markings are visually distinctive and under government regulations, they can be reliably detected compared to other objects. However, localization performance can be degraded when the road markings are worn or covered with snow. The road marking-based approach can further be categorized into signal-level, feature-level, and symbol-level approaches according to the level of information being used.

The signal-level approach uses raw data of road surfaces obtained from the perception sensor. Mattern et al. [

11] matches intensities of image pixels with a virtual road surface image generated from the digital map. Levinson and Thrun [

12] compares infrared reflectivities of a LIDAR with their means and standard deviations stored in the digital map. The feature-level approach utilizes features of the road marking extracted from raw data of the perception sensor. Hata and Wolf [

13], Kim et al. [

14], and Suganuma and Uozumi [

15] find road marking features by comparing infrared reflectivities acquired by LIDARs with neighboring reflectivities or threshold values. Schreiber et al. [

16] extracts road marking features by applying the oriented matched filter to free space obtained by a stereo camera. Deusch et al. [

17] uses the maximally stable extremal regions to find road marking features from images of a front camera. Jo et al. [

18] utilizes the top-hat filter to extract road marking features from bird’s-eye view images. The signal-level and feature-level approaches require less effort to handle the outputs of the perception sensors. However, since the raw data and road marking features have a large amount of information, these approaches require a high computational cost for the matching procedure and a large storage volume for the digital map.

The symbol-level approach recognizes a variety of types of road markings and matches them with those stored in the digital map. Lane markings are the most popularly used symbols for vehicle localization. Nedevschi et al. [

19], Jo et al. [

20], Lu et al. [

21], Gruyer et al. [

22], Tao et al. [

23], Shunsuke et al. [

24], and Suhr et al. [

2] utilize a variety of types of cameras to detect lane markings for vehicle localization purposes. In particular, Nedevschi et al. [

19] not only recognize positions of lanes but also their types (e.g., double, single, interrupted, and merge) as additional information. Even though the lane markings provide abundant information on the lateral position, they lack information on the longitudinal position. To complement this, stop lines, crosswalks, arrows, and letters have been utilized as well. Nedevschi et al. [

19] recognizes stop-lines using a stereo camera and Jo et al. [

20] recognize crosswalks using a front camera in order to enhance longitudinal localization accuracies. Nedevschi et al. [

19] detect five types of arrows using a stereo camera to recognize the driving lane of the ego-vehicle. Wu and Ranganathan [

25] recognize ten types of arrows and letters using a stereo camera and extract corner features from them. Suhr et al. [

2] recognize nine types of arrows and diamond along with their starting points using a single front camera. The symbol-level approach requires a low computational cost for the matching procedure and a small storage volume for the digital map because it simply matches and stores locations and types of symbols. However, it requires additional computing resources to reliably recognize specific symbols. Thus, the symbol recognition procedure should be designed to be cost-effective.

The lane endpoints are expected to be good landmarks for vehicle localization when applying the symbol-level approach to highway situations. This paper proposes two essential components for its application: a cost-effective method to detect the lane endpoints using a conventional monocular front camera and a reliable method to measure the position accuracy of the lane endpoints based on the MMS. According to our literature review, there has been no previous work that explicitly detects the lane endpoint for vehicle localization purposes and measures its position accuracy.

4. Position Accuracy Evaluation

4.1. Candidates for Evaluation Methods

Unfortunately, after extensive literature review, we have not found any previous methods for evaluating the position accuracy of the road markings, which are detected from images taken during high-speed driving. Thus, this section introduces the methods considered as candidates for position accuracy evaluation, and explains the reason why the MMS-based method is finally selected. The following three methods have been considered as candidates: measuring instrument-based, on-vehicle sensor-based, and MMS-based.

The measuring instrument-based method measures the relative position from the camera to the road marking using various measuring devices. The terrestrial laser scanning (TLS) is one of the most widely used instruments, and is mainly used for obtaining high resolution 3D models of large structures such as dams, mines, and terrain. To evaluate the position accuracy of the road marking using this method, TLS and the monocular front camera are first installed together and the rigid transformation between them should be precalibrated. The ground truth position of the road marking is obtained from 3D points produced by the TLS, and its position is transformed into the camera coordinate system using the rigid transformation between the TLS and camera. Finally, the position accuracy is measured by comparing the transformed ground truth position and the position calculated by the image of the camera. This method has an advantage of providing a dense and precise 3D shape of the road marking. However, it can be used only in cases where the vehicle is stationary because the TLS takes a long time to scan surrounding areas. For the same reason, it is difficult for the TLS to scan long and narrow areas such as highways. Due to these limitations, it has been concluded that this method is inappropriate to use in highway situations.

The on-vehicle sensor-based method measures the relative position from the camera to the road marking using various ranging sensors that have already been mounted on commercialized or autonomous vehicles. The most widely used ranging sensors mounted on those vehicles are a stereo camera and multi-layer LIDAR. This method is similar to the measuring instrument-based method. However, there is a major difference, which is the sensing speed. The sensing speed of the vehicle mounted ranging sensors are designed to be fast so that they can quickly obtain the ranging data of the surrounding area while the vehicle is moving at high speeds. To evaluate the position accuracy of the road marking using this method, the stereo camera or LIDAR along with the monocular front camera are first installed on the same vehicle and the rigid transformation between those sensors should be precalibrated. The ground truth position of the road marking is obtained from a dense disparity map of the stereo camera or 3D points produced by the multi-layer LIDAR, and its position is transformed into the camera coordinate system using the rigid transformation between those sensors. Finally, the position accuracy is measured by comparing the transformed ground truth position and the position calculated by the image of the camera. This method has an advantage that it is applicable to situations where the vehicle is moving at high speeds. However, in the case of the stereo camera, its ranging accuracy severely deteriorates when the distance of the road marking increases. In the case of the multi-layer LIDAR, it provides a high ranging accuracy, but its range data is not dense enough to accurately localize the road marking. Due to these limitations, it has been concluded that this method is inappropriate to use.

The MMS-based method measures the relative position from the camera to the road marking using dense 3D points and accurate positions obtained by the MMS. The MMS consists of high-precision positioning equipment and high performance LIDARs, and those sensors are usually mounted on a vehicle. Since the MMS can measure dense and accurate 3D points while the vehicle is moving at high speeds, it has an advantage of measuring long and wide areas such as highways. To evaluate the position accuracy of the road marking using this method, the monocular front camera is first attached to the MMS and the rigid transformation between the camera and MMS should be precalibrated. The ground truth position of the road marking is obtained from 3D points and its position is transformed into the camera coordinate system using the precalibrated rigid transformation and accurate positions produced by the MMS. Finally, the position accuracy is measured by comparing the transformed ground truth position and the position calculated by the image of the camera. Compared to the measuring instrument-based method, the MMS-based method has an advantage that it can be used while the vehicle is moving at high speeds. Compared to the on-vehicle sensor-based method, its 3D structure is denser and more accurate. Because of these advantages, this paper has selected the MMS-based method for evaluating the position accuracy of the road marking. A detailed explanation of this method will be presented in the following sections.

4.2. Acquisition of 3D Points Using MMS

The MMS used in this paper consists of a high precision LIDAR as a ranging sensor and a combination of real-time kinematic-global positioning system (RTK-GPS), high precision inertial measurement unit (IMU), and distance measurement instrument (DMI) as a positioning sensor.

Table 1 summarizes the specifications of these sensors.

The mapping and positioning sensors are mounted on an off-the-shelf vehicle as shown in

Figure 7. 3D structures surrounding the vehicle is obtained by registering 3D points acquired by the ranging sensor using 6D positions produced by the positioning sensor while the vehicle is moving. The obtained 3D points include 3D locations and infrared reflectivities. The monocular camera used to detect the lane endpoints is mounted on the vehicle equipped with the MMS, and the rigid transformation between the camera and MMS is precalibrated. Since an image is captured by being triggered by the positioning sensor, the precise camera position at the time of capturing the image are stored.

Figure 8a,b show an example 3D structure and image captured by the MMS and camera, respectively. The red point and blue arrow in

Figure 8a indicate the camera position at the time when the image in

Figure 8b is captured.

4.3. Acquisition of Lane Endpoint Ground Truth

To evaluate the position accuracy of the lane endpoint, the ground truth position of the lane endpoint is manually designated using the 3D points obtained by the MMS. Since the manually designated position is in the 3D point cloud coordinate system, it is converted into the camera coordinate system using the precise camera position at the time of capturing the image, which is provided by the MMS. The position accuracy is evaluated by comparing the ground truth position and the position produced by the proposed method.

To manually designate the lane endpoints in the 3D points, roads and lanes should be visually distinguishable. However, since the roads and lanes are located on the same plane, it is hard to distinguish between them using their 3D information. Thus, this paper utilizes the infrared reflectivities provided by the LIDAR of the MMS to visually distinguish the roads and lanes. The 3D points of the lanes have high infrared reflectivities compared with the roads because they are drawn with a highly reflective paint. The positions of the lane endpoints are manually designated by displaying the 3D points based on their infrared reflectivities.

Figure 9a shows the displayed 3D points based on their infrared reflectivities. In this figure, the larger the infrared reflectivity, the darker the 3D point is drawn. It can be noticed that the lanes are darker than the roads. This paper randomly selected 100 camera positions from approximately 40 km of highway, and manually designated four lane endpoints from the closest pair of dashed lanes.

Figure 9a shows the 3D points around a randomly chosen camera position, which is depicted by a red point. In this figure, two green dashed lines indicate the selected dashed lane pair, which is closest to the camera position.

Figure 9b shows an enlargement of one of the two selected lanes. Since the proposed method detects the center of the lane end as shown in

Figure 5, the same location should be designated in the 3D points. To this end, the left and right corners of the lane end are manually designated and their center location is used as the ground truth of the lane endpoint.

Figure 9c shows an example of the lane endpoint designation. In this figure, two blue points indicate two manually designated locations and a green point indicates the center of the two blue points, which is the ground truth of the lane endpoint.

4.4. Evaluation of Lane Endpoint Position Accuracy

Since the position of the lane endpoint is calculated with respect to the camera position in

Section 3.4, its ground truth position obtained in

Section 4.3 should be transformed into the camera coordinate system. To this end, the manually designated ground truth,

GP in the point cloud coordinate system is first transformed into the camera coordinate system,

GC as:

where R

P2C and

tP2C are the rotation matrix and translation vector that represent a rigid transformation between the point cloud and camera coordinate systems. R

P2C and

tP2C are obtained from the high precision positioning sensor of the MMS.

GC is manipulated as:

where R

B2C and

t′

B2C are the rotation matrix and the translation vector that represent a rigid transformation between the camera and checkerboard coordinate systems, which has been already explained in (8) and (9).

XG and

ZG are the ground truth position of the lane endpoint in lateral and longitudinal directions, respectively. The position accuracy of the lane endpoint is calculated by comparing (

XG and

ZG) in (12) and (

XC and

ZC) in (10).