1. Introduction

By the end of the twentieth century, major breakthroughs in space technology and information technology had significantly changed the field of surveying and mapping. The development of Earth observation systems continues to change the nature of surveying and mapping products as well as the methods for updating maps. As a result, satellite images have become an important source of information in addition to aerial photogrammetry. Among optical satellite mapping methods, the multiline array stereo mode and the agile stereo mode are two of the most common methods for acquiring stereo images.

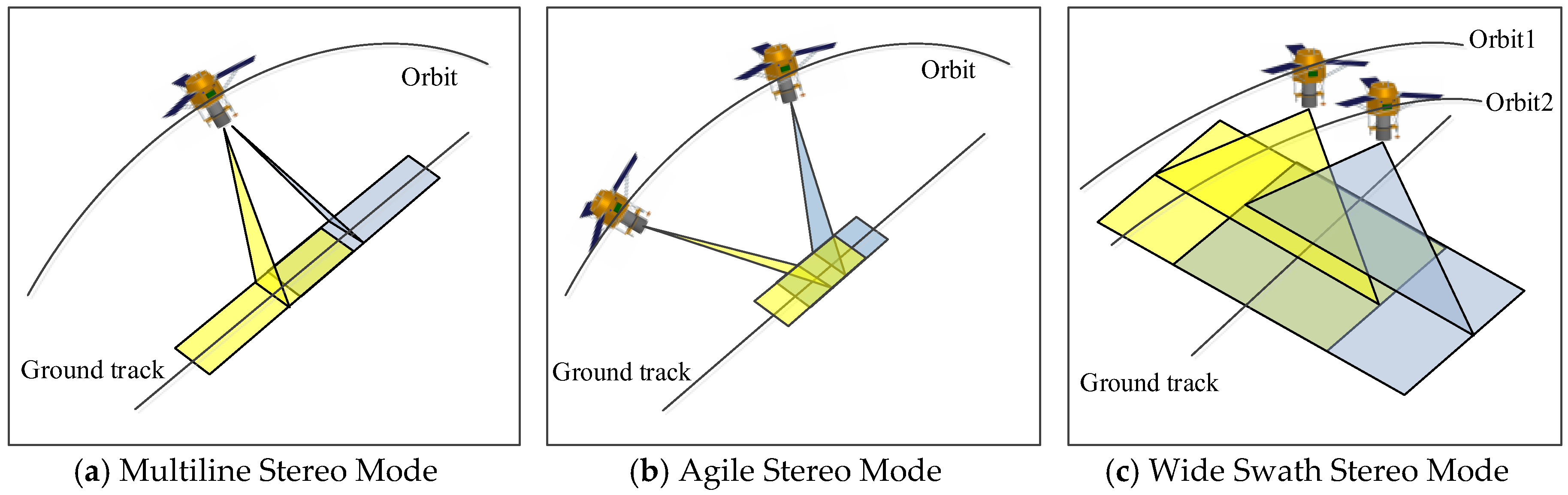

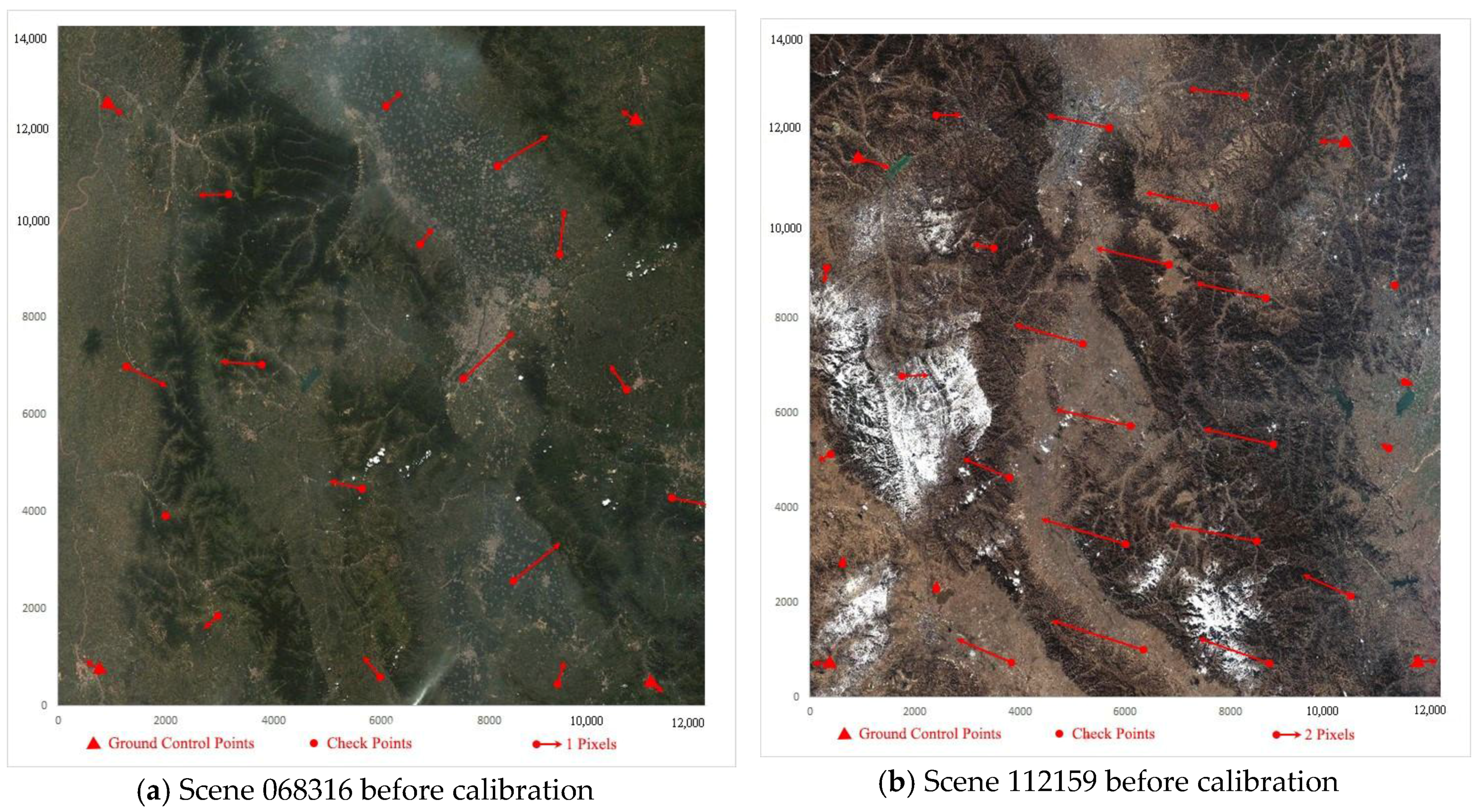

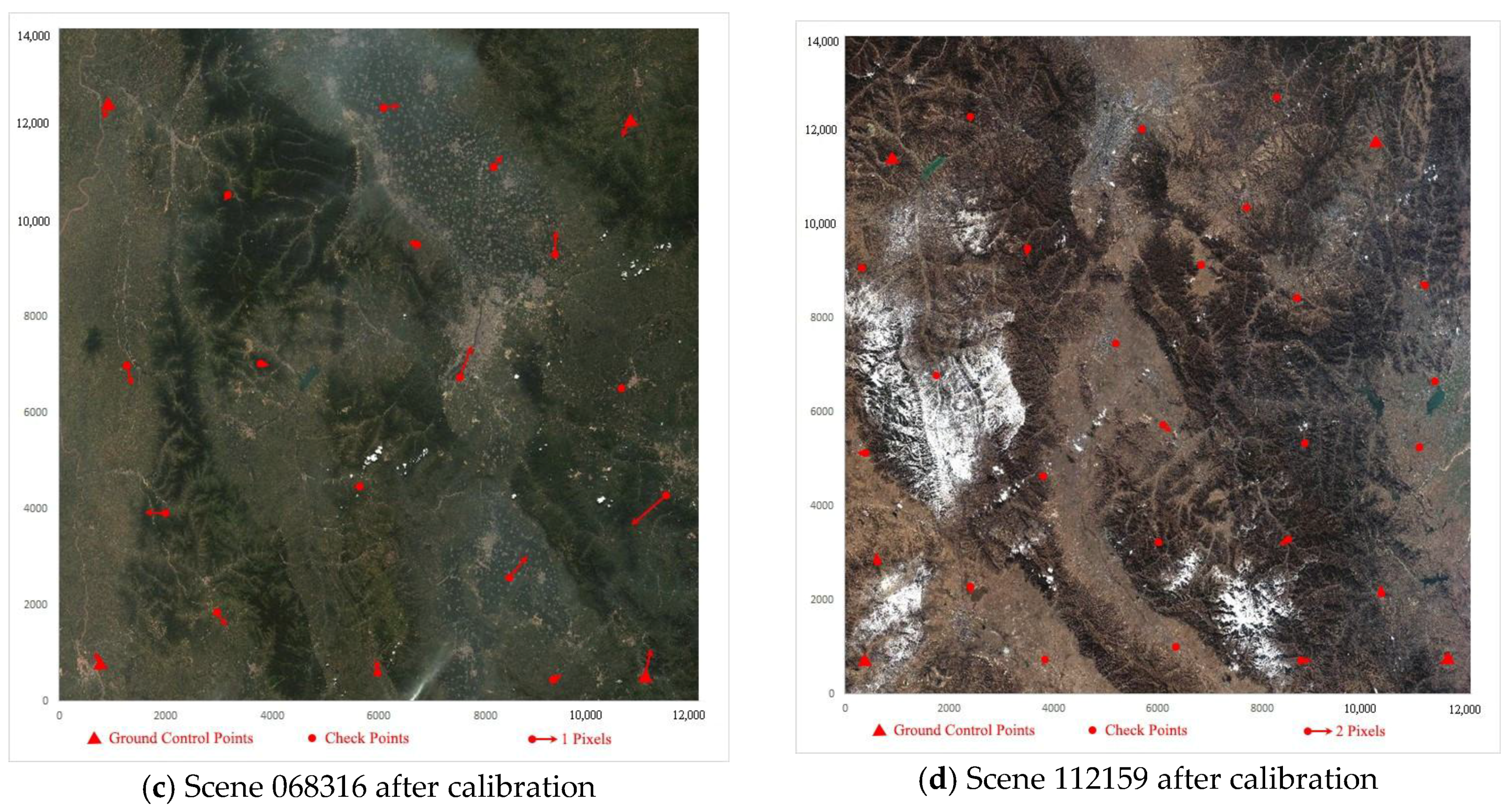

As shown in Figure 1a, the multiline array stereo mode uses multiline array cameras to image the surface and acquire multiple images at different angles, baselines, and overlapping areas. Because this method acquires strip images along the track, it can survey and map a wide area. The SPOT-5 HRS camera [1,2,3,4] and the Terra ASTER camera [5,6] use the two-line array stereo mode, whereas the Ziyuan-3 triple linear-array camera [7,8] and the MappingSatellite-1 camera [9,10,11] adopt the three-line array stereo mode. However, because of the narrow swath of multiline array cameras (generally less than 50 km), the revisit period may be as long as two or three months, which results in low temporal resolution. In short, the multiline array stereo mode offers wide spatial coverage but low temporal resolution.

As shown in Figure 1b, the agile stereo mode uses a single camera to observe the same area from different angles, forming a stereo image pair from which stereo information is obtained. Using attitude maneuvers about the satellite's pitch or roll axis, this mode typically observes the same area two or more times from different angles. Relying on high satellite agility, the agile stereo mode can make rapid stereo observations of an area (generally within a few seconds along the track or a few hours across the track). IKONOS [12], GeoEye [13], QuickBird-2 [14], WorldView [15], SPOT-6 and 7 [16], and Pleiades [17,18,19] use this stereo mode for surveying and mapping. However, because it depends on attitude maneuvers, the agile stereo mode cannot easily acquire a complete strip of stereo images covering a broad area and usually focuses on a single area, such as a city. In short, the agile stereo mode offers high temporal resolution but narrow spatial coverage.

The trade-off between temporal resolution and spatial coverage in these two modes therefore limits many remote sensing applications, such as rapid updating of medium-scale topographic maps and global change detection, which often require both wide spatial coverage and high temporal resolution. In June 2009 and October 2011, two versions of the Global Digital Elevation Model (GDEM) were released from Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) data. Although the ASTER GDEM achieves a global 30-m resolution, meeting the requirements of 1:250,000-scale topographic mapping, its temporal resolution is poor, which makes it difficult to rapidly update maps and detect global change.

In this study, we show how the wide field of view and short revisit period of the wide swath stereo mode can address this issue. As shown in Figure 1c, the wide swath stereo mode uses one camera to observe the same area from different orbits, typically two or more times, and forms a stereo image pair from the wide field of view of the camera without requiring attitude agility. Compared with classic stereo modes, the wide swath stereo mode relies on a wide swath (e.g., 800 km) to obtain numerous stereo observations of an area rapidly (generally within a few days) while providing wide coverage for surveying and mapping. In short, the wide swath stereo mode offers both wide spatial coverage and high temporal resolution, which can meet the demands of rapid map updating and global change detection.

Although high-resolution wide swath images remain uncommon because of the limitations of satellite camera hardware, the Gaofen-1 (GF-1) wide-field-view (WFV) images, with a total swath width of 800 km, a multispectral resolution of 16 m, and a revisit period of four days [20,21], are used to implement the wide swath stereo mode. Lens calibration to correct radial distortion has been used in generating digital surface models (DSMs) from SPOT-5 [22], and GF-1 WFV images likewise contain nonlinear systematic errors. Calibration is therefore necessary for computing accurate GF-1 3D stereo models and DSMs. In this paper, we first present the key processes of the wide swath stereo mode with calibration. Then, we report GF-1 WFV experiments that demonstrate the improvement in DSM accuracy after calibration and the validity of the wide swath stereo mode, which are the aims of this research. Finally, we present a discussion and concluding remarks.

2. Materials and Methods

2.1. Overview of GF-1 WFV

GF-1 is the first satellite of the Chinese high-resolution Earth observation system. Its main purpose is to achieve major technological breakthroughs in areas such as optical remote sensing (high spatial, multispectral, and high temporal resolution), multi-image mosaicking and fusion, high-precision and high-stability attitude control, high-reliability low-orbit satellites, and high-resolution data processing and application [20].

The GF-1 satellite design parameters are shown in Table 1. The satellite is in a sun-synchronous orbit and carries two high-resolution (HR) cameras and four WFV cameras. The nadir resolution of the HR panchromatic camera is 2 m, and that of the HR multispectral camera is 8 m. The total swath of the HR cameras is 60 km, so the revisit period is typically 41 days. The WFV cameras have a nadir resolution of 16 m over a total swath of 800 km and a revisit period of 4 days.

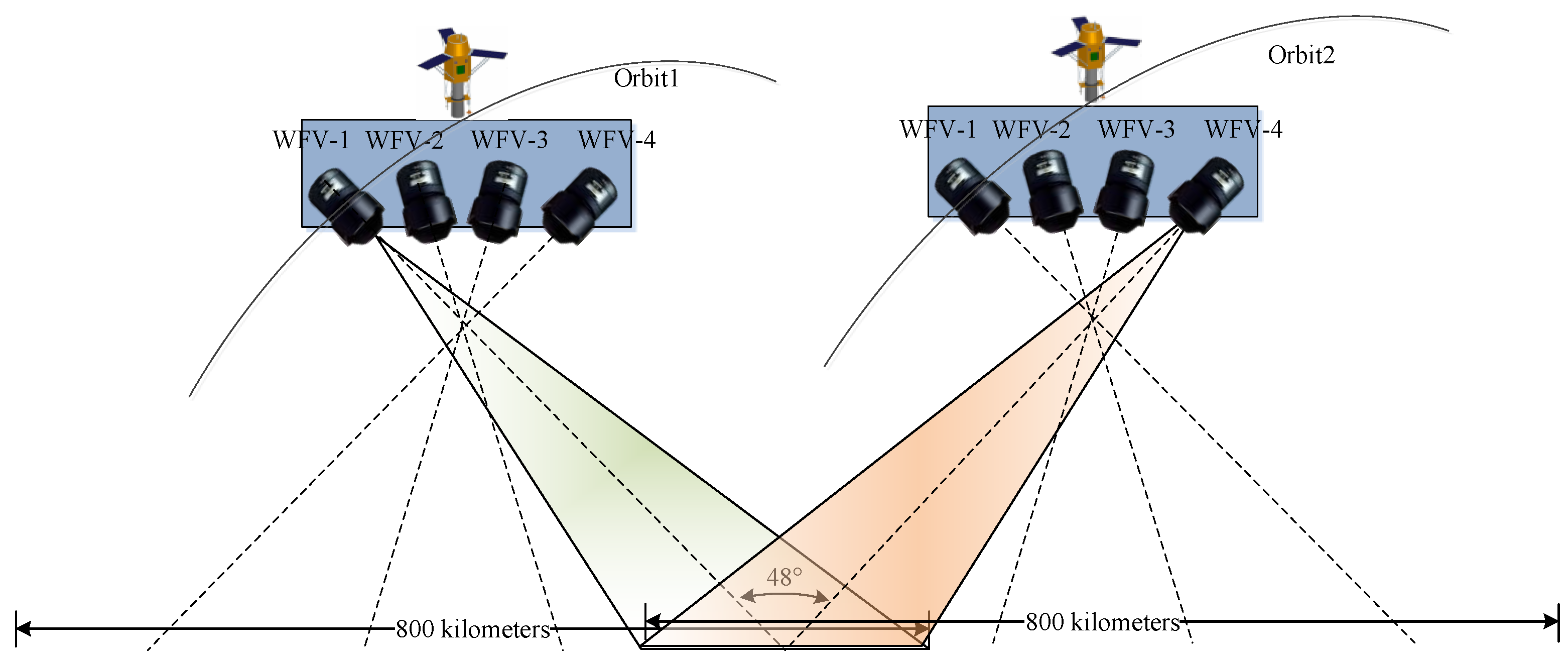

In this study, we use the WFV cameras, whose field-of-view layout is shown in Figure 2. The field of view (FOV) of each camera is 16.44°, and the overlapping FOV between adjacent cameras is 0.44°. The angle between the center lines of sight of WFV-1 and WFV-4 is up to 48°. Given this wide swath geometry, WFV-1 and WFV-4 can be used for stereo mapping.
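The 48° figure follows from the FOV values above if the four cameras are assumed to sit side by side with equal 0.44° overlaps: adjacent center lines of sight are then 16.44° - 0.44° = 16° apart, and WFV-1 and WFV-4 are three such steps apart. The short check below is a sketch under that symmetric-layout assumption; `wfv_geometry` is a hypothetical helper, not part of any GF-1 processing software.

```python
def wfv_geometry(fov_deg: float, overlap_deg: float, n_cameras: int = 4):
    """Return (total FOV, angle between the outermost cameras' center lines of sight).

    Assumes identical cameras placed side by side, each adjacent pair
    sharing the same angular overlap (hypothetical helper, for illustration).
    """
    step = fov_deg - overlap_deg                      # offset between adjacent camera centers
    total_fov = n_cameras * fov_deg - (n_cameras - 1) * overlap_deg
    outer_center_angle = (n_cameras - 1) * step       # WFV-1 center to WFV-4 center
    return total_fov, outer_center_angle


total_fov, convergence = wfv_geometry(16.44, 0.44)
theta = convergence / 2                               # swing angle used in Section 2.3
print(f"total FOV {total_fov:.2f} deg, convergence {convergence:.2f} deg, theta {theta:.2f} deg")
```

Half of this convergence angle is the swing angle θ used later in the accuracy analysis.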

However, because the GF-1 WFV camera is intended primarily for land and resource surveys, the nonlinear systematic errors of the images, especially distortion, received less attention in camera design and data processing. These errors would seriously degrade stereo mapping, so the WFV cameras must be calibrated in advance to obtain distortion-free images. Then, the intersection accuracy between WFV-1 and WFV-4 should be analyzed to demonstrate the feasibility of the stereo acquisition. Finally, the processing procedure for wide swath stereo mapping using GF-1 WFV images must be specified.

2.2. Calibration

To acquire a high-accuracy DSM, the nonlinear systematic distortion in the GF-1 WFV images should be detected and compensated for in advance. Traditional calibration methods usually require a high-accuracy geometric calibration field (GCF) that covers the entire image across the satellite path to acquire sufficient ground control points (GCPs) [17,23,24]. However, because of the wide swath of the GF-1 WFV images, it is difficult to obtain enough GCPs from a GCF to cover all rows of one GF-1 WFV image, especially given the high construction costs and site constraints of a GCF.

Huang et al. [25] proposed a multicalibration image method to solve the GF-1 WFV image calibration problem. In this method, calibration images are collected at different times so that different rows of the images are covered by the GCF. The GCPs covering all rows can then be obtained and used with the modified calibration model to detect distortion. Experiments show that this method improves the GF-1 WFV image orientation accuracy from several pixels to about 1 pixel, eliminating nearly all of the nonlinear distortion. In this study, we use this method to detect and correct the distortion in the GF-1 WFV-1 and WFV-4 images.
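The key idea of the multicalibration image method is that the GCP coverage of several images, pooled together, spans all rows even though no single image does. A hypothetical sketch of that coverage bookkeeping follows; the image names, detector ranges, and the 12,000-pixel width are illustrative assumptions rather than GF-1 values, and the sketch does not perform the calibration itself.

```python
def merge_intervals(intervals):
    """Merge half-open [start, end) intervals into a sorted, non-overlapping list."""
    merged = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)   # overlaps or touches previous: extend it
        else:
            merged.append([start, end])
    return [tuple(iv) for iv in merged]


def uncovered(intervals, width):
    """Return the gaps in [0, width) not covered by any interval."""
    gaps, cursor = [], 0
    for start, end in merge_intervals(intervals):
        if start > cursor:
            gaps.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < width:
        gaps.append((cursor, width))
    return gaps


# Hypothetical: each calibration image sees the GCF over a different range of rows.
coverage = {
    "image_A": [(0, 4500)],
    "image_B": [(4000, 9000)],
    "image_C": [(8500, 12000)],
}
all_ranges = [iv for ivs in coverage.values() for iv in ivs]
print(uncovered(all_ranges, width=12000))   # empty list: every row has GCPs
```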

The calibration model for the linear-array sensor is established based on [7]:

$$
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = \begin{bmatrix} X_S(t) \\ Y_S(t) \\ Z_S(t) \end{bmatrix} + m\,R(t)\,R_U \begin{bmatrix} x + \Delta x \\ y + \Delta y \\ 1 \end{bmatrix} \tag{1}
$$

where $[X_S(t), Y_S(t), Z_S(t)]^T$ indicates the satellite position in the geocentric Cartesian coordinate system, and $R(t)$ is the rotation matrix converting the body coordinate system to the geocentric Cartesian coordinate system. Both are functions of time and, therefore, functions of the scan line. Here, $[x, y, 1]^T$ represents the ray direction in the body coordinate system with the z-coordinate fixed to 1. Furthermore, $m$ denotes the unknown scaling factor, and $[X, Y, Z]^T$ is the ground position of the pixel in the geocentric Cartesian coordinate system. $R_U$ is the offset matrix that compensates for the exterior errors, and $(\Delta x, \Delta y)$ denotes the interior distortion in the image space.
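To make the roles of the terms concrete, the sketch below evaluates the sensor model assuming the collinearity form X = X_S(t) + m R(t) R_U [x + Δx, y + Δy, 1]^T. To obtain the scale factor m, it intersects the ray with a sphere of radius r, which is a simplifying assumption of this example (operational processing would use an ellipsoid or terrain model). `ground_point` and its arguments are hypothetical names.

```python
import numpy as np


def ground_point(sat_pos, R_t, R_U, ray_xy, distortion_xy, radius):
    """Evaluate X = X_S + m * R(t) @ R_U @ [x + dx, y + dy, 1] (assumed form).

    The scale factor m is found by intersecting the ray with a sphere of the
    given radius, a simplification for this example only.
    """
    d_body = np.array([ray_xy[0] + distortion_xy[0],
                       ray_xy[1] + distortion_xy[1],
                       1.0])                          # ray with z fixed to 1 in the body frame
    u = R_t @ R_U @ d_body                            # same ray in the geocentric frame
    S = np.asarray(sat_pos, dtype=float)
    # |S + m u|^2 = r^2  ->  (u.u) m^2 + 2 (S.u) m + (S.S - r^2) = 0
    a, b, c = u @ u, 2.0 * (S @ u), S @ S - radius ** 2
    disc = b * b - 4.0 * a * c
    if disc < 0:
        raise ValueError("ray does not reach the sphere")
    m = (-b - np.sqrt(disc)) / (2.0 * a)              # nearer root: the visible surface
    return S + m * u, m


# Hypothetical nadir-looking example: body z axis points toward the Earth center.
r, h = 6371000.0, 644500.0                            # sphere radius and flight height (m)
R_nadir = np.diag([1.0, -1.0, -1.0])                  # 180 deg rotation about X
P_nadir, m_nadir = ground_point([0.0, 0.0, r + h], R_nadir, np.eye(3),
                                (0.0, 0.0), (0.0, 0.0), r)
P_off, _ = ground_point([0.0, 0.0, r + h], R_nadir, np.eye(3),
                       (0.01, -0.02), (1e-5, 0.0), r)
```

For the nadir ray the recovered scale factor equals the flight height, because the body ray has unit length.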

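$R_U$ can be expanded by introducing additional variables [26,27,28]:

$$
R_U = \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\omega & -\sin\omega \\ 0 & \sin\omega & \cos\omega \end{bmatrix} \begin{bmatrix} \cos\varphi & 0 & \sin\varphi \\ 0 & 1 & 0 \\ -\sin\varphi & 0 & \cos\varphi \end{bmatrix} \begin{bmatrix} \cos\kappa & -\sin\kappa & 0 \\ \sin\kappa & \cos\kappa & 0 \\ 0 & 0 & 1 \end{bmatrix} \tag{2}
$$

where $\omega$, $\varphi$, and $\kappa$ are rotations about the X, Y, and Z axes of the body coordinate system, respectively, and should be estimated to eliminate the exterior errors. Because images collected at different times have different exterior errors, each image has its own $R_U$, so the number of $R_U$ matrices equals the number of images.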
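One way to build $R_U$ from the three small rotation angles is as a product of elementary rotations about X, Y, and Z. The multiplication order R_X(ω)R_Y(φ)R_Z(κ) used below is an assumption of this sketch, as are the illustrative angle values; because exterior errors differ between acquisitions, one matrix is kept per calibration image.

```python
import numpy as np


def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def offset_matrix(omega, phi, kappa):
    """R_U = R_X(omega) @ R_Y(phi) @ R_Z(kappa); the order is an assumption."""
    return rot_x(omega) @ rot_y(phi) @ rot_z(kappa)


# Hypothetical small exterior-error angles (radians), one set per calibration image.
angles = {"image_1": (1e-4, -2e-4, 5e-5), "image_2": (-3e-4, 1e-4, 0.0)}
R_U = {name: offset_matrix(*a) for name, a in angles.items()}
```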

As mentioned above, multicalibration images are collected at different times and have different exterior errors (although the installation errors may be the same) but the same interior error. The strong correlation between the exterior and interior errors inevitably affects the interior error estimated from each calibration image. The apparent interior error in the image space therefore varies among calibration images and is difficult to fit with the classical fifth-order polynomial model [25].
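For reference, the classical model expresses each distortion component as a fifth-order polynomial in the across-track coordinate s. A minimal least-squares fit of that classical form is sketched below; it is not the modified model of [25], whose per-image terms are not reproduced here, and the coordinate range and coefficients are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def fit_distortion(s, residual, order=5):
    """Fit delta(u) = c0 + c1*u + ... + c5*u**5 to per-GCP residuals by least squares,
    where u is s normalized to [-1, 1] to keep high-order terms well conditioned."""
    s = np.asarray(s, dtype=float)
    center, half = (s.max() + s.min()) / 2, (s.max() - s.min()) / 2
    u = (s - center) / half
    coeffs = P.polyfit(u, residual, order)          # ascending order: c0 ... c5
    return coeffs, center, half


def eval_distortion(s, coeffs, center, half):
    return P.polyval((np.asarray(s, dtype=float) - center) / half, coeffs)


# Synthetic check with hypothetical coefficients (in normalized coordinates).
true_coeffs = np.array([0.5, -1.2, 0.3, 0.8, -0.1, 0.05])
s_obs = np.linspace(0.0, 12000.0, 200)              # across-track pixel positions
resid = P.polyval((s_obs - 6000.0) / 6000.0, true_coeffs)
coeffs, center, half = fit_distortion(s_obs, resid)
```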

Additional parameters are therefore introduced into the polynomial, giving the modified polynomial model of [25] (Equation (3)). In this model, the distortion components Δx and Δy are expressed as functions of s, the image coordinate across the track; n is the number of calibration images; and the additional parameters are the modifications for each calibration image (except for the base image). Note that the underlying distortion is the same for images collected at different times; the additional parameters account for the per-image differences caused by the correlation with the exterior errors.

Based on Equations (1)–(3), the functional relationship between an image point and the calibration parameters can be written compactly as Equation (4), which is the basic calibration model of the method.

Taking partial derivatives of Equation (4) with respect to the calibration parameters and linearizing yields the error equation:

$$
V = A\,t - L \tag{5}
$$

where $V$ is the residual vector, $t$ represents the correction to the calibration parameters of the images, $A$ is the coefficient matrix of the error equation, and $L$ is the constant vector. Equation (5) is the basic error equation of the method.

The corresponding normal equation of Equation (5) is

$$
A^{T}A\,t = A^{T}L \tag{6}
$$

and the correction $t$ to the calibration parameters follows from Equation (6) as

$$
t = \left(A^{T}A\right)^{-1}A^{T}L \tag{7}
$$

After the correction $t$ is calculated, the calibration parameters can be updated.
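As is usual for a linearized model, the solution of Equations (5)–(7) is typically iterated: linearize, solve the normal equation for t, update, and repeat. The minimal sketch below assumes unweighted observations and uses a hypothetical toy model y = a·exp(bx) in place of the calibration model of Equation (4), only to show the update loop.

```python
import numpy as np


def normal_equation_correction(A, L):
    """Solve the normal equation A^T A t = A^T L for the correction t."""
    return np.linalg.solve(A.T @ A, A.T @ L)


def adjust(params, model, jacobian, observed, tol=1e-12, max_iter=50):
    """Iterative least squares: linearize, solve for t, update, repeat."""
    p = np.asarray(params, dtype=float).copy()
    for _ in range(max_iter):
        A = jacobian(p)                  # coefficient matrix of the error equation V = A t - L
        L = observed - model(p)          # constant vector: observed minus computed
        t = normal_equation_correction(A, L)
        p += t                           # update the parameters with the correction
        if np.linalg.norm(t) < tol:
            break
    return p


# Hypothetical toy model y = a * exp(b * x), standing in for Equation (4).
x = np.linspace(0.0, 2.0, 10)
y_obs = 2.0 * np.exp(0.5 * x)


def toy_model(p):
    return p[0] * np.exp(p[1] * x)


def toy_jacobian(p):
    return np.column_stack([np.exp(p[1] * x), p[0] * x * np.exp(p[1] * x)])


p_est = adjust([1.8, 0.45], toy_model, toy_jacobian, y_obs)
```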

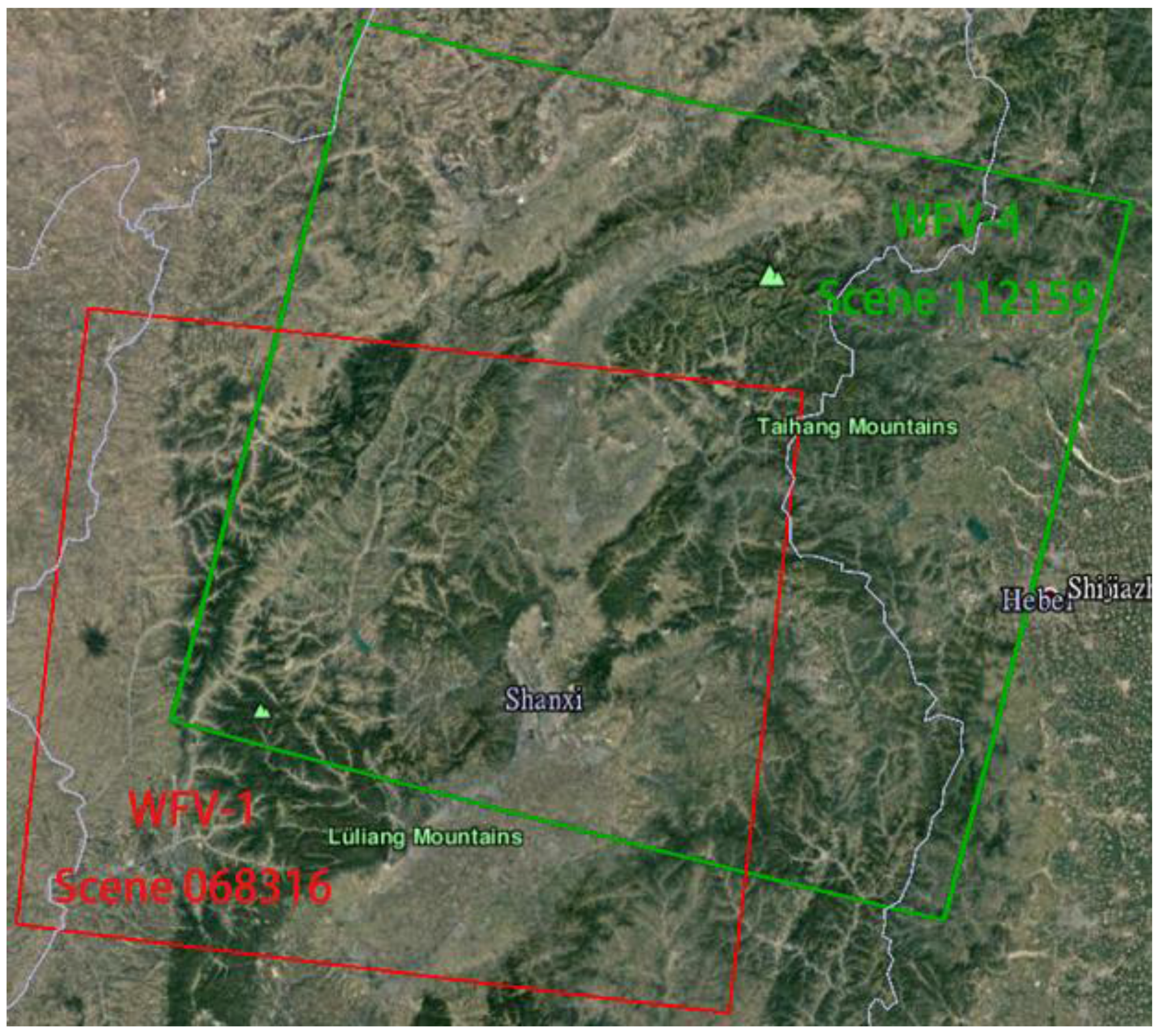

2.3. 3D Stereo Model and Analysis

The stereo partners for the 3D stereo model are the GF-1 WFV-1 and WFV-4 images acquired from different orbits with common coverage (Figure 3). Corresponding points acquired by the semi-global matching (SGM) method [29] make it possible to reconstruct the 3D location of each object point on the terrain. Forward intersection is performed via iterative least squares adjustment using 2n observation equations for n stereo partners [30,31]. In this research on Gaofen-1, n is 2, so four equations are established per stereo tie point to derive the three object space coordinates (planimetry and elevation), leaving one redundant observation. The initial values of the object space coordinates are derived from an affine transformation using the corner coordinates given by the image provider, and the initial height values are taken from the mean height of the study area. Convergence is normally achieved after several iterations.
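The forward intersection in [30,31] is an iterative adjustment of 2n image-space observation equations. As a simpler stand-in that illustrates the same geometric idea, the sketch below uses a different technique: the linear least-squares point closest to the viewing rays in object space. The ray origins and directions are assumed to come from the oriented sensor model, and all coordinates in the example are hypothetical.

```python
import numpy as np


def intersect_rays(centers, directions):
    """Point minimizing the summed squared distance to a set of 3D rays."""
    M = np.zeros((3, 3))
    b = np.zeros(3)
    for C, d in zip(centers, directions):
        d = np.asarray(d, dtype=float)
        d = d / np.linalg.norm(d)
        proj = np.eye(3) - np.outer(d, d)       # projects onto the plane normal to the ray
        M += proj
        b += proj @ np.asarray(C, dtype=float)
    return np.linalg.solve(M, b)                # singular if all rays are parallel


# Two hypothetical viewing rays converging on one ground point (meters).
target = np.array([1000.0, 2000.0, 0.0])
centers = [np.array([-300000.0, 0.0, 644500.0]), np.array([300000.0, 0.0, 644500.0])]
X_hat = intersect_rays(centers, [target - c for c in centers])
```

With exact rays the recovered point equals the target; with noisy rays it returns the least-squares compromise, which for two skew rays is the midpoint of their common perpendicular.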

According to [32,33], the ratio R between the vertical and horizontal accuracy is

$$
R = \frac{v_{\mathrm{error}}}{h_{\mathrm{error}}} = \frac{H}{S} \tag{8}
$$

where $h_{\mathrm{error}}$ represents the horizontal error, $v_{\mathrm{error}}$ represents the vertical error, $S$ is the baseline length, and $H$ is the flight height. The horizontal error can be calculated as follows:

$$
h_{\mathrm{error}} = \left(e_c + e_m\right) \cdot \mathit{res} \tag{9}
$$

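The flight height of GF-1 is 644.5 km, and the baseline between WFV-1 and WFV-4 is approximately 600 km. The calibration accuracy $e_c$ is approximately 1 pixel, and the matching accuracy of corresponding points $e_m$ is approximately 0.5 pixels. Because the nadir resolution $\mathit{res}_{\mathrm{nad}}$ is approximately 16 m, the resolution $\mathit{res}$ of WFV-1 and WFV-4 is determined by Equation (10), considering the swing angle $\theta$ (half of the angle between the two camera center lines of sight, i.e., 24°):

$$
\mathit{res} = \frac{\mathit{res}_{\mathrm{nad}}}{\cos^{2}\theta} \tag{10}
$$

Thus, the horizontal and vertical errors for WFV-1 and WFV-4 are as follows:

$$
\begin{aligned}
h_{\mathrm{error}} &= (1 + 0.5) \times \frac{16\ \text{m}}{\cos^{2} 24^\circ} \approx 1.5 \times 19.2\ \text{m} \approx 28.8\ \text{m} \\
v_{\mathrm{error}} &= \frac{644.5}{600} \times h_{\mathrm{error}} \approx 30.9\ \text{m}
\end{aligned} \tag{11}
$$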

According to the stereo analysis, the planimetric and height accuracies for the GF-1 WFV-1 and WFV-4 cameras are approximately 29 m and 31 m, respectively. Because the calibration and matching accuracies are approximate, these values serve only as a reference and differ slightly from the actual accuracy at each pixel.
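The error budget can be checked numerically. The sketch below assumes that the calibration and matching errors add linearly, h_error = (e_c + e_m)·res, that the off-nadir resolution is res = res_nad / cos²θ, and that v_error = (H/S)·h_error; these forms are assumptions of the sketch, chosen because they reproduce the approximately 29 m and 31 m quoted above. The swing angle θ = 24° is half of the 48° angle between the WFV-1 and WFV-4 center lines of sight.

```python
import math


def wide_swath_accuracy(H_km, S_km, e_c_px, e_m_px, res_nad_m, theta_deg):
    """Error budget under the assumed forms:
    res = res_nad / cos^2(theta), h_error = (e_c + e_m) * res, v_error = (H / S) * h_error."""
    res = res_nad_m / math.cos(math.radians(theta_deg)) ** 2
    h_error = (e_c_px + e_m_px) * res        # pixel errors converted to meters
    v_error = (H_km / S_km) * h_error        # scaled by the height-to-baseline ratio
    return res, h_error, v_error


res, h, v = wide_swath_accuracy(644.5, 600.0, 1.0, 0.5, 16.0, 24.0)
print(f"res ~ {res:.1f} m, horizontal ~ {h:.1f} m, vertical ~ {v:.1f} m")
# prints: res ~ 19.2 m, horizontal ~ 28.8 m, vertical ~ 30.9 m
```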

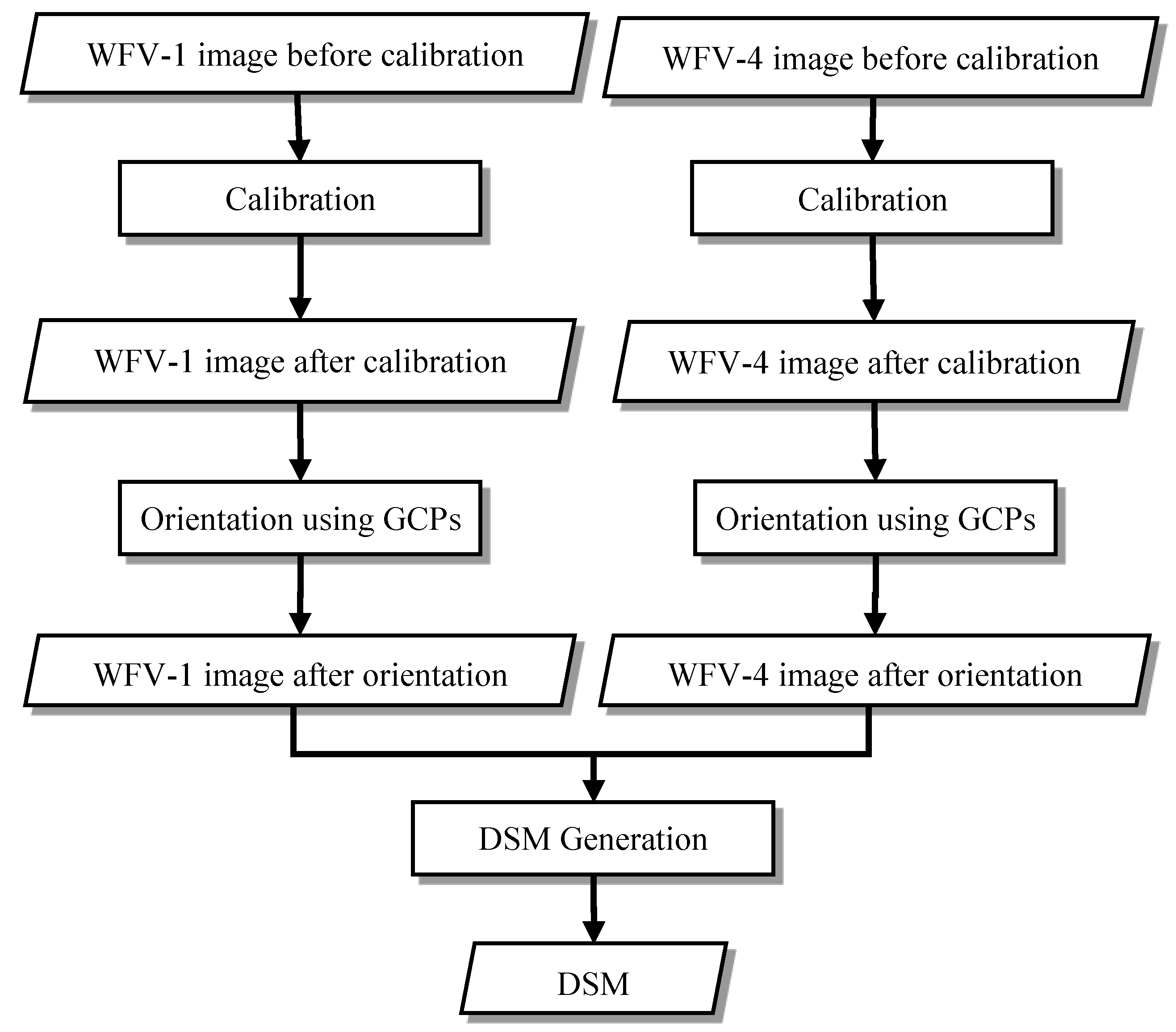

2.4. Processing Procedure

The process for wide swath stereo mapping using GF-1 WFV images is shown in Figure 4. There are three main processes: calibration, orientation using GCPs, and DSM generation. Calibration is applied to the raw WFV-1 and WFV-4 images, orientation using GCPs is applied to the calibrated images, and DSM generation uses the WFV-1 and WFV-4 images after orientation.

First, the calibration method in [25] is used to detect and correct the systematic nonlinear distortion and to produce post-calibration images. Note that distortion detection is performed only once for each camera, after which the calibration parameters can be reused to compensate subsequent images.

Then, orientation using GCPs is applied based on the affine model, the most common orientation model, yielding post-orientation images. This step must be performed on each image because the orientation error without GCPs differs from image to image. The orientation process eliminates most of the remaining random errors in the images.
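The affine orientation step can be sketched as an image-space correction fitted separately to each image from its GCPs. The form used below, x' = a0 + a1·x + a2·y and y' = b0 + b1·x + b2·y, is a common affine bias-compensation model but is assumed here rather than taken from this paper; the GCP coordinates and coefficients are synthetic.

```python
import numpy as np


def fit_affine(measured, reference):
    """Least-squares affine correction from GCPs:
        x_ref = a0 + a1*x + a2*y,   y_ref = b0 + b1*x + b2*y
    measured: GCP image coordinates predicted by the calibrated sensor model.
    reference: observed image coordinates of the same GCPs."""
    measured = np.asarray(measured, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if len(measured) < 3:
        raise ValueError("need at least 3 GCPs for 6 affine parameters")
    A = np.column_stack([np.ones(len(measured)), measured[:, 0], measured[:, 1]])
    a, *_ = np.linalg.lstsq(A, reference[:, 0], rcond=None)
    b, *_ = np.linalg.lstsq(A, reference[:, 1], rcond=None)
    return a, b


def apply_affine(points, a, b):
    points = np.asarray(points, dtype=float)
    A = np.column_stack([np.ones(len(points)), points[:, 0], points[:, 1]])
    return np.column_stack([A @ a, A @ b])


# Synthetic, hypothetical GCPs for one image.
rng = np.random.default_rng(0)
measured = rng.uniform(0.0, 12000.0, size=(10, 2))
a_true = np.array([3.2, 1.0001, -0.0002])
b_true = np.array([-1.5, 0.0003, 0.9998])
observed = apply_affine(measured, a_true, b_true)
a_est, b_est = fit_affine(measured, observed)
```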

Finally, the calibration parameters for camera distortion and the exterior orientation parameters from the affine model are passed to DSM generation. The SGM method [29] is used to acquire the corresponding points and the point cloud, from which the DSM is generated. Note that the WFV-1 and WFV-4 images must overlap for this method to be applied.
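SGM [29] aggregates pixelwise matching costs along several one-dimensional paths (typically 8 or 16), with a small penalty P1 for disparity changes of one and a larger penalty P2 for larger jumps. The sketch below shows only a single left-to-right path for one image row; the toy cost volume and the P1 and P2 values are hypothetical, and a full implementation would sum several paths before selecting disparities.

```python
import numpy as np


def aggregate_path(cost, P1, P2):
    """One-path SGM aggregation along a row (left to right).

    cost: array (width, n_disp) of matching costs.
    L(x,d) = C(x,d) + min(L(x-1,d), L(x-1,d-1)+P1, L(x-1,d+1)+P1,
                          min_k L(x-1,k)+P2) - min_k L(x-1,k)
    """
    width, n_disp = cost.shape
    L = np.empty_like(cost, dtype=float)
    L[0] = cost[0]
    for x in range(1, width):
        prev = L[x - 1]
        prev_min = prev.min()
        from_lower = np.full(n_disp, np.inf)
        from_upper = np.full(n_disp, np.inf)
        from_lower[1:] = prev[:-1] + P1          # came from disparity d-1
        from_upper[:-1] = prev[1:] + P1          # came from disparity d+1
        best = np.minimum.reduce([prev, from_lower, from_upper,
                                  np.full(n_disp, prev_min + P2)])
        L[x] = cost[x] + best - prev_min         # subtracting prev_min keeps values bounded
    return L


# Toy cost volume: 5 pixels, 3 disparities; pixel 2 slightly prefers disparity 2.
cost = np.array([[10, 0, 10], [10, 0, 10], [10, 3, 2], [10, 0, 10], [10, 0, 10]], dtype=float)
L_agg = aggregate_path(cost, P1=5.0, P2=20.0)
print(cost.argmin(axis=1), L_agg.argmin(axis=1))
```

In this toy example the raw costs prefer disparity 2 at the third pixel, but aggregation pulls it back to the disparity of its neighbors, which is the smoothing effect SGM relies on.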

4. Conclusions

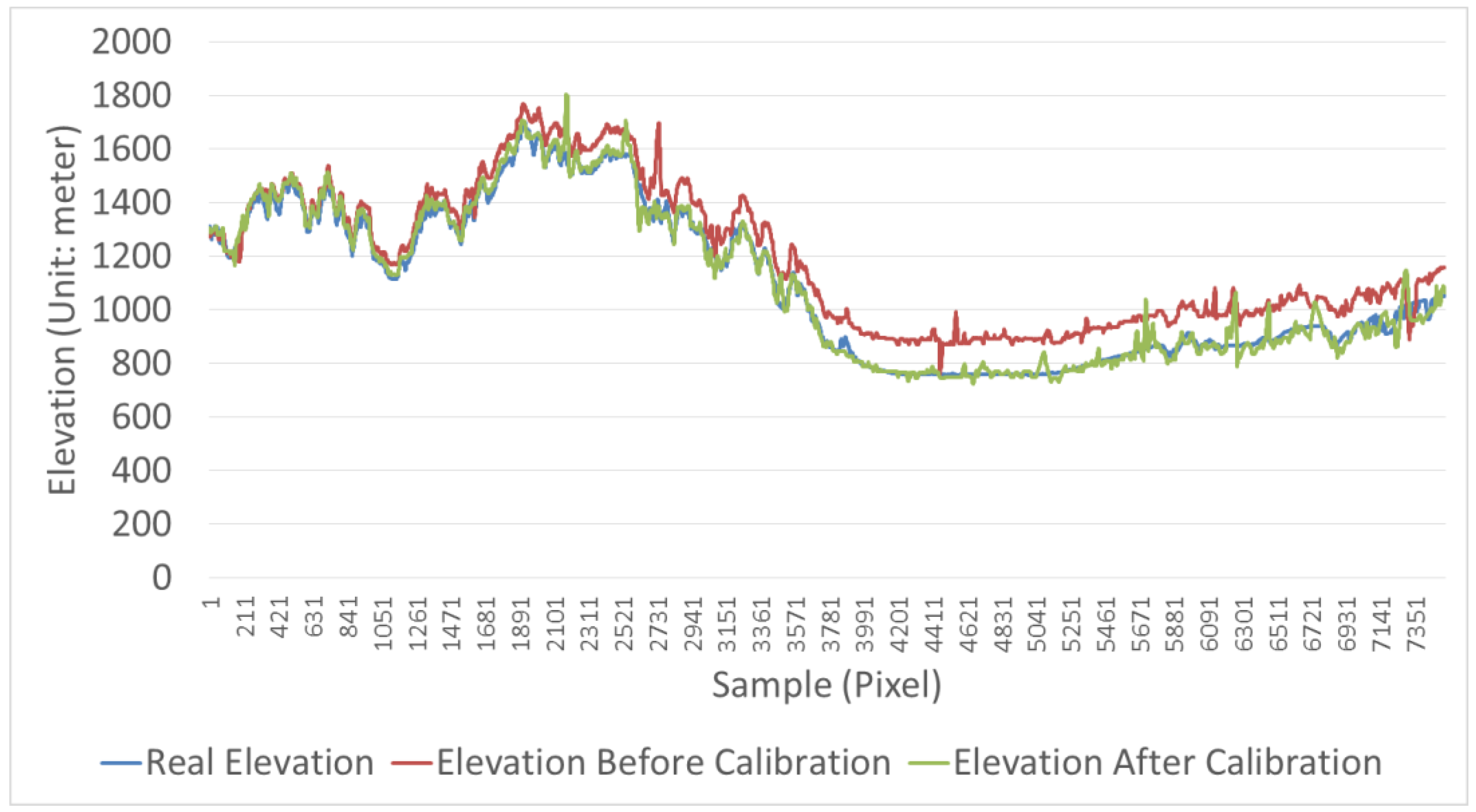

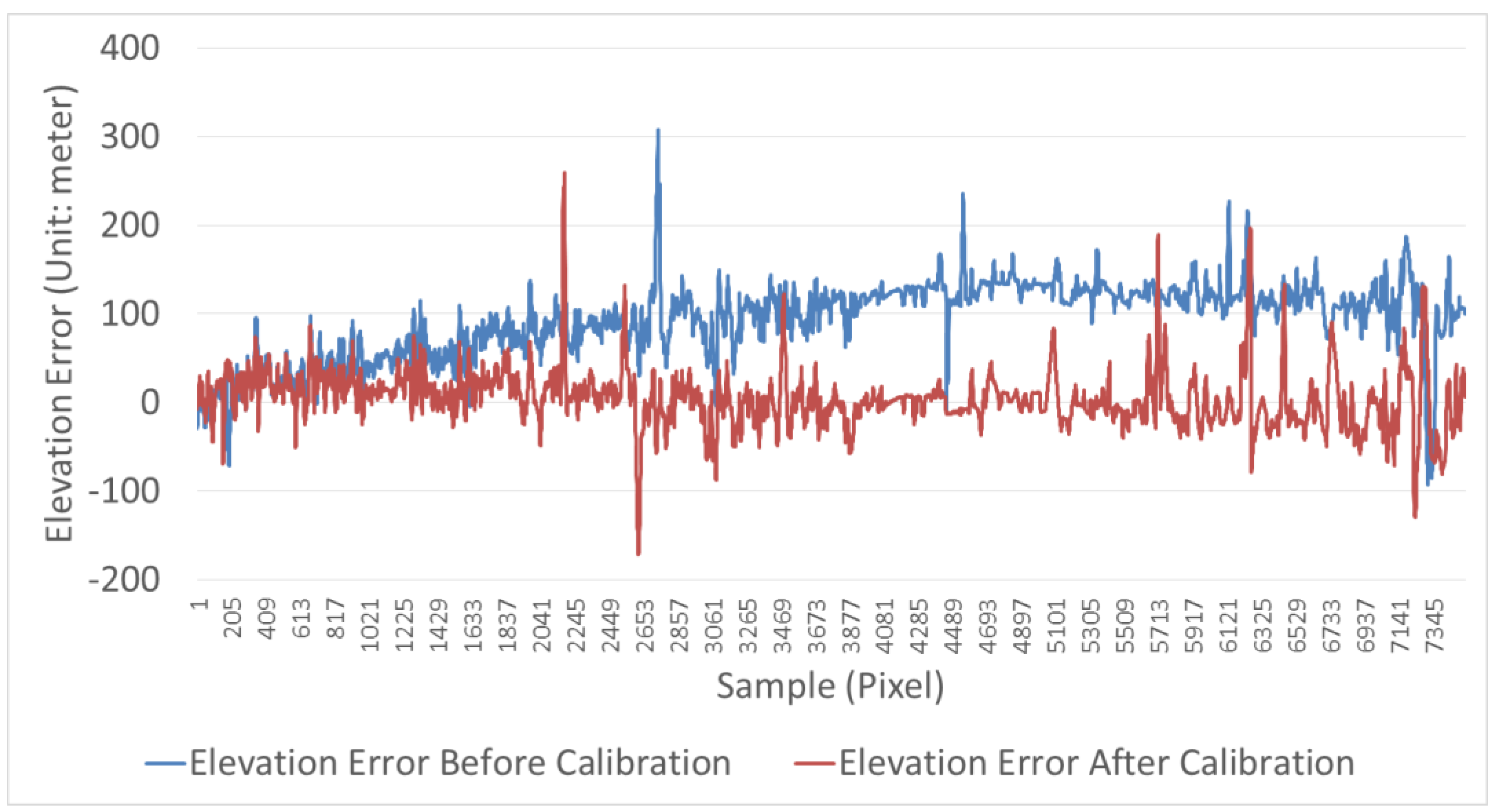

This paper proposes a wide swath stereo mode that is characterized by both wide spatial coverage and high temporal resolution. Compared with classical stereo modes, the wide swath stereo mode can obtain stereo images over a wider range within a shorter time. GF-1 WFV images, with a total swath of 800 km, a multispectral resolution of 16 m, and a revisit period of four days, are used in the experiments. Nonlinear systematic errors in the GF-1 WFV images are detected and compensated for in advance, and calibration brings a significant improvement in DSM accuracy. The results show that the wide swath stereo mode of the GF-1 WFV images can reach an elevation accuracy of 30 m for a DSM under proper calibration, which meets the requirements of 1:250,000-scale mapping and rapid topographic map updating and demonstrates the feasibility and efficacy of this mode for satellite imaging.

However, given the limited nadir resolution (16 m) and poor radiometric quality of the GF-1 WFV images, the 30 m elevation accuracy is still relatively low, although it is significantly improved after calibration. We suggest that, with higher-resolution wide swath images of better radiometric quality and proper calibration, the wide swath stereo mapping mode will deliver better results.