Dynamic Non-Rigid Objects Reconstruction with a Single RGB-D Sensor

Abstract

1. Introduction

2. Related Works

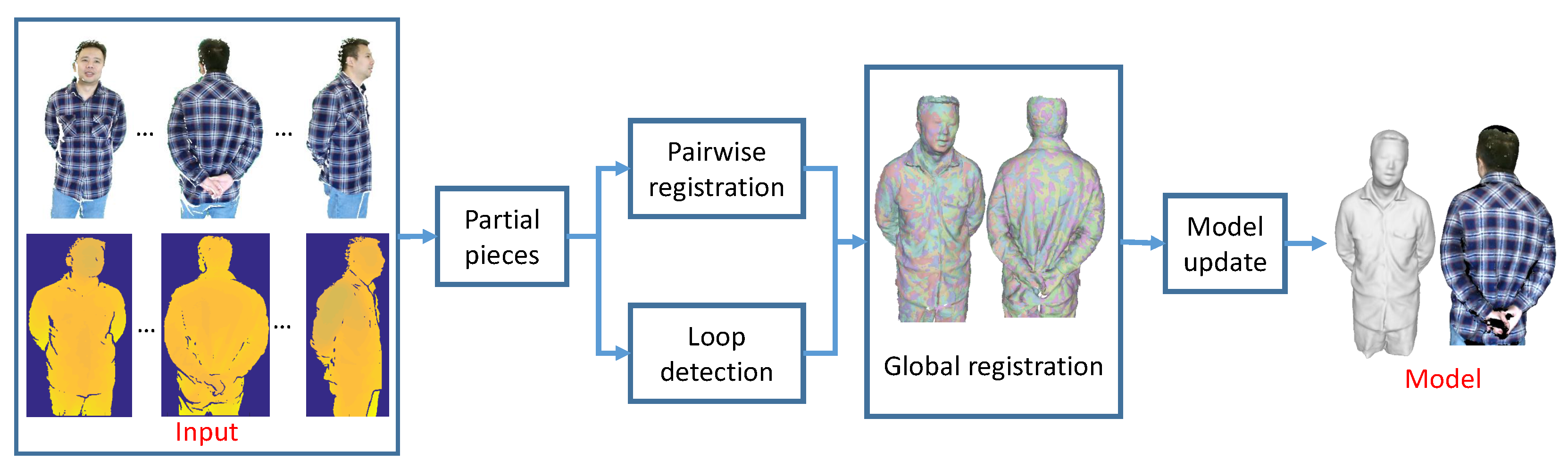

3. Pipeline

4. Our Approach

4.1. Partial Pieces Generation and Pairwise Non-Rigid Registration

4.1.1. Partial Pieces Generation

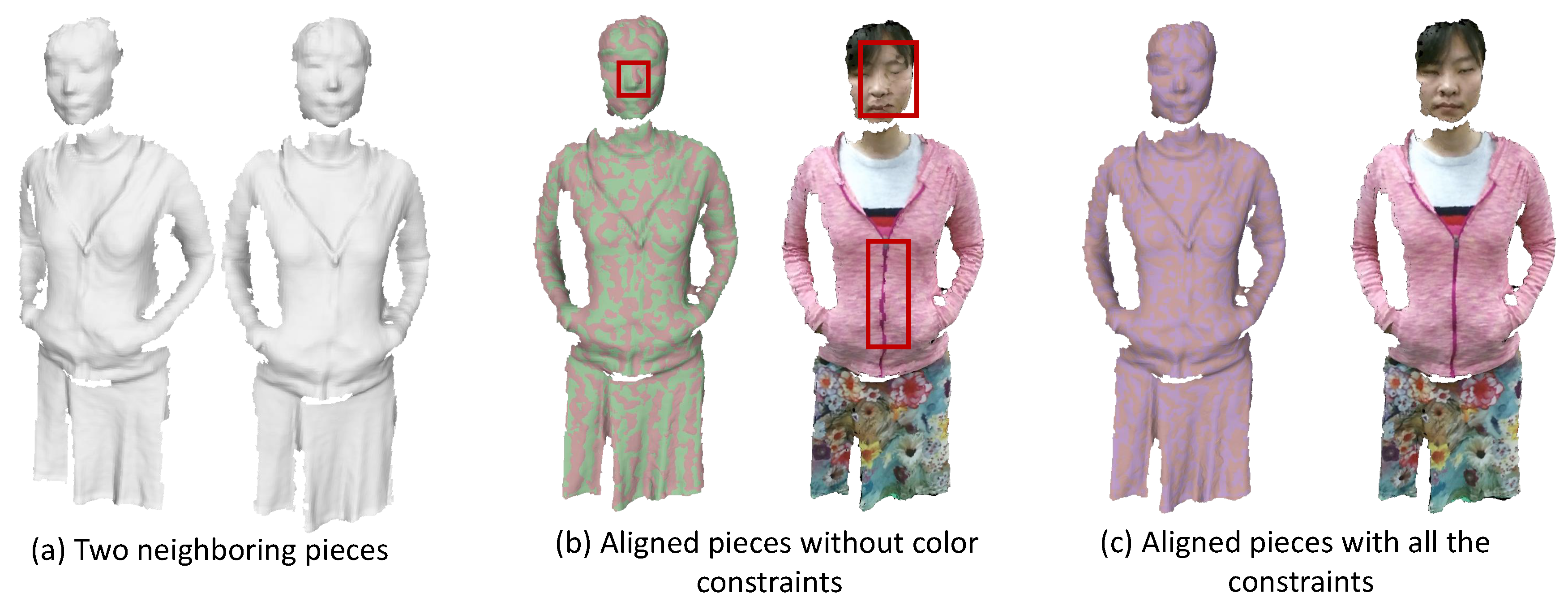

4.1.2. Pairwise Non-Rigid Registration

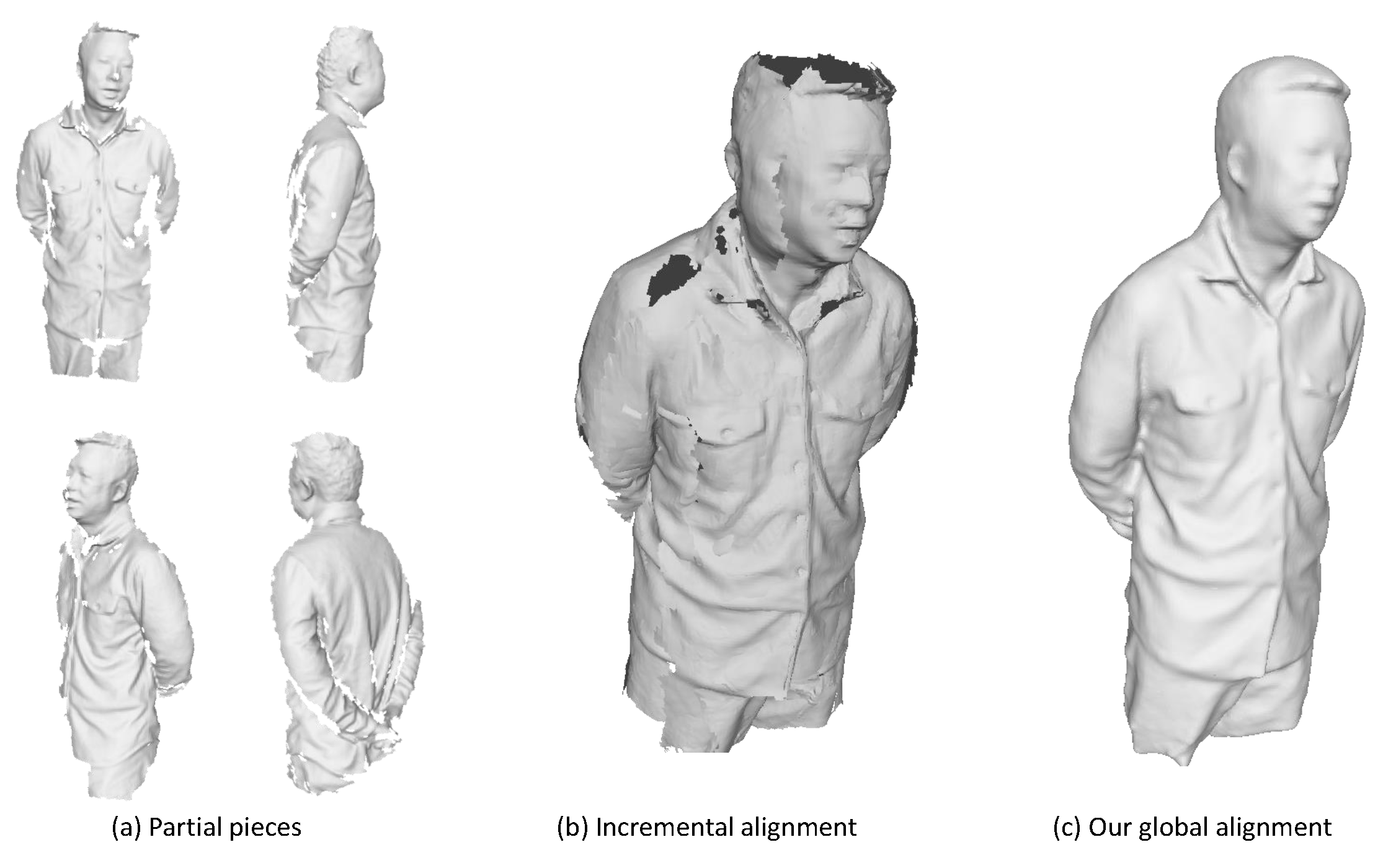

4.2. Loop Detection and Global Non-Rigid Registration

4.2.1. Loop Detection

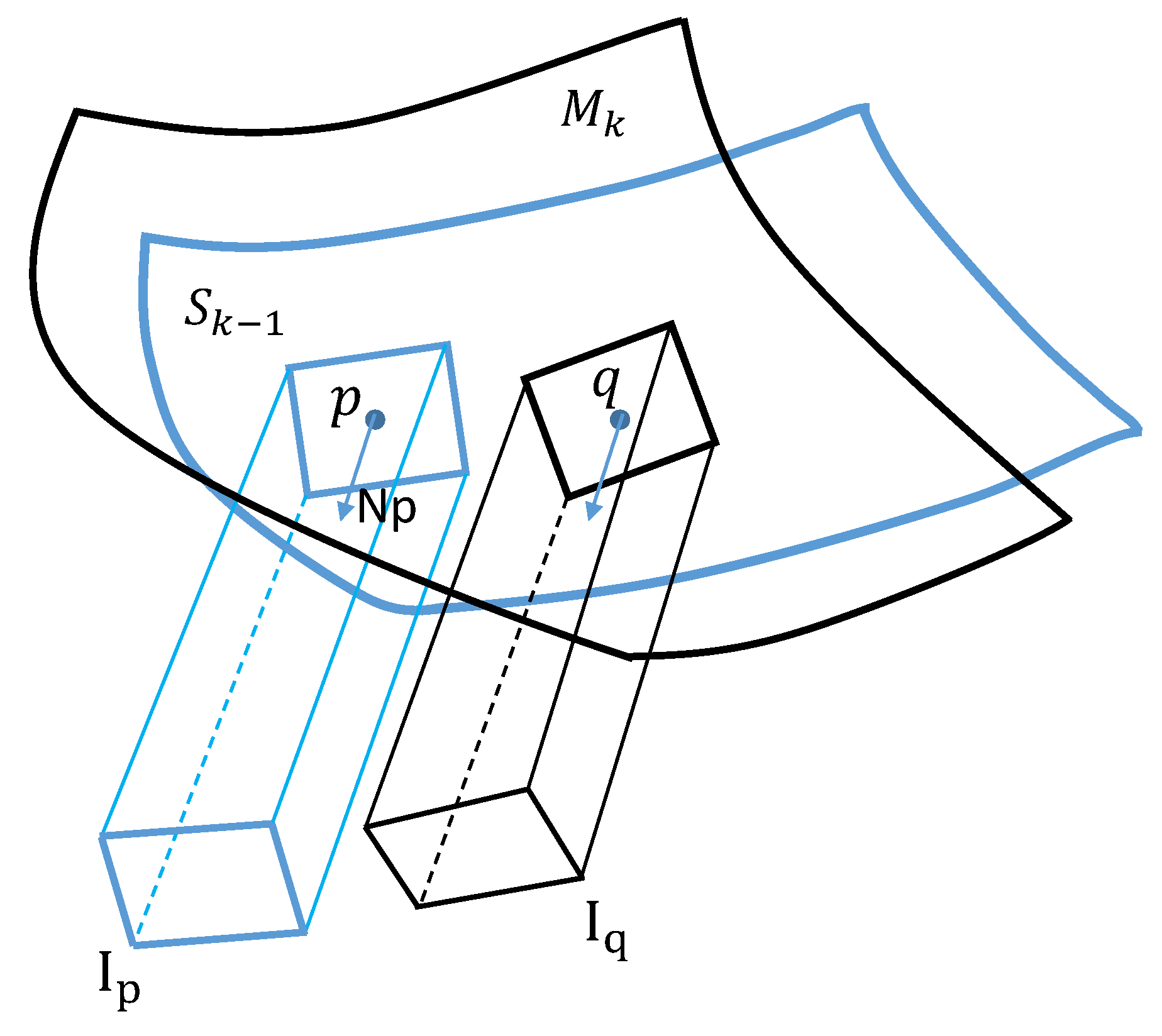

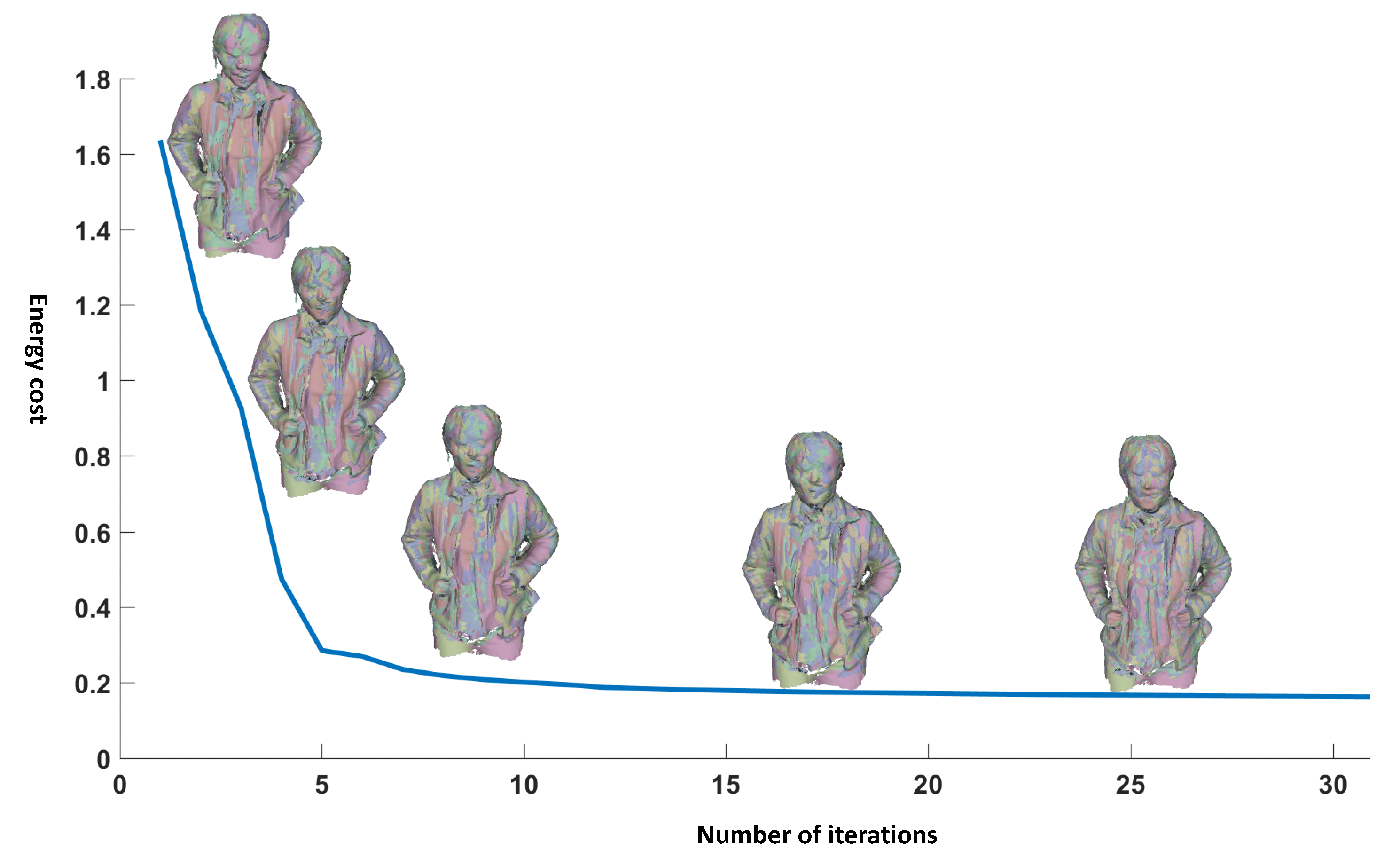

4.2.2. Global Non-Rigid Registration

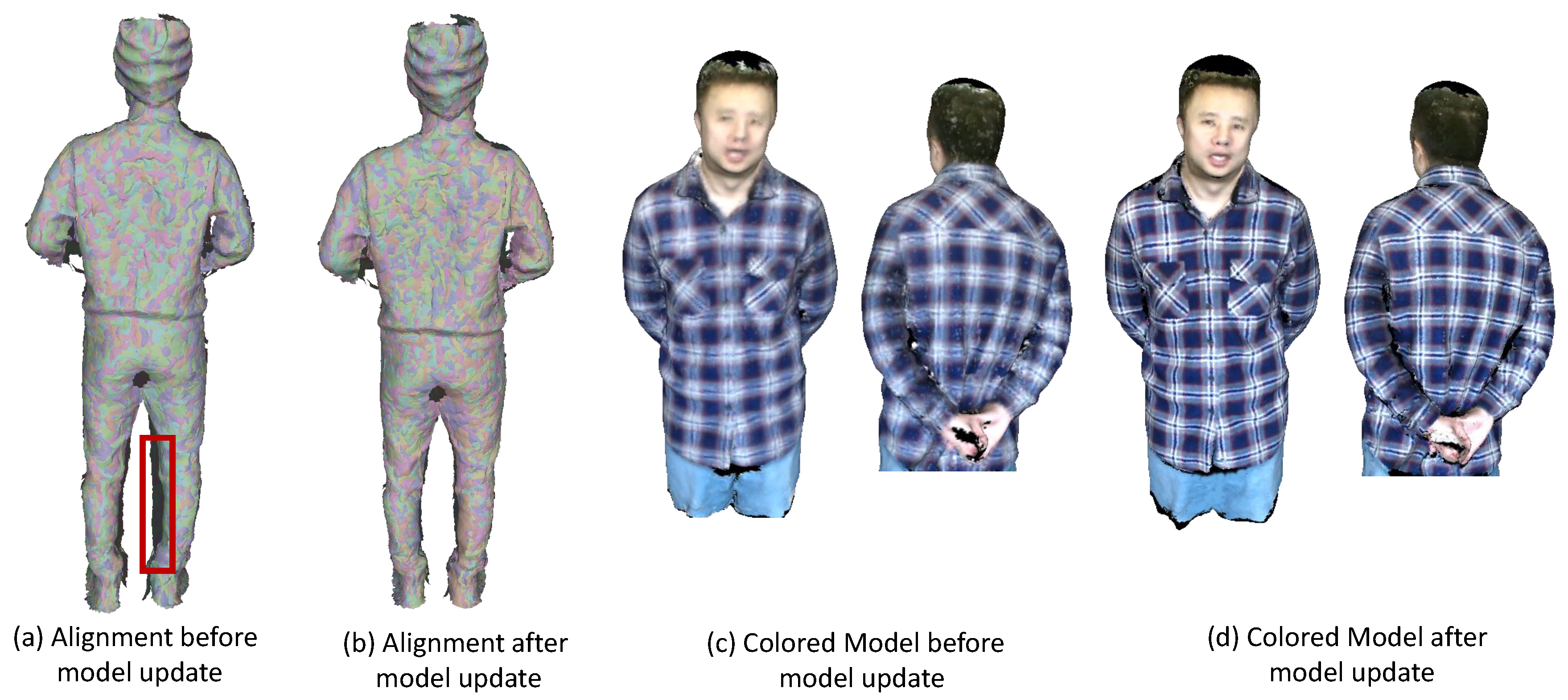

4.3. Model Update

Algorithm 1: Model-update algorithm.

4.4. Implementation Details

5. Experiments

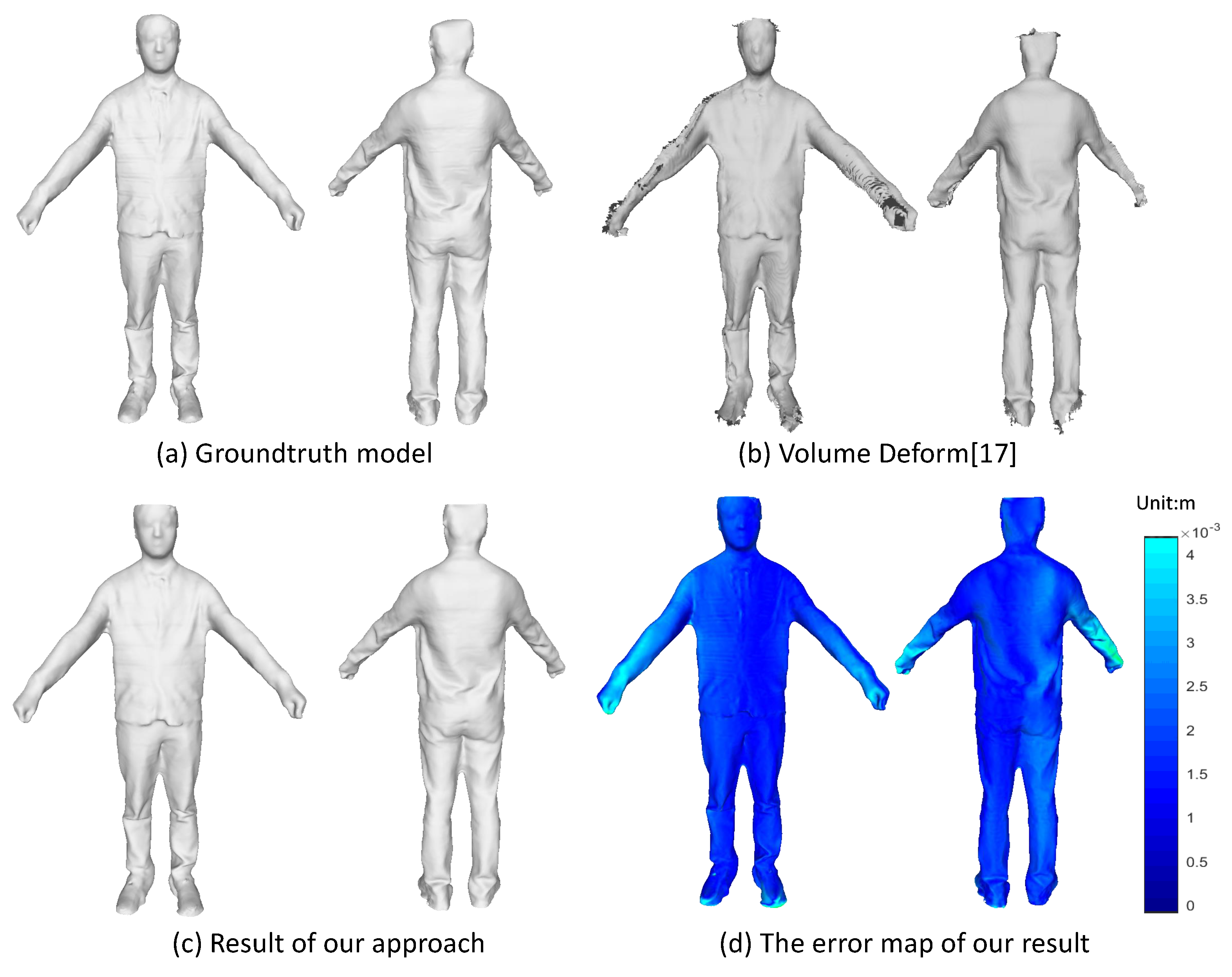

5.1. Quantitative Evaluation on Rigid Objects

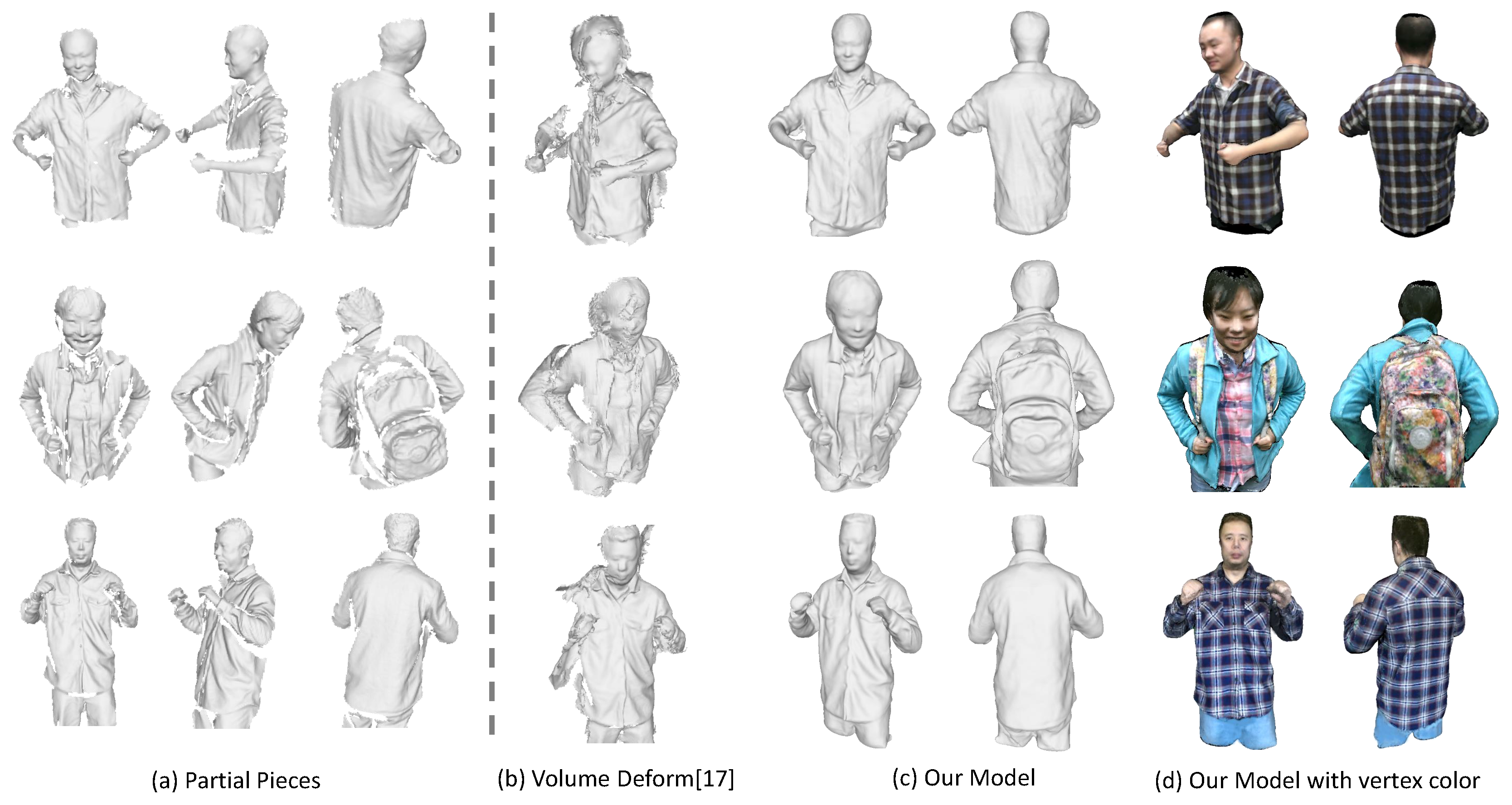

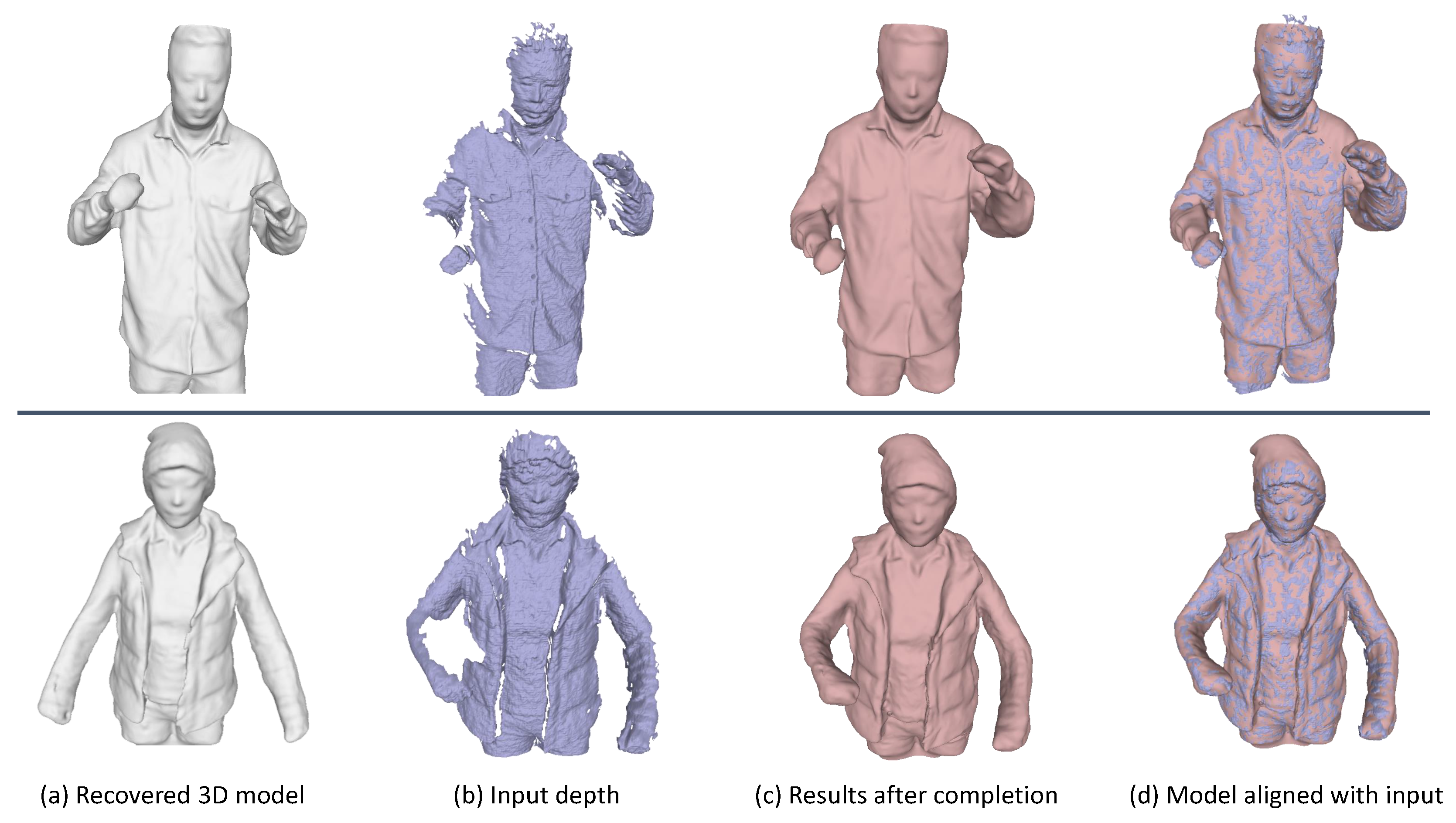

5.2. Qualitative Evaluation on Captured Subjects

5.3. Applications

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, S.; Zuo, X.; Du, C.; Wang, R.; Zheng, J.; Yang, R. Dynamic Non-Rigid Objects Reconstruction with a Single RGB-D Sensor. Sensors 2018, 18, 886. https://doi.org/10.3390/s18030886