Multiband Imaging CMOS Image Sensor with Multi-Storied Photodiode Structure †

Abstract

1. Introduction

2. Multi-Storied Photodiode Concept and Structure

3. Measurement Results and Discussion

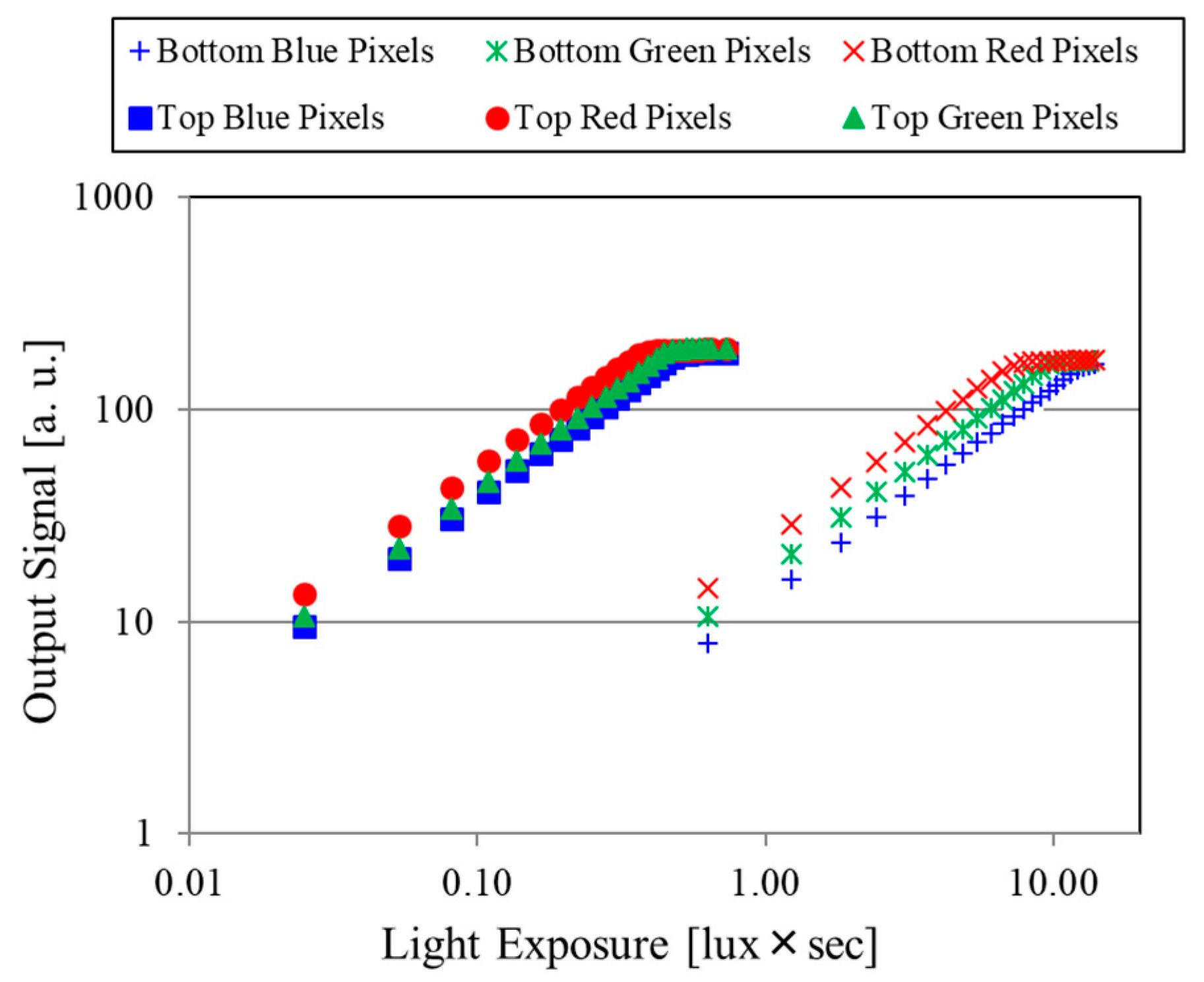

3.1. Photoelectric Conversion Characteristics

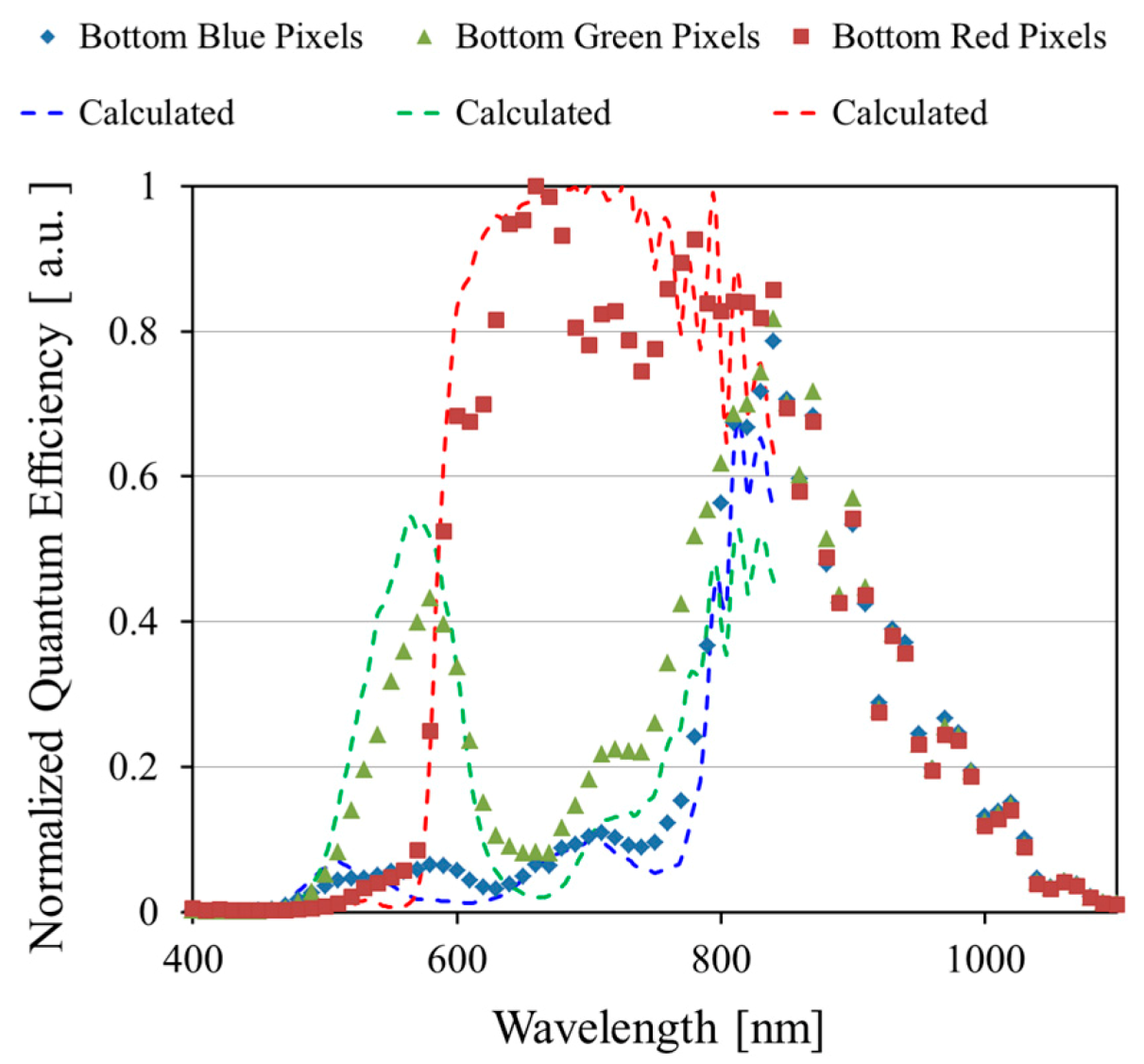

3.2. Spectral Response Characteristics

3.3. RGB and NIR Images Taken by the Multi-Storied Photodiode CMOS Image Sensor

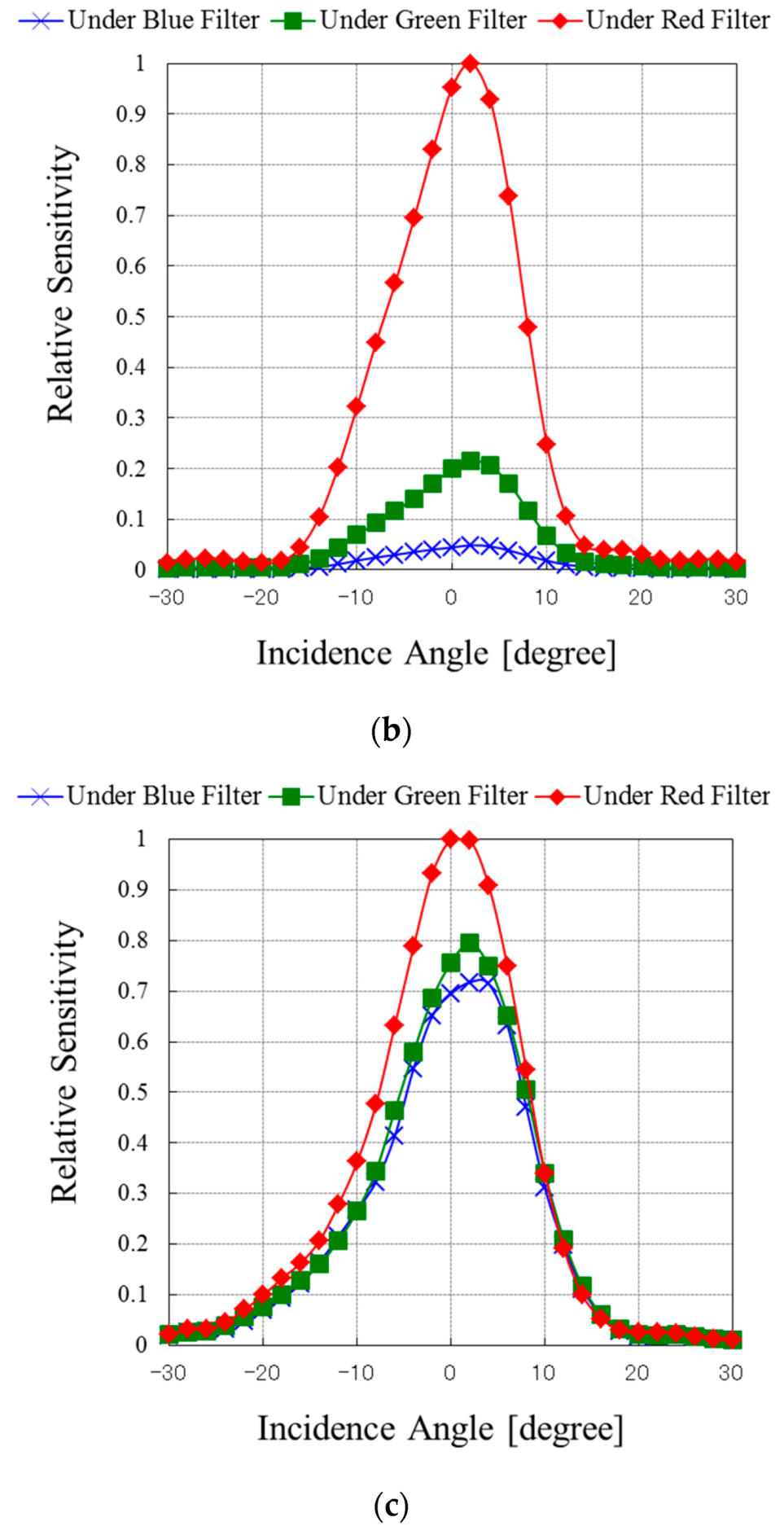

3.4. RGB and NIR Images Taken by the Multi-Storied Photodiode CMOS Image Sensor

4. Conclusions

Author Contributions

Conflicts of Interest


| Items | Specifications |
|---|---|
| Fabrication process | 0.18-μm 1P6M |
| Chip size | 20.1 mm × 19.7 mm |
| Pixel size | 3.8 μm × 3.8 μm |
| Multi-storied photodiode pixel area | 16.1 mm × 0.9 mm |
| Number of multi-storied photodiodes | 4224 × 240 |
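
The pixel-area entry in the specification table can be cross-checked against the pixel size and the photodiode count. The short Python sketch below does that arithmetic. It assumes one multi-storied photodiode per 3.8 μm pixel pitch with no gaps or dummy pixels in the array; that assumption, and the helper name `array_extent_mm`, are illustrative and not taken from the paper.

```python
# Values copied from the specification table.
PIXEL_PITCH_UM = 3.8      # pixel size along each axis, in micrometres
COLS, ROWS = 4224, 240    # number of multi-storied photodiodes


def array_extent_mm(count: int, pitch_um: float) -> float:
    """Length of `count` pixels laid side by side at `pitch_um`, in millimetres.

    Assumption (not from the paper): the array is a plain grid with one
    photodiode per pixel pitch and no gaps or dummy pixels.
    """
    return count * pitch_um / 1000.0


width_mm = array_extent_mm(COLS, PIXEL_PITCH_UM)
height_mm = array_extent_mm(ROWS, PIXEL_PITCH_UM)
total_pixels = COLS * ROWS

print(f"array width  : {width_mm:.3f} mm")
print(f"array height : {height_mm:.3f} mm")
print(f"photodiodes  : {total_pixels}")
```

Under that assumption the computed extent rounds to the 16.1 mm × 0.9 mm listed in the table, and the array holds roughly one million multi-storied photodiodes.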

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Takemoto, Y.; Tsukimura, M.; Kato, H.; Suzuki, S.; Aoki, J.; Kondo, T.; Saito, H.; Gomi, Y.; Matsuda, S.; Tadaki, Y. Multiband Imaging CMOS Image Sensor with Multi-Storied Photodiode Structure †. Sensors 2018, 18, 1688. https://doi.org/10.3390/s18061688
