An Effective Image Denoising Method for UAV Images via Improved Generative Adversarial Networks

Abstract

1. Introduction

2. Methods

2.1. Deep Architecture

2.1.1. Generative Model

2.1.2. Discriminative Model

2.2. Loss Function

3. Experiments

3.1. Experimental Setting

3.1.1. Experimental Data

3.1.2. Parameter Settings and Model Details

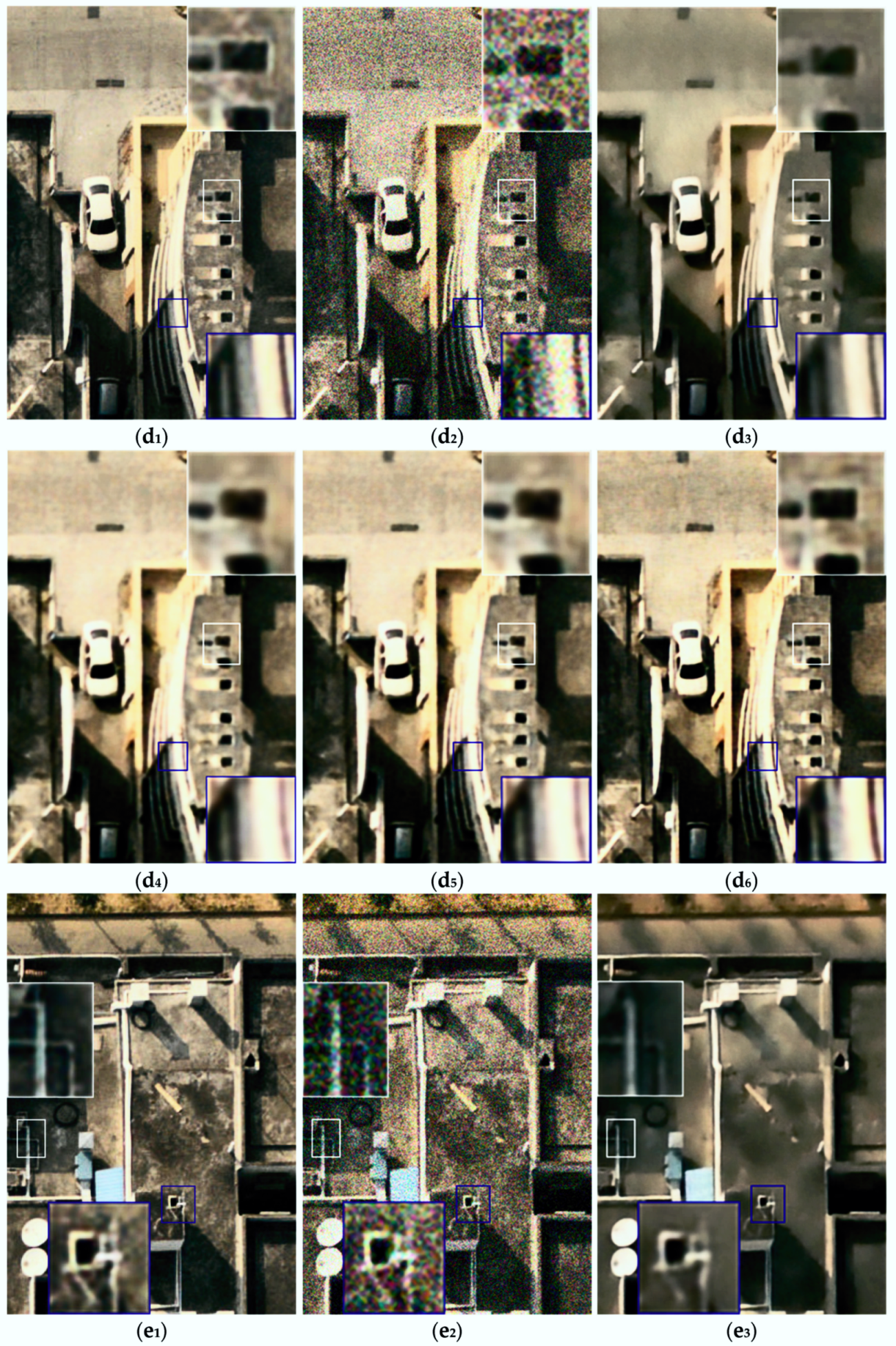

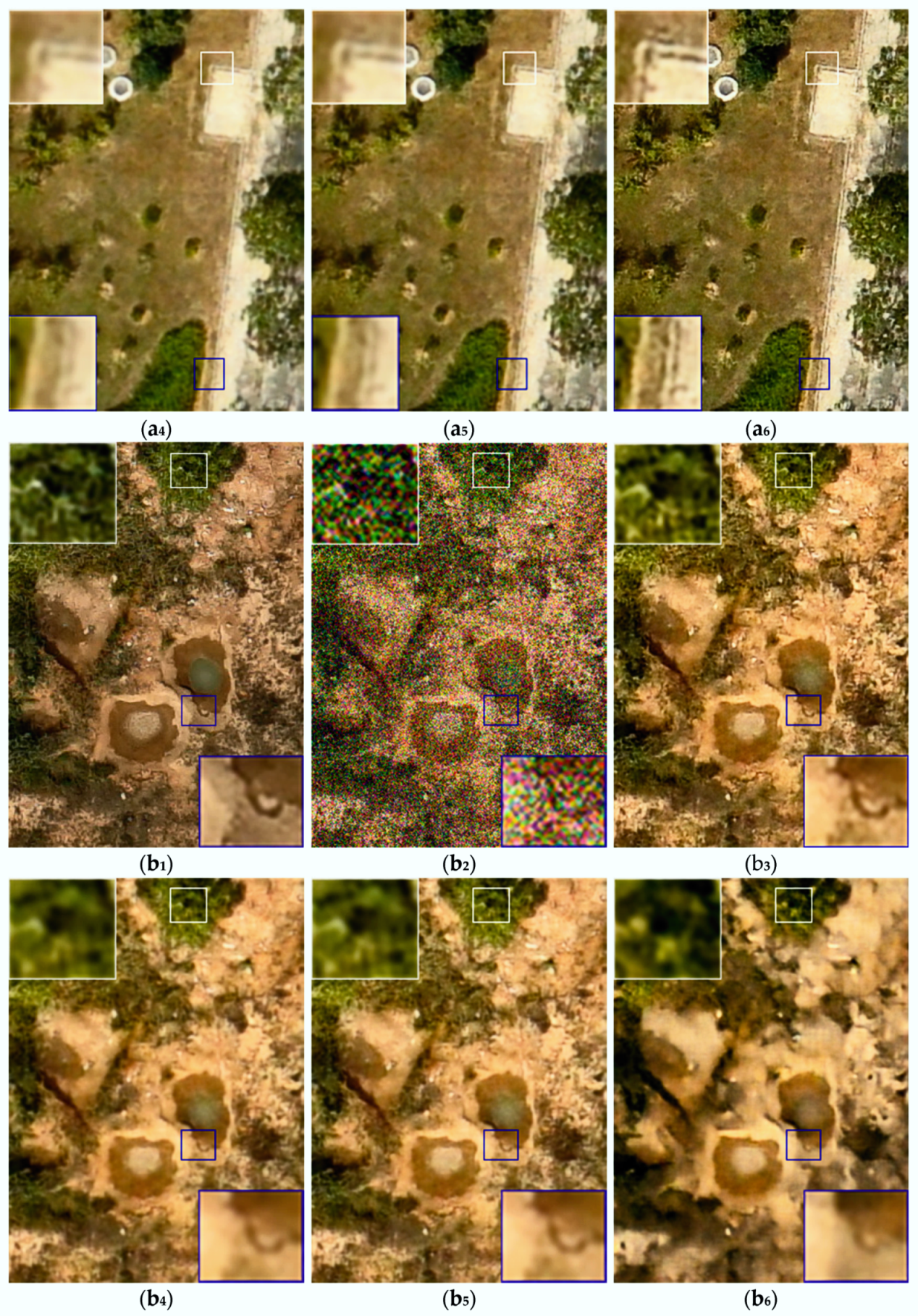

3.2. Comparison and Qualitative Evaluation

3.2.1. Comparison in the Traditional Way

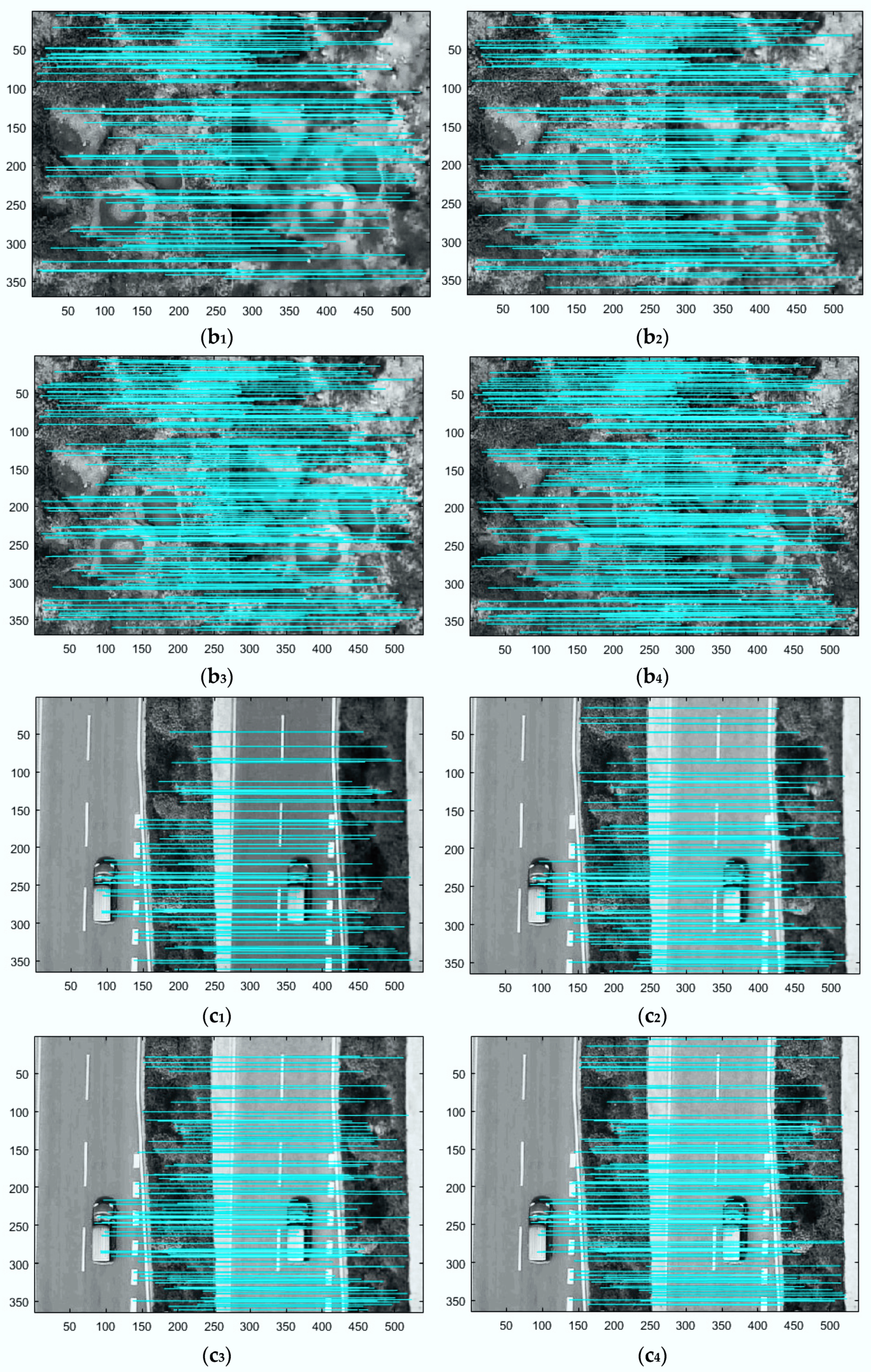

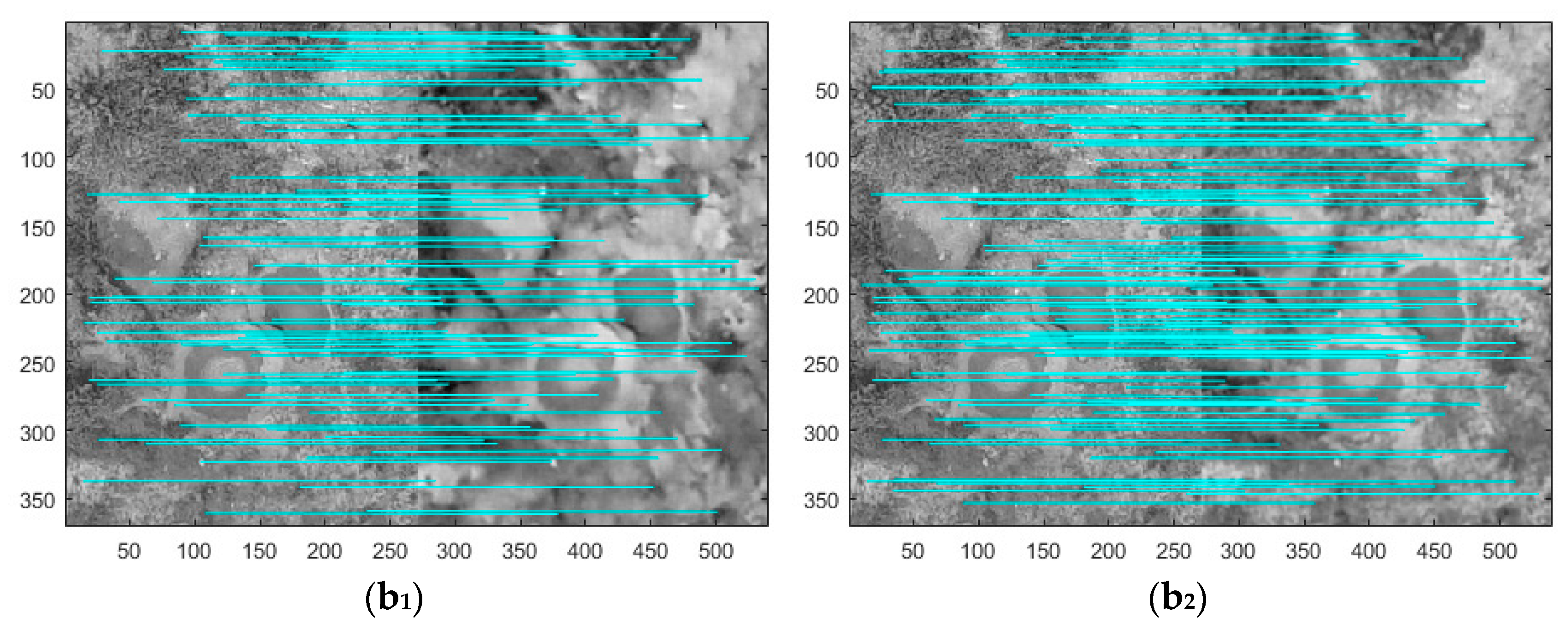

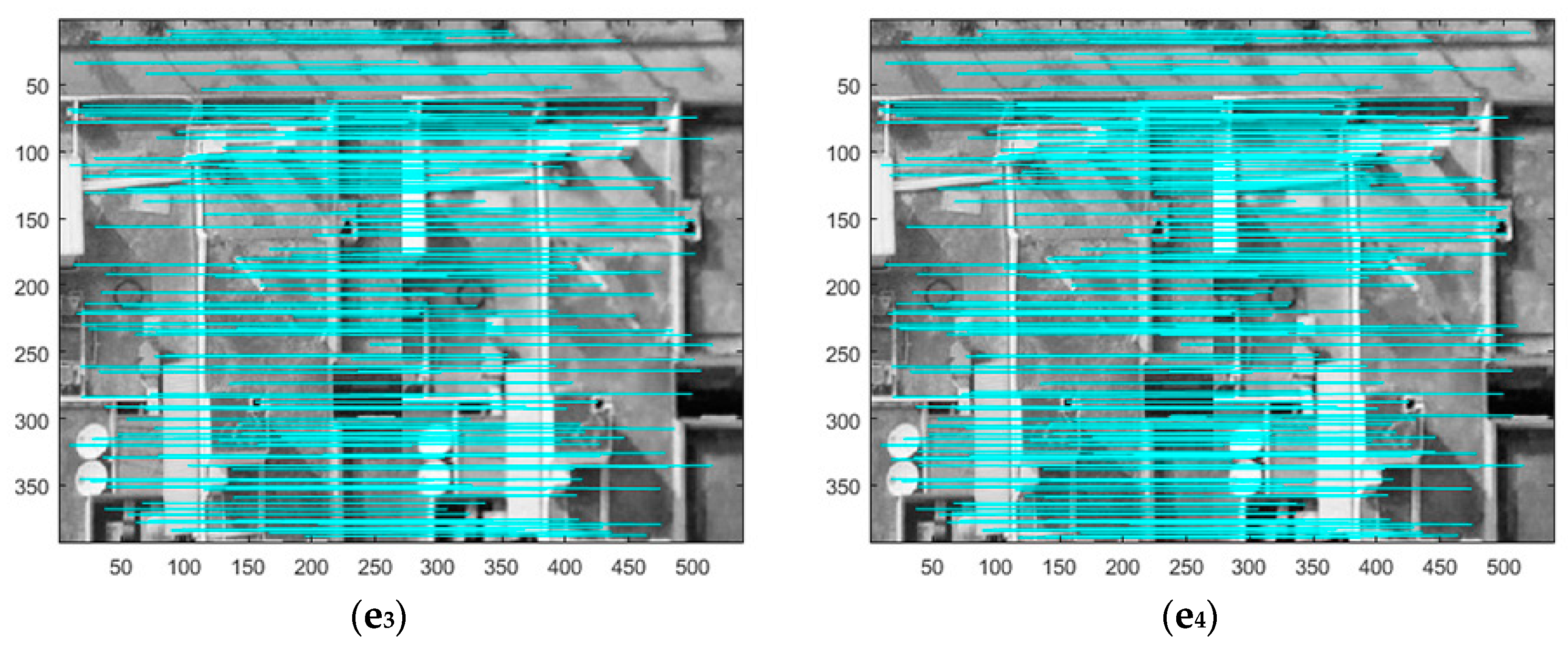

3.2.2. Comparison of Denoising Results Using Image Matching

3.2.3. Comparison of Denoising Results Using Image Classification

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Liu, L.; Huang, F.; Li, G.; Liu, Z. Remote-Sensing Image Denoising Using Partial Differential Equations and Auxiliary Images as Priors. IEEE Geosci. Remote Sens. Lett. 2012, 9, 358–362.
2. Rajapriyadharshini, R.; Benadict, R.J. SAR Image Denoising Via Clustering Based Linear Discriminant Analysis. In Proceedings of the International Conference on Innovations in Information Embedded and Communication Systems 2015, Coimbatore, India, 19–20 March 2015; pp. 1–6.
3. Bhosale, N.P.; Manza, R.R.; Kale, K.V. Design and Development of Wavelet Filter to Remove Noise from ASTER Remote Sensing Images. Int. J. Appl. Eng. Res. 2015, 10, 29–34.
4. Wang, H.; Wang, J.; Jin, H.; Li, H.; Jia, D.; Ma, Y.; Liu, Y.; Yao, F.; Yang, Q. High-order balanced M-band multiwavelet packet transform-based remote sensing image denoising. EURASIP J. Adv. Signal Process. 2016, 2016, 10.
5. Xu, S.; Zhou, Y.; Xiang, H.; Li, S. Remote Sensing Image Denoising Using Patch Grouping-Based Nonlocal Means Algorithm. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2275–2279.
6. Penna, P.A.A.; Mascarenhas, N.D.A. (Non-)homomorphic approaches to denoise intensity SAR images with non-local means and stochastic distances. Comput. Geosci. 2017, 18, 127–128.
7. Kim, B.H.; Kim, M.Y.; Chae, Y.S. Background Registration-Based Adaptive Noise Filtering of LWIR/MWIR Imaging Sensors for UAV Applications. Sensors 2018, 18, 60.
8. Chang, Y.; Yan, L.; Fang, H.; Liu, H. Simultaneous Destriping and Denoising for Remote Sensing Images With Unidirectional Total Variation and Sparse Representation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1051–1055.
9. Cerra, D.; Bieniarz, J.; Storch, T.; Müller, R.; Reinartz, P. Restoration of EnMAP Data through Sparse Reconstruction. In Proceedings of the 7th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS 2015), Tokyo, Japan, 2–5 June 2015.
10. Xu, B.; Cui, Y.; Li, Z.; Yang, J. An Iterative SAR Image Filtering Method Using Nonlocal Sparse Model. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1635–1639.
11. Jain, V.; Seung, H.S. Natural Image Denoising with Convolutional Networks. In Proceedings of the International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–11 December 2008; pp. 769–776.
12. Xie, J.; Xu, L.; Chen, E. Image Denoising and Inpainting with Deep Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 341–349.
13. Burger, H.C.; Schuler, C.J.; Harmeling, S. Image denoising: Can plain Neural Networks compete with BM3D? In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 2392–2399.
14. Wu, Y.; Zhao, H.; Zhang, L. Image Denoising with Rectified Linear Units. In Proceedings of the International Conference on Neural Information Processing 2014, Kuching, Malaysia, 3–6 November 2014; pp. 142–149.
15. Xu, L.; Ren, J.S.J.; Liu, C.; Jia, J. Deep convolutional neural network for image deconvolution. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 1790–1798.
16. Li, H.M. Deep Learning for Image Denoising. Int. J. Signal Process. Image Process. Pattern Recognit. 2014, 7, 171–180.
17. Mao, X.J.; Shen, C.; Yang, Y.B. Image Restoration Using Very Deep Convolutional Encoder-Decoder Networks with Symmetric Skip Connections. In Proceedings of the Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016.
18. Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.
19. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; pp. 448–456.
20. Huang, R.; Zhang, S.; Li, T.; He, R. Beyond Face Rotation: Global and Local Perception GAN for Photorealistic and Identity Preserving Frontal View Synthesis. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2458–2467.
21. Caballero, J.; Ledig, C.; Aitken, A.; Acosta, A.; Totz, J.; Wang, Z.; Shi, W. Real-Time Video Super-Resolution with Spatio-Temporal Networks and Motion Compensation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
22. Huang, X.; Li, Y.; Poursaeed, O.; Hopcroft, J.; Belongie, S. Stacked Generative Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1866–1875.
23. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
24. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 22–29 October 2016; pp. 630–645.
25. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.
26. Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-based Fully Convolutional Networks. In Proceedings of the Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016.
27. Johnson, J.; Alahi, A.; Li, F.F. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 22–29 October 2016; pp. 694–711.
28. Kiasari, M.A.; Moirangthem, D.S.; Lee, M. Generative Moment Matching Autoencoder with Perceptual Loss. In Proceedings of the ICONIP 2017, Guangzhou, China, 14–18 November 2017; pp. 226–234.
29. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D. Generative Adversarial Networks. Adv. Neural Inf. Process. Syst. 2014, 3, 2672–2680.
30. Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv, 2015; arXiv:1511.06434.
31. Dosovitskiy, A.; Brox, T. Generating images with perceptual similarity metrics based on deep networks. In Proceedings of the 2016 Advances in Neural Information Processing Systems (NIPS), Barcelona, Spain, 5–10 December 2016.
32. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv, 2014; arXiv:1409.1556.
33. Mathieu, M.; Couprie, C.; LeCun, Y. Deep multi-scale video prediction beyond mean square error. arXiv, 2016; arXiv:1511.05440.
34. Yu, X.; Porikli, F. Ultra-resolving face images by discriminative generative networks. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 22–29 October 2016; pp. 318–333.
35. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251.
36. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5967–5976.
37. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.
38. Lowe, D. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

| Images | Method [8] | Method [5] | Method [17] | Ours |
|---|---|---|---|---|
| Noise Level: 20 | | | | |
| a | 30.7543 | 30.9581 | 31.0174 | 31.2741 |
| b | 30.3965 | 30.5583 | 30.6967 | 30.9458 |
| c | 31.6907 | 31.9678 | 31.8534 | 32.1033 |
| d | 29.6358 | 29.8145 | 29.9543 | 30.2104 |
| e | 30.2235 | 30.4169 | 30.5576 | 30.7562 |
| Average results | 30.5942 | 30.7657 | 30.9856 | 31.1123 |
| Noise Level: 35 | | | | |
| a | 28.8165 | 28.6967 | 28.8871 | 29.0564 |
| b | 28.3084 | 28.5758 | 28.4312 | 28.9687 |
| c | 29.4874 | 29.7321 | 29.7276 | 29.9576 |
| d | 27.5172 | 27.6954 | 27.8958 | 28.1354 |
| e | 28.2913 | 28.4782 | 28.6184 | 28.7858 |
| Average results | 28.4358 | 28.6127 | 28.7968 | 28.9854 |
| Noise Level: 55 | | | | |
| a | 26.1869 | 26.3112 | 26.4984 | 26.7156 |
| b | 25.9276 | 26.1164 | 26.3658 | 26.5942 |
| c | 27.1962 | 27.3335 | 27.4795 | 27.6614 |
| d | 25.4956 | 25.6442 | 25.7841 | 25.9612 |
| e | 26.0517 | 26.2654 | 26.3127 | 26.5154 |
| Average results | 26.0014 | 26.2424 | 26.4912 | 26.6576 |
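The values in the table above are on the scale typical of peak signal-to-noise ratio (PSNR) in dB, and the "Noise Level" rows most likely give the standard deviation of additive Gaussian noise on a 0 to 255 scale. Neither point is stated in the surviving text, so treat both as assumptions. Under them, a comparison like this is commonly set up as in the minimal NumPy sketch below: corrupt a clean 8-bit image with zero-mean Gaussian noise, then score an estimate against the clean reference with PSNR = 10·log10(MAX²/MSE). The helpers `add_gaussian_noise` and `psnr` are hypothetical names; this is not the authors' evaluation code.

```python
import numpy as np


def add_gaussian_noise(img, sigma, seed=0):
    """Add zero-mean white Gaussian noise with std `sigma` (0-255 scale).

    ASSUMPTION: this is how the "Noise Level" rows are interpreted here.
    The result is clipped back to the valid 8-bit intensity range.
    """
    rng = np.random.default_rng(seed)
    noisy = np.asarray(img, dtype=np.float64) + rng.normal(0.0, sigma, size=np.shape(img))
    return np.clip(noisy, 0.0, 255.0)


def psnr(reference, estimate, data_range=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


# Usage on a synthetic flat image (a stand-in, not data from the paper).
clean = np.full((64, 64), 128.0)
noisy = add_gaussian_noise(clean, sigma=20)
print(round(psnr(clean, noisy), 2))
```

Because the MSE of the noisy input is close to σ² when little clipping occurs, the printed value should land near 10·log10(255²/20²) ≈ 22.1 dB; a denoiser is judged by how far it raises that figure.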

| Images | Method [8] | Method [5] | Method [17] | Ours |
|---|---|---|---|---|
| Noise Level: 20 | | | | |
| a | 0.8799 | 0.8818 | 0.8841 | 0.8857 |
| b | 0.8776 | 0.8797 | 0.8804 | 0.8823 |
| c | 0.8857 | 0.8897 | 0.8895 | 0.8912 |
| d | 0.8704 | 0.8723 | 0.8741 | 0.8765 |
| e | 0.8731 | 0.8763 | 0.8779 | 0.8806 |
| Average results | 0.8756 | 0.8781 | 0.8796 | 0.8821 |
| Noise Level: 35 | | | | |
| a | 0.8345 | 0.8362 | 0.8379 | 0.8406 |
| b | 0.8309 | 0.8339 | 0.8352 | 0.8371 |
| c | 0.8398 | 0.8441 | 0.8433 | 0.8468 |
| d | 0.8231 | 0.8249 | 0.8271 | 0.8289 |
| e | 0.8276 | 0.8303 | 0.8319 | 0.8343 |
| Average results | 0.8289 | 0.8312 | 0.8333 | 0.8369 |
| Noise Level: 55 | | | | |
| a | 0.7682 | 0.7701 | 0.7719 | 0.7745 |
| b | 0.7639 | 0.7661 | 0.7679 | 0.7701 |
| c | 0.7739 | 0.7792 | 0.7798 | 0.7819 |
| d | 0.7245 | 0.7271 | 0.7289 | 0.7315 |
| e | 0.7589 | 0.7618 | 0.7641 | 0.7658 |
| Average results | 0.7601 | 0.7647 | 0.7662 | 0.7689 |
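Reference [37] (Wang et al.) defines the structural similarity index (SSIM), and the 0 to 1 values in the table above are consistent with that metric, although the surviving text does not say so explicitly. Per local window, SSIM = ((2μₓμᵧ + C₁)(2σₓᵧ + C₂)) / ((μₓ² + μᵧ² + C₁)(σₓ² + σᵧ² + C₂)) with C₁ = (K₁L)² and C₂ = (K₂L)², and the image score is the mean over windows. The NumPy sketch below assumes 8-bit grayscale input, K₁ = 0.01, K₂ = 0.03 and a 7×7 uniform window. Wang et al. use an 11×11 Gaussian window, so absolute numbers from this simplified variant will differ slightly from theirs. The function name `ssim` is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssim(x, y, data_range=255.0, win=7, k1=0.01, k2=0.03):
    """Mean SSIM over all win x win windows (uniform weights, population stats).

    Simplified variant: Wang et al. weight each window with a Gaussian.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    # Views of every local window: shape (H - win + 1, W - win + 1, win, win).
    xw = sliding_window_view(x, (win, win))
    yw = sliding_window_view(y, (win, win))

    mu_x = xw.mean(axis=(-2, -1))
    mu_y = yw.mean(axis=(-2, -1))
    var_x = xw.var(axis=(-2, -1))
    var_y = yw.var(axis=(-2, -1))
    cov_xy = ((xw - mu_x[..., None, None]) * (yw - mu_y[..., None, None])).mean(axis=(-2, -1))

    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return float(ssim_map.mean())


# Usage on a synthetic random image (a stand-in, not data from the paper).
rng = np.random.default_rng(1)
img = rng.uniform(0, 255, size=(32, 32))
noisy = np.clip(img + rng.normal(0, 35, size=img.shape), 0, 255)
print(ssim(img, img), ssim(img, noisy))
```

Identical inputs give 1.0 (up to rounding), and the score falls as noise destroys local structure, which is the behaviour the decreasing values across the three noise levels reflect.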

| Images | Method [8] | Method [5] | Method [17] | Ours |
|---|---|---|---|---|
| Noise Level | 35/55 | 35/55 | 35/55 | 35/55 |
| a | 110/53 | 114/74 | 127/78 | 150/88 |
| b | 160/101 | 205/141 | 240/145 | 274/154 |
| c | 96/66 | 104/70 | 118/76 | 135/78 |
| d | 136/115 | 138/129 | 157/144 | 192/154 |
| e | 177/154 | 192/162 | 211/179 | 260/215 |
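Section 3.2.2 compares methods by image matching, and the reference list cites Lowe [38], whose SIFT matcher keeps a nearest-neighbour match only when it is clearly closer than the second-nearest candidate (Lowe rejects matches whose distance ratio exceeds 0.8). Assuming the counts in the table above come from a matcher of that kind (the surviving text does not say how they were produced), the NumPy sketch below implements only the ratio test on precomputed descriptor arrays. Descriptor extraction, for instance with a SIFT implementation such as OpenCV's, is outside the sketch, and `ratio_test_matches` is a hypothetical name.

```python
import numpy as np


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs where desc_b[j] is desc_a[i]'s nearest neighbour
    and passes Lowe's ratio test: d(nearest) < ratio * d(second nearest)."""
    a = np.asarray(desc_a, dtype=np.float64)
    b = np.asarray(desc_b, dtype=np.float64)
    if len(a) == 0 or len(b) < 2:
        return []  # the ratio test needs at least two candidates

    # Brute-force Euclidean distances, shape (len(a), len(b)).
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    order = np.argsort(d, axis=1)
    rows = np.arange(len(a))
    nearest = d[rows, order[:, 0]]
    second = d[rows, order[:, 1]]

    keep = nearest < ratio * second  # reject ambiguous matches
    return [(int(i), int(order[i, 0])) for i in np.flatnonzero(keep)]


# Usage with synthetic 128-D descriptors (SIFT's length); not data from the paper.
rng = np.random.default_rng(0)
base = rng.normal(size=(50, 128))
perturbed = base + rng.normal(scale=0.01, size=base.shape)
print(len(ratio_test_matches(base, perturbed)))
```

Under this reading, a denoiser that better preserves local texture yields descriptors that stay distinctive, so more matches survive the ratio test, which would explain why larger counts are better in the table.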

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, R.; Xiao, X.; Guo, B.; Qin, Q.; Chen, R. An Effective Image Denoising Method for UAV Images via Improved Generative Adversarial Networks. Sensors 2018, 18, 1985. https://doi.org/10.3390/s18071985
