Human Body Mixed Motion Pattern Recognition Method Based on Multi-Source Feature Parameter Fusion

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Principles and Systems

2.2. Selection of Common Gait Patterns

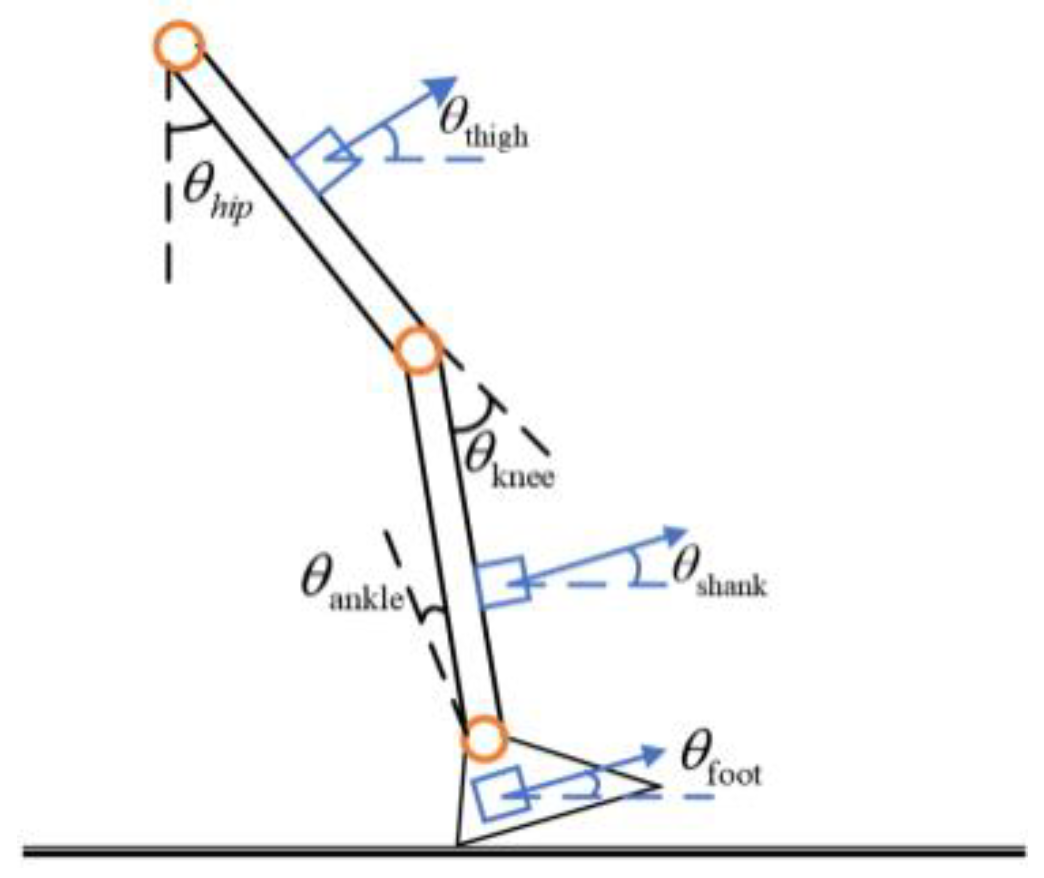

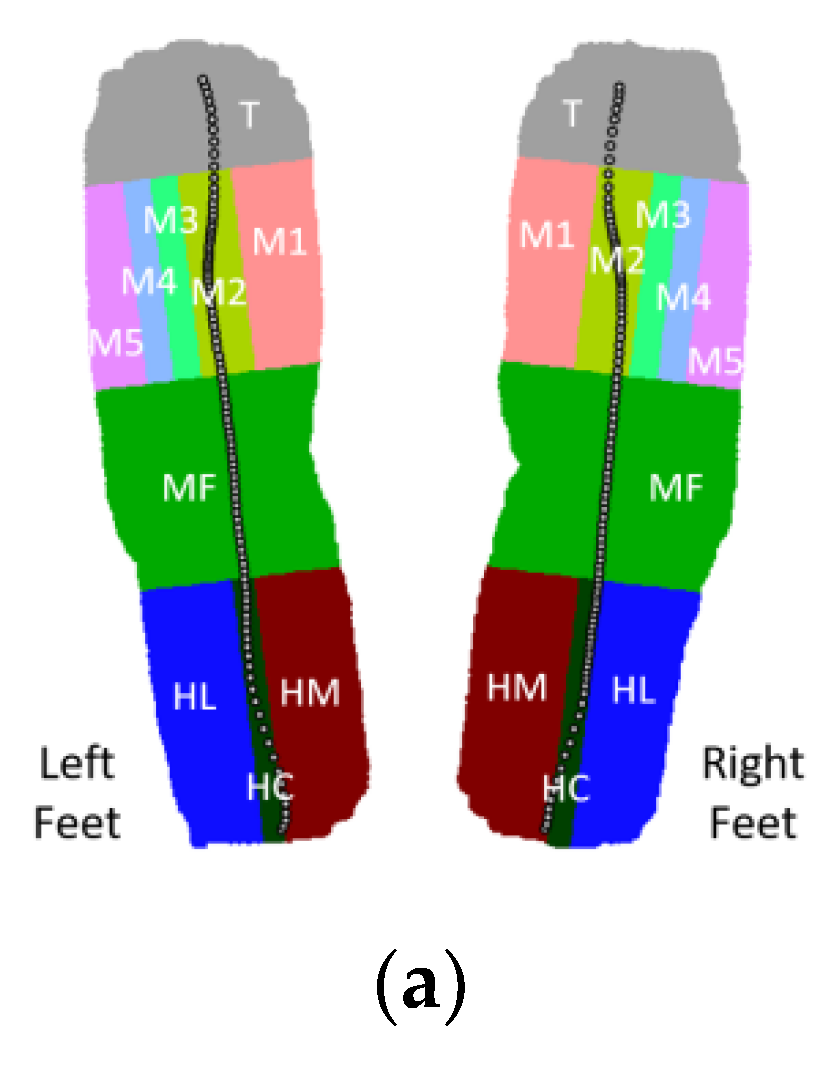

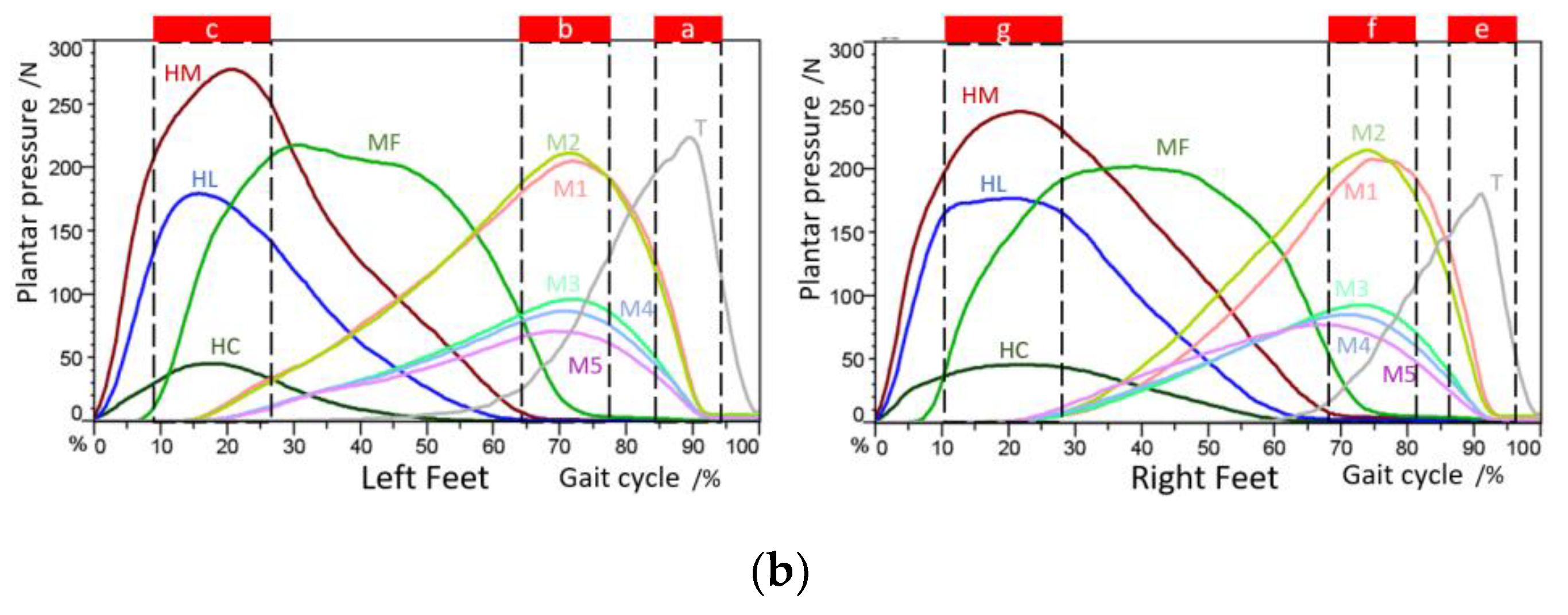

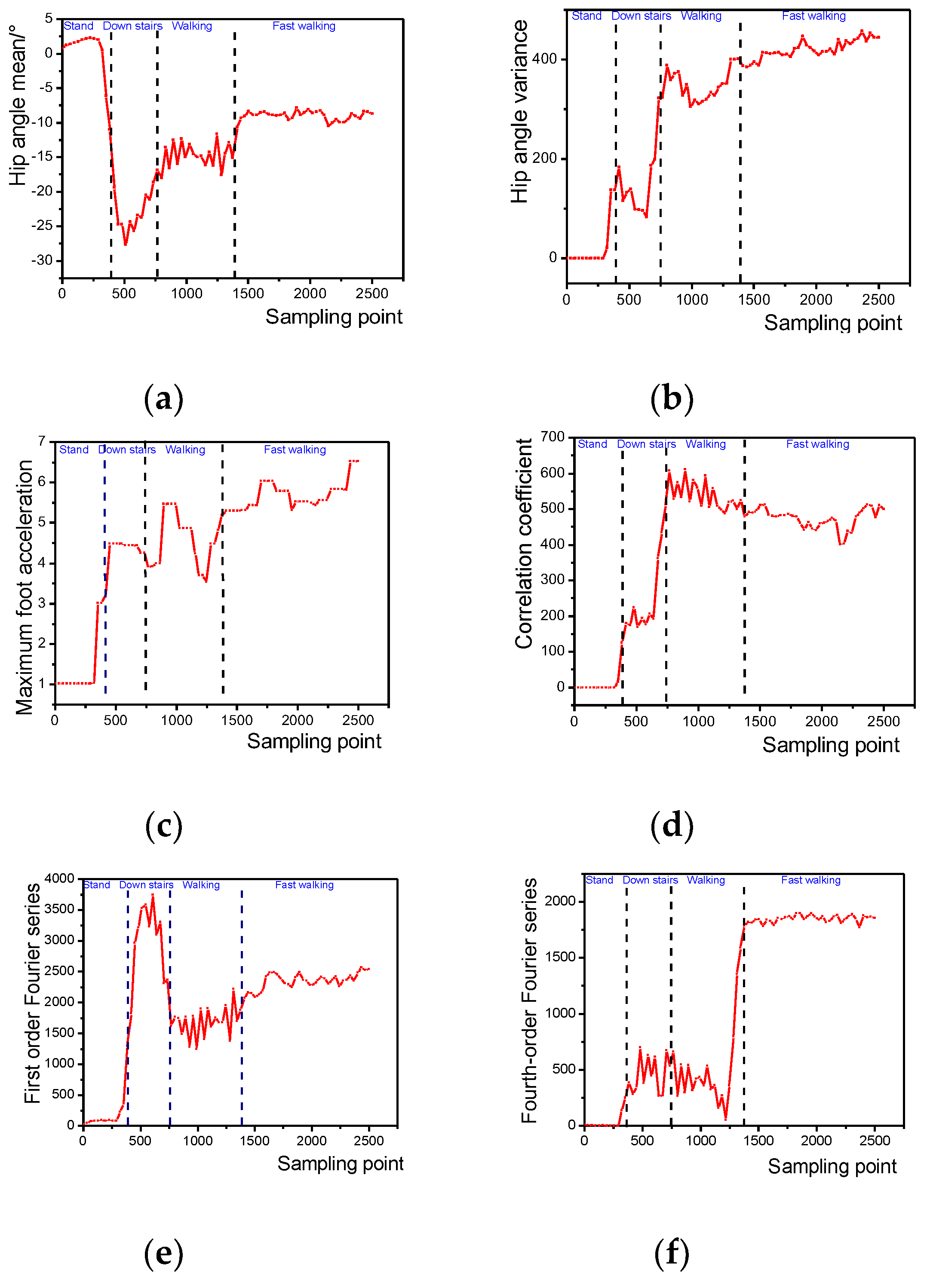

2.3. Feature Parameter Extraction

2.3.1. Time-Domain and Frequency-Domain Features

2.3.2. Energy-Domain Features

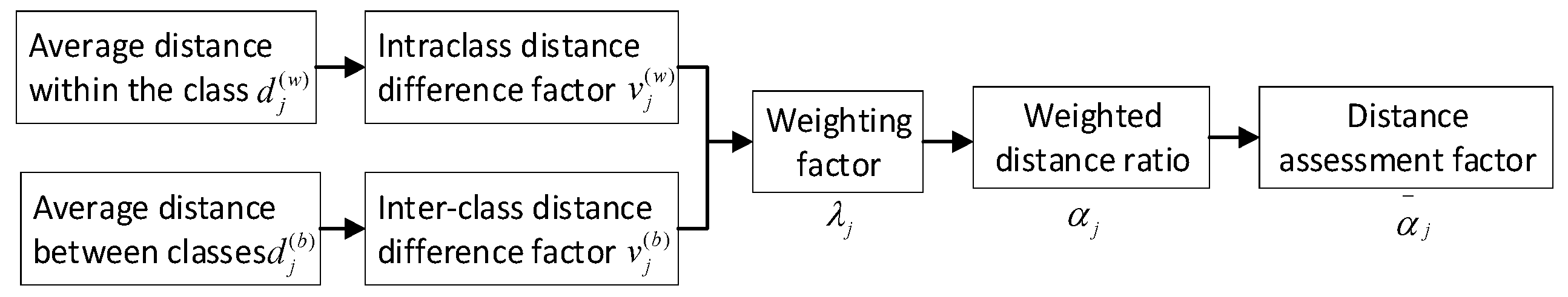
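The feature table lists two energy-domain features: SMA of the left and right foot acceleration (features 139–140) and the wavelet energy entropy of the left hip angle (feature 141). This section gives no formulas, so the sketch below is only a minimal illustration under stated assumptions. It takes SMA to be the per-window mean of |a_x| + |a_y| + |a_z|, and it computes wavelet energy entropy as the Shannon entropy of the relative sub-band energies from a 3-level orthonormal Haar decomposition. The wavelet basis, the number of levels, and all function names are assumptions, not the authors' choices.

```python
import math
from typing import List, Sequence, Tuple


def signal_magnitude_area(ax: Sequence[float], ay: Sequence[float], az: Sequence[float]) -> float:
    """Assumed SMA definition: mean of |ax| + |ay| + |az| over one window."""
    n = len(ax)
    if n == 0 or len(ay) != n or len(az) != n:
        raise ValueError("axes must be non-empty and of equal length")
    return sum(abs(x) + abs(y) + abs(z) for x, y, z in zip(ax, ay, az)) / n


def haar_step(signal: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One level of the orthonormal Haar DWT (an odd trailing sample is dropped)."""
    s = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal) - 1, 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal) - 1, 2)]
    return approx, detail


def wavelet_energy_entropy(signal: Sequence[float], levels: int = 3) -> float:
    """Shannon entropy (natural log) of the relative energy in each wavelet sub-band."""
    bands: List[List[float]] = []
    approx = list(signal)
    for _ in range(levels):
        if len(approx) < 2:
            break
        approx, detail = haar_step(approx)
        bands.append(detail)  # detail band of this level
    bands.append(approx)  # final approximation band
    energies = [sum(c * c for c in band) for band in bands]
    total = sum(energies)
    if total == 0.0:
        return 0.0
    probs = [e / total for e in energies if e > 0.0]
    return -sum(p * math.log(p) for p in probs)
```

The entropy is 0 when all energy falls in one sub-band (for example, a constant angle signal). It approaches ln(levels + 1) when the energy is spread evenly across bands, which is why this quantity can help tell a steady posture from a rhythmic movement.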

2.4. Distance-Based Feature Evaluation Method and Selection

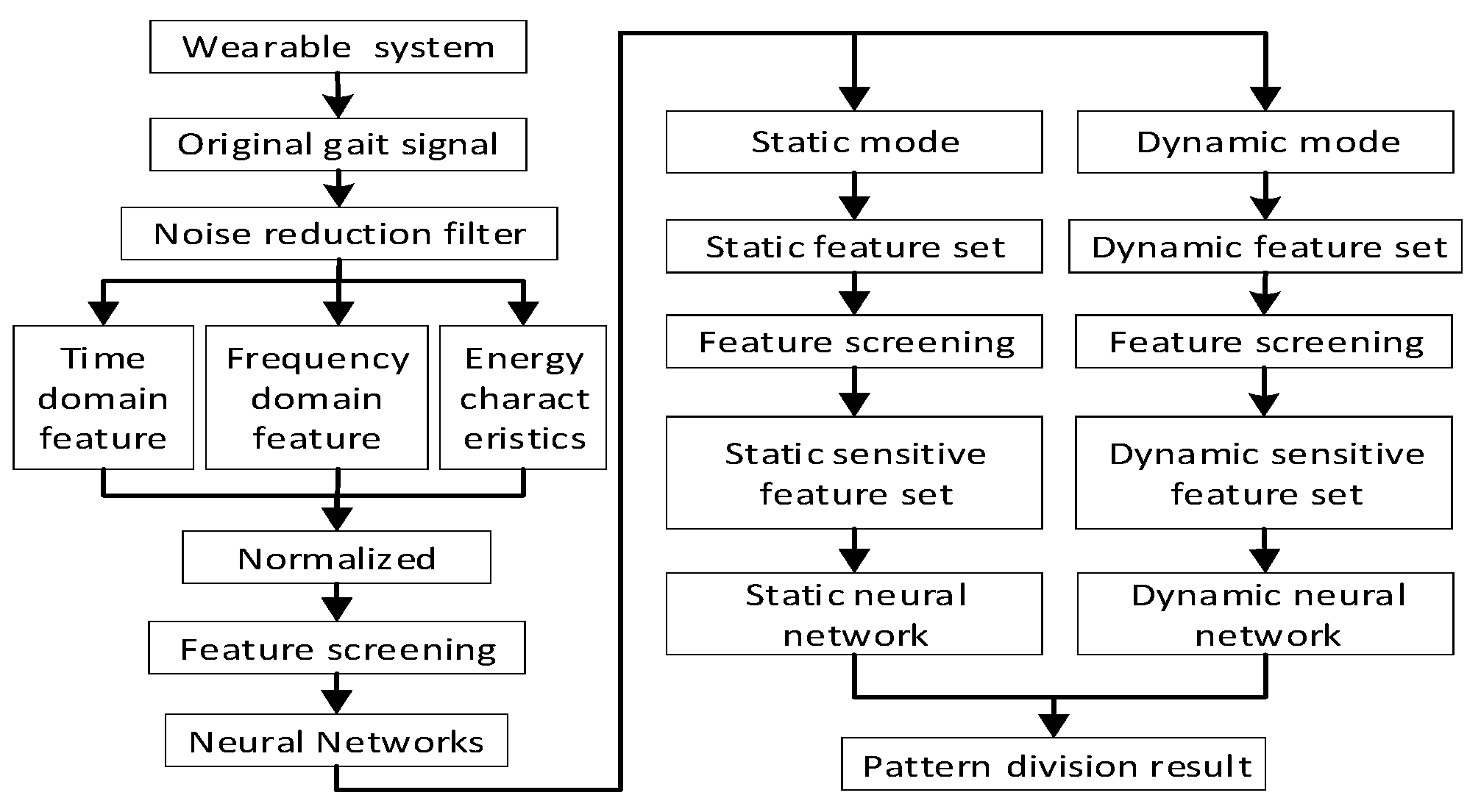
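This section's heading names a distance-based feature evaluation, but the criterion itself is not reproduced here. The sketch below shows one common formulation, offered as an assumption rather than the authors' exact method. For each feature, it divides the spread of the class centres (between-class distance) by the average spread inside each class (within-class distance), then keeps the top-k features. Any normalization the paper applies, and its selection threshold, are not modelled. The function names are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Sequence


def _avg_pairwise_distance(values: Sequence[float]) -> float:
    """Mean absolute difference over all distinct pairs of values."""
    n = len(values)
    if n < 2:
        return 0.0
    total = sum(abs(values[i] - values[j]) for i in range(n) for j in range(i + 1, n))
    return total / (n * (n - 1) / 2)


def distance_evaluation_factors(samples: Sequence[Sequence[float]],
                                labels: Sequence[int]) -> List[float]:
    """One score per feature: between-class distance / within-class distance."""
    by_class: Dict[int, List[Sequence[float]]] = {}
    for row, label in zip(samples, labels):
        by_class.setdefault(label, []).append(row)
    scores = []
    for k in range(len(samples[0])):
        # Within-class scatter of feature k, averaged over all classes.
        d_within = mean(_avg_pairwise_distance([r[k] for r in rows]) for rows in by_class.values())
        # Scatter of the per-class means (class centres) of feature k.
        centres = [mean(r[k] for r in rows) for rows in by_class.values()]
        d_between = _avg_pairwise_distance(centres)
        scores.append(d_between / d_within if d_within > 0 else float("inf"))
    return scores


def select_top_features(scores: Sequence[float], k: int) -> List[int]:
    """1-based numbers of the k highest-scoring features, in ascending order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(i + 1 for i in ranked)
```

A larger ratio means the feature separates the gait classes well relative to its own variability. Ranking by this ratio is one plausible way a 141-dimensional vector could be reduced to the static and dynamic subsets listed in Appendix A.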

2.5. Motion State Recognition Model

3. Results

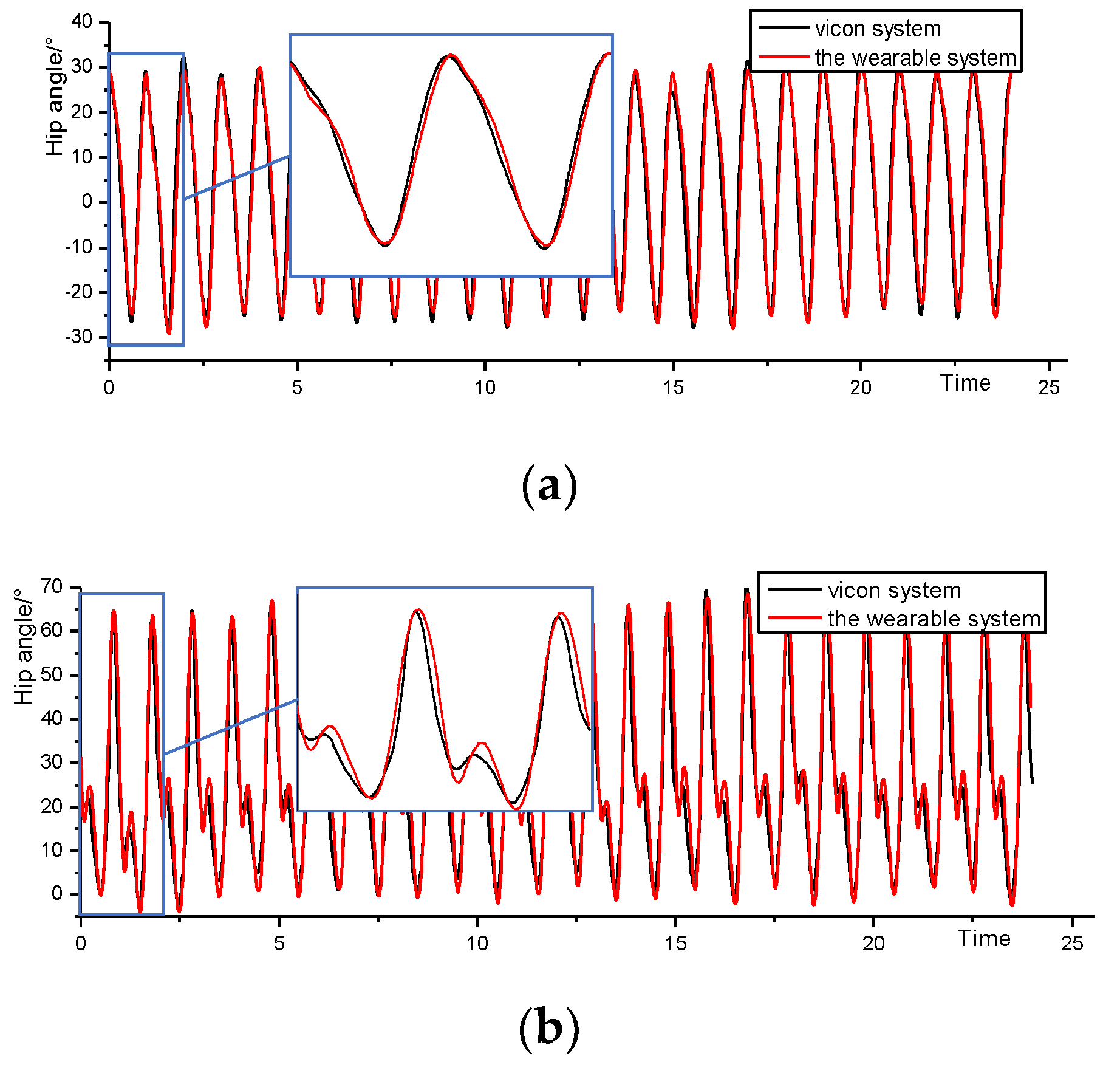

3.1. Commissioning and Calibration of the Experimental System

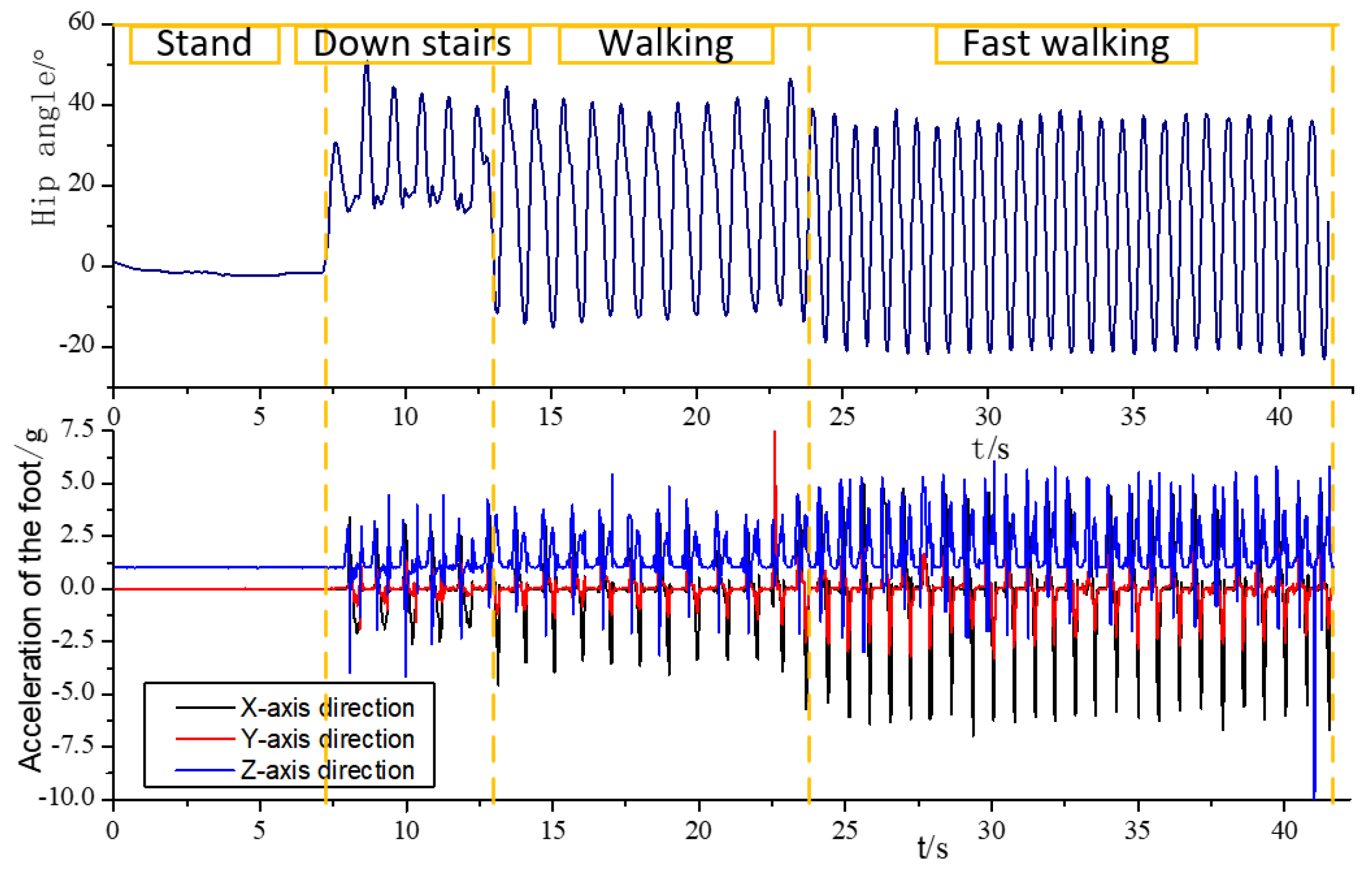

3.2. Procedure

3.3. Experimental and Applied Research

3.3.1. Single Motion Pattern or Gesture Recognition Experiment

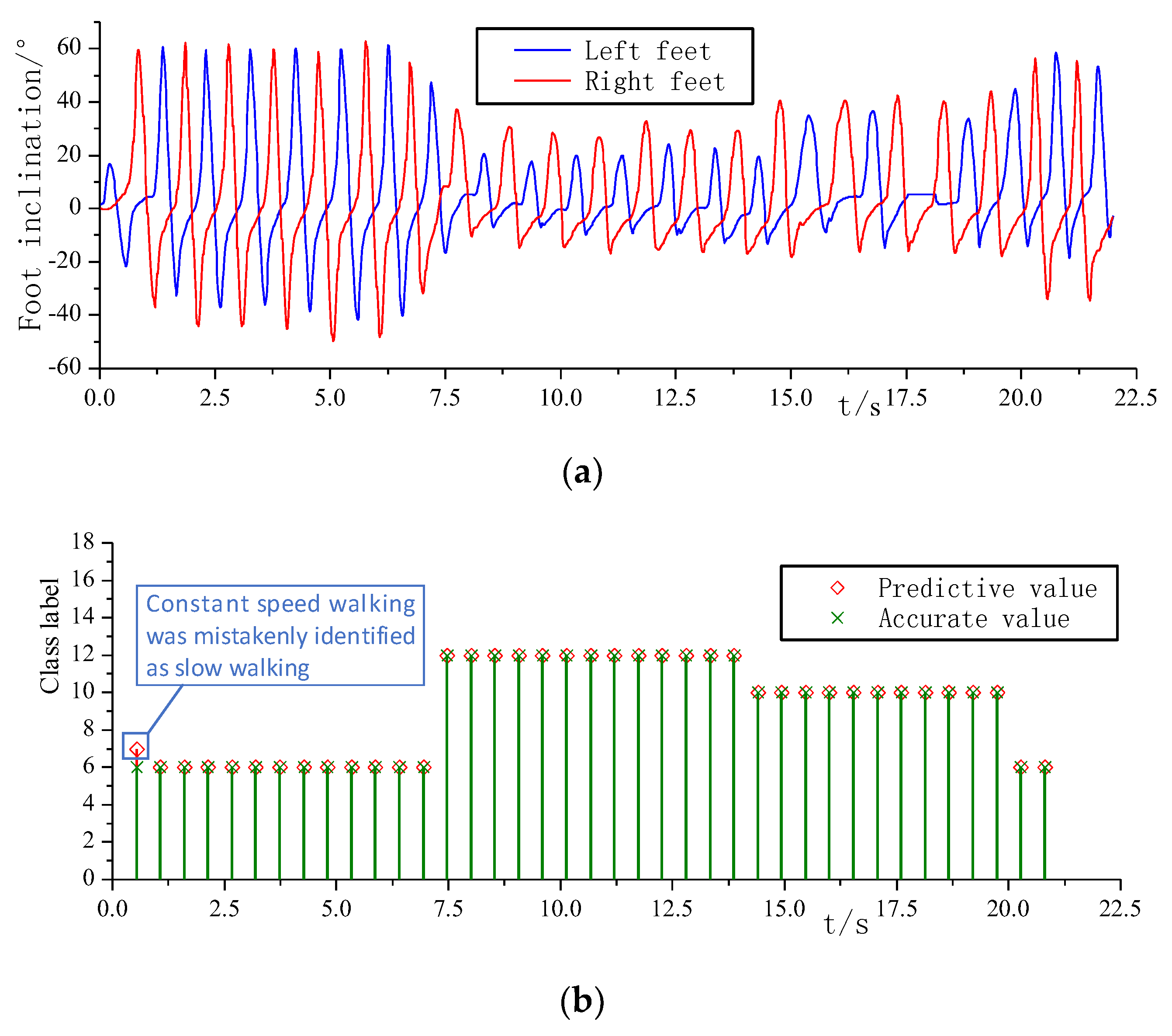

3.3.2. Mixed Motion Pattern Recognition Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| 20-Dimensional Static Sensitive Features | | 40-Dimensional Dynamic Sensitive Features | | | |
|---|---|---|---|---|---|
| 3 | 63 | 1 | 46 | 95 | 110 |
| 7 | 67 | 6 | 54 | 98 | 114 |
| 9 | 69 | 9 | 61 | 99 | 121 |
| 12 | 72 | 19 | 64 | 101 | 123 |
| 13 | 75 | 20 | 67 | 102 | 124 |
| 15 | 76 | 29 | 69 | 103 | 127 |
| 16 | 78 | 31 | 75 | 105 | 128 |
| 18 | 86 | 34 | 77 | 107 | 131 |
| 26 | 87 | 38 | 79 | 108 | 136 |
| 27 | 139 | 42 | 93 | 109 | 138 |
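Appendix A lists the numbers of the selected features. There are 20 static features and 40 dynamic ones, which matches the 20 and 40 input neurons of the static and dynamic networks. The minimal sketch below picks those subsets out of a full feature vector. It assumes the numbers are 1-based and follow the 141-feature table, and that the full vector is stored in that order. The list and function names are hypothetical.

```python
from typing import List, Sequence

# 1-based feature numbers copied from Appendix A.
STATIC_FEATURES = [3, 7, 9, 12, 13, 15, 16, 18, 26, 27,
                   63, 67, 69, 72, 75, 76, 78, 86, 87, 139]
DYNAMIC_FEATURES = [1, 6, 9, 19, 20, 29, 31, 34, 38, 42,
                    46, 54, 61, 64, 67, 69, 75, 77, 79, 93,
                    95, 98, 99, 101, 102, 103, 105, 107, 108, 109,
                    110, 114, 121, 123, 124, 127, 128, 131, 136, 138]


def select_features(full_vector: Sequence[float], feature_numbers: Sequence[int]) -> List[float]:
    """Pick features by 1-based number from the full 141-dimensional vector."""
    if len(full_vector) != 141:
        raise ValueError("expected the full 141-dimensional feature vector")
    return [full_vector[n - 1] for n in feature_numbers]
```

Some feature numbers (for example 9, 67, 69, and 75) appear in both subsets, so the same computed value can feed either network.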


| Category | Condition | Gait Pattern | Detailed Description | Label |
|---|---|---|---|---|
| Static posture | Flat road | Standing still | Standing upright | 1 |
| | | Standing with load | 5 kg load, standing still | 2 |
| | | Sitting | Seated, both lower legs vertical to the ground | 3 |
| | | Kneeling on one knee | Left leg bent, right knee touching the ground | 4 |
| Dynamic posture | Flat road | Fast walking | Walking speed 4.5 km/h | 5 |
| | | Constant-speed walking | Walking speed 3.0 km/h | 6 |
| | | Slow walking | Walking speed 2.0 km/h | 7 |
| | | Walking in place | Step frequency 1.0 Hz | 8 |
| | | Jogging | Running speed 6.0 km/h | 9 |
| | Stepped pavement | Continuous stepping up | Legs alternate as the leading leg | 10 |
| | | Continuous stepping down | Legs alternate as the leading leg | 11 |
| | | Single step up | Right leg leads, left leg follows | 12 |
| | | Single step down | Right leg leads, left leg follows | 13 |
| | Slope road | Uphill | Constant slope, constant-speed walking | 14 |
| | | Downhill | Constant slope, constant-speed walking | 15 |

| Number | Feature | Feature Content |
|---|---|---|
| 1–30 | Mean | Mean hip, knee, and ankle angles of both legs (6 dimensions); mean triaxial acceleration of the hip, knee, and ankle of both legs (18 dimensions); mean pressure at the three pressure points of the left and right feet (6 dimensions) |
| 31–60 | Variance | Variance of the hip, knee, and ankle angles of both legs (6 dimensions); variance of the triaxial acceleration of the hip, knee, and ankle of both legs (18 dimensions); variance of the three pressure points on both feet (6 dimensions) |
| 61–120 | Maximum value and range | Maximum and range of the hip, knee, and ankle angles (12 dimensions); maximum and range of the triaxial acceleration of the hip, knee, and ankle (36 dimensions); maximum and range of the three pressure points of the left and right feet (12 dimensions) |
| 121–128 | Correlation coefficient | Hip–knee angle correlation coefficient of the left and right legs (2 dimensions); knee–ankle angle correlation coefficient of the left and right legs (2 dimensions); acceleration correlation coefficient of the left and right feet in the x-z plane (1 dimension); angle correlation coefficient between the left and right hips (1 dimension); angle correlation coefficient between the left and right knees (1 dimension); angle correlation coefficient between the left and right ankles (1 dimension) |
| 129–138 | Fourier series | Fifth-order Fourier series of the hip and knee angles of the left leg (10 dimensions) |
| 139–140 | SMA | Signal magnitude area of the left and right foot acceleration signals (2 dimensions) |
| 141 | Wavelet energy entropy | Wavelet energy entropy of the left hip joint angle (1 dimension) |
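The statistical features in the table above are computed per sensor channel over a window: mean, variance, maximum, range, correlation coefficients, and Fourier terms. The sketch below illustrates them with the Python standard library only. Several points are assumptions not stated in the table: it uses population variance, it takes the correlation coefficient to be Pearson's r, and it represents each fifth-order Fourier feature as the amplitude of one of the first five harmonics, treating the window as one period. The window length, the sampling rate, and the function names are hypothetical.

```python
import cmath
import math
from statistics import fmean, pvariance
from typing import Dict, List, Sequence


def window_stats(x: Sequence[float]) -> Dict[str, float]:
    """Mean, variance, maximum, and range of one channel over a window."""
    return {
        "mean": fmean(x),
        "variance": pvariance(x),  # population variance (assumption)
        "max": max(x),
        "range": max(x) - min(x),
    }


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long channels (e.g. hip and knee angle)."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant channel has no defined correlation
    return sxy / math.sqrt(sxx * syy)


def fourier_amplitudes(x: Sequence[float], order: int = 5) -> List[float]:
    """Amplitudes of harmonics 1..order of the window, via a direct DFT."""
    n = len(x)
    amps = []
    for k in range(1, order + 1):
        c = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        amps.append(2.0 * abs(c) / n)
    return amps
```

Applied to the left hip and left knee angles, `fourier_amplitudes` yields 5 + 5 = 10 values, the same count as features 129–138. Whether the paper uses amplitudes, sine/cosine coefficients, or another parameterization is not stated here.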

| Layer | First Network | Static Neural Network | Dynamic Neural Network |
|---|---|---|---|
| Input layer | 5 | 20 | 40 |
| Hidden layer | 25 | 100 | 200 |
| Output layer | 1 | 1 | 1 |
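The table above gives only the layer sizes of the three networks. Below is a minimal sketch of the two-stage structure under explicit assumptions. The first network decides between static posture (labels 1–4) and dynamic posture (labels 5–15). The matching second network then maps its feature subset to a gait label. Everything beyond the layer sizes is assumed, not taken from the paper: the sigmoid hidden units, the linear single output, the 0.5 routing threshold, and rounding the single output to the nearest label. The weights are random and untrained; backpropagation training is not shown.

```python
import math
import random
from typing import Callable, List, Sequence

Net = Callable[[Sequence[float]], float]


class MLP:
    """One hidden layer of sigmoid units feeding a single linear output neuron."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.n_in = n_in
        # Small random initial weights; training is not shown.
        self.w1: List[List[float]] = [
            [rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)
        ]
        self.b1: List[float] = [0.0] * n_hidden
        self.w2: List[float] = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def n_params(self) -> int:
        """Weights plus biases of both layers."""
        return len(self.w1) * self.n_in + len(self.b1) + len(self.w2) + 1

    def forward(self, x: Sequence[float]) -> float:
        if len(x) != self.n_in:
            raise ValueError(f"expected {self.n_in} inputs, got {len(x)}")
        hidden = [
            1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(row, x)) + b)))
            for row, b in zip(self.w1, self.b1)
        ]
        return sum(w * h for w, h in zip(self.w2, hidden)) + self.b2


# Input/hidden/output sizes taken from the network-structure table.
first_net = MLP(5, 25)
static_net = MLP(20, 100)
dynamic_net = MLP(40, 200)


def classify(first_x: Sequence[float], static_x: Sequence[float], dynamic_x: Sequence[float],
             first: Net = first_net.forward, static: Net = static_net.forward,
             dynamic: Net = dynamic_net.forward, threshold: float = 0.5) -> int:
    """Hypothetical two-stage routing: static labels 1-4, dynamic labels 5-15."""
    if first(first_x) < threshold:
        # Static posture: round the single output to the nearest static label.
        return min(4, max(1, round(static(static_x))))
    # Dynamic posture: round the single output to the nearest dynamic label.
    return min(15, max(5, round(dynamic(dynamic_x))))
```

With these sizes, the first, static, and dynamic networks have 176, 2201, and 8401 trainable parameters respectively (weights plus biases). The networks are passed into `classify` as callables, so trained models or stubs can be substituted without changing the routing logic.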

| Dataset | First Neural Network | Static Neural Network | Dynamic Neural Network | Overall Model |
|---|---|---|---|---|
| Training | 100% | 100% | 100% | 100% |
| Test | 100% | 93.57% | 100% | 98.28% |

|  | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 88 | 1 | | | | | | | | | | | | | |
| 2 | 26 | 123 | | | | | | | | | | | | | |
| 3 | | | 84 | | | | | | | | | | | | |
| 4 | | | | 98 | | | | | | | | | | | |
| 5 | | | | | 104 | | | | | | | | | | |
| 6 | | | | | | 84 | | | | | | | | | |
| 7 | | | | | | | 125 | | | | | | | | |
| 8 | | | | | | | | 112 | | | | | | | |
| 9 | | | | | | | | | 104 | | | | | | |
| 10 | | | | | | | | | | 97 | | | | | |
| 11 | | | | | | | | | | | 87 | | | | |
| 12 | | | | | | | | | | | | 127 | | | |
| 13 | | | | | | | | | | | | | 126 | | |
| 14 | | | | | | | | | | | | | | 95 | |
| 15 | | | | | | | | | | | | | | | 91 |
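The test accuracies in the recognition-accuracy table can be reproduced from the confusion matrix above as a consistency check. The sketch below hard-codes the 15 diagonal counts and the two off-diagonal counts (1 in row 1, column 2; 26 in row 2, column 1). It then computes accuracy over all classes, over the static classes 1–4, and over the dynamic classes 5–15. The table does not say whether rows are true or predicted labels, but both off-diagonal counts lie inside the static block, so the results are the same either way.

```python
from typing import List

# Diagonal (correctly recognized) counts for labels 1..15, from the confusion matrix.
DIAGONAL = [88, 123, 84, 98, 104, 84, 125, 112, 104, 97, 87, 127, 126, 95, 91]


def build_matrix() -> List[List[int]]:
    """15 x 15 confusion matrix with the two reported off-diagonal entries."""
    m = [[0] * 15 for _ in range(15)]
    for i, count in enumerate(DIAGONAL):
        m[i][i] = count
    m[0][1] = 1   # row 1, column 2
    m[1][0] = 26  # row 2, column 1
    return m


def accuracy(m: List[List[int]], classes: range) -> float:
    """Fraction of samples on the diagonal, restricted to the given 1-based classes."""
    correct = sum(m[c - 1][c - 1] for c in classes)
    total = sum(sum(m[c - 1]) for c in classes)
    return correct / total


m = build_matrix()
print(f"overall: {accuracy(m, range(1, 16)):.2%}")  # overall: 98.28%
print(f"static:  {accuracy(m, range(1, 5)):.2%}")   # static:  93.57%
print(f"dynamic: {accuracy(m, range(5, 16)):.2%}")  # dynamic: 100.00%
```

The overall figure is 1545 correct out of 1572 samples, and the static figure is 393 out of 420. Nearly all errors come from confusing standing still (label 1) with standing with a load (label 2).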

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Song, J.; Zhu, A.; Tu, Y.; Wang, Y.; Arif, M.A.; Shen, H.; Shen, Z.; Zhang, X.; Cao, G. Human Body Mixed Motion Pattern Recognition Method Based on Multi-Source Feature Parameter Fusion. Sensors 2020, 20, 537. https://doi.org/10.3390/s20020537


Chicago/Turabian style: Song, Jiyuan, Aibin Zhu, Yao Tu, Yingxu Wang, Muhammad Affan Arif, Huang Shen, Zhitao Shen, Xiaodong Zhang, and Guangzhong Cao. 2020. "Human Body Mixed Motion Pattern Recognition Method Based on Multi-Source Feature Parameter Fusion." Sensors 20, no. 2: 537. https://doi.org/10.3390/s20020537