Earthquake-Induced Building-Damage Mapping Using Explainable AI (XAI)

Abstract

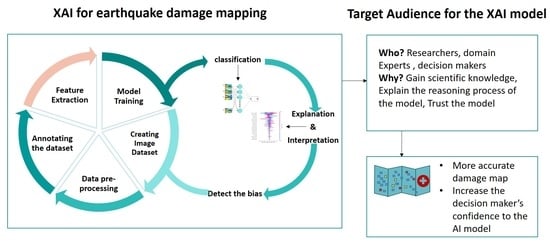

1. Introduction

2. Related Work

| Main Findings | Features | References |
|---|---|---|
| Detected the collapsed buildings | Shape | Gamba et al. [21] |
| | Radiometric and morphological | Pesaresi et al. [26] |
| | DSM | Turker et al. [27] |
| | Colour, shape, texture, height | Yu et al. [33] |
| Classified buildings as damaged and non-damaged | Spectral, textural, and spatial relations | Li et al. [34] |
| | Variance and direction of edge intensity, texture | Mitomi et al. [35] |
| | Spectral, textural, and structural | Cooner et al. [36] |
| | Textural and colour | Rasika et al. [37] |
| | Spectral and textural | Rathje et al. [25] |
| | Spectral, textural, and height | Ural et al. [32] |
| | Geometric | Wang et al. [38] |
| Classified buildings as damaged, destroyed, possibly damaged, or no damage | Spectral, intensity, coherence, and DEM | Adriano et al. [20] |
| Classified the image into debris, non-debris, or no change | Spectral, textural, brightness, and shape | Khodaverdizahraee et al. [39] |
| Classified buildings as collapsed, partially collapsed, or undamaged | Normalised DSM, pixel intensity, and segment shape | Rezaeian et al. [28]; Rezaeian [29] |
| Classified buildings as non-damaged, slightly damaged, low level of damage, | SAR texture | Dell’Acqua et al. [19] |
| Identified damaged areas | Spectral | Syifa et al. [9] |

3. Method

3.1. Multilayer Perceptron

3.2. Feature Extraction

3.3. Feature Analysis

3.4. Shapley Additive Explanations (SHAP)

3.4.1. SHAP Summary Plot

3.4.2. The Individual Force-Plot
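
A force plot decomposes one prediction into a base value plus one signed contribution per feature, and those contributions add up exactly to the model output (the local-accuracy property of SHAP [55], inherited from Shapley values [54]). The sketch below computes exact Shapley values by enumerating every feature coalition for a toy model. It rests on a simplifying assumption: a feature that is "absent" from a coalition takes its value from a single baseline vector, whereas SHAP explainers usually average over a background dataset. The function `toy_model` and all inputs are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline).

    A feature outside a coalition takes its baseline value (simplifying assumption).
    Cost grows as 2^n model calls per feature, so this only suits a few features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # Hypothetical score: additive term on feature 0, interaction of features 1 and 2.
    return 2.0 * z[0] + 3.0 * z[1] * z[2]
```

For `x = [1.0, 2.0, 3.0]` and an all-zero baseline, `shapley_values(toy_model, x, [0.0, 0.0, 0.0])` returns approximately `[2.0, 9.0, 9.0]`: the interaction term is split evenly between features 1 and 2, and the three contributions sum to `toy_model(x) - toy_model(baseline) = 20`, which is exactly the balance a force plot draws.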

4. Experiment and Results

4.1. Study Area

4.2. Data

4.3. Building Footprint

4.4. Copernicus Emergency Management Services

5. Discussions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Baker, S. San Francisco in ruins: The 1906 serial photographs of George R. Lawrence. Landscape 1989, 30, 9–14.

2. Duan, F.; Gong, H.; Zhao, W. Collapsed houses automatic identification based on texture changes of post-earthquake aerial remote sensing image. In Proceedings of the 2010 18th International Conference on Geoinformatics, Beijing, China, 18–20 June 2010; pp. 1–5.

3. Kerle, N.; Nex, F.; Gerke, M.; Duarte, D.; Vetrivel, A. UAV-based structural damage mapping: A review. ISPRS Int. J. Geo-Inf. 2020, 9, 14.

4. Janalipour, M.; Mohammadzadeh, A. Building damage detection using object-based image analysis and ANFIS from high-resolution image (Case study: BAM earthquake, Iran). IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 9, 1937–1945.

5. Ma, H.; Liu, Y.; Ren, Y.; Wang, D.; Yu, L.; Yu, J. Improved CNN classification method for groups of buildings damaged by earthquake, based on high resolution remote sensing images. Remote Sens. 2020, 12, 260.

6. Balz, T.; Liao, M. Building-damage detection using post-seismic high-resolution SAR satellite data. Int. J. Remote Sens. 2010, 31, 3369–3391.

7. Ma, Y.; Chen, F.; Liu, J.; He, Y.; Duan, J.; Li, X. An automatic procedure for early disaster change mapping based on optical remote sensing. Remote Sens. 2016, 8, 272.

8. Pham, T.-T.-H.; Apparicio, P.; Gomez, C.; Weber, C.; Mathon, D. Towards a rapid automatic detection of building damage using remote sensing for disaster management: The 2010 Haiti earthquake. Disaster Prev. Manag. 2014, 23, 53–66.

9. Syifa, M.; Kadavi, P.R.; Lee, C.-W. An artificial intelligence application for post-earthquake damage mapping in Palu, central Sulawesi, Indonesia. Sensors 2019, 19, 542.

10. Abdollahi, A.; Pradhan, B.; Alamri, A.M. An ensemble architecture of deep convolutional Segnet and Unet networks for building semantic segmentation from high-resolution aerial images. Geocarto Int. 2020, 1–16.

11. Dikshit, A.; Pradhan, B.; Alamri, A.M. Pathways and challenges of the application of artificial intelligence to geohazards modelling. Gondwana Res. 2020.

12. Bai, Y.; Mas, E.; Koshimura, S. Towards operational satellite-based damage-mapping using U-Net convolutional network: A case study of 2011 Tohoku earthquake-tsunami. Remote Sens. 2018, 10, 1626.

13. Ahmad, K.; Maabreh, M.; Ghaly, M.; Khan, K.; Qadir, J.; Al-Fuqaha, A. Developing Future Human-Centered Smart Cities: Critical Analysis of Smart City Security, Interpretability, and Ethical Challenges. arXiv 2020, arXiv:2012.09110.

14. Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

15. Adadi, A.; Berrada, M. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

16. Gunning, D. Explainable artificial intelligence (XAI). Def. Adv. Res. Proj. Agency Nd Web 2017, 2. Available online: https://www.cc.gatech.edu/~alanwags/DLAI2016/(Gunning)%20IJCAI-16%20DLAI%20WS.pdf (accessed on 20 November 2020).

17. Farabet, C.; Couprie, C.; Najman, L.; LeCun, Y. Learning hierarchical features for scene labeling. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1915–1929.

18. Dong, L.; Shan, J. A comprehensive review of earthquake-induced building damage detection with remote sensing techniques. ISPRS J. Photogramm. Remote Sens. 2013, 84, 85–99.

19. Dell’Acqua, F.; Gamba, P. Remote sensing and earthquake damage assessment: Experiences, limits, and perspectives. Proc. IEEE 2012, 100, 2876–2890.

20. Adriano, B.; Xia, J.; Baier, G.; Yokoya, N.; Koshimura, S. Multi-source data fusion based on ensemble learning for rapid building damage mapping during the 2018 Sulawesi earthquake and tsunami in Palu, Indonesia. Remote Sens. 2019, 11, 886.

21. Gamba, P.; Casciati, F. GIS and image understanding for near-real-time earthquake damage assessment. Photogramm. Eng. Remote Sens. 1998, 64, 987–994.

22. Yusuf, Y.; Matsuoka, M.; Yamazaki, F. Damage assessment after 2001 Gujarat earthquake using Landsat-7 satellite images. J. Indian Soc. Remote Sens. 2001, 29, 17–22.

23. Sugiyama, M.I.T.G.T.; Abe, H.S.K. Detection of Earthquake Damaged Areas from Aerial Photographs by Using Color and Edge Information. In Proceedings of the 5th Asian Conference on Computer Vision, Melbourne, Australia, 23–25 January 2002.

24. Zhang, J.-F.; Xie, L.-L.; Tao, X.-X. Change detection of remote sensing image for earthquake-damaged buildings and its application in seismic disaster assessment. J. Nat. Disasters 2002, 11, 59–64.

25. Rathje, E.M.; Crawford, M.; Woo, K.; Neuenschwander, A. Damage patterns from satellite images of the 2003 Bam, Iran, earthquake. Earthq. Spectra 2005, 21, 295–307.

26. Pesaresi, M.; Gerhardinger, A.; Haag, F. Rapid damage assessment of built-up structures using VHR satellite data in tsunami-affected areas. Int. J. Remote Sens. 2007, 28, 3013–3036.

27. Turker, M.; Cetinkaya, B. Automatic detection of earthquake-damaged buildings using DEMs created from pre-and post-earthquake stereo aerial photographs. Int. J. Remote Sens. 2005, 26, 823–832.

28. Rezaeian, M.; Gruen, A. Automatic Classification of Collapsed Buildings Using Object and Image Space Features. In Geomatics Solutions for Disaster Management; Springer: Berlin/Heidelberg, Germany, 2007; pp. 135–148.

29. Rezaeian, M. Assessment of Earthquake Damages by Image-Based Techniques; ETH Zurich: Zurich, Switzerland, 2010; Volume 107.

30. Stramondo, S.; Bignami, C.; Chini, M.; Pierdicca, N.; Tertulliani, A. Satellite radar and optical remote sensing for earthquake damage detection: Results from different case studies. Int. J. Remote Sens. 2006, 27, 4433–4447.

31. Rehor, M.; Bähr, H.P.; Tarsha-Kurdi, F.; Landes, T.; Grussenmeyer, P. Contribution of two plane detection algorithms to recognition of intact and damaged buildings in lidar data. Photogramm. Rec. 2008, 23, 441–456.

32. Ural, S.; Hussain, E.; Kim, K.; Fu, C.-S.; Shan, J. Building extraction and rubble mapping for city Port-au-Prince post-2010 earthquake with GeoEye-1 imagery and lidar data. Photogramm. Eng. Remote Sens. 2011, 77, 1011–1023.

33. Haiyang, Y.; Gang, C.; Xiaosan, G. Earthquake-collapsed building extraction from LiDAR and aerophotograph based on OBIA. In Proceedings of the 2nd International Conference on Information Science and Engineering, Hangzhou, China, 4–6 December 2010; pp. 2034–2037.

34. Li, S.; Wu, H.; Wan, D.; Zhu, J. An effective feature selection method for hyperspectral image classification based on genetic algorithm and support vector machine. Knowl. Based Syst. 2011, 24, 40–48.

35. Mitomi, H.; Matsuoka, M.; Yamazaki, F. Application of automated damage detection of buildings due to earthquakes by panchromatic television images. In Proceedings of the 7th US National Conference on Earthquake Engineering, Boston, MA, USA, 21–25 July 2002.

36. Cooner, A.J.; Shao, Y.; Campbell, J.B. Detection of urban damage using remote sensing and machine learning algorithms: Revisiting the 2010 Haiti earthquake. Remote Sens. 2016, 8, 868.

37. Rasika, A.; Kerle, N.; Heuel, S. Multi-scale texture and color segmentation of oblique airborne video data for damage classification. In Proceedings of the ISPRS 2006: ISPRS Midterm Symposium 2006 Remote Sensing: From Pixels to Processes, Enschede, The Netherlands, 8–11 May 2006; pp. 8–11.

38. Wang, T.-L.; Jin, Y.-Q. Postearthquake building damage assessment using multi-mutual information from pre-event optical image and postevent SAR image. IEEE Geosci. Remote Sens. Lett. 2011, 9, 452–456.

39. Khodaverdizahraee, N.; Rastiveis, H.; Jouybari, A. Segment-by-segment comparison technique for earthquake-induced building damage map generation using satellite imagery. Int. J. Disaster Risk Reduct. 2020, 46, 101505.

40. Tomowski, D.; Klonus, S.; Ehlers, M.; Michel, U.; Reinartz, P. Change visualization through a texture-based analysis approach for disaster applications. In Proceedings of the ISPRS Proceedings, Vienna, Austria, 5–7 July 2010; pp. 1–6.

41. Mansouri, B.; Hamednia, Y. A soft computing method for damage mapping using VHR optical satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4935–4941.

42. Javadi, A.A.; Farmani, R.; Tan, T.P. A hybrid intelligent genetic algorithm. Adv. Eng. Inform. 2005, 19, 255–262.

43. Jena, R.; Pradhan, B.; Beydoun, G.; Alamri, A.M.; Sofyan, H. Earthquake hazard and risk assessment using machine learning approaches at Palu, Indonesia. Sci. Total Environ. 2020, 749, 141582.

44. Moya, L.; Muhari, A.; Adriano, B.; Koshimura, S.; Mas, E.; Marval-Perez, L.R.; Yokoya, N. Detecting urban changes using phase correlation and ℓ1-based sparse model for early disaster response: A case study of the 2018 Sulawesi Indonesia earthquake-tsunami. Remote Sens. Environ. 2020, 242, 111743.

45. Duangsoithong, R.; Windeatt, T. Relevant and redundant feature analysis with ensemble classification. In Proceedings of the 2009 Seventh International Conference on Advances in Pattern Recognition, Kolkata, India, 4–6 February 2009; pp. 247–250.

46. Bolon-Canedo, V.; Remeseiro, B. Feature selection in image analysis: A survey. Artif. Intell. Rev. 2020, 53, 2905–2931.

47. Noriega, L. Multilayer perceptron tutorial. Sch. Comput. Staffs. Univ. 2005. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.608.2530&rep=rep1&type=pdf (accessed on 20 April 2021).

48. Tang, J.; Deng, C.; Huang, G.-B. Extreme learning machine for multilayer perceptron. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 809–821.

49. Neaupane, K.M.; Achet, S.H. Use of backpropagation neural network for landslide monitoring: A case study in the higher Himalaya. Eng. Geol. 2004, 74, 213–226.

50. Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 610–621.

51. Kato, L.V. Integrating Openstreetmap Data in Object Based Landcover and Landuse Classification for Disaster Recovery. Master’s Thesis, University of Twente, Twente, The Netherlands, 2019.

52. Guide, U. Definiens AG. Ger. Defin. Dev. XD 2009, 2. Available online: https://www.imperial.ac.uk/media/imperial-college/medicine/facilities/film/Definiens-Developer-User-Guide-XD-2.0.4.pdf (accessed on 18 December 2020).

53. Ranjbar, H.R.; Ardalan, A.A.; Dehghani, H.; Saradjian, M.R. Using high-resolution satellite imagery to provide a relief priority map after earthquake. Nat. Hazards 2018, 90, 1087–1113.

54. Shapley, L.S. A value for n-person games. Contrib. Theory Games 1953, 2, 307–317.

55. Lundberg, S.; Lee, S.-I. A unified approach to interpreting model predictions. arXiv 2017, arXiv:1705.07874.

56. Molnar, C. Interpretable Machine Learning; Lulu Press: Morrisville, NC, USA, 2020.

57. Situation Update No. 15-Final 7.4 Earthquake and Tsunami. Available online: https://ahacentre.org/situation-update/situation-update-no-15-sulawesi-earthquake-26-october-2018/ (accessed on 20 November 2020).

58. Copernicus Emergency Management Service (© 2015 European Union), [EMSR 317] Palu: Grading Map. Available online: https://emergency.copernicus.eu/mapping/list-of-components/EMSR317 (accessed on 16 July 2019).

59. Charter Space and Major Disasters. Available online: https://disasterscharter.org/web/guest/activations/-/article/earthquake-in-indonesia-activation-587 (accessed on 28 January 2021).

60. Digital Globe: Satellite Imagery for Natural Disasters. Available online: https://www.digitalglobe.com/ecosystem/open-data (accessed on 16 July 2019).

61. Missing Maps. Available online: http://www.missingmaps.org/ (accessed on 20 November 2020).

62. MapSwipe. Available online: http://mapswipe.org/ (accessed on 20 November 2020).

63. OpenStreetMap Contributors. Available online: https://www.openstreetmap.org (accessed on 16 July 2019).

64. Scholz, S.; Knight, P.; Eckle, M.; Marx, S.; Zipf, A. Volunteered geographic information for disaster risk reduction—The missing maps approach and its potential within the red cross and red crescent movement. Remote Sens. 2018, 10, 1239.

65. Esch, T.; Thiel, M.; Schenk, A.; Roth, A.; Muller, A.; Dech, S. Delineation of urban footprints from TerraSAR-X data by analyzing speckle characteristics and intensity information. IEEE Trans. Geosci. Remote Sens. 2009, 48, 905–916.

66. Esch, T.; Schenk, A.; Ullmann, T.; Thiel, M.; Roth, A.; Dech, S. Characterization of land cover types in TerraSAR-X images by combined analysis of speckle statistics and intensity information. IEEE Trans. Geosci. Remote Sens. 2011, 49, 1911–1925.

67. Copernicus Emergency Management Service. Available online: https://emergency.copernicus.eu/ (accessed on 23 March 2021).

68. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

69. Janitza, S.; Strobl, C.; Boulesteix, A.-L. An AUC-based permutation variable importance measure for random forests. BMC Bioinform. 2013, 14, 1–11.

70. Ma, L.; Fu, T.; Blaschke, T.; Li, M.; Tiede, D.; Zhou, Z.; Ma, X.; Chen, D. Evaluation of feature selection methods for object-based land cover mapping of unmanned aerial vehicle imagery using random forest and support vector machine classifiers. ISPRS Int. J. Geo-Inf. 2017, 6, 51.

71. Bialas, J.; Oommen, T.; Havens, T.C. Optimal segmentation of high spatial resolution images for the classification of buildings using random forests. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101895.

72. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

| Hyperparameter | Value |
|---|---|
| Learning Rate | 0.01 |
| Hidden Layer | 2 |
| Activation Function | ReLU |
| Solver | Adam |
| Input Nodes | 50 |
| Iterations | 500 |
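
The hyperparameters above (learning rate 0.01, ReLU activation, Adam solver, 500 iterations) fully determine the optimiser but not the layer widths. The NumPy sketch below trains a binary collapsed/non-collapsed MLP with those settings. Reading "Hidden Layer: 2" and "Input Nodes: 50" as two hidden layers of 50 units each is our assumption, as are the He initialisation, full-batch training, and the single sigmoid output; it is an illustration, not the authors' implementation. Libraries such as scikit-learn expose comparable options on `MLPClassifier` (`hidden_layer_sizes`, `activation`, `solver`, `learning_rate_init`, `max_iter`).

```python
import numpy as np

# Values from the hyperparameter table; (50, 50) hidden sizes are an ASSUMED reading.
LEARNING_RATE = 0.01
HIDDEN_SIZES = (50, 50)
ITERATIONS = 500


def init_params(n_features, rng):
    """He-initialised weights for a ReLU network with one sigmoid output unit."""
    sizes = [n_features, *HIDDEN_SIZES, 1]
    return [[rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out)), np.zeros(fan_out)]
            for fan_in, fan_out in zip(sizes[:-1], sizes[1:])]


def forward(params, X):
    """Return the layer activations and P(collapsed) for each row of X."""
    acts = [X]
    h = X
    for W, b in params[:-1]:
        h = np.maximum(0.0, h @ W + b)  # ReLU hidden layer
        acts.append(h)
    W, b = params[-1]
    logits = np.clip(h @ W + b, -30.0, 30.0)  # clip to avoid overflow in exp
    return acts, (1.0 / (1.0 + np.exp(-logits))).ravel()


def train(X, y, seed=0):
    """Full-batch training on mean binary cross-entropy with the Adam optimiser."""
    rng = np.random.default_rng(seed)
    params = init_params(X.shape[1], rng)
    m = [[np.zeros_like(p) for p in layer] for layer in params]  # first moments
    v = [[np.zeros_like(p) for p in layer] for layer in params]  # second moments
    beta1, beta2, eps = 0.9, 0.999, 1e-8
    n = X.shape[0]
    for t in range(1, ITERATIONS + 1):
        acts, p = forward(params, X)
        delta = ((p - y) / n)[:, None]  # dLoss/dlogits for mean BCE
        grads = [None] * len(params)
        for i in reversed(range(len(params))):
            W = params[i][0]
            grads[i] = [acts[i].T @ delta, delta.sum(axis=0)]
            if i > 0:
                delta = (delta @ W.T) * (acts[i] > 0)  # back through ReLU
        for i, layer_grads in enumerate(grads):
            for j, g in enumerate(layer_grads):
                m[i][j] = beta1 * m[i][j] + (1 - beta1) * g
                v[i][j] = beta2 * v[i][j] + (1 - beta2) * g ** 2
                m_hat = m[i][j] / (1 - beta1 ** t)  # bias-corrected moments
                v_hat = v[i][j] / (1 - beta2 ** t)
                params[i][j] = params[i][j] - LEARNING_RATE * m_hat / (np.sqrt(v_hat) + eps)
    return params


def predict(params, X):
    """1 = collapsed, 0 = non-collapsed, using a 0.5 probability threshold."""
    return (forward(params, X)[1] >= 0.5).astype(int)
```

Here `X` would hold one row of object features per building and `y` a float array of 0/1 labels; the 0.5 threshold is a default choice and could be tuned when the classes are imbalanced.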

| Number | Feature Name | Mathematical Expression |
|---|---|---|
| 1 | GLCM Dissimilarity | |
| 2 | GLCM Contrast | |
| 3 | GLCM Homogeneity | |
| 5 | GLCM Entropy | |
| 6 | GLDV Entropy | |
| 7 | GLDV Mean | |
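
The texture features listed above are derived from the grey-level co-occurrence matrix (GLCM) of Haralick et al. [50] and from the grey-level difference vector (GLDV), the GLCM summed along its diagonals. The sketch below uses the common textbook definitions, with $P_{ij}$ the normalised GLCM and $V_k = \sum_{|i-j|=k} P_{ij}$: contrast $\sum P_{ij}(i-j)^2$, dissimilarity $\sum P_{ij}|i-j|$, homogeneity $\sum P_{ij}/(1+(i-j)^2)$, entropy $-\sum P_{ij}\ln P_{ij}$, GLDV mean $\sum k V_k$, and GLDV entropy $-\sum V_k \ln V_k$. That these match the paper's exact expressions, the symmetric single-offset GLCM, and the function names are assumptions.

```python
import numpy as np


def glcm(img, levels, dx=1, dy=0, symmetric=True):
    """Normalised grey-level co-occurrence matrix for a non-negative offset (dx, dy)."""
    img = np.asarray(img, dtype=int)
    h, w = img.shape
    ref = img[: h - dy, : w - dx]  # reference pixels
    nbr = img[dy:, dx:]            # neighbours at the chosen offset
    P = np.zeros((levels, levels))
    np.add.at(P, (ref.ravel(), nbr.ravel()), 1.0)  # count each (ref, nbr) pair
    if symmetric:
        P = P + P.T  # count each pair in both directions
    return P / P.sum()


def texture_features(P):
    """GLCM and GLDV statistics of a normalised co-occurrence matrix P."""
    levels = P.shape[0]
    i, j = np.indices((levels, levels))
    nz = P > 0  # skip zero cells so 0 * log(0) is treated as 0
    feats = {
        "glcm_contrast": float(np.sum(P * (i - j) ** 2)),
        "glcm_dissimilarity": float(np.sum(P * np.abs(i - j))),
        "glcm_homogeneity": float(np.sum(P / (1.0 + (i - j) ** 2))),
        "glcm_entropy": float(-np.sum(P[nz] * np.log(P[nz]))),
    }
    # GLDV: probability mass of each absolute grey-level difference k = |i - j|
    V = np.bincount(np.abs(i - j).ravel(), weights=P.ravel(), minlength=levels)
    vz = V > 0
    feats["gldv_mean"] = float(np.sum(np.arange(levels) * V))
    feats["gldv_entropy"] = float(-np.sum(V[vz] * np.log(V[vz])))
    return feats
```

Note that with these definitions the GLDV mean equals GLCM dissimilarity, since both weight each probability by $|i-j|$. In practice a building segment would first be quantised to a small number of grey levels before calling `glcm`.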

| Feature Name | Mathematical Expression |
|---|---|
| Maximum Differences | |
| Brightness | |
| Mean Layer | |
| Standard Deviation | |
| Skewness | |
| Contrast to Neighbour Pixels | 1000 |
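
Most of the spectral features above are per-segment statistics of the pixel values inside each building object. The sketch below computes them for one segment under common object-based definitions, which are assumptions here: mean layer is the per-band mean, brightness is the equal-weight average of the band means, maximum difference is the largest gap between band means divided by brightness, and standard deviation and skewness are population statistics per band. "Contrast to Neighbour Pixels" is omitted because its expression is not recoverable from the table.

```python
import numpy as np


def spectral_features(image, mask):
    """Object-level spectral statistics for one segment.

    image: array of shape (bands, H, W); mask: boolean (H, W) marking the segment.
    """
    pixels = image[:, mask].astype(float)      # (bands, n_pixels)
    mean_layer = pixels.mean(axis=1)           # mean of each band inside the object
    std = pixels.std(axis=1)                   # population standard deviation per band
    centred = pixels - mean_layer[:, None]
    safe_std = np.where(std > 0, std, 1.0)     # avoid division by zero for flat bands
    skewness = np.where(std > 0, (centred ** 3).mean(axis=1) / safe_std ** 3, 0.0)
    brightness = float(mean_layer.mean())      # equal band weights (assumption)
    # max |mean_i - mean_j| over band pairs equals max(mean) - min(mean)
    max_diff = float((mean_layer.max() - mean_layer.min()) / brightness) if brightness else 0.0
    return {
        "mean_layer": mean_layer,
        "std": std,
        "skewness": skewness,
        "brightness": brightness,
        "max_diff": max_diff,
    }
```

For the RGB imagery used in this study, `image` would have three bands and `mask` would come from rasterising one OSM building footprint.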

| Feature Name | Mathematical Expression |
|---|---|
| Rectangular fit | |
| Asymmetry | |
| Density | |
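
Two of the shape features above can be written in terms of the variances of a segment's pixel coordinates. The sketch below assumes the common object-based definitions: density $= \sqrt{n}/(1+\sqrt{\mathrm{Var}X+\mathrm{Var}Y})$ for a segment of $n$ pixels, and asymmetry $= (\lambda_1-\lambda_2)/(\lambda_1+\lambda_2)$, where $\lambda_1 \ge \lambda_2$ are the eigenvalues of the coordinate covariance matrix (0 for a compact, round or square object, approaching 1 for an elongated one). Rectangular fit is left out because it needs a fitted rectangle rather than moments alone; the function name is hypothetical.

```python
import numpy as np


def shape_features(mask):
    """Density and asymmetry of a binary segment from its pixel-coordinate moments."""
    ys, xs = np.nonzero(mask)
    n = xs.size
    var_x, var_y = xs.var(), ys.var()
    cov_xy = np.mean((xs - xs.mean()) * (ys - ys.mean()))
    total = var_x + var_y  # equals lambda1 + lambda2
    density = np.sqrt(n) / (1.0 + np.sqrt(total))
    if total == 0:
        asymmetry = 0.0  # single-pixel segment
    else:
        # lambda1 - lambda2 = 2 * sqrt(((VarX - VarY) / 2)^2 + CovXY^2), never negative
        asymmetry = 2.0 * np.sqrt(((var_x - var_y) / 2.0) ** 2 + cov_xy ** 2) / total
    return {"density": float(density), "asymmetry": float(asymmetry)}
```

A compact, roof-like footprint yields high density and low asymmetry, while a thin strip of rubble or a partial outline yields the opposite, which is why such measures can help separate collapsed from intact buildings.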

| Dataset | Source | Type | Acquisition Date | Resolution (m) |
|---|---|---|---|---|
| Indonesia earthquake and tsunami | DigitalGlobe | Raster (RGB) | 2 October 2018 | 0.5 (resampled) |
| Built-up area | OSM | Shapefile (polygon) | 16 July 2019 | - |
| EMSR317 earthquake in Indonesia | Copernicus | Shapefile (point) | 30 September 2018 | - |

| This Study | Copernicus EMS Guideline |
|---|---|
| Non-Collapsed (Number: 6373) | No visible damage |
| | Possibly damaged: uncertain interpretation due to image quality; presence of possible damage proxies; building surrounded by damaged/destroyed buildings |
| | Damaged: Minor: the roof remains largely intact but presents partial damage. Major: partial collapse of the roof; serious failure of walls |
| Collapsed (Number: 2135) | Destroyed: total collapse or collapse of part of the building (>50%); building structure not distinguishable (the walls have been destroyed or collapsed) |
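
The table collapses the four Copernicus EMS damage grades into the two classes used here. A minimal sketch of that relabelling, as we read it from the table, is shown below. The lower-case grade strings and the function names are hypothetical: the attribute values actually stored in the EMSR317 point layer may be spelled differently and would need to be matched.

```python
# Assumed mapping read off the table above; label strings are hypothetical.
CEMS_TO_BINARY = {
    "no visible damage": "Non-Collapsed",
    "possibly damaged": "Non-Collapsed",
    "damaged": "Non-Collapsed",
    "destroyed": "Collapsed",
}


def to_binary(grade: str) -> str:
    """Map one Copernicus EMS damage grade to this study's binary label."""
    key = grade.strip().lower()
    if key not in CEMS_TO_BINARY:
        raise ValueError(f"unknown damage grade: {grade!r}")
    return CEMS_TO_BINARY[key]


def count_classes(grades):
    """Count Collapsed / Non-Collapsed labels for an iterable of CEMS grades."""
    counts = {"Collapsed": 0, "Non-Collapsed": 0}
    for grade in grades:
        counts[to_binary(grade)] += 1
    return counts
```

Raising on an unknown grade, rather than silently defaulting to one class, makes spelling mismatches in the source attributes visible before they distort the class counts.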

| Study Area | Number of Collapsed Buildings | Number of Non-Collapsed Buildings |
|---|---|---|
| Train | 1854 | 5638 |
| Test 0 | 119 | 78 |
| Test 1 | 127 | 107 |
| Test 2 | 0 | 109 |
| Test 3 | 28 | 401 |
| Test 4 | 1 | 47 |
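
Non-collapsed buildings outnumber collapsed ones in the training area and in four of the five test areas, so overall accuracy alone can look respectable even for a classifier that rarely detects collapse. The sketch below pools the five test areas (pooling is an assumption; how test results were aggregated is not stated here) and computes the accuracy of a trivial classifier that always predicts the majority class.

```python
# Collapsed / non-collapsed counts per test area, copied from the table above.
TEST_SPLITS = {
    "Test 0": (119, 78),
    "Test 1": (127, 107),
    "Test 2": (0, 109),
    "Test 3": (28, 401),
    "Test 4": (1, 47),
}


def pooled_counts(splits):
    """Total (collapsed, non_collapsed) over all test areas."""
    collapsed = sum(c for c, _ in splits.values())
    non_collapsed = sum(n for _, n in splits.values())
    return collapsed, non_collapsed


def majority_baseline_accuracy(collapsed, non_collapsed):
    """Accuracy of always predicting the larger class."""
    return max(collapsed, non_collapsed) / (collapsed + non_collapsed)
```

With the counts above, the pooled test set holds 275 collapsed and 742 non-collapsed buildings, so always answering "non-collapsed" scores about 72.96% overall accuracy. This is why recall and F1 score for the collapsed class are the more informative measures in the comparison that follows.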

| Model | Overall Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| MLP (using dataset A) | 73.55 | 87.5 | 2.54 | 4.94 |
| MLP (using dataset B) | 83.68 | 80.11 | 52.72 | 63.59 |
| RF (using dataset A) | 73.5 | 99 | 2 | 4 |
| RF (after removing the three low-ranked features) | 73.84 | 99 | 32.72 | 6.38 |
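
Precision, recall, and F1 score in the table follow the standard definitions for the positive (collapsed) class [72]: precision $= TP/(TP+FP)$, recall $= TP/(TP+FN)$, and F1 is their harmonic mean $2PR/(P+R)$. The helper functions below are a small sketch of those formulas; their names are our own.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 for the positive class from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def f1_from(precision, recall):
    """Harmonic mean of precision and recall (inputs in the same units, e.g. %)."""
    return 2 * precision * recall / (precision + recall)
```

For example, `round(f1_from(80.11, 52.72), 2)` gives 63.59 and `round(f1_from(87.5, 2.54), 2)` gives 4.94, matching the two MLP rows. Because the harmonic mean is dominated by the smaller value, a very high precision cannot compensate for a recall of a few percent, which is why the F1 scores of the dataset-A models stay low despite their precision.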

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Matin, S.S.; Pradhan, B. Earthquake-Induced Building-Damage Mapping Using Explainable AI (XAI). Sensors 2021, 21, 4489. https://doi.org/10.3390/s21134489

Matin SS, Pradhan B. Earthquake-Induced Building-Damage Mapping Using Explainable AI (XAI). Sensors. 2021; 21(13):4489. https://doi.org/10.3390/s21134489

Chicago/Turabian Style: Matin, Sahar S., and Biswajeet Pradhan. 2021. "Earthquake-Induced Building-Damage Mapping Using Explainable AI (XAI)" Sensors 21, no. 13: 4489. https://doi.org/10.3390/s21134489