Detection of Iris Presentation Attacks Using Feature Fusion of Thepade’s Sorted Block Truncation Coding with Gray-Level Co-Occurrence Matrix Features

Abstract

1. Introduction

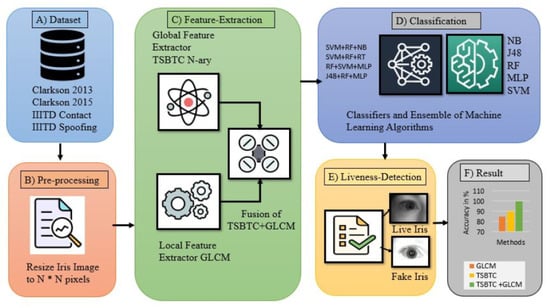

- Use of Thepade’s sorted block truncation coding (TSBTC) and gray-level co-occurrence matrix (GLCM) features of iris images, for the first time in iris liveness detection (ILD).

- Feature-level fusion of the best-performing TSBTC N-ary global features with GLCM local features of an iris image, for the first time in ILD.

- Performance analysis of ML classifiers and classifier ensembles to select the best classifier for ILD.

- Validation of the proposed ILD method on several benchmark datasets and comparison with existing techniques.

2. Related Work

3. Proposed Iris Liveness Detection Using a Feature-Level Fusion of TSBTC and GLCM

3.1. Resizing
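Resizing brings every iris image to a common size before feature extraction. The interpolation method and target size are not given in this section, so the sketch below is only an illustration under assumptions: nearest-neighbour sampling, a caller-chosen output size, and a grayscale image stored as a list of rows of 8-bit intensities. `resize_nearest` is a hypothetical helper name, not the authors' code.

```python
from typing import List

Image = List[List[int]]  # 2-D grayscale image, rows of 8-bit intensities (0..255)


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Resize a grayscale image to out_h x out_w with nearest-neighbour sampling (assumed method)."""
    in_h, in_w = len(img), len(img[0])
    out: Image = []
    for r in range(out_h):
        src_r = min(in_h - 1, r * in_h // out_h)  # source row for this output row
        row = []
        for c in range(out_w):
            src_c = min(in_w - 1, c * in_w // out_w)  # source column for this output column
            row.append(img[src_r][src_c])
        out.append(row)
    return out
```

For example, enlarging `[[1, 2], [3, 4]]` to 4 x 4 repeats each pixel in a 2 x 2 block.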

3.2. Feature Formation and Fusion

3.2.1. GLCM
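A GLCM counts how often pairs of quantised gray levels occur at a fixed pixel offset; scalar texture properties are then computed from the normalised matrix. The sketch below is illustrative only: the 8 gray levels, the one-pixel horizontal offset, and the four properties (contrast, correlation, energy, homogeneity) are assumptions rather than the authors' stated settings, and `glcm` and `glcm_features` are hypothetical names.

```python
import math
from typing import Dict, List

Image = List[List[int]]  # 2-D grayscale image, 8-bit intensities (0..255)


def glcm(img: Image, levels: int = 8, dr: int = 0, dc: int = 1) -> List[List[float]]:
    """Normalised co-occurrence matrix for pixel offset (dr, dc); default is the right-hand neighbour."""
    q = [[p * levels // 256 for p in row] for row in img]  # quantise 0..255 into `levels` bins
    counts = [[0] * levels for _ in range(levels)]
    h, w = len(q), len(q[0])
    for r in range(h):
        for c in range(w):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < h and 0 <= c2 < w:  # skip pairs that fall outside the image
                counts[q[r][c]][q[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    if total == 0:
        raise ValueError("offset leaves no pixel pairs inside the image")
    return [[v / total for v in row] for row in counts]


def glcm_features(p: List[List[float]]) -> Dict[str, float]:
    """Contrast, correlation, energy and homogeneity of a normalised GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)  # mean row gray level
    mu_j = sum(j * v for _, j, v in cells)  # mean column gray level
    sd_i = math.sqrt(sum((i - mu_i) ** 2 * v for i, _, v in cells))
    sd_j = math.sqrt(sum((j - mu_j) ** 2 * v for _, j, v in cells))
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        # Correlation is undefined (NaN) when either marginal has zero variance, e.g. a flat image.
        "correlation": cov / (sd_i * sd_j) if sd_i > 0 and sd_j > 0 else math.nan,
        "energy": sum(v * v for _, _, v in cells),  # angular second moment
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
    }
```

For the one-row image `[[0, 0, 255, 255]]` quantised to two levels, the pairs (0,0), (0,1) and (1,1) are equally likely, which gives a contrast of 1/3 and a correlation of 0.5.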

3.2.2. TSBTC
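In the N-ary form of TSBTC as it is usually described in Thepade's related work, the gray values of an image plane are sorted in ascending order, the sorted sequence is split into N equal parts, and the mean of each part becomes one feature, so a single gray plane yields N global features (2-ary to 11-ary in the results tables). The sketch below assumes one gray plane and distributes any remainder pixels by integer division; `tsbtc_features` is a hypothetical name, and a colour image would need the same call per plane.

```python
from typing import List, Sequence


def tsbtc_features(pixels: Sequence[int], n: int) -> List[float]:
    """N-ary TSBTC sketch for one gray plane: sort, split into n near-equal parts, average each part."""
    if not 2 <= n <= len(pixels):
        raise ValueError("n must be at least 2 and at most the number of pixels")
    data = sorted(pixels)  # gray values in ascending order
    size = len(data)
    features = []
    for k in range(n):
        start, stop = k * size // n, (k + 1) * size // n  # bounds of the k-th part
        part = data[start:stop]
        features.append(sum(part) / len(part))  # mean intensity of this part
    return features
```

For example, `tsbtc_features([8, 1, 6, 3, 5, 2], 3)` sorts to `[1, 2, 3, 5, 6, 8]` and returns `[1.5, 4.0, 7.0]`. Flattening an image row by row and calling the function for n = 2 to 11 gives feature sets analogous to the N-ary variants compared in the results.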

3.2.3. Fusion of TSBTC and GLCM
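Feature-level fusion can be sketched as serial concatenation of the selected TSBTC N-ary vector with the GLCM properties, giving N + 4 values per image under the four-property assumption. Any normalisation or weighting the authors may apply is not shown; the property order in the hypothetical `GLCM_KEYS` tuple is also an assumption, and fixing it keeps every fused vector column-aligned for the classifier.

```python
from typing import Dict, List, Sequence

# Assumed property order; any fixed order works as long as it is identical for every image.
GLCM_KEYS = ("contrast", "correlation", "energy", "homogeneity")


def fuse_features(tsbtc: Sequence[float], glcm: Dict[str, float]) -> List[float]:
    """Feature-level fusion by concatenation: N TSBTC means followed by the GLCM properties."""
    missing = [key for key in GLCM_KEYS if key not in glcm]
    if missing:
        raise KeyError(f"missing GLCM properties: {missing}")
    return [float(v) for v in tsbtc] + [float(glcm[key]) for key in GLCM_KEYS]
```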

3.3. Classification and Iris Liveness Detection
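The results tables compare single classifiers (NB, J48, SVM, RF, MLP) with three-member ensembles such as SVM + RF + NB. The combination rule for the ensembles is not stated in this section; the sketch below assumes simple majority (hard) voting over the members' predicted labels. The function name `majority_vote` and the labels `"live"`/`"spoof"` are illustrative.

```python
from collections import Counter
from typing import List, Sequence


def majority_vote(per_classifier: Sequence[Sequence[str]]) -> List[str]:
    """Hard-voting ensemble: per_classifier[k][i] is classifier k's label for sample i."""
    n_samples = len(per_classifier[0])
    if any(len(preds) != n_samples for preds in per_classifier):
        raise ValueError("every classifier must label the same samples")
    fused = []
    for i in range(n_samples):
        votes = Counter(preds[i] for preds in per_classifier)  # tally labels for sample i
        # most_common(1) picks the highest count; ties go to the label seen first in classifier order.
        fused.append(votes.most_common(1)[0][0])
    return fused
```

With three members and two classes a tie cannot occur, so the tie-breaking rule only matters for even-sized ensembles.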

4. Experimental Set-Up

4.1. Description of the Dataset

- Clarkson LivDet2013: The Clarkson LivDet2013 dataset contains around 1536 iris images [33], split into training and testing sets and acquired with a Dalsa sensor. Only the training-set images are used in this experiment. Table 3 lists the datasets, the sensors used to acquire the images, and the number of images used in this experiment. Figure 5 shows sample images from the dataset.

- Clarkson LivDet2015: Images in this dataset are captured with Dalsa and LG sensors [34] and fall into three categories: live, pattern, and printed. Live images come from 25 subjects, while the pattern and printed images come from 15 subjects each. The dataset is partitioned into training and testing sets.

- IIITD Combined Spoofing Database: Images in this dataset are captured with two iris sensors, Cogent and Vista [35], and fall into three categories: normal, print-scan attack, and print-capture attack.

- IIITD Contact Lens: Images in this dataset are captured with two iris sensors, the Cogent dual-iris sensor and the Vista FA2E single-iris sensor [36,37]. The images are divided into three categories: normal, transparent, and colored. The dataset covers 101 subjects; both the left and right iris of each subject are captured, giving 202 iris classes.

4.2. Performance Measures
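The results report accuracy, precision, recall, and F-measure together with APCER, NPCER, and ACER. The sketch below computes all of them from two-class confusion counts, treating attack images as the positive class; that choice is an assumption, since this section does not say which class is positive. APCER is the share of attack images accepted as live, NPCER (also called BPCER) is the share of live images rejected as attacks, and ACER is their mean, which agrees with the results table: for Clarkson 2013, (7.90 + 4.12)/2 = 6.01. `pad_metrics` is a hypothetical name.

```python
import math
from typing import Dict


def _pct(num: int, den: int) -> float:
    """num / den as a percentage; NaN when the denominator is zero (metric undefined)."""
    return 100.0 * num / den if den else math.nan


def pad_metrics(attack_as_attack: int, attack_as_live: int,
                live_as_live: int, live_as_attack: int) -> Dict[str, float]:
    """Two-class liveness metrics in percent, with attack images as the (assumed) positive class."""
    tp, fn = attack_as_attack, attack_as_live  # attack images: detected / missed
    tn, fp = live_as_live, live_as_attack      # live images: accepted / wrongly rejected
    apcer = _pct(fn, tp + fn)  # attack presentations classified as live
    npcer = _pct(fp, tn + fp)  # live (bona fide) presentations classified as attacks, a.k.a. BPCER
    return {
        "accuracy": _pct(tp + tn, tp + tn + fp + fn),
        "precision": _pct(tp, tp + fp),
        "recall": _pct(tp, tp + fn),
        "f_measure": _pct(2 * tp, 2 * tp + fp + fn),  # harmonic mean of precision and recall
        "apcer": apcer,
        "npcer": npcer,
        "acer": (apcer + npcer) / 2,  # average classification error rate
    }
```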

5. Results

5.1. TSBTC Results

5.2. GLCM Results

5.3. Fusion of TSBTC and GLCM Results

6. Discussions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability

Acknowledgments

Conflicts of Interest

References

- Su, L.; Shimahara, T. Advanced iris recognition using fusion techniques. NEC Tech. J. 2019, 13, 74–77.

- Lee, H.; Park, S.H.; Yoo, J.H.; Jung, S.H.; Huh, J.H. Face recognition at a distance for a stand-alone access control system. Sensors 2020, 20, 785.

- Khade, S.; Ahirrao, S.; Thepade, S. Bibliometric Survey on Biometric Iris Liveness Detection. Available online: https://www.proquest.com/openview/e3b5291b23d16de13ce0f0bd5dcb004b/1?pq-origsite=gscholar&cbl=54903 (accessed on 31 October 2021).

- Kaur, J.; Jindal, N. A secure image encryption algorithm based on fractional transforms and scrambling in combination with multimodal biometric keys. Multimed. Tools Appl. 2019, 78, 11585–11606.

- Choudhary, M.; Tiwari, V.; Venkanna, U. An approach for iris contact lens detection and classification using ensemble of customized DenseNet and SVM. Futur. Gener. Comput. Syst. 2019, 101, 1259–1270.

- Chen, Y.; Zhang, W. Iris Liveness Detection: A Survey. In Proceedings of the 2018 IEEE Fourth International Conference on Multimedia Big Data (BigMM), Xi’an, China, 13–16 September 2018.

- Trokielewicz, M.; Czajka, A.; Maciejewicz, P. Human iris recognition in post-mortem subjects: Study and database. In Proceedings of the 2016 IEEE 8th International Conference on Biometrics Theory, Applications and Systems (BTAS), Niagara Falls, NY, USA, 6–9 September 2016.

- Fathy, W.S.A.; Ali, H.S. Entropy with Local Binary Patterns for Efficient Iris Liveness Detection. Wirel. Pers. Commun. 2018, 102, 2331–2344.

- Khade, S.; Thepade, S.D. Novel Fingerprint Liveness Detection with Fractional Energy of Cosine Transformed Fingerprint Images and Machine Learning Classifiers. In Proceedings of the 2018 IEEE Punecon, Pune, India, 30 November–2 December 2018.

- Kaur, B.; Singh, S.; Kumar, J. Cross-sensor iris spoofing detection using orthogonal features. Comput. Electr. Eng. 2019, 73, 279–288.

- Agarwal, R.; Jalal, A.S.; Arya, K.V. A multimodal liveness detection using statistical texture features and spatial analysis. Multimed. Tools Appl. 2020, 79, 13621–13645.

- Jusman, Y.; Cheok, N.S.; Hasikin, K. Performances of proposed normalization algorithm for iris recognition. Int. J. Adv. Intell. Inform. 2020, 6, 161–172.

- Khuzani, A.Z.; Mashhadi, N.; Heidari, M.; Khaledyan, D. An approach to human iris recognition using quantitative analysis of image features and machine learning. arXiv 2020, arXiv:2009.05880.

- Agarwal, R.; Jalal, A.S.; Arya, K.V. Local binary hexagonal extrema pattern (LBHXEP): A new feature descriptor for fake iris detection. Vis. Comput. 2020, 37, 1357–1368.

- Thavalengal, S.; Nedelcu, T.; Bigioi, P.; Corcoran, P. Iris liveness detection for next generation smartphones. IEEE Trans. Consum. Electron. 2016, 62, 95–102.

- Dewan, J.H.; Thepade, S.D. Feature fusion approach for image retrieval with ordered color means based description of keypoints extracted using local detectors. J. Eng. Sci. Technol. 2021, 16, 482–509.

- Thepade, S.D.; Chaudhari, P.R. Land Usage Identification with Fusion of Thepade SBTC and Sauvola Thresholding Features of Aerial Images Using Ensemble of Machine Learning Algorithms. Appl. Artif. Intell. 2021, 35, 154–170.

- Thepade, S.D.; Sange, S.; Das, R.; Luniya, S. Enhanced Image Classification with Feature Level Fusion of Niblack Thresholding and Thepade’s Sorted N-Ary Block Truncation Coding using Ensemble of Machine Learning Algorithms. In Proceedings of the 2018 IEEE Punecon, Pune, India, 30 November–2 December 2018.

- Hu, Y.; Sirlantzis, K.; Howells, G. Iris liveness detection using regional features. Pattern Recognit. Lett. 2016, 82, 242–250.

- Czajka, A. Pupil dynamics for iris liveness detection. IEEE Trans. Inf. Forensics Secur. 2015, 10, 726–735.

- Naqvi, R.A.; Lee, S.W.; Loh, W.K. Ocular-net: Lite-residual encoder decoder network for accurate ocular regions segmentation in various sensor images. In Proceedings of the 2020 IEEE International Conference on Big Data and Smart Computing (BigComp), Busan, Korea, 19–22 February 2020.

- Kimura, G.Y.; Lucio, D.R.; Britto, A.S.; Menotti, D. CNN hyperparameter tuning applied to iris liveness detection. arXiv 2020, arXiv:2003.00833.

- Lin, H.Y.S.; Su, Y.W. Convolutional neural networks for face anti-spoofing and liveness detection. In Proceedings of the 2019 6th International Conference on Systems and Informatics (ICSAI), Shanghai, China, 2–4 November 2019.

- Long, M.; Zeng, Y. Detecting iris liveness with batch normalized convolutional neural network. Comput. Mater. Contin. 2019, 58, 493–504.

- Dronky, M.R.; Khalifa, W.; Roushdy, M. A Review on Iris Liveness Detection Techniques. In Proceedings of the 2019 Ninth International Conference on Intelligent Computing and Information Systems (ICICIS), Cairo, Egypt, 8–10 December 2019.

- Kushwaha, R.; Singal, G.; Nain, N. A Texture Feature Based Approach for Person Verification Using Footprint Bio-Metric; Springer: Heidelberg, Germany, 2020; pp. 1581–1611. ISBN 0123456789.

- Raiu, V.; Vidyasree, P.; Patel, A. Ameliorating the Accuracy Dimensional Reduction of Multi-modal Biometrics by Deep Learning. In Proceedings of the 2021 IEEE Aerospace Conference (50100), Big Sky, MT, USA, 6–13 March 2021.

- Rasool, R.A. Feature-Level vs. Score-Level Fusion in the Human Identification System. Appl. Comput. Intell. Soft Comput. 2021, 2021, 6621772.

- Khairnar, S.; Thepade, S.D.; Gite, S. Effect of image binarization thresholds on breast cancer identification in mammography images using OTSU, Niblack, Burnsen, Thepade’s SBTC. Intell. Syst. Appl. 2021, 10–11, 200046.

- Dewan, J.H.; Thepade, S.D. Image Retrieval using Weighted Fusion of GLCM and TSBTC Features. In Proceedings of the 2021 6th International Conference for Convergence in Technology (I2CT), Maharashtra, India, 2–4 April 2021.

- Khade, S.; Thepade, S.D.; Ambedkar, A. Fingerprint Liveness Detection Using Directional Ridge Frequency with Machine Learning Classifiers. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018.

- Khade, S.; Thepade, S.D. Fingerprint liveness detection with machine learning classifiers using feature level fusion of spatial and transform domain features. In Proceedings of the 2019 5th International Conference On Computing, Communication, Control And Automation (ICCUBEA), Pune, India, 19–21 September 2019.

- Yambay, D.; Doyle, J.S.; Bowyer, K.W.; Czajka, A.; Schuckers, S. LivDet-iris 2013-Iris Liveness Detection Competition 2013. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017.

- Yambay, D.; Walczak, B.; Schuckers, S.; Czajka, A. LivDet-Iris 2015-Iris Liveness Detection Competition 2015. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017.

- Kohli, N.; Yadav, D.; Vatsa, M.; Singh, R.; Noore, A. Detecting medley of iris spoofing attacks using DESIST. In Proceedings of the 2016 IEEE 8th International Conference on Biometrics Theory, Applications and Systems (BTAS), Niagara Falls, NY, USA, 6–9 September 2016.

- Yadav, D.; Kohli, N.; Doyle, J.S.; Singh, R.; Vatsa, M.; Bowyer, K.W. Unraveling the Effect of Textured Contact Lenses on Iris Recognition. IEEE Trans. Inf. Forensics Secur. 2014, 9, 851–862.

- Kohli, N.; Yadav, D.; Vatsa, M.; Singh, R. Revisiting iris recognition with color cosmetic contact lenses. In Proceedings of the 2013 International Conference on Biometrics (ICB), Madrid, Spain, 4–7 June 2013.

- Boyd, A.; Fang, Z.; Czajka, A.; Bowyer, K.W. Iris presentation attack detection: Where are we now? Pattern Recognit. Lett. 2020, 138, 483–489.

- Das, P.; McGrath, J.; Fang, Z.; Boyd, A.; Jang, G.; Mohammadi, A.; Purnapatra, S.; Yambay, D.; Marcel, S.; Trokielewicz, M.; et al. Iris Liveness Detection Competition (LivDet-Iris)-The 2020 Edition. In Proceedings of the 2020 IEEE International Joint Conference on Biometrics (IJCB), Houston, TX, USA, 6 January 2021.

- Arora, S.; Bhatia, M.P.S.; Kukreja, H. A Multimodal Biometric System for Secure User Identification Based on Deep Learning. Adv. Intell. Syst. Comput. 2021, 1183, 95–103.

- Omran, M.; Alshemmary, E.N. An Iris Recognition System Using Deep convolutional Neural Network. J. Phys. Conf. Ser. 2020, 1530, 012159.

- Zhao, T.; Liu, Y.; Huo, G.; Zhu, X. A Deep Learning Iris Recognition Method Based on Capsule Network Architecture. IEEE Access 2019, 7, 49691–49701.

- Wang, K.; Kumar, A. Cross-spectral iris recognition using CNN and supervised discrete hashing. Pattern Recognit. 2019, 86, 85–98.

- Cheng, Y.; Liu, Y.; Zhu, X.; Li, S. A Multiclassification Method for Iris Data Based on the Hadamard Error Correction Output Code and a Convolutional Network. IEEE Access 2019, 7, 145235–145245.

- Chatterjee, P.; Yalchin, A.; Shelton, J.; Roy, K.; Yuan, X.; Edoh, K.D. Presentation Attack Detection Using Wavelet Transform and Deep Residual Neural Net; Springer: Heidelberg, Germany, 2019.

| Iris Presentation Attacks | Details |
|---|---|
| Print attacks | The impostor presents a printed image of a genuine user's iris to the biometric sensor [4]. |
| Contact lens attacks | The impostor wears contact lenses on which the pattern of a genuine iris is printed [5]. |
| Video attacks | The impostor plays a video of a registered user in front of the biometric system [6]. |
| Cadaver attacks | The impostor presents the eye of a deceased person to the biometric system [7]. |
| Synthetic attacks | The impostor embeds the iris region into authentic images to make the synthesized images more realistic [8]. |

| Paper ID | Author/Year | Feature Extraction | Attacks Identified | Datasets | Classifiers | Performances |
|---|---|---|---|---|---|---|
| [17] | Thepade and Chaudhari, 2021 | TSBTC and Sauvola thresholding | NA | NR | SVM, Kstar, J48, RF, RT and ensembles | Accuracy, F-measure |
| [16] | Dewan and Thepade, 2021 | TSBTC | NA | NA | NA | ARA = 63.31% |
| [12] | Jusman et al., 2020 | Hough transform, GLCM | NR | CASIA-Iris | Discriminant analysis classifiers | Accuracy = 100% |
| [11] | Agarwal et al., 2020 | Texture feature, GLCM | | ATVS (iris), LivDet2011 (finger), IIITD CLI (iris) | SVM | ACA = 96.3% |
| [14] | Agarwal et al., 2020 | Local binary hexagonal extrema pattern | Contact, print | IIITD CLI, ATVS-FIr | SVM | AER = 1.8% |
| [13] | Khuzani et al., 2020 | Shape, density, FFT, GLCM, GLDM, and wavelet | NR | CASIA-Iris-Interval | Multilayer neural network | Accuracy = 99.64% |
| [26] | Kushwaha et al., 2020 | GLCM, HOG, LBP | NA | Biometric 220X6 human footprint dataset | KNN, SVM, LDA, ensembles | Accuracy = 97.9% |
| [22] | Kimura et al., 2020 | CNN | Print, contact | Clarkson, Warsaw, IIITD-WVU, Notre Dame | | APCER = 4.18%, BPCER = 0% |
| [21] | Naqvi et al., 2020 | CNN model with a lite-residual encoder–decoder network | NA | NICE-II dataset, SBVPI | CNN | Average segmentation error = 0.0061 |
| [24] | Long and Zeng, 2019 | BNCNN | Synthetic, contact | CASIA-Iris-Lamp, CASIA-Iris-Syn, ND contact | BNCNN | Correct recognition rate = 100% |
| [21] | Asmara et al., 2019 | GLCM, Gabor filter | | CASIA v1 Iris | Naive Bayes, SVM | Accuracy = 95.24% |
| [10] | Kaur et al., 2019 | Orthogonal rotation-invariant feature set comprising ZMs and PHTs | Print + scan, print + capture, patterned contact lenses | IIITD-CLI, IIS, Clarkson LivDet-Iris 2015, Warsaw LivDet-Iris 2015 | KNN | Accuracy = 98.49% (accuracy differs across datasets) |
| [8] | Fathy and Ali, 2018 | Wavelet packets (WPs), local binary pattern (LBP), entropy | Print + synthetic | ATVS-FIr, CASIA-Iris-Syn | SVM | ACA = 99.92%; recall, precision, F1 |
| [18] | Thepade et al., 2018 | TSBTC, Niblack | NR | NR | SVM, RF, ensembles, Bayes net | Accuracy = 68.56% |
| [15] | Thavalengal et al., 2016 | Pupil localization techniques with distance metrics | | Real-time datasets | Binary tree classifier | ACER = 0% |
| [19] | Hu et al., 2016 | LBP, histogram, SID | Contact lenses, print | Clarkson, Warsaw, Notre Dame, MobBIOfake | SVM | ER: Clarkson = 7.87%, Warsaw = 6.15%, ND = 0.08%, MobBIOfake = 1.50% |

| Database | Sensor | Image Category | No. of Images Used for the Experiment |
|---|---|---|---|
| Clarkson 2013 | Dalsa | Off (live) | 350 |
| | | Pattern (contact) | 440 |
| Clarkson 2015 | Dalsa | Live | 378 |
| | | Pattern | 356 |
| | | Printed | 1416 |
| | LG | Live | 258 |
| | | Pattern | 433 |
| | | Printed | 844 |
| IIITD Combined Spoofing | Cogent | Normal | 2024 |
| | | Print-capture | 1113 |
| | | Print-scan | 980 |
| | Vista | Normal | 2024 |
| | | Print-capture | 1092 |
| | | Print-scan | 1196 |
| IIITD Contact | Cogent | Normal | 422 |
| | | Transparent | 1131 |
| | | Textured | 1150 |
| | Vista | Normal | 1010 |
| | | Transparent | 1010 |
| | | Textured | 1010 |

| Classifiers/Ensembles of Classifiers (accuracy, %) | 2-ary | 3-ary | 4-ary | 5-ary | 6-ary | 7-ary | 8-ary | 9-ary | 10-ary | 11-ary | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NB | 83.52 | 83.67 | 83.52 | 83.96 | 84.11 | 84.11 | 84.11 | 84.25 | 84.4 | 84.4 | 83.96 |
| J48 | 86.15 | 87.6 | 86.58 | 88.04 | 89.5 | 86.88 | 88.92 | 90.08 | 91.69 | 88.77 | 88.38 |
| SVM | 86.58 | 86.73 | 86.73 | 86.73 | 86.73 | 86.58 | 86.44 | 86.44 | 86.58 | 86.44 | 86.62 |
| RF | 89.06 | 93.29 | 93.14 | 93.87 | 94.16 | 93.29 | 93.14 | 93.29 | 93.87 | 93 | 93.01 |
| MLP | 86.44 | 86.58 | 86.44 | 86.29 | 86.58 | 87.9 | 87.9 | 87.9 | 87.6 | 88.33 | 87.07 |
| SVM + RF + NB | 86.44 | 86.88 | 87.17 | 87.17 | 87.17 | 87.46 | 86.73 | 86.88 | 87.17 | 86.88 | 87.01 |
| SVM + RF + RT | 88.77 | 93 | 92.12 | 92.41 | 92.56 | 92.12 | 91.54 | 92.12 | 92.71 | 92.56 | 91.93 |
| RF + SVM + MLP | 86.58 | 86.73 | 86.88 | 86.88 | 86.73 | 87.17 | 87.17 | 87.17 | 87.31 | 87.46 | 86.96 |
| J48 + RF + MLP | 87.17 | 89.5 | 88.62 | 89.94 | 90.08 | 90.08 | 90.37 | 91.39 | 92.27 | 91.25 | 89.94 |
| AVG | 86.746 | 88.22 | 87.91 | 88.37 | 88.62 | 88.4 | 88.48 | 88.84 | 89.29 | 88.79 | —— |

| Classifiers/Ensembles of Classifiers (accuracy, %) | 2-ary | 3-ary | 4-ary | 5-ary | 6-ary | 7-ary | 8-ary | 9-ary | 10-ary | 11-ary | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NB | 64.85 | 64.71 | 64.44 | 64.57 | 64.16 | 64.16 | 64.03 | 63.89 | 63.89 | 63.89 | 64.26 |
| J48 | 75.61 | 80.79 | 82.15 | 85.96 | 87.87 | 89.1 | 90.05 | 90.32 | 91.41 | 91.28 | 86.45 |
| SVM | 57.08 | 58.17 | 59.4 | 61.17 | 60.49 | 60.62 | 60.89 | 61.3 | 61.44 | 61.98 | 60.25 |
| Random Forest | 83.78 | 89.64 | 91.96 | 94.27 | 94.68 | 95.5 | 94.95 | 95.5 | 95.64 | 95.23 | 93.12 |
| MLP | 77.11 | 78.61 | 78.47 | 74.25 | 80.24 | 76.02 | 88.82 | 89.1 | 89.23 | 91.14 | 82.3 |
| SVM + RF + NB | 66.07 | 68.39 | 70.7 | 73.56 | 73.29 | 74.65 | 73.97 | 75.61 | 76.15 | 76.15 | 72.85 |
| SVM + RF + RT | 83.78 | 88.14 | 90.73 | 92.23 | 92.77 | 92.5 | 93.46 | 93.73 | 91.96 | 93.86 | 91.32 |
| RF + SVM + MLP | 78.74 | 79.15 | 81.6 | 76.7 | 73.43 | 74.38 | 70.02 | 75.34 | 68.8 | 71.66 | 74.98 |
| J48 + RF + MLP | 82.28 | 85.83 | 87.32 | 88.96 | 90.05 | 92.09 | 91 | 92.91 | 94 | 94 | 89.84 |
| AVG | 74.367 | 77.048 | 78.53 | 79.07 | 79.66 | 79.89 | 80.8 | 81.97 | 81.39 | 82.13 | —— |

| Classifiers/Ensembles of Classifiers (accuracy, %) | 2-ary | 3-ary | 4-ary | 5-ary | 6-ary | 7-ary | 8-ary | 9-ary | 10-ary | 11-ary | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NB | 46.41 | 45.82 | 44.32 | 43.88 | 43.73 | 44.02 | 44.47 | 63.91 | 63.82 | 64 | 50.438 |
| J48 | 64.47 | 62.08 | 64.47 | 61.19 | 64.77 | 62.98 | 63.88 | 66.37 | 65.4 | 65.58 | 64.119 |
| SVM | 61.04 | 61.04 | 61.04 | 61.04 | 61.04 | 61.04 | 61.04 | 64.09 | 64.09 | 64.09 | 61.955 |
| Random Forest | 63.88 | 67.61 | 71.64 | 70.44 | 75.52 | 74.92 | 75.97 | 75.41 | 76.29 | 76.73 | 72.841 |
| MLP | 57.61 | 62.53 | 59.7 | 59.7 | 61.49 | 61.79 | 62.38 | 65.58 | 65.23 | 66.11 | 62.212 |
| SVM + RF + NB | 60.89 | 60.74 | 61.49 | 61.94 | 62.53 | 63.88 | 65.22 | 65.67 | 65.58 | 65.75 | 63.369 |
| SVM + RF + RT | 61.64 | 66.71 | 71.04 | 68.35 | 71.94 | 71.04 | 74.62 | 73.22 | 73.57 | 75.68 | 70.781 |
| RF + SVM + MLP | 61.04 | 62.08 | 61.79 | 61.64 | 62.08 | 61.64 | 61.94 | 66.28 | 66.19 | 66.37 | 63.087 |
| J48 + RF + MLP | 61.49 | 65.82 | 68.35 | 66.86 | 68.05 | 68.5 | 68.95 | 68.48 | 68.48 | 69.71 | 67.369 |
| AVG | 59.83 | 61.603 | 62.648 | 61.671 | 63.461 | 63.312 | 64.274 | 67.667 | 67.627 | 68.22 | —— |

| Classifiers/Ensembles of Classifiers (accuracy, %) | 2-ary | 3-ary | 4-ary | 5-ary | 6-ary | 7-ary | 8-ary | 9-ary | 10-ary | 11-ary | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NB | 90.09 | 94.99 | 95.1 | 95.2 | 94.99 | 94.99 | 94.88 | 95.2 | 95.2 | 92.49 | 94.31 |
| J48 | 98.08 | 98.29 | 98.61 | 98.61 | 98.08 | 99.25 | 99.04 | 98.93 | 99.14 | 98.8 | 98.68 |
| SVM | 96.48 | 96.59 | 96.8 | 97.01 | 97.01 | 97.12 | 97.12 | 97.44 | 97.55 | 97.74 | 97.08 |
| Random Forest | 97.97 | 98.61 | 98.82 | 99.25 | 99.14 | 99.46 | 99.36 | 99.36 | 99.25 | 99.18 | 99.04 |
| MLP | 98.93 | 99.14 | 99.25 | 99.14 | 99.14 | 99.14 | 99.04 | 99.04 | 99.04 | 98.78 | 99.06 |
| SVM + RF + NB | 96.27 | 96.59 | 96.91 | 97.01 | 97.01 | 97.23 | 97.23 | 97.55 | 97.65 | 98.19 | 97.16 |
| SVM + RF + RT | 97.87 | 98.4 | 98.5 | 99.04 | 99.04 | 99.25 | 99.14 | 99.25 | 99.04 | 99.17 | 98.87 |
| RF + SVM + MLP | 98.61 | 98.72 | 98.72 | 98.93 | 98.93 | 98.93 | 98.93 | 98.93 | 99.04 | 98.19 | 98.79 |
| J48 + RF + MLP | 98.4 | 98.72 | 98.82 | 99.04 | 99.04 | 99.57 | 99.36 | 99.25 | 99.36 | 99.15 | 99.07 |
| AVG | 96.96 | 97.78 | 97.94 | 98.13 | 98.04 | 98.32 | 98.23 | 98.32 | 98.36 | 97.96 | —— |

| Classifiers/Ensembles of Classifiers (accuracy, %) | Clarkson 2013: TSBTC + GLCM | Clarkson 2013: GLCM | Clarkson 2013: TSBTC | Clarkson 2015: TSBTC + GLCM | Clarkson 2015: GLCM | Clarkson 2015: TSBTC | IIITD Contact: TSBTC + GLCM | IIITD Contact: GLCM | IIITD Contact: TSBTC | IIITD Combined Spoofing: TSBTC + GLCM | IIITD Combined Spoofing: GLCM | IIITD Combined Spoofing: TSBTC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NB | 83.74 | 81.77 | 84.40 | 65.33 | 67.84 | 63.89 | 58.91 | 59.51 | 63.91 | 97.55 | 92.86 | 93.85 |
| J48 | 91.54 | 83.23 | 90.23 | 91.34 | 73.97 | 91.35 | 69.31 | 72.94 | 65.49 | 99.04 | 96.43 | 98.97 |
| SVM | 86.73 | 82.21 | 86.51 | 61.99 | 59.94 | 61.71 | 64.09 | 73.20 | 64.09 | 98.99 | 95.51 | 97.65 |
| Random Forest | 93.78 | 82.79 | 93.44 | 95.57 | 82.01 | 95.44 | 78.88 | 75.36 | 76.51 | 99.57 | 96.73 | 99.22 |
| MLP | 88.26 | 83.81 | 87.97 | 90.30 | 77.11 | 90.19 | 64.13 | 73.01 | 65.67 | 99.57 | 98.06 | 98.91 |
| SVM + RF + NB | 86.88 | 83.38 | 87.03 | 79.43 | 64.98 | 76.15 | 69.18 | 73.64 | 65.67 | 99.20 | 96.63 | 97.92 |
| SVM + RF + RT | 91.84 | 82.50 | 92.64 | 94.34 | 81.33 | 92.91 | 76.42 | 74.79 | 74.63 | 99.57 | 97.04 | 99.11 |
| RF + SVM + MLP | 87.61 | 83.81 | 87.39 | 82.49 | 75.47 | 70.23 | 67.51 | 73.32 | 66.28 | 99.62 | 97.55 | 98.62 |
| J48 + RF + MLP | 92.63 | 83.81 | 91.76 | 94.00 | 79.70 | 94.00 | 76.07 | 74.72 | 69.10 | 99.68 | 97.34 | 99.26 |
| AVG | 89.22 | 83.03 | 89.04 | 83.86 | 73.59 | 81.76 | 69.39 | 72.28 | 67.93 | 99.20 | 96.46 | 98.16 |

| Datasets | Classifiers | Accuracy in % | Precision in % | Recall in % | F-Measure in % | APCER in % | NPCER in % | ACER in % |
|---|---|---|---|---|---|---|---|---|
| Clarkson 2013 | Random Forest | 93.78 | 95.50 | 86.20 | 90.60 | 7.90 | 4.12 | 6.01 |
| Clarkson 2015 | Random Forest | 95.57 | 96.50 | 95.50 | 96.00 | 4.72 | 3.47 | 4.09 |
| IIITD Contact | Random Forest | 78.88 | 79.30 | 79.40 | 78.60 | 21.56 | 20.28 | 20.92 |
| IIITD Combined Spoofing | J48 + RF + MLP | 99.68 | 99.80 | 99.80 | 99.80 | 0.12 | 0.84 | 0.48 |

| Author/Year | Feature Extraction | Dataset | Performance Measure | Classifiers | Results (%) |
|---|---|---|---|---|---|
| P. Das et al., 2021 [39] | MSU PAD1, MSU PAD2, Notre Dame PAD | Clarkson University (CU), University of Notre Dame (ND), and Warsaw University of Technology (WUT) | APCER, BPCER, ACER | SVM, RF, MLP and CNN | ACER = 2.61; ACER = 2.18; ACER = 28.96 |
| Arora et al., 2021 [40] | CNN | IIITD | Accuracy, FAR | VGGNet | Acc = 97.98 |
| | | | | LeNet | Acc = 89.38 |
| | | | | ConvNet | Acc = 98.99 |
| Omran and Alshemmary, 2020 [41] | CNN, IRISNet | IIITD | Sensitivity, accuracy, specificity, precision, recall, G-mean, and F-measure | SVM, KNN, NB, DT | Acc = 96.43 |
| Zhao et al., 2019 [42] | Mask R-CNN | IIITD | Accuracy | R-CNN, CNN | Acc = 98.9 |
| Wang and Kumar, 2019 [43] | CNN-SDH, CNN-Joint Bayesian | PolyU bi-spectral | Accuracy | CNN, SDH | Acc = 90.71 |
| Cheng et al., 2019 [44] | CNN | CASIA-Iris-L | Accuracy | Hadamard + CNN | Acc = 97.41 |
| Chatterjee et al., 2019 [45] | DWT, ResNet | ATVS | Accuracy | ResNet | Acc = 92.57 |
| Proposed Approach | TSBTC, GLCM, fusion of TSBTC and GLCM | Clarkson 2013 | Accuracy, precision, recall, and F-measure | Random Forest | Acc = 93.78 |
| | | Clarkson 2015 | | Random Forest | Acc = 95.57 |
| | | IIITD Contact | | Random Forest | Acc = 78.88 |
| | | IIITD Combined Spoofing | | J48 + RF + MLP | Acc = 99.68, ACER = 0.48 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khade, S.; Gite, S.; Thepade, S.D.; Pradhan, B.; Alamri, A. Detection of Iris Presentation Attacks Using Feature Fusion of Thepade’s Sorted Block Truncation Coding with Gray-Level Co-Occurrence Matrix Features. Sensors 2021, 21, 7408. https://doi.org/10.3390/s21217408

