New Generation Federated Learning

Abstract

1. Introduction

- We combine incremental learning with federated learning and propose New Generation Federated Learning (NGFL), a framework dedicated to cross-client knowledge increments. It allows the shared knowledge to expand through the interaction of different clients.

- We summarize and define the most important challenges facing the NGFL framework and present basic solutions to them. We design several baseline schemes based on these solutions and give an upper bound on the algorithm's performance as a reference for further research.

- For the first time, we address the problem of model aggregation at the incremental fusion stage.

2. Related Works

2.1. Class-Incremental Learning

2.2. Federated Learning

2.3. Federated Class-Incremental Learning

3. Problem Definition

3.1. Class-Incremental Learning

3.2. Federated Learning

3.3. Model Decoupling

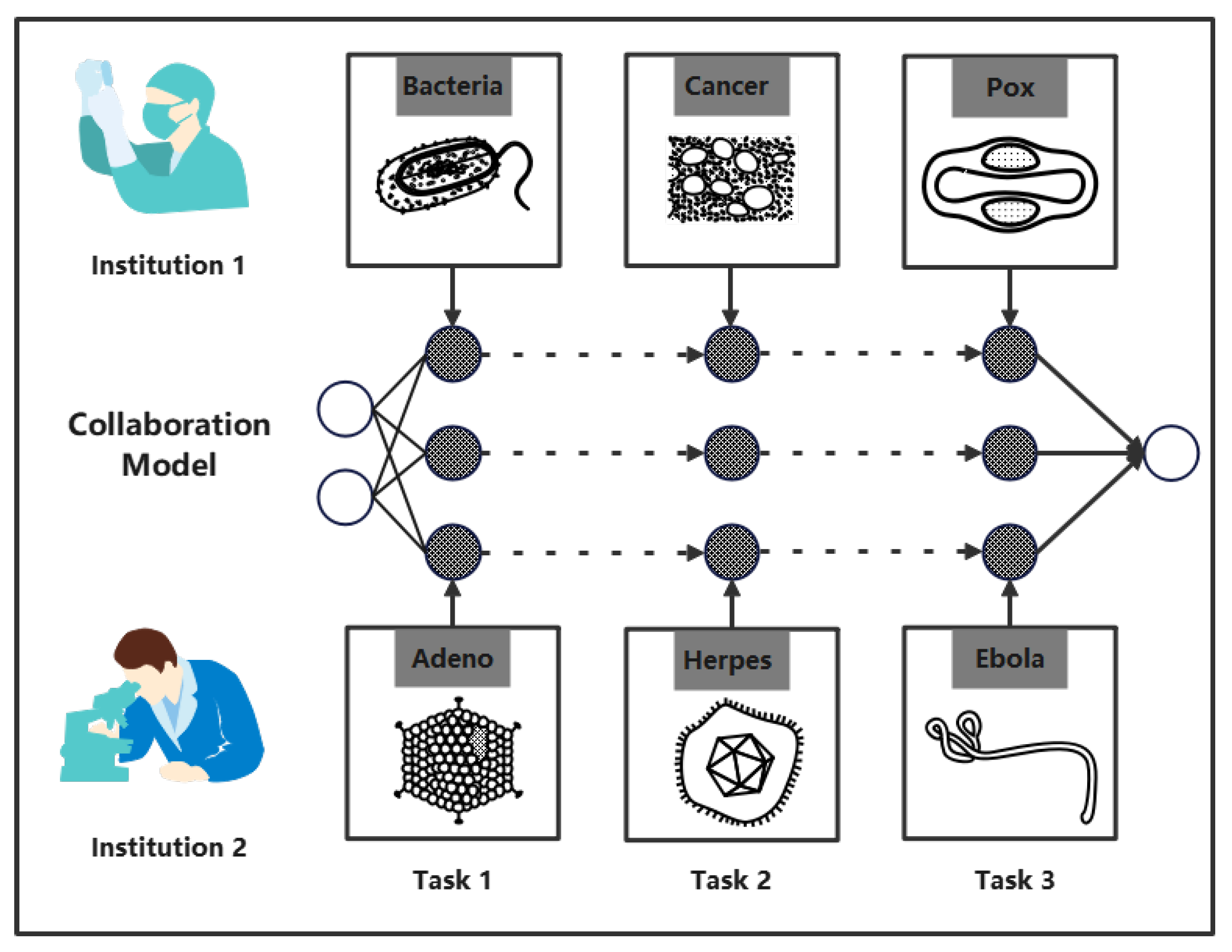

3.4. New Generation Federated Learning

- The server only needs to be given a basic task; further tasks should be learned from the participating clients.

- The server should aggregate the models that clients have trained on their different private tasks and still achieve good performance.

- No client should suffer from catastrophic forgetting.

- Clients should acquire good knowledge of other clients' tasks from the global model.

- This framework should apply to all algorithms in this field.

4. Important Issues

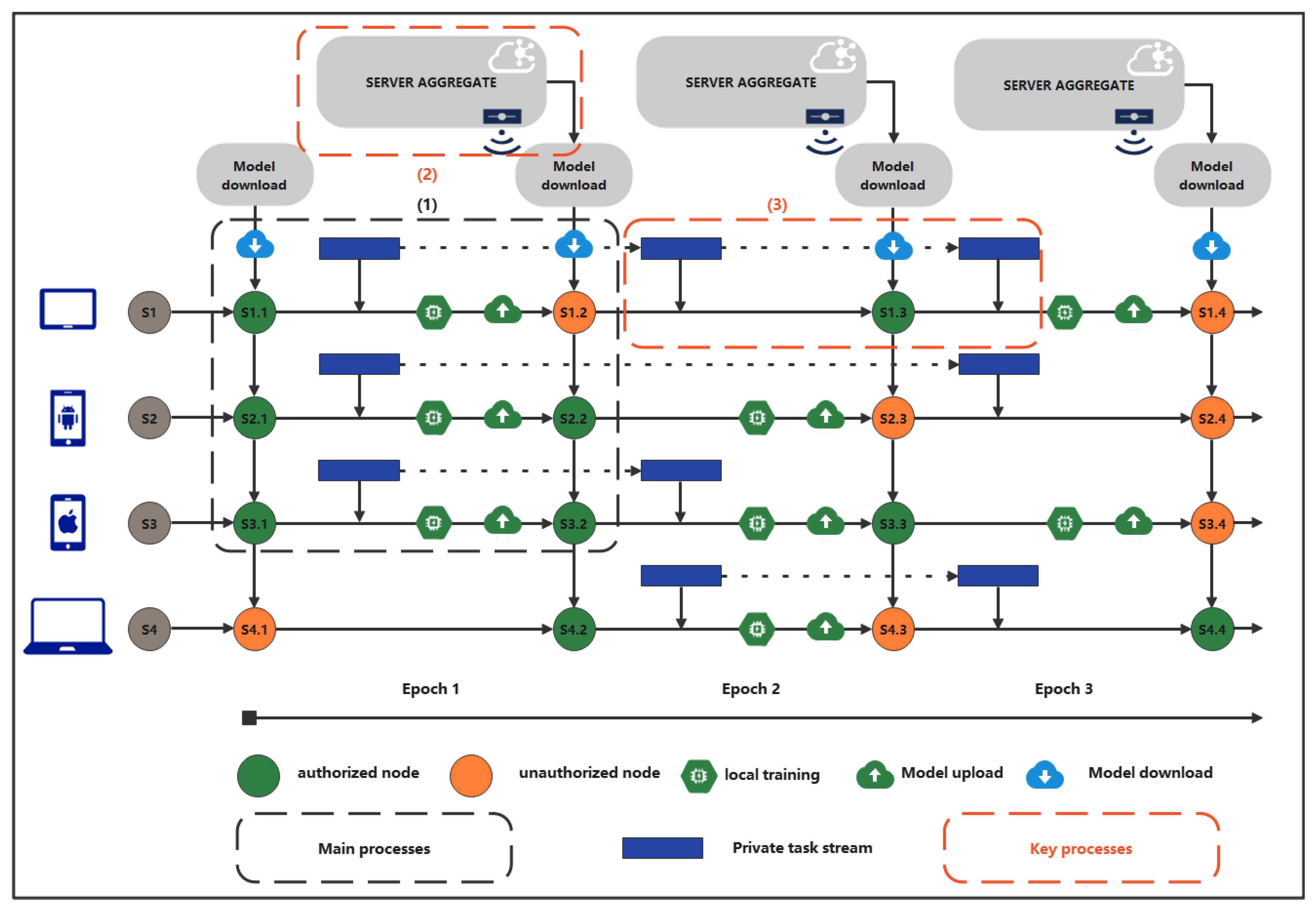

4.1. Client & Server Forgetting

Solution: Self-Attention and Total Attention

4.2. Task Overlap

- Full-covered: All classes in client i's latest private task in round r have already been incremented by other clients in previous rounds, i.e., the task introduces no new classes.

- Semi-covered: Some of the classes in client i's latest private task in round r have already been incremented by other clients in previous rounds, i.e., the task introduces some new classes.

- Not covered: None of the classes in client i's latest private task in round r have been incremented by other clients in previous rounds, i.e., all of its classes are new.
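The three overlap cases above can be read as set relations between the classes of a client's latest private task and the classes that other clients incremented in earlier rounds. The following is a minimal sketch under that reading, not the paper's own formulation: the names `Coverage` and `classify_overlap` and the use of plain Python sets of integer labels are assumptions made for illustration.

```python
from enum import Enum


class Coverage(Enum):
    """Hypothetical labels for the three task-overlap cases."""
    FULL = "full-covered"
    SEMI = "semi-covered"
    NONE = "not covered"


def classify_overlap(latest_task_classes, classes_from_others):
    """Classify a client's latest private task.

    latest_task_classes: class labels in the client's latest private task.
    classes_from_others: class labels already incremented by other clients
        in previous rounds.
    """
    latest = set(latest_task_classes)
    if not latest:
        raise ValueError("the latest private task must contain at least one class")

    # Classes of the latest task that other clients have already contributed.
    already_seen = latest & set(classes_from_others)

    if already_seen == latest:
        return Coverage.FULL   # no new classes
    if already_seen:
        return Coverage.SEMI   # a mix of seen and new classes
    return Coverage.NONE       # every class is new


if __name__ == "__main__":
    others = {0, 1, 2, 3}
    for task in ({1, 2}, {2, 7}, {8, 9}):
        print(sorted(task), classify_overlap(task, others).value)
```

A server-side component could use such a check to decide whether an incoming client model adds new output classes or only revisits known ones; how that decision is acted on is outside this sketch.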

4.2.1. Solution: Double-Ended Task Table

4.3. Model Aggregation

4.3.1. Solution: Pre-Alignment and Post-Alignment

Pre-Alignment (PreA)

Post-Alignment (PostA)

4.3.2. Solution: Partial Fusion and Total Fusion

4.4. Communication & Storage Limit

5. Experiments

5.1. Datasets

5.1.1. CIFAR100

5.1.2. Tiny-ImageNet

5.2. (Hyper)Parameters

5.3. Baseline Analysis

6. Conclusions & Discussion

7. Limitation & Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Notation | Description |
|---|---|
| | Global communication round |
| | Client task id |
| | Client local training round |
| | Label/class space at the t-th task |
| | Label/class space for client i at the t-th task |
| | Label/class space for client i at the t-th task in the r-th round |
| | Label/class space for all of client i's tasks in the r-th round |

| Methods | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Lower-Base(TA-TF) | 83.20 | 36.95 | 23.56 | 18.02 | 15.91 | 13.71 | 11.42 | 9.86 | 8.32 | 8.04 | 22.89 |
| Lower-Base(TA-PF) | 83.20 | 39.50 | 23.70 | 18.67 | 15.97 | 13.71 | 11.29 | 9.93 | 8.42 | 8.04 | 23.24 |
| Lower-Base(SA-TF) | 83.20 | 48.94 | 33.19 | 26.97 | 23.18 | 17.48 | 15.08 | 13.61 | 11.13 | 10.84 | 28.37 |
| Lower-Base(SA-PF) | 83.20 | 56.30 | 39.13 | 31.79 | 27.39 | 19.71 | 16.45 | 13.95 | 11.58 | 11.20 | 31.06 |
| Upper-Base(Self) | 83.20 | 69.80 | 65.30 | 62.40 | 61.60 | 60.60 | 58.60 | 56.70 | 54.90 | 53.50 | 62.60 |
| Upper-Base(Global) | 83.20 | 77.60 | 70.70 | 66.32 | 66.11 | 63.59 | 60.54 | 58.95 | 57.11 | 55.41 | 65.90 |

| Methods | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Lower-Base(TA-TF) | 83.20 | 37.10 | 23.29 | 18.15 | 16.07 | 13.65 | 11.51 | 9.84 | 8.39 | 7.95 | 22.91 |
| Lower-Base(TA-PF) | 83.20 | 39.70 | 23.40 | 18.50 | 15.90 | 13.60 | 11.25 | 10.00 | 8.38 | 8.00 | 23.19 |
| Lower-Base(SA-TF) | 83.20 | 48.40 | 33.16 | 26.49 | 23.34 | 17.45 | 14.72 | 13.41 | 11.35 | 10.88 | 28.24 |
| Lower-Base(SA-PF) | 83.20 | 55.94 | 39.63 | 31.72 | 27.68 | 20.20 | 15.80 | 13.77 | 12.11 | 11.00 | 31.08 |
| Upper-Base(Self) | 83.20 | 74.90 | 69.00 | 64.90 | 64.10 | 61.70 | 59.70 | 57.40 | 55.50 | 54.15 | 64.45 |
| Upper-Base(Global) | 83.20 | 78.60 | 71.40 | 67.30 | 65.20 | 63.50 | 60.60 | 59.80 | 57.20 | 56.90 | 66.37 |

| Methods | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Lower-Base(TA-TF) | 78.10 | 40.20 | 23.10 | 17.67 | 15.45 | 12.95 | 10.44 | 9.91 | 8.23 | 7.49 | 22.35 |
| Lower-Base(TA-PF) | 78.10 | 39.80 | 24.50 | 18.00 | 15.37 | 12.58 | 10.10 | 9.91 | 8.16 | 7.50 | 22.40 |
| Lower-Base(SA-TF) | 78.10 | 48.50 | 34.90 | 26.30 | 20.90 | 15.30 | 13.00 | 11.40 | 9.16 | 8.17 | 26.57 |
| Lower-Base(SA-PF) | 78.10 | 55.80 | 41.00 | 28.40 | 22.90 | 16.40 | 13.90 | 12.17 | 9.71 | 9.03 | 28.74 |
| Upper-Base(Self) | 78.10 | 69.90 | 63.20 | 59.10 | 58.40 | 58.50 | 56.50 | 55.80 | 54.10 | 53.20 | 60.68 |
| Upper-Base(Global) | 78.10 | 74.10 | 68.10 | 64.87 | 64.85 | 64.36 | 62.11 | 61.16 | 60.65 | 58.85 | 65.71 |

| Methods | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Lower-Base(TA-TF) | 78.10 | 40.30 | 23.49 | 18.60 | 15.70 | 13.15 | 10.59 | 10.23 | 8.28 | 7.85 | 22.63 |
| Lower-Base(TA-PF) | 78.10 | 40.50 | 23.63 | 18.37 | 15.45 | 12.83 | 10.18 | 10.17 | 8.23 | 7.73 | 22.52 |
| Lower-Base(SA-TF) | 78.10 | 58.60 | 37.50 | 22.27 | 17.82 | 14.04 | 11.00 | 10.86 | 8.61 | 8.04 | 26.68 |
| Lower-Base(SA-PF) | 78.10 | 62.50 | 39.06 | 24.20 | 18.70 | 15.03 | 12.08 | 11.11 | 8.85 | 8.26 | 27.78 |
| Upper-Base(Self) | 78.10 | 72.40 | 64.43 | 61.50 | 60.68 | 59.70 | 57.71 | 56.25 | 55.13 | 53.35 | 61.92 |
| Upper-Base(Global) | 78.10 | 74.00 | 68.63 | 65.70 | 64.80 | 63.51 | 61.28 | 61.22 | 60.05 | 59.03 | 65.63 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, B.; Chen, S.; Peng, Z. New Generation Federated Learning. Sensors 2022, 22, 8475. https://doi.org/10.3390/s22218475
