Evaluation of In-Cloth versus On-Skin Sensors for Measuring Trunk and Upper Arm Postures and Movements

Abstract

1. Introduction

2. Materials and Methods

2.1. Demographic Data

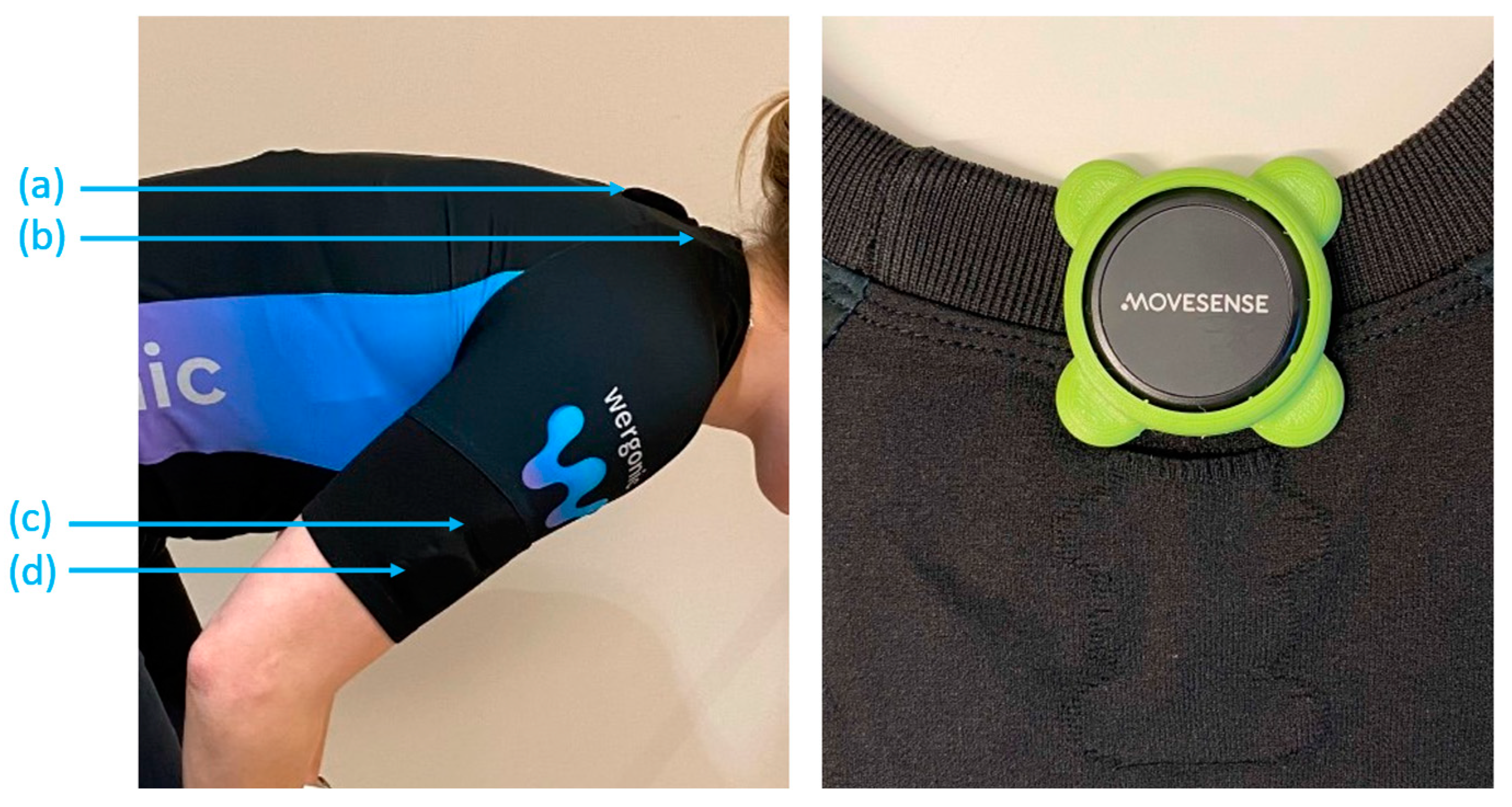

2.2. Experimental Setups

2.3. Experimental Protocol

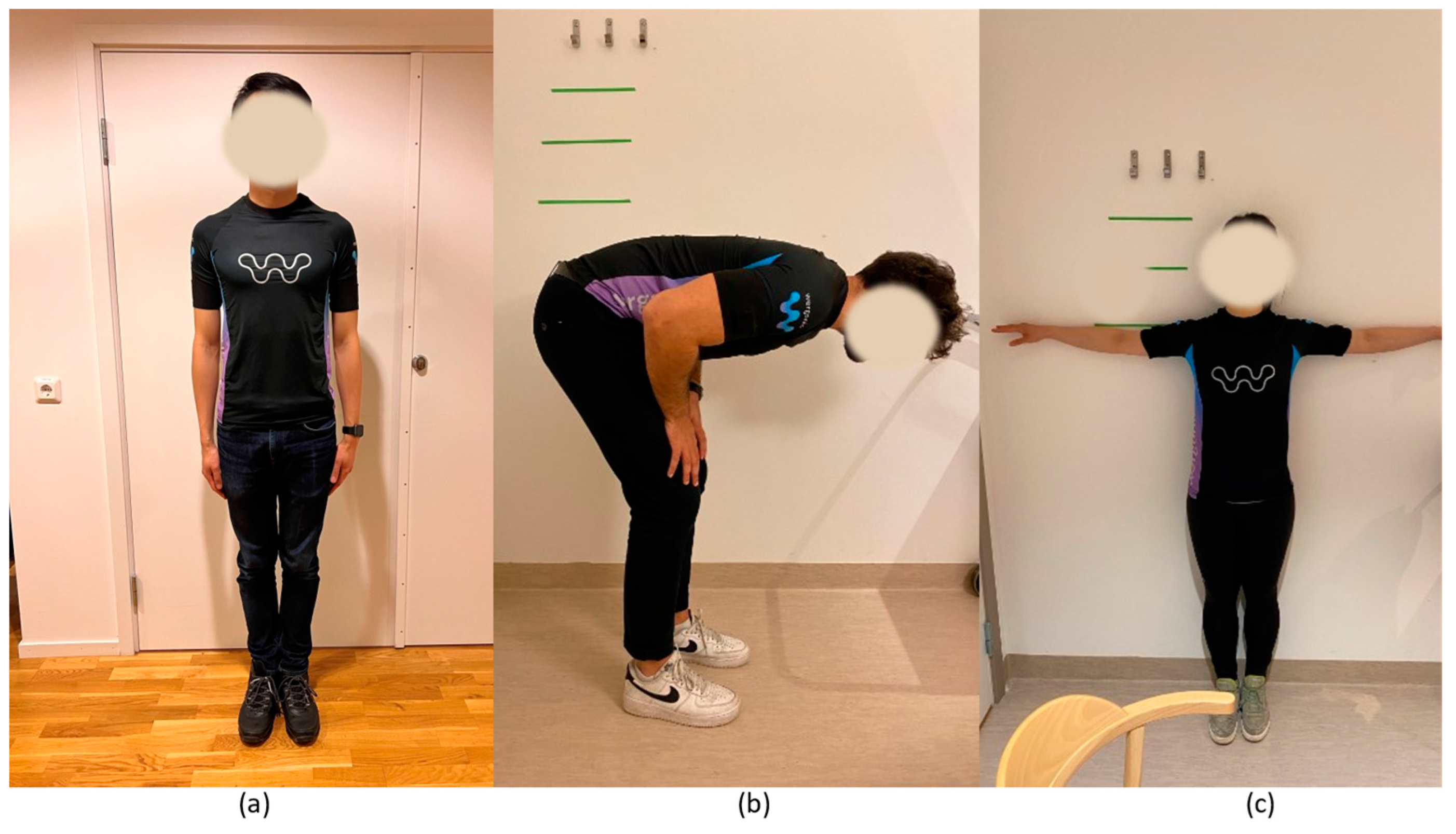

- (a) I-pose: stand up straight and look straight forward with arms at each side;

- (b) Forward trunk bending: bow forward at about 90°;

- (c) T-pose: stand up straight and look straight forward, and hold the arms horizontally to the sides at 90°.

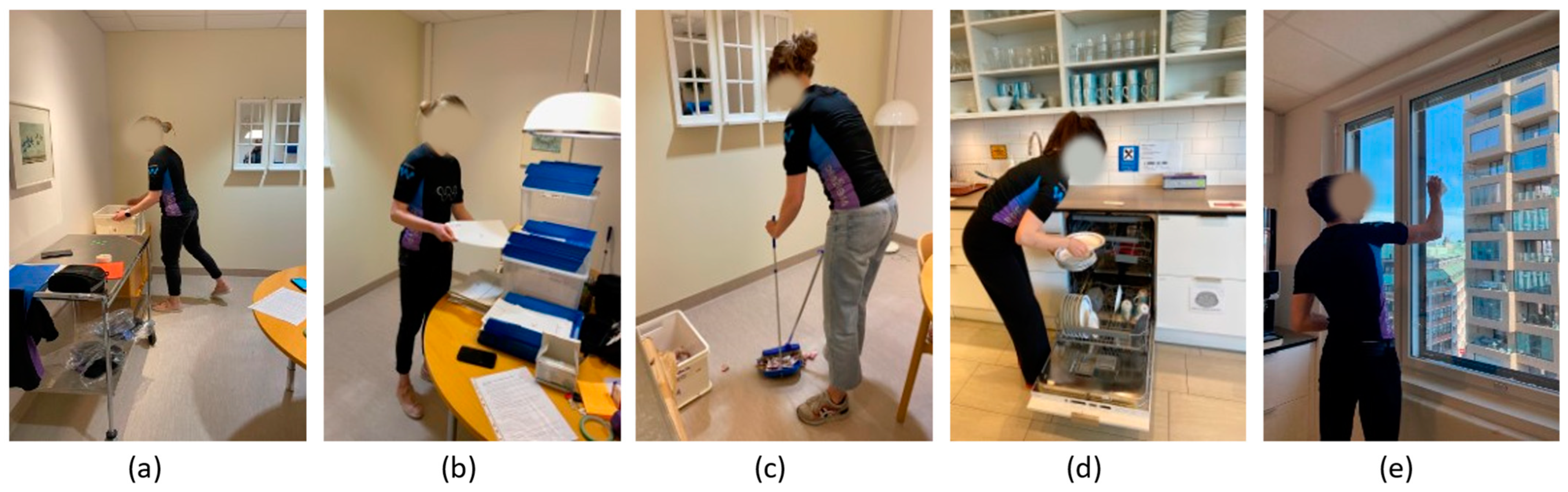

- (a) Lifting boxes: lift a light box from the floor to the table in front and put it back, and from the floor to the table to the side and put it back;

- (b) Sorting mail: sort mail with marked letters into the corresponding compartments at different heights;

- (c) Wiping floor: clean up paper scraps on the floor and put them into a box using a shovel and broom;

- (d) Cleaning dishwasher: empty cups and plates from the dishwasher and store them on shelves;

- (e) Cleaning windows: clean windows with markers at different heights using a rag and spray bottle.

2.4. Data Fusion and Signal Processing

- Inclination angles (arms): upper arm inclination angles were obtained by calculating the relative angle to the reference I-pose [29];

- Forward/sagittal inclination angles (trunk): the forward inclination angles (inclination angles in the sagittal plane) were obtained using Hansson forward/backward projections, with the corresponding I-pose as the reference and forward trunk bending to indicate the direction [30];

- Inclination velocities (arms and trunk): these were computed by simple temporal differentiation, i.e., by dividing the difference between two samples of inclination angles by the sampling time.
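The angle and velocity steps listed above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names are hypothetical, the inclination is assumed to be the angle between a segment-fixed direction vector (for example, the measured gravity direction) and the same vector recorded in the reference I-pose, and the velocity is assumed to use consecutive samples. The Hansson forward/backward projection used for the trunk is not reproduced here.

```python
import math


def inclination_deg(vec, ref):
    """Angle in degrees between a 3D vector and its I-pose reference.

    Assumption: both vectors express the same segment-fixed direction,
    `ref` being the value recorded during the reference I-pose.
    """
    dot = sum(a * b for a, b in zip(vec, ref))
    norm = math.sqrt(sum(a * a for a in vec)) * math.sqrt(sum(b * b for b in ref))
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def inclination_velocity(angles_deg, sampling_time_s):
    """Simple temporal differentiation of an inclination-angle series.

    Each output is the difference between two consecutive samples
    divided by the sampling time, in degrees per second.
    """
    return [
        (later - earlier) / sampling_time_s
        for earlier, later in zip(angles_deg, angles_deg[1:])
    ]


if __name__ == "__main__":
    reference = (0.0, 0.0, 1.0)                 # hypothetical I-pose vector
    samples = [(0.0, 0.0, 1.0), (0.0, 1.0, 1.0), (0.0, 1.0, 0.0)]
    angles = [inclination_deg(s, reference) for s in samples]
    print(angles)
    print(inclination_velocity(angles, sampling_time_s=0.1))
```

A plain finite difference amplifies measurement noise, so in practice the resulting velocities depend on the sampling rate and on any filtering applied beforehand.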

2.5. Statistical Analysis

3. Results

3.1. Angular Distributions

3.2. Angular Velocity

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Non-Dominant Arm, Inclination (simulated work tasks) | Lifting Boxes | Sorting Mail | Wiping Floor | Cleaning Dishwasher | Cleaning Windows |
|---|---|---|---|---|---|
| Percentile (°) | | | | | |
| 5th | 1.1 ± 2 (3.8) | 1.4 ± 2.5 (3.5) | 2.2 ± 1.7 (9.5) | 0.9 ± 1 (5.8) | 1.2 ± 1.6 (3.3) |
| 10th | 1.4 ± 2.2 (5.4) | 1.4 ± 2.5 (4.6) | 2.4 ± 1.7 (12.6) | 0.9 ± 0.6 (8.6) | 1.4 ± 2 (4.7) |
| 50th | 1.3 ± 1.7 (15.6) | 1.7 ± 2.3 (9.7) | 2 ± 1.8 (29.8) | 1.9 ± 1.1 (28.8) | 1.7 ± 2.2 (13.3) |
| 90th | 2.4 ± 1.9 (30.6) | 2.2 ± 2.6 (19.4) | 2.6 ± 2.4 (51.9) | 5.5 ± 4.3 (72.9) | 2.8 ± 3.5 (37.6) |
| 95th | 3 ± 2.1 (35) | 2.8 ± 2.9 (24.4) | 2.7 ± 2.4 (57.2) | 6.5 ± 4.1 (83.2) | 5.4 ± 4.3 (50.8) |
| Percentage of time (%) | | | | | |
| <20° | 4.8 ± 3.8 (65.2) | 7.8 ± 20.4 (91.5) | 4.3 ± 2.5 (28.9) | 3.5 ± 2.3 (37.1) | 4.7 ± 6.8 (71.8) |
| >30° | 4.2 ± 5.1 (11.7) | 0.9 ± 1.6 (2.3) | 3.7 ± 3.3 (48) | 2.8 ± 2.3 (47.4) | 1.4 ± 2.1 (14.1) |
| >45° | 0.6 ± 1.4 (0.9) | 0.4 ± 0.8 (1.2) | 3.9 ± 4.9 (22.8) | 1.5 ± 1.5 (29.7) | 1.2 ± 1.5 (7.4) |
| >60° | 0 ± 0 (0) | 0.2 ± 0.3 (0.4) | 2.1 ± 3.4 (4.7) | 2.2 ± 2.1 (17.6) | 0.9 ± 1.3 (4.3) |
| >90° | 0 ± 0 (0) | 0 ± 0.1 (0) | 0 ± 0 (0.1) | 1.8 ± 1.8 (3.4) | 0.5 ± 0.8 (1.4) |
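
The two kinds of summary measures tabulated in this appendix, percentiles of a signal and the percentage of time spent below or above an angle threshold, could be computed along the following lines. This is a minimal sketch rather than the authors' code: the function names are hypothetical, the percentile uses linear interpolation between ranked samples (an assumption, since the interpolation rule is not stated here), and uniform sampling is assumed so that the fraction of samples equals the fraction of time.

```python
def percentile(values, p):
    """p-th percentile (0-100) using linear interpolation between ranks."""
    data = sorted(values)
    if not data:
        raise ValueError("percentile of an empty series is undefined")
    rank = (len(data) - 1) * p / 100.0
    lower = int(rank)
    upper = min(lower + 1, len(data) - 1)
    return data[lower] + (data[upper] - data[lower]) * (rank - lower)


def percent_time_above(values, threshold):
    """Percentage of (uniformly spaced) samples strictly above threshold."""
    return 100.0 * sum(1 for v in values if v > threshold) / len(values)


def percent_time_below(values, threshold):
    """Percentage of (uniformly spaced) samples strictly below threshold."""
    return 100.0 * sum(1 for v in values if v < threshold) / len(values)


if __name__ == "__main__":
    # Hypothetical inclination angles in degrees, one per sample.
    angles = [0, 10, 20, 30, 40, 50, 60, 70, 80, 90]
    for p in (5, 10, 50, 90, 95):
        print(p, percentile(angles, p))
    print("<20 deg:", percent_time_below(angles, 20))
    for limit in (30, 45, 60, 90):
        print(">", limit, "deg:", percent_time_above(angles, limit))
```

The same functions apply to a velocity series, where only the percentile rows are reported.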

| Non-Dominant Arm, Inclination Velocity (simulated work tasks) | Lifting Boxes | Sorting Mail | Wiping Floor | Cleaning Dishwasher | Cleaning Windows |
|---|---|---|---|---|---|
| Percentile (°/s) | | | | | |
| 5th | 0.3 ± 0.2 (2.7) | 0.2 ± 0.1 (1.5) | 0.2 ± 0.1 (1.8) | 0.2 ± 0.2 (2.3) | 0.3 ± 0.2 (2.6) |
| 10th | 0.6 ± 0.4 (6.6) | 0.3 ± 0.2 (3.5) | 0.2 ± 0.1 (4.4) | 0.3 ± 0.3 (5.2) | 0.4 ± 0.2 (5.8) |
| 50th | 2.1 ± 2.1 (34) | 1 ± 0.8 (17.4) | 0.5 ± 0.5 (23.5) | 1.4 ± 1.1 (30.3) | 1.6 ± 1.3 (30.9) |
| 90th | 4.9 ± 4.7 (97.7) | 3.5 ± 2.4 (52.7) | 1.9 ± 1.6 (68.6) | 5.5 ± 4.6 (106) | 3.7 ± 3 (94.7) |
| 95th | 6.6 ± 5.4 (124.6) | 4.9 ± 3.7 (69.3) | 1.9 ± 1.5 (88) | 6.6 ± 4 (140.5) | 5 ± 4.3 (122.2) |

| Dominant Arm, Generalized Velocity (simulated work tasks) | Lifting Boxes | Sorting Mail | Wiping Floor | Cleaning Dishwasher | Cleaning Windows |
|---|---|---|---|---|---|
| Percentile (°/s) | | | | | |
| 5th | 1.1 ± 0.6 (11.3) | 1.5 ± 0.7 (11.6) | 0.6 ± 0.4 (11.1) | 0.9 ± 0.6 (10.8) | 1.8 ± 1.4 (19.4) |
| 10th | 1.4 ± 0.7 (17.1) | 2.1 ± 0.8 (17.4) | 0.9 ± 0.5 (17.3) | 1.2 ± 0.9 (16.6) | 2.4 ± 2 (31.3) |
| 50th | 4.5 ± 4.3 (53) | 7 ± 2.9 (53.1) | 5.6 ± 3.3 (57.6) | 3.8 ± 2.2 (58.5) | 15.3 ± 5.1 (124.2) |
| 90th | 21.9 ± 28.1 (134.9) | 20.5 ± 11.9 (132.2) | 39.7 ± 29.5 (179.9) | 15.6 ± 18.7 (170.8) | 54 ± 30.1 (324.6) |
| 95th | 30.7 ± 40.9 (174.7) | 25.9 ± 16.1 (164.9) | 58.4 ± 49.7 (256.6) | 26.5 ± 35.3 (235.7) | 71.5 ± 40.2 (398.1) |

| Non-Dominant Arm, Generalized Velocity (simulated work tasks) | Lifting Boxes | Sorting Mail | Wiping Floor | Cleaning Dishwasher | Cleaning Windows |
|---|---|---|---|---|---|
| Percentile (°/s) | | | | | |
| 5th | 1.1 ± 1.2 (11.9) | 0.7 ± 0.4 (6.8) | 0.6 ± 0.7 (9.2) | 1 ± 0.8 (9.6) | 0.9 ± 0.9 (12.3) |
| 10th | 1.6 ± 1 (17.9) | 0.8 ± 0.6 (10.4) | 0.9 ± 0.8 (13.7) | 1.2 ± 0.7 (14.7) | 1 ± 1.1 (18.3) |
| 50th | 3.9 ± 3.8 (55.2) | 2.7 ± 2.4 (32.7) | 2.3 ± 1.4 (41.1) | 3.6 ± 1.5 (53.4) | 3.4 ± 1.6 (56.6) |
| 90th | 29.7 ± 42.1 (145.3) | 12.9 ± 17 (94.1) | 12.7 ± 23.2 (104.4) | 12.5 ± 8.4 (159.4) | 18 ± 23.6 (148.5) |
| 95th | 39.1 ± 53.2 (191.9) | 12.4 ± 8.6 (123.4) | 25.8 ± 49.5 (136.6) | 22.9 ± 18.7 (216.4) | 27.4 ± 35.3 (189.9) |

References

1. Kok, J.D.; Vroonhof, P.; Snijders, J.; Roullis, G.; Clarke, M.; Peereboom, K.; Dorst, P.V.; Isusi, I. Work-Related MSDs: Prevalence, Costs and Demographics in the EU (European Risk Observatory Executive Summary); Publications Office of the European Union: Luxembourg, 2019; pp. 1–18.
2. Ahlberg, I. The Economic Costs of Musculoskeletal Disorders, a Cost-of-Illness Study in Sweden for 2012; Lund University: Lund, Sweden, 2014.
3. Van Der Beek, A.J.; Dennerlein, J.T.; Huysmans, M.A.; Mathiassen, S.E.; Burdorf, A.; Van Mechelen, W.; Van Dieën, J.H.; Frings-Dresen, M.H.; Holtermann, A.; Janwantanakul, P.; et al. A research framework for the development and implementation of interventions preventing work-related musculoskeletal disorders. Scand. J. Work Environ. Health 2017, 31, 526–539.
4. Wells, R. Why have we not solved the MSD problem? Work 2009, 34, 117–121.
5. Forsman, M. The search for practical and reliable observational or technical risk assessment methods to be used in prevention of musculoskeletal disorders. Agron. Res. 2017, 15, 680–686.
6. Hulshof, C.T.; Pega, F.; Neupane, S.; van der Molen, H.F.; Colosio, C.; Daams, J.G.; Frings-Dresen, M.H. The prevalence of occupational exposure to ergonomic risk factors: A systematic review and meta-analysis from the WHO/ILO Joint Estimates of the Work-related Burden of Disease and Injury. Environ. Int. 2021, 146, 106157.
7. Hansson, G.Å.; Balogh, I.; Byström, J.U.; Ohlsson, K.; Nordander, C.; Asterland, P.; Malmo Shoulder-Neck Study Group. Questionnaire versus direct technical measurements in assessing postures and movements of the head, upper back, arms and hands. Scand. J. Work Environ. Health 2001, 27, 30–40.
8. Koch, M.; Lunde, L.K.; Gjulem, T.; Knardahl, S.; Veiersted, K.B. Validity of questionnaire and representativeness of objective methods for measurements of mechanical exposures in construction and health care work. PLoS ONE 2016, 11, e0162881.
9. Takala, E.P.; Pehkonen, I.; Forsman, M.; Hansson, G.Å.; Mathiassen, S.E.; Neumann, W.P.; Winkel, J. Systematic evaluation of observational methods assessing biomechanical exposures at work. Scand. J. Work Environ. Health 2010, 36, 3–24.
10. Rhén, I.M.; Forsman, M. Inter- and intra-rater reliability of the OCRA checklist method in video-recorded manual work tasks. Appl. Ergon. 2020, 84, 103025.
11. Gupta, N.; Rasmussen, C.L.; Forsman, M.; Søgaard, K.; Holtermann, A. How does accelerometry-measured arm elevation at work influence prospective risk of long-term sickness absence? Scand. J. Work Environ. Health 2022, 48, 137–147.
12. Gupta, N.; Bjerregaard, S.S.; Yang, L.; Forsman, M.; Rasmussen, C.L.; Rasmussen, C.D.N.; Holtermann, A. Does occupational forward bending of the back increase long-term sickness absence risk? A 4-year prospective register-based study using device-measured compositional data analysis. Scand. J. Work Environ. Health 2022, 48, 651–661.
13. Arvidsson, I.; Dahlqvist, C.; Enquist, H.; Nordander, C. Action Levels for the Prevention of Work-Related Musculoskeletal Disorders in the Neck and Upper Extremities: A Proposal. Ann. Work Expo. Health 2021, 65, 741–747.
14. Wang, D.; Dai, F.; Ning, X. Risk Assessment of Work-Related Musculoskeletal Disorders in Construction: State-of-the-Art Review. J. Constr. Eng. Manag. 2015, 141, 04015008.
15. Lanata, A.; Greco, A.; Di Modica, S.; Niccolini, F.; Vivaldi, F.; Di Francesco, F.; Scilingo, E.P. A New Smart-Fabric based Body Area Sensor Network for Work Risk Assessment. In Proceedings of the 2020 IEEE International Workshop on Metrology for Industry 4.0 & IoT, Roma, Italy, 3–5 June 2020; pp. 187–190.
16. Yang, L.; Lu, K.; Diaz-Olivares, J.A.; Seoane, F.; Lindecrantz, K.; Forsman, M.; Eklund, J.A. Towards Smart Work Clothing for Automatic Risk Assessment of Physical Workload. IEEE Access 2018, 6, 40059–40072.
17. Manivasagam, K.; Yang, L. Evaluation of a New Simplified Inertial Sensor Method against Electrogoniometer for Measuring Wrist Motion in Occupational Studies. Sensors 2022, 22, 1690.
18. Lim, S.; D’Souza, C. A narrative review on contemporary and emerging uses of inertial sensing in occupational ergonomics. Int. J. Ind. Ergon. 2020, 76, 102937.
19. Huang, C.; Kim, W.; Zhang, Y.; Xiong, S. Development and validation of a wearable inertial sensors-based automated system for assessing work-related musculoskeletal disorders in the workspace. Int. J. Environ. Res. Public Health 2020, 17, 6050.
20. Zhang, X.; Schall, M.C.; Chen, H.; Gallagher, S.; Davis, G.A.; Sesek, R. Manufacturing worker perceptions of using wearable inertial sensors for multiple work shifts. Appl. Ergon. 2022, 98, 103579.
21. Digo, E.; Pastorelli, S.; Gastaldi, L. A Narrative Review on Wearable Inertial Sensors for Human Motion Tracking in Industrial Scenarios. Robotics 2022, 11, 138.
22. Lorenz, M.; Bleser, G.; Akiyama, T.; Niikura, T.; Stricker, D.; Taetz, B. Towards Artefact Aware Human Motion Capture using Inertial Sensors Integrated into Loose Clothing. In Proceedings of the IEEE 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 1682–1688.
23. Jayasinghe, U.; Harwin, W.S.; Hwang, F. Comparing Clothing-Mounted Sensors with Wearable Sensors for Movement Analysis and Activity Classification. Sensors 2019, 20, 82.
24. Gleadhill, S.; James, D.; Lee, J. Validating Temporal Motion Kinematics from Clothing Attached Inertial Sensors. In Proceedings of the 12th Conference of the International Sports Engineering Association, Brisbane, QLD, Australia, 26–29 March 2018; p. 304.
25. Camomilla, V.; Dumas, R.; Cappozzo, A. Human movement analysis: The soft tissue artefact issue. J. Biomech. 2017, 62, 1–4.
26. Xiao, X.; Zarar, S. Machine Learning for Placement-Insensitive Inertial Motion Capture. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 6716–6721.
27. Fan, X.; Lind, C.M.; Rhen, I.-M.; Forsman, M. Effects of Sensor Types and Angular Velocity Computational Methods in Field Measurements of Occupational Upper Arm and Trunk Postures and Movements. Sensors 2021, 21, 5527.
28. Chen, H.; Schall, M.C.; Fethke, N.B. Measuring upper arm elevation using an inertial measurement unit: An exploration of sensor fusion algorithms and gyroscope models. Appl. Ergon. 2020, 89, 103187.
29. Yang, L.; Grooten, W.J.A.; Forsman, M. An iPhone application for upper arm posture and movement measurements. Appl. Ergon. 2017, 65, 492–500.
30. Hansson, G.; Asterland, P.; Holmer, N.-G.; Skerfving, S. Validity and reliability of triaxial accelerometers for inclinometry in posture analysis. Med. Biol. Eng. Comput. 2001, 39, 405–413.
31. Nordander, C.; Hansson, G.Å.; Ohlsson, K.; Arvidsson, I.; Balogh, I.; Strömberg, U.; Skerfving, S. Exposure–response relationships for work-related neck and shoulder musculoskeletal disorders—Analyses of pooled uniform data sets. Appl. Ergon. 2016, 55, 70–84.
32. Wahlström, J.; Bergsten, E.; Trask, C.; Mathiassen, S.E.; Jackson, J.; Forsman, M. Full-Shift Trunk and Upper Arm Postures and Movements among Aircraft Baggage Handlers. Ann. Occup. Hyg. 2016, 60, 977–990.
33. Schall, M.C.; Fethke, N.B.; Chen, H. Working postures and physical activity among registered nurses. Appl. Ergon. 2016, 54, 243–250.
34. Granzow, R.F.; Schall, M.C.; Smidt, M.F.; Chen, H.; Fethke, N.B.; Huangfu, R. Characterizing exposure to physical risk factors among reforestation hand planters in the Southeastern United States. Appl. Ergon. 2018, 66, 1–8.
35. Forsman, M.; Fan, X.; Rhen, I.M.; Lind, C.M. Mind the gap—Development of conversion models between accelerometer- and IMU-based measurements of arm and trunk postures and movements in warehouse work. Appl. Ergon. 2022, 105, 103841.

| Dominant Arm, Inclination (simulated work tasks) | Lifting Boxes | Sorting Mail | Wiping Floor | Cleaning Dishwasher | Cleaning Windows |
|---|---|---|---|---|---|
| Percentile (°) | | | | | |
| 5th | 0.7 ± 0.5 (4.5) | 1 ± 1.8 (3.7) | 2.2 ± 1.6 (8.4) | 0.6 ± 0.4 (5.6) | 1.7 ± 1.2 (10.2) |
| 10th | 0.7 ± 0.7 (6.4) | 1.1 ± 1.8 (5) | 2.4 ± 1.7 (11.4) | 0.9 ± 0.3 (7.9) | 2.5 ± 2.8 (15.8) |
| 50th | 1.4 ± 1.1 (17.1) | 1.5 ± 1.9 (11.8) | 2.7 ± 2 (23.6) | 1.2 ± 1.4 (23.6) | 4.1 ± 3.5 (52.7) |
| 90th | 3.5 ± 2.1 (32.4) | 2.8 ± 3.1 (25.6) | 2.5 ± 1.6 (36.6) | 7.6 ± 3.8 (70.8) | 7 ± 4.3 (103.2) |
| 95th | 4.1 ± 2.2 (36.2) | 3.6 ± 3.3 (34.3) | 2.2 ± 1.4 (40.8) | 8.3 ± 3.8 (83.1) | 7.4 ± 5 (112.2) |
| Percentage of time (%) | | | | | |
| <20° | 6.1 ± 4.4 (61.8) | 4.6 ± 5.8 (81.1) | 9.3 ± 8.5 (39.3) | 2.6 ± 2.8 (41.8) | 2.2 ± 2 (18.9) |
| >30° | 3.1 ± 2.7 (13.9) | 2.6 ± 4.1 (8.3) | 7.1 ± 6.1 (27.2) | 2.5 ± 3.1 (38.8) | 2.4 ± 1.3 (71.4) |
| >45° | 1.6 ± 2.5 (1.7) | 1.3 ± 2.5 (3.4) | 2.1 ± 4 (4.7) | 1.5 ± 2 (23.4) | 3.7 ± 2.5 (57.8) |
| >60° | 0.1 ± 0.2 (0.1) | 0.6 ± 1 (1.2) | 0.4 ± 1.1 (0.2) | 3.3 ± 2.4 (15.8) | 4.4 ± 3.6 (44.6) |
| >90° | – | 0.1 ± 0.5 (0.1) | – | 2 ± 1.9 (3.1) | 4.7 ± 2.4 (19.9) |

| Trunk, Forward Inclination (simulated work tasks) | Lifting Boxes | Sorting Mail | Wiping Floor | Cleaning Dishwasher | Cleaning Windows |
|---|---|---|---|---|---|
| Percentile (°) | | | | | |
| 5th | 2.8 ± 1.9 (2.4) | 2.6 ± 1.4 (5.6) | 2.5 ± 2 (20.5) | 3.3 ± 4.1 (−3.8) | 3.6 ± 3.3 (−8.4) |
| 10th | 2.9 ± 1.9 (5.8) | 2.7 ± 1.5 (7.7) | 2.6 ± 1.9 (25.2) | 3.2 ± 4.8 (−0.7) | 4.1 ± 3.3 (−6.1) |
| 50th | 3.2 ± 2.7 (23.6) | 2.7 ± 1.7 (14.4) | 3.1 ± 2.5 (41.9) | 3.7 ± 3.9 (20.4) | 3.7 ± 3.2 (3.1) |
| 90th | 6.8 ± 7.2 (63.8) | 2.7 ± 1.7 (20.7) | 3.9 ± 3.7 (53.6) | 5.6 ± 6.7 (64) | 3.9 ± 3.7 (16.6) |
| 95th | 6.8 ± 7.2 (67.3) | 2.5 ± 1.9 (22.4) | 4.1 ± 4.1 (56.3) | 5.8 ± 6.4 (70.9) | 3.9 ± 3.6 (21.1) |
| Percentage of time (%) | | | | | |
| <20° | 4.8 ± 4.2 (42.5) | 10.7 ± 11.4 (78.4) | 2.4 ± 2.4 (6.8) | 4.5 ± 6.9 (48.6) | 4.4 ± 5.9 (93.1) |
| >30° | 4.6 ± 5.3 (43.5) | 1.4 ± 2.7 (2.9) | 4.8 ± 4.2 (78.1) | 3.4 ± 4 (42.6) | 1.4 ± 1.7 (2.6) |
| >45° | 4 ± 4.5 (30) | 0 ± 0 (0.1) | 7 ± 7.1 (36.7) | 3.3 ± 4.6 (26.8) | 0.2 ± 0.4 (0.5) |
| >60° | 7.2 ± 9.3 (14.8) | 0 ± 0.1 (0.1) | 6.4 ± 10.9 (8.6) | 4.2 ± 7.3 (14) | 0 ± 0.1 (0) |
| >90° | – | – | – | – | – |

| Dominant Arm, Inclination Velocity (simulated work tasks) | Lifting Boxes | Sorting Mail | Wiping Floor | Cleaning Dishwasher | Cleaning Windows |
|---|---|---|---|---|---|
| Percentile (°/s) | | | | | |
| 5th | 0.3 ± 0.3 (2.5) | 0.4 ± 0.3 (2.1) | 0.2 ± 0.1 (2.5) | 0.3 ± 0.3 (2.4) | 0.4 ± 0.3 (4.3) |
| 10th | 0.5 ± 0.6 (6.4) | 0.7 ± 0.4 (5.5) | 0.4 ± 0.3 (6) | 0.5 ± 0.4 (5.8) | 0.9 ± 0.6 (10.4) |
| 50th | 1.8 ± 1.5 (33.5) | 3.3 ± 1.7 (30.1) | 1 ± 0.7 (31.8) | 1.4 ± 1.2 (32.2) | 4.5 ± 3.4 (64.8) |
| 90th | 4.1 ± 3.3 (96.3) | 10.9 ± 5.5 (90.5) | 4.1 ± 4 (91.7) | 5.1 ± 4.7 (108.7) | 18.8 ± 16.3 (191.6) |
| 95th | 5.1 ± 3.8 (121.7) | 15.3 ± 7.6 (116.5) | 5.5 ± 5.7 (116.2) | 9.4 ± 7.3 (142.3) | 26.1 ± 24.8 (244.1) |

| Trunk, Forward Inclination Velocity (simulated work tasks) | Lifting Boxes | Sorting Mail | Wiping Floor | Cleaning Dishwasher | Cleaning Windows |
|---|---|---|---|---|---|
| Percentile (°/s) | | | | | |
| 5th | 0.3 ± 0.2 (2.6) | 0.1 ± 0.1 (1) | 0.1 ± 0.1 (1.4) | 0.2 ± 0.1 (1.6) | 0.2 ± 0.2 (1.9) |
| 10th | 0.3 ± 0.3 (5) | 0.1 ± 0.1 (2) | 0.2 ± 0.1 (2.9) | 0.3 ± 0.2 (3.2) | 0.2 ± 0.2 (4.2) |
| 50th | 2 ± 3.1 (27.7) | 0.4 ± 0.3 (9.8) | 1 ± 0.7 (15.2) | 0.9 ± 1.2 (17.6) | 1.3 ± 0.7 (22.3) |
| 90th | 11 ± 10.7 (115.5) | 1.6 ± 1 (28.7) | 2.9 ± 2 (48.5) | 3.5 ± 3.1 (64.5) | 3.3 ± 1.8 (64.2) |
| 95th | 13.2 ± 12.1 (154.5) | 2.1 ± 1.3 (36.9) | 3.5 ± 3 (64) | 5.2 ± 5.2 (87.8) | 3.2 ± 1.8 (81.8) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hoareau, D.; Fan, X.; Abtahi, F.; Yang, L. Evaluation of In-Cloth versus On-Skin Sensors for Measuring Trunk and Upper Arm Postures and Movements. Sensors 2023, 23, 3969. https://doi.org/10.3390/s23083969
