A Concept of a Plug-In Simulator for Increasing the Effectiveness of Rescue Operators When Using Hydrostatically Driven Manipulators

Abstract

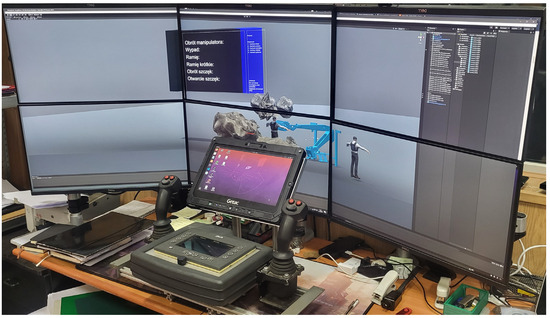

1. Introduction

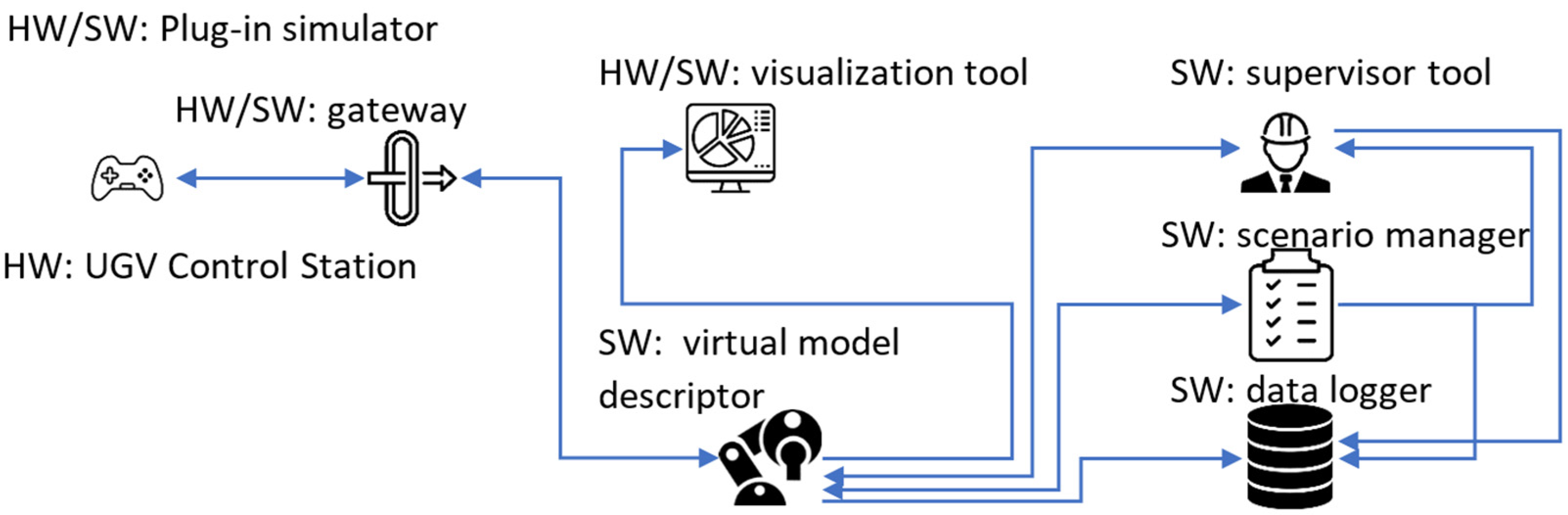

2. Materials and Methods

2.1. Methodology for Building the Model Descriptor and Supervisor Tool

2.2. Data Evaluation

- performance indicator index;

- test duration;

- reference test duration;

- number of object collisions in which the force exceeded the maximum value;

- number of body collisions in which the force exceeded the maximum value;

- number of overloads in the maximum direction;

- number of overloads in the minimum direction;

- subjective accuracy;

- subjective situational awareness;

- completion indicator.

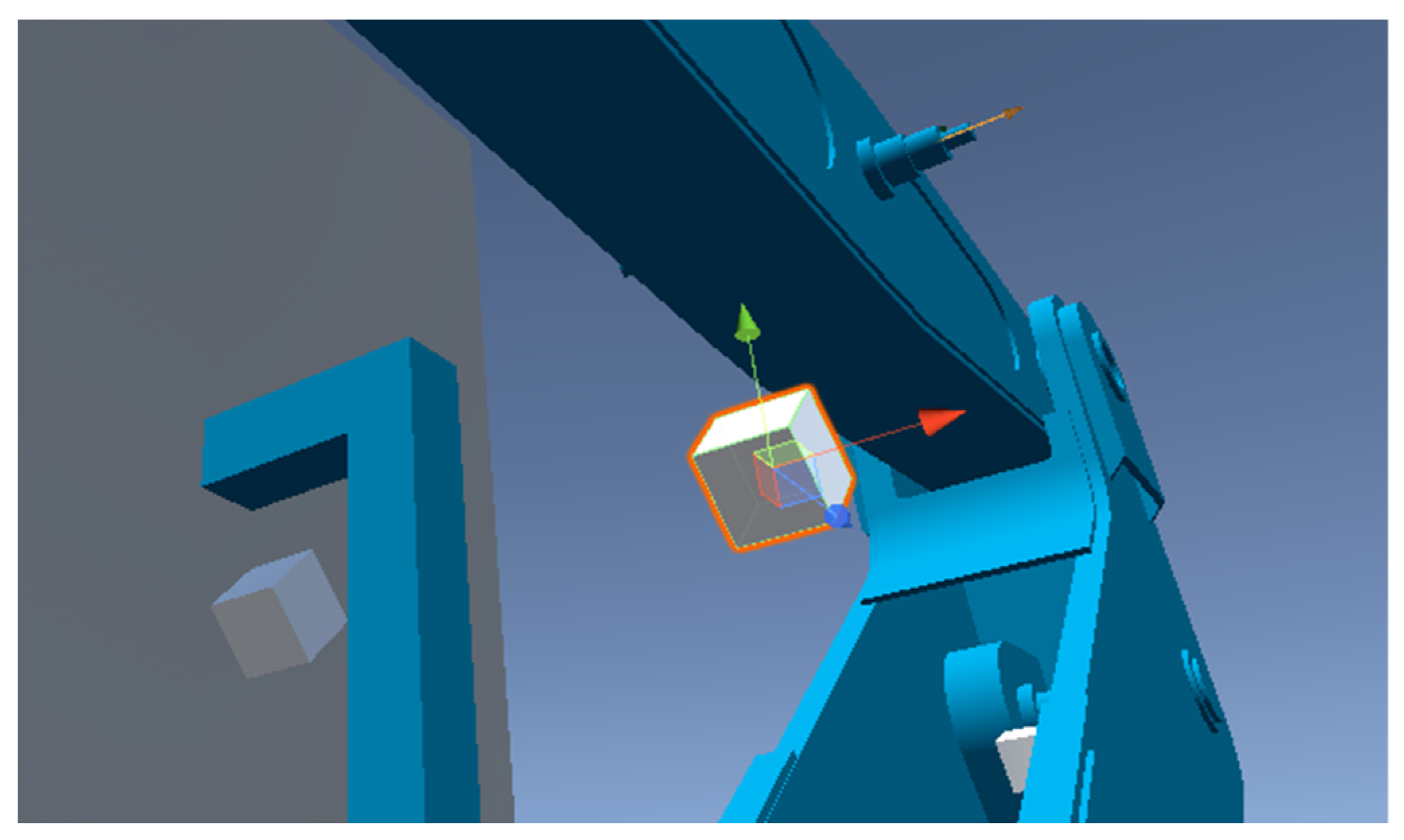

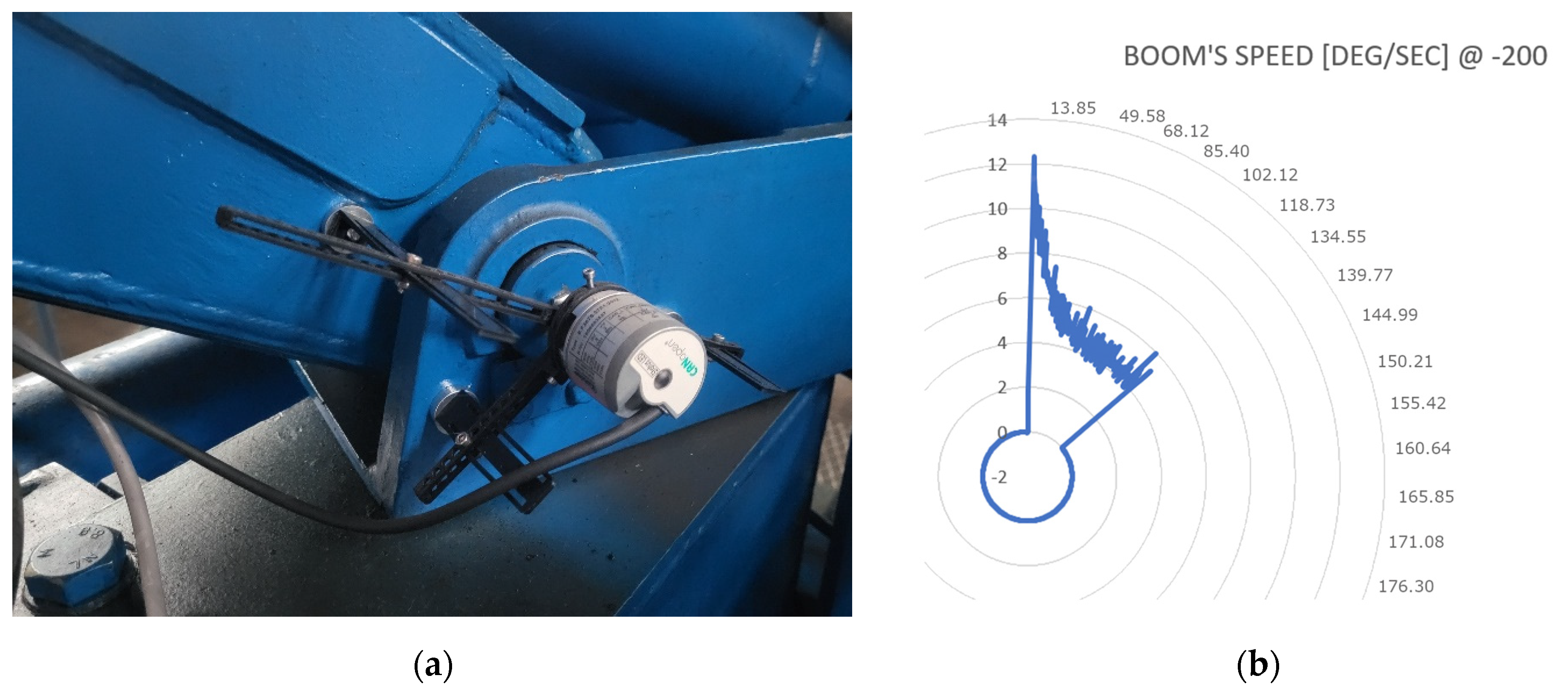

2.3. Hydrostatic Arm Model

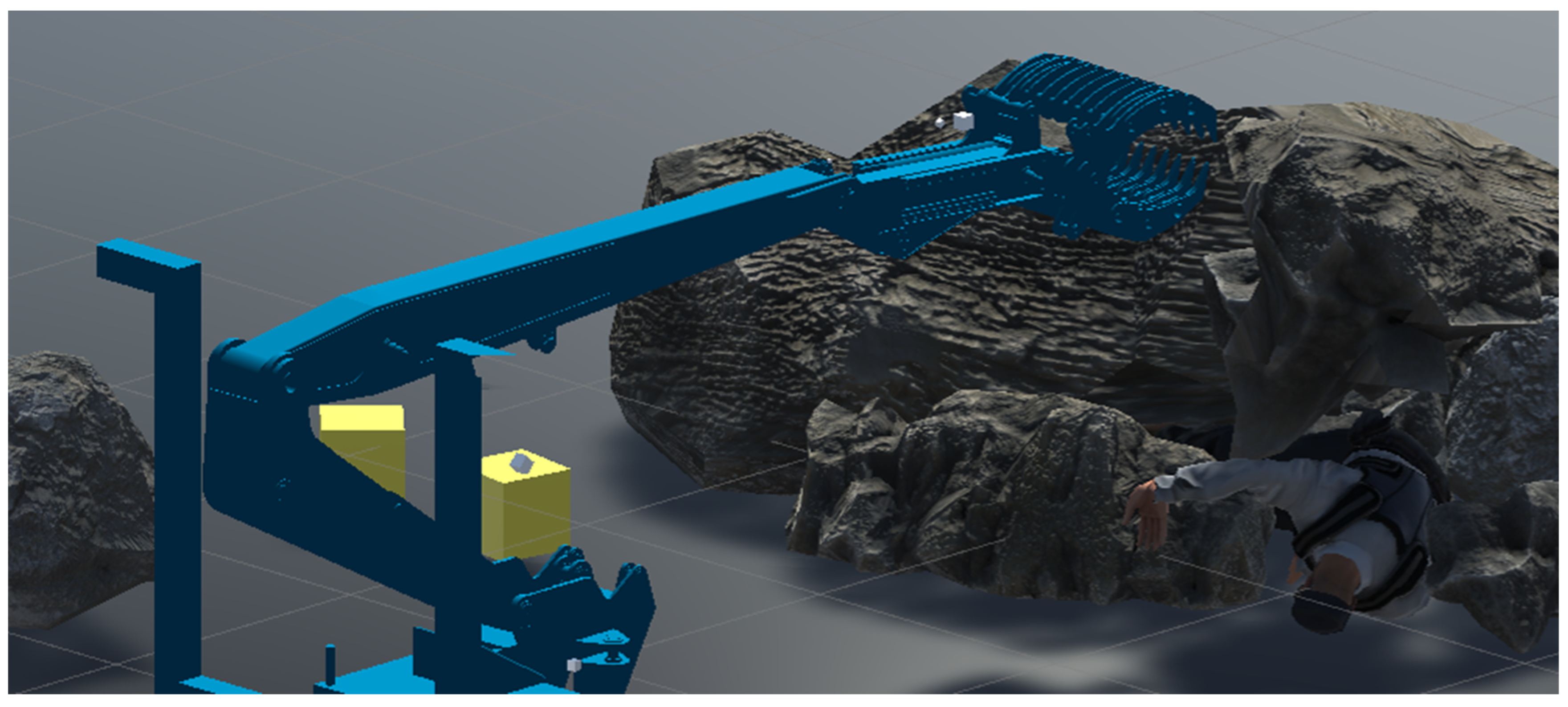

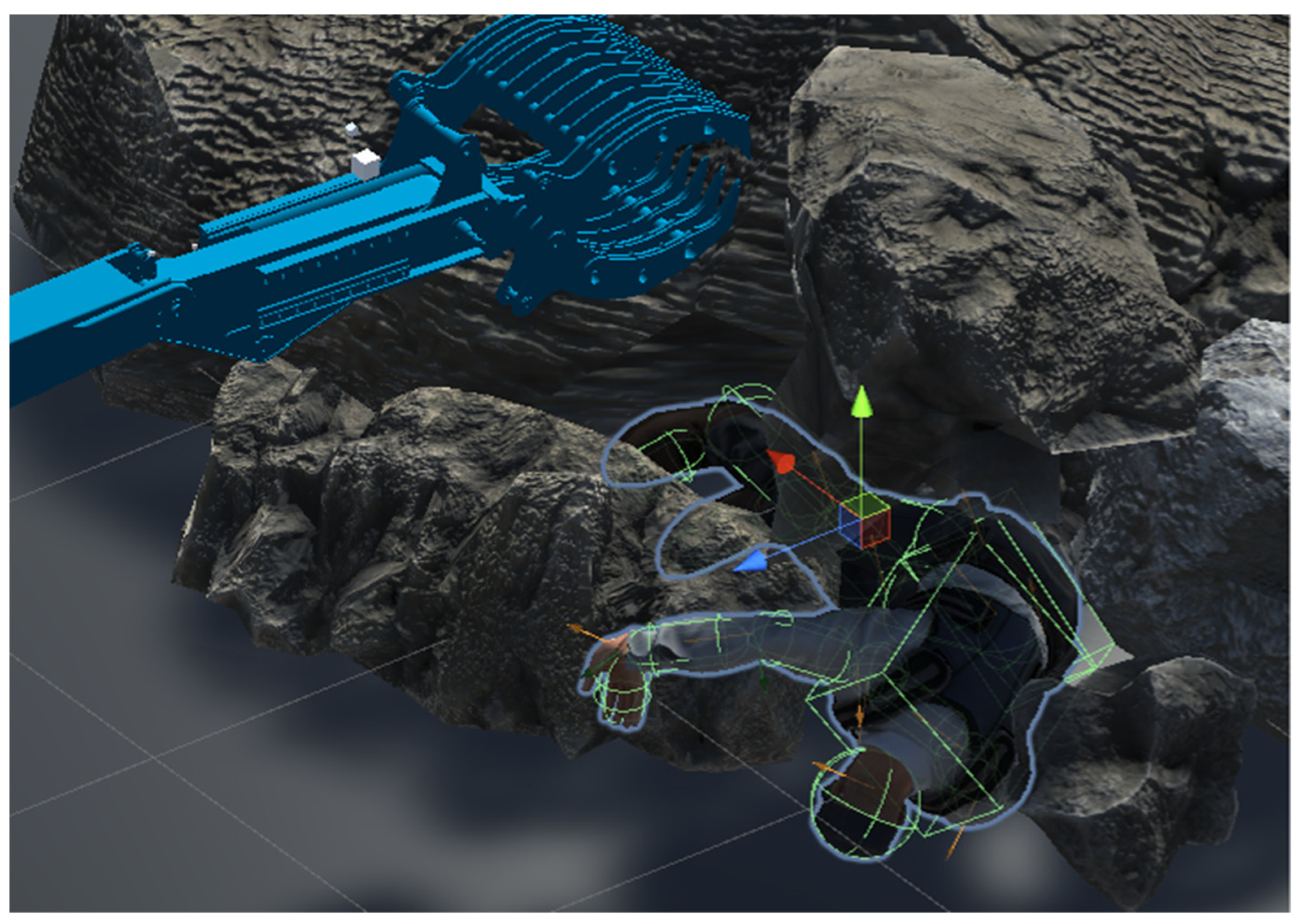

2.4. Simulator’s Functionality

3. Results

- 0, 20, 60, 100, 140, 180, 220.

- 0, −20, −60, −100, −140, −180, −220.

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| ID | Name | Parameter Name | Type | Visualized | Recorded |
|---|---|---|---|---|---|
| 1 | Joint angle | jax_y | Float | No | Yes |
| 2 | Joint speed | jsx_y | Float | No | Yes |
| 3 | Joint collision | jcx_y | Bool | Possible | Yes |
| 4 | Object name per joint collision | onx_y_z | String | No | Yes |
| 5 | Joint movement start | tjmx_y_start | Bool | No | Yes |
| 6 | Joint movement stop | tjmx_y_stop | Bool | No | Yes |
| 7 | Joint maximum position overload | tjox_y_max | Bool | No | Yes |
| 8 | Joint minimum position overload | tjox_y_min | Bool | No | Yes |
| 9 | Time start | tstart | Long | Yes | Yes |
| 10 | Time end | tstop | Long | Yes | Yes |
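
The recorded joint parameters listed in the table above could be grouped into one record per sample. The following is a minimal sketch under stated assumptions, not the simulator's actual data format: the class name `JointSample`, its field names, and the reading of `x` and `y` as two integer indices embedded in the parameter names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JointSample:
    """One recorded sample for one joint; field names are illustrative only."""

    x: int                # assumed: first index in names such as jax_y
    y: int                # assumed: second index in names such as jax_y
    angle: float          # "Joint angle" (jax_y)
    speed: float          # "Joint speed" (jsx_y)
    collision: bool       # "Joint collision" (jcx_y)
    overload_max: bool    # "Joint maximum position overload" (tjox_y_max)
    overload_min: bool    # "Joint minimum position overload" (tjox_y_min)

    def to_row(self) -> dict:
        """Return the sample keyed by the parameter names from the table."""
        suffix = f"{self.x}_{self.y}"
        return {
            f"ja{suffix}": self.angle,
            f"js{suffix}": self.speed,
            f"jc{suffix}": self.collision,
            f"tjo{suffix}_max": self.overload_max,
            f"tjo{suffix}_min": self.overload_min,
        }


# Example values are made up for illustration.
sample = JointSample(x=1, y=2, angle=0.35, speed=0.02,
                     collision=False, overload_max=True, overload_min=False)
row = sample.to_row()
```

Keeping the table's parameter names as dictionary keys means a recorded log can be matched column by column against the table, while the typed fields mirror the Float and Bool types it lists.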

| ID | Name | Parameter Name | Type | Visualized | Recorded |
|---|---|---|---|---|---|
| 1 | Human body collision | hcx | Bool | Possible | Yes |
| 2 | Human body maximum force in collision point | hcxf | Float | No | Yes |
| 3 | Object collision | ocx | Bool | No | Yes |
| 4 | Object maximum force in collision point | ocxf | Float | No | Yes |
| 5 | Maximum force on body without manipulator contact | hcxf_nm | Float | No | Yes |
| 6 | Maximum force on object without manipulator contact | ocxf_nm | Float | No | Yes |
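
The evaluation counts body and object collisions in which the force exceeded a maximum value, and the table above records a maximum force per collision point. A minimal sketch of such a count is given below. The function name, the example force values, and the threshold are hypothetical, and the strict "greater than" comparison is an assumption about how "exceeded" is applied.

```python
from typing import Iterable


def count_force_exceedances(peak_forces: Iterable[float], max_force: float) -> int:
    """Count collisions whose recorded peak force is strictly above max_force."""
    return sum(1 for force in peak_forces if force > max_force)


# Made-up peak forces for four object collisions (the ocxf column) and a
# made-up threshold; neither value comes from the article.
object_peak_forces = [10.0, 55.0, 49.9, 80.0]
n_object_exceedances = count_force_exceedances(object_peak_forces, max_force=50.0)
```

The same function can be applied to the body collision forces (the `hcxf` column) with its own threshold.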

| Manipulator Segment | MAE | MSE | RMSE |
|---|---|---|---|
| Boom | 0.0589 | 0.0006 | 0.0244 |
| Arm | 0.0530 | 0.0004 | 0.0220 |
| Long arm | 0.0424 | 0.0003 | 0.0154 |
| Short arm | 0.0381 | 0.0003 | 0.0123 |
| Jaws | 0.0343 | 0.0002 | 0.0111 |
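
For reference, the three error measures in the table above have standard definitions: MAE is the mean of the absolute errors, MSE is the mean of the squared errors, and RMSE is the square root of MSE. The sketch below computes them for two equally long series. The function name and the sample values are hypothetical, and the sketch makes no claim about how the reference and simulated signals were sampled or normalized in the study.

```python
import math
from typing import Sequence


def error_metrics(reference: Sequence[float], simulated: Sequence[float]) -> dict:
    """Return MAE, MSE and RMSE between two equally long series."""
    if len(reference) != len(simulated) or not reference:
        raise ValueError("series must be non-empty and of equal length")
    errors = [s - r for r, s in zip(reference, simulated)]
    mae = sum(abs(e) for e in errors) / len(errors)
    mse = sum(e * e for e in errors) / len(errors)
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse)}


# Made-up example series, not data from the article.
metrics = error_metrics(reference=[0.0, 1.0, 2.0], simulated=[0.0, 1.5, 1.0])
```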

| Control signal | 20 | 60 | 100 | 140 | 180 |
|---|---|---|---|---|---|
| MAE | 0.0445 | 0.0424 | 0.0554 | 0.0665 | 0.0795 |

| Control signal | −20 | −60 | −100 | −140 | −180 |
|---|---|---|---|---|---|
| MAE | 0.0401 | 0.0386 | 0.0406 | 0.0611 | 0.0694 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Typiak, R. A Concept of a Plug-In Simulator for Increasing the Effectiveness of Rescue Operators When Using Hydrostatically Driven Manipulators. Sensors 2024, 24, 1084. https://doi.org/10.3390/s24041084