A Multiple Attention Convolutional Neural Networks for Diesel Engine Fault Diagnosis

Abstract

1. Introduction

- (1)

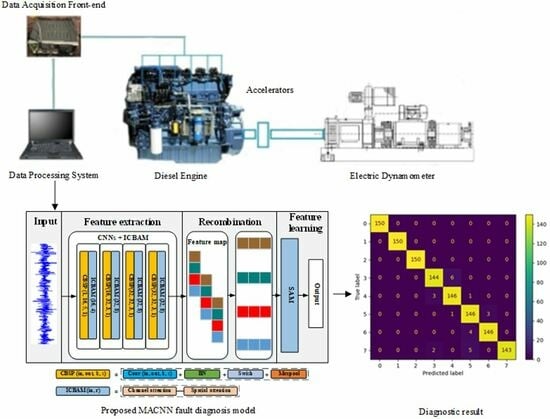

- The paper improves the arrangement of channel attention and spatial attention in the CBAM method while optimizing the kernel size.

- (2)

- The feature map obtained after feature extraction is sequentially recombined to preserve the temporal features of the vibration signal. The recombined feature maps are recognized using SAM.

- (3)

- A Swish activation function is introduced to suppress “Dead ReLU”, and the dynamic learning rate curve is designed to improve the convergence efficiency.
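Contribution (3) relies on Swish keeping a gradient for negative inputs, where ReLU's gradient is exactly zero. As a minimal sketch, assuming the standard form Swish(x) = x·σ(βx) from Ramachandran et al. with β = 1 (the β used in MACNN is not stated here), the following Python compares the two gradients at a few points.

```python
import math

def sigmoid(x: float) -> float:
    # numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def swish(x: float, beta: float = 1.0) -> float:
    # Swish(x) = x * sigmoid(beta * x); beta = 1 is an assumption (also known as SiLU)
    return x * sigmoid(beta * x)

def swish_grad(x: float, beta: float = 1.0) -> float:
    # d/dx [x * s(beta x)] = s + beta * x * s * (1 - s)
    s = sigmoid(beta * x)
    return s + beta * x * s * (1.0 - s)

def relu_grad(x: float) -> float:
    # ReLU'(x) is 0 for every x <= 0 (the value 0 is chosen at x = 0)
    return 1.0 if x > 0 else 0.0

for x in (-3.0, -1.0, 0.0, 2.0):
    print(f"x={x:+.1f}  ReLU'={relu_grad(x):.3f}  "
          f"Swish={swish(x):+.4f}  Swish'={swish_grad(x):+.4f}")
```

Swish is not monotonic: its gradient crosses zero once, near x ≈ −1.28, but unlike ReLU it is not zero over the whole negative half-line, so a unit pushed into that region can still receive updates.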

2. Background Theories

2.1. Convolutional Neural Networks

2.2. Convolutional Block Attention Module

2.3. Self-Attention Mechanism
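Equations (6)–(7) for the self-attention step are not part of this extract. As a stand-in, the sketch below implements single-head scaled dot-product self-attention from Vaswani et al. in NumPy, applied to a sequence of T time steps with d_model features each. The sizes and the random projection matrices are hypothetical, and MACNN's SAM may differ (for example, in head count or how the output is pooled before the classifier).

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)    # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, wq, wk, wv):
    """x: (T, d_model) sequence, one row per time step."""
    q, k, v = x @ wq, x @ wk, x @ wv           # queries, keys, values: (T, d_k)
    scores = q @ k.T / np.sqrt(k.shape[-1])    # (T, T) scaled dot products
    weights = softmax(scores, axis=-1)         # each row sums to 1
    return weights @ v, weights                # context vectors (T, d_k), attention map

rng = np.random.default_rng(0)
T, d_model, d_k = 120, 32, 16                  # hypothetical sizes
x = rng.standard_normal((T, d_model))
wq, wk, wv = (rng.standard_normal((d_model, d_k)) / np.sqrt(d_model) for _ in range(3))
out, attn = self_attention(x, wq, wk, wv)
print(out.shape, attn.shape)
```

Each output row is a weighted mix of all time steps, which is what lets the model relate features that are far apart in the vibration signal.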

3. Data Preparation

4. Proposed Method

4.1. Feature Extraction

4.2. Optimization of ICBAM

4.3. Feature Recombination

4.4. Feature Learning

| Algorithm 1. Proposed model |
|---|
| Model: MACNN |
| Input: training set TRAIN_data and TRAIN_label; test data TEST_data |
| Output: predicted labels of the test data, TEST_label |
| Training: |
| 1: for k = 1, …, K do // forward propagation |
| 2: Calculate the feature map P based on (1), (2), (3). |
| 3: Calculate the feature map after adding attention, P2, based on (4), (5), (10). |
| 4: Recombine P2 to obtain the recombined sequence based on (11). |
| 5: Use self-attention to learn the recombined sequence based on (6)–(7). |
| 6: Use the Adam optimizer to update the parameters. // back propagation |
| 7: end |
| Testing: Use TEST_data to predict the labels TEST_label with the trained model. |
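Step 6 of Algorithm 1 updates the parameters with Adam. The paper's Adam hyperparameters and its dynamic learning-rate curve are not given in this extract, so the following NumPy sketch assumes the default settings of Kingma and Ba and a fixed learning rate. It shows only the update rule, applied to a toy quadratic loss that stands in for the network loss; it is not the MACNN training loop.

```python
import numpy as np

class Adam:
    """Minimal Adam update rule; default hyperparameters of Kingma and Ba assumed."""

    def __init__(self, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0

    def step(self, params, grads):
        if self.m is None:
            self.m = np.zeros_like(params)
            self.v = np.zeros_like(params)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grads        # 1st moment
        self.v = self.beta2 * self.v + (1 - self.beta2) * grads ** 2   # 2nd moment
        m_hat = self.m / (1 - self.beta1 ** self.t)                    # bias correction
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

# Toy objective standing in for the network loss: f(w) = ||w - target||^2
target = np.array([1.0, -2.0, 0.5])
w = np.zeros(3)
opt = Adam(lr=0.05)
for k in range(1, 501):            # k = 1, ..., K with a hypothetical K = 500
    grad = 2.0 * (w - target)      # gradient that back propagation would supply
    w = opt.step(w, grad)
print(np.round(w, 3))
```

Because of the bias correction, the very first step moves each parameter by almost exactly `lr` in the direction opposite its gradient, regardless of the gradient's scale.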

5. Result Analysis

5.1. Training and Testing

5.2. Analysis of the MACNN Output

- (1) ACNN: MACNN without ICBAM, used to verify the effectiveness of introducing ICBAM.

- (2) MACNN-noSAM: identical to MACNN in that the four-layer convolutional network with ICBAM extracts the features, but the recombined sequence features are then flattened and fed directly into the fully connected layer, without SAM.

5.3. Model Evaluation

5.4. Comparison with Other Diagnosis Methods

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Items | Specifications |
|---|---|
| Number of cylinders | 6, in-line |
| Valves per cylinder | 4 |
| Displacement | 7.14 L |
| Bore/stroke | 108/130 mm |
| Rated power/speed | 220 kW/2300 r/min |
| Maximum torque/speed range | 1250 N·m/1200–1600 r/min |

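| Label | Fault Type | Fault Degree |
|---|---|---|
| 0 | Insufficient fuel supply (normal: 100%) | 75% |
| 1 | Insufficient fuel supply (normal: 100%) | 25% |
| 2 | Normal | -- |
| 3 | Abnormal rail pressure (normal: 1500 bar) | 1300 bar |
| 4 | Abnormal rail pressure (normal: 1500 bar) | 1100 bar |
| 5 | Abnormal valve clearance (normal: in 0.30 mm, out 0.50 mm) | in 0.25 mm, out 0.45 mm |
| 6 | Abnormal valve clearance (normal: in 0.30 mm, out 0.50 mm) | in 0.35 mm, out 0.55 mm |
| 7 | Abnormal valve clearance (normal: in 0.30 mm, out 0.50 mm) | in 0.40 mm, out 0.60 mm |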

| Channel-Attention Kernel Size | Test Accuracy (%) |
|---|---|
| 1 × 1 | 93.71 |
| 3 × 1 | 93.72 |
| 5 × 1 | 94.71 |
| 7 × 1 | 95.89 |
| 11 × 1 | 94.08 |

| Spatial-Attention Kernel Size | Test Accuracy (%) |
|---|---|
| 1 × 1 | 84.58 |
| 3 × 1 | 95.76 |
| 5 × 1 | 95.09 |
| 7 × 1 | 96.55 |
| 11 × 1 | 90.96 |

| Description | Test Accuracy (%) |
|---|---|
| Channel attention only | 95.89 |
| Spatial attention only | 96.55 |
| Channel → spatial (sequential) | 96.67 |
| Spatial → channel (sequential) | 96.79 |
| Channel and spatial in parallel (case 1) | 96.89 |
| Channel and spatial in parallel (case 2) | 99.88 |
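The table shows that the arrangement of the two attention branches matters. The NumPy sketch below implements the two sequential arrangements from the original CBAM of Woo et al., adapted to a 1D (channels × time) feature map: channel attention through a shared two-layer MLP with reduction ratio r, and spatial attention as a "same"-padded convolution with kernel size k over the channel-wise average and max maps. The weights are random rather than trained and the sizes are hypothetical. The ICBAM parallel arrangements (cases 1 and 2) and its channel-attention kernel are not specified in this extract, so they are not reproduced.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(x, w1, w2):
    """x: (C, L). Squeeze the time axis, then weight each channel."""
    def shared_mlp(d):
        return w2 @ np.maximum(w1 @ d, 0.0)      # C -> C/r -> C with ReLU
    a = sigmoid(shared_mlp(x.mean(axis=1)) + shared_mlp(x.max(axis=1)))
    return x * a[:, None]                        # broadcast over time

def spatial_attention(x, kernel):
    """Squeeze the channel axis, then weight each time step; kernel is (2, k)."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])             # (2, L)
    s = sum(np.correlate(pooled[i], kernel[i], mode="same") for i in range(2))
    return x * sigmoid(s)[None, :]               # broadcast over channels

def cbam_1d(x, w1, w2, kernel, order="channel_first"):
    if order == "channel_first":                 # arrangement of the original CBAM
        return spatial_attention(channel_attention(x, w1, w2), kernel)
    if order == "spatial_first":
        return channel_attention(spatial_attention(x, kernel), w1, w2)
    raise ValueError(f"unknown order: {order}")

rng = np.random.default_rng(0)
C, L, r, k = 32, 120, 8, 7                       # hypothetical C, L; r and k as in ICBAM2-4
x = rng.standard_normal((C, L))
w1 = 0.1 * rng.standard_normal((C // r, C))      # random, untrained weights
w2 = 0.1 * rng.standard_normal((C, C // r))
kernel = 0.1 * rng.standard_normal((2, k))
y_cs = cbam_1d(x, w1, w2, kernel, "channel_first")
y_sc = cbam_1d(x, w1, w2, kernel, "spatial_first")
print(y_cs.shape, float(np.abs(y_cs - y_sc).max()))
```

Both attention maps lie in (0, 1), so each arrangement only rescales features; the order changes which statistics each branch sees, which is why sequential orderings give different outputs.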

| Description | Test Accuracy (%) |
|---|---|
| MACNN (with CBAM) | 96.67 |
| MACNN (with ICBAM) | 99.88 |

| Layer Name | Output Size | Parameters |
|---|---|---|
| Conv1 | 16 | 5, stride 1, BN 16, Swish, max pool 2 |
| ICBAM1 | 16 | Channel (7, stride 1) and Spatial (7, stride 1) in parallel (case 2), reduction = 4 |
| Conv2 | 32 | 3, stride 1, BN 32, Swish, max pool 2 |
| ICBAM2 | 32 | Channel (7, stride 1) and Spatial (7, stride 1) in parallel (case 2), reduction = 8 |
| Conv3 | 32 | 3, stride 1, BN 32, Swish, max pool 2 |
| ICBAM3 | 32 | Channel (7, stride 1) and Spatial (7, stride 1) in parallel (case 2), reduction = 8 |
| Conv4 | 32 | 3, stride 1, BN 32, Swish, max pool 2 |
| ICBAM4 | 32 | Channel (7, stride 1) and Spatial (7, stride 1) in parallel (case 2), reduction = 8 |
| Recombine | - | - |
| Dropout | - | 0.5 |
| SAM | - | - |
| FC | 8 | Softmax |
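The layer table lists channel counts but not the intermediate sequence lengths. Assuming a single-channel input of 1920 points (the per-sample length at 1600 r/min in the speed table below), stride-1 convolutions with "same" padding, and floor division in each max pool, the following Python sketch traces the feature-map shapes. It also shows one plausible reading of the recombination step: transposing the final (channels, time) map into a time-ordered sequence that SAM can attend over. Equation (11) is not reproduced in this extract, so `recombine` is a hypothetical stand-in, not the authors' definition.

```python
import numpy as np

# (layer name, output channels) from the layer table. Kernel sizes do not affect
# the length here because stride-1 'same' padding is assumed (not stated in the table).
CONV_LAYERS = [("Conv1", 16), ("Conv2", 32), ("Conv3", 32), ("Conv4", 32)]

def trace_shapes(length, in_channels=1):
    """Return [(stage, (channels, length)), ...] through the four conv blocks."""
    shape = (in_channels, length)
    history = [("Input", shape)]
    for name, out_channels in CONV_LAYERS:
        # conv keeps the length, max pool 2 halves it (floor); ICBAM keeps the shape
        shape = (out_channels, shape[1] // 2)
        history.append((name, shape))
    return history

def recombine(feature_map):
    """Hypothetical recombination: (channels, time) -> (time, channels), order kept."""
    return np.ascontiguousarray(feature_map.T)

for stage, shape in trace_shapes(1920):
    print(f"{stage:6s} {shape}")
sequence = recombine(np.zeros((32, 120)))
print("Recombined", sequence.shape)             # 120 tokens of dimension 32
```

Under these assumptions the 32-channel output of Conv4/ICBAM4 becomes a sequence of 120 time steps, each described by 32 features, so the temporal order of the vibration signal is carried into the attention stage.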

| Model | Accuracy (%) | Test Time per 100 Samples (s) |
|---|---|---|
| ACNN | 93.95 | 0.15 |
| MACNN-noSAM | 97.08 | 0.36 |
| MACNN | 99.88 | 0.35 |

| Case | Original Division | Division 1 | Division 2 | Division 3 | Division 4 |
|---|---|---|---|---|---|
| Accuracy (%) | 99.88 | 99.50 | 99.73 | 99.81 | 99.61 |
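The table reports test accuracy for the original data division and four alternative divisions. One compact way to summarize this robustness check is the mean, standard deviation, and range of the five values. The Python below computes them directly from the table (no new data); using the sample (n − 1) standard deviation is a choice of convention, not something the paper specifies.

```python
from statistics import mean, stdev

# Test accuracies (%) copied from the table above
accuracies = {"Original": 99.88, "Division 1": 99.50, "Division 2": 99.73,
              "Division 3": 99.81, "Division 4": 99.61}
values = list(accuracies.values())
avg = mean(values)
sd = stdev(values)                  # sample standard deviation (n - 1 denominator)
spread = max(values) - min(values)  # range in percentage points
print(f"mean = {avg:.3f}%, sample std = {sd:.3f} pp, range = {spread:.2f} pp")
```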

| Speed (r/min) | Length (per Sample) | Accuracy (%) | Recall (%) | Precision (%) | Time/100 Samples (s) |
|---|---|---|---|---|---|
| 700 | 4400 | 98.50 | 98.51 | 98.50 | 0.50 |
| 1300 | 2400 | 98.96 | 98.95 | 98.95 | 0.40 |
| 1600 | 1920 | 99.88 | 99.88 | 99.89 | 0.38 |
| 2300 | 1360 | 98.59 | 98.57 | 98.59 | 0.34 |
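Accuracy, recall, and precision in the table above are all derived from a confusion matrix. The averaging scheme used in the paper is not stated here, so the following Python sketch assumes macro averaging (an unweighted mean over classes) and uses a hypothetical 3-class confusion matrix rather than the paper's data.

```python
import numpy as np

def macro_metrics(cm):
    """cm[i, j] counts samples of true class i predicted as class j.
    Assumes every class appears at least once as a true and as a predicted label."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    accuracy = tp.sum() / cm.sum()
    recall = np.mean(tp / cm.sum(axis=1))      # per true class (rows), then averaged
    precision = np.mean(tp / cm.sum(axis=0))   # per predicted class (columns), then averaged
    return accuracy, recall, precision

# Hypothetical 3-class confusion matrix with 10 test samples per class
cm = [[8, 2, 0],
      [1, 9, 0],
      [0, 0, 10]]
acc, rec, prec = macro_metrics(cm)
print(f"accuracy={acc:.4f} recall={rec:.4f} precision={prec:.4f}")
```

When every class has the same number of test samples, macro recall equals accuracy exactly, which is why both are 0.9 in this toy example; precision can still differ because column totals vary.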

| Method | Accuracy (%) |
|---|---|
| VMD-KFCM | 77.29 |
| EEMD-KFCM | 55.42 |
| VMD-CNN(3D) | 36.21 |
| VMD-CNN(21D) | 80.21 |
| MACNN | 99.88 |

| Method | Accuracy (%) | Time Complexity | Time/100 Samples (s) |
|---|---|---|---|
| LSTM | 61.04 | O(2 × 10⁵) | 3.67 |
| BiLSTM | 88.32 | O(6 × 10⁵) | 7.26 |
| GRU | 83.33 | O(5 × 10⁵) | 4.01 |
| BiGRU | 92.59 | O(5 × 10⁵) | 8.12 |
| CNN-BiLSTM | 80.42 | O(4 × 10⁶) | 7.37 |
| CNN-BiGRU | 90.42 | O(4 × 10⁶) | 8.34 |
| 1DCNN | 90.18 | O(4 × 10⁶) | 0.14 |
| MACNN | 99.88 | O(6 × 10⁶) | 0.35 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, X.; Bi, F.; Cheng, J.; Tang, D.; Shen, P.; Bi, X. A Multiple Attention Convolutional Neural Networks for Diesel Engine Fault Diagnosis. Sensors 2024, 24, 2708. https://doi.org/10.3390/s24092708
