Parametric Performance Analysis and Energy Model Calibration Workflow Integration—A Scalable Approach for Buildings

Abstract

1. Introduction

2. Background and Motivation

3. Research Methodology

3.1. Parametric Performance Analysis of the Case Study

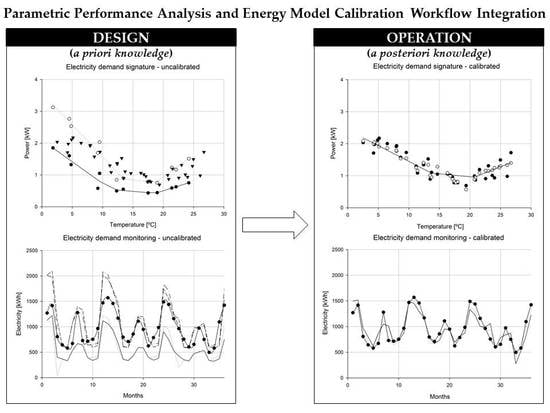

3.2. Parametric Performance Analysis and Model Calibration Integrated Workflow

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Variables and Parameters | |
|---|---|
| A | average value |
| a, b, c, d, e, f | regression coefficients |
| Cv(RMSE) | coefficient of variation of the RMSE |
| D | deviation, difference between measured and simulated data |
| I | radiation |
| M | measured data |
| MAPE | mean absolute percentage error |
| NMBE | normalized mean bias error |
| q | specific energy transfer rate (energy signature) |
| P | predicted data |
| R² | coefficient of determination |
| RD | relative deviation |
| RMSE | root mean square error |
| S | simulated data |
| SS | sum of squares |
| y | numeric value |
| θ | temperature |
| Subscripts and Superscripts | |
| ‾ (overbar) | average |
| ^ (hat) | predicted value |
| b | baseline |
| c | cooling |
| h | heating |
| i | index |
| res | residual |
| sol | solar |

References

- Dodd, N.; Donatello, S.; Garbarino, E.; Gama-Caldas, M. Identifying Macro-Objectives for the Life Cycle Environmental Performance and Resource Efficiency of EU Buildings; JRC EU Commission: Seville, Spain, 2015.

- BPIE. Europe’s Buildings Under the Microscope; Buildings Performance Institute Europe (BPIE): Brussels, Belgium, 2011.

- Saheb, Y. Energy Transition of the EU Building Stock—Unleashing the 4th Industrial Revolution in Europe; OpenExp: Paris, France, 2016; p. 352.

- Berardi, U. A cross-country comparison of the building energy consumptions and their trends. Resour. Conserv. Recycl. 2017, 123, 230–241.

- D’Agostino, D.; Zangheri, P.; Cuniberti, B.; Paci, D.; Bertoldi, P. Synthesis Report on the National Plans for Nearly Zero Energy Buildings (NZEBs); JRC EU Commission: Ispra, Italy, 2016.

- Chwieduk, D. Solar Energy in Buildings: Thermal Balance for Efficient Heating and Cooling; Academic Press: Cambridge, MA, USA, 2014.

- ISO. Energy Performance of Buildings—Energy Needs for Heating and Cooling, Internal Temperatures and Sensible and Latent Heat Loads—Part 1: Calculation Procedures; Technical Report No. ISO 52016-1:2017; ISO: Geneva, Switzerland, 2016.

- Imam, S.; Coley, D.A.; Walker, I. The building performance gap: Are modellers literate? Build. Serv. Eng. Res. Technol. 2017, 38, 351–375.

- Scofield, J.H.; Cornell, J. A critical look at “Energy savings, emissions reductions, and health co-benefits of the green building movement”. J. Expo. Sci. Environ. Epidemiol. 2019, 29, 584–593.

- MacNaughton, P.; Cao, X.; Buonocore, J.; Cedeno-Laurant, J.; Sprengle, J.; Bernstein, A.; Allen, J. Energy savings, emission reductions, and health co-benefits of the green building movement. J. Expo. Sci. Environ. Epidemiol. 2018, 28, 307–318.

- Yoshino, H.; Hong, T.; Nord, N. IEA EBC annex 53: Total energy use in buildings—Analysis and evaluation methods. Energy Build. 2017, 152, 124–136.

- Tagliabue, L.C.; Manfren, M.; Ciribini, A.L.C.; De Angelis, E. Probabilistic behavioural modeling in building performance simulation—The Brescia eLUX lab. Energy Build. 2016, 128, 119–131.

- Fabbri, K.; Tronchin, L. Indoor environmental quality in low energy buildings. Energy Procedia 2015, 78, 2778–2783.

- Jaffal, I.; Inard, C.; Ghiaus, C. Fast method to predict building heating demand based on the design of experiments. Energy Build. 2009, 41, 669–677.

- Kotireddy, R.; Hoes, P.-J.; Hensen, J.L.M. A methodology for performance robustness assessment of low-energy buildings using scenario analysis. Appl. Energy 2018, 212, 428–442.

- Schlueter, A.; Geyer, P. Linking BIM and design of experiments to balance architectural and technical design factors for energy performance. Autom. Constr. 2018, 86, 33–43.

- Shiel, P.; Tarantino, S.; Fischer, M. Parametric analysis of design stage building energy performance simulation models. Energy Build. 2018, 172, 78–93.

- EEFIG. Energy Efficiency—the First Fuel for the EU Economy, how to Drive New Finance for Energy Efficiency Investments; Energy Efficiency Financial Institutions Group: Brussels, Belgium, 2015.

- Saheb, Y.; Bodis, K.; Szabo, S.; Ossenbrink, H.; Panev, S. Energy Renovation: The Trump Card for the New Start for Europe; JRC EU Commission: Ispra, Italy, 2015.

- Aste, N.; Adhikari, R.S.; Manfren, M. Cost optimal analysis of heat pump technology adoption in residential reference buildings. Renew. Energy 2013, 60, 615–624.

- Tronchin, L.; Tommasino, M.C.; Fabbri, K. On the “cost-optimal levels” of energy performance requirements and its economic evaluation in Italy. Int. J. Sustain. Energy Plan. Manag. 2014, 3, 49–62.

- Fabbri, K.; Tronchin, L.; Tarabusi, V. Energy retrofit and economic evaluation priorities applied at an Italian case study. Energy Procedia 2014, 45, 379–384.

- Ligier, S.; Robillart, M.; Schalbart, P.; Peuportier, B. Energy performance contracting methodology based upon simulation and measurement. In Proceedings of the IBPSA Building Simulation Conference 2017, San Francisco, CA, USA, 7–9 August 2017.

- Manfren, M.; Aste, N.; Moshksar, R. Calibration and uncertainty analysis for computer models—A meta-model based approach for integrated building energy simulation. Appl. Energy 2013, 103, 627–641.

- Koulamas, C.; Kalogeras, A.P.; Pacheco-Torres, R.; Casillas, J.; Ferrarini, L. Suitability analysis of modeling and assessment approaches in energy efficiency in buildings. Energy Build. 2018, 158, 1662–1682.

- Nguyen, A.-T.; Reiter, S.; Rigo, P. A review on simulation-based optimization methods applied to building performance analysis. Appl. Energy 2014, 113, 1043–1058.

- Aste, N.; Manfren, M.; Marenzi, G. Building automation and control systems and performance optimization: A framework for analysis. Renew. Sustain. Energy Rev. 2017, 75, 313–330.

- Østergård, T.; Jensen, R.L.; Maagaard, S.E. A comparison of six metamodeling techniques applied to building performance simulations. Appl. Energy 2018, 211, 89–103.

- ISO. Energy Performance of Buildings—Assessment of Overall Energy Performance; Technical Report No. ISO 16346:2013; ISO: Geneva, Switzerland, 2013.

- ASHRAE. Guideline 14-2014: Measurement of Energy, Demand, and Water Savings; American Society of Heating, Refrigerating and Air-Conditioning Engineers: Atlanta, GA, USA, 2014.

- Masuda, H.; Claridge, D.E. Statistical modeling of the building energy balance variable for screening of metered energy use in large commercial buildings. Energy Build. 2014, 77, 292–303.

- Paulus, M.T.; Claridge, D.E.; Culp, C. Algorithm for automating the selection of a temperature dependent change point model. Energy Build. 2015, 87, 95–104.

- Tronchin, L.; Manfren, M.; Tagliabue, L.C. Optimization of building energy performance by means of multi-scale analysis—Lessons learned from case studies. Sustain. Cities Soc. 2016, 27, 296–306.

- Jalori, S.; Agami Reddy, T.P. A unified inverse modeling framework for whole-building energy interval data: Daily and hourly baseline modeling and short-term load forecasting. ASHRAE Trans. 2015, 121, 156.

- Jalori, S.; Agami Reddy, T.P. A new clustering method to identify outliers and diurnal schedules from building energy interval data. ASHRAE Trans. 2015, 121, 33.

- Abdolhosseini Qomi, M.J.; Noshadravan, A.; Sobstyl, J.M.; Toole, J.; Ferreira, J.; Pellenq, R.J.-M.; Ulm, F.-J.; Gonzalez, M.C. Data analytics for simplifying thermal efficiency planning in cities. J. R. Soc. Interface 2016, 13, 20150971.

- Kohler, M.; Blond, N.; Clappier, A. A city scale degree-day method to assess building space heating energy demands in Strasbourg Eurometropolis (France). Appl. Energy 2016, 184, 40–54.

- Ciulla, G.; Lo Brano, V.; D’Amico, A. Modelling relationship among energy demand, climate and office building features: A cluster analysis at European level. Appl. Energy 2016, 183, 1021–1034.

- Ciulla, G.; D’Amico, A. Building energy performance forecasting: A multiple linear regression approach. Appl. Energy 2019, 253, 113500.

- Tagliabue, L.C.; Manfren, M.; De Angelis, E. Energy efficiency assessment based on realistic occupancy patterns obtained through stochastic simulation. In Modelling Behaviour; Springer: Cham, Switzerland, 2015; pp. 469–478.

- Cecconi, F.R.; Manfren, M.; Tagliabue, L.C.; Ciribini, A.L.C.; De Angelis, E. Probabilistic behavioral modeling in building performance simulation: A Monte Carlo approach. Energy Build. 2017, 148, 128–141.

- Aste, N.; Leonforte, F.; Manfren, M.; Mazzon, M. Thermal inertia and energy efficiency—Parametric simulation assessment on a calibrated case study. Appl. Energy 2015, 145, 111–123.

- Li, Q.; Augenbroe, G.; Brown, J. Assessment of linear emulators in lightweight Bayesian calibration of dynamic building energy models for parameter estimation and performance prediction. Energy Build. 2016, 124, 194–202.

- Booth, A.; Choudhary, R.; Spiegelhalter, D. A hierarchical Bayesian framework for calibrating micro-level models with macro-level data. J. Build. Perform. Simul. 2013, 6, 293–318.

- Tronchin, L.; Manfren, M.; Nastasi, B. Energy analytics for supporting built environment decarbonisation. Energy Procedia 2019, 157, 1486–1493.

- Jentsch, M.F.; Bahaj, A.S.; James, P.A.B. Climate change future proofing of buildings—Generation and assessment of building simulation weather files. Energy Build. 2008, 40, 2148–2168.

- Jentsch, M.F.; James, P.A.B.; Bourikas, L.; Bahaj, A.S. Transforming existing weather data for worldwide locations to enable energy and building performance simulation under future climates. Renew. Energy 2013, 55, 514–524.

- Busato, F.; Lazzarin, R.M.; Noro, M. Energy and economic analysis of different heat pump systems for space heating. Int. J. Low-Carbon Technol. 2012, 7, 104–112.

- Busato, F.; Lazzarin, R.M.; Noro, M. Two years of recorded data for a multisource heat pump system: A performance analysis. Appl. Therm. Eng. 2013, 57, 39–47.

- Tronchin, L.; Fabbri, K. Analysis of buildings’ energy consumption by means of exergy method. Int. J. Exergy 2008, 5, 605–625.

- Meggers, F.; Ritter, V.; Goffin, P.; Baetschmann, M.; Leibundgut, H. Low exergy building systems implementation. Energy 2012, 41, 48–55.

- PHPP. The Energy Balance and Passive House Planning Tool. Available online: https://passivehouse.com/04_phpp/04_phpp.htm (accessed on 9 January 2020).

- Michalak, P. The development and validation of the linear time varying Simulink-based model for the dynamic simulation of the thermal performance of buildings. Energy Build. 2017, 141, 333–340.

- Michalak, P. A thermal network model for the dynamic simulation of the energy performance of buildings with the time varying ventilation flow. Energy Build. 2019, 202, 109337.

- Kristensen, M.H.; Hedegaard, R.E.; Petersen, S. Hierarchical calibration of archetypes for urban building energy modeling. Energy Build. 2018, 175, 219–234.

- Kristensen, M.H.; Choudhary, R.; Petersen, S. Bayesian calibration of building energy models: Comparison of predictive accuracy using metered utility data of different temporal resolution. Energy Procedia 2017, 122, 277–282.

- Tian, W.; de Wilde, P.; Li, Z.; Song, J.; Yin, B. Uncertainty and sensitivity analysis of energy assessment for office buildings based on Dempster-Shafer theory. Energy Convers. Manag. 2018, 174, 705–718.

- Antony, J. Design of Experiments for Engineers and Scientists; Elsevier Science: Amsterdam, The Netherlands, 2014.

- Østergård, T.; Jensen, R.L.; Mikkelsen, F.S. The best way to perform building simulations? One-at-a-time optimization vs. Monte Carlo sampling. Energy Build. 2020, 208, 109628.

- EVO. IPMVP New Construction Subcommittee. International Performance Measurement & Verification Protocol: Concepts and Options for Determining Energy Savings in New Construction; Efficiency Valuation Organization (EVO): Washington, DC, USA, 2003; Volume III.

- FEMP. Federal Energy Management Program, M&V Guidelines: Measurement and Verification for Federal Energy Projects Version 3.0; U.S. Department of Energy Federal Energy Management Program; FEMP: Washington, DC, USA, 2008.

- Fabrizio, E.; Monetti, V. Methodologies and advancements in the calibration of building energy models. Energies 2015, 8, 2548.

| Group | Type | Unit | Baseline | Design of Experiment, level −1 | Design of Experiment, level +1 |
|---|---|---|---|---|---|
| Climate | UNI 10349:2016 | - | | | |
| Geometry | Gross volume | m³ | 1557 | | |
| | Net volume | m³ | 1231 | | |
| | Heat loss surface area | m² | 847 | | |
| | Net floor area | m² | 444 | | |
| | Surface/volume ratio | 1/m | 0.54 | | |
| Envelope | U value external walls | W/(m²K) | 0.18 | 0.23 | 0.27 |
| | U value roof | W/(m²K) | 0.17 | 0.21 | 0.26 |
| | U value transparent components | W/(m²K) | 0.83 | 1.04 | 1.25 |
| Activities | Internal gains (lighting, appliances and occupancy, daily average) | W/m² | 1 | 1 | 1.5 |
| | Occupants | - | 5 | 5 | 5 |
| Control and operation | Heating set-point temperature | °C | 20 | 20 | 22 |
| | Cooling set-point temperature | °C | 26 | 26 | 28 |
| | Air-change rate (infiltration and mechanical ventilation with heat recovery in heating mode) | vol/h | 0.2 | 0.2 | 0.4 |
| | Shading factor (solar control, summer mode) | - | 0.5 | 0.5 | 0.7 |
| | Domestic hot water demand | l/person/day | 50 | 50 | 70 |
| | Schedules, DOE constant operation | - | 0.00–23.00 | 0.00–23.00 | 0.00–23.00 |
| | Schedules, DOE behaviour 1 | - | 7.00–22.00 | 7.00–22.00 | 7.00–22.00 |
| | Schedules, DOE behaviour 2 | - | 7.00–9.00, 17.00–22.00 | 7.00–9.00, 17.00–22.00 | 7.00–9.00, 17.00–22.00 |
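The −1 and +1 columns describe a two-level design of experiments over the building parameters. The sketch below shows how coded levels can be mapped to the physical values in the table. It assumes a full factorial design over every factor whose two levels differ. The source does not say whether a full or a fractional design was used, and occupants and schedules are left out because their levels coincide. `FACTORS` and `full_factorial` are hypothetical names.

```python
from itertools import product

# Factor name -> (value at level -1, value at level +1), copied from the table.
# Hypothetical naming; occupants and schedules are omitted (identical levels).
FACTORS = {
    "U_wall_W_m2K": (0.23, 0.27),
    "U_roof_W_m2K": (0.21, 0.26),
    "U_window_W_m2K": (1.04, 1.25),
    "internal_gains_W_m2": (1.0, 1.5),
    "heating_setpoint_C": (20.0, 22.0),
    "cooling_setpoint_C": (26.0, 28.0),
    "air_change_rate_1_h": (0.2, 0.4),
    "shading_factor": (0.5, 0.7),
    "dhw_l_person_day": (50.0, 70.0),
}


def full_factorial(factors):
    """Return one dict per simulation run, mapping coded levels to values.

    Assumes a two-level full factorial design: 2**k runs for k factors.
    """
    names = list(factors)
    runs = []
    for coded in product((-1, 1), repeat=len(names)):
        run = {}
        for name, level in zip(names, coded):
            low, high = factors[name]
            run[name] = low if level == -1 else high
        runs.append(run)
    return runs


runs = full_factorial(FACTORS)
print(len(runs))  # 2**9 runs
print(runs[0]["heating_setpoint_C"], runs[-1]["heating_setpoint_C"])
```

With nine two-level factors, this gives 2⁹ = 512 parameter combinations. The first run has every factor at level −1 and the last has every factor at level +1. A fractional design would select a balanced subset of these rows to cut simulation cost.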

| Technical System | Technology | Type | Unit | Value |
|---|---|---|---|---|
| Heating/Cooling system | Ground Source Heat Pump | Brine/Water Heat Pump | kW | 8.4 |
| | Ground heat exchanger | Borehole Heat Exchanger (2 double U boreholes) | m | 100 |
| On-site energy generation | Building Integrated Photovoltaic (BIPV) | Polycrystalline Silicon | kWp | 9.2 |
| | Solar Thermal | Glazed flat plate collector | m² | 4.32 |
| | Domestic Hot Water storage | | m³ | 0.74 |

| Demand | Model Type 1 | Model Type 2 |
|---|---|---|
| Heating | | |
| Cooling | | |
| Base load | | |
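The table pairs each demand component with two regression model types built on the energy signature q. The sketch below is a minimal illustration under a stated assumption that does not come from the source. It assumes a Type 1 model regresses q on outdoor temperature θ only (q = a + bθ), and a Type 2 model adds a solar radiation term (q = a + bθ + cI), using symbols from the nomenclature. Both are fitted by ordinary least squares with `numpy.linalg.lstsq`. `fit_energy_signature` is a hypothetical helper, and the data are synthetic.

```python
import numpy as np


def fit_energy_signature(theta, q, radiation=None):
    """Fit q = a + b*theta (+ c*I) by ordinary least squares.

    Hypothetical helper: 'Type 1' uses temperature only; 'Type 2' also
    uses solar radiation I. Returns the coefficients [a, b] or [a, b, c].
    """
    theta = np.asarray(theta, dtype=float)
    columns = [np.ones_like(theta), theta]
    if radiation is not None:
        columns.append(np.asarray(radiation, dtype=float))
    X = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(X, np.asarray(q, dtype=float), rcond=None)
    return coef


# Synthetic heating-season data for illustration only (not measured data).
theta = np.array([-2.0, 1.0, 4.0, 7.0, 10.0, 13.0])       # outdoor temperature, degC
I_sol = np.array([40.0, 60.0, 90.0, 120.0, 150.0, 170.0])  # solar radiation, W/m2
q = 30.0 - 1.5 * theta - 0.05 * I_sol                       # specific heating demand, W/m2

print(fit_energy_signature(theta, q))         # Type 1: temperature only
print(fit_energy_signature(theta, q, I_sol))  # Type 2: approx. [30, -1.5, -0.05]
```

Heating and cooling signatures are often fitted as change-point models, using only data below or above a balance temperature. This sketch leaves out that selection step.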

| Metric | ASHRAE Guideline 14 | IPMVP | FEMP |
|---|---|---|---|
| NMBE (%) | ±5 | ±20 | ±5 |
| Cv(RMSE) (%) | 15 | - | 15 |
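The acceptance criteria above can be applied as a simple pass/fail check. The sketch below uses the limits as tabulated. In ASHRAE Guideline 14, these particular values (±5 % NMBE, 15 % Cv(RMSE)) are the criteria for monthly data, and the hourly criteria are looser. `CRITERIA` and `is_calibrated` are hypothetical names.

```python
# Acceptance limits from the table above, in percent.
# None means the table gives no Cv(RMSE) limit for that protocol.
CRITERIA = {
    "ASHRAE Guideline 14": {"nmbe": 5.0, "cv_rmse": 15.0},
    "IPMVP": {"nmbe": 20.0, "cv_rmse": None},
    "FEMP": {"nmbe": 5.0, "cv_rmse": 15.0},
}


def is_calibrated(nmbe, cv_rmse, protocol):
    """Return True if |NMBE| and Cv(RMSE), both in %, meet the protocol limits."""
    limits = CRITERIA[protocol]
    if abs(nmbe) > limits["nmbe"]:
        return False
    if limits["cv_rmse"] is not None and cv_rmse > limits["cv_rmse"]:
        return False
    return True


# Year 3 (testing) indicators reported for the two model types.
for label, nmbe, cv in [("Type 1", -6.95, 19.75), ("Type 2", -2.21, 12.50)]:
    verdicts = {p: is_calibrated(nmbe, cv, p) for p in CRITERIA}
    print(label, verdicts)
```

With the reported year 3 values, Type 1 fails the ASHRAE and FEMP limits (|NMBE| = 6.95 % > 5 %) but meets the IPMVP NMBE limit. Type 2 meets all three sets of limits.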

| KPI | Unit | Baseline | Design of Experiment, LB | Design of Experiment, UB |
|---|---|---|---|---|
| Electricity consumption | kWh/m² | 20.8 | 16.9 | 31.7 |
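The spread of the design-of-experiment results around the baseline can be expressed as a relative deviation (RD in the nomenclature). The sketch below assumes RD is the difference from the baseline divided by the baseline. The source's exact definition is not shown here, and `relative_deviation` is a hypothetical name.

```python
def relative_deviation(value, baseline):
    """Relative deviation of value from baseline, in percent (assumed definition)."""
    return 100.0 * (value - baseline) / baseline


baseline = 20.8  # kWh/m2, baseline electricity consumption
for label, value in [("LB", 16.9), ("UB", 31.7)]:
    print(label, round(relative_deviation(value, baseline), 2))
```

Under this definition, the LB value is 3.9/20.8 = 18.75 % below the baseline, and the UB value is 10.9/20.8 ≈ 52.4 % above it. The parameter ranges therefore push electricity use up more than down.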

| Model Type | Calibration Process Stage | Training Dataset | Testing Dataset | R² (%) | MAPE (%) | NMBE (%) | Cv(RMSE) (%) |
|---|---|---|---|---|---|---|---|
| Type 1 | Uncalibrated | DOE, overall LB | - | 93.65 | 9.34 | 0.06 | 13.58 |
| Type 1 | Uncalibrated | DOE, overall UB | - | 96.64 | 7.33 | 0.02 | 9.01 |
| Type 2 | Uncalibrated | DOE, overall LB | - | 99.90 | 1.42 | −0.02 | 1.65 |
| Type 2 | Uncalibrated | DOE, overall UB | - | 99.78 | 1.93 | −0.01 | 2.36 |
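The four statistical indicators in these tables can be computed from paired measured (M) and predicted (P) series. The sketch below uses common ASHRAE Guideline 14 style definitions:

- Residuals are M − P.
- R² = 1 − SS_res/SS_tot.
- The NMBE and Cv(RMSE) denominators use n − p degrees of freedom.

Setting p = 0 by default is an assumption. Some authors define the NMBE residual as P − M, which flips its sign. `calibration_indicators` is a hypothetical name.

```python
import numpy as np


def calibration_indicators(measured, predicted, n_params=0):
    """Return R2, MAPE, NMBE and Cv(RMSE), all in percent.

    Residuals are measured - predicted; n_params (p) reduces the degrees of
    freedom in the NMBE and Cv(RMSE) denominators (assumed default: 0).
    """
    m = np.asarray(measured, dtype=float)
    p = np.asarray(predicted, dtype=float)
    resid = m - p
    m_mean = m.mean()
    ss_res = np.sum(resid ** 2)            # residual sum of squares
    ss_tot = np.sum((m - m_mean) ** 2)     # total sum of squares
    dof = m.size - n_params
    return {
        "R2": 100.0 * (1.0 - ss_res / ss_tot),
        "MAPE": 100.0 * np.mean(np.abs(resid / m)),
        "NMBE": 100.0 * resid.sum() / (dof * m_mean),
        "Cv(RMSE)": 100.0 * np.sqrt(ss_res / dof) / m_mean,
    }


# Illustrative numbers only, not data from the case study.
print(calibration_indicators([10, 20, 30, 40], [12, 18, 33, 37]))
```

In this toy example, the positive and negative residuals cancel. NMBE is therefore zero even though Cv(RMSE) is about 10 %, which is why the calibration criteria check both indicators together.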

| Model Type | Calibration Process Stage | Training Dataset | Testing Dataset | R² (%) | MAPE (%) | NMBE (%) | Cv(RMSE) (%) |
|---|---|---|---|---|---|---|---|
| Type 1 | Partially calibrated | Measured data, years 1 and 2 | - | 82.64 | 11.44 | 0.04 | 13.44 |
| | | | Measured data, year 3 | 69.74 | 18.40 | −6.95 | 19.75 |
| Type 2 | Calibrated | Measured data, years 1 and 2 | - | 86.07 | 9.97 | 0.05 | 12.02 |
| | | - | Measured data, year 3 | 87.54 | 11.97 | −2.21 | 12.50 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Manfren, M.; Nastasi, B. Parametric Performance Analysis and Energy Model Calibration Workflow Integration—A Scalable Approach for Buildings. Energies 2020, 13, 621. https://doi.org/10.3390/en13030621
