Does the Hirsch Index Improve Research Quality in the Field of Biomaterials? A New Perspective in the Biomedical Research Field

Abstract

1. Introduction

- Bibliometric indicators are quantitative methods based on the number of times a publication is cited. The higher the number of citations, the larger the group of researchers who have used this work as a reference and, thus, the stronger its impact on the scientific community;

- Peer review is a qualitative method based on the judgement of experts. A small number of researchers, specialized in the field of the work, analyze and evaluate the scientific value of a publication.
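As an illustration of a citation-based indicator of the kind described in the first item, the Hirsch index named in the title can be computed from per-paper citation counts. By Hirsch's definition, a researcher has index h if h of their papers have at least h citations each. The sketch below is a minimal example under that definition; the function name `h_index` and the sample citation counts are hypothetical and are not taken from the article.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            # The paper at this rank still has enough citations to count.
            h = rank
        else:
            # Counts only decrease from here, so no larger h is possible.
            break
    return h


# Hypothetical citation counts for five papers (illustrative only).
sample_counts = [10, 8, 5, 4, 3]
print(h_index(sample_counts))
```

Sorting first keeps the logic close to the verbal definition: the index is the last rank at which the citation count is still at least as large as the rank itself.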

2. Methods

3. Results

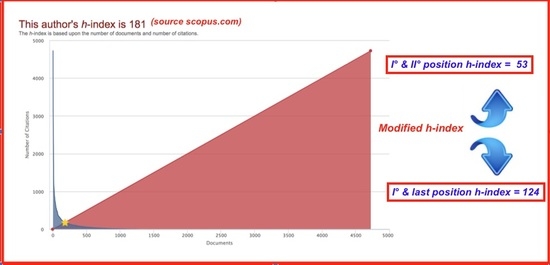

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgements

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Affatato, S.; Merola, M. Does the Hirsch Index Improve Research Quality in the Field of Biomaterials? A New Perspective in the Biomedical Research Field. Materials 2018, 11, 1967. https://doi.org/10.3390/ma11101967