1. Introduction

Large-scale Internet Service Providers (ISPs) like Google, Facebook, Amazon and Tencent [1] usually rent Internet Data Centers (IDCs) to deploy their Internet services. Generally, IDCs are composed of infrastructure (e.g., servers, storage and network devices essential for the provision of Internet services, which are packaged in racks) and supporting environment (e.g., machine rooms, electricity, refrigeration, and network access needed for guaranteeing the normal running of infrastructure).

For large ISPs, there are two major methods of constructing IDCs. The first requires the ISP to construct and manage all of the IDC infrastructure and its supporting environment; we call it "self-building mode". The other requires the ISP to build and manage the IDC infrastructure, while the supporting environment is leased from a Data Center Provider (DCP); we call it "leasing mode". For example, Google, Amazon and Yahoo lease the supporting environment from Equinix (Redwood City, CA, USA), an American public corporation providing carrier-neutral data centers and internet exchanges to enable interconnection [2].

Although Google and others are trying the self-building mode, the leasing mode is still widely used due to its low construction cost, professional maintenance, and scalability. In addition, it is quite difficult to build an IDC overseas because of national security policies. That is why the leasing mode is the common option for most ISPs. The operating cost under leasing mode mainly consists of the lease expense paid to the DCP. For convenience, the DCP usually charges ISPs for the supporting environment in units of "rack per year", rather than according to the specific services it offers. That is, the more racks an ISP uses, the more lease expense it pays to the DCP. Therefore, reducing the number of racks by improving rack utilization is an effective way to lower the operating cost of an ISP.

There are several factors limiting the rack utilization, which are as follows:

the total height of all servers in one rack should not exceed the height of the rack;

the number of servers should not exceed the total number of switch ports in one rack;

the total power used by servers should not exceed the power allocated to one rack.
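The three constraints above can be sketched as a single feasibility check. This is a minimal illustration, not part of the original work: the `Server` and `Rack` types, their field names, and the sample figures are hypothetical, and one switch port per server is assumed.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Server:
    height_u: int   # server height in rack units (hypothetical field)
    power_w: int    # power assigned to the server, in watts


@dataclass(frozen=True)
class Rack:
    height_u: int      # usable rack height in rack units
    switch_ports: int  # total switch ports available in the rack
    power_w: int       # power allocated to the rack, in watts


def fits(rack: Rack, servers: Sequence[Server]) -> bool:
    """Return True if the servers satisfy all three rack constraints."""
    total_height = sum(s.height_u for s in servers)
    total_power = sum(s.power_w for s in servers)
    return (
        total_height <= rack.height_u          # height constraint
        and len(servers) <= rack.switch_ports  # one port per server (assumed)
        and total_power <= rack.power_w        # power constraint
    )


# Hypothetical figures: a 42U rack with 40 ports and 6000 W.
rack = Rack(height_u=42, switch_ports=40, power_w=6000)
ok_17 = fits(rack, [Server(2, 350)] * 17)  # 34U, 17 ports, 5950 W
ok_18 = fits(rack, [Server(2, 350)] * 18)  # 36U, 18 ports, 6300 W
```

With these sample numbers the eighteenth server is rejected by the power constraint alone, while height and ports still have room.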

As the IDC infrastructure, including racks and switches, is customized by ISPs, the DCP usually sets no limitation on the height of a rack or the number of switch ports in a rack. However, since the power supply system is pre-determined when building an IDC, the power of racks is subject to the power supply system of the IDC. That is to say, rack power is the key limiting factor of rack utilization in an IDC under leasing mode, and the rack utilization we focus on is essentially the utilization of rack power. Studies [3,4] have found that the power used by servers is always far below their power rating.

Taking Figure 1 as an example, assuming the power demand of a server over time is as shown in Figure 1a, if we set its power budget at the power rating as shown in Figure 1b, there will be a huge power margin in the area shaded by dashed lines. Specifically, the power margin is the part of the power budget that is not consumed by the servers, which directly decreases the rack utilization.

To eliminate the power margin in servers, the server consolidation approach [5,6,7] unites as many applications as possible into fewer servers to make full use of the power budget. However, there are some problems with this method in real-world datacenters [8]. First, due to the higher density of applications in a server, a single point of failure will affect more applications. Second, server consolidation is typically based on virtualization, which can bring overhead and unfair contention among applications. Finally, there is an inevitable cost of application migration when consolidating applications.

Another approach to eliminating the power margin is to restrict the power budgets of servers [9,10,11,12], that is, to set a lower power budget by using power capping technology. Since the power supply of a rack is typically fixed, restricting the power budget of servers allows more servers to be deployed in a rack. As the power of a server changes with its utilization, the actual power of a server may exceed its power budget. To avoid this issue, an appropriate value should be set for the power budget of each server using the technique of power capping, which has been addressed in our previous work [13]. After power budgets are assigned to each server in a rack, there will probably be some remaining power that no server can use, which is called the power fragment of the rack and lowers the rack utilization. Reducing power fragments involves choosing an arrangement of servers across the racks in an IDC, which is difficult to decide because of the sheer number of racks and servers. To solve this problem, we propose a server placement algorithm based on dynamic programming to arrange racks and servers with given power budgets, which is capable of producing an approximately optimal solution in a short time.
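The effect of power capping and the notion of a power fragment can be illustrated with a little arithmetic. The sketch below uses hypothetical numbers (a 6000 W rack, a 500 W power rating, a 350 W capped budget); the function names are illustrative only.

```python
from typing import Sequence


def servers_per_rack(rack_power_w: int, server_budget_w: int) -> int:
    """Number of identical servers that fit under the rack's power limit."""
    return rack_power_w // server_budget_w


def power_fragment(rack_power_w: int, budgets_w: Sequence[int]) -> int:
    """Rack power left over after all server budgets are assigned."""
    used = sum(budgets_w)
    if used > rack_power_w:
        raise ValueError("server budgets exceed the rack power")
    return rack_power_w - used


RACK_POWER_W = 6000  # hypothetical rack power

at_rating = servers_per_rack(RACK_POWER_W, 500)  # budget set to the rating
capped = servers_per_rack(RACK_POWER_W, 350)     # budget lowered by capping
fragment = power_fragment(RACK_POWER_W, [350] * capped)
```

Under these assumed values, capping raises the rack from 12 to 17 servers, and 50 W remains as a fragment that no further 350 W server can use.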

The main contribution of this paper is three-fold:

we analyse the potential reasons for inefficient rack utilization;

we propose a server placement algorithm based on dynamic programming that decides the arrangement of racks and servers with given power budgets;

we evaluate our algorithm based on real data from Tencent and synthetic data.

The rest of this paper is organized as follows. The related work is discussed in Section 2. In Section 3, we introduce how to deploy servers in racks and analyse the potential reasons for inefficient rack utilization. In Section 4, we propose a server placement algorithm and evaluate it in Section 5 with the real traces from racks and servers in Tencent and synthetic data. Section 6 concludes this paper and points out our future work.

2. Related Work

Reducing the power consumption of servers can increase the number of servers installed in an IDC and thus the utilization of the IDC. Many researchers have discussed the issue of power consumption from the level of servers [14] to that of the IDC [6,7]. Generally, the solutions are of two kinds: shutting down idle servers [6,7,15] and dynamic voltage and frequency scaling (DVFS) [8,16]. The former usually relies on server consolidation to merge more businesses into fewer servers, so that idle servers or support equipment can be shut down. This method improves the power utilization of servers and lowers their power margin. However, it has some implementation issues. The work of [17] presents the disadvantages of business migration after shutdown, and the work of [6] indicates that server consolidation may result in resource competition. The DVFS technology, derived from the busy-idle state switching of the CPU [8], has been extended to other components, such as memory and disk [8,17], to reduce the power consumption of servers at low utilization. By adopting DVFS, more power margin can be released when server utilization is low, which leaves more room for improving rack utilization.

Both the shutdown of idle servers and DVFS reduce the power supply, which may cause performance loss for applications. Many researchers have taken this issue into account [10,14]. The authors of [6] obtain the business performance in various consolidation cases through offline experiments with pairwise integration of different businesses, but this incurs a huge experimental cost. The work of [14] analyzes consolidation under the assumption that businesses with similar utilization are also similar in performance. The authors of [10] introduce the technology of power capping and make a tradeoff between power capping and business performance. In addition, the work in [18] creates shuffled distribution topologies, where power feed connections are permuted among servers within and across racks. The authors of [7] develop a compilation technology to lessen conflicts between different businesses on the same server.

Different from the above work, in this paper, we aim to reduce the power fragments of each rack, thus promoting the rack utilization in IDC as well as saving leasing cost, by proposing a server placement algorithm based on dynamic programming.

4. Our Approach

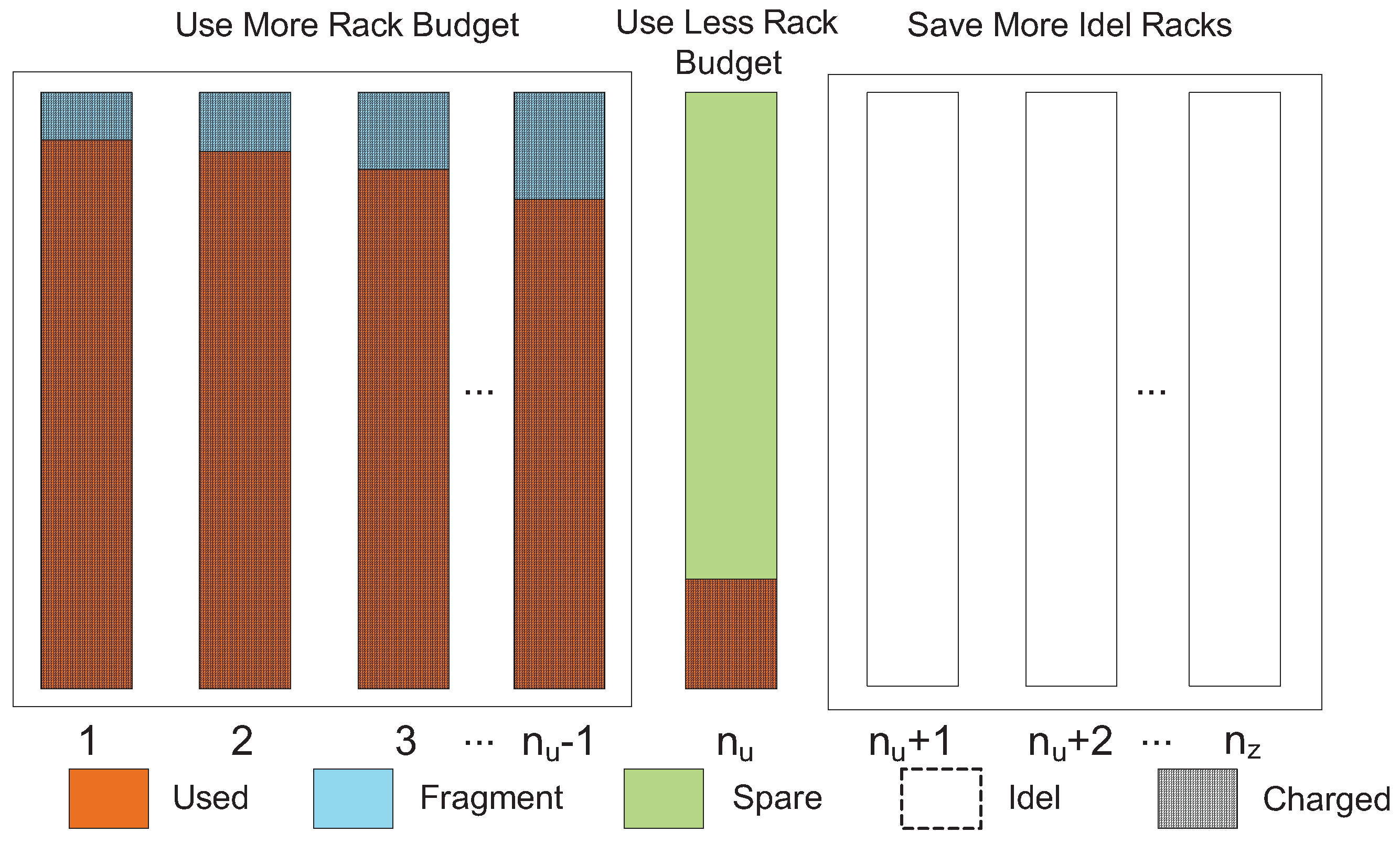

The power fragments of a rack refer to the fragments of power space left after installing servers, which cannot be utilized by the servers stacked inside the rack. A more rational arrangement of servers should reduce the power fragments of racks, thus improving their utilization. In this paper, we propose server placement algorithms that aim at the lowest leasing cost. First, we introduce the metrics of leasing cost. Then, we describe how the server placement algorithms work.

4.1. The Metrics of Cost

Suppose that there are $n$ servers and $m$ racks. As shown in Figure 3, a total of $k$ racks are used to accommodate all servers, where $k \le m$. These $k$ racks in use are ranked in increasing order of their remaining (unused) power. The first ($k$ − 1) racks cannot accommodate more servers because their remaining power is insufficient, so each of them incurs the full leasing cost. The $k$-th rack has the most remaining power, but there are no more servers to be racked. Since it can still accommodate more servers, its cost is smaller than the full leasing cost. The dashed area in Figure 3 represents all the rack space to be charged. An optimal server placement strategy is needed to minimize the leasing cost, e.g., by minimizing the power budget of the first ($k$ − 1) racks when deploying servers so as to save racks, and by reducing the power consumption of the $k$-th rack. Equation (7) is used to calculate the leasing cost of racks from the sum of all servers' power in the $k$-th rack:
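The cost metric described above can be sketched in code under an explicit assumption: every rack except the one with the most remaining power is charged the full leasing cost, and that last rack is charged in proportion to the power its servers use. This proportional rule, the function name `leasing_cost`, and the assumption that all racks share the same power are illustrative choices, not necessarily the exact form of Equation (7).

```python
from typing import Sequence


def leasing_cost(
    used_power_w: Sequence[float],
    rack_power_w: float,
    full_cost: float = 1.0,
) -> float:
    """Leasing cost of a placement, in units of one full rack lease.

    used_power_w holds the summed server power of every rack; racks with
    no servers are ignored. ASSUMPTION: the last rack in use is charged
    proportionally to its used power, all others are charged in full.
    """
    # With equal rack power, increasing remaining power means decreasing
    # used power, so the rack with the most remaining power comes last.
    in_use = sorted((p for p in used_power_w if p > 0), reverse=True)
    if not in_use:
        return 0.0
    *full_racks, last_rack = in_use
    return len(full_racks) * full_cost + full_cost * last_rack / rack_power_w


# Hypothetical example: three racks in use, one empty, 6000 W each.
cost = leasing_cost([5900, 3000, 6000, 0], rack_power_w=6000)
```

In this example two racks are charged in full and the half-used rack contributes 0.5, for a total of 2.5 rack leases.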

4.2. The Scale of Server Placement Problem

All servers and racks in the datacenter should be taken as input when solving for the optimal server placement strategy. However, processing all servers and racks simultaneously is not feasible for the following two reasons:

the servers hosting the same or similar businesses are usually deployed in nearby racks to reduce network cost. If the server placement strategy were applied to all servers and racks, servers with similar businesses might be dispersed across racks that are physically far apart, resulting in high communication cost.

an IDC is equipped with a huge number of servers and racks. Suppose $n$ and $m$ are the numbers of servers and racks, respectively. Since each server may be placed in any rack, iterating over the placement strategies has complexity $O(m^n)$. If all servers and racks are taken as input, the problem becomes so large that the solving time is very long, or the problem cannot be solved at all.

Therefore, in a production system, it is necessary to take a region consisting of a certain number of servers and racks as the input of the server placement strategy. The region should consist of racks that are physically close to one another and a set of servers running similar businesses, which reduces both the cost of server migration during deployment and the cost of communication. We therefore first need to determine a suitable region size for the server placement strategy.

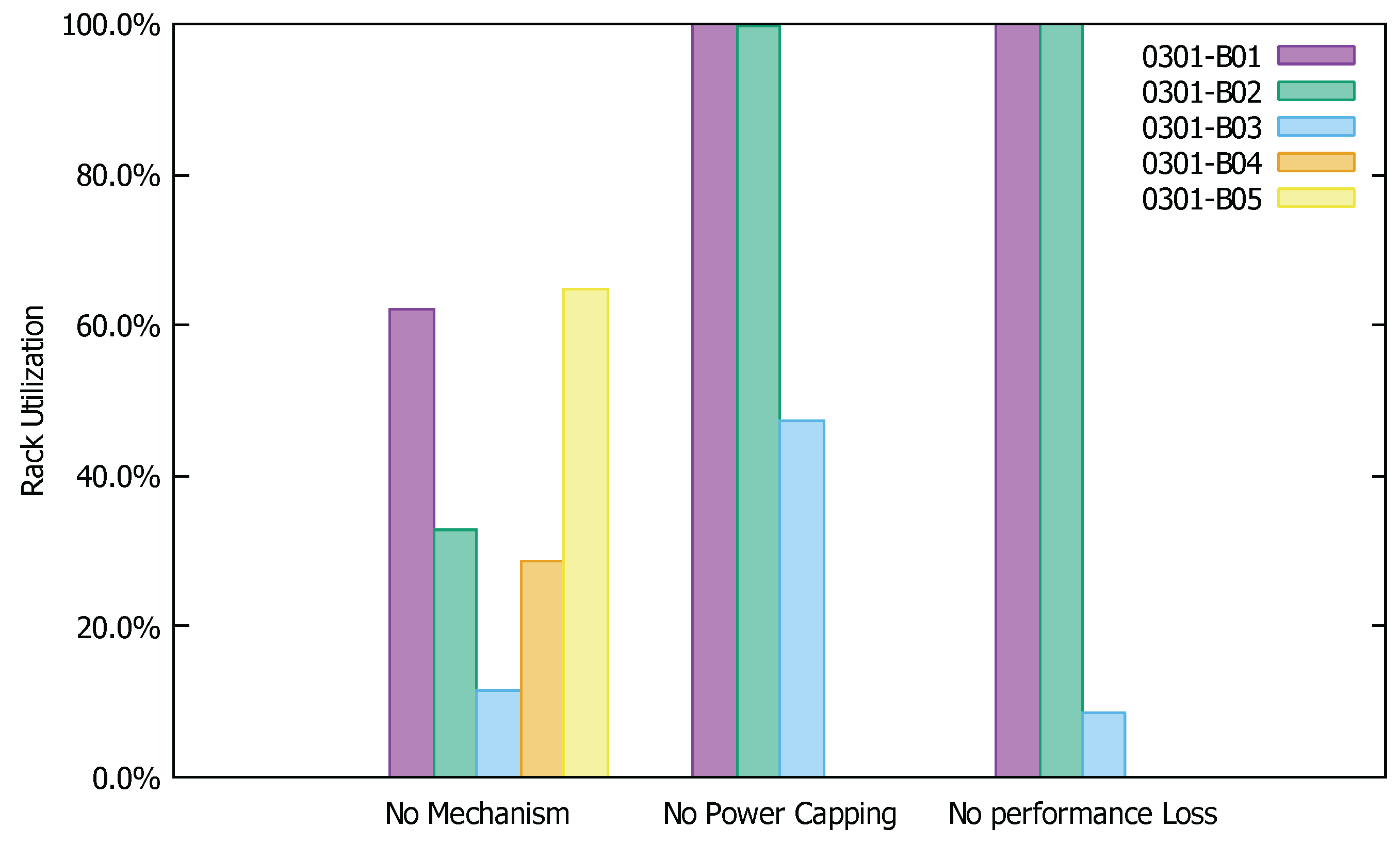

In the Tencent network, there are over 4700 kinds of businesses deployed on dedicated servers. The number of racks used by each business in one machine room is shown in Figure 4. More than 90% of businesses use at most 10 racks, and over 80% use no more than 5. Therefore, when optimizing the original server placement strategy, an integer between 5 and 10 can be used as the number of racks $m$, and the number of existing servers inside these $m$ racks can be used as $n$.

4.3. Branch and Bound Based Algorithm

To address the problem discussed above, we propose a branch and bound based server placement algorithm that searches all possible server placement strategies. Since there are $n$ servers and each server can be stacked inside any of the $m$ racks, the total number of possible strategies is $m^n$. Among them, the strategies with the lowest leasing cost are needed. To reduce the number of searches, the algorithm uses the branch and bound approach to cut branches whose power exceeds the total rack power, as well as branches whose current cost already exceeds the minimum cost found so far. The variables and functions used in Algorithm 1 are described in Table 1.

As shown in Algorithm 1, the input contains all servers and all racks, and the output is a set of mappings from servers to racks with the optimal leasing cost. The core function is visit(), which processes the servers one by one. In lines 2–6, it iterates over all feasible racks for the current server; if the server can be added to a rack, it moves on to the next server. In line 7, it determines whether all servers have been arranged. In lines 8–11, it calculates the leasing cost and determines whether the current arrangement of servers and racks is the best found so far. In line 16, the optimal arrangement is obtained by calling visit(0).

| Algorithm 1 The Branch and Bound Based Server Placement |
|---|
| Input: the servers and the racks |
| Output: the placement decision of servers to racks |
| 1: function visit(i) /* decide which rack is used to host the i-th server */ |
| 2: for each rack in the set of racks do |
| 3: if the rack has space for the i-th server and the current cost is less than the minimum cost then |
| 4: call visit(i+1); |
| 5: end if |
| 6: end for |
| 7: if the i-th server is the last server then /* check whether the strategy is optimal when reaching the last server */ |
| 8: compute the current cost |
| 9: if the current cost is less than the minimum cost then |
| 10: minimum cost = current cost; |
| 11: store the mapping from servers to racks in the result |
| 12: end if |
| 13: end if |
| 14: end function |
| 15: result = {}; |
| 16: call visit(0) /* obtain the optimal server placement decision */ |
| 17: return result; |

The proposed algorithm traverses all feasible arrangements, so it can find the optimal solution. Although the branch and bound method reduces the number of computations, the computing cost still rises exponentially with $n$, the number of servers, in both time and space.
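A runnable sketch of the branch and bound search is given below. It is an illustration under simplifying assumptions rather than a transcription of Algorithm 1: the cost is taken to be the number of racks in use (instead of the paper's leasing-cost metric), powers are positive integers in watts, and the function name `branch_and_bound` is hypothetical. The search undoes each tentative assignment when it backtracks.

```python
from typing import List, Optional, Tuple


def branch_and_bound(
    servers: List[int], racks: List[int]
) -> Tuple[Optional[int], List[int]]:
    """Exhaustive placement search with pruning.

    servers[i] is the power budget of server i and racks[r] the power of
    rack r. Returns (best cost, rack index per server), or (None, []) if
    no feasible placement exists. ASSUMPTION: cost = racks in use.
    """
    remaining = list(racks)
    assignment = [-1] * len(servers)
    best_cost: Optional[int] = None
    best_assignment: List[int] = []

    def racks_in_use() -> int:
        return sum(1 for cap, rem in zip(racks, remaining) if rem < cap)

    def visit(i: int) -> None:
        nonlocal best_cost, best_assignment
        cost = racks_in_use()
        # Bound: this branch cannot beat the best placement found so far.
        if best_cost is not None and cost >= best_cost:
            return
        if i == len(servers):  # every server is placed
            best_cost, best_assignment = cost, assignment.copy()
            return
        for r in range(len(racks)):
            if servers[i] <= remaining[r]:  # skip racks without enough power
                remaining[r] -= servers[i]
                assignment[i] = r
                visit(i + 1)
                remaining[r] += servers[i]  # backtrack
                assignment[i] = -1

    visit(0)
    return best_cost, best_assignment


best_cost, best_assignment = branch_and_bound(
    [300, 300, 200, 200], [500, 500, 500]
)
```

For the four hypothetical servers above, the search pairs each 300 W server with a 200 W server and leaves the third rack empty. Even with pruning, the worst case still explores a number of branches that grows exponentially with the number of servers.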

4.4. Dynamic Programming Based Algorithm

To reduce computational complexity, we propose another algorithm based on the following idea: as many servers as possible should be assigned to each rack, so as to minimize the power fragment of each rack. Installing more servers into each rack can be abstracted as a 0–1 Knapsack problem. That is, each rack is regarded as a knapsack with capacity C, where C is equal to the power of the rack. Each server is regarded as an object with value V and weight M, both equal to the power threshold of the server. The problem is then how to maximize the total V in each knapsack while ensuring that the sum of the objects' M does not exceed C. Notably, although multiple racks can be regarded as multiple knapsacks, the problem differs from the Multidimensional Knapsack (MKP) problem [16]. Specifically, the MKP problem is to find an assignment that maximizes the value of objects in all knapsacks, and it does not apply to the case where not all knapsacks are needed for the optimal strategy.

The knapsack problem of gradually filling up each rack in this paper is more like a Stochastic Knapsack Problem (SKP). The number of knapsacks can be reduced if there is a limited number of objects. The knapsack problem is NP-hard, but it has overlapping sub-problems and optimal substructure. Thus, we can solve it with dynamic programming by constructing a state transition equation. The state transition equation is constructed as follows:

Specifically, dp(i, c) represents the largest power available for the i-th to the n-th servers when the remaining rack power is c. M_i represents the power threshold of the i-th server. In addition, n represents the number of servers to be racked. The largest power available for the servers to be installed into a rack can be obtained by calling dp(0, C).
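The recursion described above can be sketched for a single rack as a memoized 0–1 knapsack in which each server's value equals its weight. The sketch is an assumption-laden illustration: the names `best_fill`, `thresholds`, and `capacity` are hypothetical, powers are integers in watts, and a server that does not fit is skipped so that later, smaller servers can still be considered (the standard knapsack form).

```python
from functools import lru_cache
from typing import Sequence, Tuple


def best_fill(
    thresholds: Sequence[int], capacity: int
) -> Tuple[int, Tuple[int, ...]]:
    """Largest total power that fits into one rack, and the chosen servers.

    thresholds[i] is the power threshold of server i; capacity is the
    rack power. Returns (total power, indices of the chosen servers).
    """
    n = len(thresholds)

    @lru_cache(maxsize=None)  # memoize the overlapping sub-problems
    def dp(i: int, c: int) -> Tuple[int, Tuple[int, ...]]:
        if i == n:  # no servers left to consider
            return 0, ()
        skip_power, skip_set = dp(i + 1, c)  # leave server i out
        if thresholds[i] > c:  # server i does not fit
            return skip_power, skip_set
        take_power, take_set = dp(i + 1, c - thresholds[i])  # put it in
        take_power += thresholds[i]
        if take_power > skip_power:
            return take_power, (i,) + take_set
        return skip_power, skip_set

    return dp(0, capacity)


power, chosen = best_fill([300, 250, 200, 150], 500)
```

With these hypothetical thresholds, the 300 W and 200 W servers fill the 500 W rack exactly and leave no power fragment. The recursion depth equals the number of servers, so a bottom-up table may be preferable for large inputs.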

The variables and functions used in Algorithm 2 are described in Table 2.

In this section, we treat the server placement problem as a knapsack problem. The pseudo-code of the algorithm is shown in Algorithm 2. The core function of this algorithm, dp(), implements the state transition equation based on dynamic programming; it returns the maximum power of all servers starting from the i-th one when the remaining power of a rack is c. In the algorithm, the result of each sub-problem is stored in the set d, which avoids repeated computation of overlapping sub-problems. In line 5, the algorithm checks whether d already holds a result; if so, it returns the result (line 6). In lines 8 and 9, two recursive computations are performed, without and with the i-th server, to judge whether stacking the server can maximize the rack's utilized power and to determine the server arrangement. In lines 10–13, the two strategies are compared and the better one is kept. In lines 17–20, the server arrangement with the optimal leasing cost is obtained by calling dp() once for each rack.

| Algorithm 2 The Dynamic Programming Based Server Placement |
|---|
| Input: the servers and the racks |
| Output: the placement decision from servers to racks |
| 1: procedure dp(i, c) |
| 2: if i equals the number of servers or c is not sufficient for the i-th server then |
| 3: return 0, 0; |
| 4: end if |
| 5: if the maximum power p and the mapping m from servers to racks for all servers starting from the i-th server under the constraint of c are recorded in d then |
| 6: return p, m; |
| 7: end if |
| 8: (p1, m1) = dp(i+1, c); |
| 9: (p2, m2) = dp(i+1, c − M_i) + M_i; |
| 10: if p1 > p2 then |
| 11: do not choose the i-th server, refresh p and m with p1, m1; |
| 12: else choose the i-th server, refresh p and m with p2, m2; |
| 13: end if |
| 14: d.add(p and m); |
| 15: return p and m; |
| 16: end procedure |
| 17: for each rack r in the set of racks do |
| 18: (tempv, tempm) = dp(0, power of rack r); |
| 19: result.add(tempm); |
| 20: end for |
| 21: return result; |

In each computation, the algorithm provides the optimal server placement strategy for one rack. It is a greedy algorithm that achieves a local optimum for each rack, so the final solution is only approximately optimal. Compared with the branch and bound algorithm, the solution of the algorithm proposed in this section may be inferior to the global optimum, but it is obtained much faster.
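The rack-by-rack procedure can be sketched as follows, using a bottom-up knapsack table instead of recursion. Several details are assumptions made for illustration: servers already placed are removed before the next rack is filled, powers are integers in watts, and the names `knapsack_pick` and `place` are hypothetical.

```python
from typing import Dict, List, Tuple


def knapsack_pick(powers: List[int], capacity: int) -> List[int]:
    """Indices of a subset of powers with maximum total not above capacity."""
    # best[c] = (total power, chosen indices) achievable within capacity c
    best: List[Tuple[int, List[int]]] = [(0, [])] * (capacity + 1)
    for idx, p in enumerate(powers):
        # Descending capacities ensure each server is used at most once.
        for c in range(capacity, p - 1, -1):
            candidate = best[c - p][0] + p
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - p][1] + [idx])
    return best[capacity][1]


def place(servers: Dict[str, int], racks: Dict[str, int]) -> Dict[str, List[str]]:
    """Fill racks one at a time, each with the best subset of unplaced servers."""
    unplaced = dict(servers)
    placement: Dict[str, List[str]] = {}
    for rack_id, capacity in racks.items():
        names = list(unplaced)
        chosen = knapsack_pick([unplaced[name] for name in names], capacity)
        placement[rack_id] = [names[i] for i in chosen]
        for i in chosen:  # ASSUMPTION: a placed server is not reconsidered
            del unplaced[names[i]]
    return placement


placement = place(
    {"a": 300, "b": 300, "c": 200, "d": 200},
    {"r1": 500, "r2": 500, "r3": 500},
)
```

For these hypothetical inputs, the first two racks are each filled exactly and the third stays empty. Each rack costs time proportional to the number of unplaced servers times the rack power in watts, which is what makes this approach much cheaper than the exhaustive search, at the price of being only locally optimal per rack.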