Visual Interface Evaluation for Wearables Datasets: Predicting the Subjective Augmented Vision Image QoE and QoS

Abstract

1. Introduction

1.1. Related Works

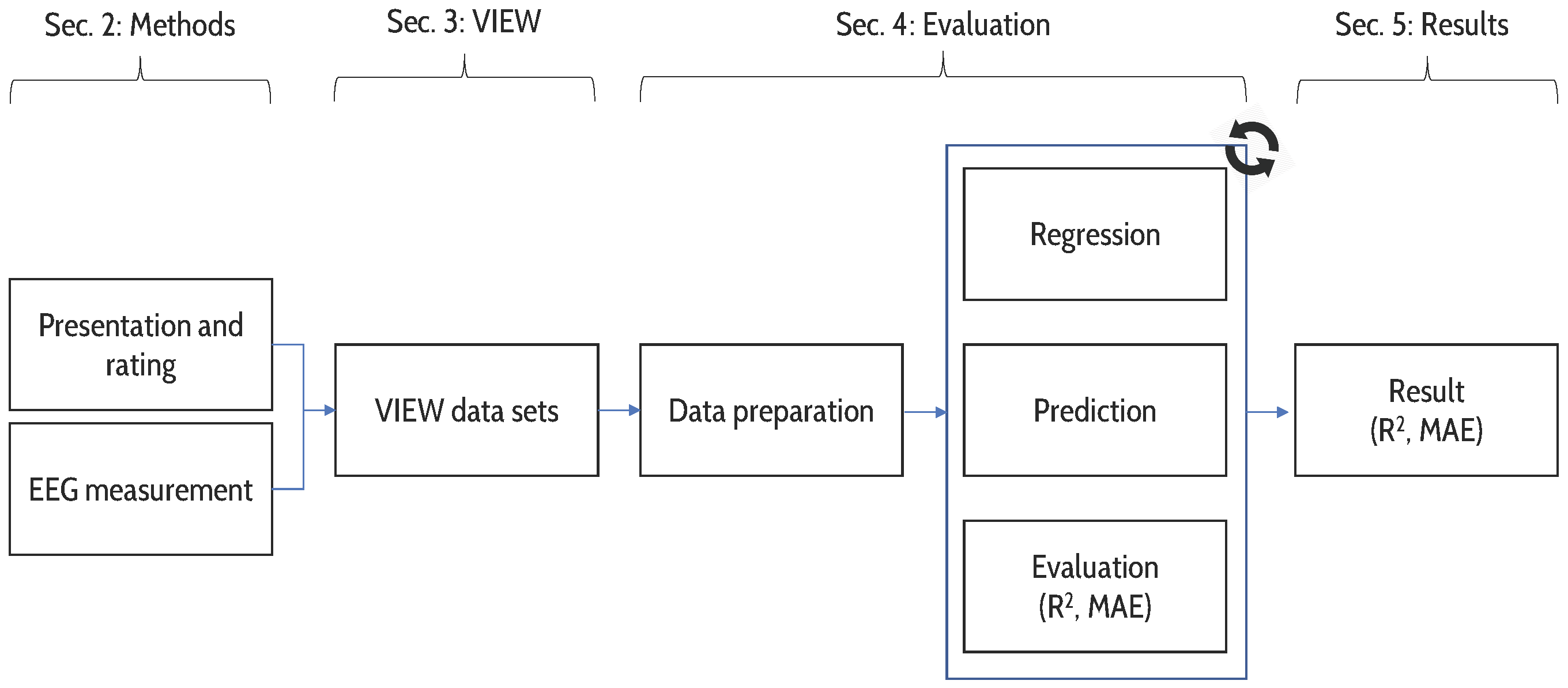

1.2. Contribution and Article Structure

- A performance evaluation of predicting the QoE of individual human subjects in overall vision augmentation (augmented reality) settings based on EEG measurements,

- An assessment of how these data can be employed in future wearable device iterations by evaluating potential complexity reductions, and

- A publicly available dataset of human subject quality ratings at different media impairment levels with accompanying EEG measurements.

2. Methodology

- Low at 2.5–6.1 Hz,

- Delta at 1–4 Hz,

- Theta at 4–8 Hz,

- Alpha at 7.5–13 Hz,

- Beta at 13–30 Hz, and

- Gamma at 30–44 Hz.
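The band limits listed above overlap in places (for example, the low band spans parts of delta and theta, and theta and alpha share 7.5–8 Hz). A minimal sketch of how the band definitions could be encoded and queried is shown below; the dictionary name, the helper function, and the use of closed intervals are assumptions made for illustration and are not taken from the article.

```python
# Frequency bands in Hz, copied from the list above.
# EEG_BANDS_HZ and bands_containing are illustrative names (assumptions).
EEG_BANDS_HZ = {
    "low": (2.5, 6.1),
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (7.5, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 44.0),
}


def bands_containing(freq_hz):
    """Return the names of all bands whose interval contains freq_hz.

    Band edges are treated as inclusive here, which is an assumption;
    the list above does not say how boundaries are handled.
    """
    return [
        name
        for name, (lower, upper) in EEG_BANDS_HZ.items()
        if lower <= freq_hz <= upper
    ]


if __name__ == "__main__":
    for f in (3.0, 7.8, 50.0):
        print(f, bands_containing(f))
```

Because the bands overlap, a single frequency can map to more than one band, which is why the helper returns a list rather than a single name.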

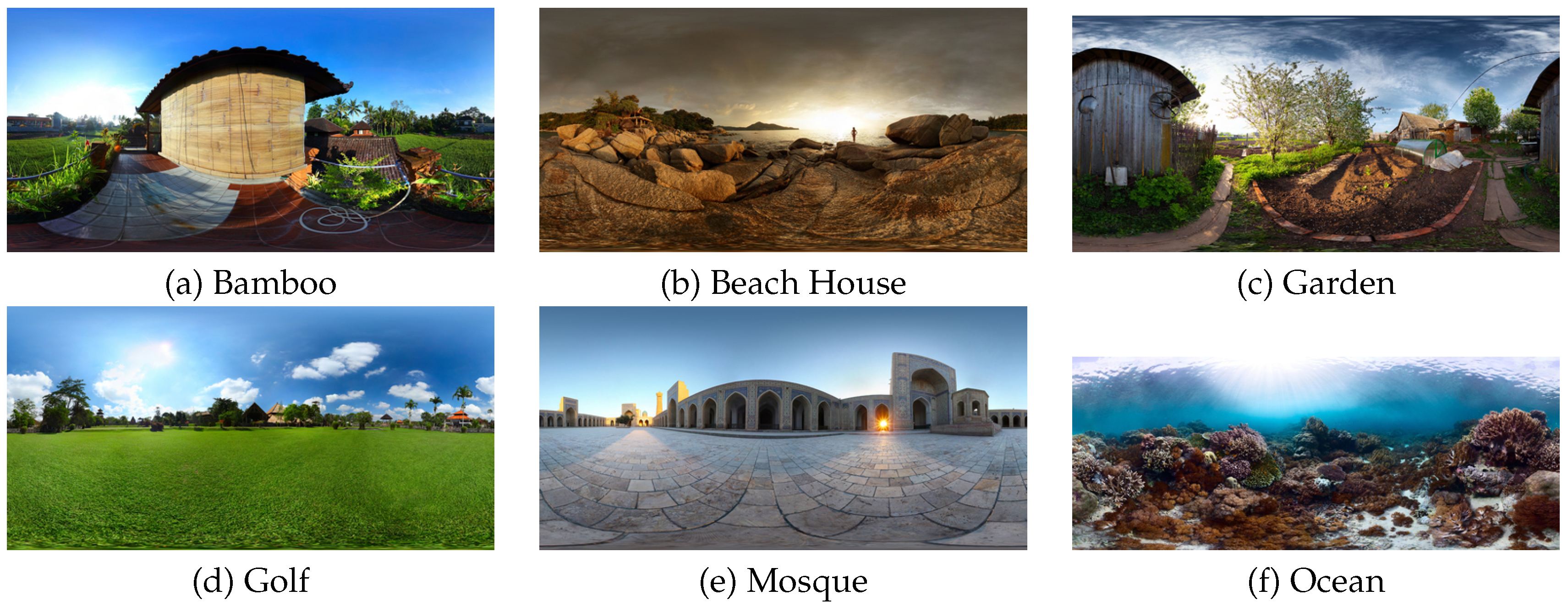

3. Visual Interface Evaluation for Wearables Datasets

3.1. Dataset Description

3.2. Dataset Utilization

4. Data Preparation and Evaluation

- All:

- Outside:

- Inside:

- Left:

- Right:

- Individual: , , and

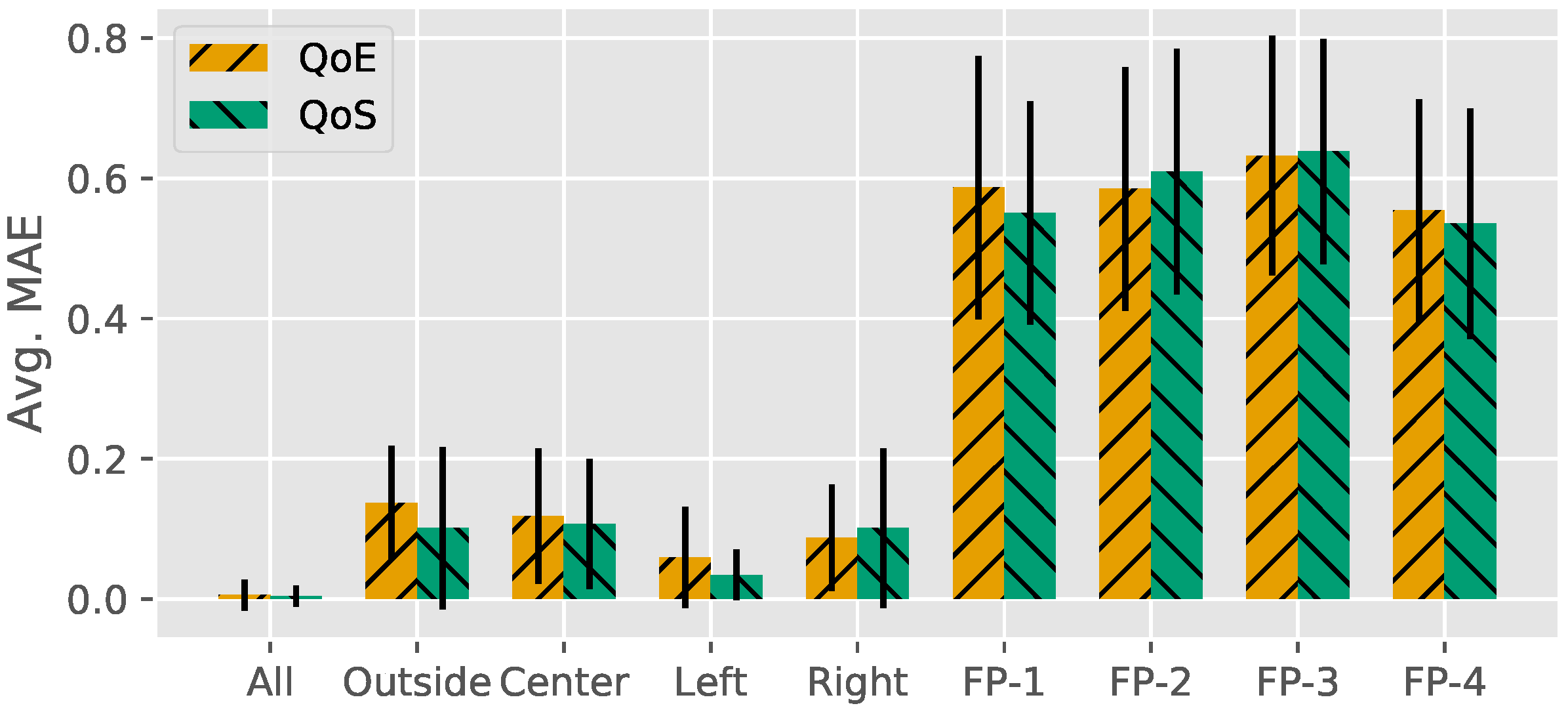

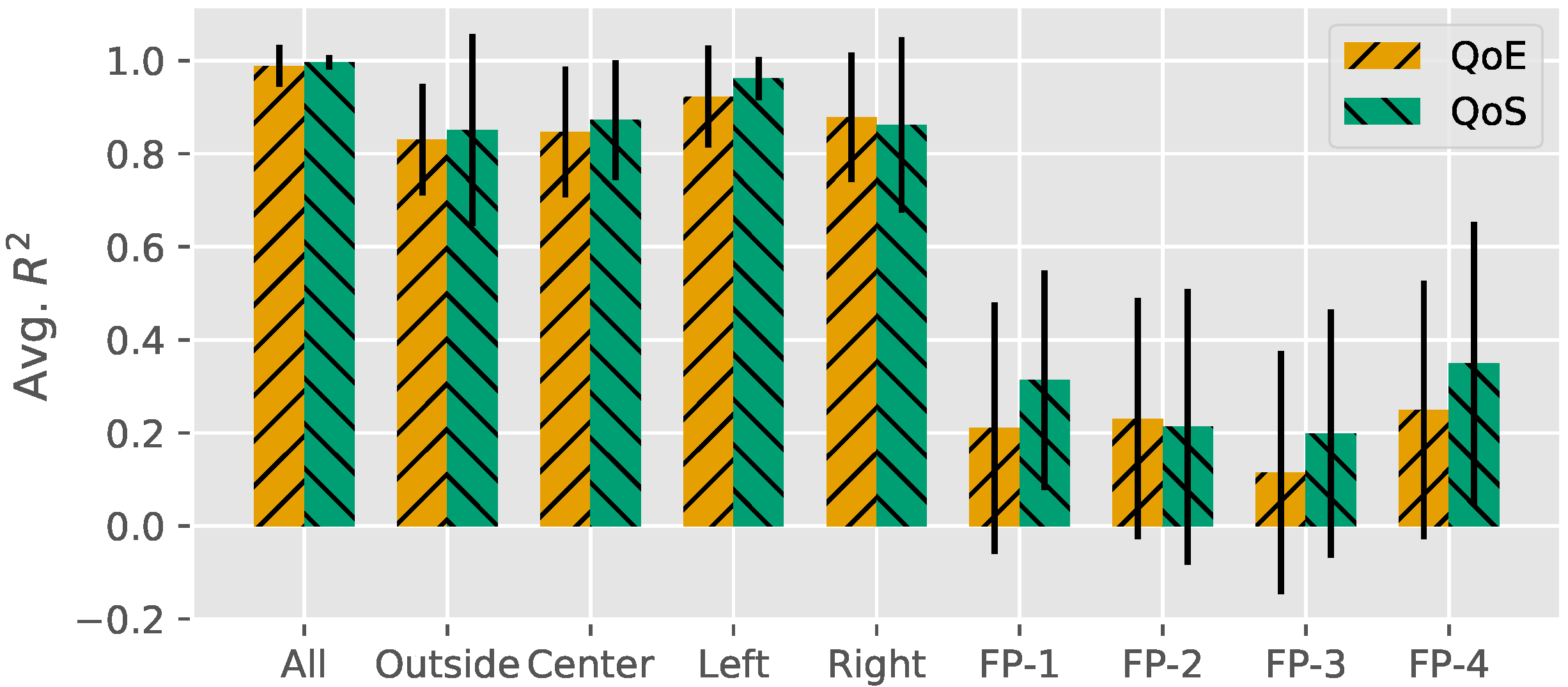

5. Results

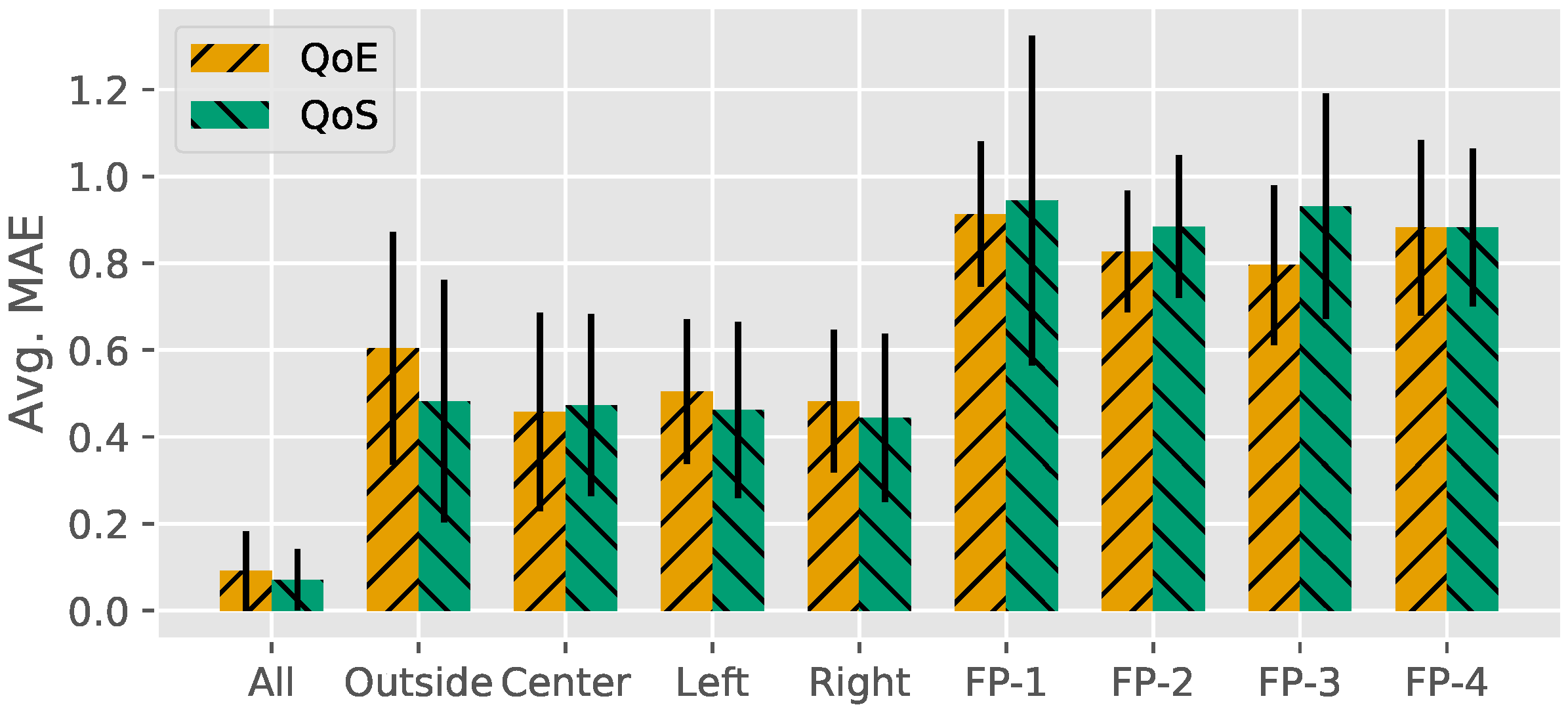

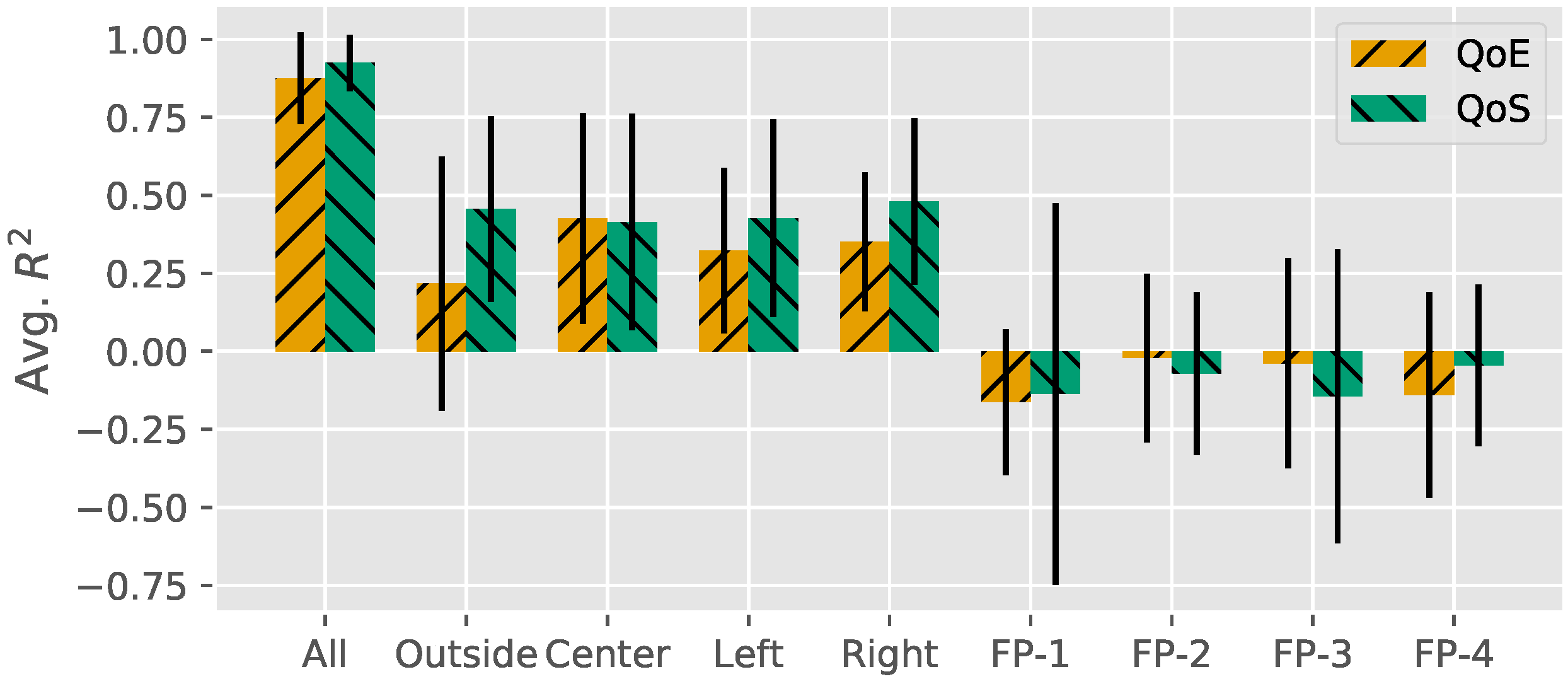

5.1. Results for Regular Images

5.2. Results for Spherical Images

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Subject_{u}_ratings

| Field | Type | Description |
|---|---|---|
| file name | Text | Name of the image file shown |
| image i | Text | Description/image name |
| level l | Integer | Impairment level |
| start time | Real | Presentation start timestamp for image i with impairment l |
| end time | Real | Presentation end timestamp for image i with impairment l |
| rating | Integer | User rating for the presentation |

Subject_{u}_eeg

| Field | Type | Description |
|---|---|---|
| time t | Real | Measurement timestamp |
| low{1…4} | Real | Low bands (2.5–6.1 Hz) |
| alpha{1…4} | Real | Alpha bands (7.5–13 Hz) |
| beta{1…4} | Real | Beta bands (13–30 Hz) |
| delta{1…4} | Real | Delta bands (1–4 Hz) |
| gamma{1…4} | Real | Gamma bands (30–44 Hz) |
| theta{1…4} | Real | Theta bands (4–8 Hz) |
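The two per-subject tables can be related through their timestamps: each EEG sample falls inside at most one presentation interval of the ratings table. The following is a minimal sketch of that join using Python's standard `sqlite3` module and an in-memory database. The column names (`file_name`, `start_time`, `alpha1`, and so on), the helper functions, and the sample rows are hypothetical and chosen only for illustration; the actual dataset files may name columns differently.

```python
import sqlite3

BANDS = ["low", "alpha", "beta", "delta", "gamma", "theta"]
# Hypothetical column naming: band name plus channel index 1..4, e.g. "alpha3".
EEG_COLUMNS = [f"{band}{ch}" for band in BANDS for ch in range(1, 5)]


def create_subject_tables(conn, u):
    """Create empty ratings and EEG tables for subject u (assumed layout)."""
    u = int(u)
    eeg_cols = ", ".join(f"{c} REAL" for c in EEG_COLUMNS)
    conn.execute(
        f"CREATE TABLE Subject_{u}_ratings ("
        "file_name TEXT, image TEXT, level INTEGER, "
        "start_time REAL, end_time REAL, rating INTEGER)"
    )
    conn.execute(f"CREATE TABLE Subject_{u}_eeg (time REAL, {eeg_cols})")


def mean_band_per_presentation(conn, u, column):
    """Average one EEG column over each image presentation interval."""
    u = int(u)
    if column not in EEG_COLUMNS:
        raise ValueError(f"unknown EEG column: {column}")
    query = f"""
        SELECT r.image, r.level, r.rating, AVG(e.{column})
        FROM Subject_{u}_ratings AS r
        JOIN Subject_{u}_eeg AS e
          ON e.time BETWEEN r.start_time AND r.end_time
        GROUP BY r.image, r.level, r.rating
        ORDER BY r.image, r.level
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_subject_tables(conn, 1)
    # Made-up sample rows, for demonstration only.
    conn.execute(
        "INSERT INTO Subject_1_ratings VALUES (?, ?, ?, ?, ?, ?)",
        ("img_l1.jpg", "img", 1, 0.0, 2.0, 4),
    )
    for t, value in [(0.5, 1.0), (1.5, 3.0), (2.5, 100.0)]:
        conn.execute(
            "INSERT INTO Subject_1_eeg (time, alpha1) VALUES (?, ?)", (t, value)
        )
    print(mean_band_per_presentation(conn, 1, "alpha1"))
```

Restricting `column` to a known list keeps the dynamically built query from accepting arbitrary text, since SQLite placeholders cannot stand in for table or column names.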

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bauman, B.; Seeling, P. Visual Interface Evaluation for Wearables Datasets: Predicting the Subjective Augmented Vision Image QoE and QoS. Future Internet 2017, 9, 40. https://doi.org/10.3390/fi9030040
