An Ontology-Based Approach to Enable Knowledge Representation and Reasoning in Worker–Cobot Agile Manufacturing

Abstract

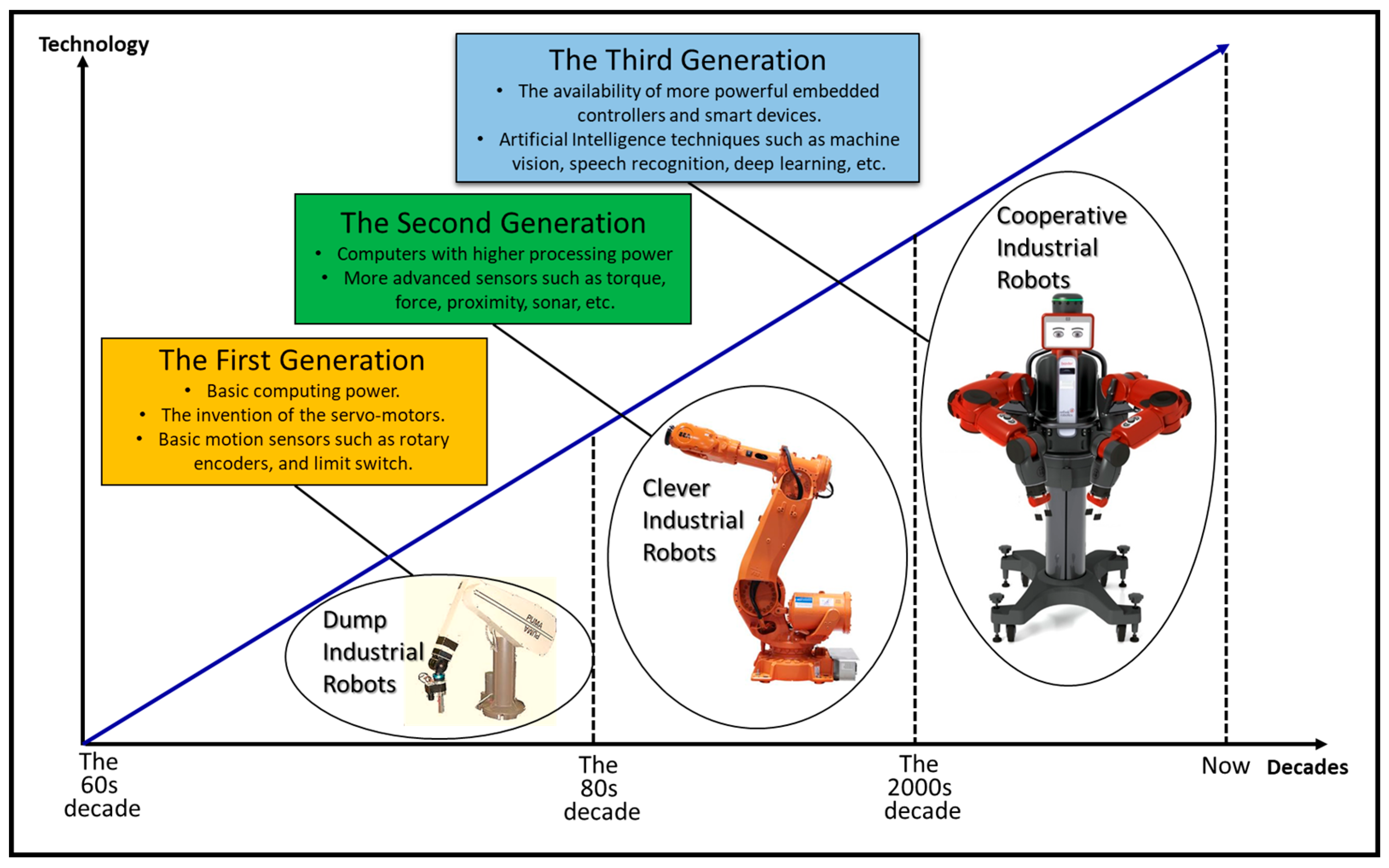

1. Introduction: Evolution of Industrial Robotics

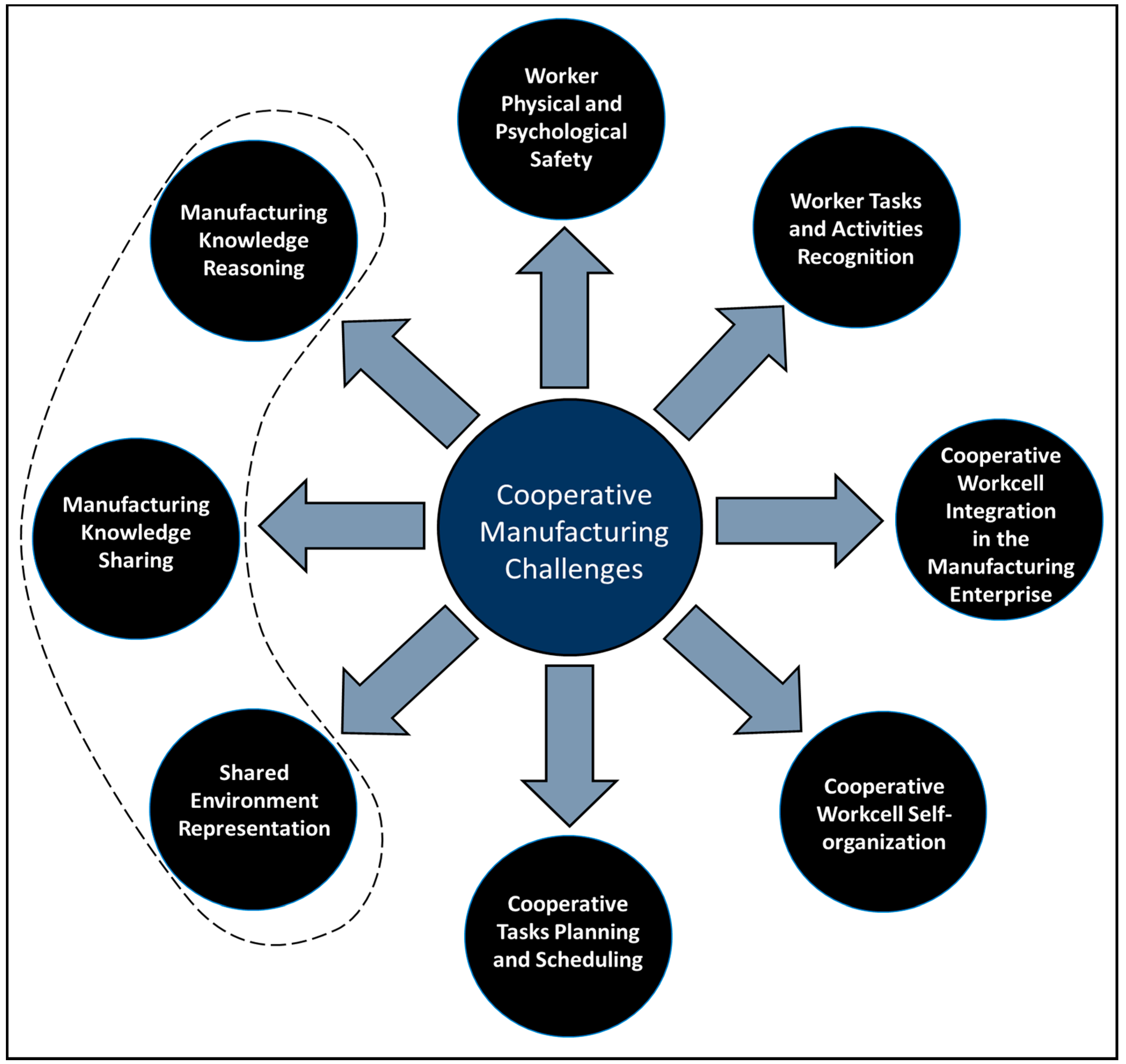

2. Related Work and Challenges in Cooperative Manufacturing

- Worker Safety: this is one of the earliest challenges, and it has gained a lot of attention from the robotics community [6,7]. Safety in cooperative manufacturing ranges from preventing any physical collision between the robot and the workcell components to protecting the worker psychologically while cooperating with the robot. The research in [8] presents a real-time safety system that achieves cooperative manufacturing with a standard IR, the ABB-IRB-120. The system does not require modifying the standard robot hardware with external sensors. Instead, it uses a virtual 3D representation of the cooperative workcell to automatically control the robot speed based on the worker's distance from it. The research in [9] extensively discusses the different methodologies that can be used to guarantee physical and psychological safety in Human-Robot Interaction (HRI). The research in [10] introduces a neural network solution that dynamically adjusts the robot path planning in response to changes in the work environment.

- Worker Activity Recognition: worker activity recognition is an essential requirement for cooperative manufacturing, because it is the very first step in physically communicating with the cobot. The recognition can serve to acquire the worker status (i.e., busy or free), or to interact with the cobot during manufacturing, for example to start or stop a cooperative task. The recognition of the worker activity can also be based on the worker's natural movements during manufacturing. For instance, a pick-and-place activity could indicate that the worker is busy at that time, while holding a specific tool could indicate which operation the worker is performing at the moment. The research in [11] proposes using the worker's hand gestures to communicate with the cobot in order to add more flexibility to the manufacturing operations. Two modes of gesture recognition were defined in this work. The first mode is explicit gesture recognition, where a specific gesture that is not in the context of the manufacturing is detected. In other words, this mode of cooperation occurs when the worker commands the cobot directly via a set of predefined gestures. The second mode is implicit gesture recognition, where a specific gesture that is in the context of the manufacturing is detected, so that the cobot performs a subsequent action. The research in [12] describes in detail a wide range of hand gestures that the worker can use while cooperating with the cobot.

- Cooperative Workcell Integration in the Industrial Enterprise: the workcell is the smallest building block of the industrial enterprise; therefore, it is important to define a method that can connect all the cooperative workcells horizontally over the shop floor and vertically over the different layers of the enterprise. The research in [13] proposes linking the concept of the holonic manufacturing architecture to the International Society of Automation (ISA)-95 standard to obtain this integration. On the horizontal level, the research proposes a supervisor holon which provides coordination services when cooperation is needed outside the boundaries of the cooperative workcell. On the vertical level, the research defines the responsibilities of various types of holons and distributes them over the different layers of the industrial enterprise.

- Cooperative Workcell Self-organization: a form of self-organization must exist in the cooperative workcell to fulfill the purpose of cooperative manufacturing. Self-organization is an adaptation mechanism which the cooperative workcell uses to overcome the variations in the manufacturing environment. The articles in [13,14,15] mention four important sources of variation that can be found in the cooperative workcell. The first source is the variation in the production requirements, such as the production customization and volume. The second source is the change in the time that the worker takes to accomplish a specific task. The third source is the change in the number of co-workers or cobots during manufacturing. The last source arises when there is more than one co-worker or cobot in the manufacturing workcell, each with a different set of skills or tasks. The research in [16] addresses the problem of self-organization of a group of cooperative mobile robots. The research proposes an ontology-based concept to obtain the self-organization; however, it does not show a clear implementation of this concept.

- Cooperative Tasks Planning and Scheduling: cooperative task planning means selecting at least one cobot and one co-worker and assigning them a cooperative task. The research in [13,17,18] assigns the cooperative task by matching the skills of the available workers and cobots with the required product recipe. Furthermore, the proposed control solution monitors the productivity of the workers and the cobots to balance the task assignment process. Automatic assembly planning of complex products has been considered in [19]; that research provides a method which uses the information stored in a Computer-Aided Design (CAD) model to generate the assembly sequence, and then monitors the assembly execution in order to support the assembly joining plans. Cooperative task scheduling is the problem of selecting the right sequence for executing a group of pending tasks. This problem mainly affects the overall efficiency of the cooperative workcell. The research in [20] shows the effect of cooperative workcell scheduling by comparing two techniques, First Come First Serve (FCFS) and Johnson’s algorithm. It then reports that Johnson’s scheduling method outperforms FCFS by minimizing the overall makespan.

- Manufacturing Knowledge Representation: in order to obtain cooperation between a cobot and a human co-worker, all the knowledge in their shared environment, including themselves, should be represented in the form of meta-data. Descriptive meta-data are essential to give meaning to the objects, tasks, and operations within the cooperative workcell. Structural meta-data are required to form bonds between the objects in order to compose new descriptive meta-objects; this can also be done by associating new attributes with an existing object. The structural meta-data can also be used to define the parent-child relation among the objects. Finally, administrative meta-data are required to control the cooperative task assignment and the cooperation planning, management, and execution.

- Manufacturing Knowledge Sharing: knowledge cannot be shared unless a proper means of communication allows the knowledge exchange. In the context of cooperative manufacturing, the knowledge format should be understandable by both the human worker and the cobot. Therefore, finding an approach that provides a common natural communication language between the human and the cobot is a crucial focus of this article.

- Manufacturing Knowledge Reasoning: after the knowledge has been represented and exchanged among the different entities in the cooperative manufacturing system, every entity in the system must reason over this knowledge in order to adapt to the variations in the production demands. Reasoning is needed in cooperative manufacturing because every component in the system has certain responsibilities and behaviors. Therefore, every component has to understand the production demands and the overall status of the workcell, so that it can comprehend the manufacturing knowledge and fulfill its function.
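The FCFS versus Johnson's algorithm comparison mentioned in the scheduling item above can be illustrated with a small sketch. It assumes a two-stage flow shop in which every job is first handled by the cobot and then by the worker; the job names and processing times are invented for illustration and are not taken from [20].

```python
from typing import List, Tuple

# (job name, cobot time, worker time); all values are illustrative assumptions.
Job = Tuple[str, int, int]


def makespan(sequence: List[Job]) -> int:
    """Completion time of the last job in a two-stage flow shop."""
    cobot_done = 0
    worker_done = 0
    for _, cobot_time, worker_time in sequence:
        # The cobot processes jobs back to back.
        cobot_done += cobot_time
        # The worker starts once both the worker and the part are available.
        worker_done = max(worker_done, cobot_done) + worker_time
    return worker_done


def johnson_order(jobs: List[Job]) -> List[Job]:
    """Johnson's rule for two machines.

    Jobs whose first-stage time does not exceed their second-stage time go
    first, in ascending order of first-stage time. The remaining jobs go
    last, in descending order of second-stage time.
    """
    first = sorted((j for j in jobs if j[1] <= j[2]), key=lambda j: j[1])
    last = sorted((j for j in jobs if j[1] > j[2]), key=lambda j: j[2], reverse=True)
    return first + last


jobs = [("A", 3, 6), ("B", 5, 2), ("C", 1, 2), ("D", 7, 5)]
fcfs_makespan = makespan(jobs)                     # arrival order A, B, C, D
johnson_makespan = makespan(johnson_order(jobs))   # order C, A, D, B
print(fcfs_makespan, johnson_makespan)
```

For this invented job set, the FCFS makespan is 21 time units and the Johnson makespan is 18. Johnson's rule is optimal only for the two-machine case, so the sketch says nothing about workcells with more stages.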

3. Key Concepts

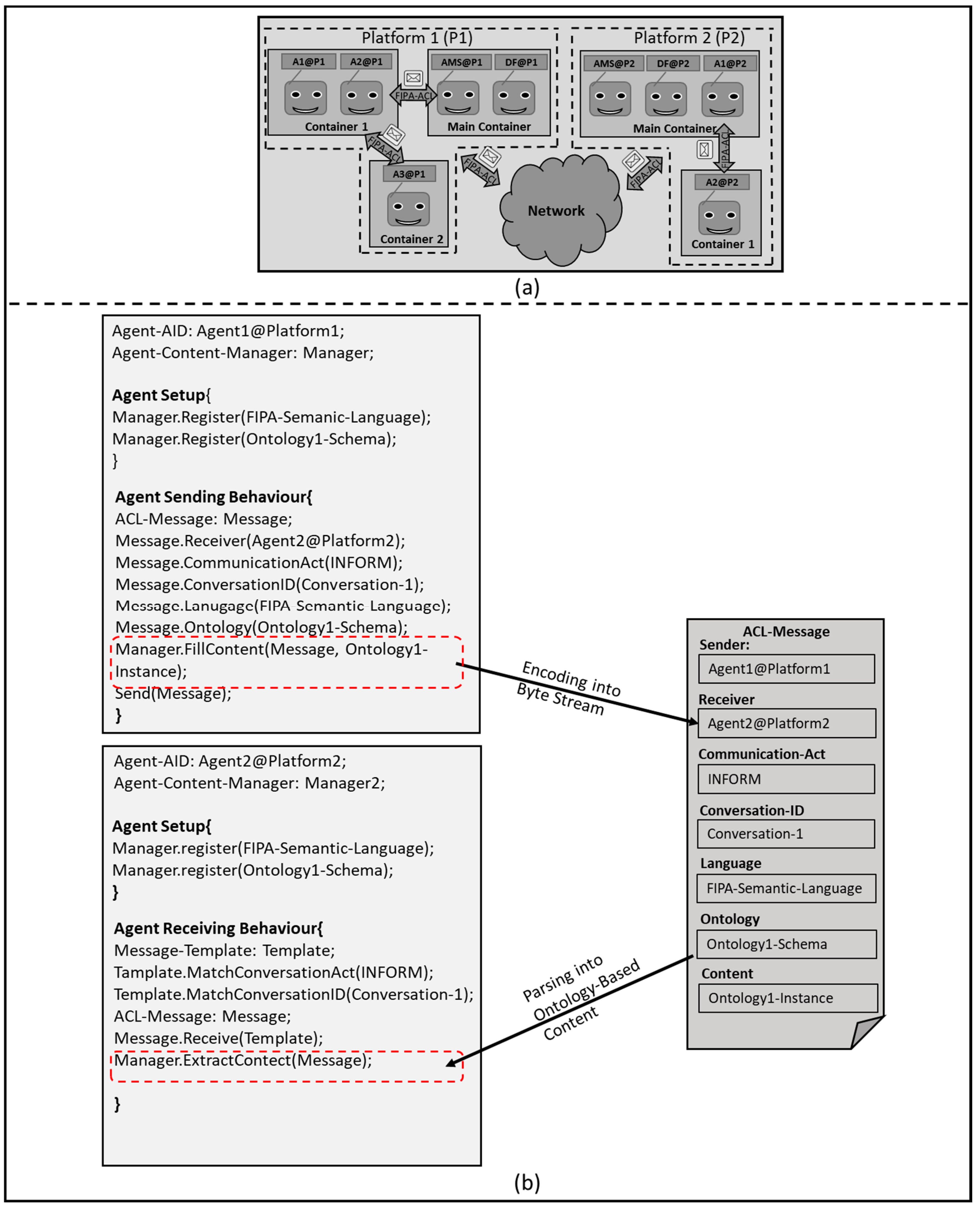

3.1. Ontology-Based Multi-Agent System

- Responsive: an agent is capable of perceiving its environment and responding in a timely fashion to the changes occurring in it.

- Pro-active: an agent is able to exhibit opportunistic, goal-directed behavior and take the initiative.

- Social: an agent can interact with other artificial agents or humans within its environment in order to solve a problem.

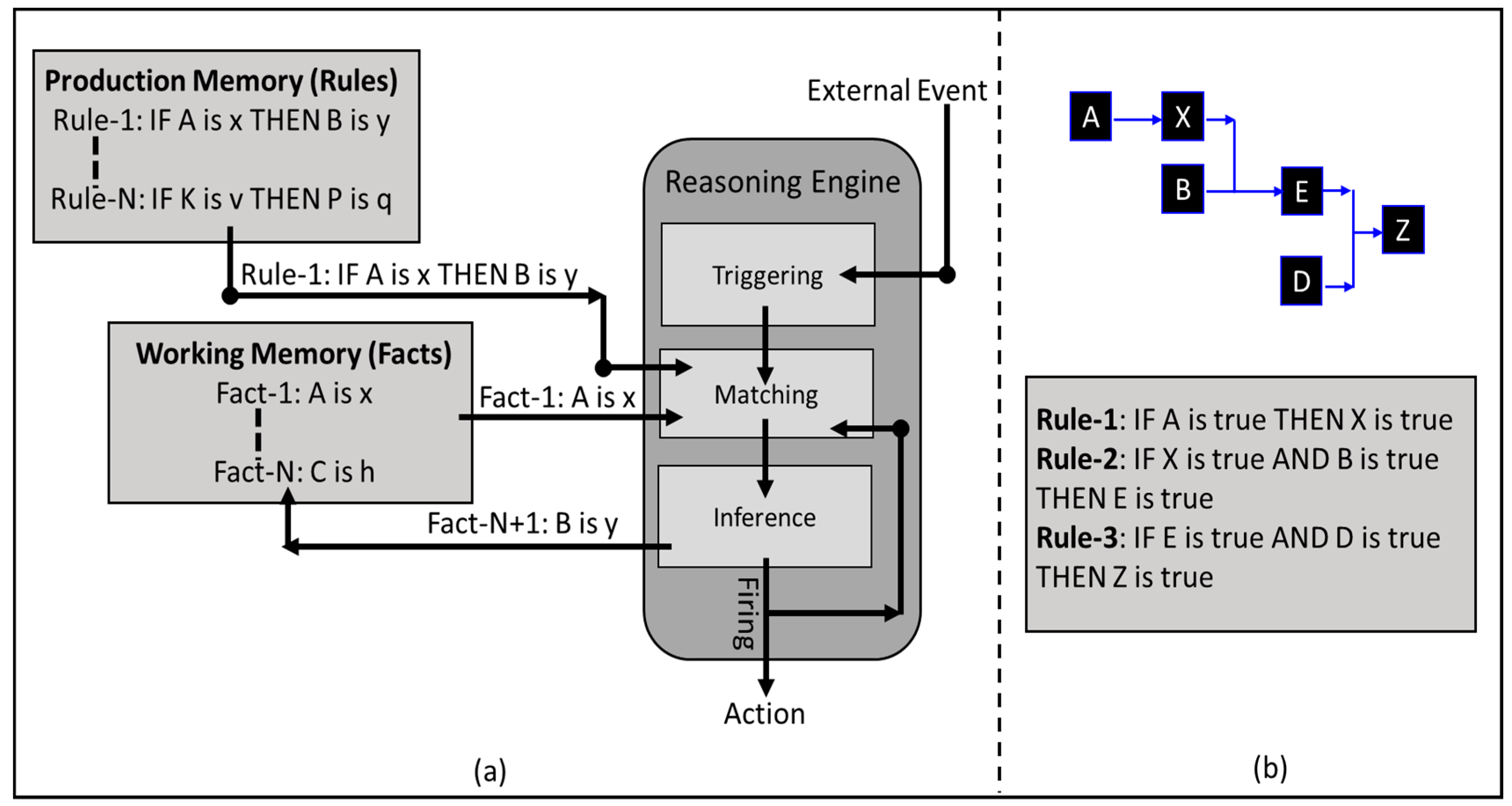

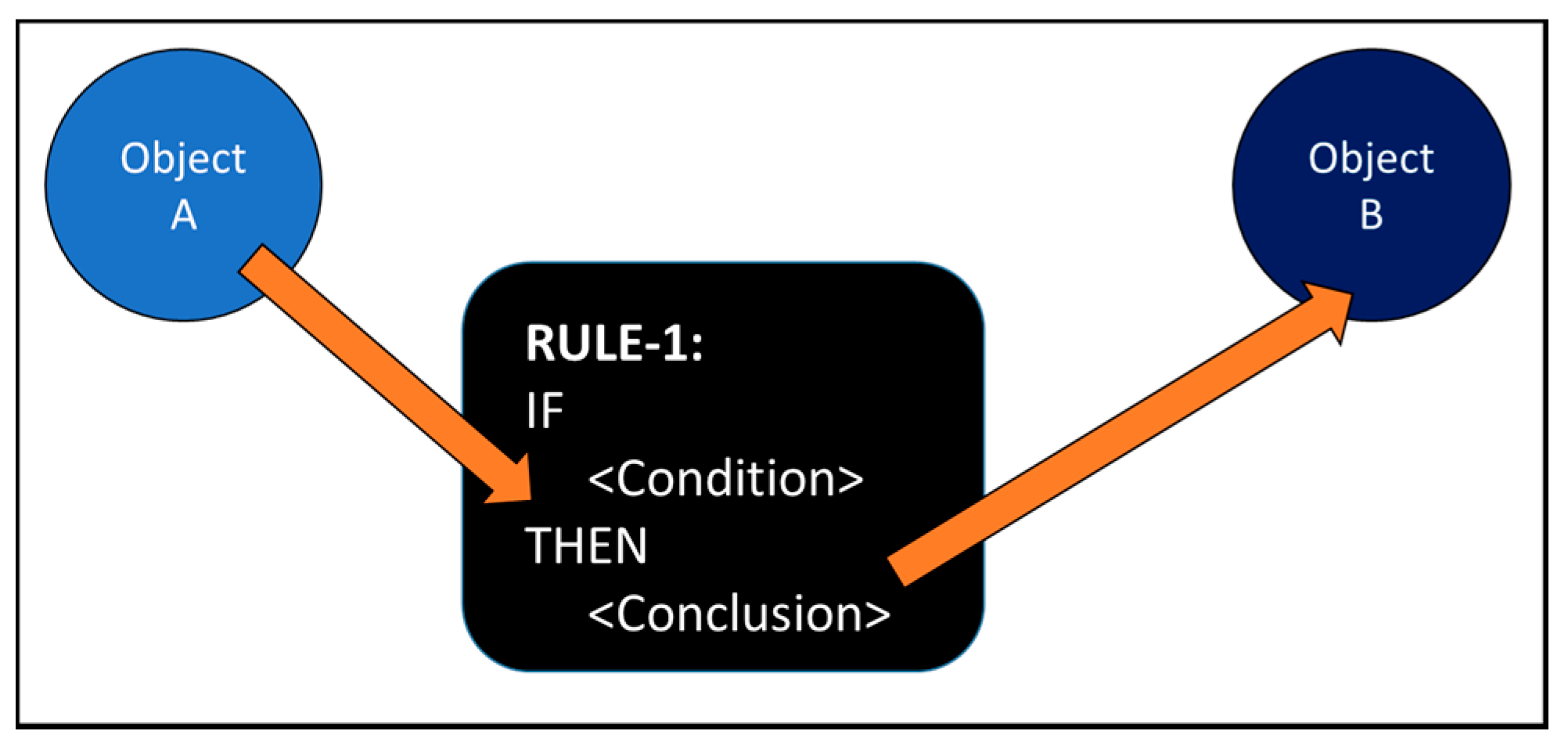

3.2. Rule-Based System

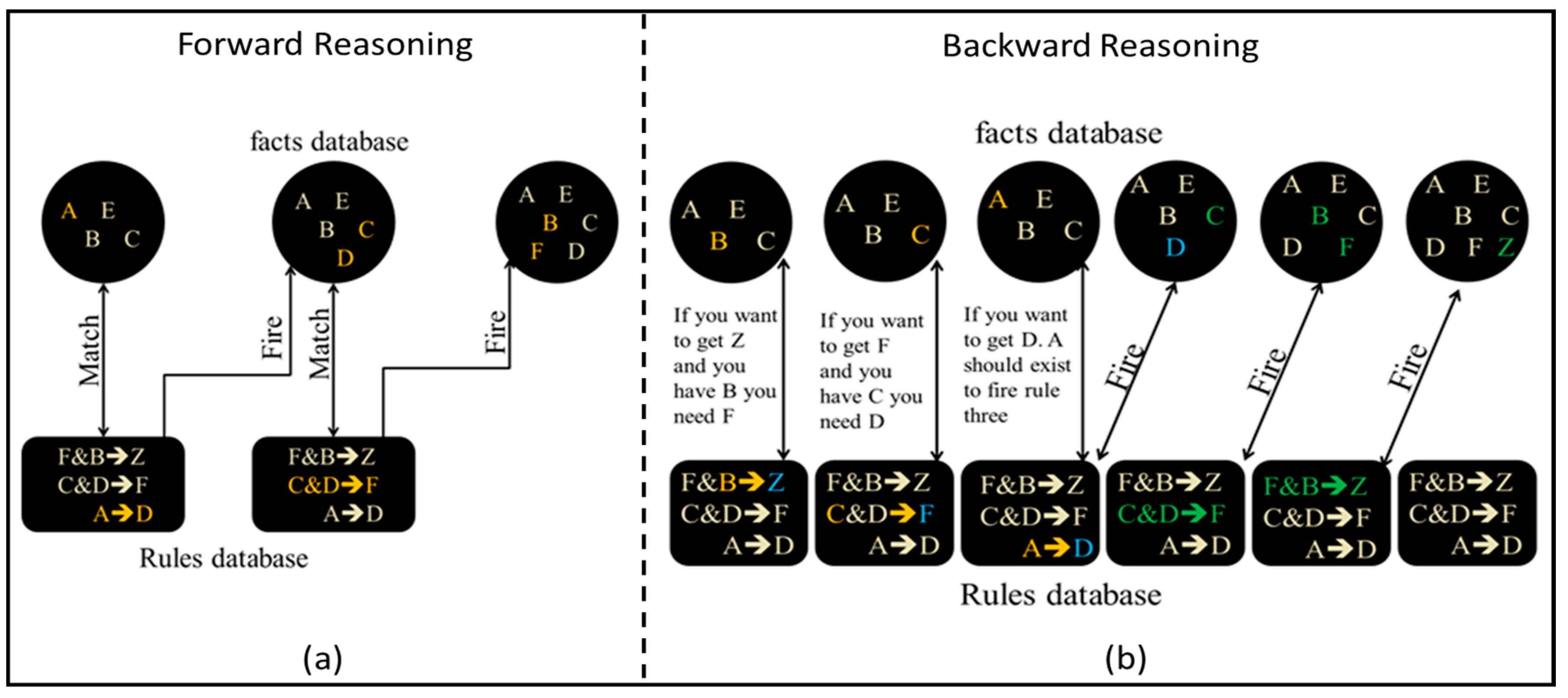

- Forward reasoning: in forward chaining, the rule-based system starts with the initial facts and derives new facts whenever a rule matches a fact. The reasoning engine goes through a sequence of rule firings until it reaches its final goal, which ultimately yields the solution route from the initial facts to the final goal in a forward reasoning style. An example of forward chaining is shown in Figure 5a.

- Backward reasoning: in backward chaining, the rule-based system starts from the final goal and then finds which rules must be fired to lead to this goal, so that the reasoning engine can determine the facts which are needed to reach the final goal. During backward chaining a sequence of sub-goals appears, which ultimately yields the solution route from the final goal to the initial facts in a backward reasoning style. An example of backward chaining is shown in Figure 5b.

- Declarative programming: rule-based programming makes it far easier to express complicated solutions for difficult problems, and to verify those solutions as well. Furthermore, Drools provides a decision table option in the form of an MS Excel sheet, which makes it possible for someone with no coding background to read and modify the rules.

- Logic and data separation: as a direct result of the ReteOO algorithm, the data resides in the domain objects, while the logic resides in the rules. This can be very useful for providing different levels of authority and accessibility for the users.

- Centralization of the knowledge: creating a single knowledge base which contains all the facts makes the knowledge accessible to, and shared by, all the agents. Thus, problems can be solved faster and more efficiently.

- Speed and reliability: using the available options such as ReteOO, the hybrid reasoning technique, or a stateful reasoning session makes Drools a very fast and reliable tool.
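The two reasoning directions described above can be sketched in a few lines. The sketch below is a deliberately naive illustration in plain Python, not Drools and not the ReteOO algorithm: facts are plain strings, rules are (premises, conclusion) pairs, and the fact and rule names are hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule fires when all of its premises are known facts.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Start from the initial facts and fire rules until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def backward_chain(goal: str, facts: Set[str], rules: List[Rule],
                   seen: FrozenSet[str] = frozenset()) -> bool:
    """Start from the goal and recursively look for rules that conclude it."""
    if goal in facts:
        return True
    if goal in seen:  # guard against circular rule sets
        return False
    seen = seen | {goal}
    return any(
        all(backward_chain(p, facts, rules, seen) for p in premises)
        for premises, conclusion in rules
        if conclusion == goal
    )


# Hypothetical rules loosely inspired by a worker-cobot workcell.
rules: List[Rule] = [
    (frozenset({"order_received", "worker_free"}), "worker_reserved"),
    (frozenset({"worker_reserved", "cobot_free"}), "pick_and_place_assigned"),
    (frozenset({"pick_and_place_assigned"}), "assembly_can_start"),
]
initial_facts = {"order_received", "worker_free", "cobot_free"}

derived = forward_chain(initial_facts, rules)
goal_reachable = backward_chain("assembly_can_start", initial_facts, rules)
print(sorted(derived), goal_reachable)
```

Unlike a production rule engine, this sketch rescans every rule on each pass and never retracts a fact, so it only shows the direction of inference, not how an engine achieves its speed.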

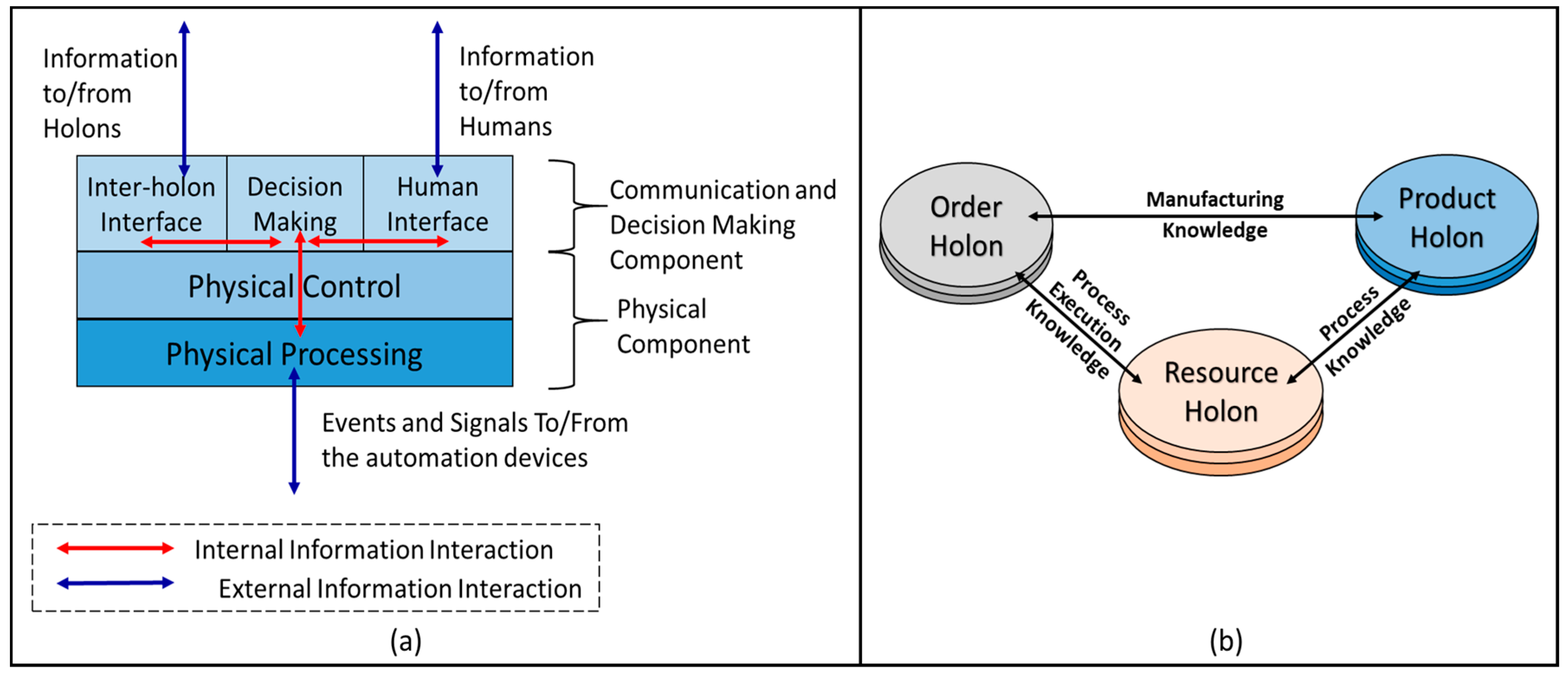

3.3. Holonic Control Architecture

- Autonomy: the capability of the holon to create and control the execution of its own plans.

- Cooperation: a process whereby a set of holons develops mutual plans and executes them together.

- Holarchy: a system of holons which cooperate to achieve a goal or objective. The holarchy defines the basic rules for cooperation of the holons and thereby limits their autonomy.

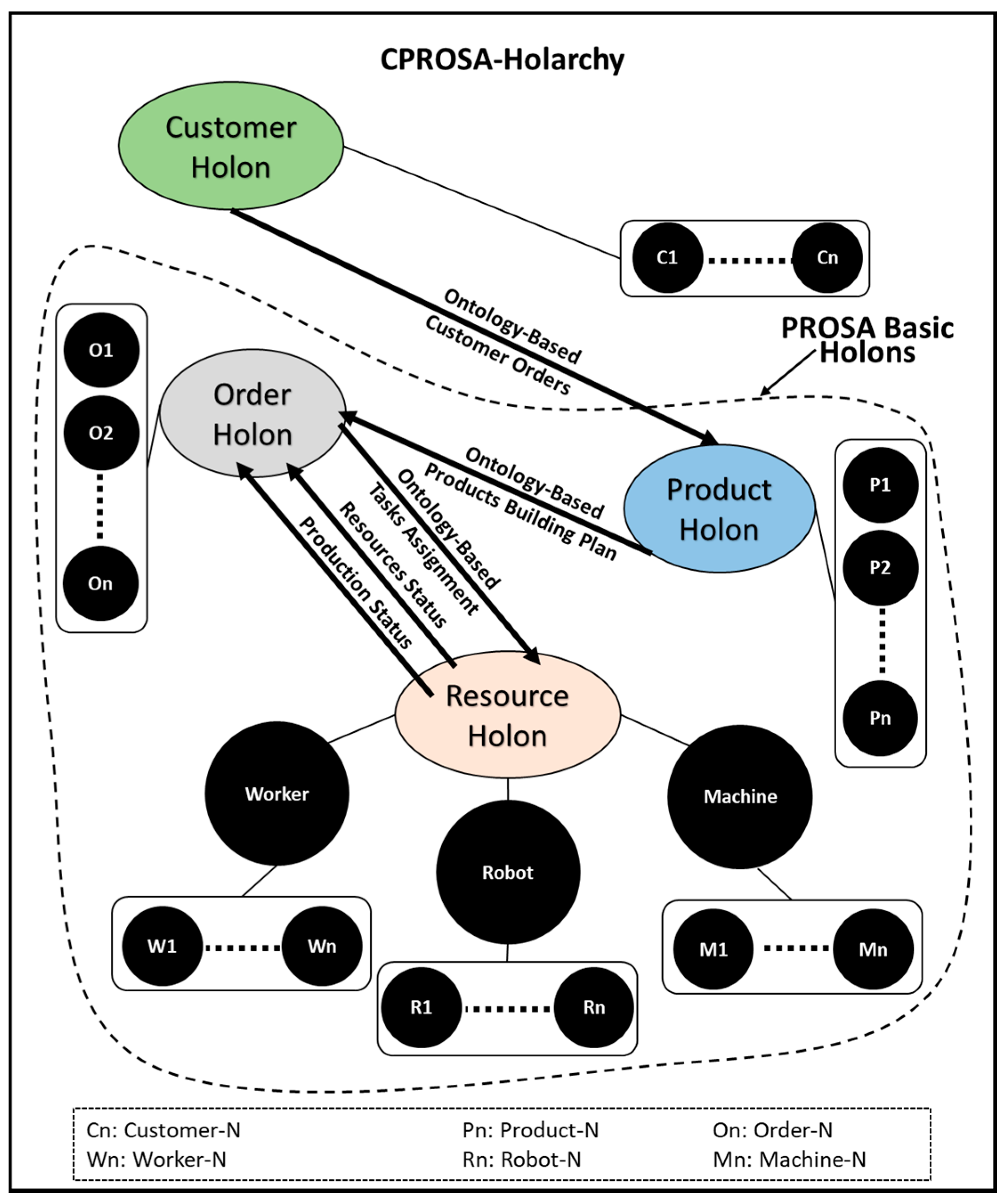

- Product Holon (PH): is responsible for processing and storing the different production plans which are required to ensure the correct manufacturing of a certain product. Different production plans are needed to manufacture different products within the same workcell. Therefore, the PH has to match the required product type with its plans.

- Order Holon (OH): is responsible for composing and managing the production orders. Furthermore, in a small-scale enterprise, it should assign the tasks to the existing operating resources and monitor the execution status of the assigned tasks.

- Operational Resource Holon (ORH): is a physical entity within the manufacturing system; it can represent a robot, machine, worker, etc.

4. Solution Approach

4.1. CPROSA-Holarchy

- An ontology defines the basic terms and relations comprising the vocabulary of a topic area as well as the rules for combining terms and relations to define extensions for this vocabulary [40].

- An ontology is a formal, explicit specification of a shared conceptualization [41].

- An ontology is a logical theory accounting for the intended meaning of a formal vocabulary [42], i.e., “it is a commitment to a particular conceptualization of the world”.

- An ontology provides the meta-information to describe the data semantics, represent knowledge, and communicate with various types of entities (e.g., software agents and humans) [43].

- An ontology can be described as “means of enabling communication and knowledge sharing by capturing a shared understanding of terms that can be used both by humans and machine software” [44].

- Terms: expressions that indicate entities that exist in the MAS and that agents may reason about. Terms can be either primitives, which are atomic data types such as strings or integers, or concepts, which are complex structures such as objects.

- Predicates: expressions that describe the status of the world and the relationships between the concepts.

- Actions: expressions that describe routines or operations that can be executed by an agent.

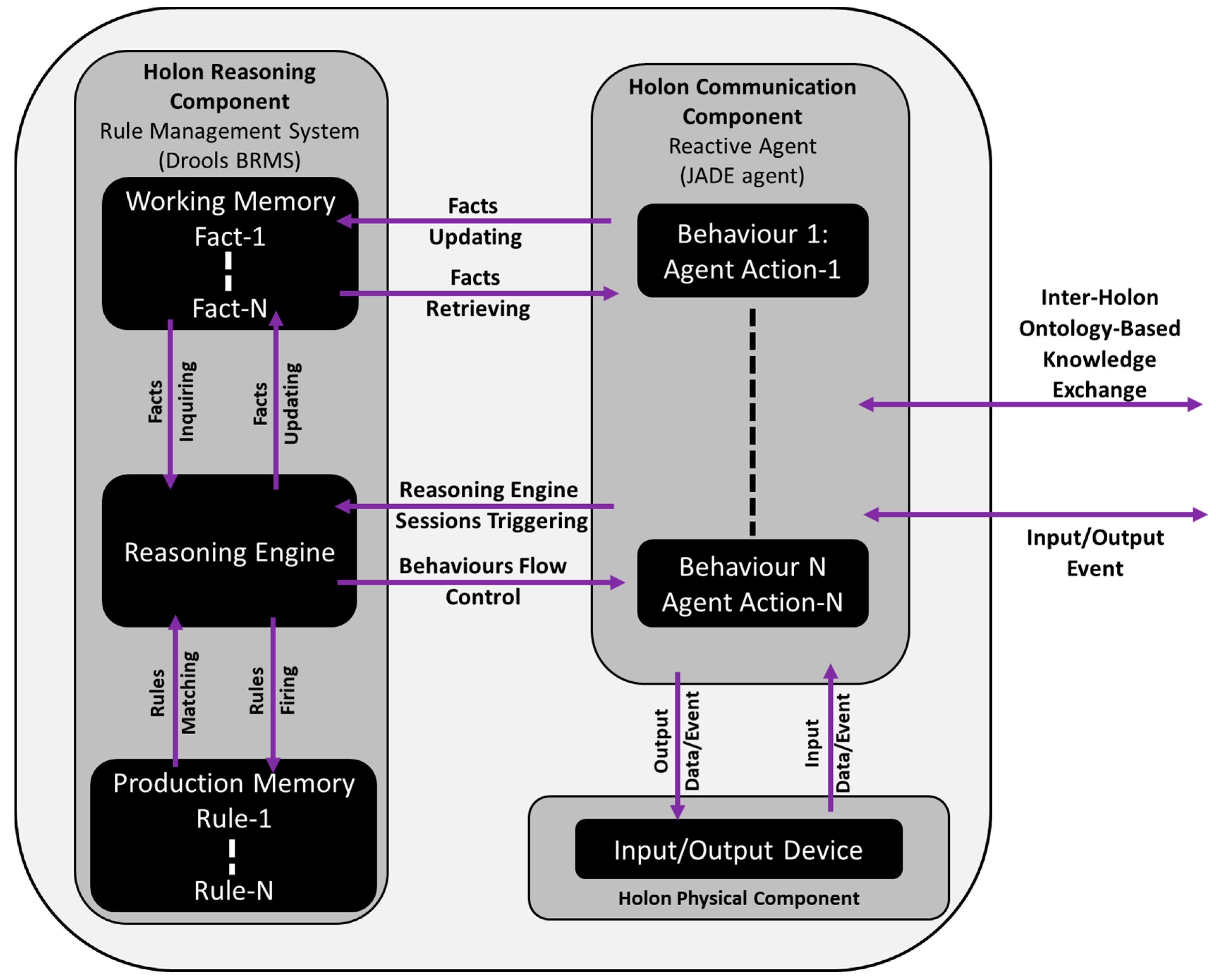

4.2. CPROSA-Holon Model

5. Case-Study

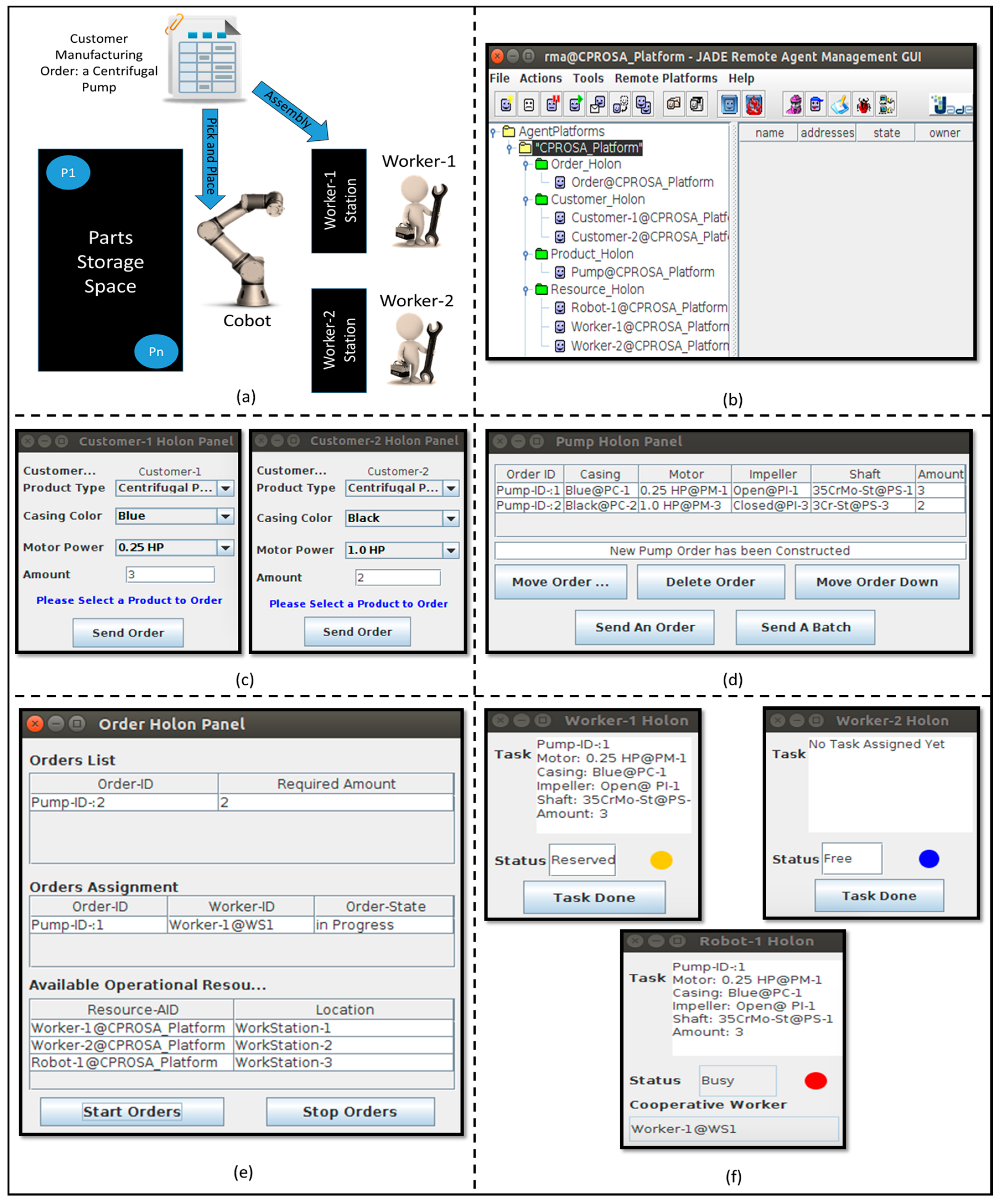

5.1. Case-Study Description

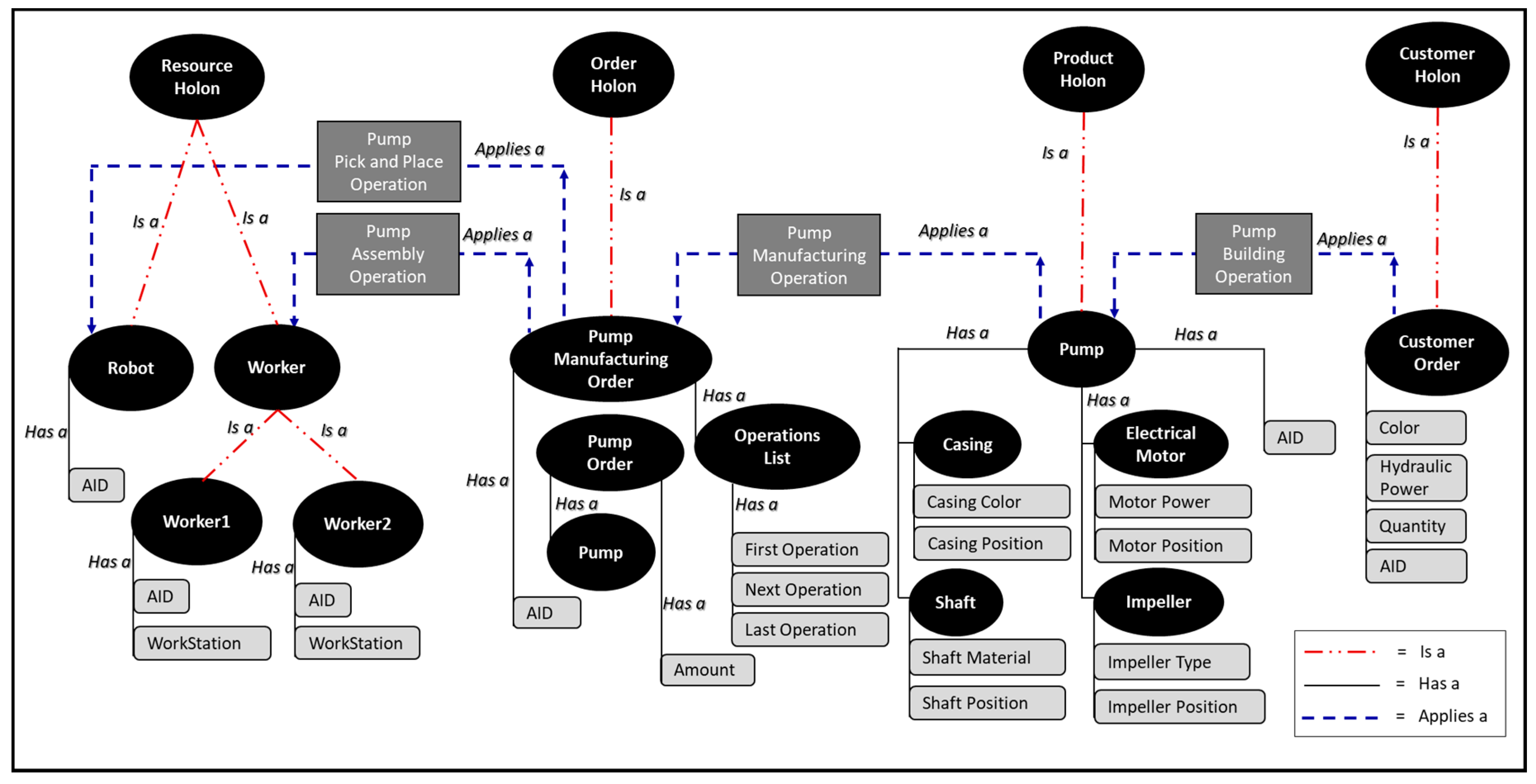

5.2. Case-Study Ontology

- Customer-Order: a schema which encapsulates attributes such as the required color, the needed hydraulic power, and the required number of units. It also contains an AID, as every customer-order is an agent which needs an ID.

- Casing: a shared feature between the pump and the compressor. The casing schema contains two attributes: the casing color and its position in the features storage space.

- Electrical-Motor: a shared feature between the pump and the compressor. The motor schema contains two attributes: the motor electrical power and its position in the features storage space.

- Impeller: a unique feature of the pump. The impeller schema contains two attributes: the impeller type and its position in the features storage space.

- Shaft: a unique feature of the pump. The shaft schema contains two attributes: the shaft material and its position in the features storage space.

- Pump: a concept schema which encapsulates several other schemas, namely the casing, electrical-motor, shaft, and impeller. Every pump is an agent; therefore, it must contain an AID attribute.

- Pump-Order: this schema extends the pump schema by adding the required number of units.

- Operations-List: a schema which includes a list of operations which can be used to manufacture either a pump or a compressor. The schema can be used to manufacture a product which needs three operations or fewer.

- Pump-Manufacturing-Order: a schema which combines a Pump-Order schema and an Operations-List schema. Also, it has an AID attribute as it acts as an agent.
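The concept schemas listed above can be pictured as nested data structures. The following is a minimal sketch using Python dataclasses, not the authors' ontology implementation; the field names, the field types (for example an integer storage slot and power in kW), and the sample values are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Casing:
    color: str
    storage_position: int  # assumed: slot index in the features storage space


@dataclass(frozen=True)
class ElectricalMotor:
    power_kw: float        # assumed unit
    storage_position: int


@dataclass(frozen=True)
class Impeller:
    impeller_type: str
    storage_position: int


@dataclass(frozen=True)
class Shaft:
    material: str
    storage_position: int


@dataclass(frozen=True)
class Pump:
    aid: str               # agent identifier, since every pump is an agent
    casing: Casing
    motor: ElectricalMotor
    impeller: Impeller
    shaft: Shaft


@dataclass(frozen=True)
class PumpOrder(Pump):
    units: int             # Pump-Order extends Pump with the number of units


@dataclass(frozen=True)
class OperationsList:
    operations: Tuple[str, ...]

    def __post_init__(self) -> None:
        # The text limits the schema to products needing three operations or fewer.
        if len(self.operations) > 3:
            raise ValueError("an operations list holds at most three operations")


@dataclass(frozen=True)
class PumpManufacturingOrder:
    aid: str
    pump_order: PumpOrder
    operations: OperationsList


# Hypothetical sample instance.
order = PumpOrder(
    aid="pump-1",
    casing=Casing("blue", 1),
    motor=ElectricalMotor(1.5, 2),
    impeller=Impeller("closed", 3),
    shaft=Shaft("steel", 4),
    units=2,
)
manufacturing_order = PumpManufacturingOrder(
    aid="pump-manufacturing-1",
    pump_order=order,
    operations=OperationsList(("pick-and-place", "assembly")),
)
print(manufacturing_order.pump_order.casing.color)
```

Frozen dataclasses are used here so that an order cannot be changed after it has been composed; that is a design choice of the sketch rather than something the text specifies.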

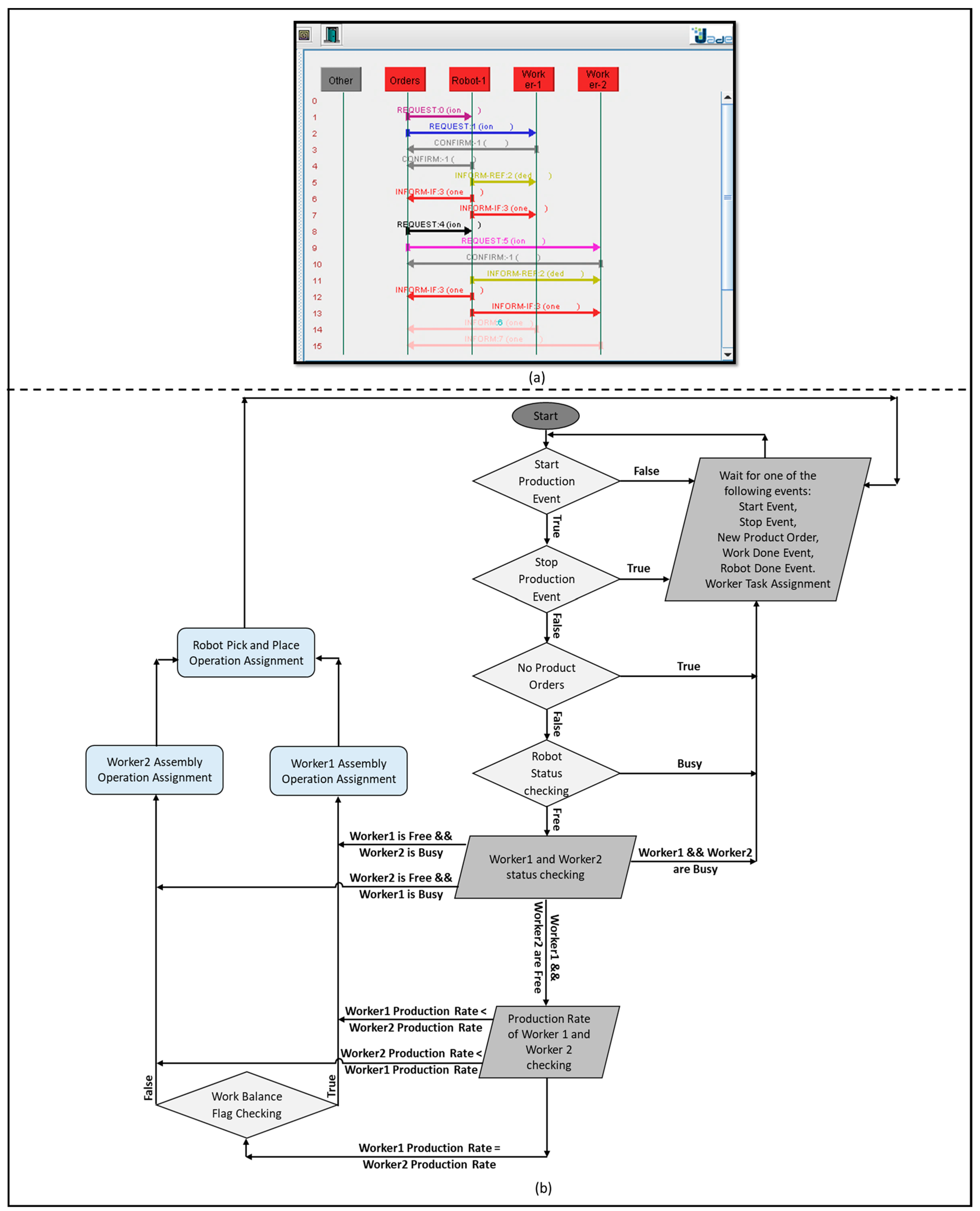

- Worker: a schema which contains two attributes: the worker AID and the worker location within the workcell (i.e., workstation). The worker agent provides a UI which shows the worker the assigned task and lets the worker signal the task-done event (see Figure 10f). Two instances of the worker agent exist in this case-study scenario. As mentioned in Section 5.1, the worker can have three statuses: a free status when there are no product orders or the production has not started; a reserved status when the worker is waiting for the first product unit to be placed by the cobot; and a busy status while the cobot is still handling the orders and until the worker presses the task-done button.

- Robot: a schema which contains one attribute, the robot AID. The robot schema does not have a workstation attribute because, in this specific case-study, there is one cobot which is responsible for the pick and place. Therefore, the location of the cobot is not necessarily required; however, with more than one cobot this attribute could be important. The robot agent provides a UI to show the assigned task and the status of the cobot (see Figure 10f). As mentioned in Section 5.1, the cobot can have two statuses: a free status when there are no product orders or the production has not started, and a busy status when the cobot is picking and placing the production orders. A timer of two seconds has been assigned to every pick and place operation.

- (concept-x) <Is-a> (concept-y): usually a relation between two concept schemas. This relation is similar to object-oriented inheritance. Thus, this predicate expression has been used to express the parent-child relationship between the concepts.

- (concept-x) <Has-a> (attribute-x): usually a relation between a concept and an attribute, where an attribute can be a concept schema or a primitive. This relation is similar to object-oriented composition. Thus, this predicate expression has been used to form sophisticated objects from simpler ones.

- (agent-x) <Applies-an> (action-x): usually a relation between a concept and an action schema. A concept uses this predicate expression to trigger one or more actions at the same time. The action schemas are discussed below in detail.
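The worker and cobot statuses listed in the Worker and Robot schemas above can be read as two small state machines. The sketch below encodes them as transition tables; the event names are hypothetical labels chosen for illustration, and the transitions are one possible reading of the status descriptions.

```python
from enum import Enum
from typing import Dict, Tuple


class WorkerStatus(Enum):
    FREE = "free"
    RESERVED = "reserved"
    BUSY = "busy"


class CobotStatus(Enum):
    FREE = "free"
    BUSY = "busy"


# (current status, event) -> next status; event names are assumptions.
WORKER_TRANSITIONS: Dict[Tuple[WorkerStatus, str], WorkerStatus] = {
    (WorkerStatus.FREE, "task_assigned"): WorkerStatus.RESERVED,
    (WorkerStatus.RESERVED, "first_unit_placed"): WorkerStatus.BUSY,
    (WorkerStatus.BUSY, "task_done"): WorkerStatus.FREE,
}

COBOT_TRANSITIONS: Dict[Tuple[CobotStatus, str], CobotStatus] = {
    (CobotStatus.FREE, "pick_and_place_started"): CobotStatus.BUSY,
    (CobotStatus.BUSY, "pick_and_place_finished"): CobotStatus.FREE,
}


def next_status(transitions, status, event):
    """Return the status that follows `status` on `event`, or raise ValueError."""
    try:
        return transitions[(status, event)]
    except KeyError:
        raise ValueError(f"event {event!r} is not allowed in status {status.value!r}") from None


# Walk one worker through a full cycle.
status = WorkerStatus.FREE
for event in ("task_assigned", "first_unit_placed", "task_done"):
    status = next_status(WORKER_TRANSITIONS, status, event)
print(status)
```

Keeping the transitions in a table makes illegal moves, such as pressing task-done while the worker is still free, fail loudly instead of silently changing the status.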

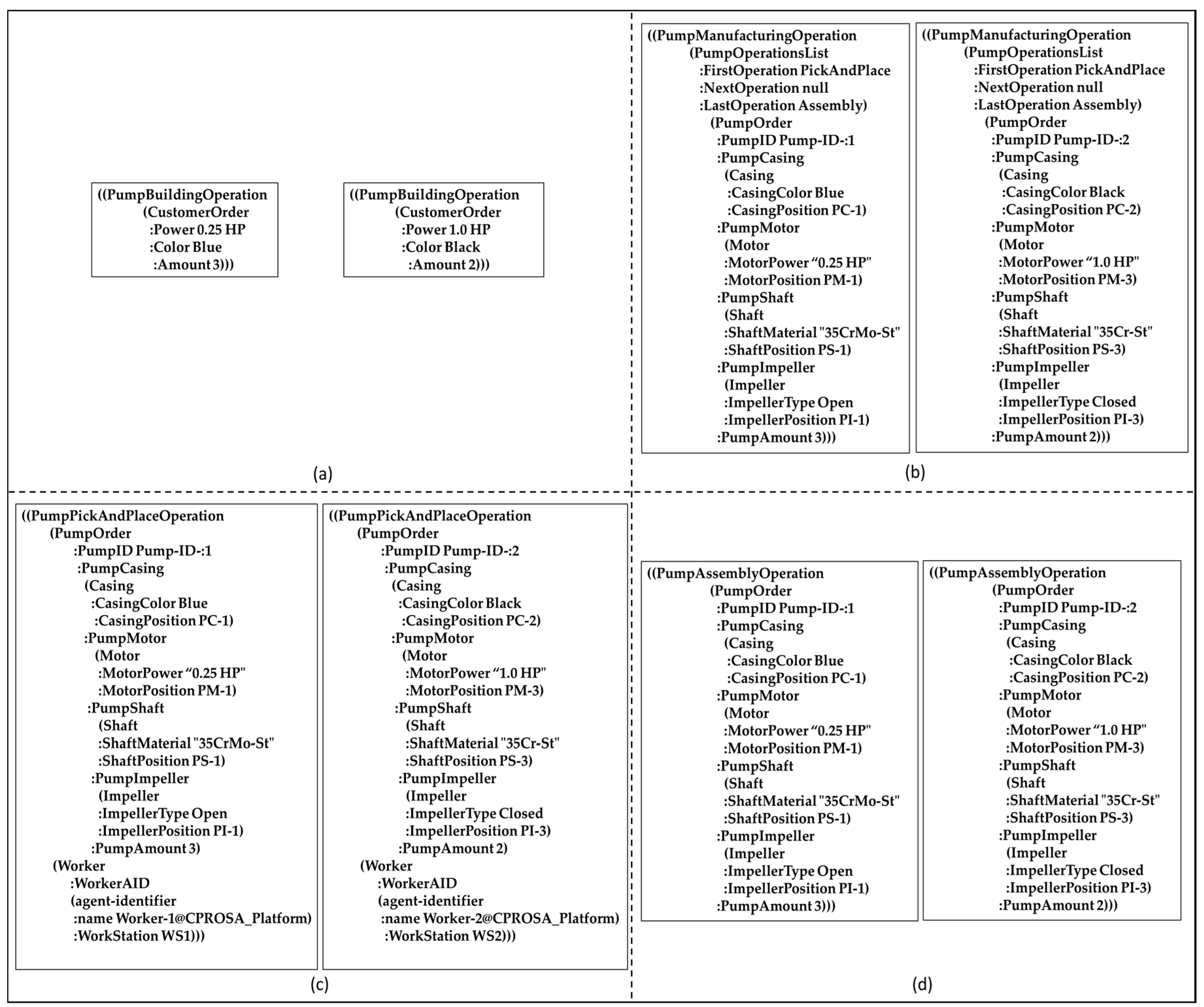

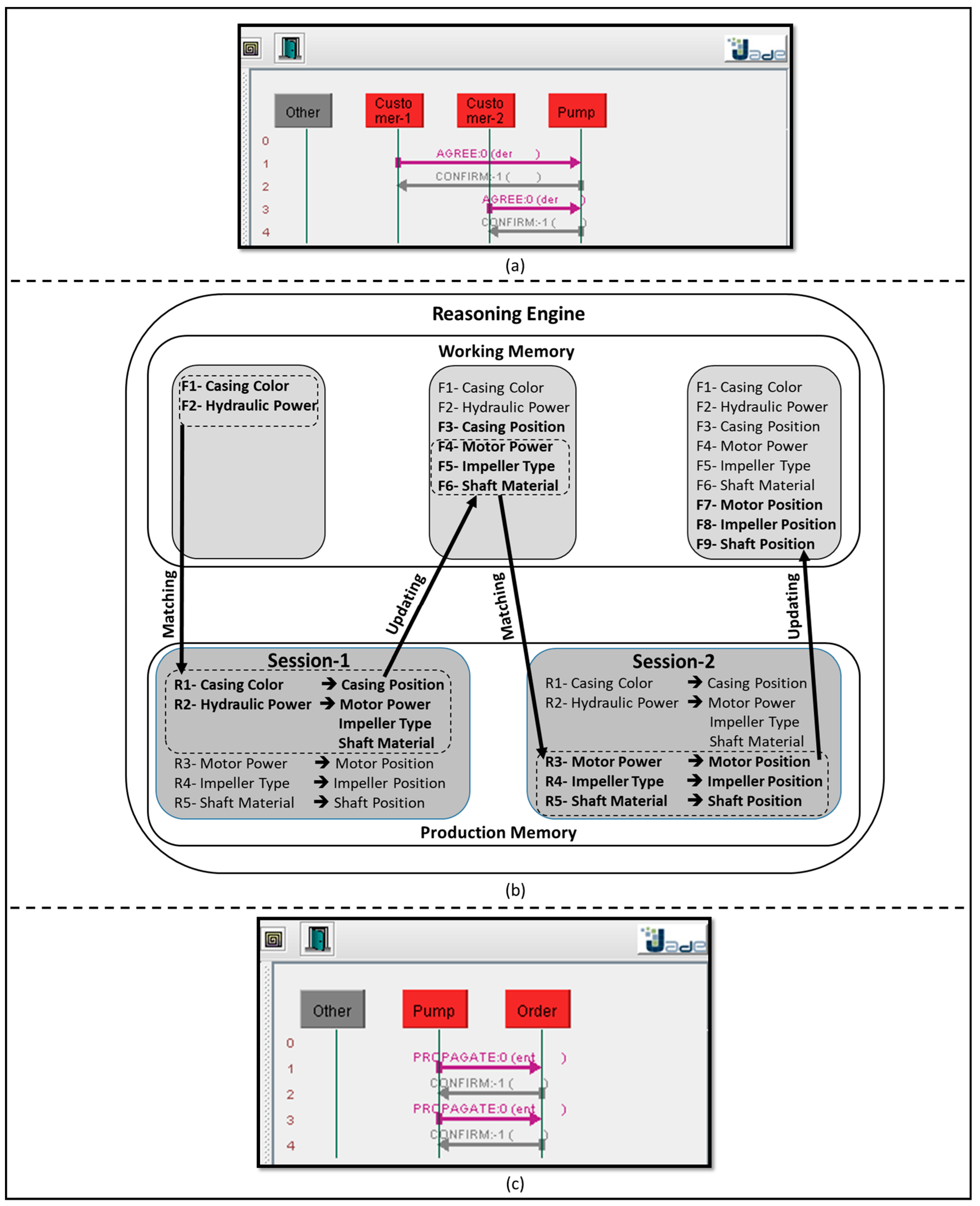

- Pump-Building-Operation: this action schema expects a pump customer-order concept as an input, and it can be deployed by either the customer-1 or the customer-2 agent. An example of this operation can be seen in the ACL-message content in Figure 12a.

- Pump-Manufacturing-Operation: this action schema expects a Pump-Order and a Pump-Operations-List concept schema as inputs, and it is deployed by the pump agent. A detailed example of this operation can be seen in the ACL-message content in Figure 12b.

- Pump-Pick-And-Place-Operation: this action schema expects two concept schema inputs. The first input is the pump-order, which contains the detailed specifications of the pump; the cobot can use this information, especially the positions of the pump features, to perform the pick operation. The second input is the target worker; the cobot can use the worker's workstation location to place the pump features at this location. This action schema is deployed by the orders agent to assign a task to the robot agent. A detailed example of this operation can be seen in the ACL-message content in Figure 12c.

- Pump-Assembly-Operation: this action schema expects one concept schema input, the pump-order. This operation provides the worker with the features required to build a customized pump, as well as the number of required units. This action schema is deployed by the orders agent to assign a task to any of the worker agents based on their status. A detailed example of this operation can be seen in the ACL-message content in Figure 12d.
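The way the orders agent deploys the last two action schemas can be sketched as a single assignment step: pick a free worker, then build one pick-and-place action for the cobot and one assembly action for that worker. The sketch below is a simplified illustration, not the authors' agent code; the class names, the dictionary layout, and the first-free-worker policy are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class PickAndPlaceOperation:
    pump_order_id: str
    target_workstation: str  # where the cobot places the pump features


@dataclass(frozen=True)
class AssemblyOperation:
    pump_order_id: str
    units: int


# worker AID -> (status, workstation); a hypothetical view of the worker agents.
Workers = Dict[str, Tuple[str, str]]


def assign_pump_order(
    pump_order_id: str, units: int, workers: Workers
) -> Optional[Tuple[str, PickAndPlaceOperation, AssemblyOperation]]:
    """Pair the order with the first free worker, or return None if all are occupied."""
    for worker_aid, (status, workstation) in workers.items():
        if status == "free":
            return (
                worker_aid,
                PickAndPlaceOperation(pump_order_id, workstation),
                AssemblyOperation(pump_order_id, units),
            )
    return None


workers: Workers = {
    "worker-1": ("busy", "workstation-1"),
    "worker-2": ("free", "workstation-2"),
}
assignment = assign_pump_order("pump-order-7", 3, workers)
print(assignment)
```

In this example the order goes to worker-2, the only free worker, and the pick-and-place action targets that worker's workstation. Returning `None` when nobody is free leaves the queueing policy to the caller, which the text does not specify.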

5.3. Pump-Orders Building

5.4. Pump-Orders Execution

6. Discussion, Conclusions, and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Singh, B.; Sellappan, N.; Kumaradhas, P. Evolution of Industrial Robots and Their Applications. Int. J. Emerg. Technol. Adv. Eng. 2013, 3, 763–768.

- Shimon, Y. Handbook of Industrial Robotics; Wiley: New York, NY, USA, 1999; ISBN 0-471-17783-0.

- Kutta, A. Robotics; I.K. International Publishing House (Penguin Group): New Delhi, India, 2007; ISBN 978-81-89866-38-9.

- Krüger, J.; Lien, T.K.; Verl, A. Cooperation of human and machines in assembly lines. CIRP Ann.-Manuf. Technol. 2009, 58, 628–646.

- Morioka, M.; Sakakibara, S. A new cell production assembly system with human–robot cooperation. CIRP Ann.-Manuf. Technol. 2010, 59, 9–12.

- Heyer, C. Human-robot interaction and future industrial robotics applications. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 4749–4754.

- Makris, S.; Karagiannis, P.; Koukas, S.; Matthaiakis, A.S. Augmented reality system for operator support in human–robot collaborative assembly. CIRP Ann.-Manuf. Technol. 2016, 65, 61–64.

- Lasota, A.; Rossano, F.; Shah, A. Safe Close-Proximity Human-Robot Interaction with Standard Industrial Robots. In Proceedings of the IEEE International Conference on Automation Science and Engineering (CASE), Taipei, Taiwan, 18–22 August 2014.

- Lasota, A.; Fong, T.; Shah, A. A Survey of Methods for Safe Human-Robot Interaction. Found. Trends Robot. 2017, 5, 261–349.

- Meziane, R.; Otis, M.; Ezzaidi, H. Human-robot collaboration while sharing production activities in dynamic environment: SPADER system. Robot. Comput.-Integr. Manuf. 2017, 48, 243–253.

- Sadik, A.; Bodo, U.; Adel, O. Using Hand Gestures to Interact with an Industrial Robot in a Cooperative Flexible Manufacturing Scenario. In Proceedings of the 3rd International Conference on Mechatronics and Robotics Engineering, Paris, France, 8–12 February 2017; pp. 11–16.

- Gleeson, B.; MacLean, K.; Haddadi, A.; Croft, E.; Alcazar, J. Gestures for industry: Intuitive human-robot communication from human observation. In Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Tokyo, Japan, 3–6 March 2013.

- Sadik, A.; Bodo, U. Combining Adaptive Holonic Control and ISA-95 Architectures to Self-Organize the Interaction in a Worker-Industrial Robot Cooperative Workcell. Future Internet 2017, 9, 35.

- Sadik, A.; Bodo, U. A Novel Implementation Approach for Resource Holons in Reconfigurable Product Manufacturing Cell. In Proceedings of the 13th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2016), Lisbon, Portugal, 29–31 July 2016; pp. 130–139.

- Sadik, A.; Bodo, U. Applying the PROSA Reference Architecture to Enable the Interaction between the Worker and the Industrial Robot—Case Study: One Worker Interaction with a Dual-Arm Industrial Robot. In Proceedings of the 9th International Conference on Agents and Artificial Intelligence (ICAART-2017), Porto, Portugal, 24–26 February 2017; pp. 190–199.

- Smirnov, A.; Kashevnik, A.; Mikhailov, S.; Mironov, M.; Mikhail, P. Ontology-based collaboration in multi-robot system: Approach and case study. In Proceedings of the 11th System of Systems Engineering Conference (SoSE), Kongsberg, Norway, 12–16 June 2016.

- Sadik, A.; Bodo, U. A Holonic Control System Design for a Human & Industrial Robot Cooperative Workcell. In Proceedings of the 2016 International Conference on Autonomous Robot Systems and Competitions (ICARSC), Bragança, Portugal, 4–6 May 2016; pp. 118–123.

- Müller, R.; Vette, M.; Mailahn, O. Process-oriented Task Assignment for Assembly Processes with Human-robot Interaction. Proc. CIRP 2016, 4, 210–215.

- Bikas, C.; Argyrou, A.; Pintzos, G.; Giannoulis, C.; Sipsas, K. An automated assembly process planning system. Proc. CIRP 2016, 44, 222–227.

- Sadik, A.; Bodo, U. Flow Shop Scheduling Problem and Solution in Cooperative Robotics—Case-Study: One Cobot in Cooperation with One Worker. Future Internet 2017, 9, 48.

- Jennings, N.; Wooldridge, M. Agent Technology, 1st ed.; Springer: Berlin, Germany, 1998; pp. 3–28.

- Shen, W.; Hao, Q.; Yoon, H.; Norrie, D. Applications of agent-based systems in intelligent manufacturing: An updated review. Adv. Eng. Inform. 2006, 20, 415–431.

- Jade Site. Available online: http://jade.tilab.com/ (accessed on 8 January 2017).

- FIPA Site. Available online: http://www.fipa.org/ (accessed on 1 February 2017).

- Bellifemine, F.; Caire, G.; Greenwood, D. Developing Multi-Agent Systems with JADE, 1st ed.; Wiley: Chichester, UK, 2008.

- Ordóñez, A.; Eraso, L.; Ordóñez, H.; Merchan, L. Comparing Drools and Ontology Reasoning Approaches for Automated Monitoring in Telecommunication Processes. Proc. Comput. Sci. 2016, 95, 353–360.

- Al-Ajlan, A. The Comparison between Forward and Backward Chaining. Int. J. Mach. Learn. Comput. 2015, 5, 106–113.

- Drools Guide. Available online: https://docs.jboss.org/drools/release/5.2.0.CR1/drools-expert-docs/html_single/ (accessed on 18 April 2017).

- Sottara, D.; Mello, P.; Proctor, M. A configurable rete-oo engine for reasoning with different types of imperfect information. IEEE Trans. Knowl. Data Eng. 2010, 22, 1535–1548.

- Leitão, P.; Restivo, F. Implementation of a Holonic Control System in a Flexible Manufacturing System. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2008, 38, 699–709.

- Botti, V.; Giret, A. Holonic Manufacturing Systems. In ANEMONA—A Multi-Agent Methodology for Holonic Manufacturing Systems, 1st ed.; Springer: London, UK, 2008; pp. 7–20.

- Babiceanu, R.; Chen, F. Development and Applications of Holonic Manufacturing Systems: A Survey. J. Intell. Manuf. 2006, 17, 111–131.

- Van Brussel, H.; Wyns, J.; Valckenaers, P.; Bongaerts, L.; Peeters, P. Reference architecture for holonic manufacturing systems: PROSA. Comput. Ind. 1998, 37, 255–274.

- Black, G.; Vyatkin, V. Intelligent Component-Based Automation of Baggage Handling Systems with IEC 61499. IEEE Trans. Autom. Sci. Eng. 2008, 7, 699–709.

- Balakirsky, S. Ontology based action planning and verification for agile manufacturing. Robot. Comput.-Integr. Manuf. 2015, 33, 21–28.

- Fiorini, S.R.; Carbonera, J.L.; Gonçalves, P.; Jorge, V.A.; Rey, V.F.; Haidegger, T.; Abel, M.; Redfield, S.A.; Balakirsky, S.; Ragavan, V.; et al. Extensions to the core ontology for robotics and automation. Robot. Comput.-Integr. Manuf. 2015, 33, 3–11.

- Rodrigues, N. Development of an Ontology for a Multi-Agent System Controlling a Production Line. MSc Thesis, Instituto Politécnico de Bragança, Bragança, Portugal, 2012.

- Freitas, F.L.; Bittencourt, G. An ontology-based architecture for cooperative information agents. Int. Jt. Conf. Artif. Intell. 2003, 18, 37–42.

- Takeda, H.; Iwata, K.; Takaai, M.; Sawada, A.; Nishida, T. An ontology-based cooperative environment for real-world agents. Proc. Second Int. Conf. Multiagent Syst. 1996, 353–360.

- Neches, R.; Fikes, R.; Finin, T.; Gruber, T.; Patil, R.; Senator, T.; Swartout, W. Enabling Technology for Knowledge Sharing. AI Mag. 1991, 12, 36–56.

- Gruber, T. Toward principles for the design of ontologies used for knowledge sharing. Int. J. Hum.-Comput. Stud. 1995, 43, 907–928.

- Wang, H.; Gibbins, N.; Payne, T.; Redavid, D. A formal model of the Semantic Web Service Ontology (WSMO). Inf. Syst. 2012, 37, 33–60.

- Fensel, D. Ontology-based knowledge management. Robot. Comput.-Integr. Manuf. 2002, 35, 56–59.

- Lai, L. A knowledge engineering approach to knowledge management. Inf. Sci. 2007, 117, 4072–4094.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sadik, A.R.; Urban, B. An Ontology-Based Approach to Enable Knowledge Representation and Reasoning in Worker–Cobot Agile Manufacturing. Future Internet 2017, 9, 90. https://doi.org/10.3390/fi9040090
