An LSTM Based Generative Adversarial Architecture for Robotic Calligraphy Learning System

Abstract

1. Introduction

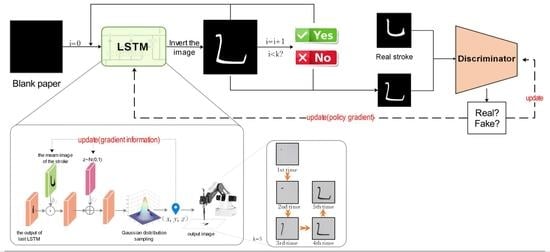

2. Proposed Framework

2.1. Framework Architecture

2.2. Stroke Generation Module

2.3. Stroke Discrimination Module

2.4. Training Algorithm

Algorithm 1 Training Procedure Pseudocode

Require: Real stroke images database Xreal, mean of real stroke images h0, random number c0, blank vector p0.
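The inputs listed under "Require" can be illustrated with a minimal sketch. It only shows how h0, c0, and p0 might be prepared from a set of real stroke images; it is not the authors' implementation. The function name `init_training_inputs`, the `point_dim` and `seed` parameters, the nested-list image representation, and the choice to draw c0 as a Gaussian vector of the same length as h0 are all assumptions made for illustration (the source only says "random number").

```python
import random


def init_training_inputs(x_real, point_dim=2, seed=0):
    """Prepare hypothetical initial inputs for the training procedure.

    x_real    : list of images, each a list of rows of pixel values
                (all images are assumed to share the same size).
    point_dim : assumed length of the blank vector p0.
    seed      : seed for the random generator (illustrative only).
    """
    n = len(x_real)
    # Flatten every image into a single list of pixel values.
    flat = [[px for row in img for px in row] for img in x_real]
    # h0: pixel-wise mean over all real stroke images.
    h0 = [sum(col) / n for col in zip(*flat)]
    # c0: random values; using one Gaussian draw per entry of h0
    # is an assumption, not something stated in the source.
    rng = random.Random(seed)
    c0 = [rng.gauss(0.0, 1.0) for _ in h0]
    # p0: blank (all-zero) vector.
    p0 = [0.0] * point_dim
    return h0, c0, p0
```

For example, calling `init_training_inputs(images)` on a list of equally sized grayscale stroke images returns the three starting values; in practice an array library would likely replace the nested lists.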

3. Experimentation

3.1. Training Data

3.2. Training Process and Writing Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chao, F.; Lin, G.; Zheng, L.; Chang, X.; Lin, C.-M.; Yang, L.; Shang, C. An LSTM Based Generative Adversarial Architecture for Robotic Calligraphy Learning System. Sustainability 2020, 12, 9092. https://doi.org/10.3390/su12219092
